Integrations

What's new?

Prototype Testing

Live Website Testing

Feedback Surveys

Interview Studies

Card Sorting

Tree Testing

In-Product Prompts

Participant Management

Automated Reports

Templates Gallery

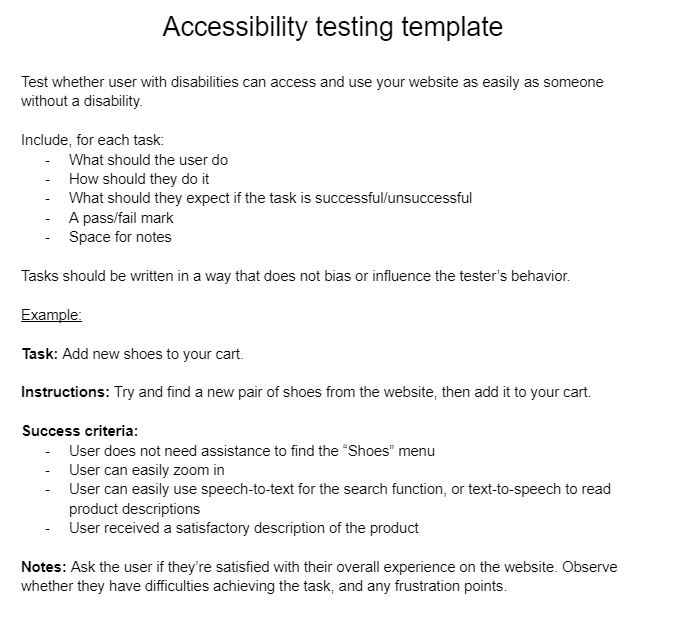

Choose from our library of pre-built mazes to copy, customize, and share with your own users

Browse all templates

Financial Services

Tech & Software

Product Designers

Product Managers

User Researchers

By use case

Concept & Idea Validation

Wireframe & Usability Test

Content & Copy Testing

Feedback & Satisfaction

Content Hub

Educational resources for product, research and design teams

Explore all resources

Question Bank

Research Maturity Model

Guides & Reports

Help Center

Future of User Research Report

The Optimal Path Podcast

Maze Guides | Resources Hub

A Beginner's Guide to Usability Testing

5 Real-life usability testing examples & approaches to apply

Get a feel for what an actual test looks like with five real-life usability test examples from Shopify, Typeform, ElectricFeel, Movista, and Trint. You'll learn about these companies' test scenarios, the types of questions and tasks these designers and UX researchers asked, and the key things they learned.

If you've been working through this guide in order, you should now know pretty much everything you need to run your own usability test. All that’s left is to get your designs in front of some users.

Just arrived here? Here’s a quick recap to make sure you have the context you need:

- Usability testing is the practice of conducting tests with real users to see how easily they can navigate your product, understand how to use it, and achieve their goals

- There are many usability testing methods. Picking the right one is crucial for getting the insights you need.

- Qualitative usability testing involves more open-ended questions, and is good for sourcing ideas or validating early assumptions

- Quantitative testing is good for testing a higher number of people, which is useful for fine-tuning your design once you have a high-fidelity prototype

- If it’s too difficult to organize in-person tests, remote usability testing is a fast and cost-effective way to get the info you need

- Guerrilla usability testing is a great option for some fast, easy insights from real people

- Ask usability testing questions before, during, and after your test to give more context and detail to your results

Why you need usability testing studies & examples

While it’s essential to learn about each aspect of usability testing, it can be helpful to get a feel for what an actual test looks like before creating your first test plan. Creating user testing scenarios to get the feedback you need comes naturally after you’ve run a few tests, but it’s normal to feel less confident at first. Remember: running usability tests isn’t just useful for identifying usability problems and improving your product’s user experience. It’s also the best way to fine-tune your usability testing process.

For inspiration, this chapter contains real-world examples of usability tests, with some advice from designers and UX researchers on writing usability tasks and scenarios for testing products.

If you’re not sure whether you are at the right stage of the design process to conduct usability studies, the answer is almost certainly: yes!

It’s important to test your design as early and often as possible. As long as you have some kind of prototype, running a usability test will help you avoid relying on assumptions by involving real users from the beginning. So start testing early.

The scenarios, questions, and tasks you should create, as well as the overall testing process, will vary depending on the stage you’re at. Let’s look at five examples of usability tests at different stages in the design process.

Discovery phase usability test example: Shopify

The Shopify Experts Marketplace is a platform that connects Shopify merchants with trusted Shopify experts who have demonstrated proven expertise in the services they offer. All partners on the Experts Marketplace are experienced and skilled Shopify partners who help merchants grow their businesses by providing high-quality services.

Feature being tested

When Shopify merchants look for a Shopify-recommended service provider, the first page they find is the Expert profile. There, they can find an overview of services provided, recent client testimonials, examples of past work, and more. If a merchant finds the expert profile page easy to navigate, they’re more likely to reach out to experts and potentially hire them.

Usability testing approach

The Shopify team wanted to make sure they were including all the relevant information in the right place. To do so, they first gathered insights about what merchants would need to know about Experts from generative user interviews.

Once they knew what information was most important, they moved on to evaluative research and conducted card sorting and tree testing studies to evaluate the information architecture of the product.

At that stage of the research process, usability testing was the best way to understand how Expert profiles could create more value for users. Melanie Buset, User Experience Researcher at Spotify and former User Experience Researcher at Shopify, explains:

Now that we knew what information we needed to surface, we needed to evaluate how and where we surfaced this information. Usability testing provided us with insight into how well we were meeting users’ expectations.

Melanie Buset, User Experience Researcher

Melanie worked closely with the designer on the team to identify what the research questions should be. Based on these questions, the team created a UX research plan and a discussion guide for the usability test. After having tested the usability plan with coworkers, they recruited the participants and ran the actual test.

By usability testing, Melanie and the team were able to gather actionable feedback and implement changes quickly. They continued to test until they reached a point where users felt they had access to the most relevant information about Experts and felt ready to potentially hire them.

Test scenario

“Imagine that you’re interested in hiring a Shopify Expert to help with developing a marketing campaign.”

The team wanted to recreate a scenario that would be as close to the real world as possible. For this purpose, they selected participants who had previously been interested in hiring a Shopify Expert.

Task and question examples

Participants were first given a set of general tasks and asked to think aloud as much as possible and to share any feedback throughout the session. Melanie would ask them to show her how they would go about trying to find someone to hire via the Experts Marketplace and go through the process as if they were ready to hire someone.

If the participants got lost or weren't sure how to proceed, she would gently encourage them to try to find another way to accomplish their goal or to share what they would expect to do or see.

The team also asked participants more specific questions, such as:

- What information is helping you determine if an Expert is a good fit for your needs?

- What does this button mean? What happens if you click on it?

Unsure about what to ask in your usability test? Take a look at our guide to writing usability questions + examples 💭

The key thing they learned

After testing, we learned so much about what’s important to people when they’re looking to hire a freelancer for their business and specific projects. For example, people want to know upfront how freelancers will communicate with them, and they prefer profiles that feel more human and less transactional.

Ready-to-use Maze Templates for product discovery phase

Run a product discovery survey

Base your next product moves on your users’ needs, not your assumptions. With this template, you can develop a clear picture of what your audience wants so you can work on faster solutions to their problems.

Discover jobs to be done

Tap into the minds of your customers and discover their desired outcomes with this easy-to-use JTBD survey. Collect valuable, actionable feedback to help build more customer-centric products that help users achieve their goals and reduce their pain points.

Early-stage usability test example: ElectricFeel

ElectricFeel's product is a software platform for entrepreneurs and public transport companies to launch, grow, and scale fleets of shared electric bicycles and mopeds. It includes a mobile app for riders to rent vehicles and a system for mobility companies to run day-to-day fleet operations.

When a new rider signs up to the ElectricFeel app, a fleet management team member from the mobility company has to verify their personal info and driver’s license before they can rent a vehicle.

The ElectricFeel team hypothesized that if they could make this process smoother for fleet management teams, they could reduce the time between someone registering and taking their first ride. This would make the overall experience for new riders more frictionless.

The idea to improve the rider activation process came from a wider user testing initiative, which the team saw as a vital first step before they started working on new designs. Product designer, Gerard Marti, explains:

To address the gap between how you want your product to be received and how it is received, it’s key to understand your users’ day-to-day experience.

Gerard Marti, Product Designer at ElectricFeel

After comparing the results of user persona workshops conducted both within the company and with real customers, the team used the insights to sketch wireframes of the new rider activation user interface.

Then Gerard ran some usability tests with fleet managers to validate whether the new designs actually made it easier to verify new riders, tweaking the design based on people’s feedback.

The next step in their process is conducting quantitative tests on alternative designs, then continuing to test and iterate the option that wins with more quantitative testing. Gerard sees quantitative testing as a vital step towards validating designs with real human behavior:

What people say and what they actually end up doing is not always the same. While opinions are important and you should listen to them, behavior is what matters in the end.

“You have four riders in the pipeline waiting to be accepted.”

Gerard would often leave the scenario at just this, as he wanted to observe the order in which users perceive each element of the design without sending them in a direction with a goal.

When testing early versions of designs, leaving the usability test scenario open lets you find out whether users naturally understand the purpose of the screen without prompting.

To generate a conversational and open atmosphere with participants, Gerard starts with open questions that don’t invite criticism or praise from the user:

- What do you see on the screen?

- What do you think this is for?

He then moves on to asking about specific elements of the design:

- What information do you find is most valuable?

- Are pictures or text more important for you?

By asking users to evaluate individual elements of the design, Gerard invites participants to give deeper consideration to their thought process when activating riders. This yields crucial insights on how the fundamentals of the interface should be designed.

After testing, we realized that people scan the page, look for the name, then check the image to see if it matches. So while we assumed the picture ID should be on the right, this insight revealed that it should be on the left.

Mid-stage usability test example: Typeform

Typeform is a people-friendly online form and survey maker. Its unique selling point is its focus on design, which aims to make the experience for respondents as smooth and interactive as possible. As a result, typeforms have a high completion rate.

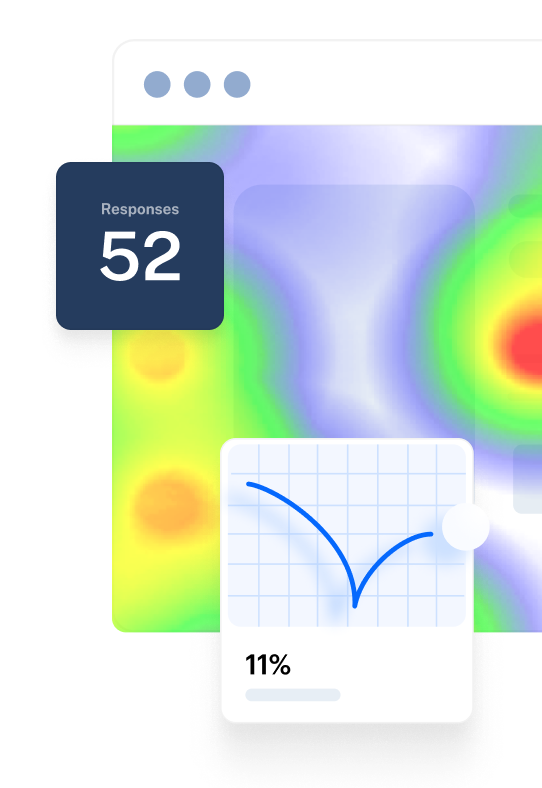

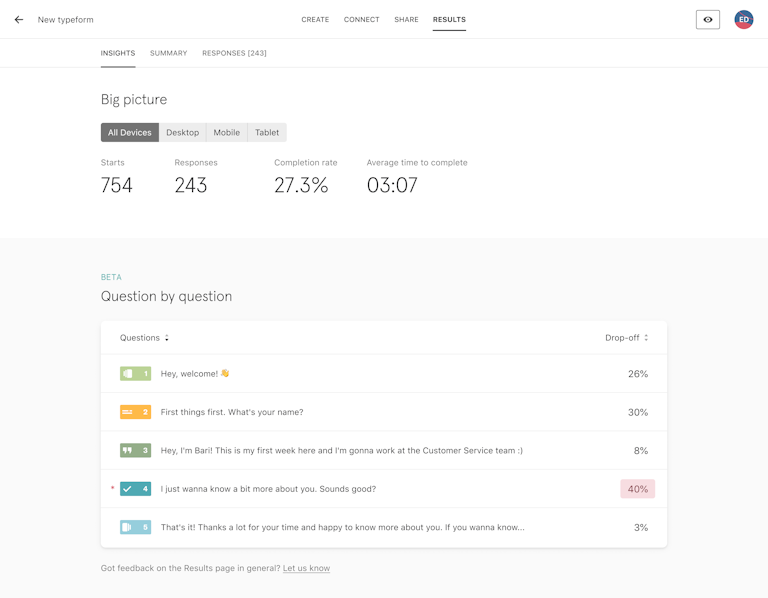

Since completion rates are a big deal for Typeform users, being able to see the exact questions where people leave your form was a highly requested feature for a long time. Typeform’s interface asks respondents one question at a time, so this is especially important. The feature is now called ‘Drop-off Analysis’.

Product tip ✨

Before you even start designing a prototype for a usability test, do research to discover the kind of products, features, or solutions that your target audience needs. Maze Discovery can help you validate ideas before you start designing.

Yuri Martins, Product Designer at Typeform, explains the point when his team felt like it was time to test their designs for the new Drop-off Analysis feature:

We had a lot of different ideas and drawings for how the feature could work. But we felt like we couldn’t commit to any of them without input from users to see things from their perspective.

Yuri Martins, Product Designer at Typeform

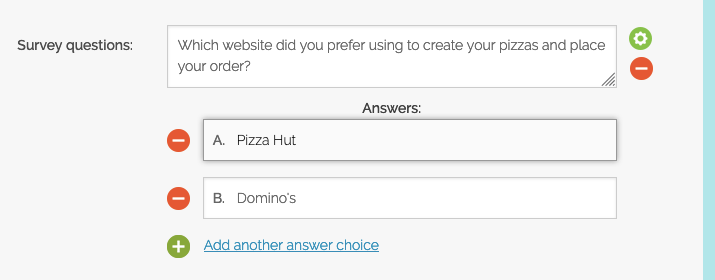

Fortunately, they had already contacted users and arranged some moderated tests one or two weeks before this point, anticipating that they’d need user feedback after the first design sprints. By the time the tests rolled around, Yuri had designed “a few alternative ways that users could achieve their objectives” in Figma.

Since the team wanted to iterate the design fast, they tested each prototype, then created a new updated version based on user feedback for the next testing session a day or two later. Yuri says they “kept running tests until we saw that feedback was repeating itself in a positive way.”

Finding participants is often the biggest obstacle to conducting usability tests. So schedule them in advance, then spend the following weeks refining what you’d like to test.

“One of your typeforms has already collected a number of responses. The info you see appears in the ‘Results’ page.”

This scenario was designed to be relatable for Typeform users that had already:

- Made a typeform

- Shared it and collected responses

- Visited the ‘Results’ page to check on their responses

Choosing a scenario that appeals to this group of users ensured the feedback was as relevant as possible, as the people being tested were more likely to use the Drop-off Analysis feature to analyze their typeform’s results further.

Typeform’s Drop-off Analysis prototypes only existed in Figma at this point, which meant that users couldn’t interact with the design to complete usability tasks.

Instead, Yuri and the team came up with broader, more open-ended tasks and questions that aimed to test their assumptions about the design:

- Tell us what you understand about the information on this page.

- Describe anything missing that you would need to fully interpret the interface.

After the general questions, they asked questions about specific elements of the design to get feedback where they needed it most:

- At the drop-off point, what do you understand?

- What would you expect to see here?

- Does this information make sense to you?

This example shows that you don’t need a fully functional prototype to start testing your assumptions. For useful qualitative feedback midway through the design process, tweak your questions to be more open-ended.

Maze is fully integrated with Figma, so you can easily upload your designs and create an unmoderated usability test with your Figma prototype.

We’d assumed that people would want to know how many respondents dropped off at each question. But by usability testing, we discovered that people were much more concerned with the percentage of respondents who dropped off—not the total number.
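To make the difference between counts and percentages concrete, here is a minimal sketch in Python. The function name, the question labels, and the numbers are invented for illustration, and the percentage is assumed to be relative to the respondents who reached each question. It is not Typeform's implementation or data.

```python
def drop_off_report(views_per_question):
    """Return (question, dropped_count, dropped_percent) per question.

    views_per_question: ordered list of (label, respondents_who_saw_it).
    A respondent "drops off" at a question if they saw it but did not
    reach the next one. The last question has no successor, so it is
    left out of the report.
    """
    report = []
    pairs = zip(views_per_question, views_per_question[1:])
    for (label, seen), (_, seen_next) in pairs:
        dropped = seen - seen_next
        # Percentage of the people who reached this question (assumption).
        percent = 100 * dropped / seen if seen else 0.0
        report.append((label, dropped, round(percent, 1)))
    return report


# Hypothetical numbers, not real survey data.
views = [("Q1", 1000), ("Q2", 900), ("Q3", 300), ("Q4", 240)]

for label, dropped, percent in drop_off_report(views):
    print(f"{label}: {dropped} dropped ({percent}%)")
```

With these made-up numbers, Q1 loses more people than Q3 in absolute terms (100 versus 60), but Q3 loses a larger share of the respondents who reached it (20% versus 10%). That is the kind of difference a raw count can hide.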

Late-stage usability test example: Movista

Movista is a workforce management software used by retail and manufacturing suppliers. It helps its users coordinate and execute tasks both in-store and in the field with a mobile app.

As part of a wider design update on their entire product, Movista is about to launch a new product for communications, messaging, chats, and sending announcements. This will let people in-store communicate with people out in the field better.

Movista’s new comms feature is at a late stage of the design process, so they tested a high fidelity prototype. Product designer, Matt Elbert, explains:

For the final round of usability testing before sending our designs to be developed, we wanted to test an MVP that’s as close as possible to the final product.

Matt Elbert, Product Designer at Movista

By this point, the team were confident about the fundamental aspects of the design. These tests were to iron out any final usability issues, which can be harder to identify during the process. By testing with a higher number of people, they hoped to get more statistically significant results to validate their designs before launch.

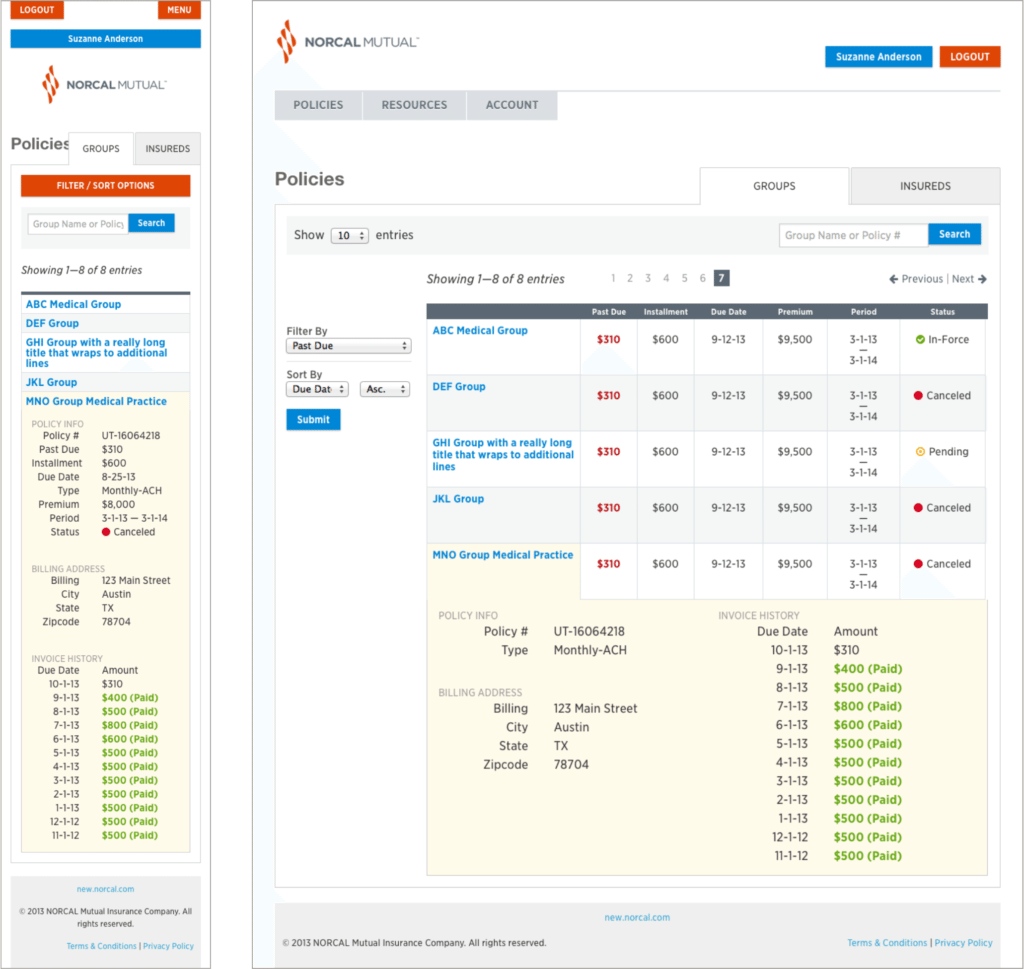

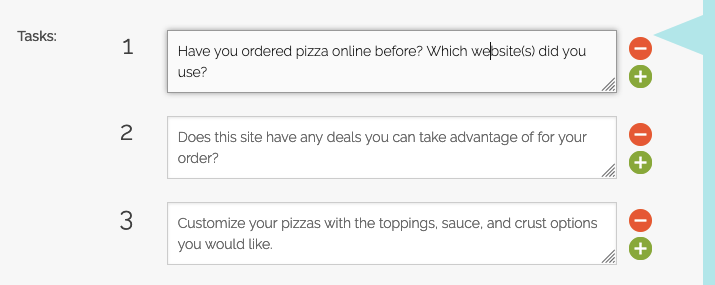

The team used Maze to conduct remote testing with their prototype, which included an overall goal broken down into tasks, and questions to find out how easy or difficult the previous step was.

“You have received new messages. Navigate to your messages.”

The usability tests would often begin in different parts of the product, with participants given a clear navigational goal. This prompts people to act straight away—without getting sidetracked by other areas of the app.

Matt advises people to be specific when using testing tools for unmoderated tests, as you won’t be there to make sure the user understands what you’re asking them to do.

The general format of the usability test was giving people a very specific task, then following up with an open question to ask participants how it went.

- How would you delete the message, “yeah, what’s up?” that you sent to Mark Fuentes?

- How did you find the experience of completing that task?

Matt and the team would also sometimes ask questions before a task to see if their designs matched users’ expectations:

- What options would you expect to be available in the menu on the top-right corner of the message?

“Questions like this are super useful because this is such a new feature that we don’t know for sure what people’s priorities are,” said Matt. The team would rank people’s responses, then consider including different options if there was consistent demand for them.

Finally, Matt says it’s important to always include an invitation for participants to share any last thoughts at the end:

Some people might take a long time to complete a task because they’re checking out other areas of the product—not because they found it difficult. Letting people express their overall opinion stops these instances from skewing your test results.

Based on the insights we got from final results and feedback, we ended up shifting the step of selecting a recipient to much earlier in the process.

Live website usability test example: Trint

Trint is a speech-to-text platform for transcription and content creation. The tool uses artificial intelligence to automatically transcribe audio and video from different file formats and generate editable and shareable transcripts.

The ultimate goal of any B2B website is to attract visitors and convert them into loyal customers. The Trint team wanted to optimize their conversion funnel, and testing the website for usability was the best way to diagnose problems and find the right solutions.

The product team at Trint was already using quantitative data to understand what was happening on the website. They used Mixpanel to look at the conversion rates at every step of the funnel. However, it was never enough information to make design decisions. Lidia Sambito, UX Researcher at Trint, explains:

We had to use other pieces of evidence like usability testing to learn how people experienced our marketing funnel and how they felt throughout the customer journey before we were in a position to make the right changes.

Lidia Sambito, UX Researcher at Trint

Lidia worked closely with the product manager and the designer to identify the research questions and plan the sessions. She then recruited the participants and ran the usability test.

The test was run using Zoom. Lidia asked the participants to share their screens and moderated the sessions while the product designer was taking notes. All the sessions were recorded, and the observers could leave their comments by using the Realtime Transcription feature in Trint.

After each session, there was a 30-minute debrief with the team to discuss key takeaways, issues, and surprises. This helped the team reflect on what happened during the session and lay the groundwork for the larger synthesis.

To successfully synthesize the research findings, Lidia listened to the sessions, transcribed them using Trint, and then coded the data using different tags, such as pain points, needs, or goals. Finally, she held a workshop with the designer, engineer, and data scientist to identify common themes for each page of the onboarding process.
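As a rough illustration of this kind of tag-based synthesis, the sketch below counts how often each tag appears per page. The notes, page names, and the `themes_by_page` helper are all hypothetical. They are not Trint's data or tooling, only one way the counting step could look.

```python
from collections import Counter, defaultdict

# Hypothetical coded notes: (page, tag, note). Invented for illustration.
coded_notes = [
    ("homepage", "pain point", "Example note A"),
    ("homepage", "need", "Example note B"),
    ("signup", "pain point", "Example note C"),
    ("signup", "pain point", "Example note D"),
    ("signup", "goal", "Example note E"),
]


def themes_by_page(notes):
    """Group coded notes by page and rank tags by how often they occur."""
    counts = defaultdict(Counter)
    for page, tag, _note in notes:
        counts[page][tag] += 1
    # most_common() sorts tags from most to least frequent.
    return {page: tags.most_common() for page, tags in counts.items()}


for page, ranked_tags in themes_by_page(coded_notes).items():
    print(page, ranked_tags)
```

A tally like this only shows where tags cluster. Deciding what the themes mean still happens in the workshop discussion.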

This research helped us understand how potential users move across the acquisition funnel and the most painful points of their experience. We identified the main problems and tackled them through ideation, prototyping, and more research.

"You have many files to transcribe and your colleague mentioned a software called Trint. He suggested you take a look at it."

Lidia and the team wanted to make the scenario as realistic as possible. They decided to use an open-ended scenario, giving participants minimal explanation about how to perform the task. The key was to see how users would spontaneously interact with the website.

During the test, the participants were asked to share their comments and thoughts while thinking out loud. The main tasks were:

- Walk me through how you would use Trint for the first time

- Show me what you would do next

Lidia would also ask participants more specific questions to get deeper insights. Here are some examples:

- What information is helping you determine if Trint is a good fit for your needs?

- Tell us what you understand about the information on this page

- Are pictures, videos, or text important for you?

We saw that the participants wanted to see and try out the product as early as possible. Still, it took several screens to get to the product. I recommended removing the onboarding survey. We also worked on the website's content to make it easier for people to understand what Trint is about.

Key usability testing takeaways

The examples above offer a heap of insight into how to conduct your usability test, so let’s end with a rundown of the main takeaways:

- Conduct usability testing early, and often: Users want to try a product out ASAP, and while it may be nerve-wracking to send a fresh product out there, it’s a great opportunity to gather feedback early in the design process. But don’t let that be your only usability test! Take the feedback, iterate, and test again.

- Check your biases, and be open to change: Don’t go into your usability test with opinions and expectations set in stone. Like any user research or testing, it’s a good idea to record your assumptions ahead of time. That way, if something comes up unexpectedly—for example, users don’t navigate the platform in the way you expect—you can run with it and consider new options, rather than feeling stuck in your ways or heartbroken over an idea. Remember, the user should always be at the center of the design.

- Don’t be afraid of a practice run: Usability tests are most effective when they run smoothly, so iron out any wrinkles by conducting a dry run before the real thing. Use colleagues or connections to double check your test, including any questions or software used. A test run may feel like an additional step, but it’s a lot quicker and cheaper than redoing your real test when an error occurs!

Frequently asked questions about usability testing examples

What is an example of usability testing?

Usability testing is a proven method to evaluate your product with real people by getting them to complete a list of tasks while observing and noting their interactions. For example, if you're designing a website for an e-commerce store that sells beauty products, a good way to test your design would be to ask the users to try to buy a particular hair care product.

By observing how users interact with your product, where they click, how long it takes them to select the specific product, and by listening to their feedback, you will be able to identify usability issues and areas of improvement.

How is usability testing performed?

Typically, during a usability test, users complete a set of tasks with a prototype or live product while observers watch and take notes of their interactions. The ultimate goal is to discover usability problems and see how users experience your product.

To run a successful usability test, you need to create a prototype and write an effective usability testing script to outline the goal of your research and the questions and tasks you're going to ask the users. You also need to recruit the participants, run the test, and finally analyze and report your test results.

What is usability testing?

Usability testing is the process of testing your product with real users, by asking them to complete a list of tasks while noting their interactions. The purpose of usability testing is to understand whether your product is usable for people to navigate and achieve their goals.

How do you carry out usability testing?

Usability testing can be carried out in a number of ways. The most common methods of usability testing include utilizing online usability testing platforms, guerrilla testing, lab usability testing, and phone/video interviews.

Start usability testing with Maze templates

Usability testing a new product

Validate usability across your wireframes and prototypes with real users early on. Use this pre-built template to capture valuable feedback on accessibility and user experience so you can see what’s working (and what isn’t).

Test mobile app usability

Help deliver a friction-free product experience for users on mobile. Test mobile app usability to discover pain points and validate expectations, so your users can scroll happily (and your Product team can keep smiling too).

6 Usability Testing Examples & Case Studies

Interested in analyzing real-world examples of successful usability tests?

In this article, we’ll be examining six examples of usability testing being conducted with substantial results.

Conducting usability testing takes only seven simple steps and does not have to require a massive budget. Yet it can achieve remarkable results for companies across all industries.

If you’re someone who cannot be convinced by theory alone, this is the guide for you. These are tried-and-tested case studies from well-known companies that showcase the true power of a successful usability test.

Here are the usability testing examples and case studies we’ll be covering in this article:

- Ryanair

- McDonald’s

- SoundCloud

- AutoTrader.com

- Halo: Combat Evolved

Example #1: Ryanair

Ryanair is one of the world’s largest airline groups, carrying 152 million passengers each year. In 2014, the company launched Ryanair Labs, a digital innovation hub seeking to “reinvent online traveling”. To make this dream a reality, they went on a recruiting spree that resulted in a team of 200+ members. This team included user experience specialists, data analysts, software developers, and digital marketers – all working towards a common goal of improving the user experience of the Ryanair website.

What made matters more complicated, however, is that Ryanair’s website and app together received 1 billion visits per year. Working with a website this large, combined with the paper-thin profit margins of around 5% for the airline industry, Ryanair had no room for errors. To make matters even more stressful, one of the first missions for the new team included launching an entirely new website with a superior user experience.

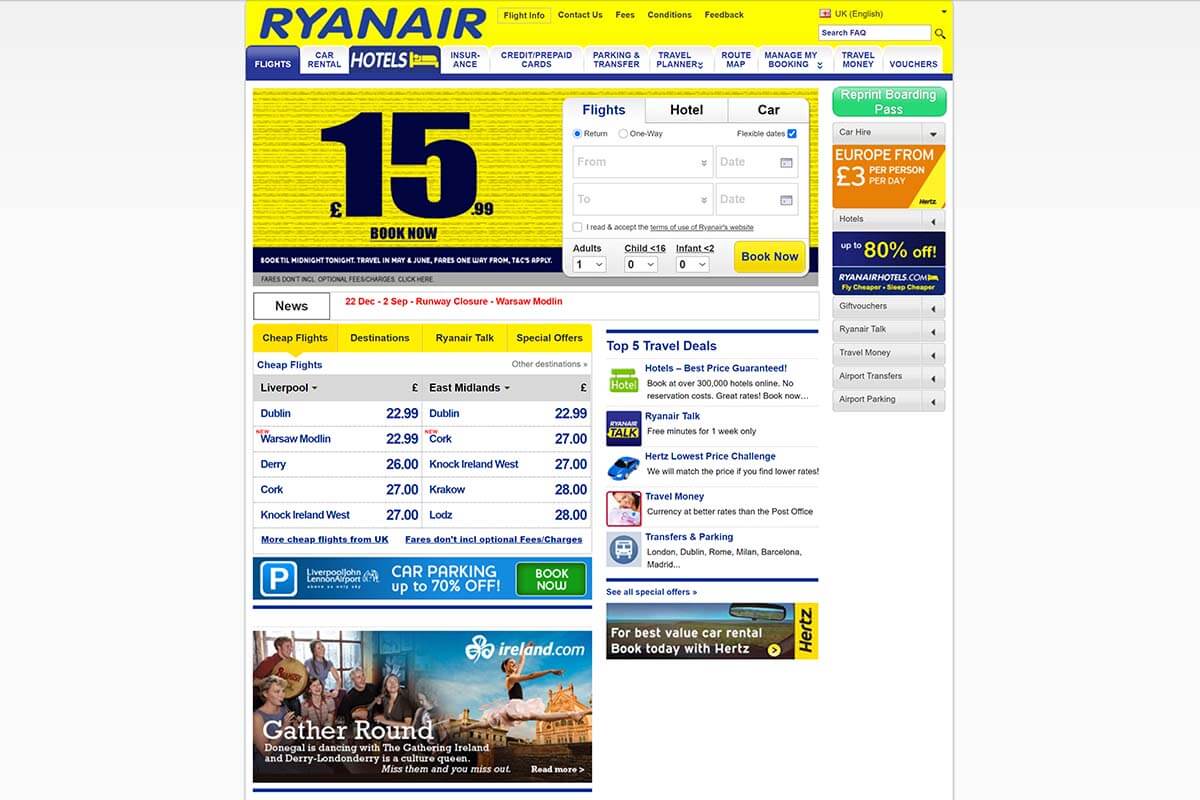

To give you a visual idea of what they were up against, take a look at their old website design:

Not great, not terrible. But the website undoubtedly needed a redesign for the 21st century.

This is what the Ryanair team set out to accomplish:

- Reducing the number of steps needed to book a flight on the website;

- Allowing customers to store their travel documents and payment cards on the website;

- Delivering a better mobile device user experience for both the website and app.

With these goals in mind, they chose remote and unmoderated usability testing types for their user tests. This by itself was a change for the team, as the Ryanair team had relied on in-lab, face-to-face testing until that point.

By collaborating with the UX agency UserZoom, however, new opportunities opened up for Ryanair. With UserZoom’s massive roster of user testers, Ryanair could access large amounts of qualitative and quantitative usability data. Data that they badly needed during the design process of the new website.

By going with remote unmoderated usability testing, the Ryanair team managed to:

- Reduce the time spent on usability testing;

- Conduct simultaneous usability tests with hundreds of users and without geographical barriers;

- Increase the overall reach and scale of the tests;

- Carry out tests across many devices, operating systems, and multiple focus groups.

With continuous user testing, the new website was taken through alpha and beta testing in 2015. The end result of all this work was the vastly improved look, functionality, and user experience of the new website.

Even before launch, Ryanair knew that the new website was superior. Usability tests had shown that to be the case and they had no need to rely on “educated guesses”. This usability testing example demonstrates that a well-executed testing plan can give remarkable results.

Source: Ryanair case study by UserZoom

Example #2: McDonald’s

McDonald’s is one of the world’s largest fast-food restaurant chains, with a staggering 62 million daily customers. Yet, McDonald’s was late to embrace the mobile revolution as their smartphone app launched rather recently – in August 2015. In comparison, Starbucks’ smartphone app was already a booming success and accounted for 20% of its overall revenue in 2015.

Considering the competition, McDonald’s had some catching up to do. Before the launch of their app in the UK, they decided to hire UK-based SimpleUsability to identify any usability problems before release. The test plan involved conducting 20 usability tests, where the task scenarios covered the entire customer journey from end to end. In addition to that, the test plan included 225 end-user interviews.

Not exactly a large-scale usability study considering the massive size of McDonald’s, but it turned out to be valuable nonetheless. A number of usability issues were detected during the study:

- Poor visibility and interactivity of the call-to-action buttons;

- Communication problems between restaurants and the smartphone app;

- Lack of order customization and favoriting impaired the overall user experience.

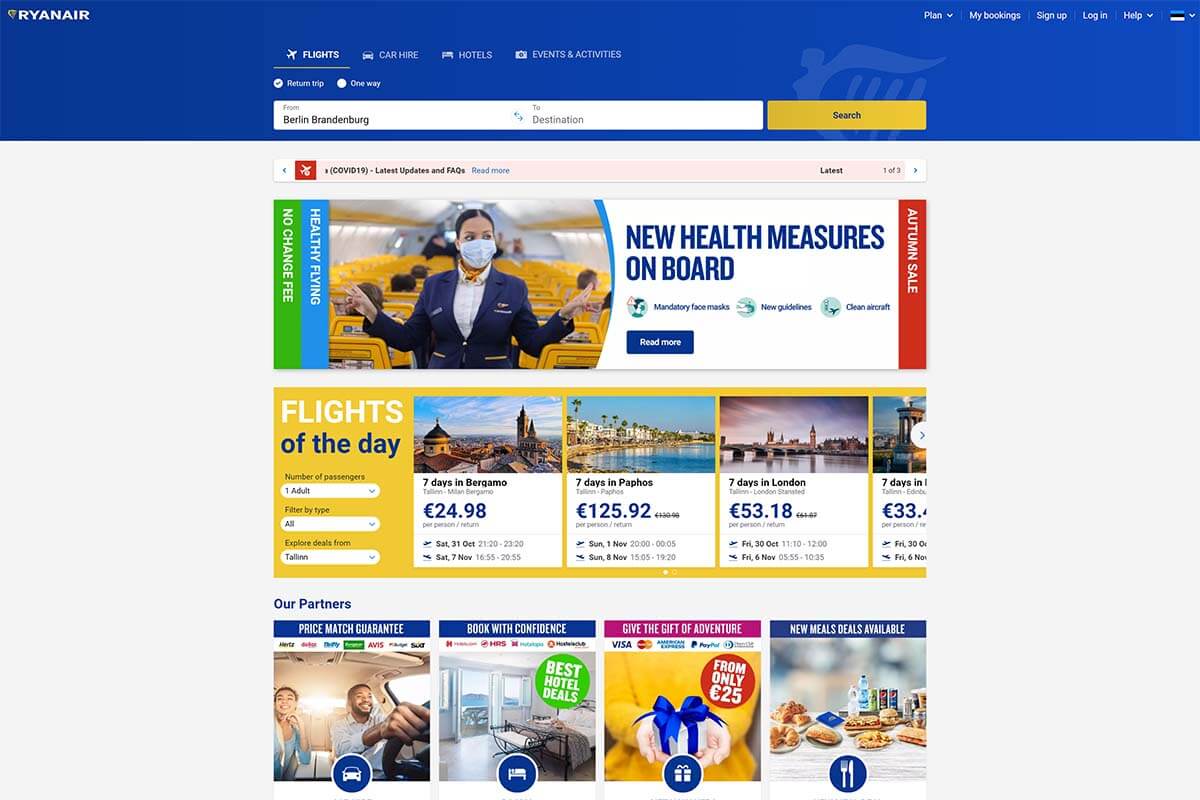

Here’s what the McDonald’s mobile app looks like today:

This case study demonstrates that investing even a tiny percentage of a company’s resources into usability testing can result in meaningful insights.

Source: McDonald’s case study by SimpleUsability

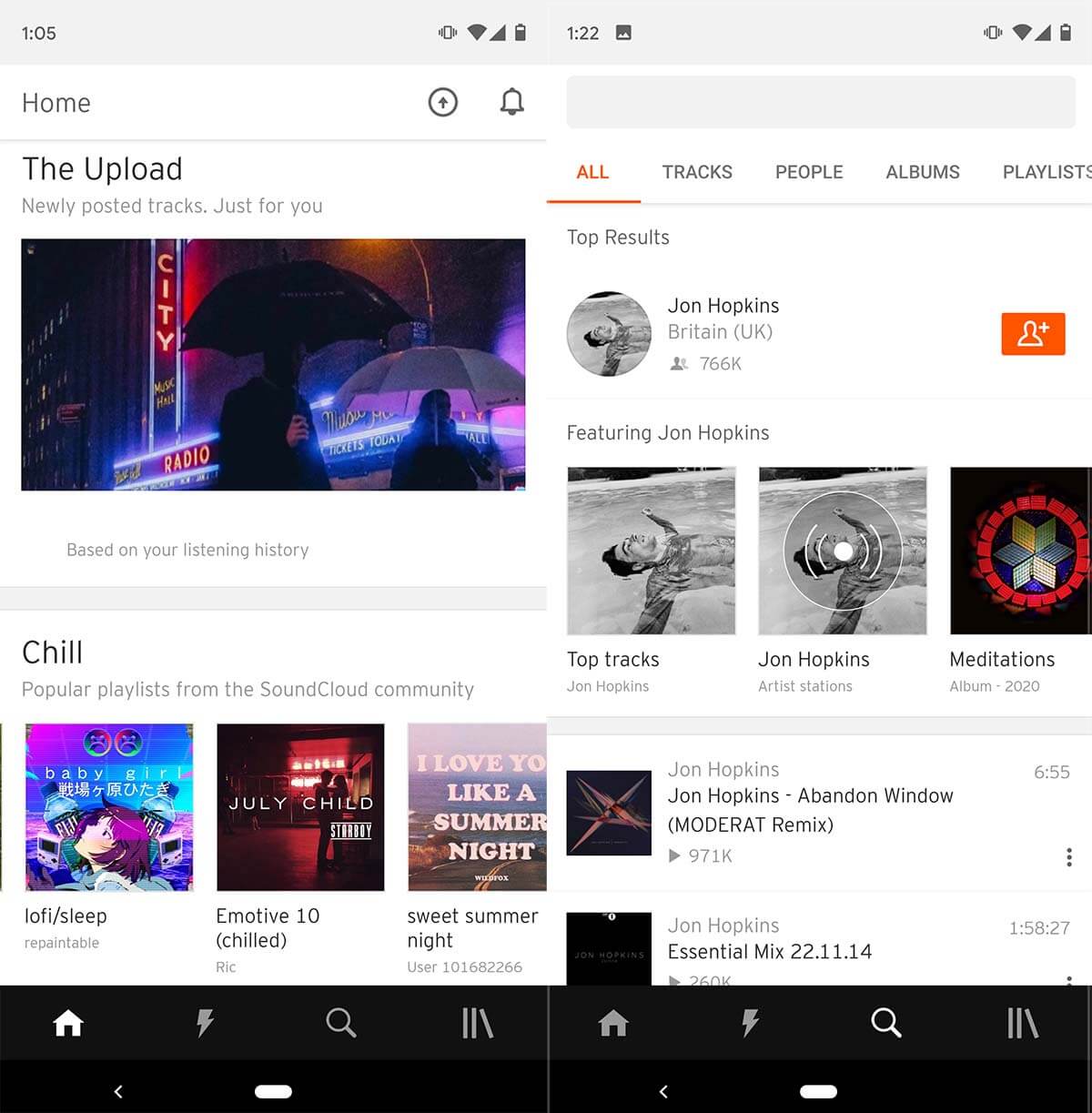

Example #3: SoundCloud

SoundCloud is the world’s largest music and audio distribution platform, with over 175 million unique monthly listeners. In 2019, SoundCloud hired test IO, a Berlin-based usability testing agency, to conduct continuous usability testing for the SoundCloud mobile app. With SoundCloud’s rigorous development schedule, the company needed regular human user testers to make sure that all new updates work across all devices and OS versions.

The key research objectives for SoundCloud’s regular usability studies were to:

- Provide a user-friendly listening experience for mobile app users;

- Identify and fix software bugs before wide release;

- Improve the mobile app development cycle.

In the very first usability tests, more than 150 usability issues (including 11 critical issues) were discovered. These issues likely wouldn’t have been discovered through internal bug testing. That is because the user testers experimented on the app from a plethora of devices and geographical locations (144 devices and 22 countries). Without remote usability testing, a testing scale as large as this would have been very difficult and expensive to achieve.

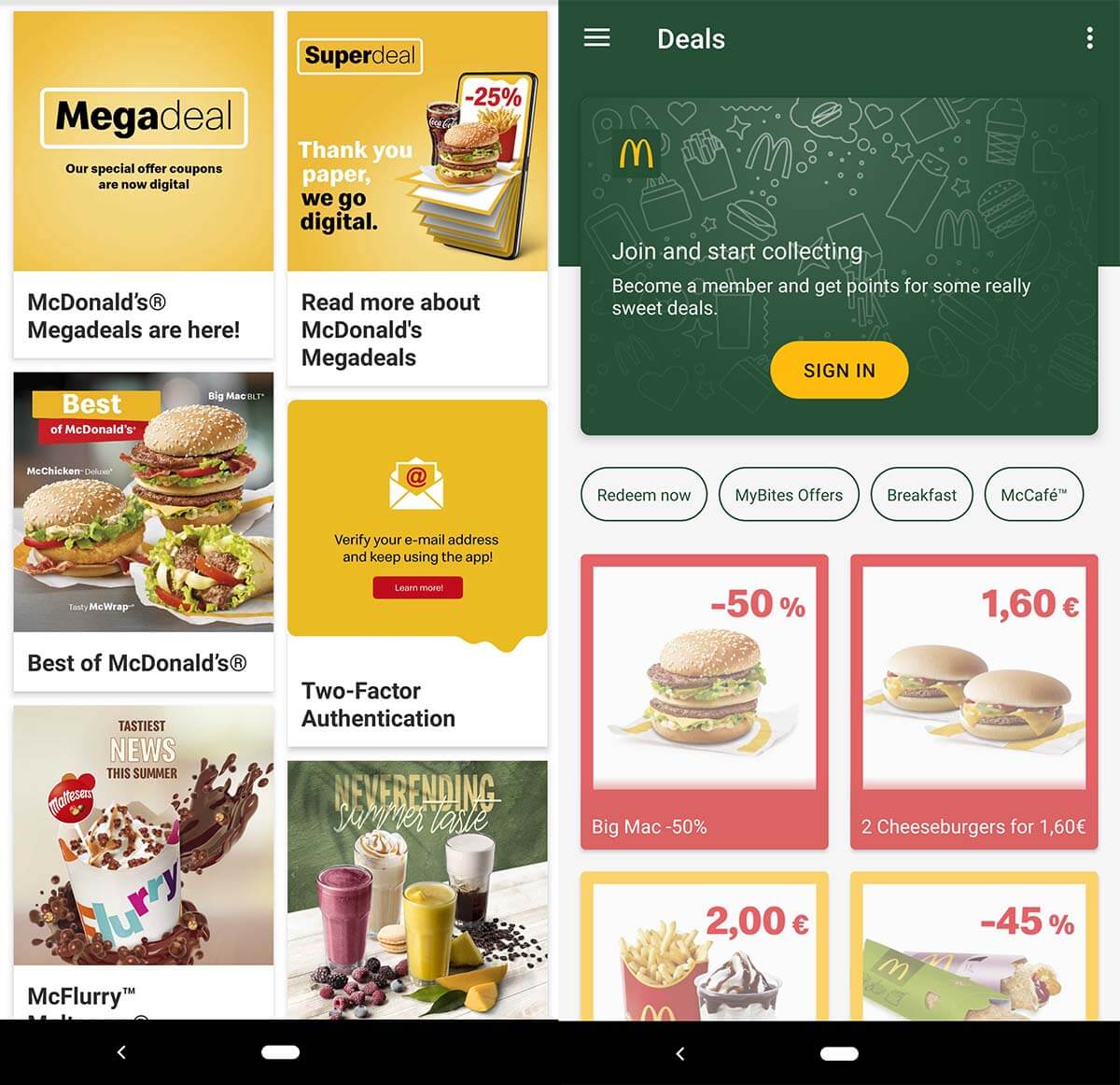

Today, SoundCloud’s mobile app looks like this:

This case study demonstrates the power of regular usability testing in products with frequent updates.

Source: SoundCloud case study (.pdf) by test IO

Example #4: AutoTrader.com

AutoTrader.com is one of the world’s largest online marketplaces for buying and selling used cars, with over 28 million monthly visitors . The mission of AutoTrader’s website is to empower car shoppers in the researching process by giving them all the tools necessary to make informed decisions about vehicle purchases.

Sounds fantastic.

However, with competitors such as CarGurus gaining increasing amounts of market share in the online car shopping industry, AutoTrader had to reinvent itself to stay competitive.

In e-commerce, competitors with a superior website can gain massive followings in an instant. Fifty years ago this was not the case: well-established car marketplaces had massive car parks all over the country, and a newcomer would have had few ways to compete.

Nowadays, however, it’s all about user experience. Digital shoppers will flock to whichever site offers a better user experience. Websites unwilling or unable to improve their user experience over time will get left in the dust. No matter how big or small they are.

Going back to AutoTrader, the majority of its website traffic comes from organic Google search, meaning that in addition to website usability, search engine optimization (SEO) is a major priority for the company. According to John Mueller from Google, changing the layout of a website can affect rankings, and that is why AutoTrader had to be careful with making any large-scale changes to their website.

AutoTrader did not have a large team of user researchers nor a massive budget dedicated to usability testing. But they did have Bradley Miller – Senior User Experience Researcher at the company. To test the usability of AutoTrader, Miller decided to partner with UserTesting.com to conduct live user interviews with AutoTrader users.

Through these live user interviews, Miller was able to:

- Find and connect with target personas;

- Communicate with car buyers from across the country;

- Reduce the costs of conducting usability tests while increasing the insights gained.

From these remote usability live interviews, Miller learned that the customer journey almost always begins from a single source: search engines. Here, it’s important to note that search engines rarely direct users to the homepage. Instead, they drive traffic to the inner pages of websites. In the case of AutoTrader, for example, only around 20% of search engine traffic goes to the homepage (data from SEMrush).

These insights helped AutoTrader redesign their inner pages to better match the customer journey. They no longer assumed that any inner page visitor already has a greater contextual knowledge of the website. Instead, they started to treat each page as if it’s the initial point of entry by providing more contextual information right then and there inside the inner page.

This usability testing example demonstrates not only the power of user interviews but also the importance of understanding your customer journey and SEO.

Source: AutoTrader case study by UserTesting.com

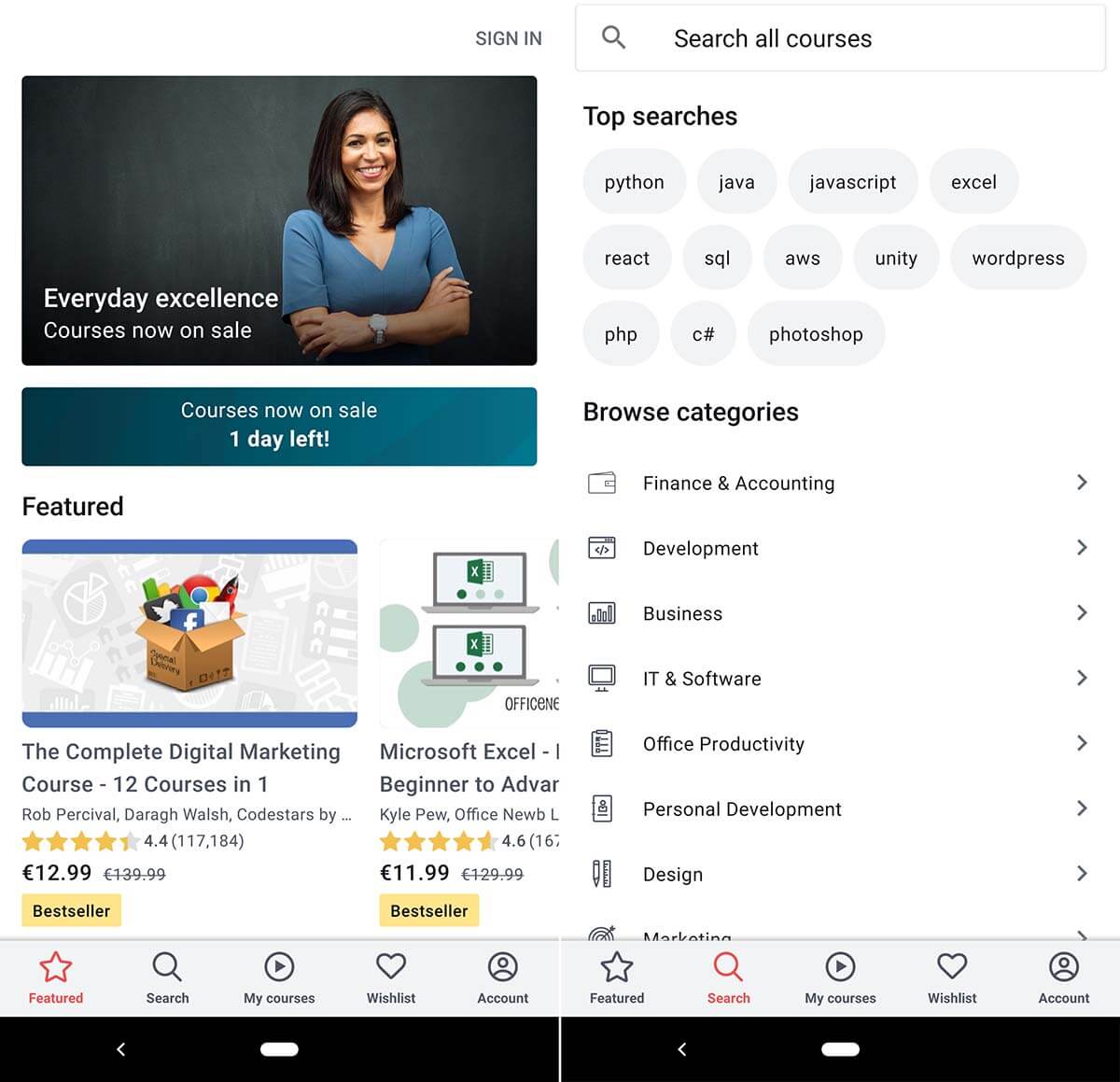

Example #5: Udemy

Udemy is one of the world’s largest online learning platforms with over 40 million students across the world. The e-learning giant also has a massively popular smartphone app, and the usability testing example in question was aimed at the smartphone users of Udemy.

To find out when, where, and why Udemy users chose to opt for the mobile app rather than the desktop version, Udemy conducted user tests. As Udemy is a 100% digital company, they chose fully remote unmoderated user testing as their testing method.

Test participants were asked to take small videos showing where they were located and what tasks they were focused on at the time of learning and recording.

What the user researchers found was that their initial theory of “users prefer using the mobile app while on the go” was false. Instead, what they found was that the majority of mobile app users were stationary. Udemy users, for various reasons, used the mobile app at home on the couch, or in a cafeteria. The key findings of this user test were utilized for the next year’s product and feature development.

This is what Udemy’s mobile app looks like today:

This usability testing case study demonstrates that a company’s perception of target audience behavior does not always match the behavior of the real end-users. And, that is why user testing is crucial.

Source: Udemy case study by UserTesting.com

Example #6: Halo: Combat Evolved

“Halo: Combat Evolved” was the first video game in the massively popular Halo franchise. It was developed by Bungie and published by Microsoft Game Studios in 2001. Within 10 years after its release, the Halo games sold more than 46 million copies worldwide and generated Microsoft more than $5 billion in video game and hardware sales. Owing it all to the usability test we’re about to discuss may be a bit of a stretch, but usability testing the game during development was undeniably one of the factors that helped the franchise take off like a rocket.

In this usability study, the Halo team gathered a focus group of console gamers to try out their game’s prototype to see if they had fun playing the game. And, if they did not have fun – they wanted to find out what prevented them from doing so.

In the usability sessions, the researchers placed test subjects (players) in a large outdoor environment with enemies waiting for them across the open space.

The designers of the game expected the players to sprint closer towards the enemies, sparking a massive battle full of action and excitement. But the test participants had a different plan in mind. Instead of putting themselves in danger by sprinting closer, they would stay at a maximum distance from the enemies and shoot from far across the outdoor space. While this was a safe and effective strategy, it proved to be rather uneventful and boring for the players.

To entice players to enjoy combat up close, the user researchers decided that changes would have to be made. Their solution – changing the size and color of the aiming indicator in the center of the screen to notify players when they were too far away from enemies.

Here, you can see the finalized aiming indicator in action:

Subsequent usability tests proved these changes to be effective, as the majority of user testers now engaged in combat from a closer distance.

User testing is not restricted to any particular industry, OS, or platform. Testing user experience is an invaluable tool for any product – not just for websites or mobile apps.

This example of usability testing from the video game industry shows that players (users) will optimize the fun out of a game if given the chance. It’s up to the designers to bring the fun back through well-designed game mechanics and notifications.

Source: “Designing for Fun – User-Testing Case Studies” by Randy J. Pagulayan

The Beginner’s Guide to Usability Testing [+ Sample Questions]

Published: July 28, 2021

In practically any discipline, it's a good idea to have others evaluate your work with fresh eyes, and this is especially true in user experience and web design. Otherwise, your partiality for your own work can skew your perception of it. Learning directly from the people that your work is actually for — your users — is what enables you to craft the best user experience possible.

UX and design professionals leverage usability testing to get user feedback on their product or website’s user experience all the time. In this post, you'll learn:

- What usability testing is

- Its purpose and goals

- Scenarios where it can work

- Real-life examples and case studies

- How to conduct one of your own

- Scripted questions you can use along the way

What is usability testing?

Usability testing is a method of evaluating a product or website’s user experience. By testing the usability of their product or website with a representative group of their users or customers, UX researchers can determine if their actual users can easily and intuitively use their product or website.

UX researchers will usually conduct usability studies on each iteration of their product from its early development to its release.

During a usability study, the moderator asks participants in their individual user session to complete a series of tasks while the rest of the team observes and takes notes. By watching their actual users navigate their product or website and listening to their praises and concerns about it, they can see when the participants can quickly and successfully complete tasks and where they’re enjoying the user experience, encountering problems, and experiencing confusion.

After conducting their study, they’ll analyze the results and report any interesting insights to the project lead.



What is the purpose of usability testing?

Usability testing allows researchers to uncover any problems with their product's user experience, decide how to fix these problems, and ultimately determine if the product is usable enough.

Identifying and fixing these early issues saves the company both time and money: Developers don’t have to overhaul the code of a poorly designed product that’s already built, and the product team is more likely to release it on schedule.

Benefits of Usability Testing

Usability testing has five major advantages over the other methods of examining a product's user experience (such as questionnaires or surveys):

- Usability testing provides an unbiased, accurate, and direct examination of your product or website’s user experience. By testing its usability on a sample of actual users who are detached from the amount of emotional investment your team has put into creating and designing the product or website, their feedback can resolve most of your team’s internal debates.

- Usability testing is convenient. To conduct your study, all you have to do is find a quiet room and bring in portable recording equipment. If you don’t have recording equipment, someone on your team can just take notes.

- Usability testing can tell you what your users do on your site or product and why they take these actions.

- Usability testing lets you address your product’s or website’s issues before you spend a ton of money creating something that ends up having a poor design.

- For your business, intuitive design boosts customer usage and their results, driving demand for your product.

Usability Testing Scenario Examples

Usability testing sounds great in theory, but what value does it provide in practice? Here's what it can do to actually make a difference for your product:

1. Identify points of friction in the usability of your product.

As Brian Halligan said at INBOUND 2019, "Dollars flow where friction is low." This is just as true in UX as it is in sales or customer service. The more friction your product has, the more reason your users will have to find something that's easier to use.

Usability testing can uncover points of friction from customer feedback.

For example: "My process begins in Google Drive. I keep switching between windows and making multiple clicks just to copy and paste from Drive into this interface."

Even though the product team may have had that task in mind when they created the tool, seeing it in action and hearing the user's frustration uncovered a use case that the tool didn't compensate for. It might lead the team to solve for this problem by creating an easy import feature or way to access Drive within the interface to reduce the number of clicks the user needs to make to accomplish their task.

2. Stress test across many environments and use cases.

Our products don't exist in a vacuum, and sometimes development environments are unable to compensate for all the variables. Getting the product out and tested by users can uncover bugs that you may not have noticed while testing internally.

For example: "The check boxes disappear when I click on them."

Let's say that the team investigates why this might be, and they discover that the user is on a browser that's not commonly used (or a browser version that's outdated).

If the developers only tested across the browsers used in-house, they may have missed this bug, and it could have resulted in customer frustration.

3. Provide diverse perspectives from your user base.

While individuals in our customer bases have a lot in common (in particular, the things that led them to need and use our products), each individual is unique and brings a different perspective to the table. These perspectives are invaluable in uncovering issues that may not have occurred to your team.

For example: "I can't find where I'm supposed to click."

Upon further investigation, it's possible that this feedback came from a user who is color blind, leading your team to realize that the color choices did not create enough contrast for this user to navigate properly.

Insights from diverse perspectives can lead to design, architectural, copy, and accessibility improvements.

4. Give you clear insights into your product's strengths and weaknesses.

You likely have competitors in your industry whose products are better than yours in some areas and worse than yours in others. These variations in the market lead to competitive differences and opportunities. User feedback can help you close the gap on critical issues and identify what positioning is working.

For example: "This interface is so much easier to use and more attractive than [competitor product]. I just wish that I could also do [task] with it."

Two scenarios are possible based on that feedback:

- Your product can already accomplish the task the user wants. You just have to make it clear that the feature exists by improving copy or navigation.

- You have a really good opportunity to incorporate such a feature in future iterations of the product.

5. Inspire you with potential future additions or enhancements.

Speaking of future iterations, that brings us to the next example of how usability testing can make a difference for your product: The feedback that you gather can inspire future improvements to your tool.

It's not just about rooting out issues but also envisioning where you can go next that will make the most difference for your customers. And who best to ask but your prospective and current customers themselves?

Usability Testing Examples & Case Studies

Now that you have an idea of the scenarios in which usability testing can help, here are some real-life examples of it in action:

1. User Fountain + Satchel

Satchel is a developer of education software, and their goal was to improve the experience of the site for their users. Consulting agency User Fountain conducted a usability test focusing on one question: "If you were interested in Satchel's product, how would you progress with getting more information about the product and its pricing?"

During the test, User Fountain noted significant frustration as users attempted to complete the task, particularly when it came to locating pricing information. Only 80% of users were successful.


This led User Fountain to create the hypothesis that a "Get Pricing" link would make the process clearer for users. From there, they tested a new variation with such a link against a control version. The variant won, resulting in a 34% increase in demo requests.

By testing a hypothesis based on real feedback, friction was eliminated for the user, bringing real value to Satchel.

2. Kylie.Design + Digi-Key

Ecommerce site Digi-Key approached consultant Kylie.Design to uncover which site interactions had the highest success rates and what features those interactions had in common.

They conducted more than 120 tests and recorded:

- Click paths from each user

- Which actions were most common

- The success rates for each

This as well as the written and verbal feedback provided by participants informed the new design, which resulted in increasing purchaser success rates from 68.2% to 83.3%.
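When you read before-and-after figures like these, it helps to separate the percentage-point change from the relative improvement, since the two are easy to confuse. The snippet below is a minimal sketch of that arithmetic using the Digi-Key success rates quoted above; the function name `rate_change` is made up for illustration and is not part of the case study.

```python
from typing import Tuple


def rate_change(before: float, after: float) -> Tuple[float, float]:
    """Compare two rates given in percent.

    Returns (percentage-point change, relative change in percent).
    """
    if before <= 0:
        raise ValueError("before must be a positive percentage")
    point_change = after - before
    relative_change = point_change / before * 100
    return point_change, relative_change


# Success rates quoted in the Digi-Key example above.
points, relative = rate_change(68.2, 83.3)
print(f"{points:.1f} percentage points, {relative:.1f}% relative improvement")
```

For these numbers, the rise is about 15 percentage points, which works out to roughly a 22% relative improvement over the original success rate.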

In essence, Digi-Key was able to identify their most successful features and double-down on them, improving the experience and their bottom line.

3. Sparkbox + An Academic Medical Center

An academic medical center in the midwest partnered with consulting agency Sparkbox to improve the patient experience on their homepage, where some features were suffering from low engagement.

Sparkbox conducted a usability study to determine what users wanted from the homepage and what didn't meet their expectations. From there, they were able to propose solutions to increase engagement.

For example, one key action was the ability to access electronic medical records. The new design based on user feedback increased the success rate from 45% to 94%.

This is a great example of putting the user's pains and desires front-and-center in a design.

The 9 Phases of a Usability Study

1. Decide which part of your product or website you want to test.

Do you have any pressing questions about how your users will interact with certain parts of your design, like a particular interaction or workflow? Or are you wondering what users will do first when they land on your product page? Gather your thoughts about your product or website’s pros, cons, and areas of improvement, so you can create a solid hypothesis for your study.

2. Pick your study’s tasks.

Your participants' tasks should be your user’s most common goals when they interact with your product or website, like making a purchase.

3. Set a standard for success.

Once you know what to test and how to test it, make sure to set clear criteria to determine success for each task. For instance, when I was in a usability study for HubSpot’s Content Strategy tool, I had to add a blog post to a cluster and report exactly what I did. Setting a threshold of success and failure for each task lets you determine if your product's user experience is intuitive enough or not.
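One way to make a success standard concrete is to write it down as numbers before the sessions start. The sketch below assumes two hypothetical criteria, a minimum completion rate and a maximum median time on task. The thresholds, the `TaskResult` fields, and the sample sessions are all invented for illustration, so swap in whatever your team agrees on.

```python
from dataclasses import dataclass
from statistics import median
from typing import List


@dataclass
class TaskResult:
    participant: str  # anonymized participant label
    completed: bool   # finished the task without help
    seconds: float    # time spent on the task


def evaluate_task(results: List[TaskResult],
                  min_success_rate: float = 0.8,
                  max_median_seconds: float = 120.0) -> dict:
    """Check one task against pre-agreed (hypothetical) success criteria."""
    if not results:
        raise ValueError("no results to evaluate")
    success_rate = sum(r.completed for r in results) / len(results)
    # Only time successful attempts; failed attempts would distort the median.
    completed_times = [r.seconds for r in results if r.completed]
    median_time = median(completed_times) if completed_times else None
    passed = (success_rate >= min_success_rate
              and median_time is not None
              and median_time <= max_median_seconds)
    return {"success_rate": success_rate,
            "median_seconds": median_time,
            "passed": passed}


# Made-up sessions for a task such as "add a blog post to a cluster".
sessions = [
    TaskResult("P1", True, 95.0),
    TaskResult("P2", True, 140.0),
    TaskResult("P3", False, 300.0),
    TaskResult("P4", True, 80.0),
    TaskResult("P5", True, 110.0),
]
print(evaluate_task(sessions))
```

Deciding the thresholds up front keeps the team from adjusting the definition of success after seeing the results.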

4. Write a study plan and script.

At the beginning of your script, you should include the purpose of the study, if you’ll be recording, some background on the product or website, questions to learn about the participants’ current knowledge of the product or website, and, finally, their tasks. To make your study consistent, unbiased, and scientific, moderators should follow the same script in each user session.

5. Delegate roles.

During your usability study, the moderator has to remain neutral, carefully guiding the participants through the tasks while strictly following the script. Whoever on your team is best at staying neutral, not giving into social pressure, and making participants feel comfortable while pushing them to complete the tasks should be your moderator.

Note-taking during the study is also just as important. If there’s no recorded data, you can’t extract any insights that’ll prove or disprove your hypothesis. Your team’s most attentive listener should be your note-taker during the study.

6. Find your participants.

Screening and recruiting the right participants is the hardest part of usability testing. Most usability experts suggest you should only test five participants during each study, but your participants should also closely resemble your actual user base. With such a small sample size, it’s hard to replicate your actual user base in your study.
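The five-participant guideline is usually traced back to a problem-discovery model published by Jakob Nielsen and Tom Landauer, in which the share of usability problems found after n sessions is 1 - (1 - L)^n. The sketch below assumes their often-quoted average of L = 0.31 (each participant reveals about 31% of the problems). That figure comes from their research rather than from this post, and your own product may behave differently.

```python
def share_of_problems_found(participants: int, detection_rate: float = 0.31) -> float:
    """Estimated share of usability problems uncovered after a number of sessions.

    Implements 1 - (1 - L)^n, where L (detection_rate) is the assumed chance
    that a single participant runs into any given problem.
    """
    if participants < 0:
        raise ValueError("participants must be non-negative")
    if not 0.0 <= detection_rate <= 1.0:
        raise ValueError("detection_rate must be between 0 and 1")
    return 1 - (1 - detection_rate) ** participants


for n in (1, 3, 5, 10):
    print(f"{n} participants: {share_of_problems_found(n):.0%} of problems found")
```

Under that assumption, five participants surface roughly 84% of the problems, and each additional session adds less than the one before, which is why many teams prefer several small rounds over one large study.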

To recruit the ideal participants for your study, create as detailed and specific a persona as you possibly can and incentivize people who match it to participate with a gift card or another monetary reward.

Recruiting colleagues from other departments who would potentially use your product is also another option. But you don’t want any of your team members to know the participants because their personal relationship can create bias -- since they want to be nice to each other, the researcher might help a user complete a task or the user might not want to constructively criticize the researcher’s product design.

7. Conduct the study.

During the actual study, you should ask your participants to complete one task at a time, without your help or guidance. If the participant asks you how to do something, don’t say anything. You want to see how long it takes users to figure out your interface.

Asking participants to “think out loud” is also an effective tactic -- you’ll know what’s going through a user’s head when they interact with your product or website.

After they complete each task, ask for their feedback, like if they expected to see what they just saw, if they would’ve completed the task if it wasn’t a test, if they would recommend your product to a friend, and what they would change about it. This qualitative data can pinpoint more pros and cons of your design.

8. Analyze your data.

You’ll collect a ton of qualitative data after your study. Analyzing it will help you discover patterns of problems, gauge the severity of each usability issue, and provide design recommendations to the engineering team.

When you analyze your data, make sure to pay attention to both the users’ performance and their feelings about the product. It’s not unusual for a participant to quickly and successfully achieve your goal but still feel negatively about the product experience.
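To turn a pile of session notes into patterns, you can tally how many participants hit each issue and how severe it was at its worst. The sketch below uses made-up observations, a hypothetical 1 to 4 severity scale, and a simple frequency times severity score for ranking. That score is a common rule of thumb and an assumption here, not a method prescribed by this guide.

```python
from collections import defaultdict
from typing import List, Tuple

# (participant, issue label, severity from 1 = cosmetic to 4 = blocker).
# All values are invented for illustration.
observations = [
    ("P1", "pricing link hard to find", 3),
    ("P2", "pricing link hard to find", 3),
    ("P3", "pricing link hard to find", 4),
    ("P2", "checkbox disappears on click", 4),
    ("P4", "low-contrast button", 1),
    ("P5", "low-contrast button", 1),
]


def rank_issues(notes: List[Tuple[str, str, int]], total_participants: int):
    """Rank issues by (share of participants affected) x (worst severity seen)."""
    affected = defaultdict(set)
    worst = {}
    for participant, issue, severity in notes:
        affected[issue].add(participant)  # count each participant once per issue
        worst[issue] = max(severity, worst.get(issue, 0))
    rows = []
    for issue, people in affected.items():
        frequency = len(people) / total_participants
        rows.append((issue, frequency, worst[issue], frequency * worst[issue]))
    return sorted(rows, key=lambda row: row[3], reverse=True)


for issue, frequency, severity, score in rank_issues(observations, total_participants=5):
    print(f"{issue}: {frequency:.0%} affected, severity {severity}, score {score:.1f}")
```

A ranking like this is only a starting point for discussion; pair it with the participants' own words so the team sees both what happened and how it felt.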

9. Report your findings.

After extracting insights from your data, report the main takeaways and lay out the next steps for improving your product or website’s design and the enhancements you expect to see during the next round of testing.

The 3 Most Common Types of Usability Tests

1. Hallway/Guerilla Usability Testing

This is where you set up your study somewhere with a lot of foot traffic. It allows you to ask randomly-selected people who have most likely never even heard of your product or website -- like passers-by -- to evaluate its user-experience.

2. Remote/Unmoderated Usability Testing

Remote/unmoderated usability testing has two main advantages: it uses third-party software to recruit target participants for your study, so you can spend less time recruiting and more time researching. It also allows your participants to interact with your interface by themselves and in their natural environment -- the software can record video and audio of your user completing tasks.

Letting participants interact with your design in their natural environment with no one breathing down their neck can give you more realistic, objective feedback. When you’re in the same room as your participants, it can prompt them to put more effort into completing your tasks since they don’t want to seem incompetent around an expert. Your perceived expertise can also lead them to try to please you instead of being honest when you ask for their opinion, skewing their reactions and feedback.

3. Moderated Usability Testing

Moderated usability testing also has two main advantages: interacting with participants in person or through a video call lets you ask them to elaborate on their comments if you don’t understand them, which is impossible to do in an unmoderated usability study. You’ll also be able to help your users understand the task and keep them on track if your instructions don’t initially register with them.

Usability Testing Script & Questions

Following one script or even a template of questions for every one of your usability studies wouldn't make any sense -- each study's subject matter is different. You'll need to tailor your questions to the things you want to learn, but most importantly, you'll need to know how to ask good questions.

1. When you [action], what's the first thing you do to [goal]?

Questions such as this one give insight into how users are inclined to interact with the tool and what their natural behavior is.

Julie Fischer, one of HubSpot's Senior UX researchers, gives this advice: "Don't ask leading questions that insert your own bias or opinion into the participants' mind. They'll end up doing what you want them to do instead of what they would do by themselves."

For example, "Find [x]" is better than "Are you able to easily find [x]?" The latter inserts connotation that may affect how they use the product or answer the question.

2. How satisfied are you with the [attribute] of [feature]?

Avoid leading the participants by asking questions like "Is this feature too complicated?" Instead, gauge their satisfaction on a Likert scale that provides a number range from highly unsatisfied to highly satisfied. This will provide a less biased result than leading them to a negative answer they may not otherwise have had.
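Once the ratings are in, a short summary makes them easier to compare across features. The sketch below assumes a 5-point scale (the post only says the range runs from highly unsatisfied to highly satisfied) and reports the mean, the distribution, and the share of participants who chose one of the top two options. The labels and sample responses are invented for illustration.

```python
from collections import Counter
from statistics import mean
from typing import List

# Assumed 5-point scale; adjust the labels if your scale has more or fewer points.
LABELS = {
    1: "Highly unsatisfied",
    2: "Unsatisfied",
    3: "Neutral",
    4: "Satisfied",
    5: "Highly satisfied",
}


def summarize_likert(responses: List[int]) -> dict:
    """Summarize satisfaction ratings collected on the 1-5 scale above."""
    if not responses:
        raise ValueError("no responses to summarize")
    if any(r not in LABELS for r in responses):
        raise ValueError("responses must be integers from 1 to 5")
    counts = Counter(responses)
    distribution = {LABELS[score]: counts.get(score, 0) for score in LABELS}
    top_two_share = (counts[4] + counts[5]) / len(responses)
    return {"mean": mean(responses),
            "distribution": distribution,
            "top_two_share": top_two_share}


ratings = [5, 4, 4, 2, 3, 5, 4, 1]  # made-up answers from eight participants
print(summarize_likert(ratings))
```

Looking at the distribution alongside the mean matters because an average in the middle can hide a split between very satisfied and very unsatisfied users.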

3. How do you use [feature]?

There may be multiple ways to achieve the same goal or utilize the same feature. This question will help uncover how users interact with a specific aspect of the product and what they find valuable.

4. What parts of [the product] do you use the most? Why?

This question is meant to help you understand the strengths of the product and what about it creates raving fans. This will indicate what you should absolutely keep and perhaps even lead to insights into what you can improve for other features.

5. What parts of [the product] do you use the least? Why?

This question is meant to uncover the weaknesses of the product or the friction in its use. That way, you can rectify any issues or plan future improvements to close the gap between user expectations and reality.

6. If you could change one thing about [feature] what would it be?

Because it's so similar to #5, you may get some of the same answers. However, you'd be surprised about the aspirational things that your users might say here.

7. What do you expect [action/feature] to do?

Here's another tip from Julie Fischer:

"When participants ask 'What will this do?' it's best to reply with the question 'What do you expect it to do?' rather than telling them the answer."

Doing this can uncover user expectation as well as clarity issues with the copy.

Your Work Could Always Use a Fresh Perspective

Letting another person review and possibly criticize your work takes courage -- no one wants a bruised ego. But most of the time, when you allow people to constructively criticize or even rip apart your article or product design, especially when your work is intended to help these people, your final result will be better than you could've ever imagined.

Editor's note: This post was originally published in August 2018 and has been updated for comprehensiveness.


Usability Testing: Everything You Need to Know (Methods, Tools, and Examples)

As you crack into the world of UX design, there’s one thing you absolutely must understand and learn to practice like a pro: usability testing.

Precisely because it’s such a critical skill to master, it can be a lot to wrap your head around. What is it exactly, and how do you do it? How is it different from user testing? What are some actual methods that you can employ?

In this guide, we’ll give you everything you need to know about usability testing—the what, the why, and the how.

Here’s what we’ll cover:

- What is usability testing and why does it matter?

- Usability testing vs. user testing

- Formative vs. summative usability testing

- Attitudinal vs. behavioral research

- Five essential usability testing methods: performance testing, card sorting, tree testing, 5-second test, eye tracking

- How to learn more about usability testing

Ready? Let’s dive in.

1. What is usability testing and why does it matter?

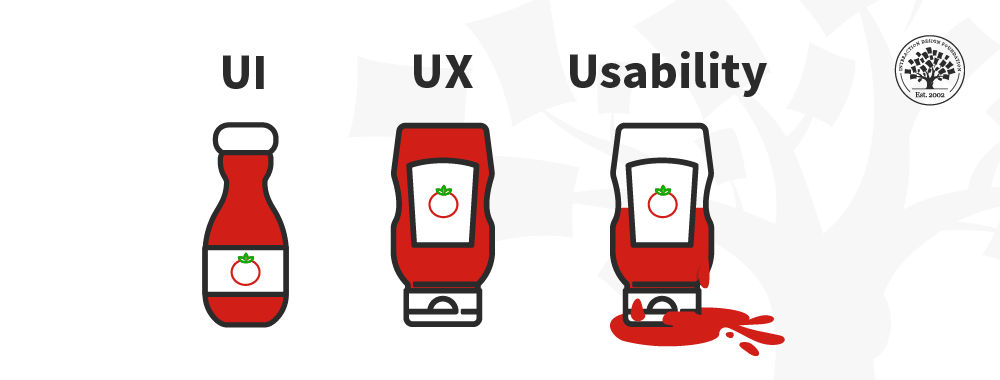

Simply put, usability testing is the process of discovering ways to improve your product by observing users as they engage with the product itself (or a prototype of the product). It’s a UX research method specifically trained on (you guessed it) the usability of your products. And what is usability? Usability is a measure of how easily users can accomplish a given task with your product.

Usability testing, when executed well, uncovers pain points in the user journey and highlights barriers to good usability. It will also help you learn about your users’ behaviors and preferences as these relate to your product, and to discover opportunities to design for needs that you may have overlooked.

You can conduct usability testing at any point in the design process when you’ve turned initial ideas into design solutions, but the earlier the better. Test early and test often! You can conduct some kind of usability testing with low- and high-fidelity prototypes alike, and testing should continue after you’ve got a live, out-in-the-world product.

2. Usability testing vs. user testing

Though they sound similar and share a somewhat similar end goal, usability testing and user testing are two different things. We’ll look at the differences in a moment, but first, here’s what they have in common:

- Both share the end goal of creating a design solution to meet real user needs

- Both take the time to observe and listen to the user to hear from them what needs/pain points they experience

- Both look for feasible ways of meeting those needs or addressing those pain points

User testing essentially asks if this particular kind of user would want this particular kind of product—or what kind of product would benefit them in the first place. It is entirely user-focused.

Usability testing, on the other hand, is more product-focused and looks at users’ needs in the context of an existing product (even if that product is still in prototype stages of development). Usability testing takes your existing product and places it in the hands of your users (or potential users) to see how the product actually works for them—how they’re able to accomplish what they need to do with the product.

3. Formative vs. summative usability testing

Alright! Now that you understand what usability testing is, and what it isn’t, let’s get into the various types of usability testing out there.

There are two broad categories of usability testing that are important to understand: formative and summative. These have to do with when you conduct the testing and what your broad objectives are, that is, what overarching impact the testing should have on your product.

Formative usability testing:

- Is a qualitative research process

- Happens earlier in the design, development, or iteration process

- Seeks to understand what about the product needs to be improved

- Results in qualitative findings and ideation that you can incorporate into prototypes and wireframes

Summative usability testing:

- Is a research process that’s more quantitative in nature

- Happens later in the design, development, or iteration process

- Seeks to understand whether the solutions you are implementing (or have implemented) are effective

- Results in quantitative findings that can help determine broad areas for improvement or specific areas to fine-tune (this can go hand in hand with competitive analysis)
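To make "quantitative findings" concrete, here is a minimal sketch of two measures often reported from summative sessions: task completion rate and median time on task. The session records, field names, and the `task_metrics` helper are all hypothetical, made up for illustration rather than taken from any particular tool.

```python
from statistics import median

# Hypothetical session log: one record per participant for a single task.
sessions = [
    {"participant": "p1", "completed": True, "seconds": 42.0},
    {"participant": "p2", "completed": True, "seconds": 58.5},
    {"participant": "p3", "completed": False, "seconds": 120.0},
    {"participant": "p4", "completed": True, "seconds": 37.5},
]


def task_metrics(sessions):
    """Return the completion rate and the median time among completed attempts."""
    if not sessions:
        raise ValueError("need at least one session")
    completed = [s for s in sessions if s["completed"]]
    return {
        "completion_rate": len(completed) / len(sessions),
        # Time on task is only summarized for participants who finished.
        "median_seconds_when_completed": (
            median(s["seconds"] for s in completed) if completed else None
        ),
    }


metrics = task_metrics(sessions)
print(metrics)
```

Comparing these numbers across design iterations is one way to check whether a change actually made the task easier.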

4. Attitudinal vs. behavioral research

Alongside the timing and purpose of the testing (formative vs. summative), it’s important to understand two broad categories that your research (both your objectives and your findings) will fall into: behavioral and attitudinal.

Attitudinal research is all about what people say: what they think and communicate about your product and how it works. Behavioral research focuses on what people do: how they actually interact with your product and the feelings that surface as a result.

What people say and what people do are often two very different things. These two categories help us define those differences, choose our testing methods more intentionally, and categorize our findings more effectively.

5. Five essential usability testing methods

Some usability testing methods are geared more towards uncovering either behavioral or attitudinal findings; but many have the potential to result in both.

Of the methods you’ll learn about in this section, performance testing has the greatest potential for targeting both—and will perhaps require the greatest amount of thoughtfulness regarding how you approach it.

Naturally, then, we’ll spend a little more time on that method than the other four, though that in no way diminishes their usefulness! Here are the methods we’ll cover:

- Performance testing

- Card sorting

- Tree testing

- 5-second test

- Eye tracking

These are merely five common and/or interesting methods—it is not a comprehensive list of every method you can use to get inside the hearts and minds of your users. But it’s a place to start. So here we go!

In performance testing, you sit down with a user and give them a task (or set of tasks) to complete with the product.

This is often a combination of methods and approaches that will allow you to interview users, see how they use your product, and find out how they feel about the experience afterward. Depending on your approach, you’ll observe them, take notes, and/or ask usability testing questions before, after, or along the way.

Performance testing is by far the most talked-about form of usability testing—especially as it’s often combined with other methods. Performance testing is what most commonly comes to mind in discussions of usability testing as a whole, and it’s what many UX design certification programs focus on—because it’s so broadly useful and adaptive.

While there’s no one right way to conduct performance testing, there are a number of approaches and combinations of methods you can use, and you’ll want to be intentional about it.

It’s a method that you can adapt to your objectives—so make sure you do! Ask yourself what kind of attitudinal or behavioral findings you’re really looking for, how much time you’ll have for each testing session, and what methods or approaches will help you reach your objectives most efficiently.

Performance testing is often combined with user interviews.

Even if you choose not to combine performance testing with user interviews, good performance testing will still involve some degree of questioning and moderating.

Performance testing typically results in a pretty massive chunk of qualitative insights, so you’ll need to devote a fair amount of intention and planning before you jump in.

Maximize the usefulness of your research by being thoughtful about the task(s) you assign and what approach you take to moderating the sessions. As your test participants go about the task(s) you assign, you’ll watch, take notes, and ask questions either during or after the test—depending on your approach.

Four approaches to performance testing

There are four ways you can go about moderating a performance test, and it’s worth understanding and choosing your approach (or combination of approaches) carefully and intentionally. As you choose, take time to consider:

- How much guidance the participant will actually need

- How intently participants will need to focus

- How guidance or prompting from you might affect results or observations

With these things in mind, let’s look at the four approaches.

Concurrent Think Aloud (CTA)

With this approach, you’ll encourage participants to externalize their thought process—to think out loud. Your job during the session will be to keep them talking through what they’re looking for, what they’re doing and why, and what they think about the results of their actions.

A CTA approach often uncovers a lot of nuanced details in the user journey, but if your objectives include anything related to the accuracy or time for task completion, you might be better off with a Retrospective Think Aloud.

Retrospective Think Aloud (RTA)

Here, you’ll allow participants to complete their tasks and recount the journey afterward. They can complete tasks within a more realistic time frame and with a more realistic degree of accuracy, though you’ll certainly miss some nuanced details of participants’ thoughts and feelings.

Concurrent Probing (CP)

With Concurrent Probing, you ask participants about their experience as they’re having it. You prompt them for details on their expectations, reasons for particular actions, and feelings about results.

This approach can be distracting, but when you combine it with CTA, you can let participants complete the tasks and prompt only when you see a particularly interesting aspect of their experience that you’d like to know more about. Again, if accuracy and timing are critical objectives, you might be better off with Retrospective Probing.

Retrospective Probing (RP)

If a participant says or does something interesting as they complete their task(s), you can note it and ask them about it later; this is Retrospective Probing. This approach is very often combined with CTA or RTA to ensure that you’re not missing out on those nuanced details of their experience without distracting them from actually completing the task.

Whew! There’s your quick overview of performance testing. To learn more about it, read to the final section of this article: How to learn more about usability testing.

With this under our belts, let’s move on to our other four essential usability testing methods.

Card sorting is a way of testing the usability of your information architecture. You give users cards labeled with the names and short descriptions of the main items/sections of the product, then ask them to sort the cards into piles according to which items seem to go best together. In open card sorting, users create and name the piles themselves; in closed card sorting, they sort the cards into categories you’ve defined in advance. You can go even further by asking them to sort the piles into larger groups and to name those groups.

Rather than structuring your site or app according to your understanding of the product, card sorting allows the information architecture to mirror the way your users are thinking.
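One common way to read card sorting results is to count how often participants put two cards in the same pile. The sketch below assumes a simple, hypothetical data shape (each participant's sort is a list of piles, each pile a list of card labels); the card names and the `co_occurrence` helper are invented for illustration.

```python
from collections import Counter
from itertools import combinations


def co_occurrence(sorts):
    """Count how many participants placed each pair of cards in the same pile."""
    counts = Counter()
    for piles in sorts:
        for pile in piles:
            # Sort the labels so each pair is always stored in the same order.
            for a, b in combinations(sorted(set(pile)), 2):
                counts[(a, b)] += 1
    return counts


# Hypothetical results from three participants.
sorts = [
    [["Invoices", "Payments"], ["Profile", "Password"]],
    [["Invoices", "Payments", "Profile"], ["Password"]],
    [["Invoices", "Payments"], ["Profile", "Password"]],
]

pairs = co_occurrence(sorts)
for (a, b), n in pairs.most_common():
    print(f"{a} + {b}: grouped together by {n} of {len(sorts)} participants")
```

Pairs that most participants group together are good candidates to live in the same section of your navigation; pairs that rarely co-occur probably belong apart.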

This is a great technique to employ very early in the design process as it is inexpensive and will save the time and expense of making structural adjustments later in the process. And there’s no technology required! If you want to conduct it remotely, though, there are tools like OptimalSort that do this effectively.

Tree testing is a great follow-up to card sorting, but it can be conducted on its own as well. In tree testing, you create a visual information hierarchy (or “tree”) and ask users to complete a task using the tree. For example, you might ask users, “You want to accomplish X with this product. Where do you go to do that?” Then you observe how easily users are able to find what they’re looking for.

This is another great technique to employ early in the design process. It can be conducted with paper prototypes or spreadsheets, but you can also use tools such as TreeJack to accomplish this digitally and remotely.
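If you record tree testing results in a spreadsheet, two simple figures to pull out are how many participants ended at the right place and how many got there without backtracking. This is a rough sketch under assumed inputs: the `TreeTestResult` record, the node labels, and the `summarize` helper are hypothetical and not tied to any specific tool's export format.

```python
from dataclasses import dataclass


@dataclass
class TreeTestResult:
    participant: str
    chosen_node: str   # where the participant said they would go
    backtracked: bool  # True if they went back up the tree at any point


def summarize(results, correct_node):
    """Return overall and direct (no backtracking) success rates for one task."""
    if not results:
        raise ValueError("need at least one result")
    successes = [r for r in results if r.chosen_node == correct_node]
    direct = [r for r in successes if not r.backtracked]
    return {
        "success_rate": len(successes) / len(results),
        "direct_success_rate": len(direct) / len(results),
    }


# Hypothetical results for the task "Where would you update your card details?"
results = [
    TreeTestResult("p1", "Account > Billing", False),
    TreeTestResult("p2", "Account > Billing", True),
    TreeTestResult("p3", "Help > Contact", False),
    TreeTestResult("p4", "Account > Billing", False),
]

summary = summarize(results, correct_node="Account > Billing")
print(summary)
```

A large gap between the two rates suggests that users eventually find the right spot but the labels along the way are sending them down the wrong branch first.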

In the 5-second test, you expose your users to one portion of your product (one screen, probably the top half of it) for five seconds and then interview them to see what they took away regarding:

- The product/page’s purpose and main features or elements

- The intended audience and trustworthiness of the brand

- Their impression of the usability and design of the product

You can conduct this kind of testing in person rather simply, or remotely with tools like UsabilityHub.

Eye tracking may seem somewhat new, but it’s been around for a while, though the tools and technology around it have evolved. Eye tracking on its own isn’t enough to determine usability, but it’s a great complement to your other usability testing measures.

In eye tracking you literally track where most users’ eyes land on the screen you’re designing. The reason this is important is that you want to make sure that the elements users’ eyes are drawn to are the ones that communicate the most important information. This is a difficult one to conduct in any kind of analog fashion, but there are a lot of tools out there that make it simple— CrazyEgg and HotJar are both great places to start.

6. How to learn more about usability testing

There you have it: your 15-minute overview of the what, why, and how of usability testing. But don’t stop here! Usability testing and UX research as a whole have a deeply humanizing impact on the design process. It’s a fascinating field to discover and the result of this kind of work has the power of keeping companies, design teams, and even the lone designer accountable to what matters most: the needs of the end user.

If you’d like to learn more about usability testing and UX research, take the free UX Research for Beginners Course with CareerFoundry. This tutorial is jam-packed with information that will give you a deeper understanding of the value of this kind of testing as well as a number of other UX research methods.

You can also enroll in a UX design course or bootcamp to get a comprehensive understanding of the entire UX design process (of which usability testing and UX research are an integral part). For guidance on the best programs, check out our list of the 10 best UX design certification programs. And if you’ve already started your learning process, and you’re thinking about the job hunt, here are the top 5 UX research interview questions to be ready for.

For further reading about usability testing and UX research, check out these other articles:

- How to conduct usability testing: a step-by-step guide

- What does a UX researcher actually do? The ultimate career guide

- 11 usability heuristics every designer should know

- How to conduct a UX audit

Usability Testing Case Studies: Validate Assumptions and Build Software with Confidence

We define usability and examine some usability testing case studies to demonstrate the benefits.

As we’ve said before, one of the most important benefits of software prototyping is the early ability to conduct usability testing. The truth of the matter is that no one will use your product if it’s not easy and intuitive or if it doesn’t solve a problem that users have in the first place.

The easiest way to make sure your software project meets these requirements is with usability testing, and the most effective way to implement usability testing early in the development process is with a prototype.

What Is Usability Testing?