Linear regression hypothesis testing: Concepts, Examples

In machine learning, linear regression is a predictive modeling technique that models a continuous response variable as a linear combination of explanatory (predictor) variables. When training linear regression models, we rely on hypothesis testing to determine whether there is a relationship between the response and the predictor variables. Two types of hypothesis tests are used with linear regression models: t-tests and F-tests. In other words, two statistics are used to assess whether the data support a linear relationship between the response and the predictor variables: the t-statistic and the F-statistic. As data scientists, we need to determine whether linear regression is the right choice of model for a particular problem, and hypothesis tests on the response and predictor variables help with that. These concepts are often unclear to many data scientists. In this blog post, we discuss linear regression and the hypothesis tests based on t-statistics and F-statistics. We also provide an example to illustrate how these concepts work.


What are linear regression models?

A linear regression model is a function approximation that represents a continuous response variable as a function of one or more predictor variables. When building a linear regression model, the goal is to identify the linear equation that best models the relationship between the response (dependent) variable and one or more predictor (independent) variables.

There are two different kinds of linear regression models. They are as follows:

- Simple or univariate linear regression models: These are linear regression models that describe a linear relationship between one response (dependent) variable and one predictor (independent) variable. The equation of a simple linear regression model is Y = mX + b, where m is the coefficient of the predictor variable and b is the bias. In terms of the regression line, m is the slope and b is the intercept.

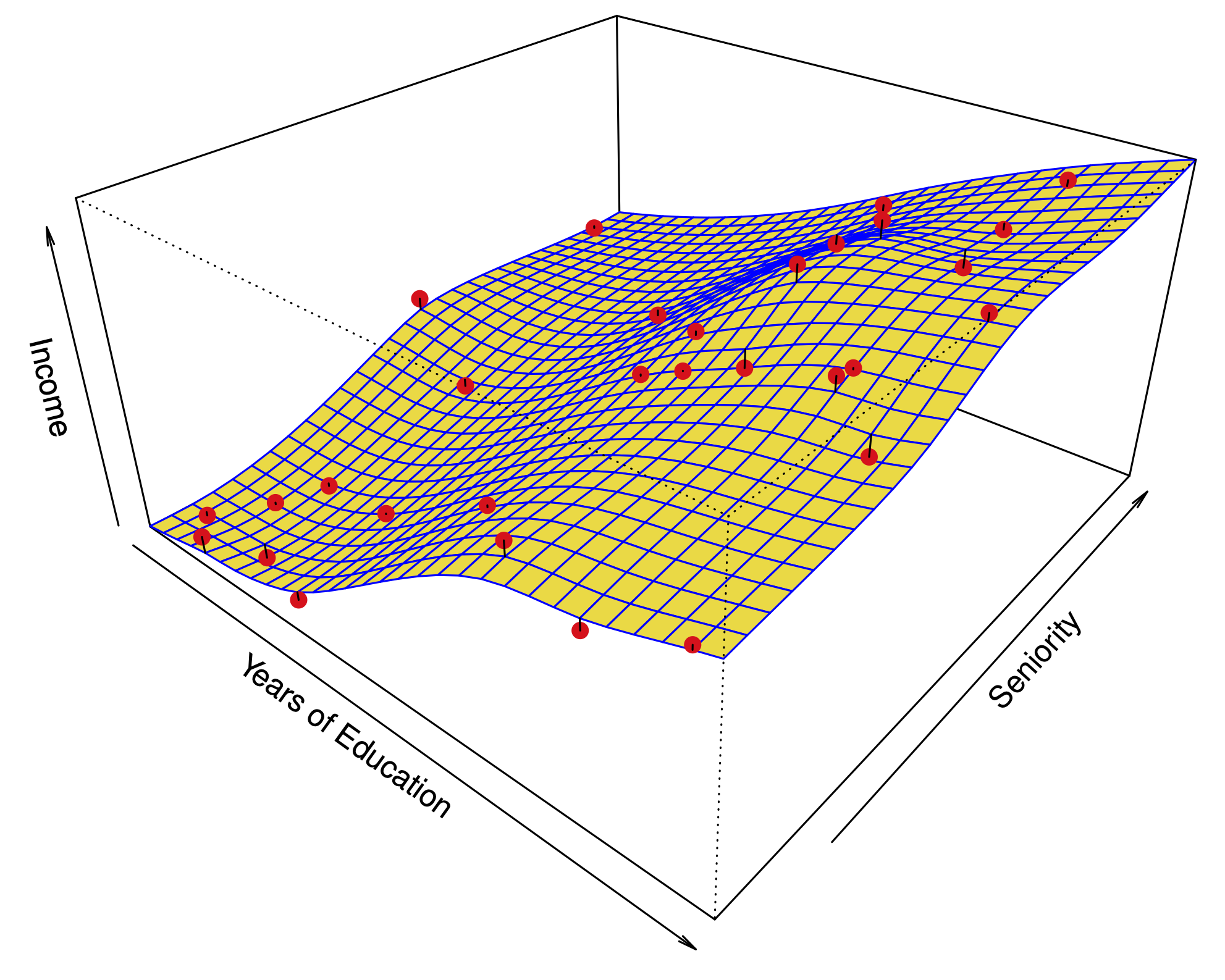

- Multiple or multi-variate linear regression models: These are linear regression models that describe a linear relationship between one response (dependent) variable and more than one predictor (independent) variable. The equation of a multiple linear regression model is Y = b0 + b1X1 + b2X2 + … + bnXn, where bi is the coefficient of the ith predictor variable and b0 is the intercept. In this type of model, each predictor variable has its own coefficient that is used to calculate the predicted value of the response variable.

When training linear regression models, the goal is to determine the coefficients that give the best-fitting regression line. The method used to find these coefficients is known as least squares regression. In least squares regression, the coefficients are calculated by minimizing the sum of squared residuals between the actual and predicted response values. The sum of squared residuals is also called the residual sum of squares (RSS). The outcome of the least squares method is the set of coefficients that minimizes this linear regression cost function.

The residual e of the ith observation is defined as follows, where [latex]Y_i[/latex] is the observed value of the response variable for the ith observation and [latex]\hat{Y_i}[/latex] is the model's prediction for the ith observation.

[latex]e_i = Y_i - \hat{Y_i}[/latex]

The residual sum of squares can be represented as the following:

[latex]RSS = e_1^2 + e_2^2 + e_3^2 + \dots + e_n^2[/latex]

The least-squares method represents the algorithm that minimizes the above term, RSS.
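The residual and RSS definitions above can be checked numerically. The following is a minimal sketch, assuming R's built-in `mtcars` data as a stand-in (it is not the data used in this post): it computes the residuals by hand, sums their squares, and confirms that a line with a slightly different slope has a larger RSS.

```r
# Built-in data used only for illustration (an assumption, not the post's data)
fit <- lm(mpg ~ wt, data = mtcars)

# Residuals e_i = Y_i - Y_hat_i, computed by hand
e <- mtcars$mpg - fitted(fit)

# Residual sum of squares: the quantity that least squares minimizes
rss <- sum(e^2)

# Nudge the fitted slope by an arbitrary 0.5; the RSS of that line should be larger
b <- coef(fit)
rss_other <- sum((mtcars$mpg - (b[1] + (b[2] + 0.5) * mtcars$wt))^2)

print(c(rss = rss, rss_other = rss_other))
```

For an `lm` fit, `deviance(fit)` returns the same RSS value.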

Once the coefficients are determined, can we claim that they are the most appropriate ones for the linear regression model? The answer is no. The coefficients are only estimates, so each of them has an associated standard error. Recall that the standard error measures how much an estimate of a population parameter is expected to vary from sample to sample, and that it is used to build a confidence interval for that parameter. In other words, it represents the error of estimating a population parameter from sample data. For a sample mean, the standard error is the standard deviation divided by the square root of the sample size. The formula below represents the standard error of a mean.

[latex]SE(\hat{\mu}) = \frac{\sigma}{\sqrt{N}}[/latex]
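As a small illustration of how the standard error of a mean connects to regression, the sketch below (again assuming the built-in `mtcars` data, with the sample standard deviation standing in for the unknown sigma) computes the standard error by hand and compares it with the standard error that `lm` reports for the intercept of an intercept-only model.

```r
# Built-in data used only for illustration
x <- mtcars$mpg
n <- length(x)

# Standard error of the mean; sd(x) is the sample estimate of sigma
se_mean <- sd(x) / sqrt(n)

# In an intercept-only regression the intercept is the sample mean,
# so its reported standard error is the same quantity
fit0 <- lm(x ~ 1)
se_intercept <- summary(fit0)$coefficients["(Intercept)", "Std. Error"]

print(c(se_mean = se_mean, se_intercept = se_intercept))
```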

Thus, without analyzing aspects such as the standard errors associated with the coefficients, it cannot be claimed that the estimated linear regression coefficients are the most suitable ones. This is where hypothesis testing is needed.

Train a Multiple Linear Regression Model using R

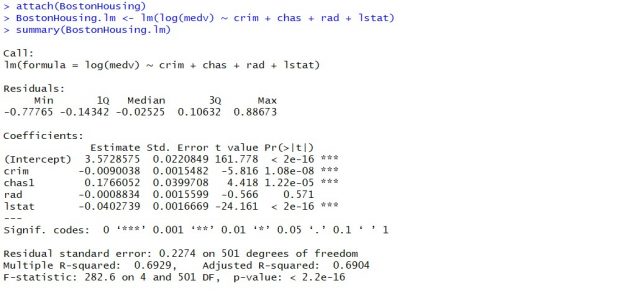

Before getting into understanding the hypothesis testing concepts in relation to the linear regression model, let’s train a multi-variate or multiple linear regression model and print the summary output of the model which will be referred to, in the next section.

The data used for creating the multiple linear regression model is BostonHousing, which can be loaded in RStudio by installing the mlbench package. The code is shown below:

    # Install once, then load the package that ships the BostonHousing data
    install.packages("mlbench")
    library(mlbench)
    data("BostonHousing")

Once the data is loaded, the code shown below can be used to create the linear regression model.

    # Fit log(medv) on four predictors; passing data = avoids attach()
    BostonHousing.lm <- lm(log(medv) ~ crim + chas + rad + lstat, data = BostonHousing)
    summary(BostonHousing.lm)

Executing the above commands creates a linear regression model with log(medv) as the response variable and crim, chas, rad, and lstat as predictor variables. The following are the details of the response and predictor variables:

- log(medv) : Log of the median value of owner-occupied homes in USD 1000’s

- crim : Per capita crime rate by town

- chas : Charles River dummy variable (= 1 if tract bounds river; 0 otherwise)

- rad : Index of accessibility to radial highways

- lstat : Percentage of the lower status of the population

The summary command prints the details of the model, including the hypothesis testing results for the individual coefficients (t-statistics) and for the model as a whole (F-statistic).

Hypothesis tests & Linear Regression Models

A hypothesis test is a statistical procedure used to test a claim or assumption about the underlying distribution of a population based on sample data. Here are the key steps of hypothesis testing with linear regression models:

- Hypothesis formulation for t-tests: In linear regression, the claim is that there is a relationship between the response and the predictor variables, and this claim is represented by non-zero coefficients of the predictor variables in the regression equation. This is formulated as the alternate hypothesis. The null hypothesis is therefore that there is no relationship between the response and the predictor variable, i.e., that the coefficient of the predictor variable is equal to zero (0). So, if the linear regression model is Y = a0 + a1x1 + a2x2 + a3x3, the null hypotheses of the individual tests are a1 = 0, a2 = 0, a3 = 0, and so on. A separate hypothesis test is done for each predictor variable to determine whether the relationship between the response and that particular predictor is statistically significant based on the sample data used for training the model. Thus, if there are, say, 5 features, there will be five hypothesis tests, each with its own null and alternate hypothesis.

- Hypothesis formulation for F-test: In addition, a hypothesis test is done for the claim that there is a linear regression model relating the response variable to all the predictor variables taken together. The null hypothesis is that no such linear regression model exists, which means that all the coefficients of the predictor variables are equal to zero. So, if the linear regression model is Y = a0 + a1x1 + a2x2 + a3x3, the null hypothesis states that a1 = a2 = a3 = 0.

- F-statistic for testing the hypothesis for the linear regression model: The F-test is used to test the null hypothesis that no linear regression model exists relating the response variable y to the predictor variables x1, x2, x3, x4 and x5. With a1, …, a5 denoting the coefficients of those predictors, the null hypothesis can be written as a1 = a2 = a3 = a4 = a5 = 0. The F-statistic is calculated as a function of the residual sum of squares of the restricted regression (a model with only the intercept or bias, with all other coefficients set to zero) and the residual sum of squares of the unrestricted regression (the full linear regression model). In the above diagram, note the F-statistic value of 15.66 on 5 and 194 degrees of freedom.

- Evaluate t-statistics against the critical value/region: After calculating the t-statistic for each coefficient, the next step is to decide whether to reject or fail to reject the null hypothesis. To make this decision, one needs to set a significance level, also known as the alpha level. A significance level of 0.05 is commonly used. If the t-statistic falls in the critical region, the null hypothesis is rejected. Equivalently, if the p-value is less than 0.05, the null hypothesis is rejected.

- Evaluate F-statistic against the critical value/region: The F-statistic and its p-value are evaluated to test the null hypothesis that no linear regression model relating the response and predictor variables exists. If the F-statistic is greater than the critical value at the 0.05 significance level, the null hypothesis is rejected. This means that the linear model has at least one non-zero coefficient.

- Draw conclusions: The final step of hypothesis testing is to draw a conclusion by interpreting the results in terms of the original claim or hypothesis. If the null hypothesis for a predictor variable is rejected, the relationship between the response and that predictor variable is statistically significant based on the evidence in the sample data used for training the model. Similarly, if the F-statistic lies in the critical region and its p-value is less than the alpha value, usually set to 0.05, one can say that a linear regression model exists.
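The steps above can be sketched in R. This is a minimal illustration rather than the post's own analysis: it assumes the built-in `mtcars` data (so that no extra package is needed) and an arbitrary choice of three predictors, and it reads the t-statistics, p-values and F-statistic from the object returned by `summary()`.

```r
# Built-in data and predictor choice are assumptions for illustration only
fit <- lm(mpg ~ wt + hp + qsec, data = mtcars)
s <- summary(fit)
alpha <- 0.05

# t-tests: one row per coefficient
coefs <- s$coefficients
df_resid <- fit$df.residual
t_crit <- qt(1 - alpha / 2, df = df_resid)       # two-tailed critical value
reject_t <- abs(coefs[, "t value"]) > t_crit     # decision via critical region
reject_p <- coefs[, "Pr(>|t|)"] < alpha          # same decision via p-values

# F-test for the model as a whole
f <- s$fstatistic                                # named: value, numdf, dendf
f_crit <- qf(1 - alpha, f["numdf"], f["dendf"])  # right-tail critical value
f_pval <- unname(pf(f["value"], f["numdf"], f["dendf"], lower.tail = FALSE))
reject_f <- unname(f["value"] > f_crit)

print(cbind(coefs, reject = reject_t))
print(c(F = unname(f["value"]), F_crit = unname(f_crit), p_value = f_pval))
```

The critical-value route and the p-value route lead to the same decisions; the p-value is usually more convenient because it does not require looking up a critical value.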

Why hypothesis tests for linear regression models?

The reasons why we need hypothesis tests for a linear regression model are the following:

- By creating the model, we are establishing a new truth (a claim) about the relationship between the response or dependent variable and one or more predictor or independent variables. One or more tests are needed to justify that claim. These tests are acts of testing the claim (or new truth), in other words, hypothesis tests.

- One kind of test is required to test the relationship between the response and each of the predictor variables (hence, t-tests).

- Another kind of test is required to test the linear regression model as a whole. This is called the F-test.

When training linear regression models, hypothesis testing is done to determine whether the relationship between the response and each of the predictor variables is statistically significant. First, the coefficient of each predictor variable is estimated. Then, individual hypothesis tests are done to determine whether the relationship between the response and that particular predictor variable is statistically significant based on the sample data used for training the model. If the null hypothesis for a predictor is rejected, the data provide evidence of a relationship between the response and that particular predictor variable. The t-statistic is used for these tests because the variance of the errors is unknown and has to be estimated from the residuals. The value of the t-statistic is compared with the critical value from the t-distribution table in order to decide whether to reject or fail to reject the null hypothesis regarding the relationship between the response and the predictor variable. If the value falls in the critical region, the null hypothesis is rejected, which means that there is a statistically significant relationship between the response and that predictor variable. In addition to t-tests, the F-test is performed to test the null hypothesis that the linear regression model does not exist, i.e., that all the coefficients are zero (0). Learn more about linear regression and the t-test in this blog: Linear regression t-test: formula, example.
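To make the restricted-versus-unrestricted comparison behind the F-test concrete, here is a hedged sketch. It assumes the built-in `mtcars` data and uses the standard formula F = ((RSS_restricted - RSS_unrestricted) / q) / (RSS_unrestricted / residual degrees of freedom), where q is the number of coefficients set to zero under the null hypothesis. The variable names are illustrative.

```r
# Built-in data and predictor choice are assumptions for illustration only
unrestricted <- lm(mpg ~ wt + hp + qsec, data = mtcars)
restricted   <- lm(mpg ~ 1, data = mtcars)       # intercept only: all slopes zero

rss_u <- deviance(unrestricted)                  # RSS of the full model
rss_r <- deviance(restricted)                    # RSS of the intercept-only model
q     <- length(coef(unrestricted)) - 1          # number of slopes tested
df_u  <- unrestricted$df.residual                # n minus number of coefficients

f_manual  <- ((rss_r - rss_u) / q) / (rss_u / df_u)
f_summary <- unname(summary(unrestricted)$fstatistic["value"])

print(c(f_manual = f_manual, f_summary = f_summary))
```

The hand-computed value agrees with the F-statistic printed at the bottom of the `summary()` output.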


Ajitesh Kumar


Linear regression - Hypothesis testing

by Marco Taboga , PhD

This lecture discusses how to perform tests of hypotheses about the coefficients of a linear regression model estimated by ordinary least squares (OLS).

Table of contents

Normal vs non-normal model

- The linear regression model
- Matrix notation
- Tests of hypothesis in the normal linear regression model
- Test of a restriction on a single coefficient (t test)
- Test of a set of linear restrictions (F test)
- Tests based on maximum likelihood procedures (Wald, Lagrange multiplier, likelihood ratio)
- Tests of hypothesis when the OLS estimator is asymptotically normal
- Test of a restriction on a single coefficient (z test)
- Test of a set of linear restrictions (Chi-square test)
- Learn more about regression analysis

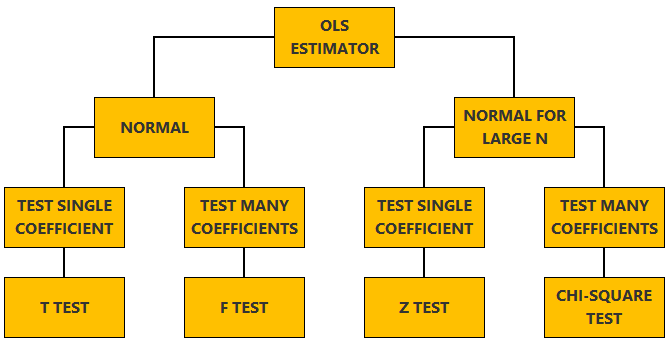

The lecture is divided into two parts:

in the first part, we discuss hypothesis testing in the normal linear regression model , in which the OLS estimator of the coefficients has a normal distribution conditional on the matrix of regressors;

in the second part, we show how to carry out hypothesis tests in linear regression analyses where the hypothesis of normality holds only in large samples (i.e., the OLS estimator can be proved to be asymptotically normal).

We also denote:

We now explain how to derive tests about the coefficients of the normal linear regression model.

It can be proved (see the lecture about the normal linear regression model ) that the assumption of conditional normality implies that:

How the acceptance region is determined depends not only on the desired size of the test , but also on whether the test is:

two-tailed (both things, i.e., smaller and larger, are possible);

one-tailed (only one of the two things, i.e., either smaller or larger, is possible).

For more details on how to determine the acceptance region, see the glossary entry on critical values .
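As an illustration of how the tail choice changes the critical value, the sketch below uses hypothetical numbers (a test size of 0.05 and 20 residual degrees of freedom, both assumptions) and the quantile function of the t distribution.

```r
alpha <- 0.05   # desired size of the test (hypothetical)
df    <- 20     # residual degrees of freedom (hypothetical)

# Two-tailed: reject if |t| exceeds this value
two_tailed <- qt(1 - alpha / 2, df)

# One-tailed, right: reject if t exceeds this value
right_tailed <- qt(1 - alpha, df)

# One-tailed, left: reject if t is below this value
left_tailed <- qt(alpha, df)

print(c(two_tailed = two_tailed, right_tailed = right_tailed, left_tailed = left_tailed))
```

For the same size, the two-tailed critical value is further from zero than the one-tailed one, because the rejection probability is split between both tails.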

![hypothesis testing simple linear regression [eq28]](https://www.statlect.com/images/linear-regression-hypothesis-testing__90.png)

The F test is one-tailed .

A critical value in the right tail of the F distribution is chosen so as to achieve the desired size of the test.

Then, the null hypothesis is rejected if the F statistic is larger than the critical value.
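A test of a set of linear restrictions can be sketched by comparing nested models. The example below is an illustration under stated assumptions: it uses the built-in `mtcars` data, and the restrictions tested (the coefficients on `hp` and `qsec` are both zero) are chosen arbitrarily. `anova()` returns the F statistic, which is then compared with a right-tail critical value.

```r
# Built-in data and restrictions are assumptions for illustration only
full    <- lm(mpg ~ wt + hp + qsec, data = mtcars)
reduced <- lm(mpg ~ wt, data = mtcars)   # imposes hp = 0 and qsec = 0

cmp     <- anova(reduced, full)          # F test of the two restrictions
f_stat  <- cmp$F[2]
n_restr <- cmp$Df[2]                     # number of restrictions tested
p_value <- cmp$`Pr(>F)`[2]

alpha  <- 0.05
f_crit <- qf(1 - alpha, n_restr, full$df.residual)  # right-tail critical value
reject <- f_stat > f_crit

print(c(F = f_stat, F_crit = f_crit, p_value = p_value))
print(reject)
```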

In this section we explain how to perform hypothesis tests about the coefficients of a linear regression model when the OLS estimator is asymptotically normal.

As we have shown in the lecture on the properties of the OLS estimator , in several cases (i.e., under different sets of assumptions) it can be proved that:

These two properties are used to derive the asymptotic distribution of the test statistics used in hypothesis testing.

The test can be either one-tailed or two-tailed . The same comments made for the t-test apply here.
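A z test on a single coefficient can be sketched as follows. This is an illustration only: it assumes the built-in `mtcars` data, a hypothesized value of zero, and the conventional covariance estimate returned by `vcov()`, which may differ from the covariance estimator the lecture has in mind. The statistic is compared with standard normal quantiles instead of t quantiles.

```r
# Built-in data, hypothesized value and covariance estimator are assumptions
fit <- lm(mpg ~ wt + hp, data = mtcars)

est <- coef(fit)["hp"]
se  <- sqrt(diag(vcov(fit)))["hp"]
q0  <- 0                                   # hypothesized coefficient value

z <- unname((est - q0) / se)               # z statistic

alpha   <- 0.05
z_crit  <- qnorm(1 - alpha / 2)            # two-tailed critical value
reject  <- abs(z) > z_crit
p_value <- 2 * pnorm(abs(z), lower.tail = FALSE)

print(c(z = z, z_crit = z_crit, p_value = p_value))
```

With this covariance estimate the z statistic is numerically the same as the t statistic; only the reference distribution used for the critical value changes.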

![hypothesis testing simple linear regression [eq50]](https://www.statlect.com/images/linear-regression-hypothesis-testing__175.png)

Like the F test, the Chi-square test is usually one-tailed .

The desired size of the test is achieved by appropriately choosing a critical value in the right tail of the Chi-square distribution.

The null is rejected if the Chi-square statistic is larger than the critical value.
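The Chi-square test of a set of linear restrictions can be sketched with a Wald-type statistic. The names `R`, `q`, `W` and `L` below are illustrative, the data are the built-in `mtcars`, and the covariance matrix is the conventional one from `vcov()`; all of these are assumptions, not the lecture's notation. The null hypothesis tested is that all three slopes are zero, written as R b = q.

```r
# Built-in data, restrictions and covariance estimator are assumptions
fit <- lm(mpg ~ wt + hp + qsec, data = mtcars)
b <- coef(fit)
V <- vcov(fit)

# Restrictions R b = q: each row of R picks out one slope (intercept excluded)
R <- cbind(0, diag(3))
q <- rep(0, 3)
L <- nrow(R)                               # number of restrictions

d <- R %*% b - q                           # how far the estimates are from the null
W <- as.numeric(t(d) %*% solve(R %*% V %*% t(R)) %*% d)

chi_crit <- qchisq(0.95, df = L)           # right-tail critical value, size 0.05
reject   <- W > chi_crit

print(c(W = W, chi_crit = chi_crit))
```

With this covariance estimate, `W` equals the number of restrictions times the overall F statistic of the same model.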

Want to learn more about regression analysis? Here are some suggestions:

R squared of a linear regression ;

Gauss-Markov theorem ;

Generalized Least Squares ;

Multicollinearity ;

Dummy variables ;

Selection of linear regression models

Partitioned regression ;

Ridge regression .

How to cite

Please cite as:

Taboga, Marco (2021). "Linear regression - Hypothesis testing", Lectures on probability theory and mathematical statistics. Kindle Direct Publishing. Online appendix. https://www.statlect.com/fundamentals-of-statistics/linear-regression-hypothesis-testing.


Simple linear regression


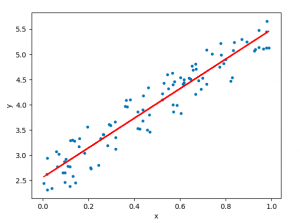

Fig. 9 Simple linear regression

Errors: \(\varepsilon_i \sim N(0,\sigma^2)\quad \text{i.i.d.}\)

Fit: the estimates \(\hat\beta_0\) and \(\hat\beta_1\) are chosen to minimize the (training) residual sum of squares (RSS):
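In the standard notation for this setup (the symbols \(x_i\) and \(y_i\) for the observed predictor and response values are assumptions), the model and the RSS can be written as:

\[ y_i = \beta_0 + \beta_1 x_i + \varepsilon_i, \qquad \mathrm{RSS} = \sum_{i=1}^n \left(y_i - \hat\beta_0 - \hat\beta_1 x_i\right)^2 \]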

Sample code: advertising data

Estimates \(\hat\beta_0\) and \(\hat\beta_1\)

A little calculus shows that the minimizers of the RSS are:
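The standard closed-form solution is \(\hat\beta_1 = \sum_i (x_i - \bar x)(y_i - \bar y) / \sum_i (x_i - \bar x)^2\) and \(\hat\beta_0 = \bar y - \hat\beta_1 \bar x\). A minimal R sketch, using the built-in `mtcars` data as a stand-in (an assumption; it is not the advertising data), checks these formulas against `lm`:

```r
# Built-in data used only for illustration
x <- mtcars$wt
y <- mtcars$mpg

# Closed-form least squares estimates for simple linear regression
b1 <- sum((x - mean(x)) * (y - mean(y))) / sum((x - mean(x))^2)
b0 <- mean(y) - b1 * mean(x)

# Same estimates from lm()
fit <- lm(y ~ x)

print(c(b0 = b0, b1 = b1))
print(coef(fit))
```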

Assessing the accuracy of \(\hat \beta_0\) and \(\hat\beta_1\)

Fig. 10 How variable is the regression line?

Based on our model

The Standard Errors for the parameters are:
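For reference, the standard expressions under the i.i.d. normal error assumption are given below, with \(\sigma^2\) in practice replaced by the estimate \(\hat\sigma^2 = \mathrm{RSS}/(n-2)\):

\[ \mathrm{SE}(\hat\beta_0)^2 = \sigma^2\left[\frac{1}{n} + \frac{\bar x^2}{\sum_{i=1}^n (x_i - \bar x)^2}\right], \qquad \mathrm{SE}(\hat\beta_1)^2 = \frac{\sigma^2}{\sum_{i=1}^n (x_i - \bar x)^2} \]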

95% confidence intervals:
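Roughly, each interval is the estimate plus or minus about two standard errors; the exact multiplier is a quantile of the t distribution with \(n-2\) degrees of freedom. A minimal sketch, assuming the built-in `mtcars` data as a stand-in for the advertising data, builds the intervals by hand and compares them with `confint()`:

```r
# Built-in data used only for illustration
fit <- lm(mpg ~ wt, data = mtcars)

est <- coef(fit)
se  <- sqrt(diag(vcov(fit)))                 # standard errors of the estimates

# Exact multiplier for a 95% interval; close to 2 for moderate sample sizes
t_mult <- qt(0.975, df = fit$df.residual)

ci_manual  <- cbind(lower = est - t_mult * se, upper = est + t_mult * se)
ci_builtin <- confint(fit, level = 0.95)

print(ci_manual)
print(ci_builtin)
```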

Hypothesis test

Null hypothesis \(H_0\) : There is no relationship between \(X\) and \(Y\) .

Alternative hypothesis \(H_a\) : There is some relationship between \(X\) and \(Y\) .

Based on our model: this translates to

\(H_0\) : \(\beta_1=0\) .

\(H_a\) : \(\beta_1\neq 0\) .

Test statistic:
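In its standard form (assumed here to match the setup above), the statistic divides the estimated slope, minus its hypothesized value of zero, by its standard error:

\[ t = \frac{\hat\beta_1 - 0}{\mathrm{SE}(\hat\beta_1)} \]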

Under the null hypothesis, this has a \(t\) -distribution with \(n-2\) degrees of freedom.

Sample output: advertising data

Interpreting the hypothesis test

If we reject the null hypothesis, can we assume there is an exact linear relationship?

No. A quadratic relationship may be a better fit, for example. This test assumes the simple linear regression model is correct which precludes a quadratic relationship.

If we don’t reject the null hypothesis, can we assume there is no relationship between \(X\) and \(Y\) ?

No. This test is based on the model we posited above and is only powerful against certain monotone alternatives. There could be more complex non-linear relationships.
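A small constructed example shows why failing to reject does not rule out a relationship. The data below are hypothetical: `y` is fully determined by `x` through a symmetric quadratic, yet the slope test in a simple linear regression finds nothing.

```r
# Hypothetical data: x is symmetric around zero and y depends on x exactly
x <- (-30:30) / 10
y <- x^2

fit <- lm(y ~ x)
s <- summary(fit)

slope_p <- s$coefficients["x", "Pr(>|t|)"]   # p-value of the test beta_1 = 0
r2 <- s$r.squared                            # share of variance explained by the line

print(c(slope_p_value = slope_p, r_squared = r2))
```

The estimated slope is essentially zero and the p-value is close to 1, so the null hypothesis is not rejected even though `y` is an exact function of `x`.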


The test statistic value is the same value of the t-test for correlation even though they used different formulas. We look in the same place using technology as the correlation test. The test statistic is greater than the critical value of 2.160 and in the rejection region. The decision is to reject \(H_{0}\).


Simple Linear Regression ANOVA Hypothesis Test Example: Rainfall and sales of sunglasses We will now describe a hypothesis test to determine if the regression model is meaningful; in other words, does the value of \(X\) in any way help predict the expected value of \(Y\)?

9.2 Statistical hypotheses. For simple linear regression, the chief null hypothesis is H0: β1 = 0, and the corresponding alternative hypothesis is H1: β1 ≠ 0. If this null hypothesis is true, then, from E(Y) = β0 + β1x we can see that the population mean of Y is β0 for

Simple linear regression is a model that describes the relationship between one dependent and one independent variable using a straight line.


Simple Linear Regression, t-test, and ANOVA. Simple linear regression is used in hypothesis testing and is central in t-tests, and analysis of variance (ANOVA). Simple linear regression and t-test. A t-test is often used to determine whether the slope of the regression line is significantly different from zero. This test helps us understand ...

This page titled 14.4: Hypothesis Test for Simple Linear Regression is shared under a CC BY-SA 4.0 license and was authored, remixed, and/or curated by Maurice A. Geraghty via source content that was edited to the style and standards of the LibreTexts platform.

A simple regression was used to test the hypothesis that hours of sleep would predict quiz scores. Consistent with the hypothesis, hours of sleep was a significant predictor of quiz scores, \(F(1, 8) = 70.54\), \(p\) < .05. Approximately 89.8% of the variance in quiz scores was accounted for by variance in hours of sleep.