6 Usability Testing Examples & Case Studies

Interested in analyzing real-world examples of successful usability tests?

In this article, we’ll be examining six examples of usability testing being conducted with substantial results.

Conducting usability testing takes only seven simple steps and does not have to require a massive budget. Yet it can achieve remarkable results for companies across all industries.

If you’re someone who cannot be convinced by theory alone, this is the guide for you. These are tried-and-tested case studies from well-known companies that showcase the true power of a successful usability test.

Here are the usability testing examples and case studies we’ll be covering in this article:

- Ryanair

- McDonald’s

- SoundCloud

- AutoTrader.com

- Udemy

- Halo: Combat Evolved

Example #1: Ryanair

Ryanair is one of the world’s largest airline groups, carrying 152 million passengers each year. In 2014, the company launched Ryanair Labs, a digital innovation hub seeking to “reinvent online traveling”. To make this dream a reality, they went on a recruiting spree that resulted in a team of 200+ members. This team included user experience specialists, data analysts, software developers, and digital marketers – all working towards a common goal of improving the user experience of the Ryanair website.

What made matters more complicated, however, is that Ryanair’s website and app together received 1 billion visits per year. Working with a website this large, combined with the paper-thin profit margins of around 5% for the airline industry, Ryanair had no room for errors. To make matters even more stressful, one of the first missions for the new team included launching an entirely new website with a superior user experience.

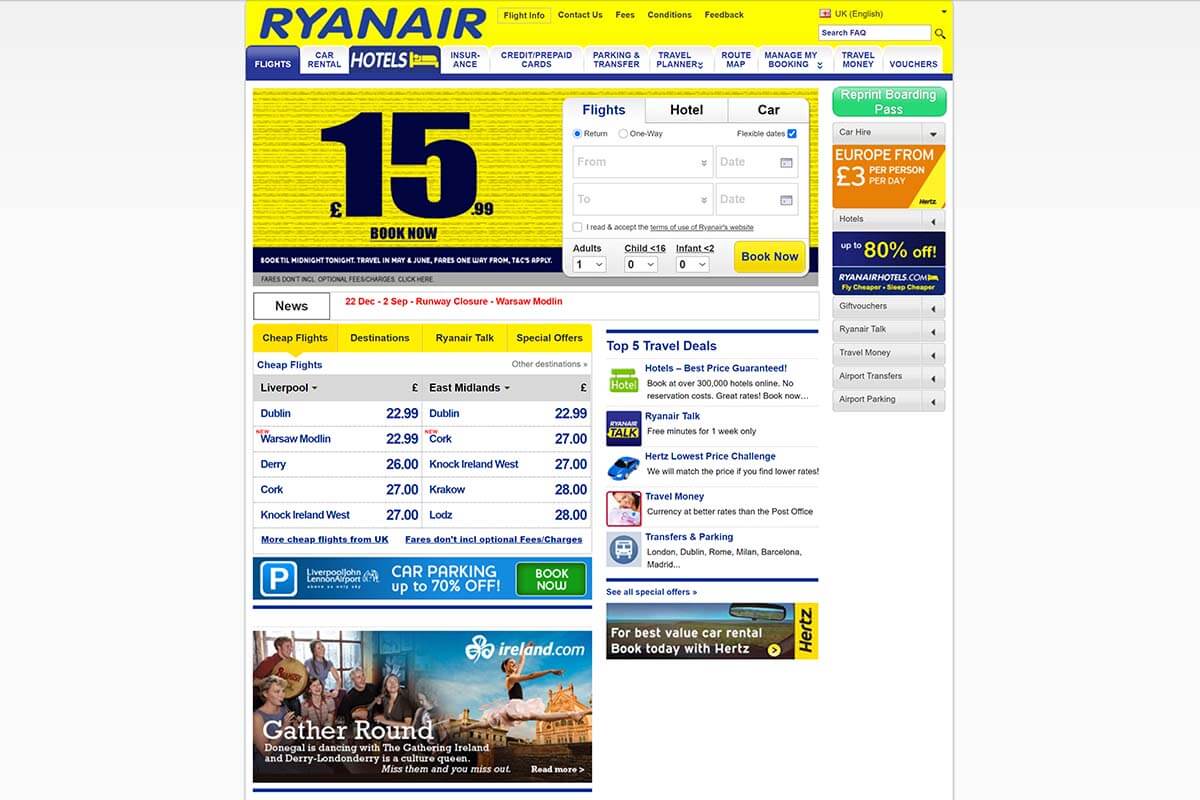

To give you a visual idea of what they were up against, take a look at their old website design:

Not great, not terrible. But the website undoubtedly needed a redesign for the 21st century.

This is what the Ryanair team set out to accomplish:

- Reducing the number of steps needed to book a flight on the website;

- Allowing customers to store their travel documents and payment cards on the website;

- Delivering a better mobile device user experience for both the website and app.

With these goals in mind, they chose remote and unmoderated usability testing types for their user tests. This by itself was a change for the team, as the Ryanair team had relied on in-lab, face-to-face testing until that point.

By collaborating with the UX agency UserZoom, however, new opportunities opened up for Ryanair. With UserZoom’s massive roster of user testers, Ryanair could access large amounts of qualitative and quantitative usability data, which they badly needed during the design process of the new website.

By going with remote unmoderated usability testing, the Ryanair team managed to:

- Reduce the time spent on usability testing;

- Conduct simultaneous usability tests with hundreds of users and without geographical barriers;

- Increase the overall reach and scale of the tests;

- Carry out tests across many devices, operating systems, and multiple focus groups.

With continuous user testing, the new website was taken through alpha and beta testing in 2015. The end result of all this work was the vastly improved look, functionality, and user experience of the new website:

Even before launch, Ryanair knew that the new website was superior. Usability tests had shown that to be the case and they had no need to rely on “educated guesses”. This usability testing example demonstrates that a well-executed testing plan can give remarkable results.

Source: Ryanair case study by UserZoom

Example #2: McDonald’s

McDonald’s is one of the world’s largest fast-food restaurant chains, with a staggering 62 million daily customers. Yet, McDonald’s was late to embrace the mobile revolution as their smartphone app launched rather recently – in August 2015. In comparison, Starbucks’ smartphone app was already a booming success and accounted for 20% of its overall revenue in 2015.

Considering the competition, McDonald’s had some catching up to do. Before the launch of their app in the UK, they decided to hire UK-based SimpleUsability to identify any usability problems before release. The test plan involved conducting 20 usability tests, where the task scenarios covered the entire customer journey from end to end. In addition to that, the test plan included 225 end-user interviews.

Not exactly a large-scale usability study considering the massive size of McDonald’s, but it turned out to be valuable nonetheless. A number of usability issues were detected during the study:

- Poor visibility and interactivity of the call-to-action buttons;

- Communication problems between restaurants and the smartphone app;

- Lack of order customization and favoriting impaired the overall user experience.

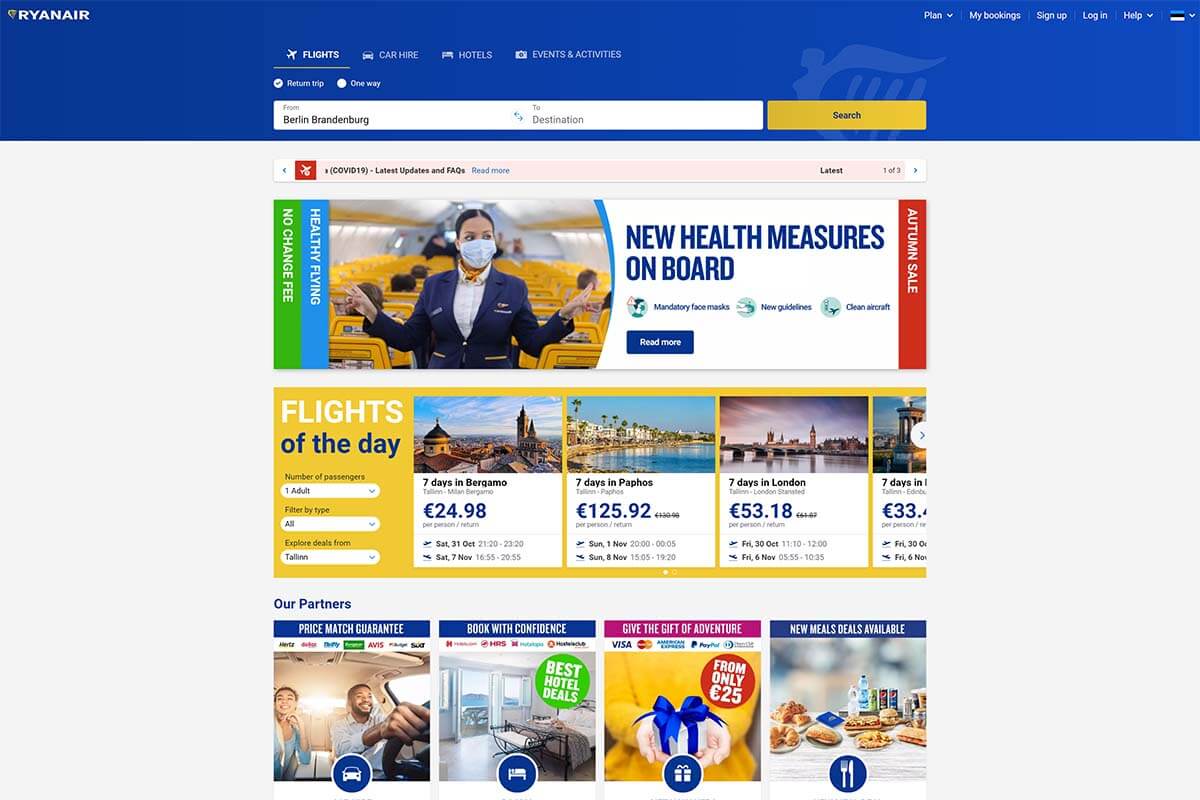

Here’s what the McDonald’s mobile app looks like today:

This case study demonstrates that investing even a tiny percentage of a company’s resources into usability testing can result in meaningful insights.

Source: McDonald’s case study by SimpleUsability

Example #3: SoundCloud

SoundCloud is the world’s largest music and audio distribution platform, with over 175 million unique monthly listeners. In 2019, SoundCloud hired test IO, a Berlin-based usability testing agency, to conduct continuous usability testing for the SoundCloud mobile app. With SoundCloud’s rigorous development schedule, the company needed regular human user testers to make sure that all new updates work across all devices and OS versions.

The key research objectives for SoundCloud’s regular usability studies were to:

- Provide a user-friendly listening experience for mobile app users;

- Identify and fix software bugs before wide release;

- Improve the mobile app development cycle.

In the very first usability tests, more than 150 usability issues (including 11 critical issues) were discovered. These issues likely wouldn’t have been discovered through internal bug testing. That is because the user testers experimented on the app from a plethora of devices and geographical locations (144 devices and 22 countries). Without remote usability testing, a testing scale as large as this would have been very difficult and expensive to achieve.

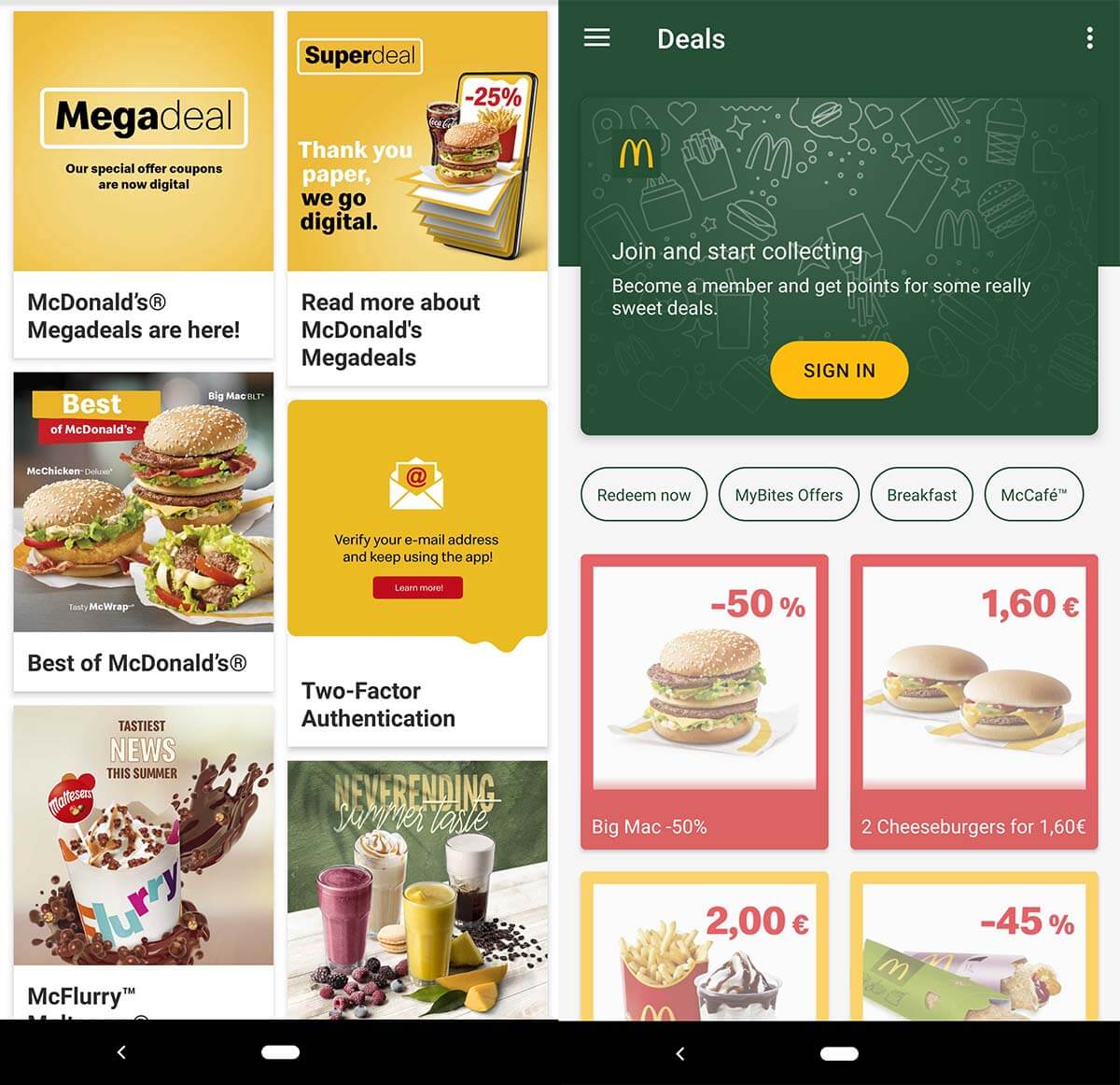

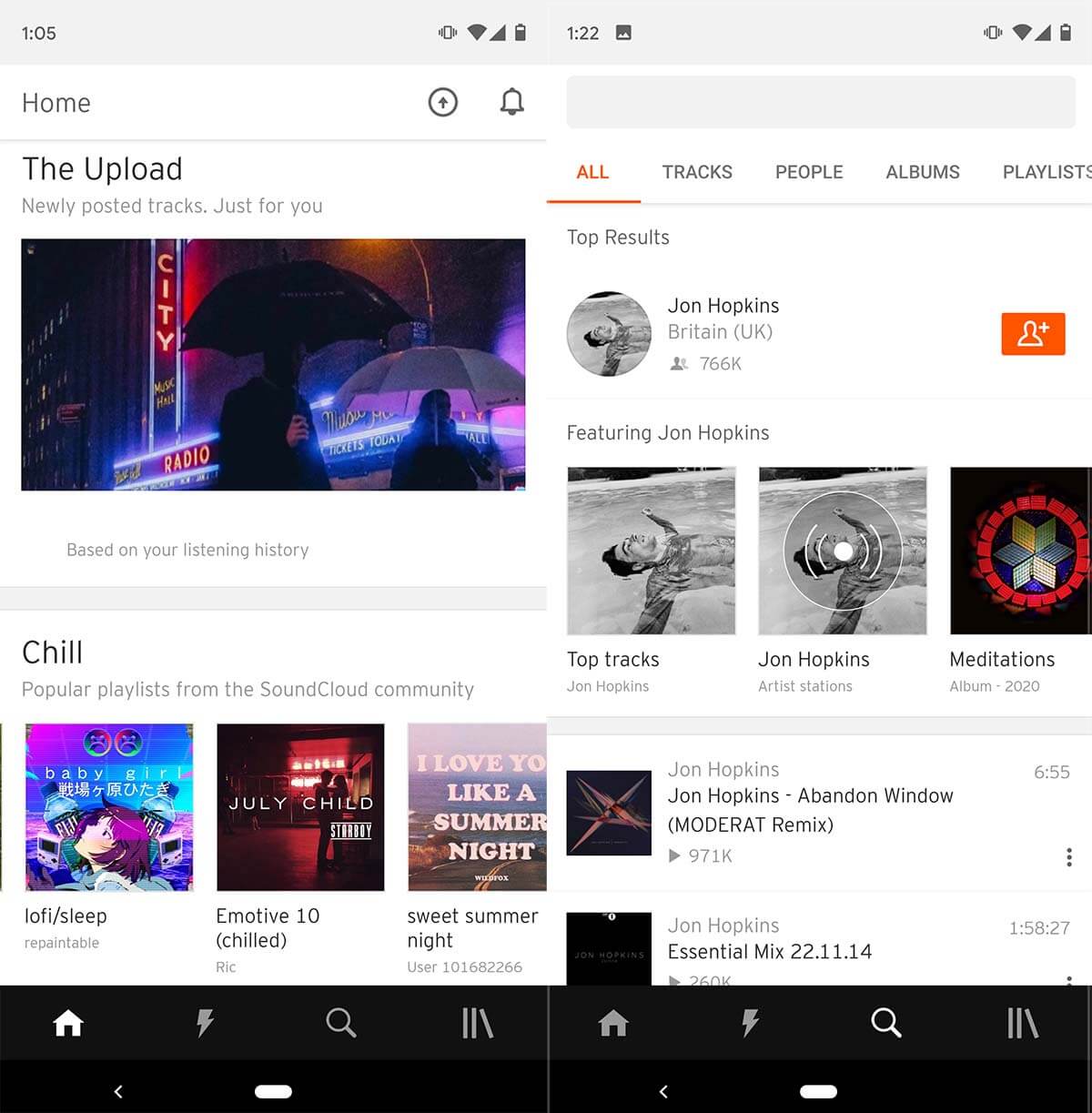

Today, SoundCloud’s mobile app looks like this:

This case study demonstrates the power of regular usability testing in products with frequent updates.

Source: SoundCloud case study (.pdf) by test IO

Example #4: AutoTrader.com

AutoTrader.com is one of the world’s largest online marketplaces for buying and selling used cars, with over 28 million monthly visitors. The mission of AutoTrader’s website is to empower car shoppers in the researching process by giving them all the tools necessary to make informed decisions about vehicle purchases.

Sounds fantastic.

However, with competitors such as CarGurus gaining increasing amounts of market share in the online car shopping industry, AutoTrader had to reinvent itself to stay competitive.

In e-commerce, competitors with a superior website can gain massive followings in an instant. Fifty years ago this was not the case – well-established car marketplaces had massive car parks all over the country, and a newcomer would have had few ways to compete.

Nowadays, however, it’s all about user experience. Digital shoppers will flock to whichever site offers a better user experience. Websites unwilling or unable to improve their user experience over time will get left in the dust. No matter how big or small they are.

Going back to AutoTrader, the majority of its website traffic comes from organic Google search, meaning that in addition to website usability, search engine optimization (SEO) is a major priority for the company. According to John Mueller from Google, changing the layout of a website can affect rankings, and that is why AutoTrader had to be careful with making any large-scale changes to their website.

AutoTrader did not have a large team of user researchers nor a massive budget dedicated to usability testing. But they did have Bradley Miller – Senior User Experience Researcher at the company. To test the usability of AutoTrader, Miller decided to partner with UserTesting.com to conduct live user interviews with AutoTrader users.

Through these live user interviews, Miller was able to:

- Find and connect with target personas;

- Communicate with car buyers from across the country;

- Reduce the costs of conducting usability tests while increasing the insights gained.

From these remote live usability interviews, Miller learned that the customer journey almost always begins from a single source: search engines. Here, it’s important to note that search engines rarely direct users to the homepage. Instead, they drive traffic to the inner pages of websites. In the case of AutoTrader, for example, only around 20% of search engine traffic goes to the homepage (data from SEMrush).

These insights helped AutoTrader redesign their inner pages to better match the customer journey. They no longer assumed that any inner page visitor already has a greater contextual knowledge of the website. Instead, they started to treat each page as if it’s the initial point of entry by providing more contextual information right then and there inside the inner page.

This usability testing example demonstrates not only the power of user interviews but also the importance of understanding your customer journey and SEO.

Source: AutoTrader case study by UserTesting.com

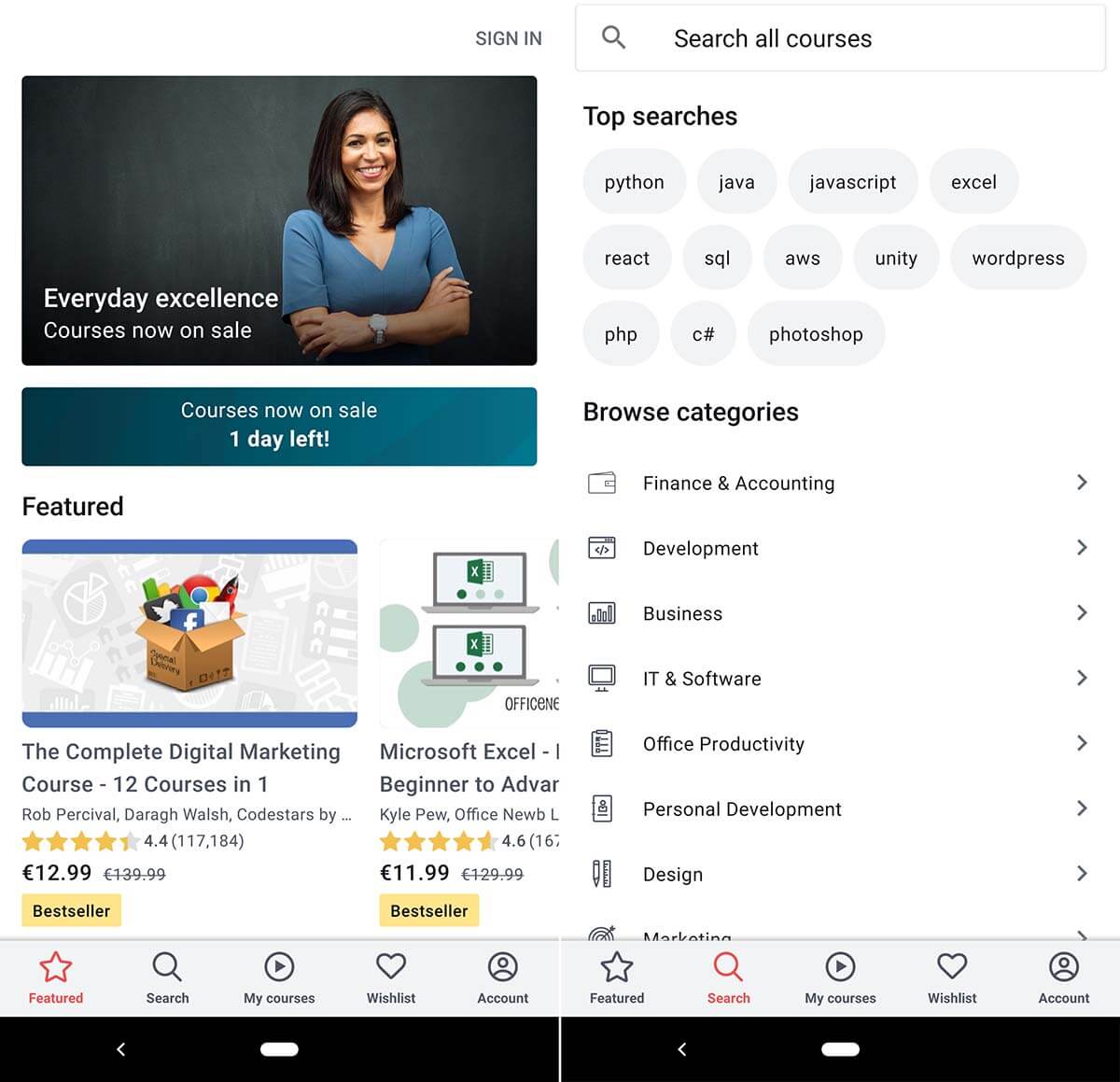

Example #5: Udemy

Udemy is one of the world’s largest online learning platforms with over 40 million students across the world. The e-learning giant also has a massively popular smartphone app, and the usability testing example in question was aimed at the smartphone users of Udemy.

To find out when, where, and why Udemy users chose to opt for the mobile app rather than the desktop version, Udemy conducted user tests. As Udemy is a 100% digital company, they chose fully remote unmoderated user testing as their testing method.

Test participants were asked to take small videos showing where they were located and what tasks they were focused on at the time of learning and recording.

What the user researchers found was that their initial theory of “users prefer using the mobile app while on the go” was false. Instead, what they found was that the majority of mobile app users were stationary. Udemy users, for various reasons, used the mobile app at home on the couch, or in a cafeteria. The key findings of this user test were utilized for the next year’s product and feature development.

This is what Udemy’s mobile app looks like today:

This usability testing case study demonstrates that a company’s perception of target audience behavior does not always match the behavior of the real end-users. And, that is why user testing is crucial.

Source: Udemy case study by UserTesting.com

Example #6: Halo: Combat Evolved

“Halo: Combat Evolved” was the first video game in the massively popular Halo franchise. It was developed by Bungie and published by Microsoft Game Studios in 2001. Within 10 years after its release, the Halo games sold more than 46 million copies worldwide and generated Microsoft more than $5 billion in video game and hardware sales. Owing it all to the usability test we’re about to discuss may be a bit of a stretch, but usability testing the game during development was undeniably one of the factors that helped the franchise take off like a rocket.

In this usability study, the Halo team gathered a focus group of console gamers to try out their game’s prototype to see if they had fun playing the game. And, if they did not have fun – they wanted to find out what prevented them from doing so.

In the usability sessions, the researchers placed test subjects (players) in a large outdoor environment with enemies waiting for them across the open space.

The designers of the game expected the players to sprint closer towards the enemies, sparking a massive battle full of action and excitement. But, the test participants had a different plan in mind. Instead of putting themselves in danger by sprinting closer, they would stay at a maximum distance from the enemies and shoot from far across the outdoor space. While this was a safe and effective strategy, it proved to be rather uneventful and boring for the players.

To entice players to enjoy combat up close, the user researchers decided that changes would have to be made. Their solution – changing the size and color of the aiming indicator in the center of the screen to notify players when they were too far away from enemies.

Here, you can see the finalized aiming indicator in action:

Subsequent usability tests proved these changes to be effective, as the majority of user testers now engaged in combat from a closer distance.

User testing is not restricted to any particular industry, OS, or platform. Testing user experience is an invaluable tool for any product – not just for websites or mobile apps.

This example of usability testing from the video game industry shows that players (users) will optimize the fun out of a game if given the chance. It’s up to the designers to bring the fun back through well-designed game mechanics and notifications.

Source: “Designing for Fun – User-Testing Case Studies” by Randy J. Pagulayan


Usability Testing Case Studies: Validate Assumptions and Build Software with Confidence

We define usability and examine some usability testing case studies to demonstrate the benefits.

As we’ve said before, one of the most important benefits of software prototyping is the early ability to conduct usability testing. The truth of the matter is that no one will use your product if it’s not easy and intuitive or if it doesn’t solve a problem that users have in the first place.

The easiest way to make sure your software project meets these requirements is with usability testing, and the most effective way to implement usability testing early in the development process is with a prototype .

What Is Usability Testing?

Usability testing is the process of studying potential end-users as they interact with a product prototype. Usability testing occurs before you develop and launch a product, and is an essential planning step that can guide a product’s features, functions and purpose. Developing with a clear purpose and research-based data will ensure your goals and plans are in alignment with what an end user wants and needs, and as a result that your product will be more likely to succeed. Usability testing is a type of user research, and like all user research is instrumental in building more informed products that contribute to a business’ long term success.

Intentionally observing real-life people as they interact with a product is an important step in effective user experience design that should not be missed. Without usability testing, it’s very difficult to determine or validate that your product will provide something people are willing to pay for. Companies that don’t invest in this type of upfront testing often create products that are built around their own goals, as opposed to those of their customers, which do not always align. People don’t simply want products just because they exist, and users sometimes approach applications in unexpected ways. Thus, usability testing is key for confidence building during product development.

In this post, we look at a few usability testing examples to illustrate how the process works and why it’s so essential to the overall development process.


User Testing Case Studies

Usability Testing Case Study #1: Cisco

Usability testing for user experience.

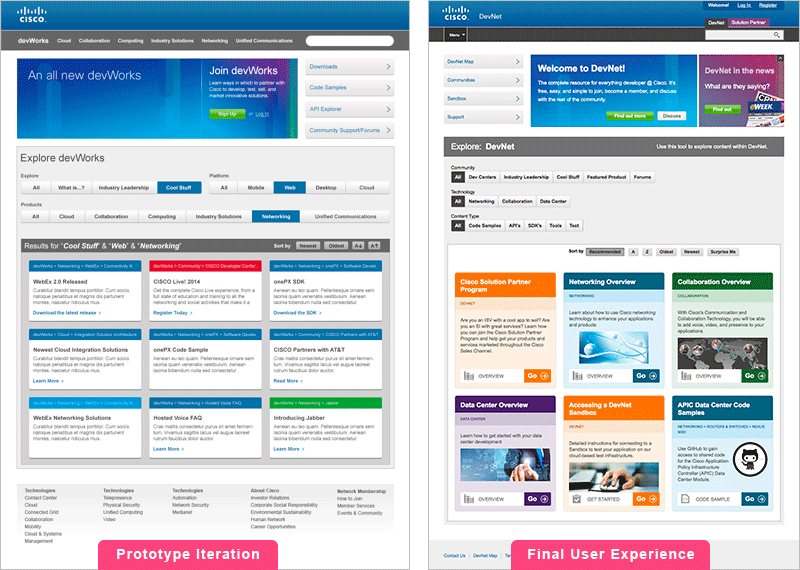

We worked with Cisco’s developer program group to craft a new, more immersive user experience for Cisco DevNet, their developer resources website. Their usability case study illustrates how we tackled their challenge, and the instrumental role that an effective prototyping strategy played in the process.

The Challenge

The depth and breadth of content on Cisco’s DevNet had spawned hundreds of micro-sites, each with different organizational structures and their own navigation paradigms. Existing visitors to the site would only visit a few specific pages, meaning they were never exposed to newly released tools and technologies. Also, new visitors struggled to discover where to begin or how to find the resources most relevant to them. Users were missing out on a lot of valuable resources, and the user experience was less than ideal.

ClickModel® Usability Testing

Cisco wanted to implement a new user experience to the homepage of DevNet in order to make it easier to dive from the homepage deep within the site’s resources to find information on a particular tool or technology. We were charged with prototyping the proposed user experience, so that Cisco could conduct usability testing with developer focus groups. To build our prototype, we implemented our ClickModel tool.

Confidence to Move Forward with Development

The ClickModel prototype emulated the new site that would appear to users. The prototype prompted insightful feedback from the developer focus groups regarding both the proposed information architecture and the priority and placement of various navigational elements on the homepage and subsequent interior landing pages. The prototype also made it easier to collect feedback on the utility of a proposed color-coding scheme for sorting resources into major technology categories.

This feedback and testing allowed Cisco’s DevNet project to course correct in the Structure, Skeleton, and Surface areas before they spent significant money building in the wrong direction. Cisco took their prototype in-house and moved forward decisively and with confidence to create better resources for the developer community.

DeveloperProgram.com runs developer programs for some of the world’s largest technology and telecoms companies. We rely on our partner Praxent who understands our business, our clients, the developer’s needs, and are able to articulate that into a portal design that is easy to navigate and understand, with the foresight to create an infrastructure that allows for untethered growth. The design team is a pleasure to work with, quickly comprehending our needs and converting that to tangible deliverables, on time and always outstanding.

— Steve Glagow, Executive Vice President • DeveloperProgram.com

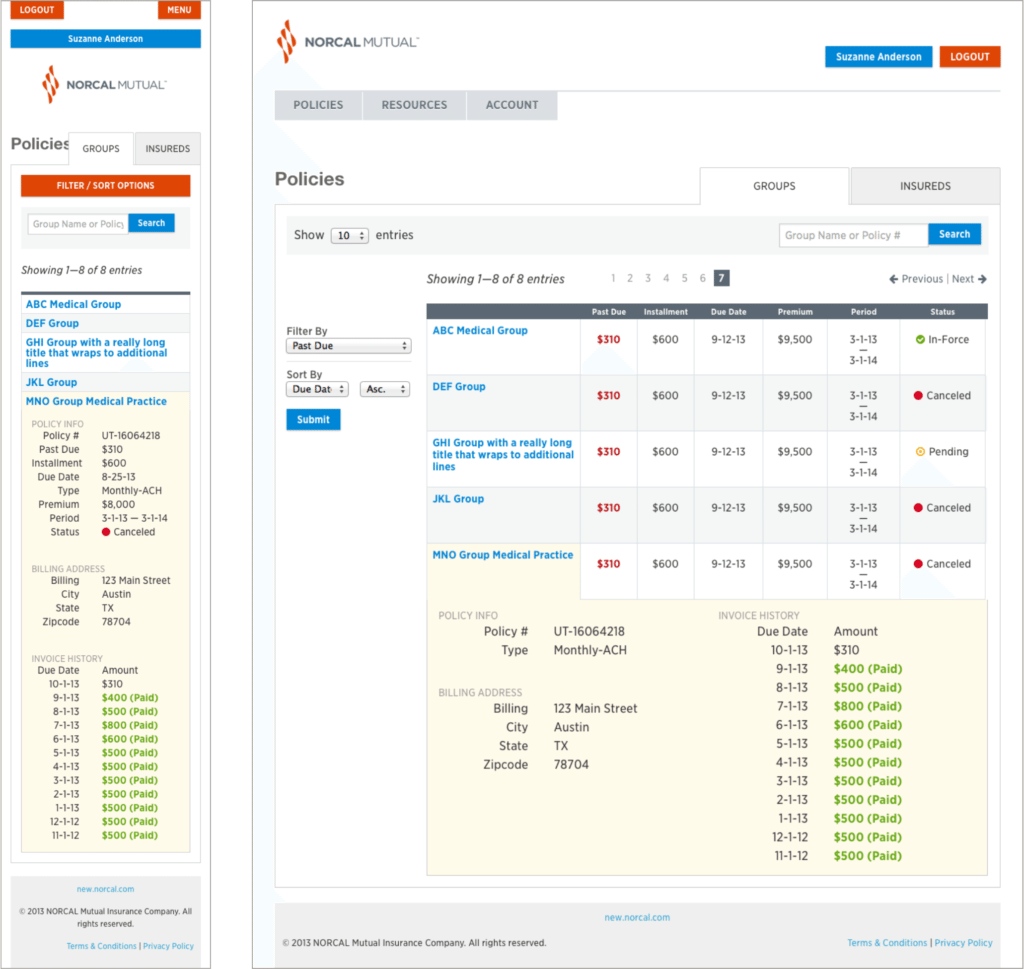

Usability Testing Case Study #2: NORCAL

Responsive data displays with usability testing.

In the wake of a corporate merger, NORCAL, a provider of medical professional liability insurance, was looking to build a new online portal. The portal would allow their insurance brokers to review their book of business and track which policyholders were behind on payments. Their billing department was inundated with phone inquiries from brokers who needed information about specific policyholder accounts, which was hindering their ability to attend to important billing tasks.

NORCAL’s insurance brokers are constantly on the go, so it was crucial that the proposed portal be not just accessible on smartphones and tablets, but optimized specifically for use on those devices.

A native app solution was discussed, but NORCAL determined early on that they wanted to invest in a responsive web application that could be accessed on desktops and mobile devices by both their internal teams and brokers in the field.

Prototyping to the Rescue

The primary user experience challenge tackled during the engagement was how to display complex data tables in a way that would be equally useful on large-screen desktop computers and handheld smartphone screens. Since multi-touch smartphone devices don’t have cursors, they can’t display information using hover states like a desktop computer can.

During the ClickModel process, we prototyped various on- and off-screen methods of data interaction displays for NORCAL’s team to review and test. This provided a few real-life usability testing examples of how they might tackle their problem.

Interacting with the clickable, tappable prototype on both desktop and mobile devices gave NORCAL crucial insight to determine what pieces of data were most essential to be displayed on the smaller smartphone screens and which additional data fields would be displayed only on desktop screens.

The ClickModel iterative prototyping process provided a clear-cut way for stakeholders from billing, marketing, and engineering to communicate effectively about the user experience. This led to important consensus and direction regarding feature requirements and scope, which was able to guide their project as they moved forward.

What Next? Getting Started With Usability Testing Studies for UX

As you can see, there are many benefits of having a prototype that looks, feels and acts real. In the two usability testing case studies above, ClickModel was an effective tool for building such prototypes, helping clients garner the information and data-backed insight they needed to proceed with confidence. Learn more about our testing process, and how it also leads to the reliable project estimates that are so important as you move forward with the development process.



The Beginner’s Guide to Usability Testing [+ Sample Questions]

Published: July 28, 2021

In practically any discipline, it's a good idea to have others evaluate your work with fresh eyes, and this is especially true in user experience and web design. Otherwise, your partiality for your own work can skew your perception of it. Learning directly from the people that your work is actually for — your users — is what enables you to craft the best user experience possible.

UX and design professionals leverage usability testing to get user feedback on their product or website’s user experience all the time. In this post, you'll learn:

- What usability testing is

- Its purpose and goals

- Scenarios where it can work

- Real-life examples and case studies

- How to conduct one of your own

- Scripted questions you can use along the way

What is usability testing?

Usability testing is a method of evaluating a product or website’s user experience. By testing the usability of their product or website with a representative group of their users or customers, UX researchers can determine if their actual users can easily and intuitively use their product or website.

UX researchers will usually conduct usability studies on each iteration of their product from its early development to its release.

During a usability study, the moderator asks participants in their individual user session to complete a series of tasks while the rest of the team observes and takes notes. By watching their actual users navigate their product or website and listening to their praises and concerns about it, they can see when the participants can quickly and successfully complete tasks and where they’re enjoying the user experience, encountering problems, and experiencing confusion.

After conducting their study, they’ll analyze the results and report any interesting insights to the project lead.


What is the purpose of usability testing?

Usability testing allows researchers to uncover any problems with their product's user experience, decide how to fix these problems, and ultimately determine if the product is usable enough.

Identifying and fixing these early issues saves the company both time and money: Developers don’t have to overhaul the code of a poorly designed product that’s already built, and the product team is more likely to release it on schedule.

Benefits of Usability Testing

Usability testing has five major advantages over the other methods of examining a product's user experience (such as questionnaires or surveys):

- Usability testing provides an unbiased, accurate, and direct examination of your product or website’s user experience. By testing its usability on a sample of actual users who are detached from the amount of emotional investment your team has put into creating and designing the product or website, their feedback can resolve most of your team’s internal debates.

- Usability testing is convenient. To conduct your study, all you have to do is find a quiet room and bring in portable recording equipment. If you don’t have recording equipment, someone on your team can just take notes.

- Usability testing can tell you what your users do on your site or product and why they take these actions.

- Usability testing lets you address your product’s or website’s issues before you spend a ton of money creating something that ends up having a poor design.

- For your business, intuitive design boosts customer usage and their results, driving demand for your product.

Usability Testing Scenario Examples

Usability testing sounds great in theory, but what value does it provide in practice? Here's what it can do to actually make a difference for your product:

1. Identify points of friction in the usability of your product.

As Brian Halligan said at INBOUND 2019, "Dollars flow where friction is low." This is just as true in UX as it is in sales or customer service. The more friction your product has, the more reason your users will have to find something that's easier to use.

Usability testing can uncover points of friction from customer feedback.

For example: "My process begins in Google Drive. I keep switching between windows and making multiple clicks just to copy and paste from Drive into this interface."

Even though the product team may have had that task in mind when they created the tool, seeing it in action and hearing the user's frustration uncovered a use case that the tool didn't compensate for. It might lead the team to solve for this problem by creating an easy import feature or way to access Drive within the interface to reduce the number of clicks the user needs to make to accomplish their task.

2. Stress test across many environments and use cases.

Our products don't exist in a vacuum, and sometimes development environments are unable to compensate for all the variables. Getting the product out and tested by users can uncover bugs that you may not have noticed while testing internally.

For example: "The check boxes disappear when I click on them."

Let's say that the team investigates why this might be, and they discover that the user is on a browser that's not commonly used (or a browser version that's outdated).

If the developers only tested across the browsers used in-house, they may have missed this bug, and it could have resulted in customer frustration.

3. Provide diverse perspectives from your user base.

While individuals in our customer bases have a lot in common (in particular, the things that led them to need and use our products), each individual is unique and brings a different perspective to the table. These perspectives are invaluable in uncovering issues that may not have occurred to your team.

For example: "I can't find where I'm supposed to click."

Upon further investigation, it's possible that this feedback came from a user who is color blind, leading your team to realize that the color choices did not create enough contrast for this user to navigate properly.

Insights from diverse perspectives can lead to design, architectural, copy, and accessibility improvements.

4. Give you clear insights into your product's strengths and weaknesses.

You likely have competitors in your industry whose products are better than yours in some areas and worse than yours in others. These variations in the market lead to competitive differences and opportunities. User feedback can help you close the gap on critical issues and identify what positioning is working.

For example: "This interface is so much easier to use and more attractive than [competitor product]. I just wish that I could also do [task] with it."

Two scenarios are possible based on that feedback:

- Your product can already accomplish the task the user wants. You just have to make it clear that the feature exists by improving copy or navigation.

- You have a really good opportunity to incorporate such a feature in future iterations of the product.

5. Inspire you with potential future additions or enhancements.

Speaking of future iterations, that brings us to the next example of how usability testing can make a difference for your product: The feedback that you gather can inspire future improvements to your tool.

It's not just about rooting out issues but also envisioning where you can go next that will make the most difference for your customers. And who better to ask than your prospective and current customers themselves?

Usability Testing Examples & Case Studies

Now that you have an idea of the scenarios in which usability testing can help, here are some real-life examples of it in action:

1. User Fountain + Satchel

Satchel is a developer of education software, and their goal was to improve the experience of the site for their users. Consulting agency User Fountain conducted a usability test focusing on one question: "If you were interested in Satchel's product, how would you progress with getting more information about the product and its pricing?"

During the test, User Fountain noted significant frustration as users attempted to complete the task, particularly when it came to locating pricing information. Only 80% of users were successful.


This led User Fountain to create the hypothesis that a "Get Pricing" link would make the process clearer for users. From there, they tested a new variation with such a link against a control version. The variant won, resulting in a 34% increase in demo requests.

By testing a hypothesis based on real feedback, friction was eliminated for the user, bringing real value to Satchel.

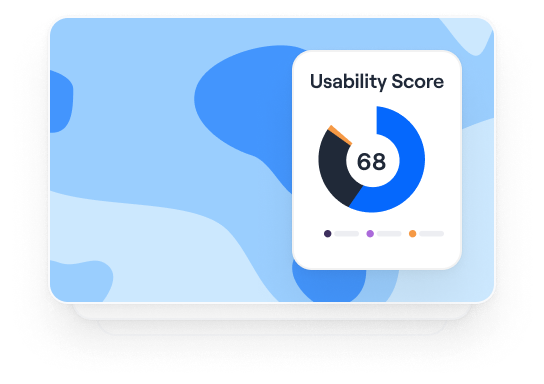

2. Kylie.Design + Digi-Key

Ecommerce site Digi-Key approached consultant Kylie.Design to uncover which site interactions had the highest success rates and what features those interactions had in common.

They conducted more than 120 tests and recorded:

- Click paths from each user

- Which actions were most common

- The success rates for each

This as well as the written and verbal feedback provided by participants informed the new design, which resulted in increasing purchaser success rates from 68.2% to 83.3%.

In essence, Digi-Key was able to identify their most successful features and double down on them, improving the experience and their bottom line.
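When reading results like these, it helps to separate the change in percentage points from the relative change, since the two are easy to confuse. The short Python sketch below works through the Digi-Key success rates quoted above (68.2% and 83.3%). The function name `success_rate_change` is made up for illustration and does not come from the case study.

```python
def success_rate_change(before_pct, after_pct):
    """Return (percentage-point change, relative change in percent).

    Illustrative helper only; the name and signature are assumptions.
    """
    # Absolute difference between the two rates, in percentage points.
    point_change = after_pct - before_pct
    # The same difference expressed relative to the starting rate.
    relative_change = point_change / before_pct * 100
    return point_change, relative_change


# Purchaser success rates reported in the Digi-Key example.
points, relative = success_rate_change(68.2, 83.3)
print(f"{points:.1f} percentage points, {relative:.1f}% relative increase")
# prints "15.1 percentage points, 22.1% relative increase"
```

Either figure can be reported, as long as the write-up states which one it is.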

3. Sparkbox + An Academic Medical Center

An academic medical center in the Midwest partnered with consulting agency Sparkbox to improve the patient experience on their homepage, where some features were suffering from low engagement.

Sparkbox conducted a usability study to determine what users wanted from the homepage and what didn't meet their expectations. From there, they were able to propose solutions to increase engagement.

For example, one key action was the ability to access electronic medical records. The new design based on user feedback increased the success rate from 45% to 94%.

This is a great example of putting the user's pains and desires front-and-center in a design.

The 9 Phases of a Usability Study

1. Decide which part of your product or website you want to test.

Do you have any pressing questions about how your users will interact with certain parts of your design, like a particular interaction or workflow? Or are you wondering what users will do first when they land on your product page? Gather your thoughts about your product or website’s pros, cons, and areas of improvement, so you can create a solid hypothesis for your study.

2. Pick your study’s tasks.

Your participants' tasks should reflect your users’ most common goals when they interact with your product or website, like making a purchase.

3. Set a standard for success.

Once you know what to test and how to test it, make sure to set clear criteria to determine success for each task. For instance, when I was in a usability study for HubSpot’s Content Strategy tool, I had to add a blog post to a cluster and report exactly what I did. Setting a threshold of success and failure for each task lets you determine if your product's user experience is intuitive enough or not.
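One way to make these criteria explicit is to write them down as thresholds before the study starts. The sketch below assumes two hypothetical thresholds per task (a minimum success rate and a maximum median completion time); the class, field names, and numbers are illustrative, not part of any HubSpot process.

```python
from dataclasses import dataclass
from statistics import median


@dataclass
class TaskCriteria:
    name: str
    min_success_rate: float    # share of participants who must complete the task
    max_median_seconds: float  # slowest acceptable median completion time


def evaluate_task(criteria, completed, seconds):
    """Check one task against its thresholds.

    completed: one bool per participant (did they finish the task?)
    seconds:   completion times for the participants who finished
    """
    success_rate = sum(completed) / len(completed)
    if not seconds:  # nobody finished, so the task cannot pass
        return False
    return (success_rate >= criteria.min_success_rate
            and median(seconds) <= criteria.max_median_seconds)


# Hypothetical thresholds and results for a five-person study.
criteria = TaskCriteria("Add a blog post to a cluster", 0.8, 90)
print(evaluate_task(criteria, [True, True, True, True, False], [40, 55, 62, 120]))
```

Fixing the thresholds in advance keeps the team from rationalizing a weak result after the sessions are over.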

4. Write a study plan and script.

At the beginning of your script, you should include the purpose of the study, whether you’ll be recording, some background on the product or website, questions to learn about the participants’ current knowledge of the product or website, and, finally, their tasks. To make your study consistent, unbiased, and scientific, moderators should follow the same script in each user session.

5. Delegate roles.

During your usability study, the moderator has to remain neutral, carefully guiding the participants through the tasks while strictly following the script. Whoever on your team is best at staying neutral, not giving in to social pressure, and making participants feel comfortable while pushing them to complete the tasks should be your moderator.

Note-taking during the study is also just as important. If there’s no recorded data, you can’t extract any insights that’ll prove or disprove your hypothesis. Your team’s most attentive listener should be your note-taker during the study.

6. Find your participants.

Screening and recruiting the right participants is the hardest part of usability testing. Most usability experts suggest you should only test five participants during each study, but your participants should also closely resemble your actual user base. With such a small sample size, it’s hard to replicate your actual user base in your study.

To recruit the ideal participants for your study, create as detailed and specific a persona as you possibly can and incentivize people who match it to participate with a gift card or another monetary reward.

Recruiting colleagues from other departments who would potentially use your product is another option. But you don’t want any of your team members to know the participants because their personal relationship can create bias -- since they want to be nice to each other, the researcher might help a user complete a task or the user might not want to constructively criticize the researcher’s product design.

7. Conduct the study.

During the actual study, you should ask your participants to complete one task at a time, without your help or guidance. If the participant asks you how to do something, don’t say anything. You want to see how long it takes users to figure out your interface.

Asking participants to “think out loud” is also an effective tactic -- you’ll know what’s going through a user’s head when they interact with your product or website.

After they complete each task, ask for their feedback, like if they expected to see what they just saw, if they would’ve completed the task if it wasn’t a test, if they would recommend your product to a friend, and what they would change about it. This qualitative data can pinpoint more pros and cons of your design.

8. Analyze your data.

You’ll collect a ton of qualitative data after your study. Analyzing it will help you discover patterns of problems, gauge the severity of each usability issue, and provide design recommendations to the engineering team.
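A simple way to surface patterns is to tally each observed issue by how many participants ran into it and how severe it was. The sketch below assumes a hypothetical 1-4 severity scale and made-up session notes; `prioritize` is an illustrative helper, not a standard method.

```python
from collections import defaultdict


def prioritize(observations):
    """observations: iterable of (participant_id, issue, severity 1-4)."""
    affected = defaultdict(set)  # issue -> participants who hit it
    worst = {}                   # issue -> highest severity observed
    for participant, issue, severity in observations:
        affected[issue].add(participant)
        worst[issue] = max(severity, worst.get(issue, 0))
    # Most severe first; ties broken by how many participants hit the issue.
    return sorted(
        ((issue, worst[issue], len(people)) for issue, people in affected.items()),
        key=lambda row: (row[1], row[2]),
        reverse=True,
    )


# Hypothetical notes from four sessions.
notes = [
    ("P1", "pricing link hard to find", 3),
    ("P2", "pricing link hard to find", 4),
    ("P3", "pricing link hard to find", 3),
    ("P1", "unclear button label", 2),
    ("P4", "unclear button label", 2),
    ("P2", "form error not explained", 4),
]
for issue, severity, count in prioritize(notes):
    print(f"severity {severity}, {count} participant(s): {issue}")
```

The ranking is only a starting point for discussion; the team still has to weigh how costly each fix would be.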

When you analyze your data, make sure to pay attention to both the users’ performance and their feelings about the product. It’s not unusual for a participant to quickly and successfully achieve your goal but still feel negatively about the product experience.

9. Report your findings.

After extracting insights from your data, report the main takeaways and lay out the next steps for improving your product or website’s design and the enhancements you expect to see during the next round of testing.

The 3 Most Common Types of Usability Tests

1. Hallway/Guerrilla Usability Testing

This is where you set up your study somewhere with a lot of foot traffic. It allows you to ask randomly selected people who have most likely never even heard of your product or website -- like passers-by -- to evaluate its user experience.

2. Remote/Unmoderated Usability Testing

Remote/unmoderated usability testing has two main advantages: it uses third-party software to recruit target participants for your study, so you can spend less time recruiting and more time researching. It also allows your participants to interact with your interface by themselves and in their natural environment -- the software can record video and audio of your user completing tasks.

Letting participants interact with your design in their natural environment with no one breathing down their neck can give you more realistic, objective feedback. When you’re in the same room as your participants, it can prompt them to put more effort into completing your tasks since they don’t want to seem incompetent around an expert. Your perceived expertise can also lead them to try to please you instead of being honest when you ask for their opinion, skewing their reactions and feedback.

3. Moderated Usability Testing

Moderated usability testing also has two main advantages: interacting with participants in person or through a video call lets you ask them to elaborate on their comments if you don’t understand them, which is impossible to do in an unmoderated usability study. You’ll also be able to help your users understand the task and keep them on track if your instructions don’t initially register with them.

Usability Testing Script & Questions

Following one script or even a template of questions for every one of your usability studies wouldn't make any sense -- each study's subject matter is different. You'll need to tailor your questions to the things you want to learn, but most importantly, you'll need to know how to ask good questions.

1. When you [action], what's the first thing you do to [goal]?

Questions such as this one give insight into how users are inclined to interact with the tool and what their natural behavior is.

Julie Fischer, one of HubSpot's Senior UX researchers, gives this advice: "Don't ask leading questions that insert your own bias or opinion into the participants' mind. They'll end up doing what you want them to do instead of what they would do by themselves."

For example, "Find [x]" is better than "Are you able to easily find [x]?" The latter inserts connotation that may affect how they use the product or answer the question.

2. How satisfied are you with the [attribute] of [feature]?

Avoid leading the participants by asking questions like "Is this feature too complicated?" Instead, gauge their satisfaction on a Likert scale that provides a number range from highly unsatisfied to highly satisfied. This will provide a less biased result than leading them to a negative answer they may not otherwise have had.
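Because Likert answers are ordinal, they are usually summarized with a median, a distribution, and a "top-two-box" share rather than a plain average. A minimal sketch, assuming a 1-5 scale where 1 is highly unsatisfied and 5 is highly satisfied; the responses are invented for illustration.

```python
from collections import Counter
from statistics import median


def summarize_likert(responses, scale_max=5):
    """Summarize ratings on a 1..scale_max satisfaction scale."""
    counts = Counter(responses)
    distribution = {score: counts.get(score, 0) for score in range(1, scale_max + 1)}
    # Share of participants who chose one of the two highest ratings.
    top_two = sum(1 for r in responses if r >= scale_max - 1) / len(responses)
    return {
        "median": median(responses),
        "distribution": distribution,
        "top_two_box": top_two,
    }


# Hypothetical ratings from eight participants.
print(summarize_likert([5, 4, 4, 2, 3, 5, 1, 4]))
```

Looking at the full distribution also reveals split opinions that a single summary number would hide.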

3. How do you use [feature]?

There may be multiple ways to achieve the same goal or utilize the same feature. This question will help uncover how users interact with a specific aspect of the product and what they find valuable.

4. What parts of [the product] do you use the most? Why?

This question is meant to help you understand the strengths of the product and what about it creates raving fans. This will indicate what you should absolutely keep and perhaps even lead to insights into what you can improve for other features.

5. What parts of [the product] do you use the least? Why?

This question is meant to uncover the weaknesses of the product or the friction in its use. That way, you can rectify any issues or plan future improvements to close the gap between user expectations and reality.

6. If you could change one thing about [feature] what would it be?

Because it's so similar to #5, you may get some of the same answers. However, you'd be surprised about the aspirational things that your users might say here.

7. What do you expect [action/feature] to do?

Here's another tip from Julie Fischer:

"When participants ask 'What will this do?' it's best to reply with the question 'What do you expect it do?' rather than telling them the answer."

Doing this can uncover user expectations as well as clarity issues with the copy.

Your Work Could Always Use a Fresh Perspective

Letting another person review and possibly criticize your work takes courage -- no one wants a bruised ego. But most of the time, when you allow people to constructively criticize or even rip apart your article or product design, especially when your work is intended to help these people, your final result will be better than you could've ever imagined.

Editor's note: This post was originally published in August 2018 and has been updated for comprehensiveness.

Don't forget to share this post!

Related articles.

The Top 13 Paid & Free Alternatives to Adobe Illustrator of 2023

Using Human-Centered Design to Create Better Products (with Examples)

![case study usability test 9 Breadcrumb Tips to Make Your Site Easier to Navigate [+ Examples]](https://blog.hubspot.com/hubfs/breadcrumb-navigation-tips.jpg)

9 Breadcrumb Tips to Make Your Site Easier to Navigate [+ Examples]

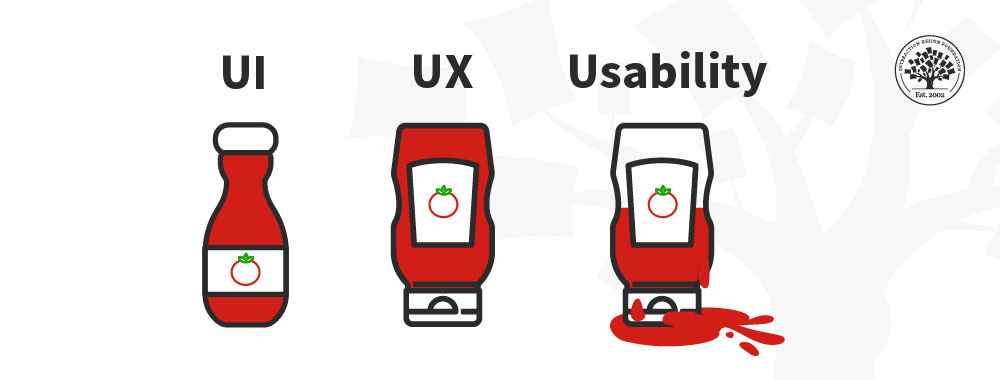

UX vs. UI: What's the Difference?

The 10 Best Storyboarding Software of 2022 for Any Budget

The Ultimate Guide to Designing for the User Experience

It’s the Little Things: How To Write Microcopy

10 Tips That Can Drastically Improve Your Website's User Experience

Intro to Adobe Fireworks: 6 Great Ways Designers Can Use This Software

Fitts's Law: The UX Hack that Will Strengthen Your Design

3 templates for conducting user tests, summarizing UX research, and presenting findings.

Marketing software that helps you drive revenue, save time and resources, and measure and optimize your investments — all on one easy-to-use platform

Skip navigation

- Log in to UX Certification

World Leaders in Research-Based User Experience

Usability Testing 101

December 1, 2019

Usability testing is a popular UX research methodology.

Definition: In a usability-testing session, a researcher (called a “facilitator” or a “moderator”) asks a participant to perform tasks, usually using one or more specific user interfaces. While the participant completes each task, the researcher observes the participant’s behavior and listens for feedback.

The phrase “usability testing” is often used interchangeably with “user testing.”

In This Article:

- Why Usability Test?

- Elements of Usability Testing

- Types of Usability Testing

- Cost of Usability Testing

- NN/g Resources for Usability Testing

The goals of usability testing vary by study, but they usually include:

- Identifying problems in the design of the product or service

- Uncovering opportunities to improve

- Learning about the target user’s behavior and preferences

Why do we need to do usability testing? Won’t a good professional UX designer know how to design a great user interface? Even the best UX designers can’t design a perfect — or even good enough — user experience without iterative design driven by observations of real users and of their interactions with the design.

There are many variables in designing a modern user interface and there are even more variables in the human brain. The total number of combinations is huge. The only way to get UX design right is to test it.

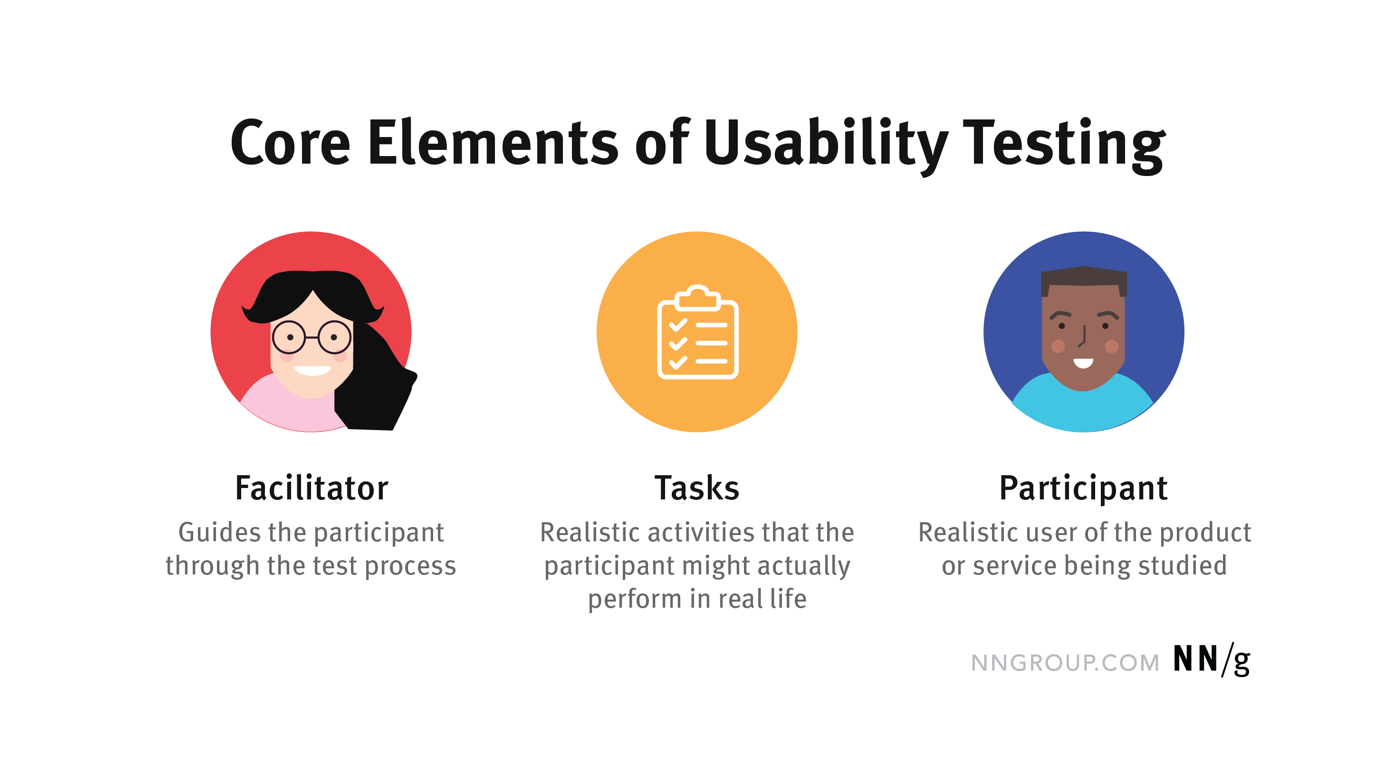

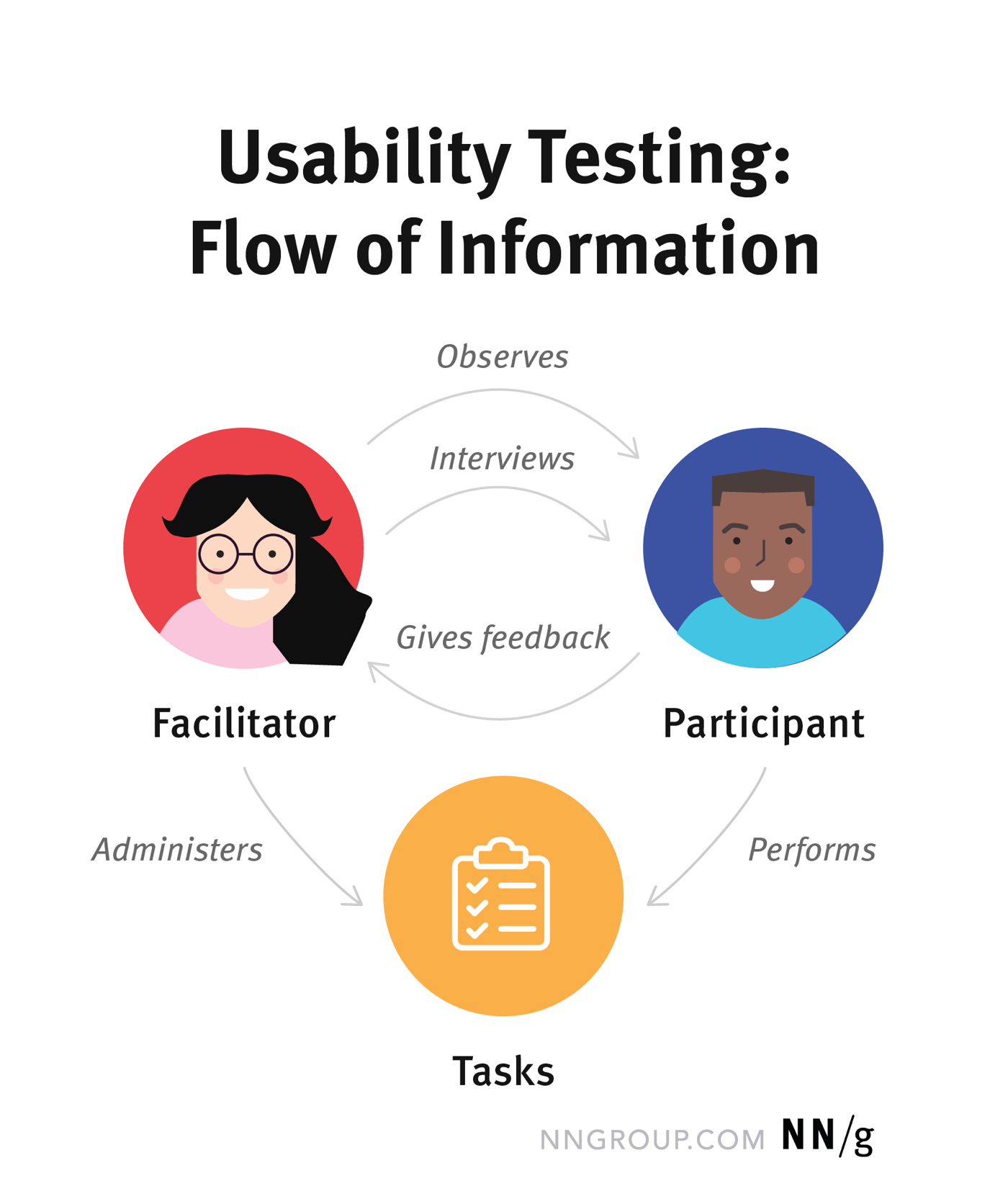

There are many different types of usability testing, but the core elements in most usability tests are the facilitator, the tasks, and the participant.

The facilitator administers tasks to the participant. As the participant performs these tasks, the facilitator observes the participant’s behavior and listens for feedback. The facilitator may also ask followup questions to elicit detail from the participant.

Facilitator

The facilitator guides the participant through the test process. She gives instructions, answers the participant’s questions, and asks followup questions.

The facilitator works to ensure that the test results in high-quality, valid data, without accidentally influencing the participant’s behavior. Achieving this balance is difficult and requires training.

(In one form of remote usability testing, called remote unmoderated testing, an application may perform some of the facilitator’s roles.)

Tasks

The tasks in a usability test are realistic activities that the participant might perform in real life. They can be very specific or very open-ended, depending on the research questions and the type of usability testing.

Examples of tasks from real usability studies:

- Your printer is showing “Error 5200”. How can you get rid of the error message?

- You're considering opening a new credit card with Wells Fargo. Please visit wellsfargo.com and decide which credit card you might want to open, if any.

- You’ve been told you need to speak to Tyler Smith from the Project Management department. Use the intranet to find out where they are located. Tell the researcher your answer.

Task wording is very important in usability testing. Small errors in the phrasing of a task can cause the participant to misunderstand what they’re asked to do or can influence how participants perform the task (a psychological phenomenon called priming).

Task instructions can be delivered to the participant verbally (the facilitator might read them) or can be handed to a participant written on task sheets. We often ask participants to read the task instructions out loud. This helps ensure that the participant reads the instructions completely, and helps the researchers with their notetaking, because they always know which task the user is performing.

Participant

The participant should be a realistic user of the product or service being studied. That might mean that the user is already using the product or service in real life. Alternatively, in some cases, the participant might just have a similar background to the target user group, or might have the same needs, even if he isn’t already a user of the product.

Participants are often asked to think out loud during usability testing (called the “think-aloud method”). The facilitator might ask the participants to narrate their actions and thoughts as they perform tasks. The goal of this approach is to understand participants’ behaviors, goals, thoughts, and motivations.

Qualitative vs. Quantitative

Usability testing can be either qualitative or quantitative.

Qualitative usability testing focuses on collecting insights, findings, and anecdotes about how people use the product or service. Qualitative usability testing is best for discovering problems in the user experience. This form of usability testing is more common than quantitative usability testing.

Quantitative usability testing focuses on collecting metrics that describe the user experience. Two of the metrics most commonly collected in quantitative usability testing are task success and time on task. Quantitative usability testing is best for collecting benchmarks.
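As an illustration of the two metrics named above, the sketch below computes a confidence interval for task success and a typical time on task. It assumes the adjusted Wald (Agresti-Coull) interval and the geometric mean, which are common choices for small usability samples but are not prescribed by this article; the function names and sample numbers are hypothetical.

```python
from math import sqrt
from statistics import NormalDist, geometric_mean


def success_rate_interval(successes, n, confidence=0.95):
    """Adjusted Wald (Agresti-Coull) interval for a task success rate."""
    z = NormalDist().inv_cdf(1 - (1 - confidence) / 2)
    n_adj = n + z ** 2
    p_adj = (successes + z ** 2 / 2) / n_adj
    margin = z * sqrt(p_adj * (1 - p_adj) / n_adj)
    return max(0.0, p_adj - margin), min(1.0, p_adj + margin)


def typical_time(seconds):
    """Geometric mean, which is less distorted by a few very slow participants."""
    return geometric_mean(seconds)


# Hypothetical benchmark: 18 of 20 participants completed the task.
low, high = success_rate_interval(18, 20)
print(f"task success: 90% observed, 95% CI {low:.0%} to {high:.0%}")
print(f"time on task: {typical_time([35, 42, 51, 48, 190]):.0f} s")
```

The width of the interval is a useful reminder of how much uncertainty remains even with twenty participants.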

The number of participants needed for a usability test varies depending on the type of study. For a typical qualitative usability study of a single user group, we recommend using five participants to uncover the majority of the most common problems in the product.
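The five-participant guideline is usually justified with a simple discovery model: if each participant independently exposes a share L of the problems, n participants expose 1 - (1 - L)^n of them. The sketch below assumes L = 0.31, the average often cited alongside this guideline; the function name is illustrative, and the real rate varies by product and task.

```python
def share_of_problems_found(n_users, discovery_rate=0.31):
    """Expected share of usability problems seen at least once by n_users."""
    return 1 - (1 - discovery_rate) ** n_users


# Diminishing returns: each extra participant reveals fewer new problems.
for n in (1, 3, 5, 10, 15):
    print(f"{n:>2} users: {share_of_problems_found(n):.0%}")
```

Under this assumption, five participants already uncover well over three quarters of the problems, which is why several small rounds of testing tend to be more useful than one large one.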

Remote vs. In-Person Testing

Remote usability tests are popular because they often require less time and money than in-person studies. There are two types of remote usability testing: moderated and unmoderated.

Remote moderated usability tests work very similarly to in-person studies. The facilitator still interacts with the participant and asks her to perform tasks. However, the facilitator and participant are in different physical locations. Usually, moderated tests can be performed using screen-sharing software like Skype or GoToMeeting.

Remote unmoderated usability tests do not have the same facilitator–participant interaction as in-person or moderated tests. The researcher uses a dedicated online remote-testing tool to set up written tasks for the participant. Then, the participant completes those tasks alone on her own time. The testing tool delivers the task instructions and any followup questions. After the participant completes her test, the researcher receives a recording of the session, along with metrics like task success.

Simple, “discount” usability studies can be inexpensive, though you usually must pay a few hundred dollars as incentives to participants. The testing session can take place in a conference room, and the simplest study will take 3 days of your time (assuming that you have already learned how to do it, and you have access to participants):

- Day 1: Plan the study

- Day 2: Test the 5 users

- Day 3: Analyze the findings and convert them into redesign recommendations for the next iteration

On the other hand, more-expensive research is sometimes required, and the cost can run into several hundred thousand dollars for the most elaborate studies.

Things that add cost include:

- Competitive testing of multiple designs

- International testing in multiple countries

- Testing with multiple user groups (or personas)

- Quantitative studies

- Use of fancy equipment like eyetrackers

- Needing a true usability lab or focus group room to allow others to observe

- Wanting a detailed analysis and report about the findings

The return on investment (ROI) for advanced studies can still be high, though usually not as high as that for simple studies.

- Qualitative Usability Testing (Study Guide)

- User Testing: Why & How (Video)

- How to Conduct Usability Studies (Report)

- How to Set Up a Desktop Usability Test (Video)

- How to Set Up a Mobile Usability Test (Video)

- Turning User Goals into Task Scenarios for Usability Testing (Article)

- Usability Testing for Mobile Is Easy (Article)

Facilitating a Usability Test

For hands-on training and help honing your facilitation skills, check out our full-day course on usability testing.

- Talking with Participants During a Usability Test (Article)

- User Testing Facilitation Techniques (Video)

- Team Members Behaving Badly During Usability Tests (Article)

- Thinking Aloud: The #1 Usability Tool (Article)

Recruiting Participants

- Recruiting Test Participants for Usability Studies (Article)

- Why You Only Need to Test with 5 Users (Article)

- How Many Test Users in a Usability Study? (Article)

- Usability Testing with 5 Users: Design Process (Video)

- Usability Testing with 5 Users: ROI Criteria (Video)

- Usability Testing with 5 Users: Information Foraging (Video)

- Employees as Usability-Test Participants (Article)

- Using Usability-Test Participants Multiple Times (Video)

- Obtaining Consent for User Research (Article)

Remote Usability Testing

For detailed help planning, conducting, and analyzing remote user testing, check out our full-day seminar: Remote Usability Testing.

- Remote Usability Tests: Moderated and Unmoderated (Article)

- Remote Moderated Usability Tests: How and Why to Do Them (Article)

- Remote Unmoderated User Tests: How and Why to Do Them (Article)

- Tools for Unmoderated Usability Testing (Article)

Special Usability Testing Studies or User Groups

- Quantitative vs. Qualitative Usability Tests (Article)

- Conducting Usability Testing with Real Users’ Real Data (Article)

- How to Conduct Usability Studies for Accessibility (Report)

- Paper Prototyping: Getting User Data Before You Code (Article)

- Paper Prototyping 101 (Video)

- Beyond the NPS: Measuring Perceived Usability (Article)

- International Usability Testing (Article)

- Usability Testing with Minors (Article)

Usability Testing: Everything You Need to Know (Methods, Tools, and Examples)

As you crack into the world of UX design, there’s one thing you absolutely must understand and learn to practice like a pro: usability testing.

Precisely because it’s such a critical skill to master, it can be a lot to wrap your head around. What is it exactly, and how do you do it? How is it different from user testing? What are some actual methods that you can employ?

In this guide, we’ll give you everything you need to know about usability testing—the what, the why, and the how.

Here’s what we’ll cover:

- What is usability testing and why does it matter?

- Usability testing vs. user testing

- Formative vs. summative usability testing

- Attitudinal vs. behavioral research

- Five essential usability testing methods: performance testing, card sorting, tree testing, 5-second test, and eye tracking

- How to learn more about usability testing

Ready? Let’s dive in.

1. What is usability testing and why does it matter?

Simply put, usability testing is the process of discovering ways to improve your product by observing users as they engage with the product itself (or a prototype of the product). It’s a UX research method specifically trained on (you guessed it) the usability of your products. And what is usability? Usability is a measure of how easily users can accomplish a given task with your product.

Usability testing, when executed well, uncovers pain points in the user journey and highlights barriers to good usability. It will also help you learn about your users’ behaviors and preferences as these relate to your product, and to discover opportunities to design for needs that you may have overlooked.

You can conduct usability testing at any point in the design process when you’ve turned initial ideas into design solutions, but the earlier the better. Test early and test often! You can conduct some kind of usability testing with low- and high-fidelity prototypes alike, and testing should continue after you’ve got a live, out-in-the-world product.

2. Usability testing vs. user testing

Though they sound similar and share a somewhat similar end goal, usability testing and user testing are two different things. We’ll look at the differences in a moment, but first, here’s what they have in common:

- Both share the end goal of creating a design solution to meet real user needs

- Both take the time to observe and listen to the user to hear from them what needs/pain points they experience

- Both look for feasible ways of meeting those needs or addressing those pain points

User testing essentially asks if this particular kind of user would want this particular kind of product—or what kind of product would benefit them in the first place. It is entirely user-focused.

Usability testing, on the other hand, is more product-focused and looks at users’ needs in the context of an existing product (even if that product is still in prototype stages of development). Usability testing takes your existing product and places it in the hands of your users (or potential users) to see how the product actually works for them—how they’re able to accomplish what they need to do with the product.

3. Formative vs. summative usability testing

Alright! Now that you understand what usability testing is, and what it isn’t, let’s get into the various types of usability testing out there.

There are two broad categories of usability testing that are important to understand: formative and summative. These have to do with when you conduct the testing and what your broad objectives are, that is, what overarching impact the testing should have on your product.

Formative usability testing:

- Is a qualitative research process

- Happens earlier in the design, development, or iteration process

- Seeks to understand what about the product needs to be improved

- Results in qualitative findings and ideation that you can incorporate into prototypes and wireframes

Summative usability testing:

- Is a research process that’s more quantitative in nature

- Happens later in the design, development, or iteration process

- Seeks to understand whether the solutions you are implementing (or have implemented) are effective

- Results in quantitative findings that can help determine broad areas for improvement or specific areas to fine-tune (this can go hand in hand with competitive analysis)

4. Attitudinal vs. behavioral research

Alongside the timing and purpose of the testing (formative vs. summative), it’s important to understand two broad categories that your research (both your objectives and your findings) will fall into: behavioral and attitudinal.

Attitudinal research is all about what people say: what they think and communicate about your product and how it works. Behavioral research focuses on what people do: how they actually interact with your product and the feelings that surface as a result.

What people say and what people do are often two very different things. These two categories help define those differences, choose our testing methods more intentionally, and categorize our findings more effectively.

5. Five essential usability testing methods

Some usability testing methods are geared more towards uncovering either behavioral or attitudinal findings; but many have the potential to result in both.

Of the methods you’ll learn about in this section, performance testing has the greatest potential for targeting both—and will perhaps require the greatest amount of thoughtfulness regarding how you approach it.

Naturally, then, we’ll spend a little more time on that method than the other four, though that in no way diminishes their usefulness! Here are the methods we’ll cover:

- Performance testing

- Card sorting

- Tree testing

- 5-second test

- Eye tracking

These are merely five common and/or interesting methods—it is not a comprehensive list of every method you can use to get inside the hearts and minds of your users. But it’s a place to start. So here we go!

In performance testing, you sit down with a user and give them a task (or set of tasks) to complete with the product.

This is often a combination of methods and approaches that will allow you to interview users, see how they use your product, and find out how they feel about the experience afterward. Depending on your approach, you’ll observe them, take notes, and/or ask usability testing questions before, after, or along the way.

Performance testing is by far the most talked-about form of usability testing—especially as it’s often combined with other methods. Performance testing is what most commonly comes to mind in discussions of usability testing as a whole, and it’s what many UX design certification programs focus on—because it’s so broadly useful and adaptive.

While there’s no one right way to conduct performance testing, there are a number of approaches and combinations of methods you can use, and you’ll want to be intentional about it.

It’s a method that you can adapt to your objectives—so make sure you do! Ask yourself what kind of attitudinal or behavioral findings you’re really looking for, how much time you’ll have for each testing session, and what methods or approaches will help you reach your objectives most efficiently.

Performance testing is often combined with user interviews.

Even if you choose not to combine performance testing with user interviews, good performance testing will still involve some degree of questioning and moderating.

Performance testing typically results in a pretty massive chunk of qualitative insights, so you’ll need to devote a fair amount of intention and planning before you jump in.

Maximize the usefulness of your research by being thoughtful about the task(s) you assign and what approach you take to moderating the sessions. As your test participants go about the task(s) you assign, you’ll watch, take notes, and ask questions either during or after the test—depending on your approach.

Four approaches to performance testing

There are four ways you can go about moderating a performance test, and it’s worth understanding and choosing your approach (or combination of approaches) carefully and intentionally. As you choose, take time to consider:

- How much guidance the participant will actually need

- How intently participants will need to focus

- How guidance or prompting from you might affect results or observations

With these things in mind, let’s look at the four approaches.

Concurrent Think Aloud (CTA)

With this approach, you’ll encourage participants to externalize their thought process—to think out loud. Your job during the session will be to keep them talking through what they’re looking for, what they’re doing and why, and what they think about the results of their actions.

A CTA approach often uncovers a lot of nuanced details in the user journey, but if your objectives include anything related to the accuracy or time for task completion, you might be better off with a Retrospective Think Aloud.

Retrospective Think Aloud (RTA)

Here, you’ll allow participants to complete their tasks and recount the journey afterward. They can complete tasks in a more realistic time frame and with a more realistic degree of accuracy, though there will certainly be nuanced details of participants’ thoughts and feelings you’ll miss out on.

Concurrent Probing (CP)

With Concurrent Probing, you ask participants about their experience as they’re having it. You prompt them for details on their expectations, reasons for particular actions, and feelings about results.

This approach can be distracting, but used in combination with CTA, you can allow participants to complete the tasks and prompt only when you see a particularly interesting aspect of their experience, and you’d like to know more. Again, if accuracy and timing are critical objectives, you might be better off with Retrospective Probing.

Retrospective Probing (RP)

If you note that a participant says or does something interesting as they complete their task(s), you can note it and ask them about it later—this is Retrospective Probing. This is an approach very often combined with CTA or RTA to ensure that you’re not missing out on those nuanced details of their experience without distracting them from actually completing the task.

Whew! There’s your quick overview of performance testing. To learn more about it, read on to the final section of this article: How to learn more about usability testing.

With this under our belts, let’s move on to our other four essential usability testing methods.

Card sorting is a way of testing the usability of your information architecture. You give users blank cards (open card sorting) or cards labeled with the names and short descriptions of the main items/sections of the product (closed card sorting), then ask them to sort the cards into piles according to which items seem to go best together. You can go even further by asking them to sort the cards into larger groups and to name the groups or piles.

Rather than structuring your site or app according to your understanding of the product, card sorting allows the information architecture to mirror the way your users are thinking.

This is a great technique to employ very early in the design process as it is inexpensive and will save the time and expense of making structural adjustments later in the process. And there’s no technology required! If you want to conduct it remotely, though, there are tools like OptimalSort that do this effectively.
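Once the cards are sorted, one simple way to see how users think is to count how often each pair of cards ended up in the same pile. The sketch below is only an illustration: the card names and the `co_occurrence` helper are hypothetical and not taken from OptimalSort or any other tool.

```python
from collections import Counter
from itertools import combinations


def co_occurrence(sorts):
    """Count how many participants placed each pair of cards in the same pile."""
    counts = Counter()
    for piles in sorts:
        for pile in piles:
            # Sort the pile so each pair is always stored in the same order.
            for a, b in combinations(sorted(pile), 2):
                counts[(a, b)] += 1
    return counts


# Hypothetical results: one list of piles per participant.
sorts = [
    [["Pricing", "Plans"], ["Help", "Contact"]],
    [["Pricing", "Plans", "Contact"], ["Help"]],
    [["Pricing", "Plans"], ["Help", "Contact"]],
]

pairs = co_occurrence(sorts)
for (a, b), n in pairs.most_common():
    print(f"{a} + {b}: grouped together by {n} of {len(sorts)} participants")
```

Pairs that most participants group together are good candidates to sit in the same section of your navigation.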

Tree testing is a great follow-up to card sorting, but it can be conducted on its own as well. In tree testing, you create a visual information hierarchy (or “tree”) and ask users to complete a task using the tree. For example, you might ask users, “You want to accomplish X with this product. Where do you go to do that?” Then you observe how easily users are able to find what they’re looking for.

This is another great technique to employ early in the design process. It can be conducted with paper prototypes or spreadsheets, but you can also use tools such as TreeJack to accomplish this digitally and remotely.
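If you run tree testing on paper or in a spreadsheet, you can score the results yourself. The sketch below assumes you record the nodes each participant visited, in order. The node names, the `score_tree_test` helper, and the definitions of "success" and "direct" are assumptions made for illustration, not the metrics of any particular tool.

```python
def score_tree_test(paths, correct_node):
    """Return (success_rate, direct_rate) for one tree-testing task.

    Each path is the list of nodes a participant visited, in order.
    Success: the path ends at correct_node.
    Direct: a success in which no node was visited twice (no backtracking).
    """
    successes = [p for p in paths if p and p[-1] == correct_node]
    direct = [p for p in successes if len(p) == len(set(p))]
    total = len(paths)
    return len(successes) / total, len(direct) / total


# Hypothetical task: "Where would you go to update your payment details?"
paths = [
    ["Home", "Account", "Billing"],
    ["Home", "Help", "Home", "Account", "Billing"],  # backtracked once
    ["Home", "Help", "FAQ"],                          # ended in the wrong place
    ["Home", "Account", "Billing"],
]

success_rate, direct_rate = score_tree_test(paths, "Billing")
print(f"Success: {success_rate:.0%}, direct success: {direct_rate:.0%}")
```

A large gap between the two rates suggests users get there eventually but the labels along the way are sending them in the wrong direction first.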

In the 5-second test, you expose your users to one portion of your product (one screen, probably the top half of it) for five seconds and then interview them to see what they took away regarding:

- The product/page’s purpose and main features or elements

- The intended audience and trustworthiness of the brand

- Their impression of the usability and design of the product

You can conduct this kind of testing in person rather simply, or remotely with tools like UsabilityHub.

Eye tracking may seem somewhat new, but it’s been around for a while, though the tools and technology around it have evolved. Eye tracking on its own isn’t enough to determine usability, but it’s a great complement to your other usability testing measures.

In eye tracking, you literally track where most users’ eyes land on the screen you’re designing. This is important because you want to make sure that the elements users’ eyes are drawn to are the ones that communicate the most important information. It is difficult to conduct in any kind of analog fashion, but there are a lot of tools out there that make it simple: CrazyEgg and HotJar are both great places to start.

6. How to learn more about usability testing

There you have it: your 15-minute overview of the what, why, and how of usability testing. But don’t stop here! Usability testing and UX research as a whole have a deeply humanizing impact on the design process. It’s a fascinating field to discover and the result of this kind of work has the power of keeping companies, design teams, and even the lone designer accountable to what matters most: the needs of the end user.

If you’d like to learn more about usability testing and UX research, take the free UX Research for Beginners Course with CareerFoundry. This tutorial is jam-packed with information that will give you a deeper understanding of the value of this kind of testing as well as a number of other UX research methods.

You can also enroll in a UX design course or bootcamp to get a comprehensive understanding of the entire UX design process (of which usability testing and UX research are integral parts). For guidance on the best programs, check out our list of the 10 best UX design certification programs. And if you’ve already started your learning process, and you’re thinking about the job hunt, here are the top 5 UX research interview questions to be ready for.

For further reading about usability testing and UX research, check out these other articles:

- How to conduct usability testing: a step-by-step guide

- What does a UX researcher actually do? The ultimate career guide

- 11 usability heuristics every designer should know

- How to conduct a UX audit

Usability Testing

What is usability testing?

Usability testing is the practice of testing how easy a design is to use with a group of representative users. It usually involves observing users as they attempt to complete tasks and can be done for different types of designs. It is often conducted repeatedly, from early development until a product’s release.

“It’s about catching customers in the act, and providing highly relevant and highly contextual information.”

— Paul Maritz, CEO at Pivotal

Usability Testing Leads to the Right Products

Through usability testing, you can find design flaws you might otherwise overlook. When you watch how test users behave while they try to execute tasks, you’ll get vital insights into how well your design/product works. Then, you can leverage these insights to make improvements. Whenever you run a usability test, your chief objectives are to:

1) Determine whether testers can complete tasks successfully and independently.

2) Assess their performance and mental state as they try to complete tasks, to see how well your design works.

3) See how much users enjoy using it.

4) Identify problems and their severity.

5) Find solutions.

While usability tests can help you create the right products, they shouldn’t be the only tool in your UX research toolbox. If you just focus on the evaluation activity, you won’t improve the usability overall.

There are different methods for usability testing. Which one you choose depends on your product and where you are in your design process.

Usability Testing is an Iterative Process

To make usability testing work best, you should:

1) Plan –

a. Define what you want to test. Ask yourself questions about your design/product. Which aspects of it do you want to test? You can make a hypothesis from each answer. With a clear hypothesis, you’ll have the exact aspect you want to test.

b. Decide how to conduct your test – e.g., remotely. Define the scope of what to test (e.g., navigation) and stick to it throughout the test. When you test aspects individually, you’ll eventually build a broader view of how well your design works overall.

2) Set user tasks –

a. Prioritize the most important tasks to meet objectives (e.g., complete checkout), no more than 5 per participant. Allow a 60-minute timeframe.

b. Clearly define tasks with realistic goals.

c. Create scenarios where users can try to use the design naturally. That means you let them get to grips with it on their own rather than direct them with instructions.

3) Recruit testers – Know who your users are as a target group. Use screening questionnaires (e.g., Google Forms) to find suitable candidates. You can advertise and offer incentives. You can also find contacts through community groups, etc. If you test with only 5 users, you can still reveal 85% of core issues.

4) Facilitate/Moderate testing – Set up testing in a suitable environment. Observe and interview users. Notice issues. See if users fail to see things, go in the wrong direction or misinterpret rules. When you record usability sessions, you can more easily count the number of times users become confused. Ask users to think aloud and tell you how they feel as they go through the test. From this, you can check whether your designer’s mental model is accurate: Does what you think users can do with your design match what these test users show?

If you choose remote testing, you can moderate via Google Hangouts, etc., or use unmoderated testing. Dedicated remote-testing software supports both moderated and unmoderated testing and offers tools such as heatmaps.
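The claim that 5 users reveal about 85% of issues is usually explained with a simple model: if each participant independently has the same chance `p` of running into a given problem, then `n` participants are expected to uncover a share of `1 - (1 - p) ** n` of the problems. The sketch below uses `p = 0.31`, a value commonly quoted alongside the 85% figure. That value is an assumption here, not something stated in this article, and your own product may behave quite differently.

```python
def share_of_problems_found(n_users, p_single=0.31):
    """Expected share of problems seen at least once by n_users participants.

    Assumes every participant has the same, independent chance (p_single)
    of encountering any given problem. p_single = 0.31 is an assumed value.
    """
    return 1 - (1 - p_single) ** n_users


for n in (1, 3, 5, 10, 15):
    print(f"{n:>2} users: {share_of_problems_found(n):.0%}")
```

Under these assumptions, the gain from each extra participant shrinks quickly, which is one argument for running several small rounds of testing rather than one large one.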

Keep usability tests smooth by following these guidelines.

1) Assess user behavior – Use these metrics:

Quantitative – time users take on a task, success and failure rates, effort (how many clicks users take, instances of confusion, etc.)

Qualitative – users’ stress responses (facial reactions, body-language changes, squinting, etc.), subjective satisfaction (which they give through a post-test questionnaire) and perceived level of effort/difficulty

2) Create a test report – Review video footage and analyzed data. Clearly define design issues and best practices. Involve the entire team.
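The quantitative metrics above are straightforward to compute once you have logged each session. The following is a minimal sketch with made-up data. The field names (`completed`, `seconds`, `clicks`) and the `summarize` helper are hypothetical, and the choice of median time for successful attempts is just one reasonable option.

```python
from statistics import mean, median

# Hypothetical session log for one task, one record per participant.
sessions = [
    {"participant": "P1", "completed": True, "seconds": 74, "clicks": 9},
    {"participant": "P2", "completed": True, "seconds": 58, "clicks": 7},
    {"participant": "P3", "completed": False, "seconds": 180, "clicks": 21},
    {"participant": "P4", "completed": True, "seconds": 91, "clicks": 12},
    {"participant": "P5", "completed": True, "seconds": 66, "clicks": 6},
]


def summarize(sessions):
    """Summarize success rate, time on task, and effort for one task."""
    done = [s for s in sessions if s["completed"]]
    return {
        "success_rate": len(done) / len(sessions),
        # Time is summarized only for successful attempts; the median is
        # less sensitive to one very slow participant than the mean.
        "median_seconds_successful": median(s["seconds"] for s in done) if done else None,
        "mean_clicks": mean(s["clicks"] for s in sessions),
    }


summary = summarize(sessions)
print(summary)
```

Numbers like these belong in the test report next to the qualitative observations, since a high success rate can still hide a frustrating experience.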

Overall, you should test not your design’s functionality, but users’ experience of it. Some users may be too polite to be entirely honest about problems. So, always examine all data carefully.

Learn More about Usability Testing

Take our course on usability testing.

Here’s a quick-fire method to conduct usability testing.

See some real-world examples of usability testing.

Take some helpful usability testing tips.

Questions related to Usability Testing

To conduct usability testing effectively:

Start by defining clear, objective goals and recruit representative users.

Develop realistic tasks for participants to perform and set up a controlled, neutral environment for testing.

Observe user interactions, noting difficulties and successes, and gather qualitative and quantitative data.

After testing, analyze the results to identify areas for improvement.

For a comprehensive understanding and step-by-step guidance on conducting usability testing, refer to our specialized course on Conducting Usability Testing.

Conduct usability testing early and often, from the design phase to development and beyond. Early design testing uncovers issues when they are easier and less costly to fix. Regular assessments throughout the project lifecycle ensure continued alignment with user needs and preferences. Usability testing is crucial for new products and when redesigning existing ones to verify improvements and discover new problem areas. Dive deeper into optimal timing and methods for usability testing in our detailed article “Usability: A part of the User Experience.”

Incorporate insights from William Hudson, CEO of Syntagm, to enhance usability testing strategies. William recommends techniques like tree testing and first-click testing for early design phases to scrutinize navigation frameworks. These methods are exceptionally suitable for isolating and evaluating specific components without visual distractions, focusing strictly on user understanding of navigation. They're advantageous for their quantitative nature, producing actionable numbers and statistics rapidly, and being applicable at any project stage. Ideal for both new and existing solutions, they help identify problem areas and assess design elements effectively.

To conduct usability testing for a mobile application:

Start by identifying the target users and creating realistic tasks for them.

Collect data on their interactions and experiences to uncover issues and areas for improvement.

For instance, consider the concept of ‘tappability’ as explained by Frank Spillers, CEO: focusing on creating task-oriented, clear, and easily tappable elements is crucial.

Employing correct affordances and signifiers, like animations, can clarify interactions and enhance user experience, avoiding user frustration and errors. Dive deeper into mobile usability testing techniques and insights by watching our insightful video with Frank Spillers.

For most usability tests, the ideal number of participants depends on your project’s scope and goals. Our video featuring William Hudson, CEO of Syntagm, emphasizes the importance of quality in choosing participants as it significantly impacts the usability test's results.

He shares insightful experiences and stresses the importance of carefully selecting and recruiting participants to ensure constructive and reliable feedback. The process involves meticulous planning and execution to identify and discard data from non-contributive participants, and to ensure that meaningful and trustworthy insights are gathered to improve the interactive solution, be it an app or a website. Remember the emphasis on participants’ attentiveness and consistency while performing tasks to avoid compromising the results. Watch the full video for a more comprehensive understanding of participant recruitment and usability testing.