Verbal Behavior: Skinner’s Revolutionary Approach to Language and Communication

B.F. Skinner’s groundbreaking theory of verbal behavior shattered traditional notions of language, ushering in a new era of understanding human communication through the lens of behavioral psychology. This revolutionary approach to language acquisition and use sent shockwaves through the academic world, challenging long-held beliefs about how we learn to speak, write, and interact with one another.

Imagine, if you will, a world where every word you utter, every gesture you make, and every scribble on paper is shaped by the environment around you. This is the world Skinner invited us to explore – a world where language isn’t just a cognitive process but a behavior molded by consequences and reinforcement. It’s a bit like thinking of your words as tiny acrobats, each one performing based on the applause (or lack thereof) from the audience around you.

Verbal Behavior: More Than Just Talk

So, what exactly is verbal behavior? Well, it’s not your grandma’s grammar lesson, that’s for sure. The analysis of verbal behavior, which explores language through a behavioral lens, gives us a peek into this fascinating world. Skinner defined verbal behavior as any behavior reinforced through the mediation of another person. In simpler terms, it’s not just about the words coming out of your mouth; it’s about why they’re coming out and what happens when they do.

Think about it this way: when you were a kid and said “cookie” for the first time, and your mom handed you a delicious chocolate chip treat, you learned that saying “cookie” gets you cookies. That’s verbal behavior in action! It’s not just about the word itself, but the effect it has on the world around you.

This approach stands in stark contrast to traditional linguistics, which often treats language as a purely cognitive process. Skinner’s theory suggests that language, like any other behavior, is shaped by its consequences. It’s less about innate grammatical structures and more about learning what works in different situations.

Skinner’s Verbal Behavior: A Deep Dive

Now, let’s roll up our sleeves and dive into the nitty-gritty of Skinner’s theory. Picture Skinner as a linguistic Indiana Jones, venturing into uncharted territory armed with nothing but his keen observational skills and a burning curiosity about human behavior.

Skinner’s work on verbal behavior didn’t just appear out of thin air. It was the culmination of years of research and observation, building on his earlier work in operant conditioning. In 1957, he published “Verbal Behavior,” a book that would become both a landmark and a lightning rod in the field of psychology.

At the heart of Skinner’s theory are six primary verbal operants. These are like the different tools in your communication toolbox, each serving a unique purpose:

1. Mand: This is when you ask for something. “Pass the salt,” is a mand.
2. Tact: This is when you label or identify something. “That’s a cute dog!” is a tact.
3. Echoic: This is when you repeat what someone else says. It’s how we often learn new words as children.
4. Intraverbal: This is when your verbal behavior is controlled by other verbal behavior. Think of conversations or answering questions.
5. Textual: This refers to reading written words.
6. Transcription: This is writing or typing words you hear or see.

Skinner’s Analysis of Verbal Behavior: A Groundbreaking Approach to Language provides a more in-depth look at these operants and how they function in our daily lives.

Now, I know what you’re thinking – “This all sounds great, but surely it can’t be that simple?” And you’d be right. Skinner’s theory wasn’t without its critics. The most famous critique came from linguist Noam Chomsky, who argued that Skinner’s behaviorist approach couldn’t account for the creativity and complexity of human language.

Verbal Behavior in Action: From Classroom to Chatbot

But here’s where things get really interesting. Despite the controversies, Skinner’s ideas have found applications in a wide range of fields. Let’s take a whirlwind tour, shall we?

In language acquisition, Verbal Behavior Approach: Revolutionizing Language Acquisition in Autism Therapy has shown promising results. This approach focuses on teaching language based on its function rather than just its form. For children with autism spectrum disorders, this can be a game-changer, helping them understand not just what to say, but why and when to say it.

In the realm of second language learning, verbal behavior principles have influenced teaching methodologies. Instead of just memorizing vocabulary and grammar rules, students are encouraged to use language in functional contexts, reinforcing the connection between words and their real-world effects.

But it doesn’t stop there. The principles of verbal behavior have even found their way into the world of artificial intelligence and natural language processing. When you chat with a virtual assistant, you’re interacting with a system that’s been trained using principles not too dissimilar from Skinner’s ideas about reinforcement and consequences.

Verbal Behavior vs. The World

Now, let’s stir the pot a bit and see how verbal behavior stacks up against other language theories. Remember Chomsky’s critique? Well, that helped spark what became known as the “cognitive revolution” in psychology. Chomsky argued for an innate language acquisition device in the brain, suggesting that humans are born with a built-in capacity for language.

This nature vs. nurture debate has raged on for decades. While Skinner emphasized the role of the environment in shaping language, cognitive approaches focus more on internal mental processes. It’s like the difference between learning to play piano by ear versus studying music theory – both approaches have their merits.

Interestingly, modern linguistic theories have started to find middle ground. Symbolic Behavior: Decoding Human Communication and Cognition explores how our use of symbols (including language) involves both learned behaviors and innate cognitive processes.

Empirical evidence has both supported and challenged verbal behavior theory. Studies have shown the effectiveness of verbal behavior interventions in language development, particularly for children with developmental disorders. However, critics argue that the theory struggles to explain the rapid acquisition of complex language structures in young children.

The Future is Verbal

So, where do we go from here? The field of verbal behavior is far from static. Current research is exploring how verbal behavior principles can be applied to new forms of communication in our digital age. After all, is a tweet really that different from what Skinner would call a “mand” or a “tact”?

We’re also seeing exciting developments in language intervention techniques. Autoclitic Verbal Behavior: Enhancing Communication Through Self-Referential Language is one area that’s gaining attention, focusing on how we modify and qualify our own verbal behavior.

From social media interactions to voice-activated assistants, our verbal behavior is constantly adapting to new environments and reinforcement contingencies.

But with great power comes great responsibility. As we develop more sophisticated ways of shaping verbal behavior, ethical considerations come into play. How do we ensure that these techniques are used to empower rather than manipulate? It’s a question that researchers and practitioners grapple with as the field continues to evolve.

The Last Word (For Now)

As we wrap up our whirlwind tour of verbal behavior, it’s clear that Skinner’s ideas continue to reverberate through the halls of psychology and linguistics. From Skinner’s foundational work as the father of behavior analysis to modern applications in autism therapy and AI, verbal behavior theory has proven to be a versatile and enduring framework for understanding human communication.

Whether you’re a student of psychology, a language teacher, or just someone fascinated by the intricacies of human interaction, there’s something in verbal behavior theory for you. It challenges us to think differently about language – not just as words and grammar, but as a dynamic, functional tool shaped by our experiences and environment.

So the next time you find yourself in a conversation, take a moment to consider the complex dance of verbal behavior you’re engaged in. Are you manding, tacting, or maybe throwing in an autoclitic or two? The world of verbal behavior is all around us, waiting to be explored.

And who knows? Maybe this article itself is a form of verbal behavior, shaped by the reinforcement of your continued reading. If you’ve made it this far, consider it a successful intraverbal exchange between writer and reader. Now go forth and verbalize with newfound awareness!

References:

1. Skinner, B. F. (1957). Verbal behavior. Appleton-Century-Crofts.

2. Chomsky, N. (1959). A review of B. F. Skinner’s Verbal Behavior. Language, 35(1), 26-58.

3. Sundberg, M. L., & Michael, J. (2001). The benefits of Skinner’s analysis of verbal behavior for children with autism. Behavior Modification, 25(5), 698-724.

4. Sautter, R. A., & LeBlanc, L. A. (2006). Empirical applications of Skinner’s analysis of verbal behavior with humans. The Analysis of Verbal Behavior, 22(1), 35-48.

5. Greer, R. D., & Ross, D. E. (2008). Verbal behavior analysis: Inducing and expanding new verbal capabilities in children with language delays. Allyn & Bacon.

6. Schlinger, H. D. (2008). The long good-bye: Why B.F. Skinner’s Verbal Behavior is alive and well on the 50th anniversary of its publication. The Psychological Record, 58(3), 329-337.

7. Normand, M. P. (2009). Much ado about nothing? Some comments on B.F. Skinner’s definition of verbal behavior. The Behavior Analyst, 32(1), 185-190.

8. Petursdottir, A. I., & Carr, J. E. (2011). A review of recommendations for sequencing receptive and expressive language instruction. Journal of Applied Behavior Analysis, 44(4), 859-876.

9. Sundberg, M. L. (2014). The Verbal Behavior Milestones Assessment and Placement Program: The VB-MAPP (2nd ed.). AVB Press.

10. Dixon, M. R., Belisle, J., Stanley, C. R., Speelman, R. C., Rowsey, K. E., Kime, D., & Daar, J. H. (2017). Toward a behavior analysis of complex language for children with autism: Evaluating the relationship between PEAK and the VB-MAPP. Journal of Developmental and Physical Disabilities, 29(2), 341-351.



Classifying Conversational Entrainment of Speech Behavior: An Expanded Framework and Review

Camille J. Wynn, Stephanie A. Borrie


Corresponding Author: Camille J. Wynn, [email protected] , 801-300-3600

Issue date 2022 Sep.

Conversational entrainment, also known as alignment, accommodation, convergence, and coordination, is broadly defined as similarity of communicative behavior between interlocutors. Within current literature, specific terminology, definitions, and measurement approaches are wide-ranging and highly variable. As new ways of measuring and quantifying entrainment are developed and research in this area continues to expand, consistent terminology and a means of organizing entrainment research is critical, affording cohesion and assimilation of knowledge. While systems for categorizing entrainment do exist, these efforts are not entirely comprehensive in that specific measurement approaches often used within entrainment literature cannot be categorized under existing frameworks. The purpose of this review article is twofold: First, we propose an expanded version of an earlier framework which allows for the categorization of all measures of entrainment of speech behaviors and includes refinements, additions, and explanations aimed at improving its clarity and accessibility. Second, we present an extensive literature review, demonstrating how current literature fits into the given framework. We conclude with a discussion of how the proposed entrainment framework presented herein can be used to unify efforts in entrainment research.

Keywords: Entrainment, Convergence, Alignment, Accommodation, Acoustic-Prosodic

1. Introduction

Conversation is synergistic. The communicative behaviors of interlocutors are coordinated and largely interdependent. One manifestation of this behavioral coordination and interdependency is conversational entrainment. Broadly defined as the similarity of communicative behaviors between conversing interlocutors, entrainment is known by a variety of names including alignment, accommodation, synchrony, convergence, coordination, and adaptation. Specific terms are often tied to particular fields, areas, or theoretical models and are thus different in subtle and nuanced ways. However, the terms are all used to describe, to some degree, the same overarching concept of similar behavior during conversational interactions.

While highly variable and context dependent (e.g., Dideriksen et al., 2020 ; Cai et al., 2021 ), entrainment is not restricted to one single aspect of communication. Rather, research has demonstrated its presence in several different areas. For example, entrainment occurs in many elements of speech across articulatory (e.g., articulatory precision), rhythmic (e.g., speech rate), and phonatory (e.g., fundamental frequency) dimensions (e.g., Ostrand & Chodroff, 2021 ; Borrie et al., 2019 ; Reichel et al., 2018 ). It has also been observed in linguistic features within both semantic (e.g., Brennan and Clark, 1996 ; Suffill et al., 2021 ) and syntactical domains (e.g., Branigan et al., 2000 ; Ivanova, 2020 ). Further, entrainment extends to nonverbal aspects of communication such as facial expressions (e.g., Drimalla et al., 2019 ; McIntosh, 2006 ), gestures (e.g., Holler & Wilkin, 2011 ; Louwerse et al., 2012 ) and body position and posture (e.g., Shockley et al., 2013 , Paxton & Dale, 2017 ).

Given its seeming ubiquity, the question arises as to why entrainment occurs so pervasively in communication. Different theoretical models offer different perspectives. The Interactive Alignment Model, for instance, posits entrainment as a largely automatic process achieved through a “primitive and resource-free priming mechanism” (Pickering & Garrod, 2004, p. 172). Through this mechanism, conversational partners are able to adopt shared linguistic representations, reducing the cognitive load necessary for both speech production and comprehension. Contrastingly, the Communication Accommodation Theory suggests that communication is not simply a channel for information exchange, but a fundamental component of interpersonal relationships. According to this theory, interlocutors seek to gain approval from their conversation partner by becoming more similar to them. Thus, entrainment fulfills the need for “social integration or identification with another” (Giles et al., 1991, p. 18). While the exact mechanisms underlying entrainment are not yet clear, there is strong evidence to suggest that entrainment is a productive and useful phenomenon. For example, entrainment has been linked with greater relationship stability (Ireland et al., 2011), higher levels of cooperation (Manson et al., 2013), and better performance on collaborative goal-directed communication tasks (e.g., Borrie et al., 2019; Reitter & Moore, 2014). Further, interlocutors who exhibit high levels of entrainment are rated by their conversation partner as being more competent, persuasive, and likable than those with low levels of entrainment (Chartrand & Bargh, 1999; Schweitzer et al., 2017; Bailenson & Yee, 2005). Even studies relying on simulated interactions, where confounding factors are eliminated, have shown that entrainment is a beneficial and useful aspect of communication (Polyanskaya et al., 2019; Miles et al., 2009).

In this paper, we focus specifically on speech entrainment, which we define as the interdependent similarity of speech behaviors between interlocutors. Within this realm, similarity of interlocutor behavior may be interpreted in several different ways. It is not surprising, therefore, that specific definitions and measurement approaches used in entrainment research are extensive. A review of the literature suggests that entrainment may, in actuality, be best conceptualized as a broad umbrella term, encompassing distinct variants of similar behavior between interlocutors. Yet, within existing literature, principal differences are often overlooked or unacknowledged. Even in cases where researchers have differentiated between types of entrainment, inconsistent terminology across studies makes it challenging to assimilate knowledge. In some instances, different terms are used to represent the same type of entrainment. For example, while relying on a similar analysis to quantify entrainment, Abney et al. (2014) used the term behavioral matching while Perez and colleagues (2016) used the term synchrony. In other cases, the same term is used to represent different concepts. The term convergence, for instance, is often interchanged with entrainment, representing the idea of general adaptation during conversation (e.g., Pardo, 2018), but is also used to denote a very specific type of entrainment (i.e., an increase in similarity over time; e.g., Edlund et al., 2009). As research in this area continues to grow, the adoption of a consistent system for organizing entrainment across research teams and studies would ensure greater cohesion and integration of knowledge.

In the field of computer science, a system for classifying speech entrainment was introduced by Levitan and Hirschberg (2011), and further described in Levitan’s doctoral dissertation (2014). This framework offers a valuable way of organizing different types of entrainment, and is foundational for the work described herein. However, at present, it has not been widely adopted, particularly in areas outside of computer science. One potential reason is that the organizational structure of this framework is not entirely comprehensive: specific measurement approaches currently used in entrainment literature cannot be categorized. That is, there are existing measures that do not fit within any of the existing categories as they are presently defined (e.g., Abel & Babel, 2017; Borrie et al., 2020b; Schweitzer & Lewandowski, 2013). Additionally, definitions are, at times, ambiguous and difficult to navigate (i.e., definitions are often brief, not operational, and/or lack necessary distinctions, explanations, and examples). Thus, understanding and utilizing this framework when using analyses that differ from the analyses used by Levitan and colleagues can be challenging. This is particularly true for researchers in fields where entrainment is a relatively novel area of study (e.g., clinical fields of study) and terminology and measurement approaches may be unfamiliar.

The purpose of this paper is two-fold: First, we advance an expanded version of the framework presented by Levitan and Hirschberg (2011) and Levitan (2014), with adjustments and additions aimed at increasing its clarity and accessibility. Second, we present an extensive literature review demonstrating how studies examining speech entrainment, which currently employ a range of different terminology, operational definitions, and measurement approaches, fit into the given framework. Through these aims, we offer five specific contributions: (1) a more comprehensive framework that allows for the categorization of all measures of speech entrainment; (2) a clear, well-defined set of terminology, with some definitions adopted from Levitan and colleagues (2011; 2014), and others containing variations, refinements, and/or expansions that provide added specificity and clarity; (3) clarifying explanations of various aspects of the framework to account for the nuanced differences between differing measurement approaches; (4) a demonstration of how various measurement approaches fit into the given framework with examples from current literature; and (5) a discussion of how this entrainment framework can be used to unify efforts in entrainment research.

2. Entrainment Classification Framework

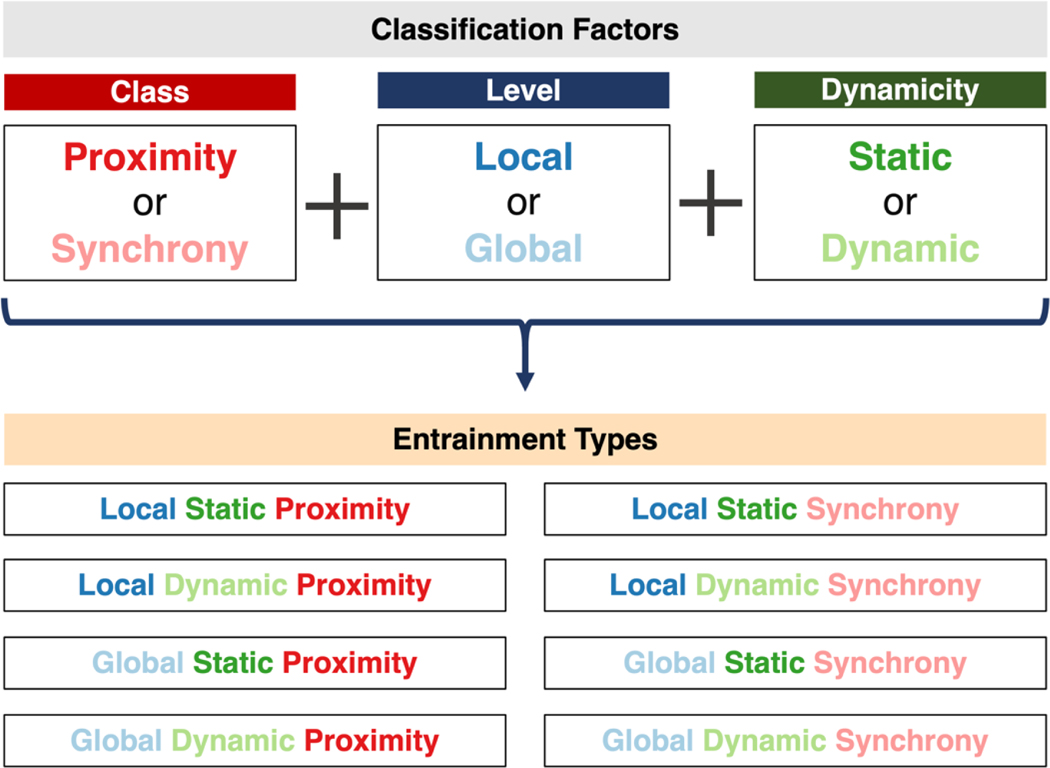

Levitan & Hirschberg’s original framework (2011) includes five types of entrainment (global similarity, global convergence, local similarity, synchrony, and local convergence) divided by two classification factors (local vs. global and similarity vs. synchrony vs. convergence). Drawing upon questions raised in Levitan’s dissertation (2014), we propose an expanded framework that includes eight entrainment types categorized by three dichotomous classification factors as illustrated in Figure 1: the class of entrainment (i.e., synchrony or proximity), the temporal level of entrainment (i.e., global or local), and the dynamicity of entrainment (i.e., static or dynamic). We describe this framework below. To assist with our explanations of each entrainment subtype, we draw upon literature of speech entrainment in embodied face-to-face conversations and provide explicit examples using speech rate. However, we note that this framework may be applied to any speech feature and extends beyond naturalistic conversations, to include measurement of entrainment in more controlled methodological paradigms (see Wynn & Borrie, 2020, for a review of different entrainment methodologies).

Figure 1. Comprehensive framework for classifying conversational entrainment which includes three dichotomous classification factors used to categorize eight entrainment types.
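As a rough illustration of how three dichotomous factors yield eight entrainment types, the combinations can be enumerated programmatically. The factor values below come from the text; the label format (level, dynamicity, class) and the names `FACTORS`, `entrainment_types`, and `label` are assumptions made only for this sketch and are not the framework's official terminology.

```python
from itertools import product

# The three dichotomous classification factors named in the framework.
FACTORS = {
    "class": ("proximity", "synchrony"),
    "level": ("global", "local"),
    "dynamicity": ("static", "dynamic"),
}


def entrainment_types():
    """Return every combination of the three factors as a dict."""
    names = list(FACTORS)
    return [dict(zip(names, combo)) for combo in product(*FACTORS.values())]


def label(entry):
    """Build a readable label; the word order is a hypothetical convention."""
    return f"{entry['level']} {entry['dynamicity']} {entry['class']}"


all_types = entrainment_types()
labels = [label(t) for t in all_types]
for name in labels:
    print(name)
```

Two values for each of three factors give 2 × 2 × 2 = 8 combinations, which matches the eight types described above.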

2.1. Entrainment Class

The first classification factor relates to the class of entrainment. Past research has employed several different systems to categorize entrainment class. Some researchers have focused on entrainment outcomes in their classification systems. For example, Street (1982) classified entrainment as either full or partial, depending on the degree to which interlocutors aligned their behavior with one another. Giles and colleagues (1991) noted that entrainment can be classified as symmetric if entrainment is mutual across both interlocutors and asymmetric if only one interlocutor entrains to the other. Other researchers have categorized entrainment based on the way in which it is measured. In their seminal work, Edlund et al., (2009) classified entrainment into two broad categories: synchrony, defined as “similarity in relative values” (p. 2779), and convergence, defined as “two parameters becoming more similar” (p. 2779). Levitan and Hirschberg (2011 ; see also Levitan, 2014 ) subsequently added an additional measure, proximity, defined as the “similarity of a feature over the entire conversation” (p. 3801). This terminology has been used across several studies (e.g., Reichel et al., 2018 ; Weise et al., 2019 ; Xia et al., 2014 ). We agree with the distinction between proximity and synchrony, and note that the operational definitions of these terms provided here are similar to those found in Levitan (2014) . However, we advance that convergence should be considered a subtype of proximity, and that in order to make this framework more comprehensive, a similar subtype of synchrony should be included. Accordingly, we categorize entrainment class into measures of proximity and synchrony and leave the discussion of convergence to the entrainment dynamicity section (i.e., 2.3) below.

Proximity is operationally defined here as similarity of speech features between interlocutors. For example, if both interlocutors used a similarly fast speech rate, the conversation would be characterized by high levels of proximity. In contrast, if one interlocutor used a fast speech rate and the other used a much slower speech rate, the conversation would be characterized by low levels of proximity. Several statistical methods have been used to quantify proximity in spoken dialogue. Often, researchers rely on objective measures, comparing acoustic feature values of interlocutors to one another. For example, researchers often compute absolute difference scores between the speech features of interlocutors and use them to compare differences between real data and sham data (e.g., Ostrand & Chodroff, 2021 ). Sometimes, these studies compare the differences between the features of two in-conversation interlocutors to differences between non-conversation interlocutors (i.e., speakers who did not converse with one another; e.g., Levitan et al., 2012 ; Reichel et al., 2018 ). Others examine differences between interlocutors on adjacent turns compared to nonadjacent turns (e.g., Levitan & Hirschberg, 2011 ; Lubold & Pon-Barry, 2014 ). Cohen Priva and Sanker (2019) introduced a different approach termed linear combination. In this approach, the overall degree of similarity between the speech features of two interlocutors is determined by comparing an interlocutor’s speech features during a conversation to their own baseline speech features and the baseline features of their conversation partner using linear mixed effect models. Other studies still have advanced more complex measures such as cross-recurrence quantification analysis (CRQA), a technique that quantifies how often and for how long the speech features of interlocutors visit similar states (e.g., Borrie et al., 2019 ; Duran & Fusaroli, 2017 , Fusaroli & Tylen, 2016 ). 
In another line of research, studies rely on perceptual measures, utilizing naïve listeners who judge the similarity of speech features between interlocutors. One common perceptual approach relies on AXB perceptual tests (e.g., Aguilar et al., 2016 , Pardo et al., 2018 ). In these approaches, listeners compare audio samples of words/phrases produced by one interlocutor to samples of words/phrases produced by their conversation partner both within and outside of the conversation. Proximity is then operationalized as the degree to which speech features within the conversation were evaluated as sounding more similar than samples outside the conversation. Perceptual measures may also involve ratings of similarity between interlocutors’ speech features on a Likert scale. Ratings of utterances near the beginning of the conversation are then compared to the end of the conversation to see if interlocutors became more similar across the conversation (e.g., Abel & Babel, 2016).
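One of the objective approaches described above, comparing absolute differences on adjacent turns with those on nonadjacent turns, can be sketched in a few lines. This is a simplified illustration loosely modelled on that idea, not the procedure of any cited study; the speech-rate values (in syllables per second) and the function names are hypothetical.

```python
from statistics import mean


def adjacent_difference(a, b):
    """Mean absolute difference between turn i of speaker A and turn i of speaker B."""
    if len(a) != len(b):
        raise ValueError("both speakers need the same number of turns")
    return mean(abs(x - y) for x, y in zip(a, b))


def nonadjacent_difference(a, b):
    """Mean absolute difference over all pairings of turns with different indices."""
    if len(a) != len(b):
        raise ValueError("both speakers need the same number of turns")
    diffs = [
        abs(x - y)
        for i, x in enumerate(a)
        for j, y in enumerate(b)
        if i != j
    ]
    return mean(diffs)


# Hypothetical turn-level speech rates for two interlocutors.
speaker_a = [4.0, 4.6, 5.2, 5.8, 5.0]
speaker_b = [4.1, 4.5, 5.4, 5.7, 5.1]

adjacent = adjacent_difference(speaker_a, speaker_b)
baseline = nonadjacent_difference(speaker_a, speaker_b)
print(f"adjacent turns:    {adjacent:.2f}")
print(f"nonadjacent turns: {baseline:.2f}")
```

A smaller difference on adjacent turns than on the nonadjacent baseline would be read as evidence of proximity. A real analysis would also need a statistical test rather than a bare comparison of means.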

Synchrony is operationally defined here as similarity in movement (i.e., direction and magnitude of change) of speech features between interlocutors, regardless of the actual raw feature values of each interlocutor. Put another way, when two interlocutors are engaging in synchronous entrainment, they alter their speech features in parallel with one another (i.e., at a similar rate). For example, two interlocutors may have very dissimilar speech rates. However, as one interlocutor increases their rate of speech, the other may increase as well. Thus, though the interlocutors have low levels of proximity, they are moving in parallel with one another, indicating high levels of synchrony. Several statistical methods have been used to quantify synchrony in spoken dialogue. Most commonly, synchrony has been measured as the correlation coefficient between the speech features of two interlocutors during a sequence of time points within a conversation (e.g., Ko et al., 2016; Borrie et al., 2015). A high degree of correlation reflects a high degree of similarity in the movement of speech features between interlocutors. Sometimes cross correlation is used (e.g., Abney et al., 2014; Pardo et al., 2010) to compare the speech features at one time point to the values of multiple time points throughout the conversation. Other researchers have employed multi-level modeling to evaluate if one interlocutor’s speech features are predictive of the features of their conversational partner on adjacent speaking turns (i.e., if they are moving in tandem with one another; Michalsky et al., 2016; Seidl et al., 2018; Wynn et al., 2022). Yet another approach measures synchrony using the square of correlation coefficients, mutual information, and mean of spectral coherence to determine the dependency of the feature values of conversing interlocutors (e.g., Lee et al., 2010).
In their evaluation of synchrony, Reichel and colleagues (2018) compared the distance between one interlocutor’s speech feature value to their mean and the other interlocutor’s speech feature value to their mean. Speech was determined to exhibit synchrony if conversation partners were similar distances apart from their respective means, indicating parallel movement.
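The correlation-based view of synchrony can be illustrated with a minimal sketch. It uses a plain Pearson correlation over turn-level speech rates; the data are invented, and the helper `pearson` is written out here only for the example rather than taken from any cited study.

```python
from math import sqrt
from statistics import mean


def pearson(x, y):
    """Pearson correlation coefficient between two equal-length series."""
    if len(x) != len(y) or len(x) < 2:
        raise ValueError("need two series of equal length (at least 2 points)")
    mx, my = mean(x), mean(y)
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sx = sqrt(sum((a - mx) ** 2 for a in x))
    sy = sqrt(sum((b - my) ** 2 for b in y))
    if sx == 0 or sy == 0:
        raise ValueError("correlation is undefined for a constant series")
    return cov / (sx * sy)


# Hypothetical speech rates (syllables per second) across five time points.
slow_talker = [3.0, 3.4, 3.9, 3.5, 3.1]
fast_talker = [5.5, 5.9, 6.4, 6.0, 5.6]    # same movement, much higher values
mirror_talker = [6.4, 6.0, 5.5, 5.9, 6.3]  # moves in the opposite direction

gap = mean(abs(a - b) for a, b in zip(slow_talker, fast_talker))
r_parallel = pearson(slow_talker, fast_talker)
r_opposed = pearson(slow_talker, mirror_talker)

print(f"mean absolute difference: {gap:.2f}")
print(f"parallel pair r: {r_parallel:.2f}")
print(f"opposed pair r:  {r_opposed:.2f}")
```

The first pair stays far apart in raw speech rate yet rises and falls together, which corresponds to low proximity with high synchrony. The second pair yields a strongly negative coefficient, since the two speakers move in opposite directions.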

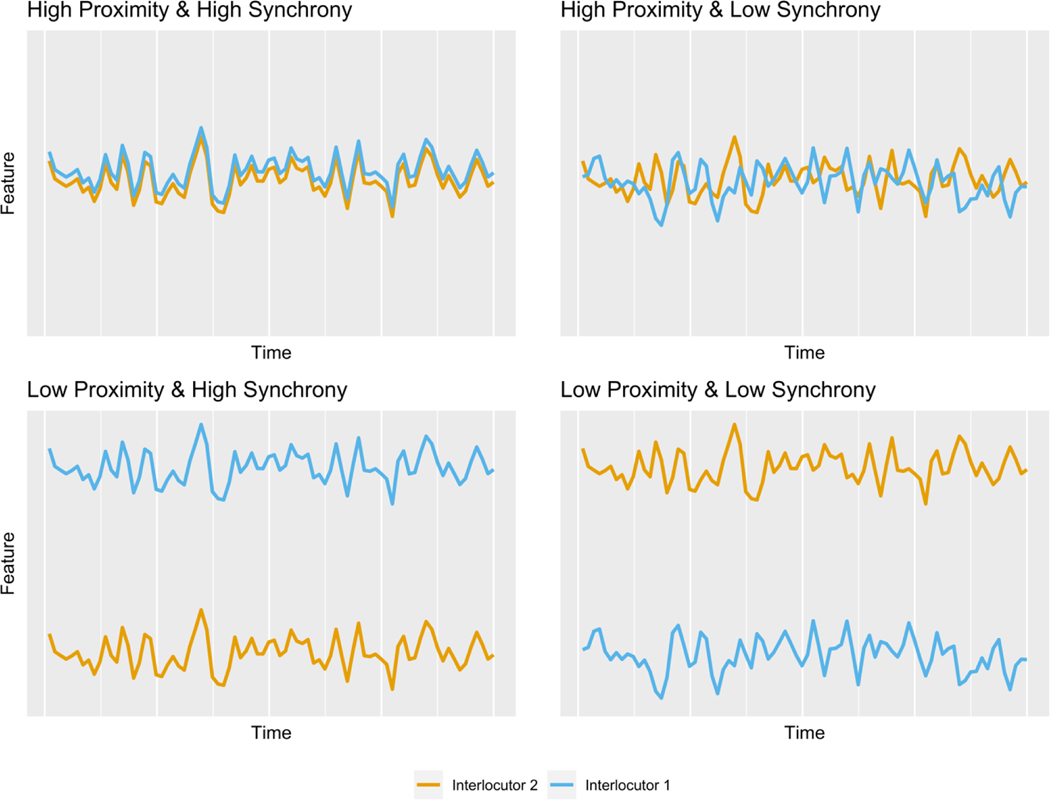

Regardless of the type of statistical method employed, the overarching principle is the same: proximity reflects similarity of speech features between interlocutors while synchrony reflects the similarity in the movement of these features between interlocutors. There are a couple of items to note regarding entrainment class. First, we acknowledge the use of terminology in previous literature denoting a phenomenon that directly contrasts synchrony and proximity. Terms such as anti-similarity, anti-synchrony, disentrainment, and complementary entrainment have all been used to describe the tendency for individuals to change their speech in a direction that moves away from, rather than towards, their partner ( Benus, 2014a ; De Looze & Rauzy, 2011 ; Perez et al., 2016 ; Levitan, 2014 ). While we recognize that such patterns do exist within conversation, we do not see a need to provide separate categorization for such states within the current framework. Rather, we consider the degree of entrainment to occur along a continuum across both proximity and synchrony measures. Within the realm of proximity, the degree of similarity may range from high levels of proximity (i.e., speech feature values are close together) to low levels of proximity (i.e., speech feature values are far apart). While levels of synchrony may also range from high (i.e., high positive correlation) to low (i.e., low positive correlation), there is the additional possibility of negative correlation which would indicate negative synchrony. Therefore, within the synchrony categorization, the continuum ranges from high levels of positive synchrony to high levels of negative synchrony. As an additional note, it is important to recognize that proximity and synchrony do not necessarily co-occur within a conversation, a characteristic also noted by Levitan (2014) . For example, two interlocutors may have very similar speech feature values as one another (i.e., a high degree of proximity). 
However, despite this similarity, they may alter their speech patterns in different ways during the conversation (i.e., a low degree of synchrony). An example of this concept is presented in Figure 2 .

Figure 2. Schematic of various combinations of high and low proximity and synchrony.
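The independence of proximity and synchrony can be illustrated with a minimal sketch. It assumes, purely for illustration, that proximity is scored as the negated mean absolute difference between paired feature values and that synchrony is scored as a Pearson correlation; the speech-rate values and the function names are hypothetical and are not drawn from any study cited here.

```python
from math import sqrt

def pearson(x, y):
    """Pearson correlation of two equal-length numeric sequences."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sx = sqrt(sum((a - mx) ** 2 for a in x))
    sy = sqrt(sum((b - my) ** 2 for b in y))
    return cov / (sx * sy)

def proximity(x, y):
    """Negated mean absolute difference: scores nearer 0 mean closer values."""
    return -sum(abs(a - b) for a, b in zip(x, y)) / len(x)

def synchrony(x, y):
    """Similarity of movement, scored here as a correlation in [-1, 1]."""
    return pearson(x, y)

# Hypothetical speech rates (syllables per second) on five paired turns.
speaker_a = [4.0, 4.2, 4.4, 4.6, 4.8]
close_but_opposed = [4.8, 4.6, 4.4, 4.2, 4.0]  # near A, but moves the other way
far_but_parallel = [6.0, 6.2, 6.4, 6.6, 6.8]   # far from A, but moves with A

for label, partner in [("close/opposed", close_but_opposed),
                       ("far/parallel", far_but_parallel)]:
    print(label, round(proximity(speaker_a, partner), 2),
          round(synchrony(speaker_a, partner), 2))
```

In this toy data, the first partner is more proximate but negatively synchronous, while the second is less proximate but positively synchronous, which mirrors the point that the two classes need not co-occur.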

2.2. Entrainment Level

The second classification factor relates to the level of entrainment, referring to the temporal interval at which entrainment is measured. Previous literature has discussed entrainment level (e.g., Brennan & Clark, 1996; Cappella, 1996; Niederhoffer & Pennebaker, 2002), often noting differences between local entrainment (along a relatively small timescale) and global entrainment (along a relatively large timescale). Levitan and colleagues (2011; 2014) introduced and further conceptualized these concepts in speech research in their initial framework. While we support the use of this classification factor, we see two challenges brought about by the way it is currently used across various studies. The first concern is that current definitions do not always encompass the entire range of intervals at which entrainment may be measured. For example, local entrainment is commonly defined as entrainment occurring at turn-exchanges and global entrainment as occurring across the entire conversation (e.g., Beňuš, 2014b; Levitan, 2011). However, such definitions do not allow measurements taken at time points between these two extremes (e.g., across minutes of a conversation; Pardo, 2010) to be classified. The second concern is that definitions used across the literature are inconsistent and, at times, contradict one another. For example, Weise et al. (2019) define local entrainment as occurring specifically at the level of inter-pausal units (IPUs; i.e., pause-free units of speech from a single interlocutor). Contrastingly, Levitan (2014) uses a more wide-ranging definition, describing local entrainment as “alignment between interlocutors that occurs within a conversation” (p. 22). Reichel and colleagues (2018) provide yet another definition, noting that local entrainment must occur not only at the turn level but that there must be greater similarity between adjacent compared to nonadjacent turns.
Given the range of descriptions, more consistent and inclusive definitions of local and global measurement are warranted.

Local entrainment is operationally defined here as similarity that occurs between units equal to or smaller than adjacent turns. Drawing once again on our example of speech rate, measures of local entrainment might include a comparison between the speech rate of an interlocutor on a given turn and the speech rate of their conversational partner in their subsequent turn. Within this definition, measures of local entrainment are relatively straightforward. Speech features are generally compared between adjacent turns or adjacent IPUs (e.g., Schweitzer, Walsh, & Schweitzer, 2017 ; Willi et al., 2018 ).
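The adjacent-turn comparison described above can be sketched as follows. This is only an illustration under stated assumptions: the conversation is represented as an ordered list of (speaker, speech rate) turns, the function name `adjacent_pairs` and the data are hypothetical, and the mean absolute difference is just one possible local proximity score.

```python
def adjacent_pairs(turns, leader, follower):
    """Pair each `leader` turn with the `follower` turn that directly follows it."""
    pairs = []
    for (spk1, val1), (spk2, val2) in zip(turns, turns[1:]):
        if spk1 == leader and spk2 == follower:
            pairs.append((val1, val2))
    return pairs

# Hypothetical conversation: (speaker, speech rate in syllables per second).
turns = [("A", 4.0), ("B", 4.3), ("A", 4.6), ("B", 4.8), ("A", 4.2), ("B", 4.5)]

pairs = adjacent_pairs(turns, leader="A", follower="B")

# Local proximity score: mean absolute difference across adjacent-turn pairs.
local_diffs = [abs(a - b) for a, b in pairs]
mean_local_difference = sum(local_diffs) / len(local_diffs)
print(pairs, round(mean_local_difference, 3))
```

The same pairing could be built from adjacent IPUs rather than turns; only the unit fed into `turns` would change.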

Global entrainment is operationally defined here as similarity that occurs across any time scale greater than adjacent turns. For example, a measure of global entrainment might compare the mean speech rate of one interlocutor to the mean speech rate of their conversational partner across the entire conversation. Put another way, in contrast to local entrainment, global entrainment introduces the concept of lag: that an interlocutor may be influenced by their conversation partner, but may not entrain to their immediate productions, instead aligning to the acoustic features of previous, non-adjacent turns. Not surprisingly, measures of global entrainment are more varied and often more complicated than measures of local entrainment. One common measurement approach has been to take the mean value for a given speech feature across an entire conversation (e.g., Cohen Priva & Sanker, 2018 ; Sawyer et al., 2017 ). Global entrainment, however, is not limited to this type of measure. For example, some studies have divided the conversation into equal sections (e.g., Gregory et al., 1997 ; Savino et al., 2010; Lehnert-LeHouillier, Terrazas, Sandoval, & Boren, 2020 ) and calculated the mean feature value of each section. Others have found the mean feature values across a specific unit of time (e.g., minutes; Pardo et al., 2010 , Gordon et al., 2015 ). A more complex way to capture global entrainment uses time-aligned moving averages (TAMA; e.g., Kousidis et al., 2009 ; De Looze et al., 2014 ; Ochi et al., 2019 ). This method involves analyzing speech segments of overlapping windows of predetermined, fixed lengths. Doing this allows for a smoother contour than local measures while still detecting behavioral modifications throughout the conversation that can be washed out in larger-scale measures.
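The overlapping-window idea behind moving-average approaches can be sketched as below. This is a simplified, unweighted illustration and not the TAMA procedure as published: the 20-second window, the 10-second step, the function name `windowed_means`, and the sample data are all assumptions made for the example.

```python
def windowed_means(samples, window, step):
    """Mean feature value in overlapping, fixed-length windows.

    samples: list of (time_in_seconds, value) pairs for one interlocutor.
    Returns a list of (window_start, mean), with mean None for empty windows.
    """
    if not samples:
        return []
    end = max(t for t, _ in samples)
    out = []
    start = 0.0
    while start <= end:
        vals = [v for t, v in samples if start <= t < start + window]
        out.append((start, sum(vals) / len(vals) if vals else None))
        start += step  # step < window, so consecutive windows overlap
    return out

# Hypothetical speech-rate measurements stamped with their onset time.
speaker_a = [(2, 4.0), (8, 4.4), (14, 5.0), (26, 4.6)]

contour = windowed_means(speaker_a, window=20, step=10)
for start, mean in contour:
    print(start, mean)
```

Running the same function on each interlocutor yields two time-aligned contours that can then be compared with a proximity or synchrony measure.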

There are a couple of important ideas to note about entrainment level. First, global entrainment may include measures from individual turns (e.g., Reichel et al., 2018) or even smaller units such as phrases (e.g., Kim et al., 2011) and even phonemes (e.g., Lelong & Bailly, 2011). The key is that in all of these instances the comparisons are not necessarily between units in adjacent utterances. For example, in their measure of global entrainment, Reichel and colleagues (2018) compared the distance between values of two random turns in a conversation to the distance between values of two random points that were from different conversations. Thus, measurements, although extracted at the level of the individual turn, were not taken of adjacent turns, and therefore would be classified as global entrainment. Second, as mentioned, one key difference between local and global entrainment is that global entrainment accounts for any potential lag between the speech behaviors of one interlocutor and similar behaviors in their conversation partner. While all measures of global entrainment account for lag indirectly, it is also possible to consider lag more directly. For example, using various forms of time series analysis, researchers have investigated the amount of lag that yields the highest degree of entrainment between conversation partners (e.g., Kousidis et al., 2009; Pardo et al., 2010; Street, 1984). Further investigation into how to optimally measure lag within measures of global entrainment is needed.
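One direct way to consider lag can be sketched as a search over candidate lags for the one that yields the highest correlation. This is an illustrative simplification and not the time series analysis used in the studies cited above; the function names, the `max_lag` parameter, and the data are hypothetical, and both series are assumed to be equally long and sampled at the same time points.

```python
from math import sqrt

def pearson(x, y):
    """Pearson correlation of two equal-length numeric sequences."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sx = sqrt(sum((a - mx) ** 2 for a in x))
    sy = sqrt(sum((b - my) ** 2 for b in y))
    return cov / (sx * sy)

def best_lag(leader, follower, max_lag):
    """Return (lag, r) maximizing the correlation of leader[t] with follower[t + lag]."""
    scores = {}
    for lag in range(max_lag + 1):
        x = leader[: len(leader) - lag]  # drop the tail that has no lagged partner
        y = follower[lag:]               # shift the follower back by `lag` steps
        scores[lag] = pearson(x, y)
    best = max(scores, key=scores.get)
    return best, scores[best]

# Hypothetical feature values; the follower echoes the leader two steps later.
leader = [1, 3, 2, 5, 4, 6, 3, 7]
follower = [2, 4, 1, 3, 2, 5, 4, 6]

lag, r = best_lag(leader, follower, max_lag=3)
print(lag, round(r, 3))
```

Because each extra step of lag shortens the overlapping portion of the two series, `max_lag` should stay small relative to the number of observations.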

2.3. Entrainment Dynamicity

The final classification factor in the proposed framework relates to dynamicity of entrainment. As mentioned earlier, past work has often used the term convergence to describe a distinct class of entrainment, contrasting it with measures of proximity and/or synchrony (e.g., Edlund et al., 2008; Levitan & Hirschberg, 2011). In this prior work, convergence was defined as “an increase in proximity over time” (Levitan & Hirschberg, 2011, p. 3081). However, given the definition presented by Levitan and Hirschberg, we see convergence as a specific subtype of proximity. Additionally, we note that there are studies in the present literature which also investigate changes in synchrony over time, something that was not taken into consideration in Levitan’s original framework. Therefore, we propose that within class (i.e., proximity or synchrony) and within level (i.e., global or local), entrainment should be classified as dynamic or static.

Static entrainment is operationally defined here as similarity without the statistical consideration of changes across time. A static model may indicate that two interlocutors employed proximate and/or synchronous speech rates with one another. However, the output would not reveal anything about whether proximity or synchrony changed in magnitude (i.e., increased or decreased) over the course of the conversation. Such static models of entrainment are very common in current literature (e.g., Cohen Priva et al., 2017 ; Schweitzer, Lewandowski, & Duran, 2017 ; Ostrand & Chodroff, 2021 ).

Dynamic entrainment is operationally defined here as a change in similarity across time. Dynamic entrainment may be a change in the similarity of speech features (i.e., dynamic proximity). Returning to the example of speech rate, one interlocutor may begin the conversation with a faster speech rate, while their conversational partner begins with a slower rate. During the conversation, the faster interlocutor may slow down while the slower interlocutor speeds up, both converging on a mid-way speech rate by the end of the conversation. In contrast, dynamic entrainment may be a change in the similarity of movement of speech features over time (i.e., dynamic synchrony). For example, the movement patterns of two interlocutors may be dissimilar from one another at the beginning of a conversation (e.g., one may increase their speech rate while the other decreases their rate). However, over the course of the conversation, they may begin to align their speech movements with one another (e.g., as one increases their speech rate, the other increases their rate as well). There are several ways in which past research on dynamic entrainment has accounted for change in behavior across time. Some researchers have divided conversations into two or more sections and compared differences in entrainment between these sections (e.g., Lehnert-LeHouillier et al., 2020; Abel & Babel, 2017; Gordon et al., 2014). For example, De Looze and colleagues (2014) examined dynamic synchrony by using t-tests to compare the correlation coefficients between interlocutors’ speech feature values in the first and the second half of the conversation. Other studies include time as a variable, examining its relationship with entrainment using correlations (e.g., Levitan et al., 2015; Xia et al., 2014) or statistical interactions (e.g., Schweitzer & Lewandowski, 2013; Lewandowski & Jilka, 2019; Borrie et al., 2020b).
For instance, Weise and colleagues (2019) examined dynamic proximity by analyzing the correlation between proximity scores (i.e., absolute difference between two interlocutors’ feature values) and time (i.e., number of turn exchanges), to determine if the degree of entrainment was predicted by the amount of time in the conversation that had transpired.
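The correlation-with-time approach can be sketched as follows. This is an illustration of the general idea only, not a reproduction of any cited analysis: it assumes the proximity score is the absolute difference between the two interlocutors’ feature values at each turn exchange, and the function name `dynamic_proximity` and the data are hypothetical.

```python
from math import sqrt

def pearson(x, y):
    """Pearson correlation of two equal-length numeric sequences."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sx = sqrt(sum((a - mx) ** 2 for a in x))
    sy = sqrt(sum((b - my) ** 2 for b in y))
    return cov / (sx * sy)

def dynamic_proximity(a_vals, b_vals):
    """Correlate absolute feature differences with the turn-exchange index.

    A negative value means differences shrink over time (convergence);
    a positive value means they grow over time (divergence).
    """
    diffs = [abs(a - b) for a, b in zip(a_vals, b_vals)]
    time = list(range(len(diffs)))
    return pearson(time, diffs)

# Hypothetical speech rates: A starts fast and slows; B starts slow and speeds up.
speaker_a = [6.0, 5.6, 5.3, 5.1, 5.0]
speaker_b = [4.0, 4.3, 4.6, 4.8, 4.9]

r_time = dynamic_proximity(speaker_a, speaker_b)
print(round(r_time, 3))
```

A static measure computed on the same data (for example, the mean absolute difference) would report how close the interlocutors were overall but would not reveal that the gap narrowed.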

As with the other entrainment factors, there are some important considerations regarding dynamicity. First, as mentioned previously, both proximity and synchrony can be dynamic. Proximity deals specifically with changes in the speech features over time. Contrastingly, synchrony deals specifically with changes in the degree to which interlocutors are parallel with one another throughout the conversation. An example of this can be viewed in Figure 3. Second, although a measure of change in similarity over time is required for entrainment to be classified as dynamic, the direction of that change may vary: changes in similarity may be convergent (i.e., similarity increases over time) or divergent (i.e., similarity decreases over time). Third, dynamic entrainment models, according to our given definition, require not only a consideration of time but a consideration of change in similarity over time. There are several instances where time has been included within a statistical analysis, but this consideration does not deal with the specific ways that entrainment changes over the course of the conversation. For example, as mentioned previously, some studies have used time series analysis, examining the effects of an interlocutor’s speech values across past time points on the acoustic values of their conversational partner (e.g., Kousidis et al., 2009; Pardo et al., 2010; Street, 1984). However, as these analyses did not investigate how entrainment changed across the course of the conversation, they would not be considered dynamic models. Finally, while the majority of literature examines the concept of dynamicity on a linear timescale, a few studies have utilized nonlinear methods (e.g., Bonin et al., 2013; Vaughan, 2011). That is, entrainment may not steadily increase across an entire conversation, but rather may increase within some portions and decrease within others, depending on a variety of factors.
Further research examining nonlinear methods to capture entrainment dynamicity is warranted.

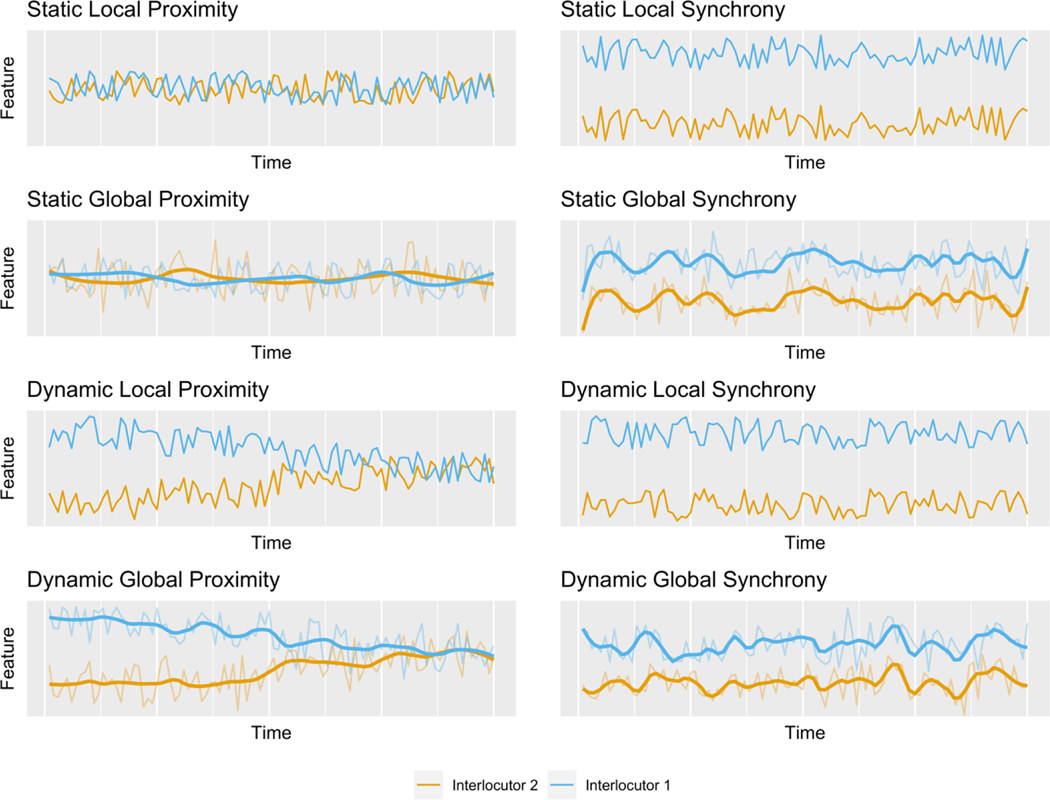

Figure 3. Schematic depicting conversations exhibiting each type of entrainment.

Note. Proximity is represented by turns where speech features of interlocutors are similar to one another. Synchrony is represented by turns where the speech features of interlocutors move in parallel with one another. Conversations exhibiting local entrainment show similarity across adjacent turns. Conversations exhibiting global entrainment, at times, show dissimilar behavior across adjacent turns. However, as represented by the bold line, overall similarity is evident when data is aggregated across larger portions of the conversation. Conversations exhibiting static entrainment show constant similarity across the course of the conversation. Conversations exhibiting dynamic synchrony show dissimilar behavior at the start of the conversation. However, as time increases the behaviors of interlocutors become more similar.

3. Practical Application of the Framework

Given these three dichotomous factors, the proposed expanded framework consists of eight different types of entrainment, as depicted in Figure 1 . To support understanding, we provide a schematic depicting conversations exhibiting each of the eight types of entrainment in Figure 3 . Our review of the literature provides evidence that all of these types have been used to capture entrainment (see also Table 1 ). The use of the current framework provides several benefits. First, the organization of this framework is comprehensive, making it suitable for researchers employing a wide variety of measurement approaches. Additionally, the use of clear and all-encompassing definitions, in-depth explanations, and examples drawn from current literature ensures that this framework is easily accessible. Finally, while we have focused on research involving naturalistic dyadic conversations, this classification system can also be extended to more controlled studies of entrained behavior.
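The way three dichotomous factors yield eight types can be made explicit with a short enumeration. The label order used here (dynamicity, then level, then class) follows labels such as “static local synchrony” used in this section; the constant and function names are hypothetical.

```python
from itertools import product

CLASSES = ("proximity", "synchrony")
LEVELS = ("local", "global")
DYNAMICITY = ("static", "dynamic")

def entrainment_types():
    """Enumerate every combination of dynamicity, level, and class."""
    return [f"{dyn} {level} {cls}"
            for dyn, level, cls in product(DYNAMICITY, LEVELS, CLASSES)]

types = entrainment_types()
for name in types:
    print(name)
```

Labeling each analysis in a study with one of these eight strings is one simple way to document the specific type of entrainment being measured.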

Table 1. Examples of existing literature with entrainment classified according to the expanded classification framework

Note. When the same citation appears more than once in the table, it reflects multiple analyses examining different types of entrainment within the given paper. CRQA = cross recurrence quantification analysis; IPU = inter-pausal unit; TAMA = time-aligned moving averages.

Going forward, explicit documentation of the specific type(s) of entrainment being studied, using the terminology described herein, will allow for increased understanding of the nature of the investigation as well as more informative and comprehensive comparisons across studies. To highlight the framework’s utility, we provide an example of one area where its adoption may be particularly relevant. While a relatively new area of investigation, recent research has shown that challenges in entrainment persist in a number of clinical populations characterized by disruptions in speech production and perception including autism spectrum disorder (ASD; Wynn et al., 2018), dysarthria (Borrie et al., 2020a), hearing impairment (Freeman & Pisoni, 2017), and traumatic brain injury (TBI; Gordon et al., 2015). Currently, studies in this area often offer a general umbrella term (e.g., entrainment), without acknowledging or differentiating different entrainment types. Without careful consideration, one might assume that all of these studies investigated the same type of behavior; however, using the proposed classification framework reveals different types of entrainment. Wynn and colleagues (2018), for example, examined entrainment in individuals with ASD using static local synchrony while Ochi and colleagues (2019) used static global synchrony. Such distinctions may carry important implications. For instance, investigation of different entrainment types may explain diverging outcomes between studies of the same population. While Gordon and colleagues (2015) found entrainment disruptions in the conversations of individuals with a TBI, Borrie et al. (2020b) found no significant difference between the entrainment patterns of neurotypical participants and participants with TBI.
Although there are a number of factors that may lead to contrasting findings (e.g., different aspects of speech being measured), it is possible that differences in the entrainment types being studied (i.e., dynamic global proximity vs. static local synchrony) may contribute to these discrepancies. Beyond clinical research, there are many gaps in our knowledge of the way entrainment is most frequently manifested, the factors that predict entrainment, and the outcomes of highly entrained conversations. While conversational research is inherently complicated and there are many intricacies to untangle, using this framework to classify differences in the types of entrainment being studied is one important step towards better understanding the complexities of this phenomenon.

4. Limitations

It is important to note a few limitations of this work. First, while the expanded framework can be used to comprehensively categorize entrainment of speech behaviors, it is less useful when considering other types of entrainment, such as entrainment of linguistic or kinesthetic behaviors. This is particularly true of the classification category of entrainment class (i.e., proximity and synchrony). For example, linguistic entrainment cannot be categorized as being synchronous as linguistic patterns do not reflect the same type of continuous movement exhibited in speech (e.g., an interlocutor either uses the same lexical item or not). Second, this framework offers important factors which should be considered when comparing entrainment across different studies. However, it does not (and indeed cannot) represent an exhaustive list of all such factors. Other components of the methodology, such as the dimension(s) of speech being examined and the context of the conversation, should also be considered (for examples of other considerations, see Rasenberg et al., 2020; Fusaroli et al., 2013). Finally, in our review of the literature, we provide examples of different methodologies that are currently being used in entrainment research. However, we note that this review does not provide a detailed description of all such methodologies, nor does it serve as an exhaustive list of every methodology employed.

5. Conclusion

As we continue to advance research in the area of entrainment, progress will be most optimal when efforts are unified and collaborative. The goal of the expanded framework described herein is to facilitate this, offering a uniform language and consistent organization system to study entrainment. With use, this framework will enable us to collectively build a more cohesive and informative body of literature, advancing our understanding of conversational entrainment.

Highlights.

To increase organization and cohesion in speech entrainment research, we provide:

An expanded version of an earlier framework to categorize types of speech entrainment

A literature review showing how current literature fits into the framework

A discussion of how the framework can be used to unify research efforts

Acknowledgements

This research was supported by the National Institute on Deafness and Other Communication Disorders, National Institutes of Health Grant R21DC016084 (PI: Borrie). We gratefully acknowledge Tyson Barrett and Elizabeth Wynn for their assistance in creating the figures for this project.

While the term acoustic-prosodic entrainment is used by Levitan and Hirschberg (2011) and is common throughout the literature, we have opted to use the term speech entrainment. This decision was made to account for all dimensions of speech (i.e., articulation and phonation in addition to prosody) as well as both acoustic and perceptual measures of entrainment.

It is important to note that correlation analyses may be used for proximity measures as well as synchrony measures. The key difference is that measures of synchrony utilize correlations between specific feature values within a conversation, thus measuring similarity of movement of interlocutors throughout the conversation. Contrastingly, measures of proximity utilize correlations between mean feature values across conversations. Thus, correlations are used to measure similarity between interlocutors’ feature values relative to the feature values of other interlocutors in other conversations.


Author Statement

Camille Wynn: Conceptualization, Writing- Original Draft, Visualization

Stephanie Borrie: Conceptualization, Writing- Review & Editing, Supervision, Funding Acquisition

- Abel J, & Babel M. (2017). Cognitive Load Reduces Perceived Linguistic Convergence Between Dyads. Language and Speech, 3, 479.

- Abney DH, Paxton A, Dale R, & Kello CT. (2014). Complexity matching in dyadic conversation. Journal of Experimental Psychology: General, 143(6), 2304–2315.

- Aguilar LJ, Downey G, Krauss RM, Pardo JS, Lane S, & Bolger N. (2016). A dyadic perspective on speech accommodation and social connection: both partners’ rejection sensitivity matter. Journal of Personality, 84, 165–177. 10.1111/jopy.12149

- Bailenson JN, & Yee N. (2005). Digital chameleons: Automatic assimilation of nonverbal gestures in immersive virtual environments. Psychological Science, 16(10), 814–819.

- Beňuš Š (2014a). Conversational Entrainment in the Use of Discourse Markers. In Bassis S, Esposito A, & Morabito F. (Eds.), Recent advances of neural networks models and applications, smart innovations, systems, and technologies (vol. 26, pp. 345–352). Springer.

- Beňuš Š (2014b). Social aspects of entrainment in spoken interaction. Cognitive Computation, 6(4), 802–813.

- Bonin F, De Looze C, Ghosh S, Gilmartin E, Vogel C, Campbell N, Polychroniou A, Salamin H, & Vinciarelli A. (2013). Investigating fine temporal dynamics of prosodic and lexical accommodation. In Proceedings of the Annual Conference of the International Speech Communication Association (pp. 539–543). INTERSPEECH.

- Borrie SA, Barrett TS, Liss JM, & Berisha V. (2020a). Sync pending: Characterizing conversational entrainment in dysarthria using a multidimensional, clinically-informed approach. Journal of Speech, Language, and Hearing Research, 63(1), 83–94.

- Borrie SA, Barrett TS, Willi MM, & Berisha V. (2019). Syncing up for a good conversation: A clinically meaningful methodology for capturing conversational entrainment in the speech domain. Journal of Speech, Language, and Hearing Research, 62(2), 283–296. 10.1044/2018_JSLHR-S-18-0210

- Borrie SA, Lubold N, & Pon-Barry H. (2015). Disordered speech disrupts conversational entrainment: A study of acoustic-prosodic entrainment and communicative success in populations with communication challenges. Frontiers in Psychology, 6, 1187. 10.3389/fpsyg.2015.01187

- Borrie SA, Wynn CJ, Berisha V, Lubold N, Willi MM, Coelho CA, & Barrett TS. (2020b). Conversational coordination of articulation responses to context: A clinical test case with traumatic brain injury. Journal of Speech, Language, and Hearing Research, 63, 2567–2577.

- Branigan HP, Pickering MJ, & Cleland AA (2000). Syntactic co-ordination in dialogue.

- Brennan SE, & Clark HH (1996). Conceptual pacts and lexical choice in conversation. Journal of Experimental Psychology: Learning, Memory, and Cognition, 22(6), 1482–1493.

- Cai ZG, Sun Z, & Zhao N. (2021). Interlocutor modelling in lexical alignment: The role of linguistic competence. Journal of Memory and Language, 121. 10.1016/j.jml.2021.104278

- Chartrand TL, & Bargh JA (1999). The chameleon effect: The perception–behavior link and social interaction. Journal of Personality and Social Psychology, 76(6), 893.

- Cappella JN. (1996). Dynamic coordination of vocal and kinesic behavior in dyadic interaction: Methods, problems, and interpersonal outcomes. In Watt J, & VanLear C. (Eds.), Dynamic patterns in communication processes (pp. 353–386). Thousand Oaks, CA: Sage.

- Cohen Priva U, & Sanker C. (2018). Distinct behaviors in convergence across measures. In Proceedings of the 40th Annual Conference of the Cognitive Science Society (pp. 1518–1523). Cognitive Science Society.

- Cohen Priva U, & Sanker C. (2019). Limitations of difference-in-difference for measuring convergence. Laboratory Phonology, 10(1). 10.5334/labphon.200

- Cohen Priva U, Edelist L, & Gleason E. (2017). Converging to the baseline: Corpus evidence for convergence in speech rate to interlocutor’s baseline. Journal of the Acoustical Society of America, 141(5), 2989.

- De Looze C, Scherer S, Vaughan B, & Campbell N. (2014). Investigating automatic measurements of prosodic accommodation and its dynamics in social interaction. Speech Communication, 58, 11–34. 10.1016/j.specom.2013.10.002

- De Looze CD, & Rauzy S. (2011). Measuring speakers’ similarity in speech by means of prosodic cues: Methods and potential. In Proceedings of the ICPhS (pp. 1294–1297). International Congress of Phonetic Sciences.

- Dideriksen C, Christiansen MH, Tylén K, Dingemanse M, & Fusaroli R. (2020). Quantifying the interplay of conversational devices in building mutual understanding. PsyArXiv. 10.31234/osf.io/a5r74

- Drimalla H, Landwehr N, Hess U, & Dziobek I. (2019). From face to face: The contribution of facial mimicry to cognitive and emotional empathy. Cognition and Emotion, 33(8), 1672–1686. 10.1080/02699931.2019.1596068

- Duran ND, & Fusaroli R. (2017). Conversing with a devil’s advocate: Interpersonal coordination in deception and disagreement. PLoS ONE, 12(6), 1–25. 10.1371/journal.pone.0178140

- Edlund J, Heldner M, & Hirschberg J. (2009). Pause and gap length in face-to-face interaction. In Proceedings of the Annual Conference of the International Speech Communication Association (pp. 2779–2782). INTERSPEECH.

- Freeman V, & Pisoni DB (2017). Speech rate, rate-matching, and intelligibility in early-implanted cochlear implant users. The Journal of the Acoustical Society of America, 142(2), 1043–1054. 10.1121/1.4998590

- Fusaroli R, & Tylén K. (2016). Investigating conversational dynamics: Interactive alignment, interpersonal synergy, and collective task performance. Cognitive Science, 40(1), 145–171. 10.1111/cogs.12251

- Fusaroli R, Raczaszek-Leonardi J, & Tylén K. (2013). Dialog as Interpersonal Synergy. New Ideas in Psychology, 32, 147–157.

- Giles H, Coupland J, & Coupland N. (1991). Accommodation theory: Communication, context, and consequence. In Giles H, Coupland J, & Coupland N. (Eds.), Contexts of accommodation: Developments in applied sociolinguistics (pp. 1–68). Cambridge University Press.

- Gordon RG, Rigon A, & Duff MC (2015). Conversational synchrony in the communicative interactions of individuals with traumatic brain injury. Brain Injury, 29(11), 1300–1308. 10.3109/02699052.2015.1042408

- Gregory SW, Dagan K, & Webster S. (1997). Evaluating the relation of vocal accommodation in conversation partners’ fundamental frequencies to perceptions of communication quality. Journal of Nonverbal Behavior, 21(1), 23–43. 10.1023/A:1024995717773

- Holler J, & Wilkin K. (2011). Co-speech gesture mimicry in the process of collaborative referring during face-to-face dialogue. Journal of Nonverbal Behavior, 35(2), 133–153. 10.1007/s10919-011-0105-6

- Ireland ME, Slatcher RB, Eastwick PW, Scissors LE, Finkel EJ, & Pennebaker JW (2011). Language Style Matching Predicts Relationship Initiation and Stability. Psychological Science, 22(1), 39–44. 10.1177/0956797610392928

- Ivanova I, Horton WS, Swets B, Kleinman D, & Ferreira VS (2020). Structural alignment in dialogue and monologue (and what attention may have to do with it). Journal of Memory and Language, 110.

- Kim M, Horton WS, & Bradlow AR (2011). Phonetic convergence in spontaneous conversations as a function of interlocutor language distance. Laboratory Phonology, 2(1), 125–156.

- Ko E-S, Seidl A, Cristia A, Reimchen M, & Soderstrom M. (2016). Entrainment of prosody in the interaction of mothers with their young children. Journal of Child Language, 43(02), 284–309. 10.1017/S0305000915000203

- Kousidis S, Dorran D, Mcdonnell C, & Coyle E. (2009). Convergence in Human Dialogues Time Series Analysis of Acoustic Feature. In Proceedings of SPECOM2009.

- Lee C, Black M, Katsamanis A, Lammert A, Baucom B, Christensen A, Georgiou P, & Narayanan S. (2010). Quantification of prosodic entrainment in affective spontaneous spoken interactions of married couples. In Proceedings of the 11th Annual Conference of the International Speech Communication Association (pp. 793–796). INTERSPEECH.

- Lehnert-LeHouillier H, Terrazas S, & Sandoval S. (2020). Prosodic Entrainment in Conversations of Verbal Children and Teens on the Autism Spectrum. Frontiers in Psychology, 11, 2718. 10.3389/fpsyg.2020.582221

- Lehnert-LeHouillier H, Terrazas S, Sandoval S, & Boren R. (2020). The Relationship between Prosodic Ability and Conversational Prosodic Entrainment. 10th International Conference on Speech Prosody. 10.21437/speechprosody.2020-157

- Lelong A, & Bailly G. (2011). Study of the phenomenon of phonetic convergence thanks to speech dominoes. In Esposito A, Vinciarelli A, Vicsi K, Pelachaud C, & Nijholt A. (Eds.), Analysis of Verbal and Nonverbal Communication and Enactment: The Processing Issue (pp. 280–293). Springer. 10.1007/978-3-642-25775-9_26

- Levitan R. (2014). Acoustic-prosodic entrainment in human-human and human-computer dialogue. [Doctoral dissertation, Columbia University]. Columbia Academic Commons.

- Levitan R, Beňuš Š, Gravano A, & Hirschberg J. (2015). Acoustic-prosodic entrainment in Slovak, Spanish, English and Chinese: A cross-linguistic comparison. Proceedings of the 16th Annual Meeting of the Special Interest Group on Discourse and Dialogue (pp. 325–334). Association for Computational Linguistics.

- Levitan R, Gravano A, Willson L, Beňuš Š, Hirschberg J, & Nenkova A. (2012). Acoustic-prosodic entrainment and social behavior. Proceedings of the 2012 Conference of the North American Chapter of the Association for Computational Linguistics: Human Language Technologies (pp. 11–19). North American Chapter of the Association for Computational Linguistics.

- Levitan R, & Hirschberg J. (2011). Measuring acoustic-prosodic entrainment with respect to multiple levels and dimensions. In Proceedings of the Annual Conference of the International Speech Communication Association (pp. 3081–3084). INTERSPEECH.

- Lewandowski N, & Jilka M. (2019). Phonetic Convergence, Language Talent, Personality and Attention. Frontiers in Communication, 4. 10.3389/fcomm.2019.00018

- Louwerse MM, Dale R, Bard EG, & Jeuniaux P. (2012). Behavior Matching in Multimodal Communication Is Synchronized. Cognitive Science, 36(8), 1404–1426. 10.1111/j.1551-6709.2012.01269.x

- Lubold N, & Pon-Barry H. (2014). Acoustic-Prosodic Entrainment and Rapport in Collaborative Learning Dialogues. In MLA ‘14: Proceedings of the 2014 ACM workshop on Multimodal Learning Analytics Workshop and Grand Challenge (pp. 5–12).

- Manson JH, Bryant GA, Gervais MM, & Kline MA (2013). Convergence of speech rate in conversation predicts cooperation. Evolution and Human Behavior, 34(6), 419–426. 10.1016/j.evolhumbehav.2013.08.001

- McIntosh DN (2006). Spontaneous facial mimicry, liking and emotional contagion. Polish Psychological Bulletin, 37(1), 31–42.

- Michalsky J. (2017). Pitch synchrony as an effect of perceived attractiveness and likability. In Proceedings of DAGA.

- Michalsky J, & Schoormann H. (2017). Pitch convergence as an effect of perceived attractiveness and likability. In Proceedings of the Annual Conference of the International Speech Communication Association (pp. 2254–2256). INTERSPEECH.

- Michalsky J, Schoormann H, & Niebuhr O. (2018). Conversational quality is affected by and reflected in prosodic entrainment. In International Conference on Speech Prosody, (pp. 389–392). [ Google Scholar ]

- Michalsky J., Schoormann H., & Niebuhr O. (2016). Turn transitions as salient places for social signals-Local prosodic entrainment as a cue to perceived attractiveness and likability. In Proceedings of the Conference on Phonetics & Phonology in German-speaking countries, (pp. 125–128). [ Google Scholar ]

- Miles LK, Nind LK, & Macrae CN (2009). The rhythm of rapport: Interpersonal synchrony and social perception. Journal of Experimental Social Psychology, 45(3), 585–589. 10.1016/j.jesp.2009.02.002 [ DOI ] [ Google Scholar ]

- Niederhoffer KG, & Pennebaker JW (2002). Linguistic Style Matching in Social Interaction. Journal of Language & Social Psychology, 21(4), 337. [ Google Scholar ]

- Ochi K, Ono N, Owada K, Kojima M, Kuroda M, Sagayama S, & Yamasue H. (2019). Quantification of speech and synchrony in the conversation of adults with autism spectrum disorder. PLoS ONE, 14(12). 10.1371/journal.pone.0225377 [ DOI ] [ PMC free article ] [ PubMed ] [ Google Scholar ]

- Ostrand R, & Chodroff E. (2021). It’s alignment all the way down, but not all the way up: Speakers align on some features but not others within a dialogue. Journal of Phonetics, 88, 101074. 10.1016/j.wocn.2021.101074 [ DOI ] [ PMC free article ] [ PubMed ] [ Google Scholar ]

- Pardo JS (2006). On Phonetic Convergence during Conversational Interaction. Journal of the Acoustical Society of America, 119(4), 2382–2393. [ DOI ] [ PubMed ] [ Google Scholar ]

- Pardo JS, Jay IC, & Krauss RM (2010). Conversational role influences speech imitation. Attention, Perception, & Psychophysics, 72(8), 2254–2264. 10.3758/BF03196699 [ DOI ] [ PubMed ] [ Google Scholar ]

- Pardo JS, Urmanche A, Wilman S, Wiener J, Mason N, Francis K, & Ward M. (2018). A comparison of phonetic convergence in conversational interaction and speech shadowing. Journal of Phonetics, 69, 1–11. [ Google Scholar ]

- Paxton A., & Dale R. (2017). Interpersonal Movement Synchrony Responds to High- and Low- Level Conversational Constraints. Frontiers in Psychology, 8, 1135. 10.3389/fpsyg.2017.01135 [ DOI ] [ PMC free article ] [ PubMed ] [ Google Scholar ]

- Pérez JM, Gálvez RH, & Gravano A. (2016). Disentrainment may be a positive thing: A novel measure of unsigned acoustic-prosodic synchrony, and its relation to speaker engagement. In Proceedings of the Annual Conference of the International Speech Communication Association, (pp. 1270–1274). INTERSPEECH. [ Google Scholar ]

- Pickering MJ, & Garrod S. (2004). Toward a mechanistic psychology of dialogue. Behavioral and Brain Sciences, 27(2). 10.1017/S0140525X04000056 [ DOI ] [ PubMed ] [ Google Scholar ]

- Polyanskaya L, Samuel AG, & Ordin M. (2019). Speech Rhythm Convergence as a Social Coalition Signal. Evolutionary Psychology, 17(3), 1474704919879335. 10.1177/1474704919879335 [ DOI ] [ PMC free article ] [ PubMed ] [ Google Scholar ]

- Rasenberg M, Ozyurek A, Dingemanse M, (2020). Alignment in Multimodal Interaction: An Integrative Framework. Cognitive Science, 44, e12911. [ DOI ] [ PMC free article ] [ PubMed ] [ Google Scholar ]

- Reichel UD, Beňuš Š, Mády K. (2018). Entrainment profiles: Comparison by gender, role, and feature set. Speech Communication, 100, 46–57. [ Google Scholar ]

- Reitter D, & Moore J. (2014). Alignment and task success in spoken dialogue. Journal of Memory and Language, 76, 29–46. 10.1016/j.jml.2014.05.008 [ DOI ] [ Google Scholar ]

- Savino M, Lapertosa L, & Refice M. (2018). Seeing or not seeing your conversational partner: The influence of interaction modality on prosodic entrainment. In Karpov A, Jokisch O, & Potapova R. (Eds.), Speech and Computer (pp. 574–584). Springer International Publishing. [ Google Scholar ]

- Sawyer J., Matteson C., Ou H., & Nagase T. (2017). The effects of parent-focused slow relaxed speech intervention on articulation rate, response time latency, and fluency in preschool children who stutter. Journal of Speech Language and Hearing Research, 60(4), 794. 10.1044/2016_JSLHR-S-16-0002 [ DOI ] [ PubMed ] [ Google Scholar ]

- Schweitzer A, & Lewandowski N. (2013). Convergence of articulation rate in spontaneous speech. In Bimbot F. (Ed.), Proceedings of the 14th Annual Conference of the International Speech Communication Association (pp. 525–529). INTERSPEECH. [ Google Scholar ]

- Schweitzer A, Lewandowski N, & Duran D. (2017). Social attractiveness in dialogs. In Proceedings of the Annual Conference of the International Speech Communication Association, (pp. 2243–2247). INTERSPEECH. [ Google Scholar ]

- Schweitzer K, Walsh M, & Schweitzer A. (2017). To see or not to see: Interlocutor visibility and likeability influence convergence in intonation. In Proceedings of the Annual Conference of the International Speech Communication Association, (pp. 919–923). INTERSPEECH. [ Google Scholar ]

- Seidl A, Cristia A, Soderstrom M, Ko E-S Abel EA, Kellerman A, & Schwichtenberge AJ (2018). Infant-mother acoustic-prosodic alignment and developmental risk. Journal of Speech, Language & Hearing Research, 61(6), 1369–1380. 10.1044/2018_JSLHR-S-17-0287 [ DOI ] [ PMC free article ] [ PubMed ] [ Google Scholar ]

- Shockley K, Santana M-V, & Fowler CA (2003). Mutual interpersonal postural constraints are involved in cooperative conversation. Journal of Experimental Psychology: Human Perception and Performance, 29(2), 326–332. 10.1037/0096-1523.29.2.326 [ DOI ] [ PubMed ] [ Google Scholar ]

- Street RL (1982). Evaluation of noncontent speech accommodation. Language & Communication, 2(1), 13–31. 10.1016/0271-5309(82)90032-5 [ DOI ] [ Google Scholar ]

- Street RL. (1984). Speech convergence and speech evaluation in fact-finding interviews. Human Communication Research, 11(2), 139–169. 10.1111/j.1468-2958.1984.tb00043.x [ DOI ] [ Google Scholar ]

- Suffill E, Kutasi T, Pickering M, & Branigan H. (2021). Lexical alignment is affected by addressee but not speaker nativeness. Bilingualism: Language and Cognition, 24(4), 746–757. doi: 10.1017/S1366728921000092 [ DOI ] [ Google Scholar ]

- Vaughan B. (2011). Prosodic synchrony in cooperative task-based dialogues: A measure of agreement and disagreement. Proceedings of the Annual Conference of the International Speech Communication Association (pp. 1865–1868). INTERSPEECH. [ Google Scholar ]

- Weise A, Levitan SI, Hirschberg J, & Levitan R. (2019). Individual differences in acoustic-prosodic entrainment in spoken dialogue. Speech Communication, 115, 78–87. 10.1016/j.specom.2019.10.007 [ DOI ] [ Google Scholar ]

- Willi M, Borrie SA, Barrett TS, Tu M, & Berisha V. (2018). A discriminative acoustic-prosodic approach for measuring local entrainment. Proceedings of the Annual Conference of the International Speech Communication Association (pp. 581–585). [ Google Scholar ]

- Wynn CJ, Barrett TS, & Borrie SA (2022). Rhythm Perception, Speaking Rate Entrainment, and Conversational Quality: A Mediated Model. Journal of Speech, Language, and Hearing Research, 65(6), 2187–2203. 10.1044/2022_JSLHR-21-00293. [ DOI ] [ PMC free article ] [ PubMed ] [ Google Scholar ]

- Wynn CJ, Borrie SA (2020). Methodology Matters: The impact of research design on conversational entrainment outcomes. Journal of Speech, Language, and Hearing Research. 63, 1352–1360. [ DOI ] [ PMC free article ] [ PubMed ] [ Google Scholar ]

- Wynn CJ, Borrie SA, & Sellers TP (2018). Speech rate entrainment in children and adults with and without autism spectrum disorder. American Journal of Speech-Language Pathology, 27(3), 965–974. 10.1044/2018_AJSLP-17-0134 [ DOI ] [ PMC free article ] [ PubMed ] [ Google Scholar ]

- Xia Z, Levitan R, & Hirschberg J. (2014). Prosodic entrainment in Mandarin and English: A cross-linguistic comparison. Proceedings of the International Conference on Speech Prosody, (pp. 65–69). [ Google Scholar ]

What drives successful verbal communication?

Miriam de Boer, Roel M. Willems

Edited by: Klaus Kessler, University of Glasgow, UK

Reviewed by: Ulrich Pfeiffer, University Hospital Cologne, Germany; Dale J. Barr, University of Glasgow, UK

*Correspondence: Miriam de Boer, Donders Institute for Brain, Cognition and Behaviour, Radboud University Nijmegen, P.O. Box 9101, 6500 HB, Nijmegen, Netherlands e-mail: [email protected]

This article was submitted to the journal Frontiers in Human Neuroscience.

Received 2013 Jun 29; Accepted 2013 Sep 9; Collection date 2013.

This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) or licensor are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

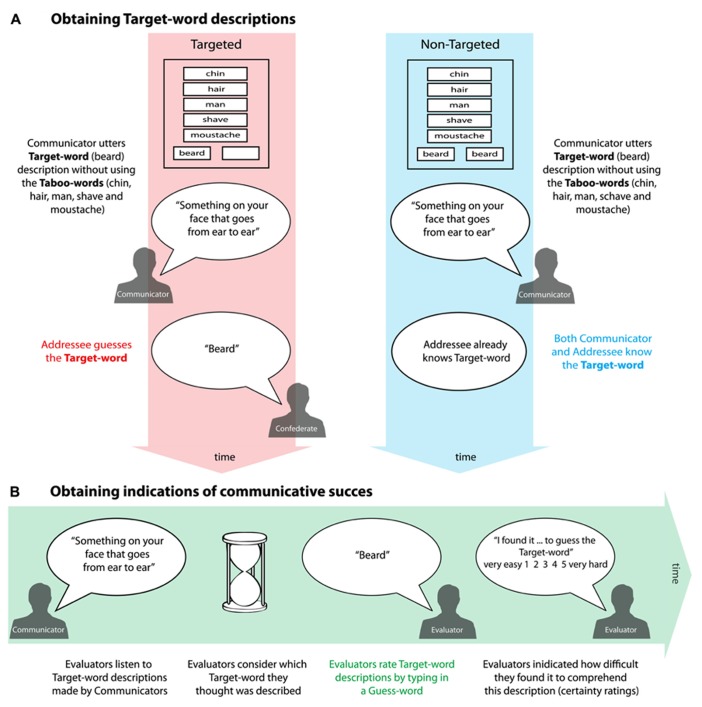

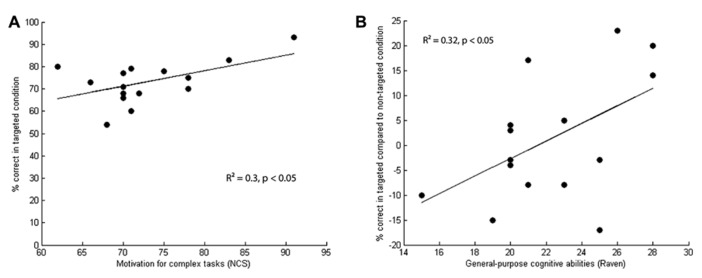

There is a vast number of potential mappings between behaviors and intentions in communication: a behavior can indicate a multitude of different intentions, and the same intention can be communicated with a variety of behaviors. Humans routinely solve these many-to-many referential problems when producing utterances for an Addressee. This ability might rely on social cognitive skills, for instance, the ability to manipulate unobservable summary variables to disambiguate ambiguous behavior of other agents (“mentalizing”) and the drive to invest resources into changing and understanding the mental state of other agents (“communicative motivation”). Alternatively, the ambiguities of verbal communicative interactions might be solved by general-purpose cognitive abilities that process cues that are incidentally associated with the communicative interaction. In this study, we assess these possibilities by testing which cognitive traits account for communicative success during a verbal referential task. Cognitive traits were assessed with psychometric scores quantifying motivation, mentalizing abilities, and general-purpose cognitive abilities, taxing abstract visuo-spatial abilities. Communicative abilities of participants were assessed by using an on-line interactive task that required a speaker to verbally convey a concept to an Addressee. The communicative success of the utterances was quantified by measuring how frequently a number of Evaluators would infer the correct concept. Speakers with high motivational and general-purpose cognitive abilities generated utterances that were more easily interpreted. These findings extend to the domain of verbal communication the notion that motivational and cognitive factors influence the human ability to rapidly converge on shared communicative innovations.

Keywords: communication, language, individual differences, mentalizing, Raven’s progressive matrices

INTRODUCTION

Daily human communication is surprisingly effective, even though it involves producing and understanding utterances that are inherently ambiguous. The potential mapping between behavior and intentions in communication is very large and many-to-many, such that similar behaviors can indicate different intentions and vice versa.