
- Published: 18 February 2021

Essentials of data management: an overview

Miren B. Dhudasia, Robert W. Grundmeier & Sagori Mukhopadhyay

Pediatric Research volume 93, pages 2–3 (2023)

What is data management?

Data management is a multistep process that involves obtaining, cleaning, and storing data to allow accurate analysis and produce meaningful results. While data management has broad applications (and meanings) across many fields and industries, in clinical research the term is frequently used in the context of clinical trials [1]. This editorial is written to introduce early career researchers to data management practices more generally, as applied to all types of clinical research studies.

Outlining a data management strategy before a research study begins plays an essential role in ensuring that both scientific integrity (i.e., the data generated can accurately test the proposed hypotheses) and regulatory requirements are met. Data management can be divided into three steps: data collection, data cleaning and transformation, and data storage. These steps are not necessarily chronological and often occur simultaneously. Different aspects of the process may require the expertise of different people, necessitating a team effort for the effective completion of all steps.

Data collection

Data source.

Data collection is a critical first step in the data management process and may be broadly classified as “primary data collection” (collection of data directly from the subjects specifically for the study) and “secondary use of data” (repurposing data that were collected for some other reason, either for clinical care in the subject’s medical record or for a different research study). While the terms retrospective and prospective data collection are occasionally used [2], these terms apply more to how the data are used than to how they are collected. Data used in a retrospective study are almost always secondary data; data collected as part of a prospective study typically involve primary data collection but may also involve secondary use of data collected during ongoing routine clinical care of study subjects. Primary data collected for a specific study may be categorized as secondary data when used to investigate a new hypothesis, different from the question for which the data were originally collected.

Primary data collection has the advantages of being specific to the study question, minimizing missingness in key information, and providing an opportunity to correct data in real time. As a result, data collected this way are considered more accurate, but this approach increases the time and cost of study procedures. Secondary use of data includes data abstracted from medical records, administrative data such as those from the hospital’s data warehouse or insurance claims, and secondary use of primary data collected for a different research study. Secondary use of data offers access to large amounts of data that have already been collected but often requires further cleaning and codification to align the data with the study question.

A case report form (CRF) is a powerful tool for effective data collection. A CRF is a paper or electronic questionnaire designed to record pertinent information from study subjects as outlined in the study protocol [3]. CRFs are always required in primary data collection but can also be useful in secondary use of data to preemptively identify, define, and, if necessary, derive critical variables for the study question. For instance, medical records provide a wide array of information that may not be required or useful for the study question. A CRF with well-defined variables and parameters helps the chart reviewer focus only on the relevant data, makes data collection more objective and less prone to bias, and protects patient confidentiality by minimizing the amount of patient information abstracted. Tools like REDCap (Research Electronic Data Capture) provide electronic CRFs and offer advanced features, such as validation rules that minimize errors during data collection [4]. Designing an effective CRF upfront, during the study planning phase, helps streamline the data collection process and make it more efficient [3].
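To make the idea of predefined variables with validation rules concrete, here is a minimal sketch in plain Python. It does not use REDCap or its API; the field names, definitions, and plausibility limits are hypothetical and chosen only for illustration. Each field carries its definition, expected type, and an optional rule, and `check_record` reports problems at the moment a record is entered.

```python
from dataclasses import dataclass
from typing import Callable, List, Optional


@dataclass
class Field:
    """One CRF variable: its definition, expected type, and optional validation rule."""
    name: str
    definition: str
    field_type: type
    required: bool = True
    validate: Optional[Callable[[object], bool]] = None  # True means the value is acceptable
    message: str = ""


# Hypothetical CRF for a chart review; names and limits are illustrative only.
CRF = [
    Field("study_id", "Study-assigned subject identifier", str),
    Field("gestational_age_wk", "Completed weeks of gestation at birth", int,
          validate=lambda v: 22 <= v <= 44, message="expected 22-44 weeks"),
    Field("birth_weight_g", "Birth weight in grams", int,
          validate=lambda v: 300 <= v <= 6000, message="expected 300-6000 g"),
    Field("culture_result", "Blood culture result", str,
          validate=lambda v: v in {"positive", "negative"},
          message="expected 'positive' or 'negative'"),
]


def check_record(record: dict) -> List[str]:
    """Return the problems found in one CRF record (an empty list means it passes)."""
    problems = []
    for field in CRF:
        value = record.get(field.name)
        if value is None or value == "":
            if field.required:
                problems.append(f"{field.name}: missing")
            continue
        if not isinstance(value, field.field_type):
            problems.append(f"{field.name}: expected {field.field_type.__name__}")
            continue
        if field.validate is not None and not field.validate(value):
            problems.append(f"{field.name}: {field.message}")
    return problems


print(check_record({"study_id": "S001", "gestational_age_wk": 39,
                    "birth_weight_g": 3400, "culture_result": "negative"}))  # []
```

Keeping each definition next to its rule means the same structure can double as documentation for chart reviewers.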

Data cleaning and transformation

Quality checks.

Data collected may have errors that arise from multiple sources—data manually entered in a CRF may have typographical errors, whereas data obtained from data warehouses or administrative databases may have missing data, implausible values, and nonrandom misclassification errors. Having a systematic approach to identify and rectify these errors, while maintaining a log of the steps performed in the process, can prevent many roadblocks during analysis.

First, it is important to check for missing data. Missing data are defined as values that are not available and that would be meaningful for analysis if they were observed [5]. Missing data can bias the results of a study, depending on how much data are missing and how the missing values are distributed across the study cohort. Many methods for handling missing data have been published; Kang [6] provides a practical review. If missing data cannot be retrieved and are limited to a small number of subjects, one approach is to exclude these subjects from the study. Missing data in different variables across many subjects often require more sophisticated approaches to account for the “missingness.” These may include creating a category of “missing” (for categorical variables), simple imputation (e.g., substituting missing values in a variable with the average of the non-missing values in that variable), or multiple imputation (replacing each missing value with several plausible values predicted from other variables in the dataset, analyzing each completed dataset, and combining the results) [7].
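The simpler strategies described above can be sketched with Python's standard library. The example data and variable names are hypothetical. The sketch quantifies missingness per variable, adds a "missing" category for a categorical variable, and applies simple mean imputation to a continuous one; multiple imputation is not shown, as it is normally done with dedicated statistical software.

```python
from statistics import mean

# Hypothetical extract; None marks a value that was not recorded.
records = [
    {"id": 1, "sex": "F", "weight_kg": 3.1},
    {"id": 2, "sex": None, "weight_kg": 2.9},
    {"id": 3, "sex": "M", "weight_kg": None},
    {"id": 4, "sex": "M", "weight_kg": 3.6},
]


def missing_summary(rows, variables):
    """Return {variable: (number missing, percent missing)} to gauge how much is missing."""
    n = len(rows)
    summary = {}
    for var in variables:
        count = sum(1 for r in rows if r.get(var) is None)
        summary[var] = (count, 100.0 * count / n)
    return summary


def add_missing_category(rows, var, label="missing"):
    """Categorical variable: treat missingness as its own category (returns new rows)."""
    return [{**r, var: label if r.get(var) is None else r[var]} for r in rows]


def mean_impute(rows, var):
    """Continuous variable: simple imputation with the mean of the observed values."""
    fill = mean(r[var] for r in rows if r.get(var) is not None)
    return [{**r, var: fill if r.get(var) is None else r[var]} for r in rows]


print(missing_summary(records, ["sex", "weight_kg"]))  # {'sex': (1, 25.0), 'weight_kg': (1, 25.0)}
```

Mean imputation fills every gap with the same value, so it understates variability; that is one reason multiple imputation is often preferred when many values are missing.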

Second, errors in the data can be identified by running a series of data validation checks. Some examples of data validation rules for identifying implausible values are shown in Table 1. Automated algorithms for detecting and correcting implausible values may be available for cleaning specific variables in large datasets (e.g., growth measurements) [8]. Once identified, data errors can be corrected, if possible, or marked for deletion. Approaches similar to those for handling missing data can also be used to manage data errors.
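A minimal sketch of rule-based validation checks, assuming records are Python dictionaries. The three rules and their limits are hypothetical examples, not the rules listed in Table 1. Each flagged value is then resolved (corrected or marked for deletion) and the decision is written to a timestamped cleaning log, in the spirit of the systematic, logged approach recommended earlier in this section.

```python
from datetime import date, datetime, timezone

# Hypothetical validation rules (not the contents of Table 1); each returns True when a record passes.
RULES = {
    "weight in plausible range (0.3-200 kg)":
        lambda r: r["weight_kg"] is None or 0.3 <= r["weight_kg"] <= 200,
    "discharge not before admission":
        lambda r: r["discharge"] >= r["admission"],
    "age not negative":
        lambda r: r["age_days"] >= 0,
}


def run_checks(rows):
    """Apply every rule to every row; return (record id, failed rule) pairs."""
    return [(r["id"], name) for r in rows for name, rule in RULES.items() if not rule(r)]


cleaning_log = []


def resolve(record_id, rule, action, note=""):
    """Log how a flagged value was handled, e.g. 'corrected' or 'marked for deletion'."""
    cleaning_log.append({
        "when": datetime.now(timezone.utc).isoformat(),
        "record": record_id, "rule": rule, "action": action, "note": note,
    })


# Hypothetical records: record 2 has an implausible weight and reversed dates.
rows = [
    {"id": 1, "weight_kg": 3.4, "admission": date(2020, 1, 2),
     "discharge": date(2020, 1, 9), "age_days": 0},
    {"id": 2, "weight_kg": 340.0, "admission": date(2020, 2, 5),
     "discharge": date(2020, 2, 1), "age_days": 3},
]
for record_id, rule in run_checks(rows):
    print(record_id, rule)
```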

Data transformation.

The data collected may not be in the form required for analysis. Data transformation includes recategorizing and recoding the collected data and deriving new variables to align with the study analytic plan. Examples include categorizing body mass index, collected as a continuous variable, into categories such as underweight and overweight; recoding free-text values such as “growth of an organism” or “no growth” into a binary “positive” or “negative”; and deriving new variables, such as average weight per year, from multiple weight values over time available in the dataset. Maintaining a codebook of definitions for all variables, predefined and derived, can help a data analyst better understand the data.
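The three example transformations and a codebook can be sketched as follows. The BMI cutoffs are the WHO adult thresholds (underweight below 18.5, overweight at 25 or above), used here only for illustration; pediatric studies typically classify BMI by age- and sex-specific percentiles instead. The culture-result mapping and variable names are hypothetical.

```python
from collections import defaultdict
from datetime import date
from statistics import mean


def bmi_category(bmi):
    """Recategorize continuous BMI (kg/m^2) using WHO adult cutoffs, for illustration only."""
    if bmi < 18.5:
        return "underweight"
    if bmi < 25:
        return "normal"
    return "overweight"


# Hypothetical mapping of free-text culture results to a binary variable.
CULTURE_MAP = {"growth of an organism": "positive", "no growth": "negative"}


def recode_culture(text):
    """Return 'positive'/'negative', or None for unmapped text that needs manual review."""
    return CULTURE_MAP.get(text.strip().lower())


def average_weight_per_year(measurements):
    """Derive the average weight per calendar year from (date, weight) pairs."""
    by_year = defaultdict(list)
    for when, weight in measurements:
        by_year[when.year].append(weight)
    return {year: mean(weights) for year, weights in sorted(by_year.items())}


# Hypothetical codebook recording the definition of every predefined and derived variable.
CODEBOOK = {
    "bmi_cat": "From bmi: <18.5 underweight, 18.5 to <25 normal, >=25 overweight",
    "culture_result": "From free-text culture report via CULTURE_MAP; unmapped -> review",
    "avg_weight_by_year": "Mean of all weights (kg) recorded in each calendar year",
}

weights = [(date(2019, 3, 1), 10.0), (date(2019, 9, 1), 12.0), (date(2020, 2, 1), 14.0)]
print(average_weight_per_year(weights))  # {2019: 11.0, 2020: 14.0}
```

Returning None for unmapped free text, rather than guessing, keeps unexpected values visible for review.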

Data storage

Securely storing data is especially important in clinical research, as the data may contain protected health information of the study subjects [9]. Most institutions that support clinical research have guidelines for safeguards to prevent accidental data breaches.

Data are collected in paper or electronic formats. Paper data should be stored in secure file cabinets inside a locked office at the site approved by the institutional review board. Electronic data should be stored on a secure, approved institutional server and should never be transported using unencrypted portable media devices (e.g., “thumb drives”). If not all study team members require access to study data, access should be granted selectively based on each member’s role.

Another important aspect of data storage is data de-identification. Data de-identification is a process by which identifying characteristics of the study participants are removed from the data in order to mitigate privacy risks to individuals [10]. Identifying characteristics of a study subject include name, medical record number, date of birth/death, and so on. To de-identify data, these characteristics should either be removed from the data or modified (e.g., replacing medical record numbers with study IDs or converting dates to ages or durations). If feasible, study data should be de-identified when stored. If you anticipate that reidentification of the study participants may be required in the future, the data can be separated into two files: one containing only the de-identified data of the study participants and one containing all the identifying information, with both files containing a common linking variable (e.g., study ID) that is unique for every subject or record. The linking variable can be used to merge the two files when reidentification is required to carry out additional analyses or to obtain further data. The link key should be maintained on a secure institutional server accessible only to authorized individuals who need access to the identifiers.
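The two-file approach can be sketched in Python, assuming each record is a dictionary. The identifier list, field names, and study ID format are hypothetical and deliberately short; the HHS de-identification guidance (reference 10) covers many more identifiers. Study IDs are generated randomly rather than derived from the medical record number, so they cannot be reversed without the link file.

```python
import secrets
from datetime import date

# Hypothetical list of direct identifiers; the HHS guidance covers many more.
IDENTIFIERS = ("name", "mrn", "birth_date")


def deidentify(rows, reference_date_field="admission_date"):
    """Split rows into a de-identified file and a separate link file.

    Direct identifiers are removed, the birth date is converted to age in days at the
    reference date (which is then dropped), and a random study ID links the two files.
    """
    deid, link, used_ids = [], [], set()
    for row in rows:
        study_id = "S" + secrets.token_hex(4)  # random, not derived from the MRN
        while study_id in used_ids:            # guarantee one unique ID per record
            study_id = "S" + secrets.token_hex(4)
        used_ids.add(study_id)
        age_days = (row[reference_date_field] - row["birth_date"]).days
        clinical = {k: v for k, v in row.items()
                    if k not in IDENTIFIERS and k != reference_date_field}
        deid.append({"study_id": study_id, "age_days": age_days, **clinical})
        link.append({"study_id": study_id, **{k: row[k] for k in IDENTIFIERS}})
    return deid, link


def reidentify(deid, link):
    """Merge the two files on study_id; only authorized staff should ever run this."""
    identifiers_by_id = {row["study_id"]: row for row in link}
    return [{**identifiers_by_id[row["study_id"]], **row} for row in deid]


# Hypothetical record for illustration.
rows = [{"name": "Jane Doe", "mrn": "123456", "birth_date": date(2020, 1, 1),
         "admission_date": date(2020, 1, 11), "diagnosis": "sepsis"}]
deid, link = deidentify(rows)
```

In practice, the `link` list would be written to a separate file on the restricted server, and only the `deid` list would be shared with the analysis team.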

To conclude, effective data management is important for the successful completion of research studies and for ensuring the validity of the results. Outlining the steps of the data management process upfront will help streamline the process and reduce the time and effort subsequently required. Assigning team members responsibility for specific steps and maintaining a log, with a date/time stamp documenting each action as it happens, whether you are collecting, cleaning, or storing data, helps ensure that all required steps are done correctly and makes errors easier to identify. Effective documentation is a regulatory requirement for many clinical trials and helps ensure that all team members are on the same page. When interpreting results, this documentation serves as an important tool for assessing whether the interpretations are valid and unbiased. Last, it supports the reproducibility of the study findings.

References

1. Krishnankutty, B., Bellary, S., Kumar, N. B. & Moodahadu, L. S. Data management in clinical research: an overview. Indian J. Pharm. 44, 168–172 (2012).

2. Weinger, M. B. et al. Retrospective data collection and analytical techniques for patient safety studies. J. Biomed. Inf. 36, 106–119 (2003).

3. Avey, M. in Clinical Data Management 2nd edn. (eds Rondel, R. K., Varley, S. A. & Webb, C. F.) 47–73 (Wiley, 1999).

4. Harris, P. A. et al. Research electronic data capture (REDCap)--a metadata-driven methodology and workflow process for providing translational research informatics support. J. Biomed. Inf. 42, 377–381 (2009).

5. Little, R. J. et al. The prevention and treatment of missing data in clinical trials. N. Engl. J. Med. 367, 1355–1360 (2012).

6. Kang, H. The prevention and handling of the missing data. Korean J. Anesthesiol. 64, 402 (2013).

7. Rubin, D. B. Inference and missing data. Biometrika 63, 581–592 (1976).

8. Daymont, C. et al. Automated identification of implausible values in growth data from pediatric electronic health records. J. Am. Med. Inform. Assoc. 24, 1080–1087 (2017).

9. Office for Civil Rights, Department of Health and Human Services. Health insurance portability and accountability act (HIPAA) privacy rule and the national instant criminal background check system (NICS). Final rule. Fed. Regist. 81, 382–396 (2016).

10. Office for Civil Rights (OCR). Methods for de-identification of PHI. https://www.hhs.gov/hipaa/for-professionals/privacy/special-topics/de-identification/index.html (2012).

Acknowledgements

This work was supported in part by the Eunice Kennedy Shriver National Institute of Child Health & Human Development of the National Institutes of Health (grant K23HD088753).

Author information

Authors and affiliations.

Division of Neonatology, Children’s Hospital of Philadelphia, Philadelphia, PA, USA

Miren B. Dhudasia & Sagori Mukhopadhyay

Center for Pediatric Clinical Effectiveness, Children’s Hospital of Philadelphia, Philadelphia, PA, USA

Miren B. Dhudasia, Robert W. Grundmeier & Sagori Mukhopadhyay

Department of Pediatrics, University of Pennsylvania Perelman School of Medicine, Philadelphia, PA, USA

Robert W. Grundmeier & Sagori Mukhopadhyay

Department of Biomedical and Health Informatics, Children’s Hospital of Philadelphia, Philadelphia, PA, USA

Robert W. Grundmeier

Corresponding author

Correspondence to Sagori Mukhopadhyay.

Ethics declarations

Competing interests.

The authors declare no competing interests.

Additional information

Publisher’s note Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

About this article

Cite this article.

Dhudasia, M.B., Grundmeier, R.W. & Mukhopadhyay, S. Essentials of data management: an overview. Pediatr Res 93, 2–3 (2023). https://doi.org/10.1038/s41390-021-01389-7

Received : 11 December 2020

Revised : 27 December 2020

Accepted : 06 January 2021

Published : 18 February 2021

Issue Date : January 2023

DOI : https://doi.org/10.1038/s41390-021-01389-7

Clinical Trial Data Management and Professionals

By Andy Marker | January 16, 2020 (updated September 16, 2021)

This guide provides professionals with everything they need to understand clinical data management, offering expert advice, templates, graphics, and a sample clinical data management plan.

Included on this page, you'll find information on how to become a clinical trial data manager, a clinical data management plan template, a clinical data validation plan template, and much more.

What Is Clinical Trial Management?

Clinical trial management refers to the structured, organized regulatory approach that managers take in clinical trial projects to produce timely and efficient project outcomes. It includes developing and maintaining specified or general software systems, processes, procedures, training, and protocols.

What Is a Clinical Trial Management System (CTMS)?

A clinical trial management system (CTMS) is a type of project management software specific to clinical research and clinical data management. It allows for centralized planning, reporting, and tracking of all aspects of clinical trials, with the end goal of ensuring that the trials are efficient, compliant, and successful, whether across one or several institutions.

Companies use CTMS for their clinical data management to ensure they build trust with regulatory agencies. Trust is earned as the companies collect, integrate, and validate their clinical trial data with integrity over time. A comprehensive system helps them do so.

In a 2017 paper, “Artificial intelligence based clinical data management systems: A review,” Gazali discusses CTMS and what makes it worthwhile for investigators: namely, that it helps to authenticate data. Accurate study results and a documented trail of data collection, as captured through a quality CTMS, lend credence to research study data. Clinical trial data management systems enable researchers to adhere to quality standards and provide assurance that they are appropriately collecting, cleaning, and managing the data.

A clinical data management system also offers remote data monitoring. The sponsor, or principal investigator, may want to monitor the trial from a distance, especially if the organization has many sites. Since the FDA mandates monitoring in clinical trials, and monitoring is often one of a study's larger costs, remote monitoring offers a lower-cost option that lets sponsors identify issues and outliers and mitigate them quickly.

Many data management systems are also incorporating artificial intelligence (AI). AI-based clinical data management systems support process automation, data analysis for insights, and critical decision making, all while staff input the research data. According to a review of clinical data management systems, automating all dimensions of clinical data management in trials can move researchers beyond mere electronic data capture toward systems that help generate trial findings.

The most helpful strategies for implementing clinical data management systems balance risk reduction and lead time. All trial managers want to have their software deployed rapidly. However, it is best to set up the databases thoroughly before the trial. When staff must make software changes during the trial, it can be costly and have implications for the validity of the trial data.

Other strategies that help organizations implement a new system include making sure that, prior to deployment, the intended users give input. These users include entities such as the contract research organization (CRO), the sponsor, staff at the investigator site, and any onsite technical support. Staff should respond well to the graphical user interface (GUI). Additionally, depending on software support, the staff can gradually expand the modules to include more functionality, perform module-based programming, and duplicate the hardware. These actions give the staff the most functionality and the software the best chance at success.

How to Compare Clinical Data Management Systems

When deciding which clinical data management system to use, compare the program’s available features and those that your clinical sites need. Additionally, you can compare clinical data management systems by reviewing the installation platforms, pricing, technical support, and number of allowed users.

For programs that collect data on paper and send it to data entry staff, the data entry portal should be simple and allow for double entry and regular oversight.

In general, here are the main features to compare in a clinical data management system:

- 21 CFR Part 11 Compliance: Electronic systems must provide assurance of authentic records.

- Document Management: All documents should be in a centralized location, linked to their respective records.

- Electronic Data Capture (EDC): Direct clinical trial data collection, as opposed to paper forms.

- Enrollment Management: Research studies can use this data (from interactive web or voice response systems) to enroll, randomize, and manage patients.

- HIPAA compliance: Ensure compliance with the Health Insurance Portability and Accountability Act to protect patients’ information.

- Installation: Identify whether you want a cloud-based or on-premises solution and if you need mobile deployment (iOS or Android).

- Investigator and Site Profiling: Use this function to rapidly identify the feasibility of possible investigators and sites.

- Monitoring: The system should offer a calendar, scheduling capabilities, adverse event and serious adverse event tracking, trip reporting, site communication, and triggers.

- Number of Users: How many users can the software handle? Is there a minimum number of required users? Does the software provide levels of accessibility and price based on the number of users?

- Patient Database: Separate from recruitment and enrollment, the patient database is a record of previous contacts that you can potentially draw from for future trials.

- Payment Portal: Pay out stipends, contracts, and other finances related to the research project.

- Pricing: Check whether the software company offers free trials, free or premium options, monthly subscriptions, annual subscriptions, or a one-time license.

- Recruiting Management: This function helps streamline recruitment by targeting potential trial patients with web recruitment and prescreening.

- Scheduling: Use this feature to keep track of visits and events.

- Study Planning and Workflows: This function enables you to track all required study pieces from the beginning and optimize each piece with workflows.

- Support: Check if the software company offers 24-hour issue support and training on the software.

What Is Clinical Data Management?

Clinical data management (CDM) is the part of clinical trial management that deals specifically with information that comes out of the trials. In order to yield ethical, repeatable results, researchers must document their patients’ medical status, including everything relevant to that status, and the trial’s interventions.

Clinical data management evolved from drug companies’ need for an honest path from their research to their findings; in short, their data had to be reproducible. CDM promotes a standards-based approach, and regulators continually impose their requirements on it. For instance, paper is no longer favored as a collection method; most clinical trials prefer software systems that improve the timeliness and quality of data.

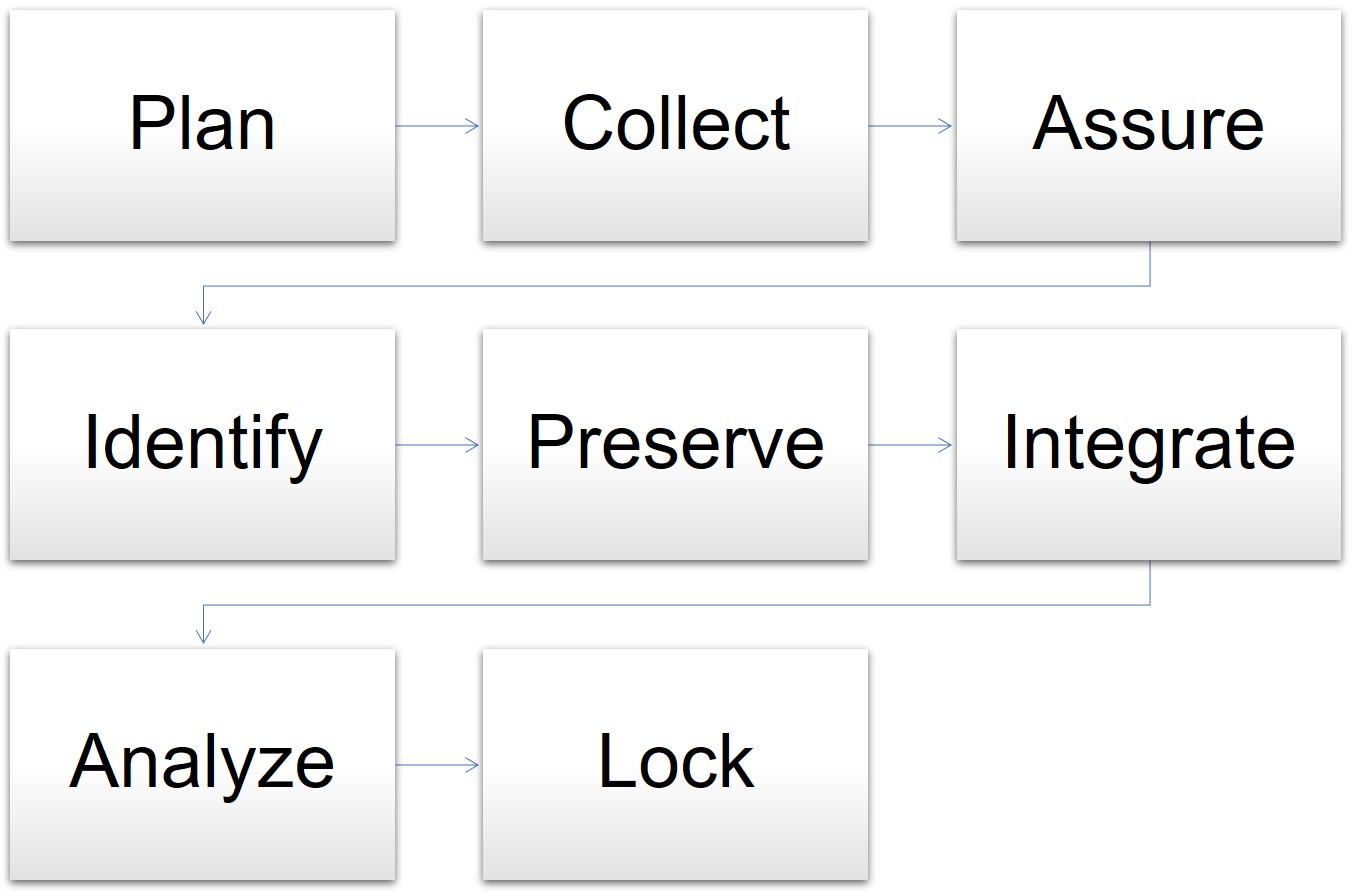

In one model for data management, the cycle begins when the clinical trial is in the planning stages and goes through the final analysis and lockdown of the data. The stages for data management are as follows:

- Plan: The data manager prepares the database, forms, and overall plan.

- Collect: Staff gathers data in the course of the trial.

- Assure: The data manager determines if the data plan and tools meet the requirements.

- Identify: Staff and the data manager identify any issues or risks.

- Preserve: The data manager preserves the data already collected and mitigates risks.

- Integrate: The data manager oversees the mapping of different datasets and information together for consistency.

- Analyze: The statisticians analyze the mapped data trends and outcomes.

- Lock: The data manager locks the database for integrity.

Model for Data Management in Clinical Trials

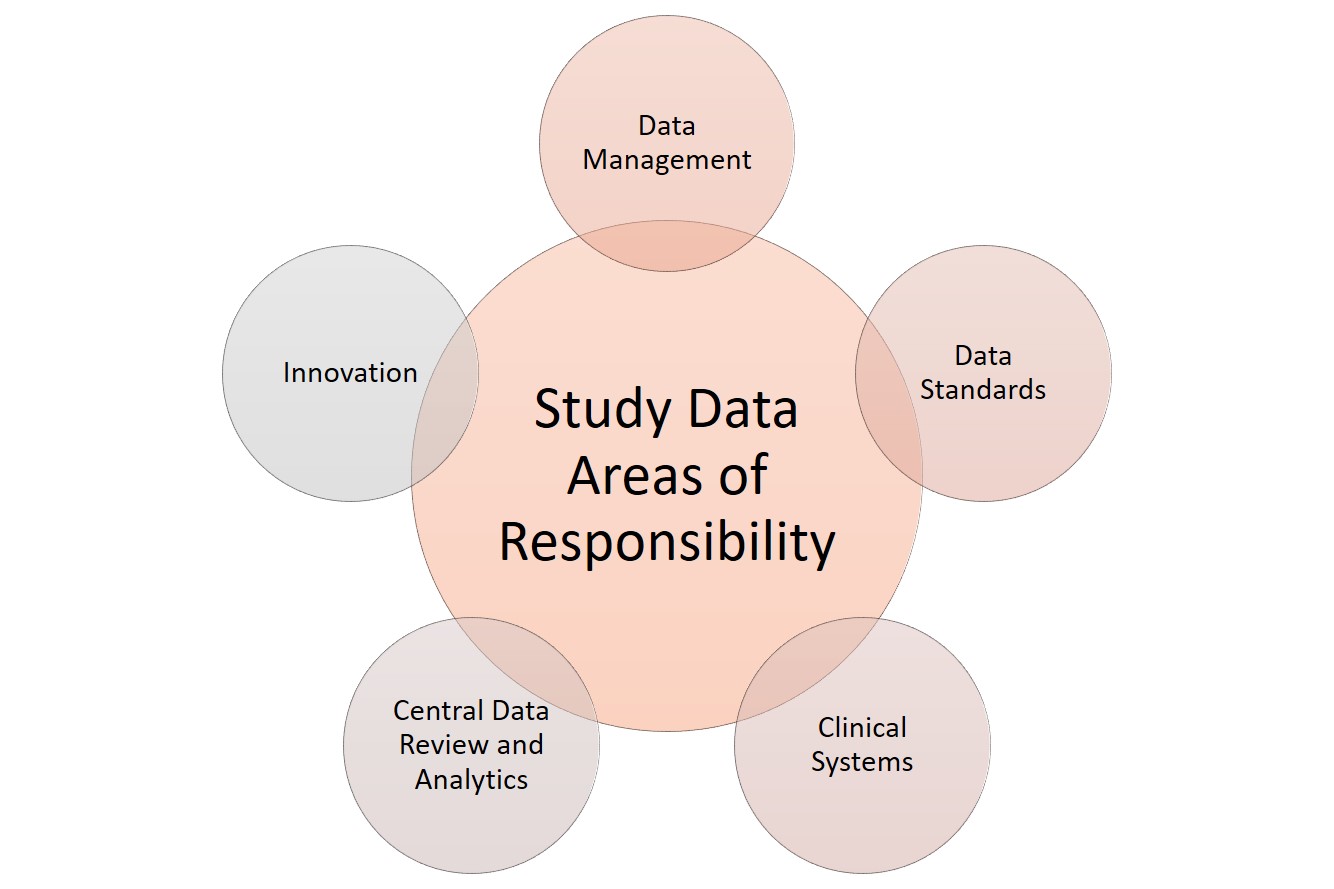

When it comes to data, clinical research has several areas of responsibility. Sponsors can split these functions among several staff or, in smaller studies, assign them to the main data manager. These functions include the following:

Clinical systems: Any software or technology used.

Data management: Data acquisition, coding, and standardization.

Data review and analytics: Quality management, auditing, and statistical analysis of the collected data.

Data standards: Checking against regulatory requirements.

Innovation: Using tools and theory that coordinate with the developing field. For more innovative templates to use in clinical trials, see “Clinical Trial Templates to Start Your Clinical Research.”

Clinical Research Data Areas of Responsibility

Clinical data management is one of the most critical functions in overall clinical trial management. Staff collect data from many different sources in a clinical trial; some data will necessarily come from paper forms filled out by the patient, their representative, or a staff member on their behalf. However, instead of paper, some clinics may use tablets such as iPads to capture this direct-entry data electronically.

Clinical data management also includes top-line data, such as the demographic data summary, the primary endpoint data, and the safety data. Together, these constitute the executive summary for clinical trials. Companies often issue this data as a part of press releases. Additional clinical trial data management activities include the following:

- Case report form (CRF) design, annotation, and tracking

- Central lab data

- Data abstraction and extraction

- Data archiving

- Data collection

- Data entry and validation

- Data extraction

- Data queries and analysis

- Data storage and privacy

- Data transmission

- Database design, build, and testing

- Database locking

- Discrepancy management

- Medical data coding and processing

- Patient recorded data

- Serious adverse event (SAE) reconciliation

- Study metrics and tracking

- Quality control and assurance

- User acceptance testing

- Validation checklist

Since there are many different types of data coming from many different sources, some data managers have become experts in hybrid data management — the synchronization required to not only make disparate data relate to each other, but also to adequately manage each type of data. For example, one study could generate data on paper from both the trial site and from a contract research organization, electronic data from the site, and clinical data measurements from a laboratory.

The Roles and Responsibilities in Clinical Data Management

Clinical data management software limits database access based on the assigned roles and responsibilities of the users. This ensures that there is an audit trail and that users can access only the functions their role requires, without the ability to make other changes.

All staff members, whether a manager, programmer, administrator, medical coder, data coordinator, quality control staff, or data entry person, have differing levels of access to the software system, as delineated in the protocol. The principal investigator can use the CDMS to restrict these access levels.
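Role-based restrictions of this kind can be sketched as a permission matrix. The roles, permissions, and mapping below are hypothetical; an actual CDMS defines them in its own configuration, following the protocol. A user's action is allowed only if one of their roles grants it, and unknown roles grant nothing.

```python
from enum import Enum, auto


class Permission(Enum):
    ENTER_DATA = auto()
    EDIT_DATA = auto()
    RAISE_QUERY = auto()
    CODE_TERMS = auto()
    LOCK_DATABASE = auto()


# Hypothetical role matrix; a real one would follow the study protocol.
ROLES = {
    "data_entry": {Permission.ENTER_DATA},
    "data_coordinator": {Permission.ENTER_DATA, Permission.EDIT_DATA, Permission.RAISE_QUERY},
    "medical_coder": {Permission.CODE_TERMS},
    "quality_control": {Permission.RAISE_QUERY},
    "data_manager": {Permission.EDIT_DATA, Permission.RAISE_QUERY, Permission.LOCK_DATABASE},
}


def can(user_roles, permission):
    """True if any of the user's roles grants the permission; unknown roles grant nothing."""
    return any(permission in ROLES.get(role, set()) for role in user_roles)


def require(user_roles, permission):
    """Raise PermissionError instead of silently allowing an out-of-role action."""
    if not can(user_roles, permission):
        raise PermissionError(f"roles {sorted(user_roles)} lack {permission.name}")


print(can({"data_entry"}, Permission.LOCK_DATABASE))  # False
```

Denying by default, so that anything not explicitly granted is refused, mirrors the intent that users reach only their required functions.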

What Is Clinical Trial Data Management (CDM)?

Clinical trial data management (CDM) is the process by which a program or study collects, cleans, and manages subject and study data in a way that complies with internal protocols and regulatory requirements. It is simultaneously the initial phase in a clinical trial, a field of study, and an aspirational model.

With properly collected data in clinical trials, the study can progress and result in reliable, high-quality, statistically appropriate conclusions. Proper data collection also decreases the time from drug development to marketing. Further, proper data collection involves a multidisciplinary team, such as the research nurses, clinical data managers, investigators, support personnel, biostatisticians, and database programmers. Finally, CDM enables high-quality, understandable research, which can be capitalized on in its field and across many disciplines, according to the National Institutes of Health (NIH).

In clinical trials, data managers perform setup during the trial development phase. Data come from primary sources, such as site medical records, laboratory results, and patient diaries. If the project uses paper-based CRFs, staff members must transcribe the source data from them into a clinical trial database. Per best practice, staff enter each paper form twice and compare the two entries; this is known as double data entry, and it significantly decreases the error rate from data entry mistakes. Electronic CRFs (eCRFs) enable staff to enter source data directly into the database.
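Double data entry can be sketched as a field-by-field comparison of two independent passes over the same paper forms. The data layout (record ID mapped to a dictionary of field values) is an assumption for illustration. Every disagreement, including a value present in only one pass, is returned so it can be resolved against the paper source document.

```python
def compare_double_entry(first, second):
    """Compare two independent entries of the same paper CRFs.

    first, second: dicts mapping record id -> {field: value}.
    Returns (record id, field, first value, second value) for every disagreement,
    including values present in only one pass (shown as None).
    """
    discrepancies = []
    for rec_id in sorted(set(first) | set(second)):
        a, b = first.get(rec_id, {}), second.get(rec_id, {})
        for field in sorted(set(a) | set(b)):
            if a.get(field) != b.get(field):
                discrepancies.append((rec_id, field, a.get(field), b.get(field)))
    return discrepancies


# Hypothetical passes: the second operator transposed two digits for S002.
first_pass = {"S001": {"weight_g": "3400", "sex": "F"}, "S002": {"weight_g": "2950", "sex": "M"}}
second_pass = {"S001": {"weight_g": "3400", "sex": "F"}, "S002": {"weight_g": "2590", "sex": "M"}}
print(compare_double_entry(first_pass, second_pass))  # [('S002', 'weight_g', '2950', '2590')]
```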

As with any project, the financial and human resources in clinical trials are finite. Coming up with and sticking to a solid data management plan is crucial — it should include structure for the research personnel, resources, and storage. A clinical trial is a huge investment of time, people, and money. It warrants expert-level management from its inception.

Clinical Data Management Plans

Clinical data management plans (DMPs) outline all the data management work needed in a clinical research project. This includes the timeline, any milestones, and all deliverables, as well as strategies for how the data manager will deal with disparate data sets.

Regulators do not require a DMP, but they expect DMPs in clinical research and audit them. Thus, DMPs should be comprehensive, and all stakeholders should agree on them. They should also be living documents that staff regularly update as the study evolves and the various study pieces develop.

For example, during one study, the study manager might change the company used for laboratory work. This affects the DMP in two ways: First, staff needs to develop the data sharing agreement with the new company, and second, they need to integrate the data from both laboratories into one dataset at the end of the trial. The DMP should describe both.
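Integrating results from the two laboratories can be sketched as mapping each lab's export onto one common schema. The column names are hypothetical; the glucose conversion uses 18.016 mg/dL per mmol/L, which follows from glucose's molar mass of about 180.16 g/mol. A `lab` column preserves where each result came from.

```python
# Hypothetical exports: each laboratory uses its own column names and units.
LAB_A_MAP = {"subj": "study_id", "collect_dt": "collected", "glu_mgdl": "glucose_mg_dl"}
LAB_B_MAP = {"PatientStudyID": "study_id", "SampleDate": "collected", "GLU_mmolL": "glucose_mmol_l"}

MG_DL_PER_MMOL_L = 18.016  # glucose: 1 mmol/L = 180.16 mg/L = 18.016 mg/dL


def harmonize(row, mapping, source):
    """Rename columns to the common schema, convert units, and record the source lab."""
    out = {target: row[src] for src, target in mapping.items()}
    if "glucose_mmol_l" in out:
        out["glucose_mg_dl"] = round(out.pop("glucose_mmol_l") * MG_DL_PER_MMOL_L, 1)
    out["lab"] = source
    return out


def integrate(lab_a_rows, lab_b_rows):
    """Combine both labs into one dataset ordered by subject and collection date."""
    combined = [harmonize(r, LAB_A_MAP, "A") for r in lab_a_rows]
    combined += [harmonize(r, LAB_B_MAP, "B") for r in lab_b_rows]
    # ISO-format date strings sort chronologically.
    return sorted(combined, key=lambda r: (r["study_id"], r["collected"]))


lab_a = [{"subj": "S001", "collect_dt": "2021-03-01", "glu_mgdl": 90}]
lab_b = [{"PatientStudyID": "S001", "SampleDate": "2021-06-01", "GLU_mmolL": 5.0}]
for row in integrate(lab_a, lab_b):
    print(row)
```

Writing each mapping down explicitly also gives the DMP a concrete record of how the two sources were combined.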

When creating DMPs, you should also bear in mind any industry data standards so that the research can be valuable outside of the discrete study. The Clinical Data Acquisition Standards Harmonization (CDASH) recommends 16 standards for data collection fields to keep data consistent across different studies.

The final piece of standardization in DMPs is the use of a template, which provides staff with a solid place to start developing a DMP specific to their study. Sponsors may have a standard template they use across their projects to help reduce the complexity inherent in clinical trials.

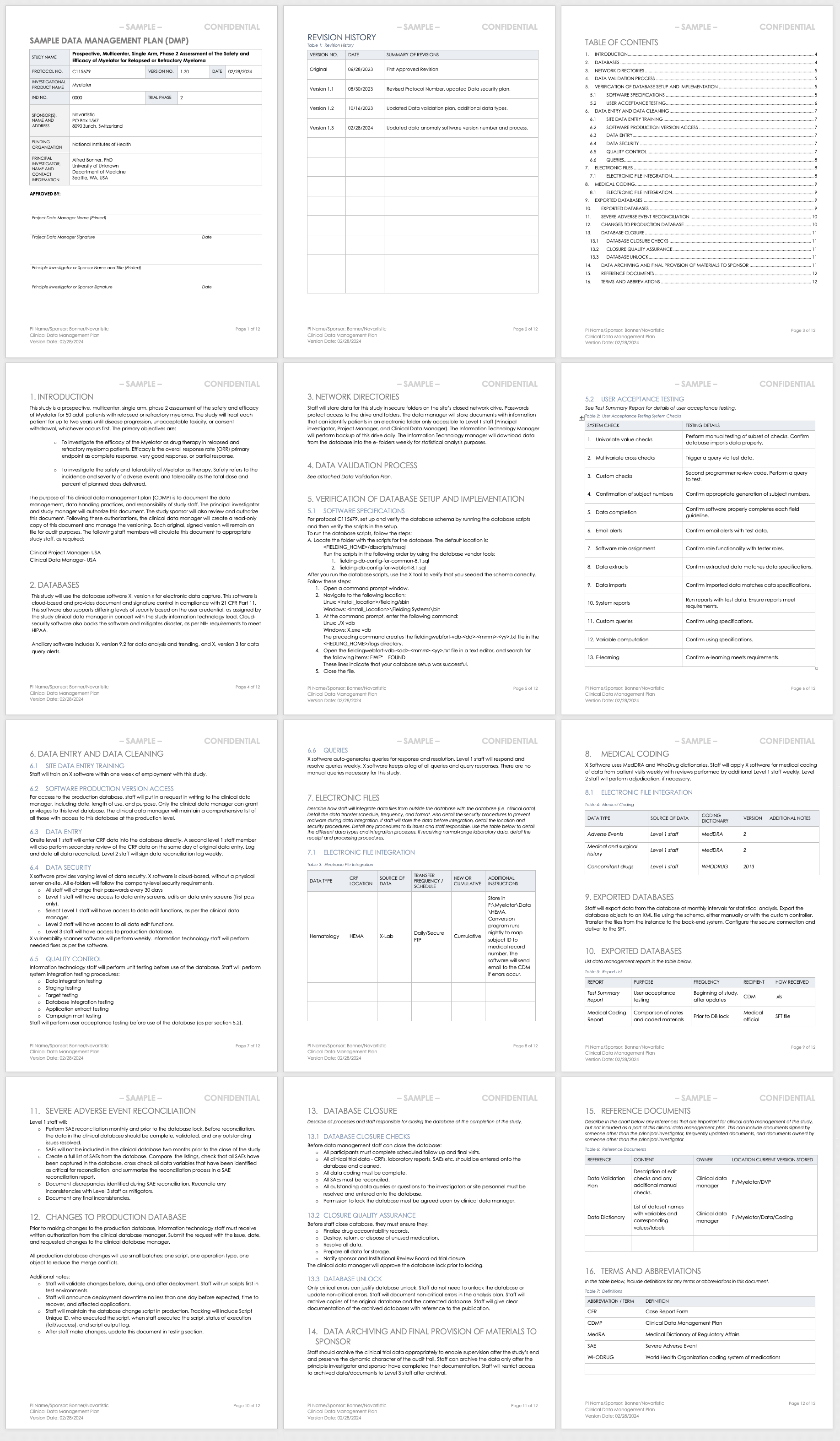

Data Management Plan Template for Clinical Trials

This data management plan template provides the required contents of a standard clinical trial data management plan, with space and instructions to input elements such as the data validation process, the verification of database setup and implementation processes, and the data archival process.

Sample Data Management Plan for Clinical Trials

This sample data management plan describes a fictitious prospective, multicenter, single-arm study and its data management needs. Over the two-year study, the data manager should regularly update this plan to reflect the study’s evolving needs and document each change and update. Examples of sections include the databases used, how data will be entered and cleaned, and how staff will integrate the different datasets collected in the study.

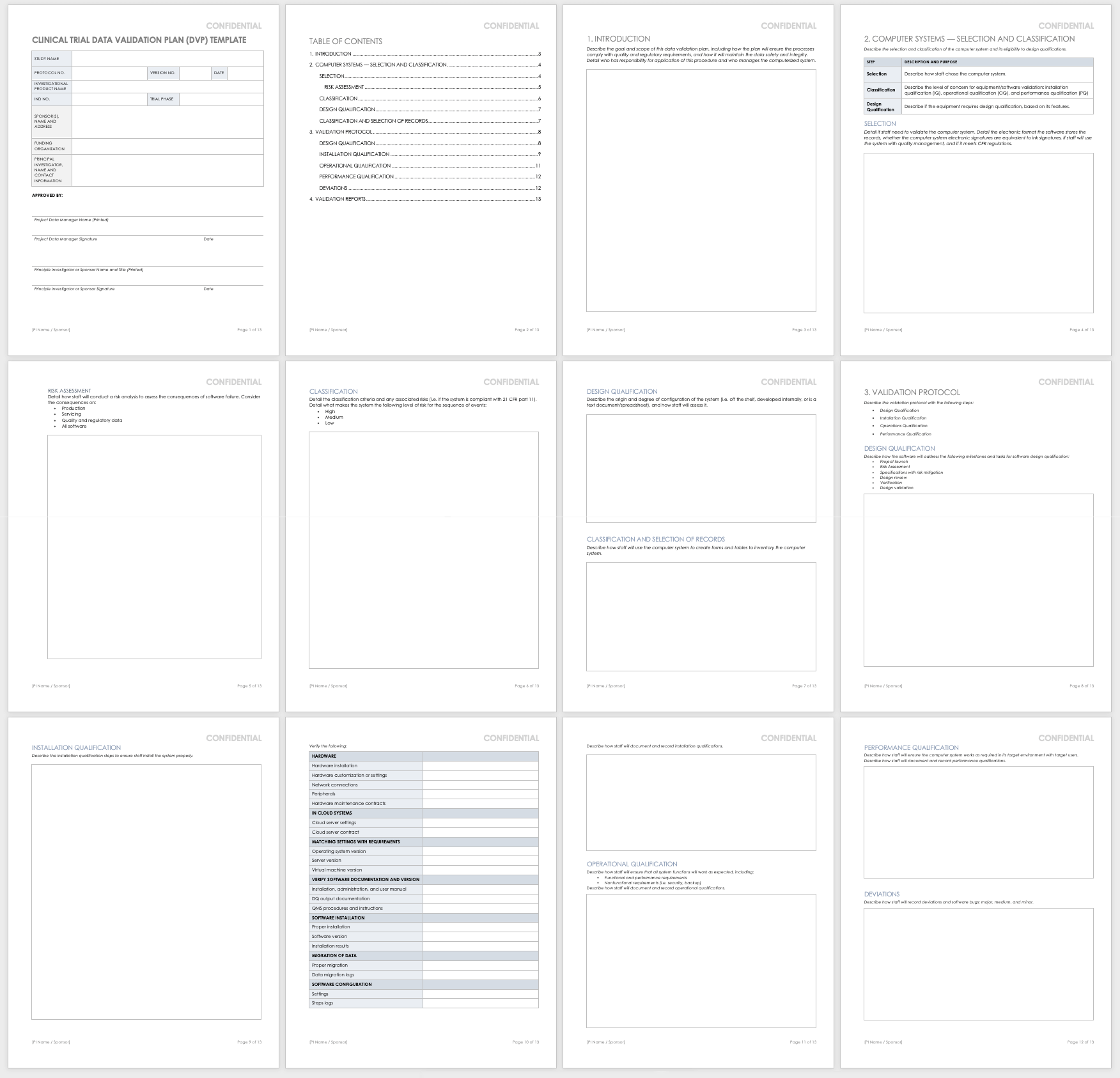

Clinical Trial Data Validation Plan

Data validation involves resolving database queries and inconsistencies by checking the data for accuracy, quality, and completeness. A data validation plan in clinical trials has all the variable calculations and checks that data managers use to identify any discrepancies in the dataset.

When the data are final, the database administrator locks the database to ensure no further changes are made, as changes could compromise the integrity of the data. During reporting and analysis, experts may copy the data and reformat it into tables, lists, and graphs. Once the analysts complete their work, they report the results. When they have significant findings, they may create additional tables, lists, and graphs to present as part of the results. They then integrate these results into higher-level findings documentation, such as investigator’s brochures or clinical study reports (CSRs). Finally, the data manager archives the database.
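The effect of locking can be sketched with a small in-memory store, assumed here purely for illustration (real systems enforce locks in the database software). After `lock()`, any write raises an error, while analysts still receive a deep copy they can reformat freely without touching the locked data.

```python
import copy


class StudyDatabase:
    """Hypothetical minimal in-memory store that refuses changes once it has been locked."""

    def __init__(self):
        self._records = {}
        self.locked = False

    def upsert(self, record_id, **values):
        """Create or update a record; refused after the database is locked."""
        if self.locked:
            raise RuntimeError("database is locked; no further changes are allowed")
        self._records.setdefault(record_id, {}).update(values)

    def lock(self):
        self.locked = True

    def export_copy(self):
        """Give analysts a deep copy so reformatting for tables or graphs cannot alter locked data."""
        return copy.deepcopy(self._records)


db = StudyDatabase()
db.upsert("S001", weight_g=3400)
db.lock()
```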

The above steps are important because they preserve the integrity of the data in the database. However, managers do not need to perform them in a strict order. Some studies may need more frequent data validation because of the high volume of data they produce, while other studies may produce intermediate analyses and reports as part of their predetermined requirements. Finally, because of the complexity of some studies, the data manager or analyst may need to query the data, which means running a data request in a database and determining cursory results so that they may adjust the protocol.

Use this template to develop your own data validation plan. This Word template includes space and instructions for you to develop a data validation plan that you can include in your data management plan or use as a stand-alone document. Examples of sections include selecting and classifying the computer systems, validation protocol, and validation reporting.


Data Management Workflow

A data management workflow is the process a clinical research team uses to handle its data, from the design of data collection to electronic archiving and the presentation of findings. It covers data entry, batch validation, discrepancy management, coding, reconciliation, and quality control plans.

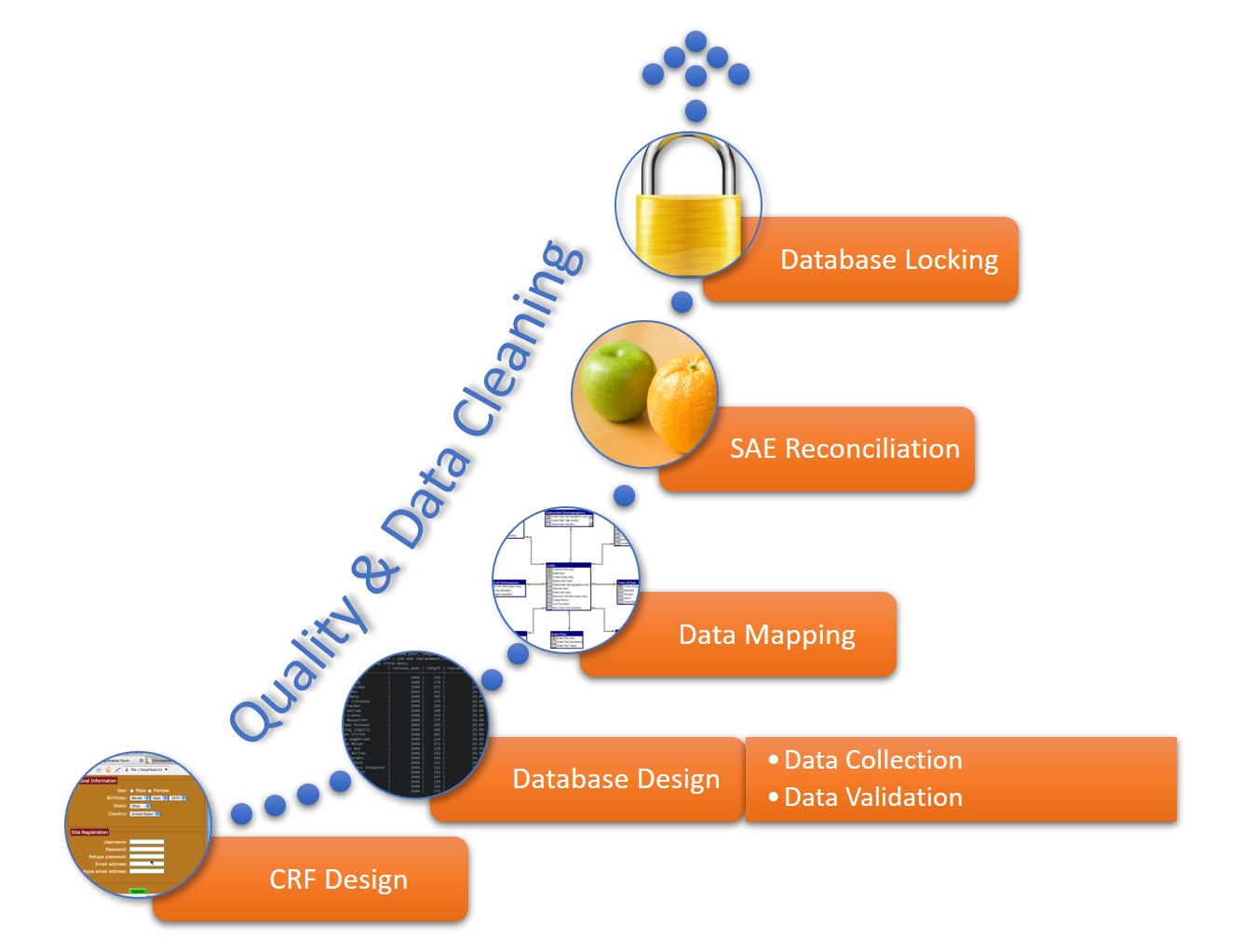

This workflow starts when researchers generate a CRF, whether manually or electronically, and continues through the final lock on the database. The data manager should perform quality checks and data cleaning throughout the workflow. The workflow steps for a data manager are as follows:

- CRF Design: This initial design step forms the basis of initial data collection.

- Database Design: The database should include space for all data collected in the study.

- Data Mapping: This step integrates data from different forms or formats so researchers can consistently report it.

- SAE Reconciliation: Data managers should regularly compare serious adverse events (SAEs), and potential SAEs, recorded in the clinical database against the safety database and resolve any discrepancies.

- Database Locking: Once a study is complete, the database manager should lock the database so that no one can change the data.
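SAE reconciliation typically means comparing the serious adverse events recorded in the clinical database with those in a separate safety (pharmacovigilance) database. The Python sketch below assumes both systems share a subject ID and an event ID and compares a few hypothetical fields; in practice, events often have to be matched on term and onset date instead, which is harder.

```python
FIELDS = ("term", "onset_date", "outcome")  # hypothetical fields to compare

def reconcile_saes(clinical: list[dict], safety: list[dict]):
    """Return (only_in_clinical, only_in_safety, field_mismatches)."""
    clin = {(r["subject_id"], r["event_id"]): r for r in clinical}
    safe = {(r["subject_id"], r["event_id"]): r for r in safety}
    only_clinical = sorted(clin.keys() - safe.keys())
    only_safety = sorted(safe.keys() - clin.keys())
    mismatches = []
    for key in sorted(clin.keys() & safe.keys()):
        for field in FIELDS:
            a, b = clin[key].get(field), safe[key].get(field)
            if a != b:
                mismatches.append((key, field, a, b))
    return only_clinical, only_safety, mismatches

clinical = [
    {"subject_id": "001", "event_id": "E1", "term": "Pneumonia", "onset_date": "2023-03-02", "outcome": "Recovered"},
    {"subject_id": "002", "event_id": "E1", "term": "Syncope", "onset_date": "2023-04-10", "outcome": "Recovered"},
]
safety = [
    {"subject_id": "001", "event_id": "E1", "term": "Pneumonia", "onset_date": "2023-03-03", "outcome": "Recovered"},
    {"subject_id": "003", "event_id": "E1", "term": "Fall", "onset_date": "2023-05-01", "outcome": "Recovering"},
]
print(reconcile_saes(clinical, safety))
```

Each of the three outputs maps to a different follow-up: events missing from the safety database, events missing from the clinical database, and field-level disagreements to resolve with the site.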

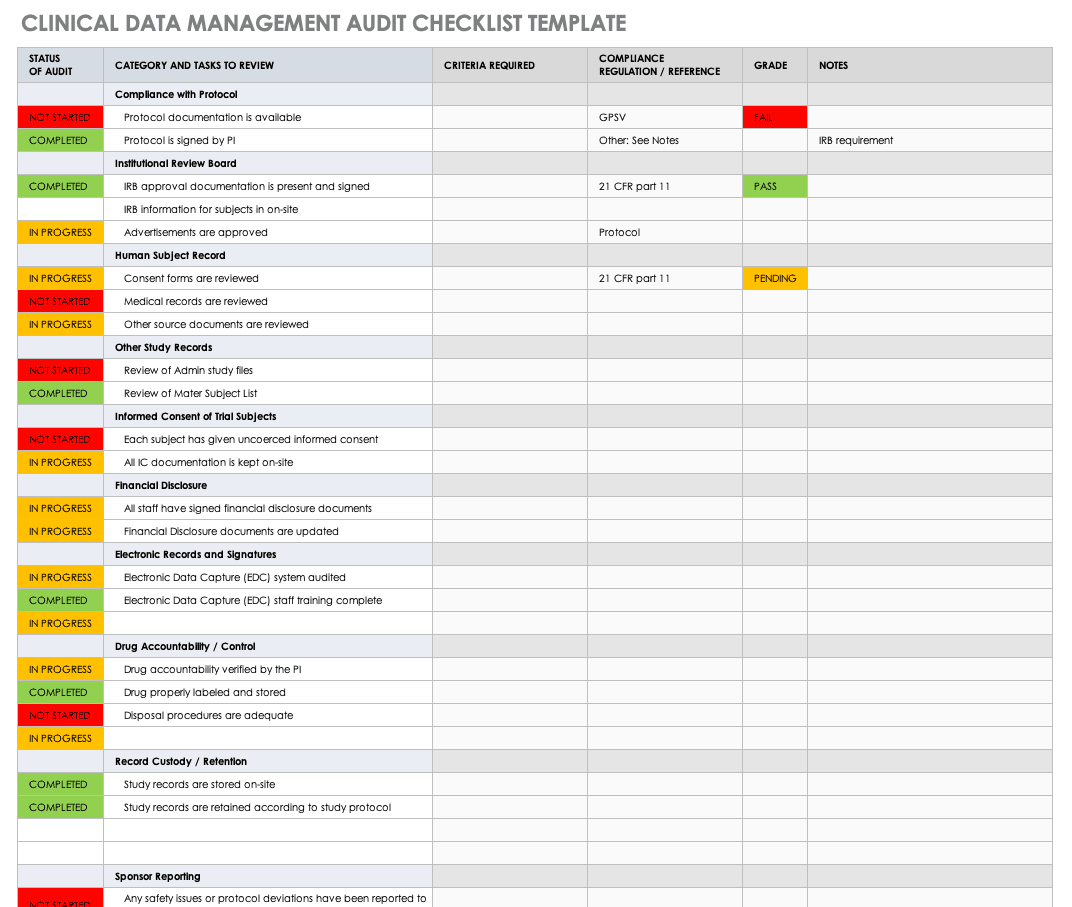

Clinical Trial Data Audits

A clinical trial data audit is a review of the information collected to ensure its quality, accuracy, and appropriateness for the research requirements stated in the study protocol. Regulatory authorities, sponsors, and internal study staff can conduct two kinds of audit: overall and database-specific.

Regulators use database audits to ensure that no one has tampered with the data. In general, there must be an audit trail that records which user changed what in the database, and when. For example, auditors will look at record creation, modification, and deletion, noting the usernames, dates, and times. FDA 21 CFR Part 11 includes this as part of fraud detection and requires a complete history of the recordkeeping system and transparency of clinical trial data.
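An audit trail of this kind can be sketched as an append-only log written on every create, modify, or delete. The Python sketch below records the user, a UTC timestamp, the action, and the old and new values; the class names and usernames are hypothetical. It illustrates the idea only and is not a validated Part 11 system, which also needs access controls, secure storage, and electronic signatures.

```python
from dataclasses import dataclass
from datetime import datetime, timezone
from typing import Optional

@dataclass(frozen=True)
class AuditEntry:
    """One immutable audit-trail entry (field names are hypothetical)."""
    timestamp: datetime
    user: str
    action: str  # "create", "modify", or "delete"
    record_id: str
    old_value: Optional[dict]
    new_value: Optional[dict]

class AuditedStore:
    """Record store whose every change appends to an audit trail."""

    def __init__(self) -> None:
        self._data: dict[str, dict] = {}
        self._trail: list[AuditEntry] = []

    def _log(self, user, action, record_id, old, new) -> None:
        # Entries are only ever appended; old values are kept, not overwritten.
        self._trail.append(
            AuditEntry(datetime.now(timezone.utc), user, action, record_id, old, new)
        )

    def create(self, user: str, record_id: str, values: dict) -> None:
        if record_id in self._data:
            raise KeyError(f"{record_id} already exists")
        self._data[record_id] = dict(values)
        self._log(user, "create", record_id, None, dict(values))

    def modify(self, user: str, record_id: str, field: str, value) -> None:
        old = self._data[record_id].get(field)
        self._data[record_id][field] = value
        self._log(user, "modify", record_id, {field: old}, {field: value})

    def delete(self, user: str, record_id: str) -> None:
        old = self._data.pop(record_id)
        self._log(user, "delete", record_id, old, None)

    def trail(self) -> tuple[AuditEntry, ...]:
        return tuple(self._trail)  # read-only view for auditors

store = AuditedStore()
store.create("jdoe", "001", {"sbp": 120})
store.modify("asmith", "001", "sbp", 125)
store.delete("jdoe", "001")
for e in store.trail():
    print(e.timestamp.isoformat(), e.user, e.action, e.record_id, e.old_value, e.new_value)
```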

The data manager develops templates for auditing the study during the study development phase and performs internal audits as part of the study’s quality management.

This free clinical trial data management audit checklist template will help you develop your own checklist. This Excel template lets you show the status of your audit in an easy color-coded display, the category and tasks to review, and what criteria you require. It brings all your audit requirements and results together.


Quality Management in Clinical Trials

Data quality management (DQM) refers to the practices that ensure clinical information is of high quality. In a clinical trial, DQM starts when staff first acquire the information and continues until the findings are distributed. DQM is critical to producing accurate outcomes.

The factors that influence the quality of clinical data include how well the study investigators develop and implement each of the following:

- Case Report Forms (CRF): Design the CRF in parallel with the protocol so that the data collected by staff is complete, accurate, and consistent.

- Data Conventions: Data conventions govern how dates, times, and acronyms are recorded. Data managers should set these conventions during study development, especially if there are multiple study locations and investigators.

- Guidelines for Monitoring: The overall data quality is contingent on the quality of the monitoring guidelines established.

- Missing Data: Missing data are values that are not available and whose absence could change the analysis findings. During study development, investigators and analysts should determine how they will handle missing data.

- Verification of Source Data: Staff must verify that the source data is complete and accurate during data validation.
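Two items in this list lend themselves to a small example: a shared date convention and a pre-specified way of counting missing values. The Python sketch below assumes each site declares its own date format and that "UNK" and "ND" are the study's codes for unknown and not done; these codes and site names are hypothetical. It shows why conventions matter across sites: the digits 03, 04, and 2023 describe different days depending on which site entered them.

```python
from datetime import datetime
from typing import Optional

# Hypothetical conventions agreed during study development.
SITE_DATE_FORMATS = {"site_us": "%m/%d/%Y", "site_eu": "%d.%m.%Y"}
MISSING_CODES = {"", "UNK", "ND"}  # e.g. unknown, not done

def normalize_date(site: str, raw: Optional[str]) -> Optional[str]:
    """Convert a site-formatted date to ISO 8601; return None if coded as missing."""
    if raw is None or raw.strip().upper() in MISSING_CODES:
        return None
    return datetime.strptime(raw.strip(), SITE_DATE_FORMATS[site]).date().isoformat()

def missing_report(records: list[dict], variables: list[str]) -> dict[str, int]:
    """Count missing (None) values per variable across records."""
    return {v: sum(1 for r in records if r.get(v) is None) for v in variables}

print(normalize_date("site_us", "03/04/2023"))  # 2023-03-04
print(normalize_date("site_eu", "03.04.2023"))  # 2023-04-03
print(normalize_date("site_us", "UNK"))         # None
```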

Regulations, Guidelines, and Standards in Clinical Data Management

Different regulations, guidelines, and standards govern the clinical data management industry. Clinical studies are held accountable to clinical trial data standards, international regulations, institutional and sponsor standard operating procedures (SOPs), and state laws. The Clinical Data Interchange Standards Consortium (CDISC) is a global organization that develops clinical trial data standards.

Standard operating procedures and best practices in clinical trial data management are widespread. Two widely used CDISC standards are the Study Data Tabulation Model Implementation Guide for Human Clinical Trials (SDTMIG), mandated by the U.S. Food and Drug Administration (FDA), and the Clinical Data Acquisition Standards Harmonization (CDASH). Also in the industry, the Society for Clinical Data Management (SCDM) releases the Good Clinical Data Management Practices (GCDMP) guidelines and administers the Certified Clinical Data Manager (CCDM) credential; its continuing education is accredited by the International Association for Continuing Education and Training (IACET). The National Accreditation Board for Hospitals & Healthcare Providers (NABH) provides additional guidance, such as pharmaceutical study auditing checklists. Finally, Good Clinical Practice (GCP) guidelines address ethical and quality standards in clinical research.
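Mapping collected data to a CDISC structure is a common data management task. The Python sketch below converts hypothetical wide CRF vital-sign rows into long records shaped like the SDTM Vital Signs (VS) domain, using a small subset of its variables (STUDYID, DOMAIN, USUBJID, VSSEQ, VSTESTCD, VSTEST, VSORRES, VSORRESU, VSDTC). The CRF field names, the USUBJID pattern, and the study ID are assumptions; a submission-ready dataset needs the full SDTMIG variable set and CDISC controlled terminology.

```python
# Hypothetical CRF field -> (SDTM test code, test name, original units).
VS_TESTS = {
    "sbp": ("SYSBP", "Systolic Blood Pressure", "mmHg"),
    "dbp": ("DIABP", "Diastolic Blood Pressure", "mmHg"),
    "pulse": ("PULSE", "Pulse Rate", "beats/min"),
}

def to_sdtm_vs(studyid: str, crf_rows: list[dict]) -> list[dict]:
    """Reshape wide vital-sign CRF rows into simplified SDTM VS-style records."""
    out: list[dict] = []
    seq: dict[str, int] = {}  # VSSEQ counter per subject
    for row in crf_rows:
        usubjid = f"{studyid}-{row['site']}-{row['subject']}"  # assumed pattern
        for field, (testcd, test, unit) in VS_TESTS.items():
            if row.get(field) is None:
                continue  # nothing collected, so no VS record
            seq[usubjid] = seq.get(usubjid, 0) + 1
            out.append({
                "STUDYID": studyid, "DOMAIN": "VS", "USUBJID": usubjid,
                "VSSEQ": seq[usubjid], "VSTESTCD": testcd, "VSTEST": test,
                "VSORRES": str(row[field]), "VSORRESU": unit, "VSDTC": row["date"],
            })
    return out

rows = [{"site": "01", "subject": "001", "date": "2023-03-04", "sbp": 120, "dbp": 80, "pulse": None}]
for rec in to_sdtm_vs("ABC123", rows):
    print(rec["USUBJID"], rec["VSSEQ"], rec["VSTESTCD"], rec["VSORRES"], rec["VSORRESU"])
```

Collecting data on CDASH-aligned forms from the start is intended to make this kind of reshaping more mechanical.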

Conducting a trial under the appropriate standards helps ensure that staff follow the protocol and treat patients according to it. Ultimately, this demonstrates the integrity and reproducibility of the study and supports its acceptance in the industry.

Case Report Forms in Data Management

In data management, CRFs are the main tool researchers use to collect information from their participants. Researchers design CRFs based on the study protocol and use them to document all patient information the protocol requires for the duration of the study.

CRFs should comply with all regulatory requirements and enable efficient analysis to decrease the need for data mapping during any data exchange. When longer than one page, the CRF is known as a CRF book, and each visit adds to the book. The main parts of a CRF are the header, the efficacy-related modules, and the safety-related modules:

- CRF Header: This portion includes the patient identification information and study information, such as the study number, site number, and subject identification number.

- Efficacy-Related Modules: This portion includes the baseline measurements, any diagnostics, the main efficacy endpoints of the trial, and any additional efficacy testing.

- Safety-Related Modules: This portion contains the patient’s demographic information, any adverse events, medical history, physical examination, medications, confirmation of eligibility, and any reasons for withdrawal from the study.
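The CRF layout described above maps naturally onto a small data model. The Python sketch below uses dataclasses to represent a CRF book as a header plus one page per visit, each page holding efficacy and safety modules. Only a few illustrative fields are included, and all class and field names are hypothetical rather than drawn from any CRF standard.

```python
from dataclasses import dataclass, field
from typing import Optional

@dataclass
class CRFHeader:
    study_number: str
    site_number: str
    subject_id: str

@dataclass
class EfficacyModule:
    baseline: dict[str, float] = field(default_factory=dict)
    primary_endpoint: Optional[float] = None

@dataclass
class AdverseEvent:
    term: str
    serious: bool

@dataclass
class SafetyModule:
    adverse_events: list[AdverseEvent] = field(default_factory=list)
    medications: list[str] = field(default_factory=list)
    eligibility_confirmed: bool = False
    withdrawal_reason: Optional[str] = None

@dataclass
class CRFPage:
    header: CRFHeader
    visit: str
    efficacy: EfficacyModule
    safety: SafetyModule

@dataclass
class CRFBook:
    """All CRF pages for one subject; each visit adds a page to the book."""
    header: CRFHeader
    pages: list[CRFPage] = field(default_factory=list)

    def add_visit(self, visit: str, efficacy: EfficacyModule, safety: SafetyModule) -> CRFPage:
        page = CRFPage(self.header, visit, efficacy, safety)  # header repeats on each page
        self.pages.append(page)
        return page

book = CRFBook(CRFHeader("ABC123", "01", "001"))
book.add_visit("Baseline", EfficacyModule(baseline={"sbp": 140.0}),
               SafetyModule(eligibility_confirmed=True))
book.add_visit("Week 4", EfficacyModule(primary_endpoint=-12.0),
               SafetyModule(adverse_events=[AdverseEvent("Headache", serious=False)]))
print(len(book.pages), book.pages[1].safety.adverse_events[0].term)
```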

What Is the Role of a Clinical Data Manager?

In a clinical trial, the data manager is the person who ensures that the research staff collect, manage, and prepare the resulting information accurately, comprehensively, and securely. This is a key role in clinical research, because the data manager is involved in study setup, conduct, and closeout, as well as some analysis and reporting.

Melissa Peda, Clinical Data Manager at Fred Hutch Cancer Research Center, says, “Being a clinical data manager, you have to be very detail-oriented. We write up very specific instructions for staff. For example, the specifications to a program’s database include one document that could easily have 1,000 rows in Excel, and it needs to be perfect for queries to fire in real time. Code mistakes can put your project behind, so they must do their review with a close eye. You must also be logical and think through the project setup. A good clinical data manager must be detailed, so the programmers and other staff can do their thing.”

Krishnankutty et al. developed an overview of best practices for data management in clinical research. In their article, published in the Indian Journal of Pharmacology, they say that the need for strong clinical data management has arisen from the pharmaceutical industry’s drive to fast-track drug development with high-quality data, regardless of data type. Clinical data managers can expect to work with many different types of clinical data; the most common include the following:

- Administrative data

- Case report forms (CRFs)

- Electronic health records

- Laboratory data

- Patient diaries

- Patient or disease registries

- Safety data

Clinical data managers often must oversee the analysis of the data as well. Data analysis in clinical trial data management is demanding: it requires a solid dataset and an analyst who can explain the findings. Regulatory agencies, along with other companies and professionals, check the findings and analysis, so they need to be accurate and understandable.

Education and Credentials of a Clinical Data Manager

Professionals in clinical data management receive training in clinical trial data management and often hold the Certified Clinical Data Manager (CCDM) credential. Studies executed by a competent CDM team with validated skill sets and continued professional development are better positioned to achieve optimal outcomes.

To become certified, the applicant must have the appropriate education and experience, meeting one of the following requirements:

- A bachelor’s degree and two or more years of full-time data management experience.

- An associate’s degree and three or more years of full-time data management experience.

- Four years of full-time data management experience.

- Part-time data management experience that adds up to the requirements above.
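The rule that part-time experience "adds up" can be made concrete by converting each job to full-time-equivalent (FTE) years before comparing against the thresholds listed above. The Python sketch below assumes, for illustration only, that a part-time job counts in proportion to its fraction of full time; SCDM's actual rules for crediting part-time work should be checked against its current eligibility requirements.

```python
# Minimum FTE years of data management experience by highest degree, following
# the list above. Degrees not in the list are not handled by this sketch.
REQUIRED_FTE_YEARS = {"bachelor": 2.0, "associate": 3.0, "none": 4.0}

def fte_years(jobs: list[tuple[float, float]]) -> float:
    """Sum (years, fraction_of_full_time) pairs into FTE years (assumed rule)."""
    return sum(years * fraction for years, fraction in jobs)

def meets_ccdm_experience(degree: str, jobs: list[tuple[float, float]]) -> bool:
    """True if the FTE experience meets the threshold for the given degree."""
    return fte_years(jobs) >= REQUIRED_FTE_YEARS[degree]

# Four years at half time plus one year full time = 3.0 FTE years.
print(meets_ccdm_experience("associate", [(4, 0.5), (1, 1.0)]))  # True
print(meets_ccdm_experience("none", [(3, 1.0)]))                 # False
```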

Raleigh Edelstein, a clinical data manager and EDC programmer, discusses credentialing in this field. “Anyone can excel in this profession,” she says. “A CRA — a clinical research associate, also called a clinical monitor or a trial monitor — may need this credential more, as their profession is more competitive, and their experience is more necessary in trials. But if the credential makes you more confident, then I say go for it. Your experience and confidence matter.”

There are several degrees with an emphasis on clinical research that can also teach the necessary technical skills. In addition to many online options, these include the following, or a combination of the following:

- Associate of Science in biology, mathematics, or pharmacy.

- Bachelor of Science in one of the sciences.

- Post-Master's certificate in clinical data management, or a certificate related to medical device and drug development.

- Master of Science in clinical research, biotechnology, or bioinformatics.

- Doctor of Nursing Practice.

- Doctor of Philosophy in any clinical research area.

These degree programs include concepts that help data managers understand what clinical studies need. They especially focus on survey design and data collection, but also include the following:

- Biostatistics

- Clinical research management and safety surveillance

- Compliance, ethics, and law

- New product business and strategic planning

- New product research and development

These degree programs offer coursework that builds the relevant clinical research skills. Many of the courses introduce clinical research, clinical trials, and pharmacology; others include the following:

- Business processes

- Clinical outsourcing

- Clinical research biostatistics

- Clinical trial design

- Compliance and monitoring

- Data collection strategies

- Data management

- Electronic data capture

- Epidemiology

- Ethics in research

- Federal regulatory issues

- Health policy and economics

- Human research protection

- Medical devices and product regulation

- Patient recruitment and informed consent

- Pharmaceutical law

- Review boards

- Worldwide regulations for submission

Clinical data managers can get involved with several professional organizations worldwide, including the following:

- The Association for Clinical Data Management (ACDM): This global professional organization supports the industry by providing additional resources and promoting best practices.

- The Association Française de Data Management Biomédicale (DMB): This French data management organization shares information and practices among professionals.

- International Network of Clinical Data Management Associations (INCDMA): Based in the United Kingdom, this professional network exchanges information and discusses relevant professional issues.

- The Society for Clinical Data Management (SCDM): This global organization awards the CCDM credential to professionals, provides additional education, and facilitates conferences in clinical data management.

FAQs about Clinical Trial Managers

The field of clinical management is expanding quickly, in many forms, to support the need for new research. Below are some frequently asked questions.

How do I become a clinical trial manager?

To become a clinical trial manager, you must obtain the appropriate education, experience, and credentialing, as detailed above.

What is better: a Master’s in Health Administration or a Master’s in Health Sciences?

To work as a clinical data manager, either degree program is appropriate. Your choice depends on your interest.

What can you do with a degree in biotechnology or bioenterprise?

Biotechnology develops the technology that aids biological research, and bioenterprise takes the products of biotechnology to market and sells them.

What is a clinical application analyst?

A clinical application analyst is a professional who helps clinics evaluate software systems and vendors.

What is a clinical data analyst?

A clinical data analyst is a professional who analyzes data from clinical trials, and develops and maintains databases.

Contract Research Organizations for Data Management Services

Contract research organizations (CROs) are companies that provide outsourced research services to industries such as pharmaceuticals, biotechnology, and research and development. Because CROs are designed to keep costs low, studies can hire them to perform everything from overall project management and data management to technical jobs.

Studies can hire CROs that specialize as clinical trial data management companies so they don’t have to worry about having all the necessary skills in-house. According to Melissa Peda, “A consultant may have the expertise that someone already working in the organization may not have, so they make sense to bring in.” Further, an outside contractor can bring impartiality to the project.

According to Raleigh Edelstein, “A third-party person in charge of data management may be necessary because you don’t have to worry about the lack of company loyalty that the data may need.”

CROs can offer skills such as the following:

- Annotation and review

- Coding and validation

- Database export, transfer, and locking

- Database integration

- Database setup and validation

- Double data entry and third-party review of discrepancies

- Form design

- Planning, such as project management and data management plans

- Quality assessments and auditing

- Software implementation and training

- SAE reconciliation
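Double data entry, one of the services listed above, has two people key the same source documents independently so that a comparison can flag transcription errors. The Python sketch below assumes each pass is stored as a dictionary keyed by hypothetical (subject ID, field) pairs; every disagreement, including a value present in only one pass, is listed for a third-party reviewer to adjudicate against the source.

```python
from typing import Optional

Key = tuple[str, str]  # (subject_id, field); hypothetical keying scheme

def compare_double_entry(
    first: dict[Key, str], second: dict[Key, str]
) -> list[tuple[Key, Optional[str], Optional[str]]]:
    """List every key where the two independent entry passes disagree."""
    discrepancies = []
    for key in sorted(first.keys() | second.keys()):
        a, b = first.get(key), second.get(key)
        if a != b:
            discrepancies.append((key, a, b))
    return discrepancies

first_pass = {("001", "sbp"): "120", ("001", "dbp"): "80", ("002", "sbp"): "135"}
second_pass = {("001", "sbp"): "120", ("001", "dbp"): "08",
               ("002", "sbp"): "135", ("002", "dbp"): "85"}
for key, a, b in compare_double_entry(first_pass, second_pass):
    print(key, a, b)
```

Comparing values as strings preserves exactly what each person typed, so a transposition such as 80 versus 08 is caught rather than normalized away.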

Related Topics in Clinical Data Management

The following are related topics to clinical data management:

- Application Analyst: This position deals with the software side of clinical trials. Examples of their work include choosing software, designing databases, and writing queries.

- Clinical Data Analyst: A professional who examines and verifies that clinical study data is appropriate and means what it is supposed to mean.

- Clinical Research Academic Programs: Entry-level professional positions in clinical trials often require a minimum of a bachelor’s degree.

- Clinical Research Associate: This clinical trial staff member, also called a clinical monitor, oversees how clinical studies are conducted at study sites.

- Laboratory Informatics: The field of data and computational systems specialized for laboratory work.

- Laboratory Information Management System (LIMS): LIMS enables collection and analysis of data from laboratory work. LIMS is specialized to work in different environments, such as manufacturing and pharmaceuticals.

- Scientific Management: This management theory studies workflows, applying science to process engineering and management.


Best Practices for Research Data Management

- First Online: 15 June 2023


- Anita Walden,

- Maryam Garza &

- Luke Rasmussen

Part of the book series: Health Informatics (HI)


Data is one of the most valuable assets for answering vital questions, so careful planning is needed to ensure quality while maximizing resources and controlling costs. Mature research and clinical trial organizations create and use data management plans to guide their processes from data generation to archiving. Today, clinical research activities are more complex due to a myriad of data sources, the use of new technologies, linkages between health care, patient-reported, and research data environments, and the availability of digital tools. It is important to invest in training and hiring skilled data managers and informaticists to manage this rapidly changing landscape and to integrate variable and large-scale data. For everyone in the research enterprise, being aware of and implementing end-to-end data management best practices early in the research design phase can have a positive impact on data analysis.


Krishnankutty B, Bellary S, Kumar NB, Moodahadu LS. Data management in clinical research: an overview. Indian J Pharmacol. 2012;44(2):168.

Society for Clinical Data Management. Good clinical data management practices (GCDMP) [Internet]. 2013 Oct [cited 2022 Jun 01]. https://scdm.org/wp-content/uploads/2019/05/Full-GCDMP-Oct-2013.pdf

Ulrich H, Kock-Schoppenhauer AK, Deppenwiese N, Gött R, Kern J, Lablans M, Majeed RW, Stöhr MR, Stausberg J, Varghese J, Dugas M. Understanding the nature of metadata: systematic review. J Med Internet Res. 2022;24(1):e25440.

GO FAIR. (Meta)data are associated with detailed provenance [Internet]. [cited 2022 Jun 01]. https://www.go-fair.org/fair-principles/r1-2-metadata-associated-detailed-provenance/

U.S. Department of Health & Human Services (HHS). Human Research Protection Training [Internet]. [cited 2022 Jun 01]. https://www.hhs.gov/ohrp/education-and-outreach/online-education/human-research-protection-training/index.html

National Institutes of Health (NIH). Good Clinical Practice Training [Internet]. [cited 2022 Jun 01]. https://grants.nih.gov/policy/clinical-trials/good-clinical-training.htm

Zozus MN, Sanns W, Eisenstein E, Sanns B. Beyond EDC. J Soc Clin Data Manag. 2021;1(1):1–22.

Park YR, Yoon YJ, Koo H, Yoo S, Choi CM, Beck SH, Kim TW. Utilization of a clinical trial management system for the whole clinical trial process as an integrated database: system development. J Med Internet Res. 2018;20(4):e9312.

Michael W. Electronic Clinical Trial Management Systems: The Basics, Needs, and Outputs. SoCRA [Internet]. 2021 Jan 04 [cited 2022 Jun 01]. https://www.socra.org/blog/electronic-clinical-trial-management-systems-the-basics-needs-and-outputs/

United States Food and Drug Administration; Guidance for Industry Part 11, Electronic Records; Electronic Signatures. https://www.fda.gov/regulatory-information/search-fda-guidance-documents/part-11-electronic-records-electronic-signatures-scope-and-application

U.S. Department of Health & Human Services (HHS). Revised Common Rule Q&As [Internet]. [cited 2022 Jun 01]. https://www.hhs.gov/ohrp/education-and-outreach/revised-common-rule/revised-common-rule-q-and-a/index.html

U.S. Food & Drug Administration (FDA). Use of Electronic Informed Consent in Clinical Investigations – Questions and Answers [Internet]. [cited 2022 Jun 01]. https://www.fda.gov/regulatory-information/search-fda-guidance-documents/use-electronic-informed-consent-clinical-investigations-questions-and-answers

TransCelerate BioPharma. What is eConsent? [Internet]. [cited 2022 Jun 01]. https://www.transceleratebiopharmainc.com/assets/econsent-solutions/what-is-econsent/#implementation-guidance

Research Electronic Data Capture (REDCap). https://www.project-redcap.org

National Institutes of Health (NIH). Use Common Data Elements for More FAIR Research Data [Internet]. Waltham, MA: USA. [cited 2022 May 27]. https://cde.nlm.nih.gov/home

National Institutes of Health (NIH). BRIDG, ISO and DICOM [Internet]. [cited 2022 May 27]. https://bridgmodel.nci.nih.gov/bridg-iso-dicom

Von Rosing M, Von Scheel H, Scheer AW. The complete business process handbook: body of knowledge from process modeling to BPM, vol. 1. Morgan Kaufmann; 2014.

Gane C. Structured systems analysis: tools and techniques. Prentice-Hall, NJ: Englewood Cliffs; 1978.

UNICOM Systems. Ward and Mellor data flow diagrams [Internet]. [cited 27 May 2022]. https://support.unicomsi.com/manuals/systemarchitect/11482/starthelp.html#page/Architecting_and_designing%2FStructuredAnalysisDesign.18.010.html%23

Visual Paradigm. DFD Using Yourdon and DeMarco Notation [Internet]. [cited 2022 Jun 01]. https://online.visual-paradigm.com/knowledge/software-design/dfd-using-yourdon-and-demarco

Lucidchart. What is a data flow diagram? [Internet]. [cited 2022 Jun 01]. https://www.lucidchart.com/pages/data-flow-diagram#section_0

Lucidchart. What is a data flow diagram? [Internet]. [cited 2022 Jun 01]. https://www.lucidchart.com/blog/data-flow-diagram-tutorial#:~:text=Data%20flow%20diagrams%20visually%20represent,a%20new%20system%20for%20implementation

Bellary S, Krishnankutty B, Latha MS. Basics of case report form designing in clinical research. Perspect Clin Res. 2014;5(4):159.

Guideline IH. Guideline for good clinical practice E6 (R1). ICH Harmon Tripart Guidel. 1996;1996(4)

Califf RM, Karnash SL, Woodlief LH. Developing systems for cost-effective auditing of clinical trials. Control Clin Trials. 1997;18(6):651–60.

Coons SJ, Eremenco S, Lundy JJ, O’Donohoe P, O’Gorman H, Malizia W. Capturing patient-reported outcome (PRO) data electronically: the past, present, and promise of ePRO measurement in clinical trials. Patient. 2015;8(4):301–9.

Coons SJ, Gwaltney CJ, Hays RD, Lundy JJ, Sloan JA, Revicki DA, Lenderking WR, Cella D, Basch E. Recommendations on evidence needed to support measurement equivalence between electronic and paper-based patient-reported outcome (PRO) measures: ISPOR ePRO good research practices task force report. Value Health. 2009;12(4):419–29.

Denny JC. Chapter 13: mining electronic health records in the genomics era. PLoS Comput Biol. 2012;8(12):e1002823.

Robinson JR, Wei WQ, Roden DM, Denny JC. Defining phenotypes from clinical data to drive genomic research. Annu Rev Biomed Data Sci. 2018;1:69–92.

Westra BL, Christie B, Johnson SG, Pruinelli L, LaFlamme A, Sherman SG, Park JI, Delaney CW, Gao G, Speedie S. Modeling flowsheet data to support secondary use. Comput Inform Nurs. 2017;35(9):452.

Newton KM, Peissig PL, Kho AN, Bielinski SJ, Berg RL, Choudhary V, Basford M, Chute CG, Kullo IJ, Li R, Pacheco JA. Validation of electronic medical record-based phenotyping algorithms: results and lessons learned from the eMERGE network. J Am Med Inform Assoc. 2013;20(e1):e147–54.

Zozus MN, Young LW, Simon AE, Garza M, Lawrence L, Tounpraseuth S, Bledsoe M, Newman-Norlund S, Jarvis JD, McNally M, Harris KR. Training as an intervention to decrease medical record abstraction errors multicenter studies. Stud Health Technol Inform. 2019;257:526.

National Institutes of Health Pragmatic Trials Collaboratory. Electronic Health Records Based Phenotyping [Internet]. [cited 2022 Jun 01]. https://rethinkingclinicaltrials.org/chapters/conduct/electronic-health-records-based-phenotyping/electronic-health-records-based-phenotyping-introduction/

Wei WQ, Denny JC. Extracting research-quality phenotypes from electronic health records to support precision medicine. Genome Med. 2015;7(1):1–4.

Peissig PL, Rasmussen LV, Berg RL, Linneman JG, McCarty CA, Waudby C, Chen L, Denny JC, Wilke RA, Pathak J, Carrell D. Importance of multi-modal approaches to effectively identify cataract cases from electronic health records. J Am Med Inform Assoc. 2012;19(2):225–34.

Richesson RL, Smerek MM, Cameron CB. A framework to support the sharing and reuse of computable phenotype definitions across health care delivery and clinical research applications. eGEMs. 2016;4(3):1232.

Levinson RT, Malinowski JR, Bielinski SJ, Rasmussen LV, Wells QS, Roger VL, Wiley LK. Identifying heart failure from electronic health records: a systematic evidence review. medRxiv. 2021.

Kirby JC, Speltz P, Rasmussen LV, Basford M, Gottesman O, Peissig PL, Pacheco JA, Tromp G, Pathak J, Carrell DS, Ellis SB. PheKB: a catalog and workflow for creating electronic phenotype algorithms for transportability. J Am Med Inform Assoc. 2016;23(6):1046–52.

HDR UK Caliber Phenotype Library [Internet]. HDR UK Phenotype Library. [cited 2022 Aug 03]. https://portal.caliberresearch.org/

Rasmussen LV, Brandt PS, Jiang G, Kiefer RC, Pacheco JA, Adekkanattu P, Ancker JS, Wang F, Xu Z, Pathak J, Luo Y. Considerations for improving the portability of electronic health record-based phenotype algorithms. In AMIA Annual Symposium Proceedings 2019 (Vol. 2019, p. 755). American Medical Informatics Association.

Mo H, Thompson WK, Rasmussen LV, Pacheco JA, Jiang G, Kiefer R, et al. Desiderata for computable representations of electronic health records-driven phenotype algorithms. J Am Med Inform Assoc. 2015;22(6):1220–30.

Richesson R, Wiley LK, Gold S, Rasmussen L. Electronic Health Records-Based Phenotyping: Introduction. NIH Health Care Systems Research Collaboratory Electronic Health Records Core Working Group. Electronic health records based phenotyping in next-generation clinical trials: a perspective from the NIH Health Care Systems Collaboratory. J Am Med Inform Assoc. 2021;20:e226–31. https://doi.org/10.1136/amiajnl-2013-001926

Richesson RL, Rusincovitch SA, Wixted D, Batch BC, Feinglos MN, Miranda ML, Hammond W, Califf RM, Spratt SE. A comparison of phenotype definitions for diabetes mellitus. J Am Med Inform Assoc. 2013;20(e2):e319–26.

Haendel MA, Chute CG, Bennett TD, Eichmann DA, Guinney J, Kibbe WA, Payne PR, Pfaff ER, Robinson PN, Saltz JH, Spratt H. The national COVID cohort collaborative (N3C): rationale, design, infrastructure, and deployment. J Am Med Inform Assoc. 2021;28(3):427–43.

U.S. Department of Health & Human Services (HHS). Summary of the HIPAA Privacy Rule [Internet]. [cited 2022 Jun 01]. https://www.hhs.gov/hipaa/for-professionals/privacy/laws-regulations/index.html

Hemming K, Kearney A, Gamble C, Li T, Jüni P, Chan AW, Sydes MR. Prospective reporting of statistical analysis plans for randomised controlled trials. Trials. 2020;21(1):1–2.

Yuan I, Topjian AA, Kurth CD, Kirschen MP, Ward CG, Zhang B, Mensinger JL. Guide to the statistical analysis plan. Pediatr Anesth. 2019;29(3):237–42.

Services H. National Institutes of Health. Clinical trials registration and results information submission. Final rule. Fed Regist. 2016;81(183):64981–5157.

Guideline IH. Statistical Principles for Clinical Trials. Geneva In International Conference on Harmonisation of Technical Requirements for Registration of Pharmaceuticals for Human Use 1998.

Chan AW, Tetzlaff JM, Gøtzsche PC, Altman DG, Mann H, Berlin JA, Dickersin K, Hróbjartsson A, Schulz KF, Parulekar WR, Krleža-Jerić K. SPIRIT 2013 explanation and elaboration: guidance for protocols of clinical trials. BMJ. 2013:346.

Gamble C, Krishan A, Stocken D, Lewis S, Juszczak E, Doré C, Williamson PR, Altman DG, Montgomery A, Lim P, Berlin J. Guidelines for the content of statistical analysis plans in clinical trials. JAMA. 2017;318(23):2337–43.

Weiskopf NG, Weng C. Methods and dimensions of electronic health record data quality assessment: enabling reuse for clinical research. J Am Med Inform Assoc. 2013;20(1):144–51.

European Medicines Agency. ICH Topic E 6 (R1). Guideline for Good Clinical Practice. Note for Guidance on Good Clinical Practice.

European Medicines Agency. ICH Topic E 6 (R2). Guideline for Good Clinical Practice.

Hines S. Targeting source document verification. Appl Clin Trials. 2011;20(2):38.

Dykes B. Reporting vs. analysis: What’s the difference. Digital Marketing Blog. 2010.

Walden A, Nahm M, Barnett ME, Conde JG, Dent A, Fadiel A, Perry T, Tolk C, Tcheng JE, Eisenstein EL. Economic analysis of centralized vs. decentralized electronic data capture in multi-center clinical studies. Stud Health Technol Inform. 2011;164:82.

Author information

Authors and affiliations.

University of Colorado Denver—Anschutz Medical Campus, Denver, CO, USA

Anita Walden

University of Arkansas for Medical Sciences, Little Rock, AR, USA

Maryam Garza

Northwestern University Feinberg School of Medicine, Chicago, IL, USA

Luke Rasmussen


Corresponding author

Correspondence to Anita Walden.

Editor information

Editors and affiliations.

Learning Health Sciences, University of Michigan School of Medicine, Ann Arbor, MI, USA

Rachel L. Richesson

School of Information, University of South Florida, Tampa, FL, USA

James E. Andrews

Medical Informatics and Clinical Epidemiology, Oregon Health and Science University, Portland, OR, USA

Kate Fultz Hollis


Copyright information

© 2023 The Author(s), under exclusive license to Springer Nature Switzerland AG

About this chapter

Walden, A., Garza, M., Rasmussen, L. (2023). Best Practices for Research Data Management. In: Richesson, R.L., Andrews, J.E., Fultz Hollis, K. (eds) Clinical Research Informatics. Health Informatics. Springer, Cham. https://doi.org/10.1007/978-3-031-27173-1_14


DOI: https://doi.org/10.1007/978-3-031-27173-1_14

Published: 15 June 2023

Publisher Name: Springer, Cham

Print ISBN: 978-3-031-27172-4

Online ISBN: 978-3-031-27173-1

eBook Packages: Medicine, Medicine (R0)


Investigators should consider using this template when developing the Data and Safety Monitoring Plan (DSMP) for clinical studies funded by the National Institute of Arthritis and Musculoskeletal and Skin Diseases (NIAMS).

The goal of the DSMP is to provide a general description of a plan that you intend to implement for data and safety monitoring. The DSMP should specify the following:

- Primary and secondary outcome measures/endpoints

- Sample size and target population

- Inclusion and exclusion criteria

- A list of proposed participating sites and centers for multi-site trials

- Potential risks and benefits of participating in the study

- Procedures for data review and reportable events

- Entities responsible for data and safety monitoring:

  - Project Director (PD)/Principal Investigator (PI) (required)

  - Institutional Review Board (IRB) (required)

  - Designated medical monitor

  - Internal Committee or Board

  - Independent, NIAMS-appointed Monitoring Body (MB), which can include a Data and Safety Monitoring Board (DSMB), an Observational Study Monitoring Board (OSMB), a Safety Officer (SO), or Dual SOs

- Content and format of the safety report

- Data Management, Quality Control and Quality Assurance

Note that all sample text should be replaced with study-specific text. There is no need to include sections that are not relevant to the particular study. Please do not use the sample text verbatim.

TABLE OF CONTENTS

1.0 Study Overview

- 1.1 Study Description

- 1.2 Study Management

2.0 Participant Safety

- 2.1 Potential Risks and Benefits for Participants

  - 2.1.1 Potential Risks

  - 2.1.2 Potential Benefits

- 2.2 Protection Against Study Risks

  - 2.2.1 Informed Consent Process

3.0 Reportable Events

- 3.1 Definitions

  - 3.1.1 Adverse Events (AEs)

  - 3.1.2 Serious Adverse Events (SAEs)

  - 3.1.3 Unanticipated Problems (UPs)

  - 3.1.4 Protocol Deviations

- 3.2 Collection and Assessment of AEs, SAEs, UPs, and Protocol Deviations

- 3.3 Reporting of AEs, SAEs, UPs, Protocol Deviations, Serious or Continuing Noncompliance, and Suspension or Termination of IRB Approval

  - 3.3.1 AE Reporting Procedures

  - 3.3.2 SAE Reporting Procedures

  - 3.3.3 UP Reporting Procedures

  - 3.3.4 Protocol Deviation Reporting Procedures

  - 3.3.5 Serious or Continuing Noncompliance

  - 3.3.6 Suspension or Termination of IRB Approval

4.0 Interim Analysis & Stopping Rules

5.0 Data and Safety Monitoring

- 5.1 Frequency of Data and Safety Monitoring

- 5.2 Content of Data and Safety Monitoring Report

- 5.3 Monitoring Body Membership and Affiliation

- 5.4 Conflict of Interest for Monitoring Bodies

- 5.5 Protection of Confidentiality

- 5.6 Monitoring Body Responsibilities

6.0 Data Management, Quality Control, and Quality Assurance

1.0 Study Overview

1.1 Study Description

This section outlines the overall goal of the project. It also describes the study design, primary and secondary outcome measures/endpoints, sample size/power calculation, target population, and inclusion and exclusion criteria.

1.2 Study Management

This section includes the proposed participating sites and their responsibilities. It should also include the planned enrollment timetable (i.e., projected enrollment).

2.0 Participant Safety

2.1 Potential Risks and Benefits for Participants

This section outlines the potential risks and benefits of the research for the study participants and for society. It should include a description of all expected adverse events (AEs), the known side effects of the intervention, and all known risks or complications of the outcomes being assessed.

2.1.1 Potential Risks

Outline potential risks for study participants, including a breach of confidentiality.


2.1.2 Potential Benefits

Outline potential benefits for study participants, or state that there are no direct benefits to the participants.

2.2 Protection Against Study Risks

This section provides information on how risks to participants will be managed. It should specify any events that would preclude a participant from continuing in the study. In general, the format and content of this section are similar to the Human Participants section of the grant application.

In addition, this section describes measures to protect participants against study-specific risks, including the data security measures that protect the confidentiality of the data.

2.2.1 Informed Consent Process

This section explains the informed consent process. It should include, but not be limited to, who will consent the participant, how and under what conditions a participant will be consented, and a statement that participation is voluntary. The informed consent process should meet the consent requirements of the revised Common Rule. For further details on this requirement, please visit: https://www.ecfr.gov/cgi-bin/text-idx?SID=921afb2e7909a2cf08c5f3ce160a0c96&mc=true&node=se45.1.46_1116

3.0 Reportable Events

3.1 Definitions

This section should describe how to identify AEs, SAEs, and UPs. If the intervention is a Food and Drug Administration (FDA) regulated drug, device, or biologic, this section should include the FDA definitions, grading scale, and "study relatedness" criteria for AEs.

3.1.1 Adverse Events (AEs)

The definition of adverse event here is drawn from the OHRP guidance ( https://www.hhs.gov/ohrp/regulations-and-policy/guidance/reviewing-unanticipated-problems/index.html ); for some studies, the ICH E6 definition may be more appropriate. Expected and unexpected AEs should be listed in this section.

3.1.2 Serious Adverse Events (SAEs)

SAEs are a subset of all AEs.

3.1.3 Unanticipated Problems (UPs)

The OHRP definition of UPs can be accessed using the link provided in Section 3.1.1 above.


3.1.4 Protocol Deviations

This section should include the study definition of protocol deviations and identify the deviations that place the participant at increased risk of harm or compromise the integrity of the safety data.

3.2 Collection and Assessment of AEs, SAEs, UPs, and Protocol Deviations

This section should describe who is responsible for collecting these events, how the information will be captured, where it will be collected from (e.g., medical records, self-reported), and what study form(s) will be used to collect it (e.g., case report forms, direct data entry). It should also specify what information will be collected (e.g., event description, time of onset, assessment of seriousness, relationship to the study intervention, severity). Note that NIAMS requires the collection of all AEs regardless of expectedness or relatedness.

This section should also describe who is responsible for assessing these events. The individual(s) responsible should have the relevant clinical expertise to make such an assessment (e.g., physician, nurse practitioner, physician assistant, nurse). When assessing AEs and SAEs, the following information should be included:

- Relationship to the study intervention:

  - Possibly/Probably (may be related to the intervention)

  - Definitely (clearly related to the intervention)

  - Not Related (clearly not related to the intervention)
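Studies that capture events electronically can store the information listed in Section 3.2 as a structured record. The sketch below is a minimal, hypothetical Python schema. Only the three relatedness categories come from this template. The names `AdverseEvent`, `Relatedness`, `Severity`, and `source`, and the three-level severity scale, are illustrative assumptions and are not NIAMS-defined structures.

```python
from dataclasses import dataclass
from datetime import datetime
from enum import Enum


class Relatedness(Enum):
    """Relationship of an event to the study intervention (categories from this template)."""
    POSSIBLY_PROBABLY = "Possibly/Probably"  # may be related to the intervention
    DEFINITELY = "Definitely"                # clearly related to the intervention
    NOT_RELATED = "Not Related"              # clearly not related to the intervention


class Severity(Enum):
    """Hypothetical three-level scale; substitute the study's own grading scale."""
    MILD = 1
    MODERATE = 2
    SEVERE = 3


@dataclass(frozen=True)
class AdverseEvent:
    """Hypothetical record for one AE, mirroring the fields listed in Section 3.2."""
    description: str
    onset: datetime
    serious: bool
    relatedness: Relatedness
    severity: Severity
    source: str  # where the information came from, e.g., "medical record" or "self-reported"

    def may_be_related(self) -> bool:
        # Every category except "Not Related" leaves a possible link to the intervention.
        # NIAMS requires collecting all AEs, so use this for summaries, never to drop events.
        return self.relatedness is not Relatedness.NOT_RELATED


rash = AdverseEvent(
    description="Injection-site rash",
    onset=datetime(2024, 3, 1, 9, 30),
    serious=False,
    relatedness=Relatedness.POSSIBLY_PROBABLY,
    severity=Severity.MILD,
    source="self-reported",
)
print(rash.may_be_related())  # True
```

The record is frozen so that corrections to an assessed event create a new, traceable entry instead of silently overwriting the original one.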

3.3 Reporting of AEs, SAEs, UPs, Protocol Deviations, Serious or Continuing Noncompliance, and Suspension or Termination of IRB Approval

This section should describe who is responsible for reporting these events and the roles and responsibilities of each person on the clinical study team who is involved in the safety reporting to the IRB, FDA (if applicable), Monitoring Body, and NIAMS (through the NIAMS Executive Secretary). It should also include the Office for Human Research Protections (OHRP) and FDA reporting requirements. See NIAMS Reportable Events Requirements and Guidelines for more details.

3.3.1 AE Reporting Procedures

All non-serious AEs (regardless of expectedness or relatedness) are reported to the Monitoring Body and NIAMS (through the NIAMS Executive Secretary) semi-annually or at another frequency determined by the NIAMS.

3.3.2 SAE Reporting Procedures

All SAEs (regardless of expectedness or relatedness) must be reported in an expedited manner to the NIAMS and the Monitoring Body. Reporting timelines for SAEs may differ among the IRBs, FDA (if applicable), Monitoring Body, and the NIAMS. SAEs must be reported to the Monitoring Body and NIAMS (through the NIAMS Executive Secretary) within 48 hours of the investigator becoming aware of the event, so that a real-time assessment can be conducted and the outcome shared in a timely manner.

3.3.3 UP Reporting Procedures

All events that meet the criteria of a UP must be reported in an expedited manner to the NIAMS and the Monitoring Body. Reporting timelines for UPs may differ among the IRBs, OHRP/FDA (if applicable), Monitoring Body, and the NIAMS. UPs must be reported to the Monitoring Body and NIAMS (through the NIAMS Executive Secretary) within 48 hours of the investigator becoming aware of the event, so that a real-time assessment can be conducted and the outcome shared in a timely manner.

3.3.4 Protocol Deviation Reporting Procedures

Protocol deviations affecting participant safety are subject to expedited reporting to the Monitoring Body and NIAMS (through the NIAMS Executive Secretary) within 48 hours of the investigator becoming aware of the event, so that a real-time assessment can be conducted and the outcome shared in a timely manner. All other protocol deviations should be reported at the time of the routine DSMB meeting or with the submission of the safety report.
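Sections 3.3.1 through 3.3.4 set out the reporting routes to the Monitoring Body and NIAMS. SAEs, UPs, and safety-affecting protocol deviations are due within 48 hours of the investigator becoming aware of them. Non-serious AEs and other deviations go into routine reporting. The minimal Python sketch below encodes that routing for study tracking tools. The names `EventType` and `mb_niams_deadline` are hypothetical, and the sketch assumes naive (time-zone-free) timestamps. It does not model the separate IRB, FDA, or OHRP timelines, which may differ.

```python
from datetime import datetime, timedelta
from enum import Enum, auto
from typing import Optional

# Window for expedited reporting to the Monitoring Body and NIAMS (Sections 3.3.2-3.3.4)
EXPEDITED_WINDOW = timedelta(hours=48)


class EventType(Enum):
    NON_SERIOUS_AE = auto()
    SAE = auto()
    UP = auto()
    PROTOCOL_DEVIATION = auto()


def mb_niams_deadline(
    event_type: EventType, aware_at: datetime, affects_safety: bool = False
) -> Optional[datetime]:
    """Return the deadline for expedited reporting to the Monitoring Body and NIAMS,
    or None when the event belongs in the routine safety report instead."""
    if event_type in (EventType.SAE, EventType.UP):
        return aware_at + EXPEDITED_WINDOW
    if event_type is EventType.PROTOCOL_DEVIATION and affects_safety:
        return aware_at + EXPEDITED_WINDOW
    # Non-serious AEs (semi-annual) and other deviations go in routine reporting
    return None


aware = datetime(2024, 3, 1, 14, 0)
print(mb_niams_deadline(EventType.SAE, aware))             # 2024-03-03 14:00:00
print(mb_niams_deadline(EventType.NON_SERIOUS_AE, aware))  # None
```

The clock starts when the investigator becomes aware of the event, not when it occurred. The sketch therefore takes the awareness timestamp as input rather than the onset time.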

3.3.5 Serious or Continuing Noncompliance

This section should describe the process in place at your institution to capture and report serious or continuing noncompliance, including who is responsible for reporting. Serious or continuing noncompliance must be reported to the NIAMS Program Officer and Grants Management Specialist within 3 business days of the IRB determination. A copy of the IRB submission and determination must be submitted to the NIAMS along with the report. OHRP's guidance on reporting incidents should also be followed for the timeline of reporting to that regulatory body.

3.3.6 Suspension or Termination of IRB Approval

This section should describe the process for reporting study suspension or termination by the IRB. It should also identify who is responsible for reporting to the NIAMS and OHRP, and the timeline for reporting these events. A report of suspension or termination of IRB approval must include a statement of the reason(s) for the action and must be submitted promptly to the NIAMS Program Officer and Grants Management Specialist, within 3 business days of receipt by the PI.
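Sections 3.3.5 and 3.3.6 both use a 3-business-day window rather than a fixed number of hours. The minimal Python sketch below computes such a due date. It rests on several assumptions: the triggering day (IRB determination or receipt by the PI) is not counted, Saturdays and Sundays are skipped, and holidays are skipped only if the study supplies them. The function name `add_business_days` is hypothetical, and institutions may count business days differently.

```python
from datetime import date, timedelta


def add_business_days(start: date, days: int, holidays: frozenset = frozenset()) -> date:
    """Return the date `days` business days after `start`.

    The start date itself is not counted; Saturdays, Sundays, and any dates in
    `holidays` are skipped.
    """
    current = start
    remaining = days
    while remaining > 0:
        current += timedelta(days=1)
        if current.weekday() < 5 and current not in holidays:  # Monday=0 ... Friday=4
            remaining -= 1
    return current


# IRB determination on Thursday, 7 March 2024 -> report due Tuesday, 12 March 2024
print(add_business_days(date(2024, 3, 7), 3))  # 2024-03-12
```

Holidays are passed in explicitly because observed holidays vary by institution, and a hard-coded calendar would silently give wrong due dates.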

4.0 Interim Analysis & Stopping Rules

This section provides information on planned interim analyses. Interim analyses may be conducted at predetermined intervals under pre-specified stopping rules outlined in the protocol, or when the Monitoring Body determines them necessary to assess safety concerns or study futility based on accumulating data. An interim analysis may be performed for safety, efficacy, and/or futility. The reports are prepared by the unmasked study statistician or by the data coordinating center responsible for generating them. The rules for stopping the study based on interim analysis should be described.

If no interim analysis is planned, this should be noted within this section.
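The template leaves the choice of stopping rule to each study. As one illustration only, the Python sketch below applies a Haybittle-Peto boundary. This widely used conservative rule stops at an interim look only if the test statistic crosses a fixed, extreme threshold (commonly |Z| ≥ 3), and tests the final analysis at roughly the nominal two-sided alpha. It is not a NIAMS requirement. The boundary values, the function name `look_decision`, and the two-sided framing are assumptions. The actual efficacy, futility, or harm boundaries should come from the study statistician and the protocol.

```python
from statistics import NormalDist

# Haybittle-Peto rule (illustrative): stop at an interim look only if |Z| >= 3
INTERIM_Z_BOUNDARY = 3.0


def look_decision(z: float, final_look: bool, alpha: float = 0.05) -> str:
    """Apply a Haybittle-Peto boundary to a test statistic `z`.

    Interim looks use the conservative fixed boundary, so the final analysis can
    be tested at (approximately) the nominal two-sided `alpha`.
    """
    if final_look:
        critical = NormalDist().inv_cdf(1 - alpha / 2)  # about 1.96 when alpha = 0.05
        return "reject null" if abs(z) >= critical else "do not reject null"
    return "stop" if abs(z) >= INTERIM_Z_BOUNDARY else "continue"


print(look_decision(2.5, final_look=False))  # continue
print(look_decision(3.2, final_look=False))  # stop
print(look_decision(2.5, final_look=True))   # reject null
```

A fixed extreme boundary is easy to pre-specify and explain to a Monitoring Body. Group-sequential designs with alpha-spending functions are a common, more flexible alternative.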

5.0 Data and Safety Monitoring

This section identifies the name of the individual or entity responsible for data and safety monitoring, what information will be reviewed, and frequency of such reviews. A brief general introduction regarding data and safety monitoring oversight should be provided in section 5.0, and further details should be provided in the subsequent sections.

5.1 Frequency of Data and Safety Monitoring