
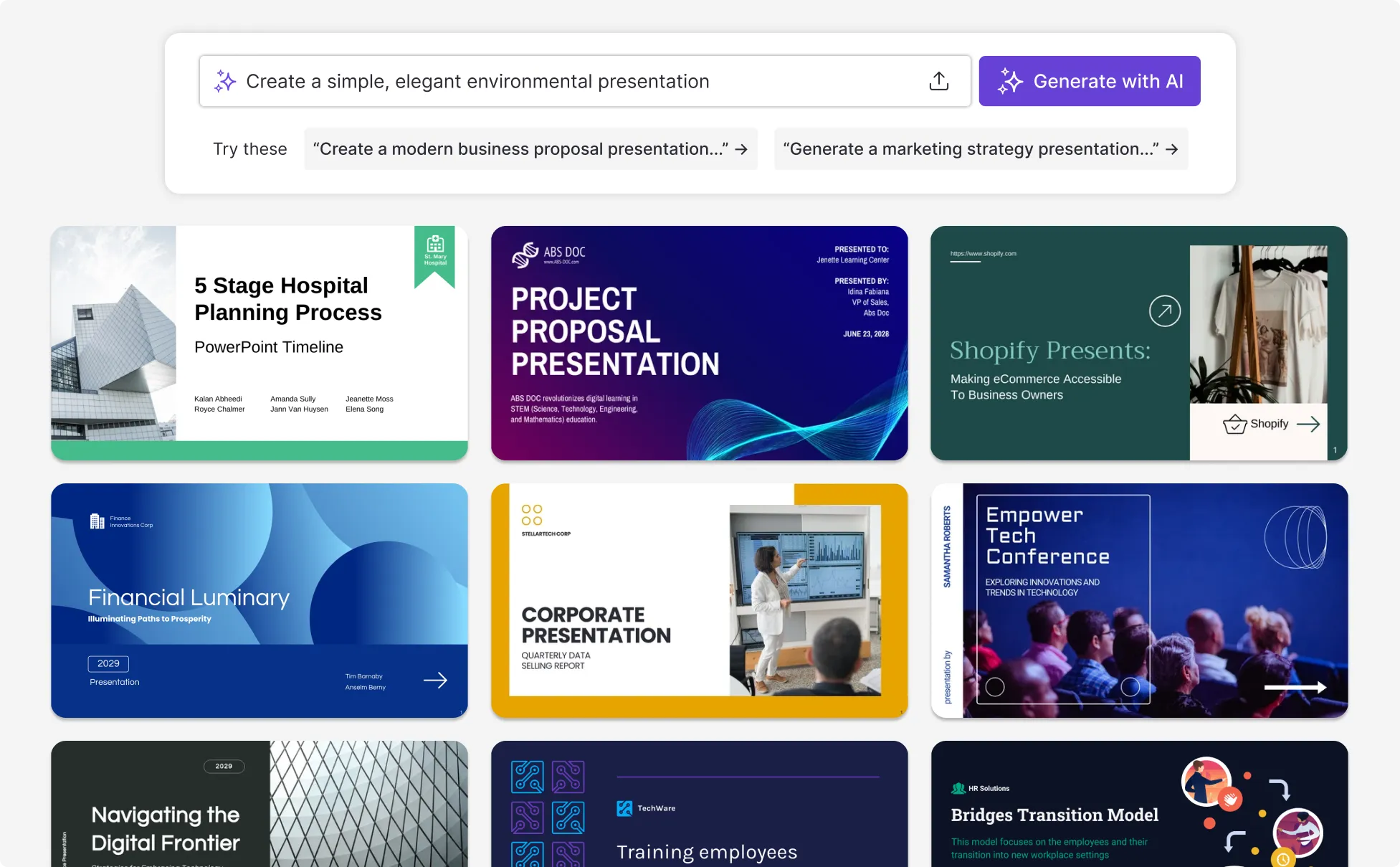

AI Presentation Maker

When lack of inspiration or time constraints are something you’re worried about, it’s a good idea to seek help. Slidesgo comes to the rescue with its latest functionality: the AI presentation maker! With a few clicks, you’ll have wonderful slideshows that suit your own needs. And it’s totally free!

Generate presentations in minutes

We humans make the world move, but we need to sleep, rest and so on. What if there were someone available 24/7 for you? It’s time to get out of your comfort zone and ask the AI presentation maker to give you a hand. The possibilities are endless: you choose the topic, the tone and the style, and the AI will do the rest. Now we’re talking!

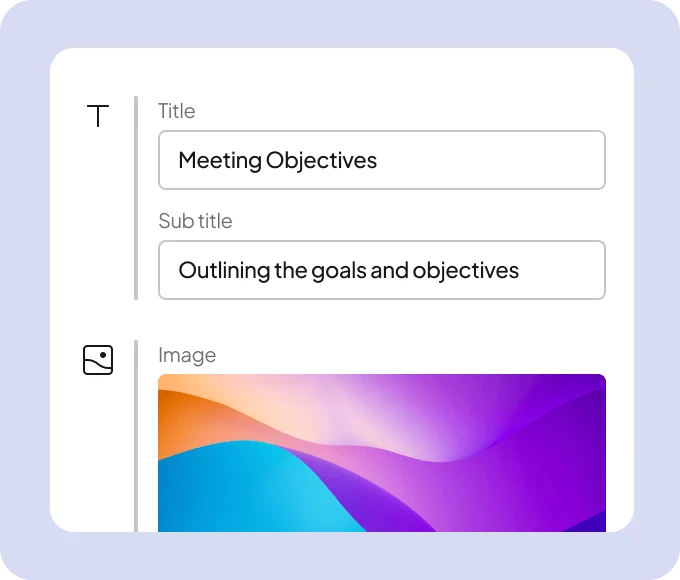

Customize your AI-generated presentation online

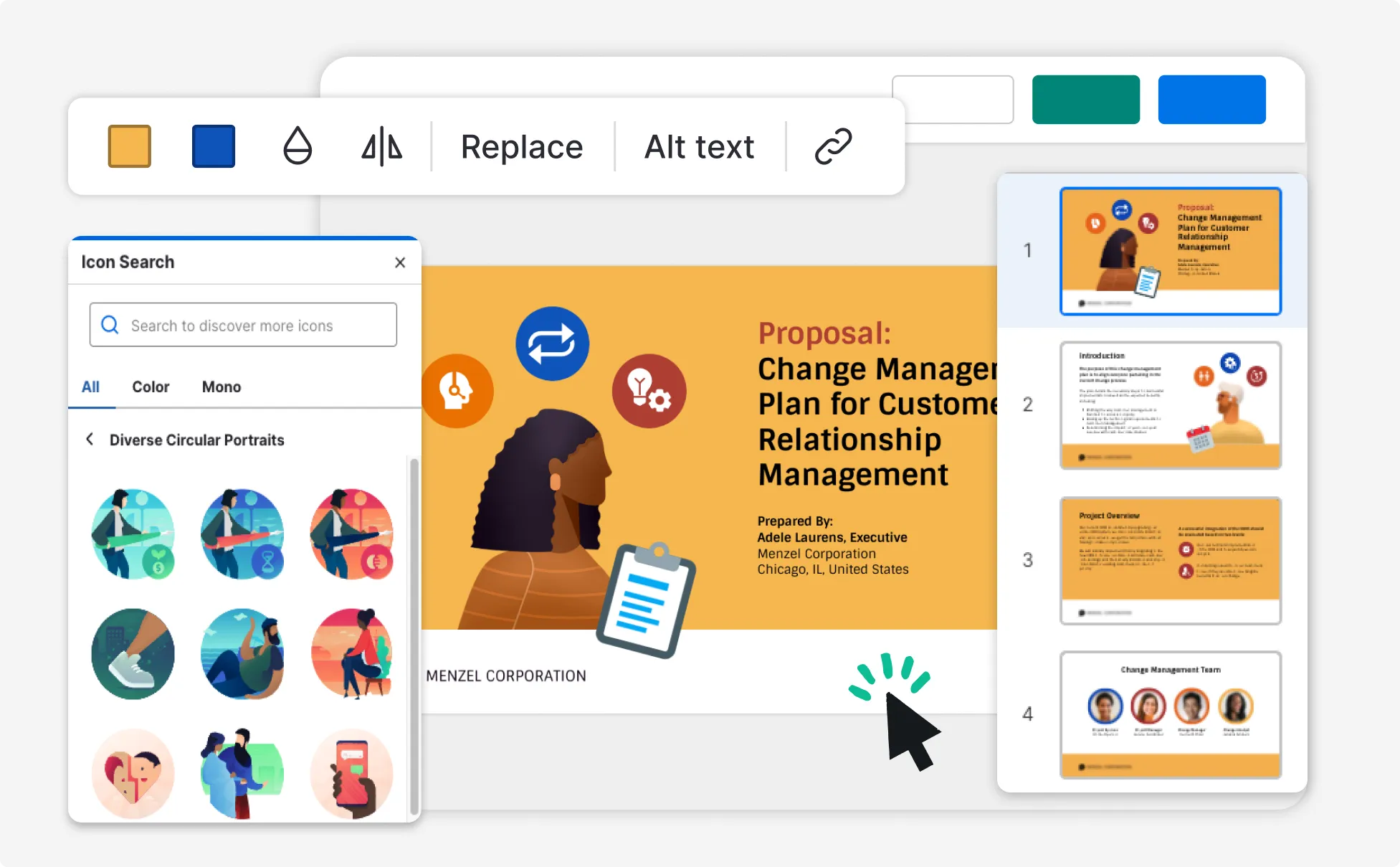

Alright, your robotic pal has generated a presentation for you. But, for the time being, AIs can’t read minds, so it’s likely that you’ll want to modify the slides. Please do! We didn’t forget about those time constraints you’re facing, so thanks to the editing tools provided by one of our sister projects (shoutout to Wepik), you can make changes on the fly without resorting to other programs or software. Add text, choose your own colors, rearrange elements: it’s up to you! Oh, and since we are a big family, you’ll be able to access many resources from big names, namely Freepik and Flaticon. That means having a lot of images and icons at your disposal!

How does it work?

Think of your topic

First things first, you’ll be talking about something in particular, right? A business meeting, a new medical breakthrough, the weather, your favorite songs, a basketball game, a pink elephant you saw last Sunday—you name it. Just type it out and let the AI know what the topic is.

Choose your preferred style and tone

They say that variety is the spice of life. That’s why we let you choose between different design styles, including doodle, simple, abstract, geometric, and elegant. What about the tone? There are several: fun, creative, casual, professional, and formal. Each one will give you something unique, so which way of impressing your audience will it be this time? Mix and match!

Make any desired changes

You’ve got freshly generated slides. Oh, you wish they were in a different color? That text box would look better if it were placed on the right side? Run the online editor and use the tools to have the slides exactly your way.

Download the final result for free

Yes, those slides, just as you envisioned them, deserve to be on your storage device right away! You can export the presentation in .pdf format and download it for free. Can’t wait to show it to your best friend because you think they will love it? Generate a shareable link!

What is an AI-generated presentation?

It’s exactly “what it says on the cover”. AIs, or artificial intelligences, are constantly evolving, and they can now generate presentations in a short time based on the user’s inputs. Because the AI does a big chunk of the work, you get a satisfactory presentation much faster.

Can I customize the presentation generated by the AI?

Of course! That’s the point! Slidesgo has been all about customization since day one, so you’ll be able to make any changes to presentations generated by the AI. We humans are irreplaceable, after all! Thanks to the online editor, you can make whatever modifications you need without having to install any software. Colors, text, images, icons, placement: the final decision on every element is up to you.

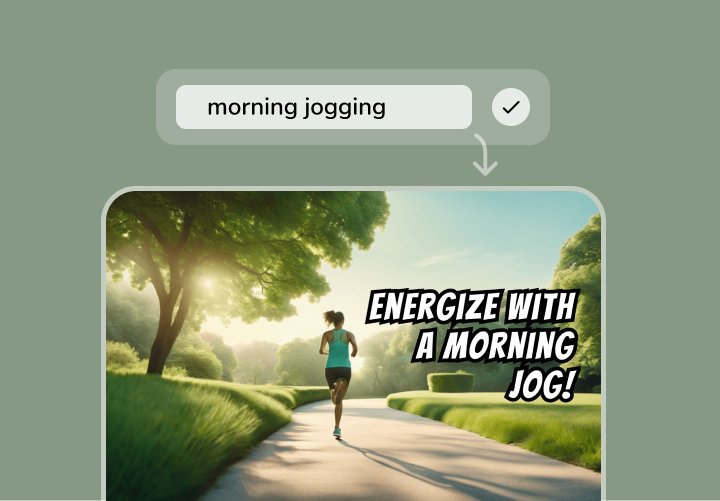

Can I add my own images?

Absolutely. That’s a basic function, and we made sure to have it available. Would it make sense to have a portfolio template generated by an AI without a single picture of your own work? We also offer the possibility of asking the AI to generate images for you via prompts. Additionally, you can browse the integrated gallery of images from Freepik and use them. If making an impression is your goal, you’ll have an easy time!

Is this new functionality free? As in “free of charge”? Do you mean it?

Yes, it is, and we mean it. We even asked our buddies at Wepik, who are the ones hosting this AI presentation maker, and they told us “yup, it’s on the house”.

Are there more presentation designs available?

From time to time, we’ll be adding more designs. The cool thing is that you’ll have at your disposal a lot of content from Freepik and Flaticon when using the AI presentation maker. Oh, and just as a reminder, if you feel like you want to do things yourself and don’t want to rely on an AI, you’re on Slidesgo, the leading website when it comes to presentation templates. We have thousands of them, and counting!

How can I download my presentation?

The easiest way is to click on “Download” to get your presentation in .pdf format. But there are other options! You can click on “Present” to enter the presenter view and start presenting right away! There’s also the “Share” option, which gives you a shareable link. This way, any friend, relative, colleague—anyone, really—will be able to access your presentation in a moment.

Discover more content

This is just the beginning! Slidesgo has thousands of customizable templates for Google Slides and PowerPoint. Our designers have created them with much care and love, and the variety of topics, themes and styles is, how to put it, immense! We also have a blog, in which we post articles for those who want to find inspiration or need to learn a bit more about Google Slides or PowerPoint. Do you have kids? We’ve got a section dedicated to printable coloring pages! Have a look around and make the most of our site!

The World's Best AI Presentation Maker

Key features of our AI presentation maker

Use AI to create PPTs, infographics, charts, timelines, project plans, reports, product roadmaps and more - effortless, engaging, and free to try

Effortless Creation

Instantly transform ideas into professional presentations with our AI-driven design assistant.

Personalized Design

Automatically receive design suggestions tailored to your unique style and content.

Anti-fragile Templates

Employ templates that effortlessly adapt to your content changes, preserving design integrity.

PowerPoint Compatibility

Efficiently export your presentations to PowerPoint format, ensuring compatibility and convenience for all users.

Ensure consistent brand representation in all presentations with automatic alignment to your visual identity.

Seamless Sharing

Share your presentations effortlessly, with real-time sync and comprehensive access control.

Analytics & Tracking

Leverage detailed insights on engagement and performance to refine your presentations.

Multi-device Compatibility

Edit and present from anywhere, with seamless access across all your devices.

Multilingual Support

Reach a global audience with presentation AI that supports multiple languages.

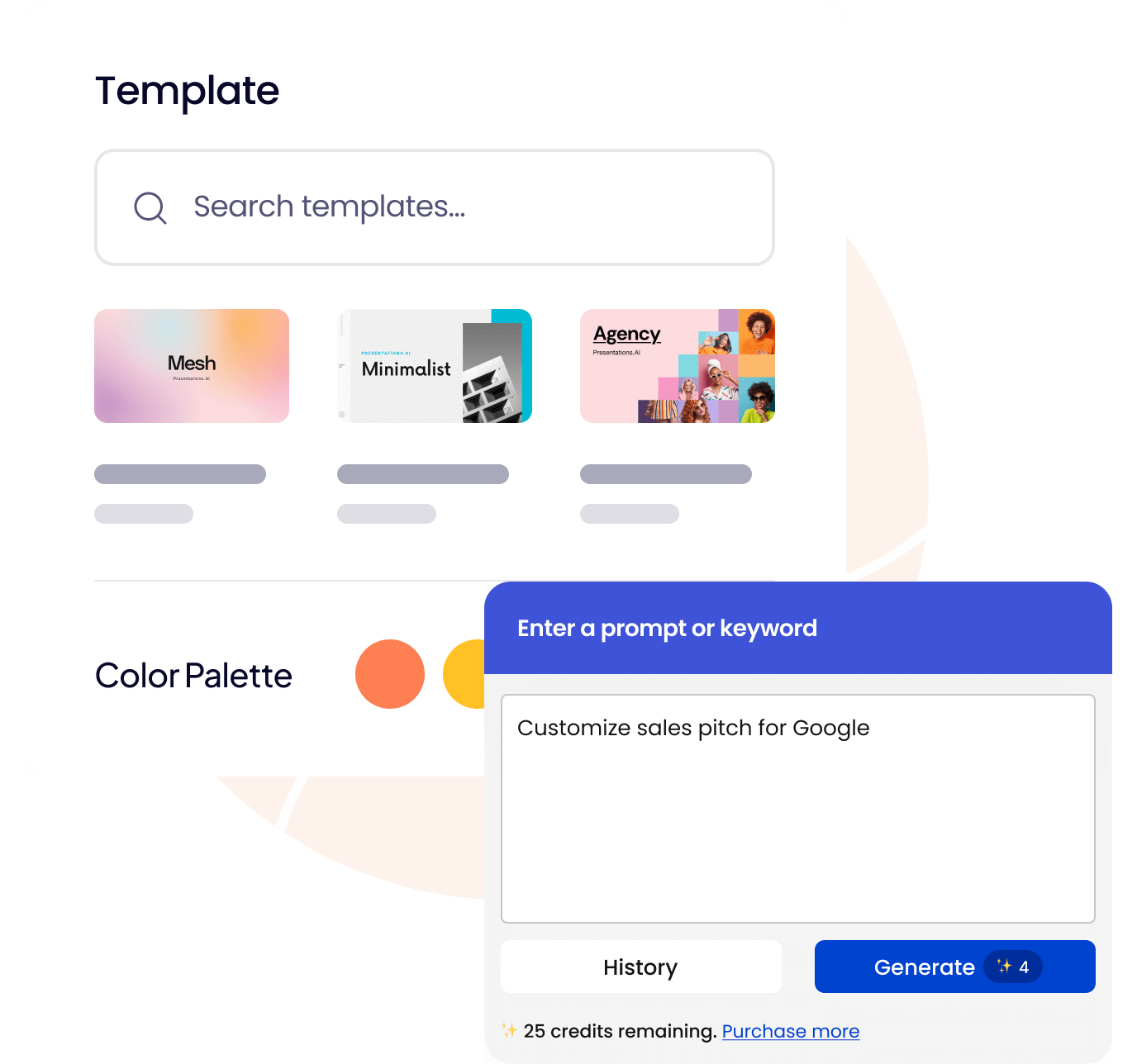

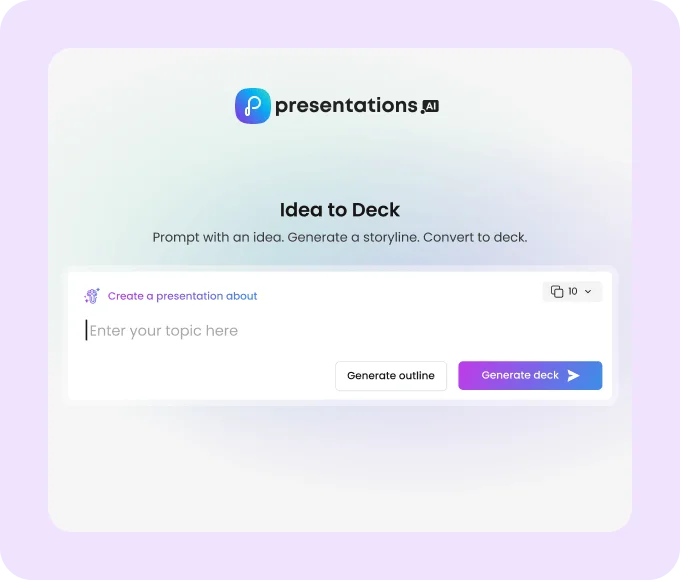

Idea to Deck in seconds

ChatGPT for Presentations

Create stunning PPTs at the speed of thought with the world's best AI slide maker. You focus on the story. We handle the fine print.

Creative power that goes way beyond templates

Impress your audience with professional and engaging presentations created through AI. Easy to customize. Hard to go wrong.

Brand consistent

Ensure that your presentations match your brand's style and messaging through our proprietary "Brand Sync" feature.

Presentations.AI is simple, fast and fun

Bring your ideas to life instantly

You bring the story. We bring design.

A collaborative AI partner at your command

Create at the speed of thought.

10 Best AI Presentation Generators (July 2024)

Unite.AI is committed to rigorous editorial standards. We may receive compensation when you click on links to products we review. Please view our affiliate disclosure.


In the digital age, AI-powered presentation generators are revolutionizing the way we create and deliver presentations. These tools leverage artificial intelligence to streamline the creation process, enhance visual appeal, and boost audience engagement. Here, we discuss the top 10 AI presentation generators that can help you elevate your next presentation.

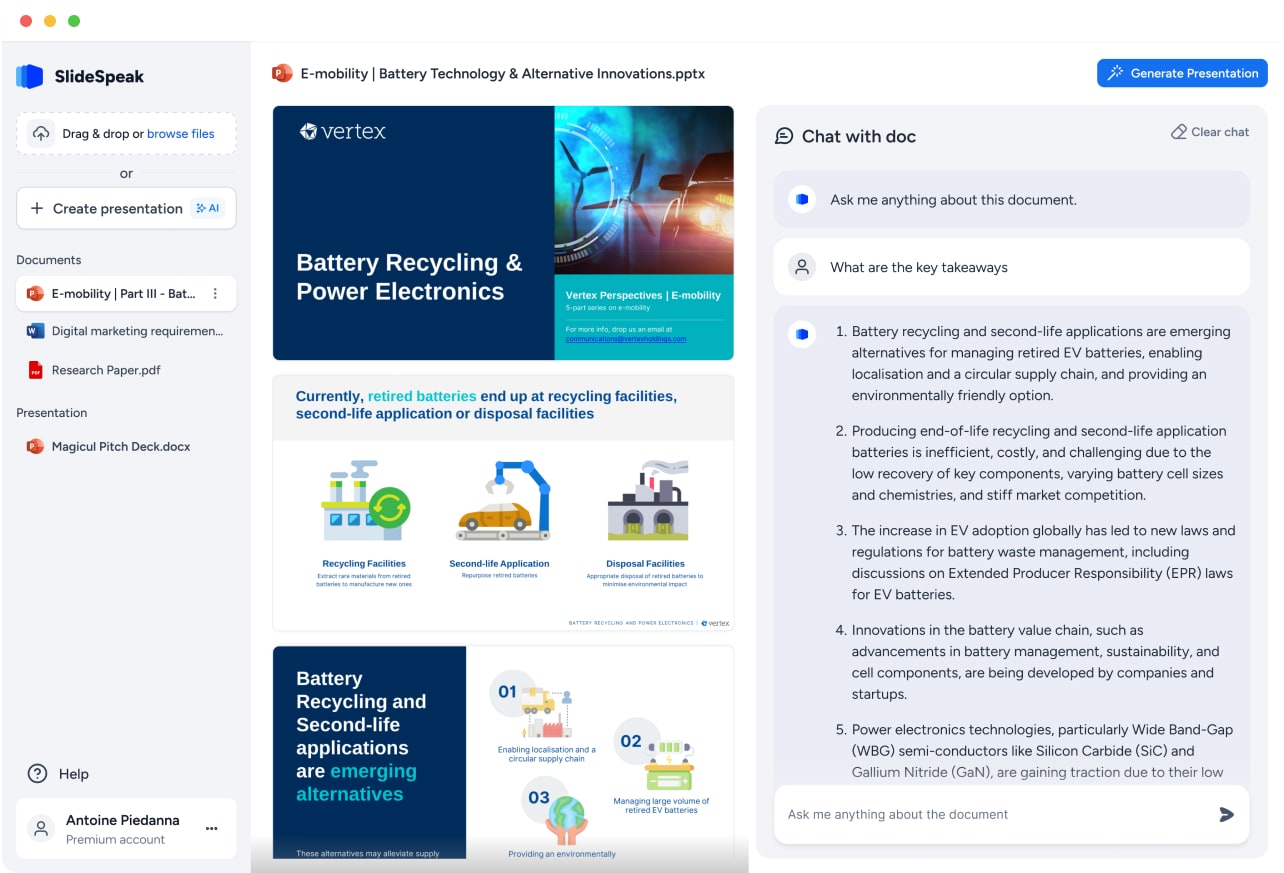

1. Plus AI

This tool enables users to create presentations and edit slides using generative AI in Google Slides.

The AI-powered suggestions are a game-changer. It's like having a personal presentation assistant. The process is extremely simple: start with a prompt to generate a customizable outline, then watch as the AI turns it into slides in just a few minutes.

Once this is complete you have multiple options including rewriting the content to change the tone, or remixing the slide to transform the content into a specific layout.

Best of all, Plus AI will generate an outline, which you can customize before generating the presentation itself. To offer additional flexibility, when generating your slides, you can choose a visual theme. After the slides are generated, you can edit them just like any other presentation in Google Slides, export them for PowerPoint, and continue to edit them with Plus AI.

Top Features of Plus AI

- Powered by the latest in Generative AI

- Integration between Google Slides and PowerPoint is seamless

- It creates a presentation that needs only minor editing when used with detailed prompts

- The ability to rewrite content on slides is a game-changer

Use discount code UNITEAI10 to claim a 10% discount.

Read Review →

Visit Plus AI →

2. Slides AI

Slides AI simplifies the presentation-making process. Users start by adding their desired text into the system. This text forms the foundation of the presentation, with Slides AI's intelligent algorithms analyzing and structuring the content into a visually appealing format. This approach not only enhances efficiency but also democratizes design skills, allowing users to focus on content quality without worrying about design complexities.

Understanding the significance of personalization, Slides AI offers extensive customization options. Users can select from a range of pre-designed color schemes and font presets to align the presentation's aesthetics with their message or brand identity. For those seeking a unique touch, the platform provides tools to create custom designs, offering a great deal of flexibility in tailoring the look and feel of presentations.

Top Features of Slides AI

- Slides AI transforms text into polished presentations effortlessly.

- Works with all major languages, including English, Spanish, French, Italian, & Japanese

- Choose from pre-designed presets or create your unique style for the perfect look and feel.

Visit Slides AI →

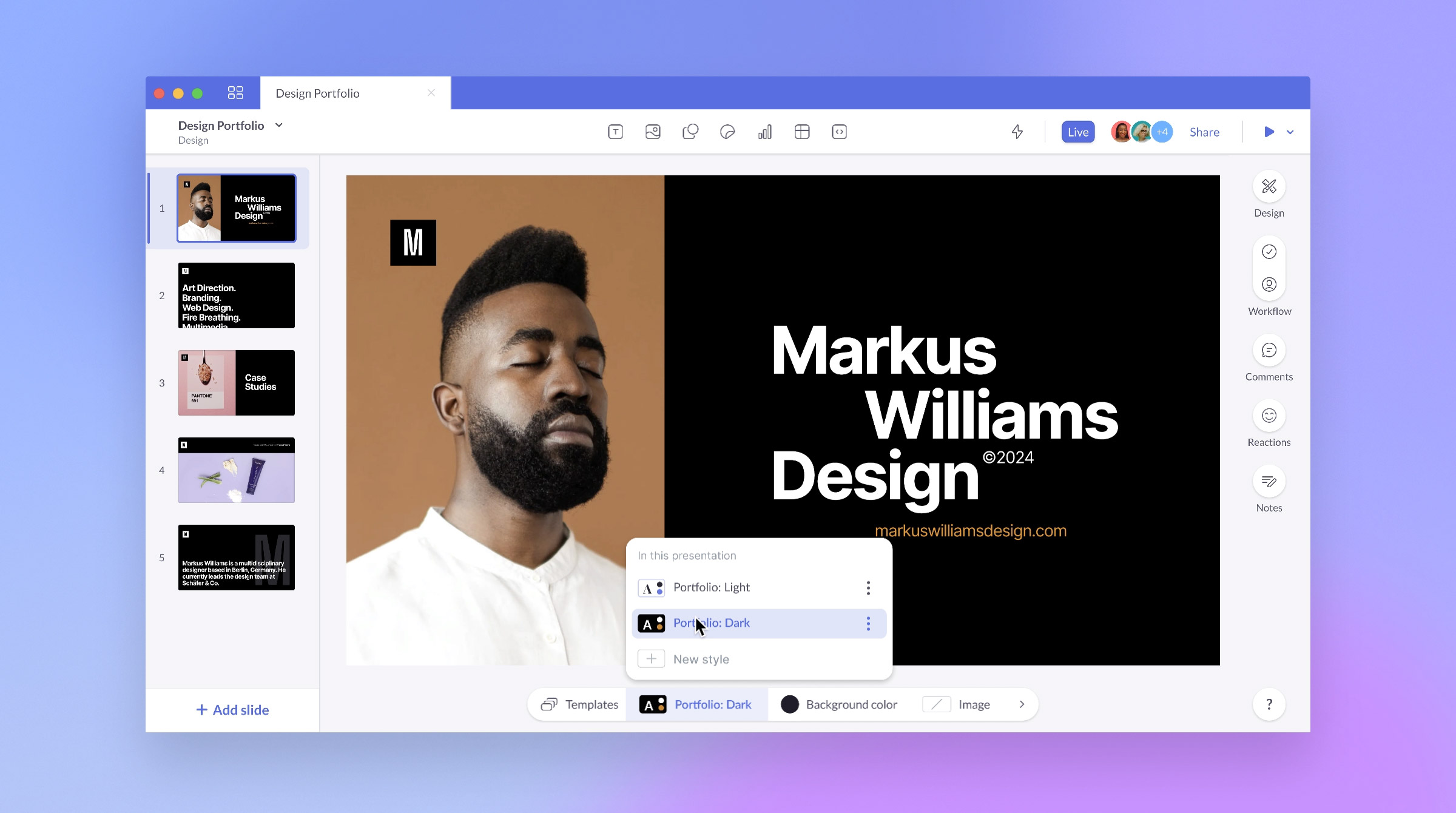

3. Beautiful.ai

Beautiful.ai is more than just a presentation tool; it's a smart assistant that helps you craft compelling narratives. As you begin to personalize your presentation, Beautiful.ai starts to understand your needs, offering suggestions for further enhancements. This predictive feature is a game-changer, making the design process more intuitive and less time-consuming.

But the innovation doesn't stop there. Beautiful.ai's voice narration feature adds an extra layer of communication, making your content more engaging. Imagine being able to narrate your slides, adding a personal touch to your presentation. This feature can be particularly useful for remote presentations, where the personal connection can sometimes be lost.

Top features of Beautiful.ai

- Anticipates user needs and offers suggestions

- Facilitates the creation of clear, concise presentations

- Voice narration feature for enhanced communication

Visit Beautiful.ai →

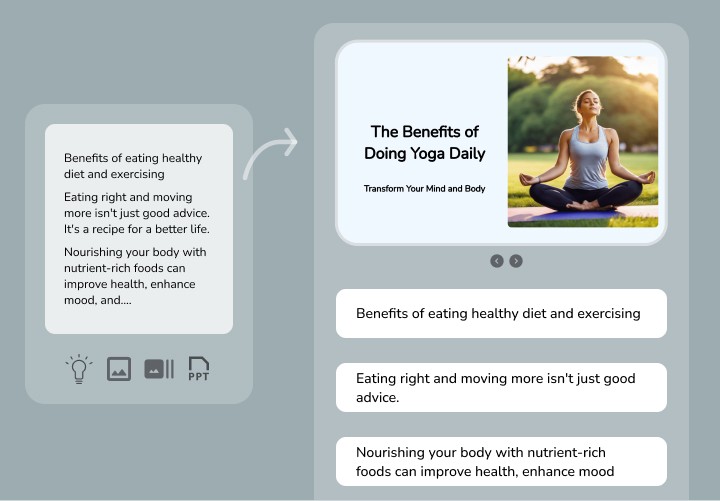

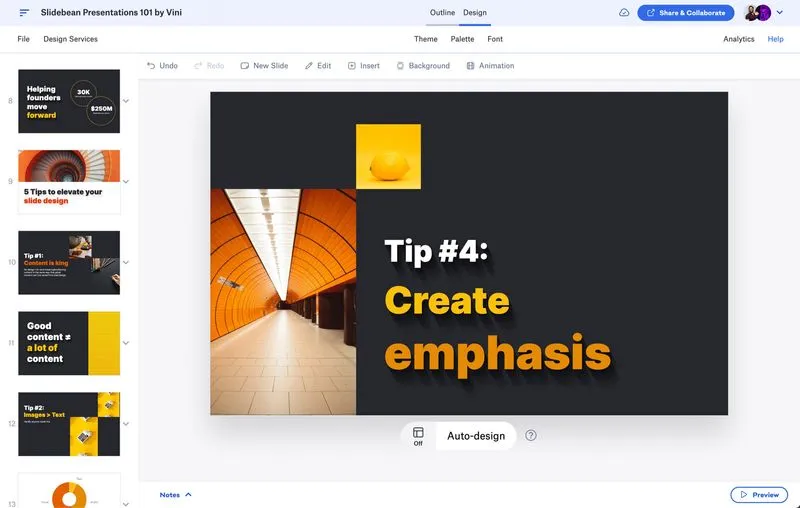

4. Slidebean

Slidebean is a web-based presentation tool that revolutionizes the way presentations are made. With just a few clicks, users can create powerful presentations that leave a lasting impression. The beauty of Slidebean lies in its ability to separate content creation from slide design. This means you can focus on what matters most – your message – while Slidebean takes care of the design.

Slidebean is particularly suitable for small to medium businesses that may not have a dedicated design team. Even users with zero design skills can create professional-looking slides, thanks to the collection of design templates, premium fonts, and high-end color palettes. Slidebean is not just an alternative to PowerPoint and Keynote; it's a step up.

Top features of Slidebean:

- Separates content creation from slide design

- Enables users with no design skills to create professional-looking slides

- Offers a collection of design templates, premium fonts, and high-end color palettes

Visit Slidebean →

5. Tome

Tome is an AI-powered presentation creator that goes beyond just designing slides. It serves as a collaborative AI assistant, helping users design engaging presentations from scratch. Using OpenAI’s ChatGPT and DALL-E 2 technology, Tome can understand your needs and generate content that resonates with your audience.

Tome offers ready-made templates and themes, AI-generated text and images, and tools for adding animations, videos, graphs, and more. But what sets it apart is its ability to understand your instructions. All you have to do is tell the AI assistant what you want, and it will do the rest. This makes the design process not just easier, but also more fun.

Top features of Tome:

- Uses OpenAI’s ChatGPT and DALL-E 2 technology

- Offers ready-made templates and themes, AI-generated text and images

- Provides tools for adding animations, videos, graphs, and more

Visit Tome →

6. Synthesia

Synthesia is a robust AI presentation maker that stands out for its user-friendly interface and unique features. One of its standout features is the ability to create your own AI avatar. This means you can add a personal touch to your presentation, making it more engaging and memorable.

With Synthesia, you don't need to be an expert to create high-quality presentations. The tool offers a wide range of professionally designed video templates that you can use as a starting point. From there, you can customize your presentation to suit your needs. Whether you're presenting to a small team or a large audience, Synthesia has you covered.

Top features of Synthesia:

- User-friendly interface

- Allows creation of personalized AI avatar

- Offers a wide range of professionally designed video templates

Visit Synthesia →

7. Simplified

Simplified is an AI presentation maker designed with collaboration in mind. It enables teams to work together seamlessly, creating presentations with the help of AI. This means you can collaborate with your team in real-time, making changes and seeing updates instantly.

After the AI generates a presentation, you can customize fonts, colors, and textures to make your presentation more impactful. You can also convert your slides into a video presentation by adding transitions. This feature can be particularly useful for remote presentations, where visual engagement is key.

Top features of Simplified:

- Designed for team collaboration

- Allows customization of fonts, colors, and textures

- Can convert slides into video presentations

Visit Simplified →

8. Sendsteps

Sendsteps is a drag-and-drop AI presentation maker that simplifies the creation process. It's not just about creating slides; it's about creating an interactive experience for your audience. With Sendsteps, you can add interactive elements such as polls, SMS voting, quizzes, etc., to your presentation, making it more engaging and interactive.

One of the standout features of Sendsteps is its multilingual support. You can create presentations in more than 11 languages, including Spanish, Italian, Portuguese, French, and Dutch. This makes it a great tool for international teams or for presentations to a global audience.

Top features of Sendsteps:

- Drag-and-drop interface

- Offers interactive elements like polls, SMS voting, quizzes

- Supports creation of presentations in more than 11 languages

Visit Sendsteps →

9. Prezi

Prezi is a powerful AI presentation maker that can transform your ordinary slides into impactful presentations. It's not just about adding slides and text; it's about creating a narrative that captivates your audience. With Prezi, you can add a dynamic flow to your presentation, making it more engaging and memorable.

However, Prezi offers limited customization options after you choose a template. This means that while you can create a stunning presentation quickly, you may not have as much control over the final look and feel. Despite this, Prezi is a great tool for those who want to create a professional presentation quickly and easily.

Top features of Prezi:

- Transforms ordinary slides into impactful presentations

- Offers limited customization options after template selection

Visit Prezi →

10. Kroma

Kroma is a popular AI presentation tool used by large organizations such as Apple and eBay. It gives you access to over a million creative assets and numerous data visualization elements, allowing you to create a visually stunning presentation. Whether you're presenting data, sharing a project update, or pitching a new idea, Kroma can help you do it.

One of the standout features of Kroma is its integration with MS PowerPoint and Apple’s Keynote. This means you can easily import your existing presentations and enhance them with Kroma's powerful features.

Top features of Kroma:

- Used by large organizations like Apple and eBay

- Provides access to over a million creative assets and data visualization elements

- Can be easily integrated with MS PowerPoint and Apple’s Keynote

Visit Kroma →

In the digital age, AI-powered presentation generators are revolutionizing the way we create and deliver presentations. These tools utilize artificial intelligence to simplify the creation process, enhance visual appeal, and increase audience engagement. By leveraging AI, users can quickly produce professional presentations that would typically require extensive time and design skills. Features such as personalized templates, voice narration, real-time collaboration, and multilingual support make these tools versatile and accessible for various needs. Adopting AI-driven presentation tools can greatly improve the quality and impact of your presentations, making them more engaging and effective.


Alex McFarland is an AI journalist and writer exploring the latest developments in artificial intelligence. He has collaborated with numerous AI startups and publications worldwide.


15 Best AI Presentation Makers in 2024 [Free & Paid]

![ai websites for presentations 15 Best AI Presentation Makers in 2024 [Free & Paid]](https://visme.co/blog/wp-content/uploads/2023/11/Best-AI-Presentation-Makers-in-2024-Header.jpg)

Written by: Idorenyin Uko


Creating a presentation from scratch can be time-consuming. Managing moving parts like content, design and visuals adds to the complexity.

The good news: AI is revolutionizing the way presentations are created. AI presentation makers leverage the power of artificial intelligence to simplify the process of creating visually appealing and impactful presentations. Anyone, regardless of their design experience, can whip up stunning presentations, pitch decks, slide decks and more in a fraction of the time.

Yet, with so many AI presentation makers flooding the market, making a choice can be overwhelming. We’ve got you covered!

In this article, we’ll review the 15 best AI presentation makers in 2024. Our extensive review covers their features, pricing, pros and cons so that you can make an informed decision.

Let’s get to it.


1. Visme

Visme’s AI-Powered Tools

Visme is an all-in-one visual design and content authoring software that lets you create presentations for different purposes.

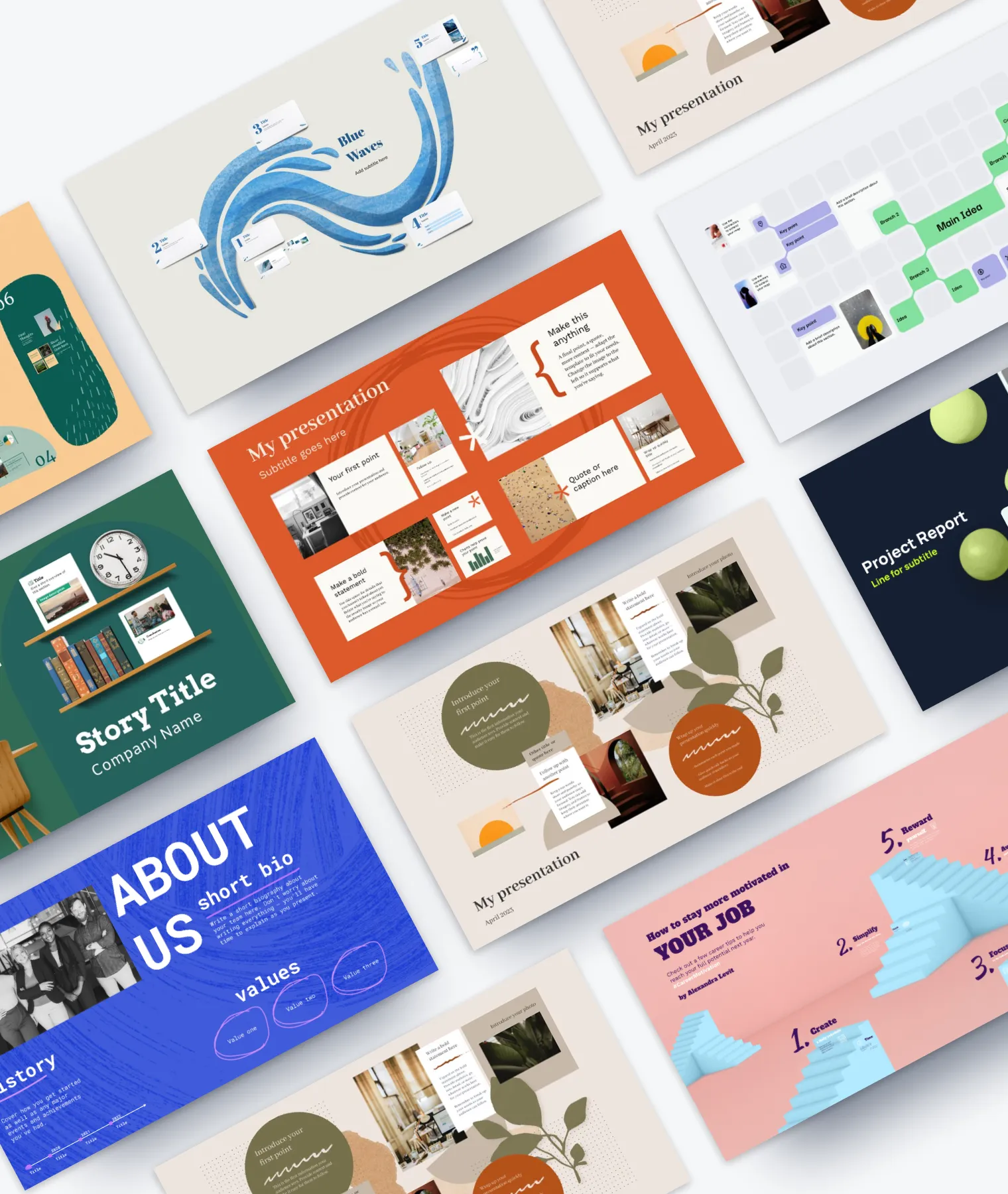

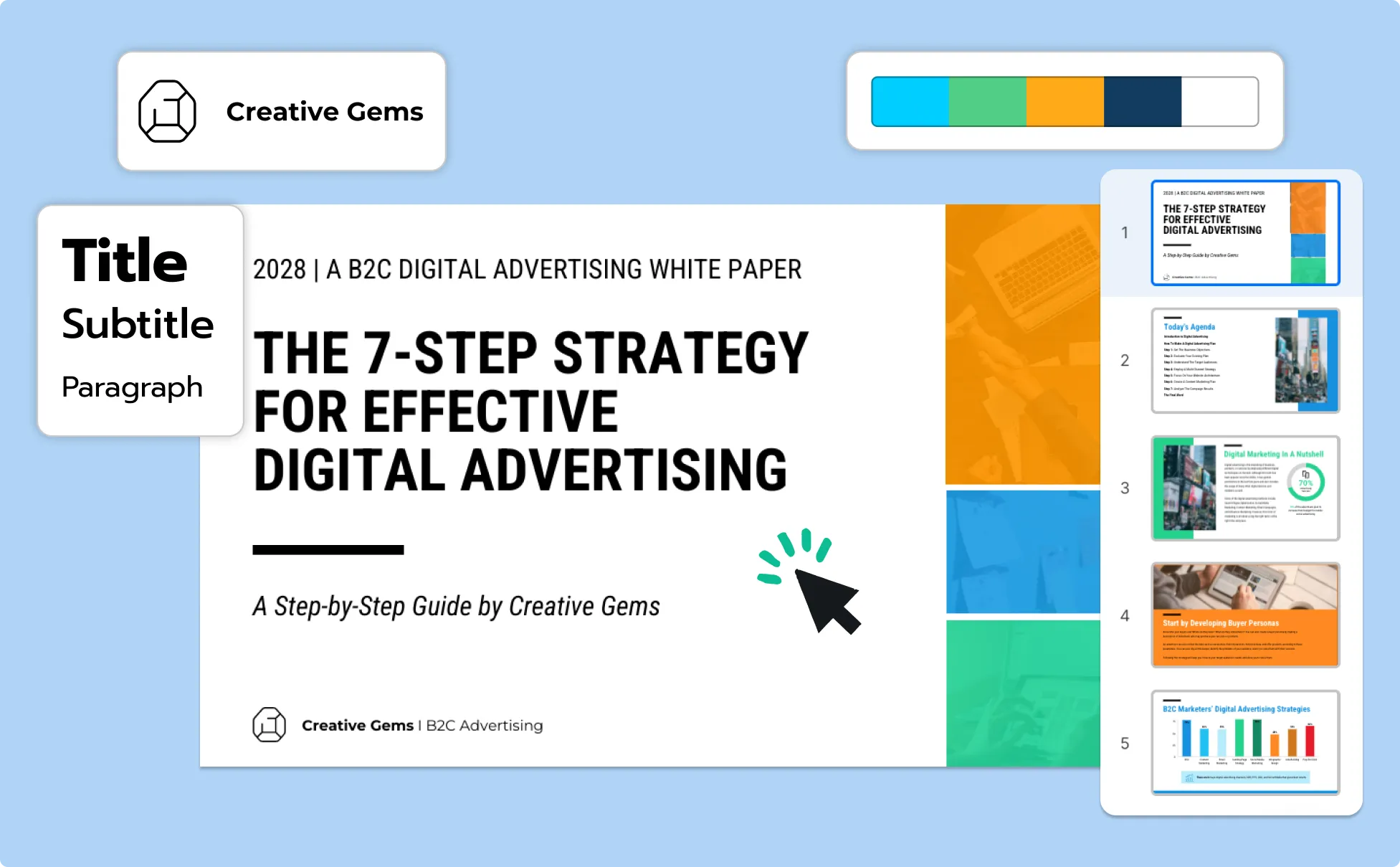

Visme’s AI presentation maker takes it up a notch. With accurate prompts, you can generate ready-to-use presentation slides in minutes. The tool not only gives you a creative head start but also provides design suggestions and customization options for you to choose from.

The possibilities are endless. All you have to do is converse with the Visme AI chatbot, provide detailed information about the content type, tone and style and watch the AI do the rest. The tool cleverly transforms your prompts into beautiful, multi-page presentations. That way, you can spend more time honing your pitch to perfection! It's like having your very own presentation wizard at your fingertips.

Visme isn’t just an AI presentation maker. It has other AI-powered tools like AI Document Generator, AI Report Writer, AI Business Plan Generator, AI Image Generator, AI Edit Tools and AI Text Generator. These tools can help you create stunning visual content in minutes and boost productivity.

Visme's drag-and-drop editor makes it easy for anyone with little to no design skills to create eye-catching pitch decks, slide decks and interactive presentations for sales, marketing, webinars and business.

Rich Library of Templates and Styles

The extensive template library in Visme means users will always have options to showcase their ideas. Visme has thousands of presentation templates for any purpose, use case, industry and more. Our professionally designed AI presentation templates ensure your slides have a polished and visually appealing look.

Array of Customization Options

The Visme editor is foolproof. This means anyone can customize their AI-generated presentation to fit their unique needs or tastes. The tool lets you customize every aspect of your presentation or project.

You can change the color theme of your presentation using the preset color themes or create your own color palette. It's also easy to modify the font type and color. You'll have access to 400+ font types to play with in Visme.

Extensive Library of Stock Photos / AI Image Generator

Not satisfied with the images in your presentation? We've got you covered.

Visme’s royalty-free graphic library includes thousands of free icons, illustrations, stock photos, videos, 3D graphics, audio clips, charts and graphs to include in your presentations.

Or you can simply generate new ones with the AI image generator. There's also the option to upload your own image and use Visme's AI image touchup tools to beautify it.

Audio and Voiceover

Visme offers dozens of free audio clips you can attach to individual slides or set as background music for your entire presentation. In addition, you can record a voiceover for your next presentation. The audio clip will be saved to your audio library for reuse in other presentations.

Data Visualization

If you need to visualize data, Visme has over 20 charts, graphs and maps to choose from. You’ll also find 30+ widgets, tables and diagrams to display data in a snackable format and complement your data visualization needs. Plus, you can modify the color, values, legends and text for all of your data visualizations.

The best part of using Visme is that it supports integration with lots of third-party tools. For more advanced customization, you can connect your charts to live data or import data from other tools into your charts.

Animations and Interactive Elements

Ditch boring presentations and enliven them with animations and interactive elements available in Visme's editor. Engage your audience and keep them immersed with interactive navigations, transitions, hover effects, pop-ups, embeddable content and more.

Animated 3D Graphics and Illustrations

Visme's animation software has over 450 3D animation graphics, illustrations and special effects. Not only are our 3D graphics incredibly versatile, but we guarantee they'll wow your audience and take your presentation to the next level.

Brand Kit

Keep your presentations consistent with your brand by creating a brand kit with Visme. The kit includes your logo, fonts, colors and other visual assets.

You can either upload these assets manually, use a brand guideline template or use an AI-powered brand wizard. Using the Brand Design Tool is super easy. Just input your website URL and the wizard will grab your logo, fonts and colors and add them to your brand kit.

Collaboration

Collaboration is seamless with Visme, no matter the size of your team. Your team members can edit your presentation, leave comments and draw annotations in real-time or asynchronously.

With the Workflow feature, you can streamline the review, collaboration and project sign-off processes. You can assign specific slides to different team members, set due dates and require approvals.

Presenter Studio

Visme’s Presenter Studio lets you easily record projects and presentations for your audience to view at their own pace. Take advantage of the Presenter Notes feature to stay on track and recall important points while delivering your presentation.

Download and Sharing Options

Once your presentation is ready, there are several ways to share it with your audience. You can generate a shareable online link or an embed code that can be placed on a website. Plus, you can download your slides in different formats, including:

- Image: JPG and PNG

- Document: PDF & PDF with Bleed Marks

- Video: MP4 and GIF

- Present Offline: PPTX and HTML5

- LMS Export Formats: SCORM and xAPI

Analytics

Track how your audience has engaged with your presentation with Visme analytics. This tool offers valuable insights into metrics like views, unique visits, average time spent, average completion and more.

Visme’s pricing plans include:

- Basic: Free

- Starter: $29.00/month

- Pro: $59/month

- Visme for Teams: Contact Sales

Pros

- Intuitive and easy-to-use editor

- Ability to chat with the AI chatbot, meaning users are not limited in terms of style, tone, language, or other elements.

- Full suite of AI-powered tools to help users streamline their content production.

- Multiple design and customizable options.

- Thousands of free icons, illustrations, stock photos, videos, 3D graphics, audio clips, charts and graphs to include in your presentations.

- Unlimited number of template styles to choose from

- A wide selection of data visualizations, including charts, graphs, maps and widgets

- Tools that enable teams of all sizes to collaborate effectively.

- A diverse collection of templates catering to different presentation needs and themes.

- Robust animation and interactive features

Cons

Because of the wide variety of features, it may take some time to learn all the available options.

2. Slidesgo

Slidesgo is a popular online platform with free PowerPoint and Google Slides templates to elevate your presentations. The tool offers an AI PowerPoint maker that helps you generate presentations in minutes. It uses artificial intelligence to analyze content and automatically generate visually appealing slides, complete with images, videos and other design elements.

Just type out your topic and choose your preferred style and tone. Then you can customize the template by choosing your own colors, adding and rearranging elements.

- Intuitive AI presentation maker that can generate presentations quickly.

- Easy Customization: Users can easily customize slides to suit their specific needs. They can change colors, fonts and images, as well as add or edit content to create unique presentations.

- Regular Updates: Slidesgo regularly updates its template library, ensuring users can access fresh and contemporary presentation designs.

- User-Friendly Interface: The platform features a user-friendly interface. Users can easily browse through templates, preview them and download the ones they like without any hassle.

- Compatibility: Templates provided by Slidesgo are compatible with popular presentation software, including Microsoft PowerPoint and Google Slides.

Slidesgo has two pricing plans:

- Premium: $5.99/month/user

- Education: $3.50/month/user

Pros

- AI presentation software saves time as users don't need to start from scratch.

- Supports more than 13 languages.

- Interface is easy to navigate and use.

Cons

- Number of free templates is limited.

- Limited customization of design elements and layout alterations.

- Prompts, writing tone and style are limited. Users cannot create prompts beyond the provided options or criteria.

- Some bugs and layout limitations

- Animation and interactive elements are limited.

- Pricing is based on the number of users.

3. Beautiful.ai

Beautiful.ai is an AI-powered presentation maker designed to simplify the process of creating visually appealing and engaging presentations.

The DesignerBot offers users an innovative platform that leverages artificial intelligence to automate design elements, allowing for stunning presentations without the need for extensive design skills.

- Automated Design: The AI PowerPoint generator automatically arranges text, images and data in an aesthetically pleasing manner, saving users time and effort in designing each slide.

- AI Writer: Beautiful.ai can summarize, expand or rewrite text in a different tone.

- Text-to-Image Generator: Users can generate an AI image with a detailed text prompt.

- Data Visualization: Beautiful.ai can transform raw data into interactive and visually appealing charts and graphs, simplifying complex information for easy understanding.

- Brand Customization: Users can customize AI-generated presentations to match their brand colors, fonts and logos, ensuring brand consistency across presentations.

- Collaboration: Beautiful.ai allows for real-time collaboration, enabling teams to work together on presentations, share feedback and make edits simultaneously.

- Intuitive Interface: The platform features a user-friendly interface with drag-and-drop functionality, making it accessible to users with varying technical expertise.

- Integrations: Supports integrations with Slack, Dropbox, PowerPoint and Monday.com.

Beautiful.ai offers three paid plans.

- Pro: $12/month

- Team: $50/month

- Enterprise: Contact Sales

Pros

- Rich media library containing millions of free stock photos, videos and icons.

- Supports real-time collaboration.

- Customize templates to align with users’ brand identity.

- Ability to add voice narration over slides.

Cons

- Doesn’t have a free plan.

- Steep learning curve.

- Limited design capabilities and interactive elements.

- Only supports integrations with a handful of third-party tools.

4. Storydoc

Storydoc is an innovative AI-powered presentation maker that transforms the way presentations are created and delivered. It combines the power of artificial intelligence with storytelling techniques, enabling users to craft compelling narratives and visually engaging presentations seamlessly.

- Professional Templates: Access pre-made templates for the most popular use cases.

- AI-Driven Design: The platform uses AI algorithms to automate presentation creation. It structures and writes your content, assigns a design template and optimizes performance.

- AI Writing Assistant: Generate any text, rephrase to perfection and brainstorm ideas.

- AI Visual Assistant: Instantly generate any image you can imagine directly in your slides.

- Automatic Analytics Insights: Get full visibility into how people engage with your slide deck.

- Integrations: Connect your favorite tools to improve business processes.

Storydoc’s pricing plans include:

- Starter: $40/month/user

- Pro: $60/month/user

- Supports integrations with a vast number of third-party software.

- AI-powered design results in professional-looking presentations—great for users without graphic design skills.

- Workflow is simple and intuitive.

- Modern user interface.

- Limited number of templates.

- Rigid platform.

- Limited options to tailor templates, fonts and colors to match brand identity and create a unique visual style.

- Pricing is on the high side.

- Presentations cannot be embedded via an iframe or embed code.

5. Canva

Canva is a popular online design platform that offers a user-friendly AI-powered presentation maker. With the Magic Design for Presentations, you can generate a first draft of your presentation instantly.

Simply input your text and watch as it transforms into a well-organized outline, vibrant slides and compelling content.

- Template Library: Canva offers a vast collection of professionally designed templates specifically tailored for presentations. Users can choose from various themes, styles and layouts.

- Library of Assets: Get access to a library of millions of photos, icons, graphics, media elements and more.

- Drag-and-Drop Interface: The intuitive drag-and-drop interface makes it easy to add text, images, video and other elements to slides.

- Real-time Collaboration: Ability to collaborate and get team members on the same page at the same time.

- Brand Kit: Users can store their logo, brand colors and fonts to maintain brand consistency.

- Magic Write: Easily generate, summarize, expand and re-write text.

- AI Photo Editing: Quickly erase, add to, edit and enhance your photos using AI-powered photo editing tools.

Let’s take a look at Canva’s pricing plans:

- Canva Free: $0/month

- Canva Pro: $14.99/month

- Canva for Teams: $29.99/month

- Intuitive and user-friendly interface.

- Supports collaboration across teams of all sizes.

- Offers a diverse range of templates suitable for different industries.

- Offers an AI writing tool and an AI photo editing tool.

- Free plan only provides basic features and some advanced elements and templates are behind a paywall.

- Users might need some time to explore all the features, especially the advanced customization options.

6. Designs.AI

Designs.AI offers a cutting-edge AI presentation maker designed to simplify the process of creating visually appealing and impactful presentations. This all-in-one platform provides a range of powerful features to enhance your creative projects.

With AI writing assistance, a logo maker, a video presentation creator and natural-sounding AI voiceovers, it's your go-to toolkit for creating compelling content.

- Smart Templates: Designs.AI boasts a wide selection of smart templates tailored for various presentation needs. These templates adapt to the content, ensuring consistent and polished designs across slides.

- Branding Kit: Users can apply consistent branding to all their presentations.

- AI Writer: Create powerful, compelling marketing copy using AI to boost engagement and sales.

- Designmaker: Users can automatically generate thousands of design variations for creative projects.

- Speechmaker: Convert text into realistic voiceovers and add them to your presentation.

- Automated Design Elements: The AI algorithms automatically handle design elements, such as layout, color schemes and typography, streamlining the creation process and maintaining a cohesive visual style.

- Millions of Design Assets: Rich library of stock images, graphics, shapes, frames and stickers.

- Content Enhancement: Designs.AI provides premade color palettes, font pairs and illustrations, helping users refine their designs for clarity and impact.

Designs.AI has three pricing plans:

- Basic: $29/month

- Pro: $69/month

- Enterprise: $199/month

- Simple and user-friendly design interface.

- Offers a wide array of additional tools.

- No free plan.

- While automation is a strength, users might find limitations in terms of highly specialized or unique design needs.

- Doesn’t provide robust analytics or statistics for projects.

- Lacks data visualization capabilities.

- Users might require time to familiarize themselves with the platform's features.

7. Simplified

Simplified offers an advanced AI presentation maker that revolutionizes the way presentations are created. Its text-to-presentation AI is crafted to simplify the creative process for users of all skill levels.

With the power of artificial intelligence, Simplified streamlines your design, enhances your creativity and improves your presentation quality.

- Premade Templates: Simplified offers a vast selection of smart templates designed for various uses.

- AI Design Assistance: The platform offers AI-driven design suggestions, helping users create polished and professional slides effortlessly. From layout to color schemes, AI ensures a consistent and visually appealing design.

- Collaborative Tools: The tool offers real-time collaboration features that improve teamwork and productivity.

- AI-Powered Tools: Simplified provides a wide range of AI-powered tools like AI writer, AI image generator, magic resizer and so on.

- Design Customization Tool: An intuitive drag-and-drop interface lets users easily customize their design. Users can easily add text, images, videos and other elements to their presentation.

- Brand Kit: Users can create custom brand kits for their projects and business branding.

Simplified offers three pricing plans for its AI-Powered Graphic Design Tools, Stock Photos & Templates:

- Free Forever

- Pro: $9/month

- Business: $15/month

- Interface is easily navigable.

- Wide range of smart AI-powered tools.

- Rich library of stock images, icons and other visual assets.

- Turn presentations into a video project.

- Offers multiple export formats (PNG, JPG, PDF and SVG).

- Customization features are basic and limited.

- Limited number of predesigned templates.

- Basic animation features.

- Limited data visualization tools.

8. Tome

Tome is an AI-powered presentation maker that helps users create professional and engaging presentations without any design skills. It uses artificial intelligence to analyze content and automatically generate visually appealing slides, complete with images and animations.

Users can simply input their text and data, select a template or theme and let Tome do the rest.

- Real-Time Collaboration: Tome’s AI presentation maker allows for collaboration and real-time feedback, making it ideal for teams and businesses.

- Third-Party Integration: Tome supports integration with various third-party tools such as Typeform, Google Sheets, Figma, Miro and others.

- AI-powered Designs: Automated slide creation using AI technology.

- Turn Documents into Presentations: Paste documents into Tome and convert them into structured narratives in a single click.

- AI Image Generator: Generate unique and professional images from text prompts.

- Robust Library of Brand Assets: Search Tome’s image libraries or upload your own.

- AI Writer: Fine-tune your copy by using AI to rewrite text, adjust tone and reduce or extend the length.

- Free Forever: $0

- Pro: $8/month/user

- Enterprise: Contact Sales

- Easy to use, even for those with no design experience.

- Saves time by automating the design process.

- Produces high-quality, visually appealing presentations.

- Collaboration tools make it ideal for teams.

- Provides tools for incorporating animations, videos, graphs and more.

- Limited customization options compared to other presentation software.

- Occasional glitches or errors in AI-generated slides.

- Limited control over design elements.

- Not suitable for complex, data-heavy presentations.

9. Sendsteps

Sendsteps offers an innovative AI presentation maker that enables users to create engaging and impactful presentations in minutes. What makes Sendsteps stand out is its ability to facilitate real audience participation and engagement.

- AI-Generated Presentation Design: Users can describe the topic or upload a document and then the AI tool will generate interactivity, design and content.

- AI Content Creator: You can easily generate compelling text, visually stunning word clouds, or interactive quiz questions to boost audience engagement.

- Multi-Language Support: Create presentations in 86 languages, including English, Spanish, Dutch, Portuguese, Italian and French.

- Interactive Quizzes: Sendsteps supports interactive quizzes, enabling presenters to create engaging quizzes to test audience knowledge and enhance participation.

- Live Polls and Surveys: Sendsteps enables presenters to conduct live polls and surveys during presentations, allowing audience members to participate and provide instant feedback.

- Audience Feedback: Sendsteps' AI analyzes audience responses, providing valuable insights into audience sentiment and engagement levels.

Sendsteps’ pricing tiers include:

- Free: $0.00/month

- Starter: $13.99/month

- Professional: $33.99/month

- Easy-to-use interface, even for those with no design experience.

- Offers a wide range of customizable templates and themes.

- Provides a comprehensive suite of real-time audience engagement tools, including live polls, quizzes and surveys.

- Support for multiple languages makes it accessible to a global audience.

- Limited control over design elements compared to traditional presentation software.

- Not suitable for highly complex or data-intensive presentations.

- Limited design assets and customization options.

- Occasional glitches or errors in the AI-generated slides.

- Limited integration with other apps and services compared to some other presentation software.

10. Decktopus

Decktopus is an AI-driven presentation maker that aims to simplify the process of creating visually appealing and professional presentations. It combines intuitive design elements with artificial intelligence technology to help users craft engaging presentations with ease.

- AI Content Suggestions: The platform provides AI-driven content suggestions, including target audience, purpose, text and visuals. This helps users refine their message and enhance the overall quality of their presentations.

- Smart Templates: Decktopus offers a range of smart templates that automatically adjust based on the content, ensuring consistency and aesthetics throughout the presentation.

- Brand Customization: Users can easily customize and personalize the AI-generated content, slide layouts, colors, fonts and graphics to align with their brand identity and presentation style.

- Ease of Use: The user-friendly interface and drag-and-drop functionality make it easy for users, even those without design skills, to create professional-looking presentations.

- Collaboration: Decktopus enables collaboration, allowing multiple users to work on the same presentation simultaneously.

- Pro AI: $14.99/month

- Business AI: $48/user/month

- Features an intuitive interface.

- AI-driven content suggestions and smart templates save time and effort.

- Supports collaboration with an unlimited number of team members.

- Rich library of design assets, including images, icons and graphics.

- Supports integrations with multiple third-party software.

- Design customization options are basic.

- Customization options are totally controlled by AI.

- Doesn’t have a brand kit.

- No option to import PowerPoint presentations.

- Users cannot export in HTML.

11. Gamma

Gamma AI is an advanced presentation maker that integrates artificial intelligence technology to streamline the presentation creation process. It is designed to help users create visually stunning and engaging presentations.

This makes it an ideal choice for businesses, educators and professionals seeking an efficient and innovative presentation solution.

- Smart Templates: Gamma AI offers a wide array of smart templates professionally designed for stunning presentations.

- Brand Customization: Users can customize templates, fonts, colors and layouts to match their branding.

- Export Capabilities: Ability to export presentations in PDF and PPT formats.

- Analytics: Users can measure audience engagement with built-in analytics.

- AI-driven Content Suggestions: This feature helps users refine their message and enhance their content.

- Real-time Collaboration: Multiple users can work on the same presentation simultaneously, share feedback and make edits in a collaborative environment.

Gamma’s pricing plans are as follows:

- Free: $0/user/month

- Plus: $10/user/month

- Pro: $20/user/month

- Presentations have a polished and professional look.

- User-friendly interface makes the tool accessible to users with varying levels of technical expertise.

- Limited export options.

- Free plan shows limited change history.

- Limited capability for highly specific or intricate design customization.

12. Plus AI

Plus is an innovative AI presentation maker that integrates artificial intelligence to simplify the presentation creation process. The tool makes it easy for individuals or businesses to generate AI presentations or edit slides with AI.

What makes Plus special is its seamless integration with Google Slides and PowerPoint.

- AI Design Assistance: The platform employs AI algorithms to provide content outlines and design suggestions and generate slides from text prompts.

- Presentation Editing with AI: Users can insert slides, remix layouts or create new formats using AI text prompts.

- AI Writing and Rewriting Tool: You can modify the language and grammar, change the tone, lengthen, summarize or translate your text with Plus.

- Custom Presentation Themes: Easily generate custom presentations and add your logo, custom fonts and colors to match your brand or ask AI to do it for you.

- Multi-language Support: Plus AI can generate, edit and translate Google Slides presentations in Spanish, French, German, Portuguese, Italian and nearly any other language.

Below are the plan options in Plus AI:

- Free: Free forever

- Slides: $15/user/month

- Pro: $25/user/month

- Enterprise: Contact sales for pricing

- Offers multi-language support.

- Real-time collaboration features available in Google Slides.

- Super easy to use, so users are sure to have a smooth and hassle-free experience.

- Supports integrations with a vast number of third-party tools like Slack, Notion, Confluence, Coda, Canva, etc.

- Offers more than 100,000 character prompts.

- Ability to automate workflows for future projects.

- Functions as a Google Slides add-on, so you need a Google account to use it.

- Limited number of layouts and themes.

- Design customization options in Plus are limited.

- Lacks detailed analytics for tracking audience engagement.

13. Appy Pie

Appy Pie's AI presentation maker is a user-friendly and innovative tool designed to simplify the process of creating engaging presentations.

By leveraging artificial intelligence technology, the platform helps users craft visually appealing slideshows without requiring extensive design skills.

- User-Friendly Interface: Appy Pie Design offers an intuitive and user-friendly interface that makes it easy for anyone, regardless of their design expertise, to create presentations.

- AI-Powered Features: The tool leverages the power of artificial intelligence (AI) to streamline the presentation creation process. It also offers AI-generated suggestions for text, images and design elements.

- Extensive Template Collection: Appy Pie’s AI-powered presentation template collection offers a vast and diverse range of beautifully crafted designs, catering to every occasion and celebration.

- Customization Options: Users can customize every aspect of their presentation, including layouts, text, fonts, colors and images.

- Extensive Image Library: Access a vast library of AI-generated images, illustrations, icons and backgrounds.

- Custom Design: Appy Pie gives users plenty of options to enhance their presentations visually. You can also upload your visual to add a personal touch.

Appy Pie Design starts at $8/month. Additional features incur extra usage costs.

- User-friendly interface.

- Offers a wide array of AI-powered design and productivity tools.

- Supports integrations with a wide range of third-party tools.

- Rich library of templates.

- Real-time collaboration features are limited.

- Customization options are basic.

- Doesn’t offer a brand kit.

14. Wonderslide

Wonderslide is another AI-powered tool that helps you create presentations blazingly fast. Upload your draft slides, select your colors, logo, themed images and fonts, and the AI will handle the rest.

- Customization and Branding: Users can choose a color, font and style consistent with their company’s brand book.

- AI-Powered Design: The platform provides AI-driven design suggestions.

- Free Start: $0.00/month

- Pay As You Go: $4.99 per one-time use.

- Monthly: $9.99/month

- Yearly: $69.00/year

- Enterprise plan: Book a demo call

- AI designer works with PowerPoint and Google Slides files.

- Brand and customization options are limited.

- Lacks collaborative design features.

- Designs look amateurish.

- Cannot create presentations from scratch; a draft presentation is required.

- Lacks animations and interactive elements.

- Limited number of design assets, illustrations, icons and graphics.

15. SlidesAI

SlidesAI is an innovative AI-powered presentation add-on tool that simplifies the process of creating visually appealing and compelling presentations in Google Slides. Anyone, regardless of their design ability, can create presentation slides with AI in seconds.

- AI-Powered Design Assistance: The platform provides AI-driven design suggestions, including images, layout, color schemes and typography.

- Magic Write: The AI-powered tool lets users generate high-quality outlines and text or rewrite existing content.

- Integration: The tool supports easy integration with Google Slides.

- Basic: $0.00/month

- Pro: $10.75/month

- Premium: $21.50/month

- Team: Pricing varies based on the number of team members

- Institution: Contact Sales

- Supports 100+ languages.

- Clean user interface.

- No technical expertise is required to use the app.

- The platform is rigid; you must install the tool as a Google Slides extension.

- Limited download and export options.

- Template library is limited.

- Some users report technical glitches while using the tool.

- Overall presentation designs aren’t impressive.

Visme is not just the best presentation maker. It also offers a wide range of AI-powered tools to get your creative juices flowing and boost productivity, including:

- AI Document Generator

- AI Report Writer

- AI Business Plan Generator

- AI Image Generator

- AI Edit Tools

- AI Text Generator

- Brand Wizard

Visme’s AI Document Generator streamlines the process of creating documents like business proposals, newsletters, reports, ebooks, whitepapers, case studies and plans. The tool ignites your creative spark as you generate your first draft and produce incredible documents that will wow your audience.

By using Visme, you get more than the AI document generator. With the AI business plan generator, you can produce investment-ready business plans in seconds. Visme’s AI report writer automates the process of writing and designing reports. It allows you to focus on analyzing data and deriving meaningful insights rather than getting bogged down in the intricacies of report creation.

Visme AI Edit Tools

With the Visme AI TouchUp tools, you can modify the appearance of any image. Users can easily erase and replace unwanted objects in their images, remove backgrounds and unblur low-quality, smudged or motion-blurred images.

The tool also lets you enlarge your images without losing visual quality.

Visme AI Text Generator

Whether you need creative content, professional articles, marketing copy, or even academic papers, Visme’s AI text generator can assist you in producing high-quality, tailored text.

The tool is also handy for editing tasks. You can edit, proofread, lengthen, summarize and switch tones for your text.

Visme’s AI Image Generators

We’ve reviewed the 11 Best AI Image Generators in 2023 [Free & Paid]. Here’s what we found: Visme stands out as one of the best you can find on the market. The design possibilities are limitless. Users can select from several creative outputs, including photos, paintings, pencil drawings, 3D graphics, icons and abstract art.

The AI-powered Brand Wizard helps keep your design on brand. The wizard generates your brand's fonts and styles across beautiful templates. Simply input your website URL, confirm your brand colors and fonts, choose the branded template theme you like the most and watch the magic happen.

Easily Tap into the Power of AI with Visme

And there you have it—our comprehensive review of the finest AI presentation makers in 2024.

But hey, if you're on the hunt for an exceptional AI presentation maker that not only meets but exceeds your expectations, look no further than Visme! We highly recommend giving it a try.

Our review clearly shows that Visme offers an unparalleled array of features. Not only can you create captivating presentations with ease, but you also gain access to a treasure trove of content authoring and visual design tools. Plus, the added perks of Visme's AI-powered solutions take your creativity to a whole new level.

But that's not all! By choosing Visme, you unlock a world of possibilities. Explore an extensive library of professionally designed templates for presentations, infographics, reports, plans, social media graphics and other assets. Collaborate seamlessly with your team, add animations and interactive elements and choose from our vast library of icons, stock photos and videos to make your content exceptional.

Ready to get started? Sign up now and let Visme's AI presentation maker transform your ideas into captivating visual stories that dazzle your audience.


AI Presentation Maker

Effortlessly create stunning presentations with our free AI presentation maker, designed to save you time and inspire your audience.


Create impressive presentations with AI in minutes.

Tired of spending hours crafting presentations? Say hello to Fliki AI Presentation Maker, your ultimate solution for creating professional presentations in no time.

Our AI PowerPoint generator empowers you to input your presentation idea and let AI do the heavy lifting. With AI-generated templates, premium stock media, and advanced features, you can transform your ideas into captivating presentations that leave a lasting impression.

Whether you're crafting pitch decks, educational presentations, marketing slideshows, or anything in between, our AI PPT generator is your go-to solution for captivating your audience and conveying your message effectively.

How to create a presentation in 3 simple steps

Write your presentation topic.

Begin by entering your presentation idea and selecting your preferred visual type: stock media or AI-generated media.

Customize your presentation

Personalize your presentation with different elements such as shapes, text, images, and media layers.

Download your presentation

Once your presentation is perfected, download it in PPTX format.

Loved by content creators around the world

- 5,000,000+ happy content creators, marketers, & educators.

- Average satisfaction rating from 5,500+ reviews on G2, Capterra, Trustpilot & more.

- $95+ million and 1,750,000+ hours saved in content creation so far.

Nicolai Grut

Digital Product Manager

Excellent Neural Voices + Super Fast App

I love how clean and fast the interface is, using Fliki is fast and snappy and the content is "rendered" incredibly quickly.

Lisa Batitto

Public Relations Professional

Hoping for something like this!

I'm having a great experience with Fliki so I was excited about this deal. My first project is turning my blog posts into videos, and posting on YouTube/TikTok.

Frequently asked questions

Yes, Fliki offers a free tier that allows users to explore text to voice and text to video features without any cost.

You can generate 5 minutes of free audio and video content per month. However, certain advanced features and premium AI capabilities may require a paid subscription.

Fliki stands out from other tools because we combine text to video AI and text to speech AI capabilities to give you an all-in-one platform for your content creation needs.

Fliki helps you create visually captivating videos with professional-grade voiceovers, all in one place. In addition, we take pride in our exceptional AI Voices and Voice Clones known for their superior quality.

Fliki supports over 75 languages in over 100 dialects.

The AI speech generator offers 1300+ ultra-realistic voices, ensuring that you can create videos with voiceovers in your desired language with ease.

No, our text-to-video tool is fully web-based. You only need a device with internet access and a browser, preferably Google Chrome, to create, edit, and publish your videos.

An AI-generated presentation is created using artificial intelligence technology. It analyzes user input to generate engaging content, opening up exciting possibilities for various fields like business, education, and digital marketing.

Yes, our AI Presentation Maker provides customization options. You can make changes to colors, include brand assets, and more using our intuitive online editor.

Yes, our AI Presentation Maker is completely free to use. Create stunning presentations without any cost or subscription fees.

Once your presentation is ready, simply navigate to the download options. You can choose to download it in various formats such as PPTX or PDF directly from the platform. Additionally, if you prefer to have each slide as an individual image, you can download a zip file containing JPG, PNG, or WebP images of each slide.

Fliki supports voice cloning, allowing you to replicate your own voice or create unique voices for different characters. This feature saves time on recording and adds authenticity to your content.

It also opens up creative possibilities and assists individuals with speech impairments. With Fliki, you can personalize your content, enhance creativity, and overcome limitations with ease.

No, prior experience as a designer or video editor is not required to use Fliki. Our intuitive and user-friendly platform offers capabilities that make it super easy for anyone to create content.

Our Voice Cloning AI, Text to Speech AI, and Text to Video AI, combined with our ready-to-use templates and 10 million+ rich stock media, allow you to create high-quality videos without any design or video editing expertise.

You can cancel your subscription at any time by navigating to Account and selecting "Manage billing".

Prices are listed in USD. We accept all major debit and credit cards along with GPay, Apple Pay and local payment wallets in supported countries.

Fliki operates on a subscription system with flexible pricing tiers. Users can access the platform for free or upgrade to a premium plan for advanced features.

The paid subscription includes benefits like ultra realistic AI voices, extended video durations, commercial usage rights, watermark removal, and priority customer support.

Payments can be made through the secure payment gateway provided.

Check out our pricing page for more information.

Stop wasting time, effort and money creating videos


No technical skills or software download required.


10 Best AI Tools for Presentations in 2024

Senior Content Marketing Manager

May 11, 2024

Presentations are a powerful way to share information, but building your slide deck is often time-consuming.

Artificial intelligence (AI) is revolutionizing how people put professional and engaging presentations together, allowing you to create polished presentations in seconds. With AI, say goodbye to tediously moving elements around on a slide and say hello to getting time back in your schedule to work on the stuff that matters most.

Ready to harness this presentation superpower? Learn more about AI presentation tools, what to look for, and some of the best AI presentation makers available in 2024.


What are AI Tools for Presentations?

Various software and platforms use AI to help users create, enhance, and deliver visually appealing slides. These tools can assist with all aspects of the presentation creation process, from the initial creation to supercharging engagement.

Depending on the specific AI presentation tool, it can help you with tasks such as:

- Designing stunning presentations: AI can understand and follow the rules for good design, enabling it to craft professional-looking slide decks that are visually appealing and relevant to your content

- Creating content: Tell the AI writing tool what you want to write about, and it will generate content for each slide and even create your speech notes

- Building visuals for data: Give the platform your data, and it can create easy-to-read visualizations of your information ready to add to your slide deck

AI presentation makers help you produce engaging content faster and connect with your audience more effectively. But with so many AI presentation makers available today, you’ll want to try a few options and determine which suits your workflow and style best.

When looking for an AI presentation maker, you’ll want to find something that feels intuitive and has a user-friendly interface. You don’t want to spend hours learning how to use a new piece of software, and you shouldn’t need extensive technical expertise to put these tools to work.

You’ll also want to ensure that the AI tool you choose integrates well with the rest of your tech stack. A stunning presentation is no good if you can’t get it to work with your preferred software, such as Google Slides or Microsoft PowerPoint.

You should also look for AI-powered tools that offer the functionality you need. For example, maybe you love designing slides but aren’t strong at crafting engaging copy. In that case, look for tools that focus on content generation so you can still flex your design skills.

Or, you may want an AI presentation generator that does everything, from designing graphics to writing copy. These are often paid tools, but you can use free trials to determine your best options for creating presentations.

10 Best AI Presentation Tools in 2024

1. Beautiful.ai

Beautiful.ai is an innovative AI presentation maker that aims to revolutionize how you create engaging presentations. The platform makes smart design recommendations through its intuitive interface and streamlines the creation process. You focus on your content while Beautiful.ai manages the aesthetics. It also offers customizable templates, smart charts for data visualization, and analytics that track which slides get the most engagement from your audience.

Beautiful.ai best features

- Insightful analytics give you information on which slides in your presentation are making the most significant impact in a professional or academic setting

- Secure sharing helps protect sensitive information while making it easy to access for key stakeholders

- Seamless integration allows you to design slides in Beautiful.ai and edit them in PowerPoint

Beautiful.ai limitations

- While the templates offer beautiful designs, some users complain that there aren’t enough options compared with other platforms. Some users may want more control over their final design than the platform allows

Beautiful.ai pricing

- Pro for individuals: $12/mo

- Team for team collaboration: $40/mo

- Enterprise for advanced security and support: Contact sales for a custom plan

Beautiful.ai ratings and reviews

- G2: 4.7/5 (160+ reviews)

- Capterra: 4.6/5 (70+ reviews)

2. Simplified’s AI Presentation Maker

Simplified’s AI Presentation Maker promises to help you make on-brand presentations effortlessly. The platform handles image and content creation for any topic, and you can customize the results to suit your needs. All you have to do is tell the AI what topic you want to present, and it will generate the presentation in a few seconds.

Simplified best features

- A range of pre-designed templates and a vast visual library, even for users on the free plan

- Integrations with popular platforms, including Google Drive and Shopify

- Easy real-time collaboration on presentations

Simplified limitations

- The software can quickly become expensive, especially compared with similar services. It may be too costly for small organizations

Simplified pricing

- Design Free: $0 for one seat

- Design Pro: $6/month for one seat

- Design Business: $10/month for five seats

- Enterprise: Contact for pricing

Simplified ratings and reviews

- G2: 4.6/5 (1800+ reviews)

- Capterra: 4.7/5 (160+ reviews)

3. Slidebean

Slidebean is an AI presentation tool that focuses on helping founders and startups create their pitch decks. It helps simplify the pitching process and ensures you have the right pitch for the right stage of company development. Whether you need an initial pitch deck, a marketing presentation, or a sales deck, Slidebean has solutions to suit your needs and help you get a yes.

Slidebean best features

- Online sharing keeps your deck (and ideas!) secure while putting the right information in front of the right investors

- Analytics track the activity of every slide in your deck so you know who viewed it, how many times, and when

- Easy collaboration options help you get the whole team in on the action

Slidebean limitations

- The user interface can be clunky, and some users may find editing AI-generated graphics and images difficult

Slidebean pricing

- All Access: $199/year

- Accelerate: $499/year

Slidebean ratings and reviews

- G2: 4.5/5 (20+ reviews)

- Capterra: 4.2/5 (50+ reviews)

4. Designs.ai

Designs.ai offers a full suite of AI-powered creation tools, including AI writing assistance, a logo maker, a video presentation maker, and natural-sounding AI voiceovers.

Those looking for help designing presentations will love the platform’s Designmaker, which creates visual content for you in seconds. Choose from a vast library of presentation templates, then give the platform your content and let it take care of the rest.

Designs.ai best features

- The huge library of templates and design elements enables you to customize your AI presentations to suit your needs

- One-click resize feature allows you to optimize your presentation for Instagram, mobile, projector screens, and other devices

- Intuitive design editor means that you don’t have to be a master of graphic design to create a gorgeous deck

Designs.ai limitations

- Exporting files can be slow and cumbersome

Designs.ai pricing

- Basic: $19/month

- Enterprise: Call for a custom quote

Designs.ai ratings and reviews

- G2: 4.3/5 (6+ reviews)

- Capterra: 4.5/5 (2+ reviews)

5. Pitch

Pitch helps you create sleek presentations in seconds. Pick a template and add your content, and you’ll soon have a beautiful deck ready to share. You can share a link to your deck, present it live, or embed it on the web so viewers can access it anytime. The platform also has robust integration capabilities, connecting with tech-stack favorites like Slack, Vimeo, and YouTube.

Pitch best features

- Great integration capabilities make it easy to add content to your deck and share it with others

- Analytics help you track what’s working in your deck and see what you need to tweak

- Great presentation tools make it simple to share, record, and present your deck

Pitch limitations

- While the AI-generated slides are aesthetically pleasing, slide editing features can be clunky

Pitch pricing

- Starter: $0, free forever

- Pro: $8 per member per month

- Enterprise: Contact for a custom quote

Pitch ratings and reviews

- G2: 4.4/5 (40+ reviews)

- Capterra: 4.9/5 (30+ reviews)

6. Presentations.ai

Presentations.ai aims to help you reduce presentation creation time while improving your overall presentation design quality. The platform uses AI technology to make creation effortless and offers many personalized design options. You can then share, track, and analyze your presentations to see how they perform with your audience.

Presentations.ai best features

- Create presentations in seconds from a single prompt

- Get the perfect final look with various presentation tools and customization options

- Great branding options so you have a consistent, polished look for everything you create

Presentations.ai limitations

- The platform focuses heavily on pitch decks and marketing presentations, so the templates may be frustrating for users looking to create educational or informative presentations. Some templates and slides are behind a paywall, limiting options for free users

Presentations.ai pricing

- Starter: $0 (beta pricing)

- Pro: $396 (beta pricing)

Presentations.ai ratings and reviews

- Not available

7. Gamma

Gamma uses natural language processing to help users create dynamic decks. Start writing your content, then use the built-in AI chatbot to change the look of your deck. When you’re happy with the deck, enter present mode and show your work off in a live presentation or send it as a webpage for users to view independently. It’s a flexible, user-friendly platform that helps you engage your audience.

Gamma best features

- Change up the look of your slide deck at any time with the help of the AI-assisted presentation tool

- Embed GIFs, charts, videos, and even websites to bring presentations to life

- Receive instantaneous feedback on your presentations from viewers with quick reactions

Gamma limitations

- Templates offer significant color customization, but there is little variation in layout and design

Gamma pricing

- Starter: Free

- Pro: Coming soon

Gamma ratings and reviews

- G2: 4/5 (6+ reviews)

- Capterra: 4/5 (1+ reviews)

8. Kroma.ai

Kroma.ai helps you create beautiful decks, whether you’re presenting to potential investors or just need to share your ideas and data.

Simply pick your template, choose your colors, and add your logo. Then, use the grab-and-go content written by industry experts to take your presentation to the next level and make it more persuasive and engaging.

Kroma.ai best features

- Choose content from industry experts to boost your professional presentation

- Make your numbers stand out with robust data visualization tools

- Create stunning presentations with expertly designed templates that ensure that you have the right format for the job

Kroma.ai limitations

- Some parts of the user interface aren’t hugely intuitive

- Some users may not care about the expert-written content, which is one of the platform’s big selling points

Kroma.ai pricing

- Explorer: Free

- Premium: $49.99 per user per month

- Enterprise: $1,699 per year

Kroma.ai ratings and reviews

9. Tome

Tome wants to be your go-to AI presentation tool. Tell the platform what you want to do with a simple prompt, and it will generate images, copy, and slides to help you achieve your goal.

You can tweak every aspect of the design, asking the AI tool to change the tone or generate a new AI image in a different style. It’s also great for turning boring documents into dynamic presentations.

Tome best features

- It helps you find and cite references that support your claims

- Easy language translation lets you share your presentations across the world

- Excellent customizable templates allow you to create pitch decks, marketing presentations, and educational presentations

Tome limitations

- Some users are encountering issues with the platform, though this could be because the tool is still in development

Tome pricing

- Free Forever

- Pro: $8 per person per month

- Enterprise: Contact for custom pricing

Tome ratings and reviews

- G2: 4.8/5 (20+ reviews)

10. DeckRobot

DeckRobot is a handy plug-in that turns your drafts into polished presentation decks in seconds. It even ensures that your final deck complies with your corporate branding guidelines so you maintain consistent branding in everything you create. It’s a great way to reduce manual design time, and all your data stays securely on your servers.

DeckRobot best features

- Plug-in for PowerPoint presentations takes the first draft of your presentation and turns it into a polished final deck

- Your data stays secure because all information stays on your server

- It allows you to redesign with preset corporate branding in one click

DeckRobot limitations

- This plug-in is for PowerPoint, so if you’re using another platform, you’ll need to opt for another AI solution

DeckRobot pricing

- Call for custom pricing

DeckRobot ratings and reviews

Other AI Tools for Slide Creation

Are you looking for more tools to help you harness the power of AI? If so, discover the game-changing power of ClickUp Brain. ClickUp Brain integrates seamlessly with the rest of ClickUp’s project-management platform, which means you can use the AI with your ClickUp Docs to take your content creation to the next level.

ClickUp’s AI technology enhances your written copy with grammar and spell-checks plus custom suggestions tailored to your role and writing style. Use the AI Writer for Work not only to change the tone, language, or audience for your content but also to produce on-point new copy in just a few clicks. The AI writer will also help make your copy more engaging or simplify complex concepts.

ClickUp’s AI writing tools can create emails, draft blog posts, and summarize long documents in a few clicks. They can also generate action items and recaps from meeting notes, saving you from tedious admin tasks. There are prompts for every department, helping everyone in your company work smarter.

If you need help creating an excellent presentation, use the ClickUp Presentation Template as a launching point. You can use the integrated AI tools to make your content, then collaborate with the rest of your team on polishing the final deck with the innovative collaboration tools. With ClickUp’s robust integration options, you can easily pull content into your presentation and share it with stakeholders when it’s complete.

ClickUp Brain revolutionizes how you write, manage tasks, and create content. Sign up for your free account today, and start using ClickUp’s innovative AI tools to get more done!
