ChatGPT isn’t the death of homework – just an opportunity for schools to do things differently

Professor of IT Ethics and Digital Rights, Bournemouth University

Disclosure statement

Andy Phippen is a trustee of SWGfL

Bournemouth University provides funding as a member of The Conversation UK.


ChatGPT, the artificial intelligence (AI) platform launched by research company OpenAI, can write an essay in response to a short prompt. It can solve mathematical equations – and show its working.

ChatGPT is a generative AI system: an algorithm that can generate new content from existing bodies of documents, images or audio when prompted with a description or question. It’s unsurprising, then, that concerns have emerged about young people using ChatGPT and similar technology as a shortcut when doing their homework.

But banning students from using ChatGPT, or expecting teachers to scour homework for its use, would be shortsighted. Education has adapted to – and embraced – online technology for decades. The approach to generative AI should be no different.

The UK government has launched a consultation on the use of generative AI in education, following the publication of initial guidance on how schools might make best use of this technology.

In general, the advice is progressive and acknowledges the potential benefits of using these tools. It suggests that AI tools may have value in reducing teacher workload when producing teaching resources, marking, and in administrative tasks. But the guidance also states:

Schools and colleges may wish to review homework policies, to consider the approach to homework and other forms of unsupervised study as necessary to account for the availability of generative AI.

While little practical advice is offered on how to do this, the suggestion is that schools and colleges should consider the potential for cheating when students are using these tools.

Nothing new

Past research on student cheating suggested that students’ techniques were sophisticated and that they felt remorseful only if caught. They cheated because it was easy, especially with new online technologies.

But this research wasn’t investigating students’ use of ChatGPT or any kind of generative AI. It was conducted over 20 years ago, as part of a body of literature that emerged at the turn of the century around the potential harm newly emerging internet search engines could do to student writing, homework and assessment.

We can look at past research to track the entry of new technologies into the classroom – and to infer the varying concerns about their use. In the 1990s, research explored the impact word processors might have on child literacy. It found that students writing on computers were more collaborative and focused on the task. In the 1970s, questions were raised about the effect electronic calculators might have on children’s maths abilities.

In 2023, it would seem ludicrous to state that a child could not use a calculator, word processor or search engine in a homework task or piece of coursework. But the suspicion of new technology remains. It clouds the reality that emerging digital tools can be effective in supporting learning and developing crucial critical thinking and life skills.

Get on board

Punitive approaches and threats of detection make the use of such tools covert. A far more progressive position would be for teachers to embrace these technologies, learn how they work, and make this part of teaching on digital literacy, misinformation and critical thinking. This, in my experience, is what young people want from education on digital technology.

Children should learn the difference between acknowledging the use of these tools and claiming the work as their own. They should also learn whether – or not – to trust the information provided to them on the internet.

The educational charity SWGfL, of which I am a trustee, has recently launched an AI hub which provides further guidance on how to use these new tools in school settings. The charity also runs Project Evolve, a toolkit containing a large number of teaching resources around managing online information, which will help in these classroom discussions.

I expect to see generative AI tools being merged, eventually, into mainstream learning. Saying “do not use search engines” for an assignment is now ridiculous. The same might be said in the future about prohibitions on using generative AI.

Perhaps the homework that teachers set will be different. But as with search engines, word processors and calculators, schools are not going to be able to ignore their rapid advance. It is far better to embrace and adapt to change, rather than resisting (and failing to stop) it.


A new AI chatbot might do your homework for you. But it's still not an A+ student

Emma Bowman

Enter a prompt into ChatGPT, and it becomes your very own virtual assistant. (OpenAI/Screenshot by NPR)

Why do your homework when a chatbot can do it for you? A new artificial intelligence tool called ChatGPT has thrilled the Internet with its superhuman abilities to solve math problems, churn out college essays and write research papers.

After the developer OpenAI released the text-based system to the public last month, some educators have been sounding the alarm about the potential that such AI systems have to transform academia, for better and worse.

"AI has basically ruined homework," said Ethan Mollick, a professor at the University of Pennsylvania's Wharton School of Business, on Twitter.

The tool has been an instant hit among many of his students, he told NPR in an interview on Morning Edition, and its most immediately obvious use is as a way to cheat by plagiarizing the AI-written work.

Academic fraud aside, Mollick also sees its benefits as a learning companion.


He's used it as his own teacher's assistant, for help with crafting a syllabus, a lecture, an assignment and a grading rubric for MBA students.

"You can paste in entire academic papers and ask it to summarize it. You can ask it to find an error in your code and correct it and tell you why you got it wrong," he said. "It's this multiplier of ability, that I think we are not quite getting our heads around, that is absolutely stunning."

A convincing — yet untrustworthy — bot

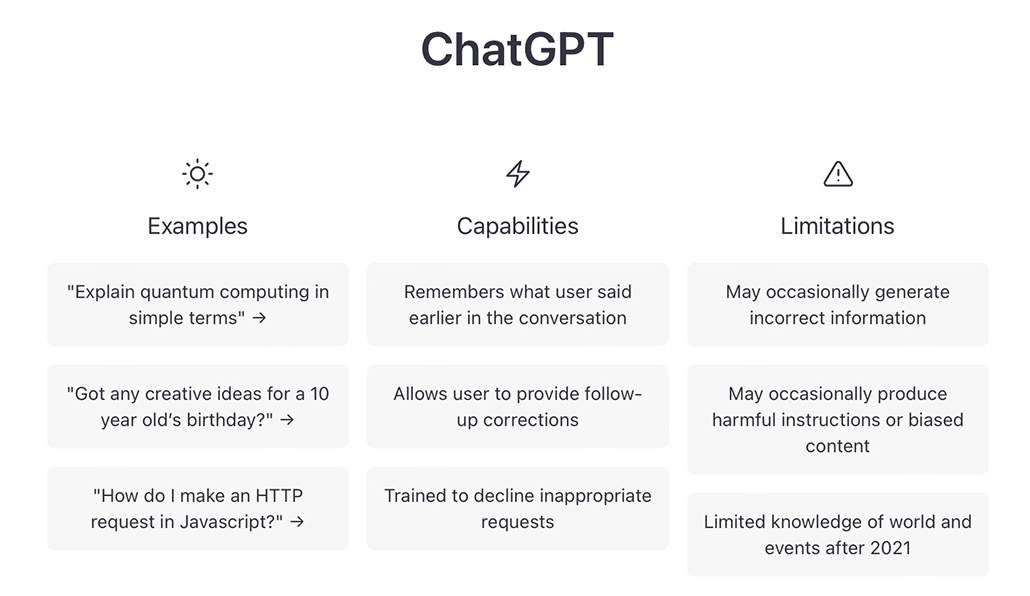

But the superhuman virtual assistant — like any emerging AI tech — has its limitations. ChatGPT was created by humans, after all. OpenAI has trained the tool using a large dataset of real human conversations.

"The best way to think about this is you are chatting with an omniscient, eager-to-please intern who sometimes lies to you," Mollick said.

It lies with confidence, too. Despite its authoritative tone, ChatGPT doesn't always tell you when it doesn't have the answer.

That's what Teresa Kubacka, a data scientist based in Zurich, Switzerland, found when she experimented with the language model. Kubacka, who studied physics for her Ph.D., tested the tool by asking it about a made-up physical phenomenon.

"I deliberately asked it about something that I thought that I know doesn't exist so that they can judge whether it actually also has the notion of what exists and what doesn't exist," she said.

ChatGPT produced an answer so specific and plausible sounding, backed with citations, she said, that she had to investigate whether the fake phenomenon, "a cycloidal inverted electromagnon," was actually real.

When she looked closer, the alleged source material was also bogus, she said. There were names of well-known physics experts listed – the titles of the publications they supposedly authored, however, were non-existent, she said.

"This is where it becomes kind of dangerous," Kubacka said. "The moment that you cannot trust the references, it also kind of erodes the trust in citing science whatsoever," she said.

Scientists call these fake generations "hallucinations."

"There are still many cases where you ask it a question and it'll give you a very impressive-sounding answer that's just dead wrong," said Oren Etzioni, the founding CEO of the Allen Institute for AI, who ran the research nonprofit until recently. "And, of course, that's a problem if you don't carefully verify or corroborate its facts."


An opportunity to scrutinize AI language tools

Users experimenting with the free preview of the chatbot are warned before testing the tool that ChatGPT "may occasionally generate incorrect or misleading information," harmful instructions or biased content.

Sam Altman, OpenAI's CEO, said earlier this month it would be a mistake to rely on the tool for anything "important" in its current iteration. "It's a preview of progress," he tweeted.

The failings of another AI language model unveiled by Meta last month led to its shutdown. The company withdrew its demo for Galactica, a tool designed to help scientists, just three days after it encouraged the public to test it out, following criticism that it spewed biased and nonsensical text.


Similarly, Etzioni says ChatGPT doesn't produce good science. For all its flaws, though, he sees ChatGPT's public debut as a positive. He sees this as a moment for peer review.

"ChatGPT is just a few days old, I like to say," said Etzioni, who remains at the AI institute as a board member and advisor. It's "giving us a chance to understand what it can and cannot do and to begin in earnest the conversation of 'What are we going to do about it?' "

The alternative, which he describes as "security by obscurity," won't help improve fallible AI, he said. "What if we hide the problems? Will that be a recipe for solving them? Typically — not in the world of software — that has not worked out."


Science News Explores

Think twice before using ChatGPT for help with homework.

This new AI tool talks a lot like a person — but still makes mistakes

ChatGPT is impressive and can be quite useful. It can help people write text, for instance, and code. However, “it’s not magic,” says Casey Fiesler. In fact, it often seems intelligent and confident while making mistakes — and sometimes parroting biases.

Glenn Harvey


By Kathryn Hulick

February 16, 2023 at 6:30 am

“We need to talk,” Brett Vogelsinger said. A student had just asked for feedback on an essay. One paragraph stood out. Vogelsinger, a 9th-grade English teacher in Doylestown, Pa., realized that the student hadn’t written the piece himself. He had used ChatGPT. It’s a new artificial intelligence (AI) tool. It answers questions. It writes code. And it can generate long essays and stories.

The company OpenAI made ChatGPT available for free at the end of November 2022. Within a week, it had more than a million users. Other tech companies are racing to put out similar tools. Google launched Bard in early February. The AI company Anthropic is testing a new chatbot named Claude. And another AI company, DeepMind, is working on a bot called Sparrow.

ChatGPT marks the beginning of a new wave of AI that will disrupt education. Whether that’s a good or bad thing remains to be seen.

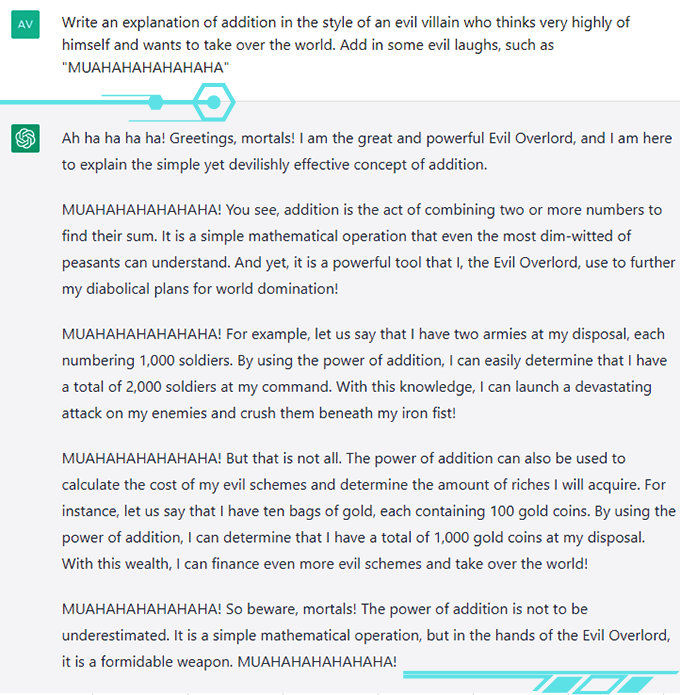

Some people have been using ChatGPT out of curiosity or for entertainment. I asked it to invent a silly excuse for not doing homework in the style of a medieval proclamation. In less than a second, it offered me: “Hark! Thy servant was beset by a horde of mischievous leprechauns, who didst steal mine quill and parchment, rendering me unable to complete mine homework.”

But students can also use it to cheat. When Stanford University’s student-run newspaper polled students at the university, 17 percent said they had used ChatGPT on assignments or exams during the end of 2022. Some admitted to submitting the chatbot’s writing as their own. For now, these students and others are probably getting away with cheating.

And that’s because ChatGPT does an excellent job. “It can outperform a lot of middle-school kids,” Vogelsinger says. He probably wouldn’t have known his student used it — except for one thing. “He copied and pasted the prompt,” says Vogelsinger.

This essay was still a work in progress. So Vogelsinger didn’t see this as cheating. Instead, he saw an opportunity. Now, the student is working with the AI to write that essay. It’s helping the student develop his writing and research skills.

“We’re color-coding,” says Vogelsinger. The parts the student writes are in green. Those parts that ChatGPT writes are in blue. Vogelsinger is helping the student pick and choose only a few sentences from the AI to keep. He’s allowing other students to collaborate with the tool as well. Most aren’t using it regularly, but a few kids really like it. Vogelsinger thinks it has helped them get started and to focus their ideas.

This story had a happy ending.

But at many schools and universities, educators are struggling with how to handle ChatGPT and other tools like it. In early January, New York City public schools banned ChatGPT on their devices and networks. They were worried about cheating. They also were concerned that the tool’s answers might not be accurate or safe. Many other school systems in the United States and elsewhere have followed suit.


But some experts suspect that bots like ChatGPT could also be a great help to learners and workers everywhere. Like calculators for math or Google for facts, an AI chatbot makes something that once took time and effort much simpler and faster. With this tool, anyone can generate well-formed sentences and paragraphs — even entire pieces of writing.

How could a tool like this change the way we teach and learn?

The good, the bad and the weird

ChatGPT has wowed its users. “It’s so much more realistic than I thought a robot could be,” says Avani Rao. This high school sophomore lives in California. She hasn’t used the bot to do homework. But for fun, she’s prompted it to say creative or silly things. She asked it to explain addition, for instance, in the voice of an evil villain. Its answer is highly entertaining.

Tools like ChatGPT could help create a more equitable world for people who are trying to work in a second language or who struggle with composing sentences. Students could use ChatGPT like a coach to help improve their writing and grammar. Or it could explain difficult subjects. “It really will tutor you,” says Vogelsinger, who had one student come to him excited that ChatGPT had clearly outlined a concept from science class.

Teachers could use ChatGPT to help create lesson plans or activities — ones personalized to the needs or goals of specific students.

Several podcasts have had ChatGPT as a “guest” on their shows. In 2023, two people are going to use an AI-powered chatbot as a lawyer. It will tell them what to say during their appearances in traffic court. The company that developed the bot is paying them to test the new tech. Their vision is a world in which legal help might be free.


Xiaoming Zhai tested ChatGPT to see if it could write an academic paper. Zhai is an expert in science education at the University of Georgia in Athens. He was impressed with how easy it was to summarize knowledge and generate good writing using the tool. “It’s really amazing,” he says.

All of this sounds great. Still, some really big problems exist.

Most worryingly, ChatGPT and tools like it sometimes get things very wrong. In an ad for Bard, the chatbot claimed that the James Webb Space Telescope took the very first picture of an exoplanet. That’s false. In a conversation posted on Twitter, ChatGPT said the fastest marine mammal was the peregrine falcon. A falcon, of course, is a bird and doesn’t live in the ocean.

ChatGPT can be “confidently wrong,” says Casey Fiesler. Its text, she notes, can contain “mistakes and bad information.” She is an expert in the ethics of technology at the University of Colorado Boulder. She has made multiple TikTok videos about the pitfalls of ChatGPT.

Also, for now, all of the bot’s training data came from before a cutoff date in 2021. So its knowledge is out of date.

Finally, ChatGPT does not provide sources for its information. If asked for sources, it will make them up. It’s something Fiesler revealed in another video. Zhai discovered the exact same thing. When he asked ChatGPT for citations, it gave him sources that looked correct. In fact, they were bogus.

Zhai sees the tool as an assistant. He double-checked its information and decided how to structure the paper himself. If you use ChatGPT, be honest about it and verify its information, the experts all say.

Under the hood

ChatGPT’s mistakes make more sense if you know how it works. “It doesn’t reason. It doesn’t have ideas. It doesn’t have thoughts,” explains Emily M. Bender. She is a computational linguist who works at the University of Washington in Seattle. ChatGPT may sound a lot like a person, but it’s not one. It is an AI model developed using several types of machine learning.

The primary type is a large language model. This type of model learns to predict what words will come next in a sentence or phrase. It does this by churning through vast amounts of text. It places words and phrases into a kind of map that represents their relationships to each other (in real models, this map has hundreds or thousands of dimensions rather than three). Words that tend to appear together, like peanut butter and jelly, end up closer together in this map.
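To make the idea of next-word prediction concrete, here is a toy sketch in Python. It is a deliberate simplification built on assumptions: ChatGPT uses a large neural network, not the simple word-pair ("bigram") counts below, and the tiny corpus and the function names `train_bigrams` and `predict_next` are invented for illustration. It shows only the core intuition that a model can guess the next word from which words tended to follow it in its training text.

```python
from collections import Counter, defaultdict


def train_bigrams(text):
    """Count how often each word is followed by each other word."""
    words = text.lower().split()
    follows = defaultdict(Counter)
    for current, nxt in zip(words, words[1:]):
        follows[current][nxt] += 1
    return follows


def predict_next(follows, word):
    """Return the word seen most often after `word`, or None if `word` never appeared."""
    counts = follows.get(word.lower())
    if not counts:
        return None
    return counts.most_common(1)[0][0]


# A made-up training text, far too small to be realistic.
corpus = (
    "i like peanut butter and jelly . "
    "she made peanut butter and jelly sandwiches . "
    "peanut butter and banana is also good ."
)
model = train_bigrams(corpus)
print(predict_next(model, "butter"))  # and
print(predict_next(model, "and"))     # jelly
```

Here "jelly" wins after "and" simply because it followed "and" twice, while "banana" did so only once. A real language model learns far richer patterns, but it is still ranking possible next words by how likely they are.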

Before ChatGPT, OpenAI had made GPT3. This very large language model came out in 2020. It had trained on text containing an estimated 300 billion words. That text came from the internet and encyclopedias. It also included dialogue transcripts, essays, exams and much more, says Sasha Luccioni. She is a researcher at the company Hugging Face in Montreal, Canada. This company builds AI tools.

OpenAI improved upon GPT3 to create GPT3.5. This time, OpenAI added a new type of machine learning. It’s known as “reinforcement learning from human feedback.” That means people ranked the AI’s responses, and GPT3.5 learned to give more of the kinds of responses people preferred. It also learned not to generate hurtful, biased or inappropriate responses. GPT3.5 essentially became a people-pleaser.

During ChatGPT’s development, OpenAI added even more safety rules to the model. As a result, the chatbot will refuse to talk about certain sensitive issues or information. But this also raises another issue: Whose values are being programmed into the bot, including what it is — or is not — allowed to talk about?

OpenAI is not offering exact details about how it developed and trained ChatGPT. The company has not released its code or training data. This disappoints Luccioni. “I want to know how it works in order to help make it better,” she says.

When asked to comment on this story, OpenAI provided a statement from an unnamed spokesperson. “We made ChatGPT available as a research preview to learn from real-world use, which we believe is a critical part of developing and deploying capable, safe AI systems,” the statement said. “We are constantly incorporating feedback and lessons learned.” Indeed, some early experimenters got the bot to say biased things about race and gender. OpenAI quickly patched the tool. It no longer responds the same way.

ChatGPT is not a finished product. It’s available for free right now because OpenAI needs data from the real world. The people who are using it right now are its guinea pigs. If you use it, notes Bender, “You are working for OpenAI for free.”

Humans vs robots

How good is ChatGPT at what it does? Catherine Gao is part of one team of researchers that is putting the tool to the test.

At the top of a research article published in a journal is an abstract. It summarizes the author’s findings. Gao’s group gathered 50 real abstracts from research papers in medical journals. Then they asked ChatGPT to generate fake abstracts based on the paper titles. The team asked people who review abstracts as part of their job to identify which were which.

The reviewers mistook roughly one in every three (32 percent) of the AI-generated abstracts as human-generated. “I was surprised by how realistic and convincing the generated abstracts were,” says Gao. She is a doctor and medical researcher at Northwestern University’s Feinberg School of Medicine in Chicago, Ill.

In another study, Will Yeadon and his colleagues tested whether AI tools could pass a college exam. Yeadon is a physics teacher at Durham University in England. He picked an exam from a course that he teaches. The test asks students to write five short essays about physics and its history. Students who take the test have an average score of 71 percent, which he says is equivalent to an A in the United States.

Yeadon used a close cousin of ChatGPT, called davinci-003. It generated 10 sets of exam answers. Afterward, he and four other teachers graded them using their typical grading standards for students. The AI also scored an average of 71 percent. Unlike the human students, however, it had no very low or very high marks. It consistently wrote well, but not excellently. For students who regularly get bad grades in writing, Yeadon says, this AI “will write a better essay than you.”

These graders knew they were looking at AI work. In a follow-up study, Yeadon plans to use work from the AI and students and not tell the graders whose work they are looking at.


Cheat-checking with AI

People may not always be able to tell if ChatGPT wrote something or not. Thankfully, other AI tools can help. These tools use machine learning to scan many examples of AI-generated text. After training this way, they can look at new text and tell you whether it was most likely composed by AI or a human.
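The article does not say which machine-learning method these detectors use, so the sketch below swaps in one classic, simple technique, a naive Bayes word-count classifier, purely to illustrate the "train on labeled examples, then score new text" idea. The labels, the function names `train` and `classify`, and the four training sentences are all invented placeholders; they are not real AI or student writing, and a real detector would be trained on many thousands of genuine samples.

```python
import math
from collections import Counter


def train(examples):
    """Count words per label; `examples` is a list of (text, label) pairs."""
    word_counts = {"ai": Counter(), "human": Counter()}
    doc_counts = Counter()
    for text, label in examples:
        word_counts[label].update(text.lower().split())
        doc_counts[label] += 1
    vocab = set(word_counts["ai"]) | set(word_counts["human"])
    return word_counts, doc_counts, vocab


def classify(model, text):
    """Return the label ('ai' or 'human') with the higher naive Bayes score."""
    word_counts, doc_counts, vocab = model
    total_docs = sum(doc_counts.values())
    scores = {}
    for label in ("ai", "human"):
        total_words = sum(word_counts[label].values())
        # Log prior: how common this label was among the training texts.
        score = math.log(doc_counts[label] / total_docs)
        for word in text.lower().split():
            # Add-one (Laplace) smoothing keeps unseen words from zeroing the score.
            score += math.log((word_counts[label][word] + 1) / (total_words + len(vocab)))
        scores[label] = score
    return max(scores, key=scores.get)


# Invented placeholder training texts, for illustration only.
examples = [
    ("in conclusion it is important to note", "ai"),
    ("it is important to consider both sides", "ai"),
    ("lol my dog ate my homework again", "human"),
    ("my sister said the test was so hard", "human"),
]
model = train(examples)
print(classify(model, "it is important to note"))  # ai
print(classify(model, "my dog ate my homework"))   # human
```

The classifier only learns whatever word patterns happen to differ between its two training piles, which is one reason detectors trained on older language models can struggle with newer ones.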

Most free AI-detection tools were trained on older language models, so they don’t work as well for ChatGPT. Soon after ChatGPT came out, though, one college student spent his holiday break building a free tool to detect its work. It’s called GPTZero.

The company Originality.ai sells access to another up-to-date tool. Founder Jon Gillham says that in a test of 10,000 samples of text composed by GPT3, the tool tagged 94 percent of them correctly. When ChatGPT came out, his team tested a much smaller set of 20 samples that had been created by GPT3, GPT3.5 and ChatGPT. Here, Gillham says, “it tagged all of them as AI-generated. And it was 99 percent confident, on average.”

In addition, OpenAI says it is working on adding “digital watermarks” to AI-generated text. It hasn’t said exactly what it means by this. But Gillham explains one possibility. The AI ranks many different possible words when it is generating text. Say its developers told it to always choose the word ranked in third place rather than first place at specific places in its output. These words would act “like a fingerprint,” says Gillham.
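Gillham's hypothetical scheme can be sketched in a few lines of Python. This is not OpenAI's method (which the company has not described); it is a toy version under stated assumptions: the ranked word lists are hand-written rather than produced by a real model, the watermark positions are chosen arbitrarily, and the checker is assumed to be able to see the same rankings the generator used (for example, by rerunning the model). The names `generate_with_watermark` and `looks_watermarked` are hypothetical.

```python
def generate_with_watermark(ranked_candidates, watermark_positions):
    """Pick the top-ranked word everywhere except at watermark positions,
    where the 3rd-ranked word is chosen instead (the 'fingerprint')."""
    output = []
    for i, candidates in enumerate(ranked_candidates):
        if i in watermark_positions and len(candidates) >= 3:
            output.append(candidates[2])
        else:
            output.append(candidates[0])
    return output


def looks_watermarked(words, ranked_candidates, watermark_positions):
    """Return True if every checkable watermark position holds its 3rd-ranked word."""
    checked = [i for i in watermark_positions if len(ranked_candidates[i]) >= 3]
    return bool(checked) and all(words[i] == ranked_candidates[i][2] for i in checked)


# Hand-written rankings (best first) standing in for a real model's choices.
ranked = [
    ["the", "a", "this"],
    ["dog", "cat", "puppy"],
    ["ate", "chewed", "devoured"],
    ["my", "the", "our"],
    ["homework", "essay", "worksheet"],
]
positions = {1, 4}

marked = generate_with_watermark(ranked, positions)
print(" ".join(marked))                                   # the puppy ate my worksheet
print(looks_watermarked(marked, ranked, positions))       # True
print(looks_watermarked(["the", "dog", "ate", "my", "homework"], ranked, positions))  # False
```

A practical scheme would need to survive light editing and would spread its signal across many more words, but the principle is the same: a pattern of unusual word choices that a checker who knows the rule can recognize.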

The future of writing

Tools like ChatGPT are only going to improve with time. As they get better, people will have to adjust to a world in which computers can write for us. We’ve made these sorts of adjustments before. As high-school student Rao points out, Google was once seen as a threat to education because it made it possible to instantly look up any fact. We adapted by coming up with teaching and testing materials that don’t require students to memorize things.

Now that AI can generate essays, stories and code, teachers may once again have to rethink how they teach and test. That might mean preventing students from using AI. They could do this by making students work without access to technology. Or they might invite AI into the writing process, as Vogelsinger is doing. Concludes Rao, “We might have to shift our point of view about what’s cheating and what isn’t.”

Students will still have to learn to write without AI’s help. Kids still learn to do basic math even though they have calculators. Learning how math works helps us learn to think about math problems. In the same way, learning to write helps us learn to think about and express ideas.

Rao thinks that AI will not replace human-generated stories, articles and other texts. Why? She says: “The reason those things exist is not only because we want to read it but because we want to write it.” People will always want to make their voices heard. ChatGPT is a tool that could enhance and support our voices — as long as we use it with care.

Correction: Gillham’s comment on the 20 samples that his team tested has been corrected to show how confident his team’s AI-detection tool was in identifying text that had been AI-generated (not in how accurately it detected AI-generated text).



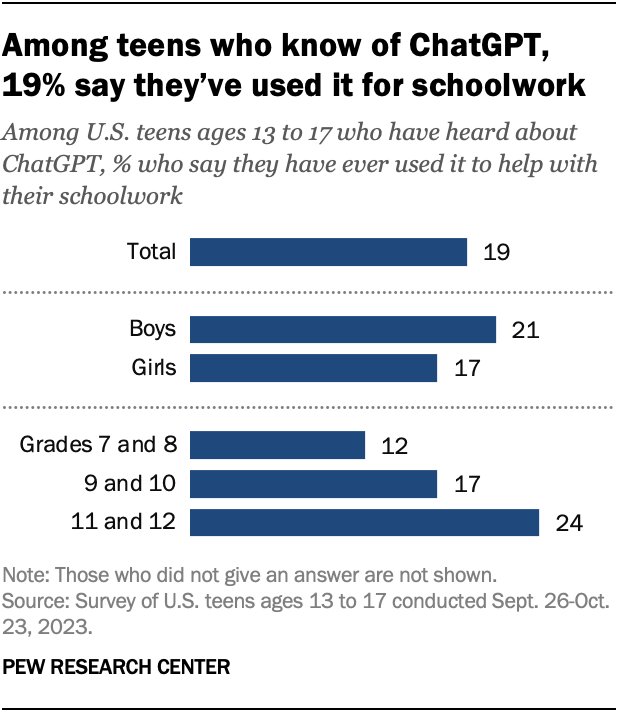

About 1 in 5 U.S. teens who’ve heard of ChatGPT have used it for schoolwork

Roughly one-in-five teenagers who have heard of ChatGPT say they have used it to help them do their schoolwork, according to a new Pew Research Center survey of U.S. teens ages 13 to 17. With a majority of teens having heard of ChatGPT, that amounts to 13% of all U.S. teens who have used the generative artificial intelligence (AI) chatbot in their schoolwork.
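The 13% figure comes from multiplying two shares: the share of all teens who have heard of ChatGPT (about two-thirds, as reported in the awareness section of this analysis) by the share of those teens who have used it for schoolwork (roughly one-in-five). A quick check with those rounded figures is below; because the inputs are rounded, it only approximates Pew's number, which is calculated from the unrounded survey data.

```python
# Rounded figures quoted in this Pew analysis.
share_heard_of_chatgpt = 2 / 3   # "two-thirds of U.S. teens" have heard of ChatGPT
share_used_if_heard = 1 / 5      # "roughly one-in-five" of those used it for schoolwork

# Share of *all* teens = P(heard) * P(used | heard)
share_all_teens = share_heard_of_chatgpt * share_used_if_heard
print(f"{share_all_teens:.0%}")  # 13%
```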

Teens in higher grade levels are particularly likely to have used the chatbot to help them with schoolwork. About one-quarter of 11th and 12th graders who have heard of ChatGPT say they have done this. This share drops to 17% among 9th and 10th graders and 12% among 7th and 8th graders.

There is no significant difference between teen boys and girls who have used ChatGPT in this way.

The introduction of ChatGPT last year has led to much discussion about its role in schools, especially whether schools should integrate the new technology into the classroom or ban it.

Pew Research Center conducted this analysis to understand American teens’ use and understanding of ChatGPT in the school setting.

The Center conducted an online survey of 1,453 U.S. teens from Sept. 26 to Oct. 23, 2023, via Ipsos. Ipsos recruited the teens via their parents, who were part of its KnowledgePanel. The KnowledgePanel is a probability-based web panel recruited primarily through national, random sampling of residential addresses. The survey was weighted to be representative of U.S. teens ages 13 to 17 who live with their parents by age, gender, race and ethnicity, household income, and other categories.

This research was reviewed and approved by an external institutional review board (IRB), Advarra, an independent committee of experts specializing in helping to protect the rights of research participants.

Here are the questions used for this analysis, along with responses, and its methodology.

Teens’ awareness of ChatGPT

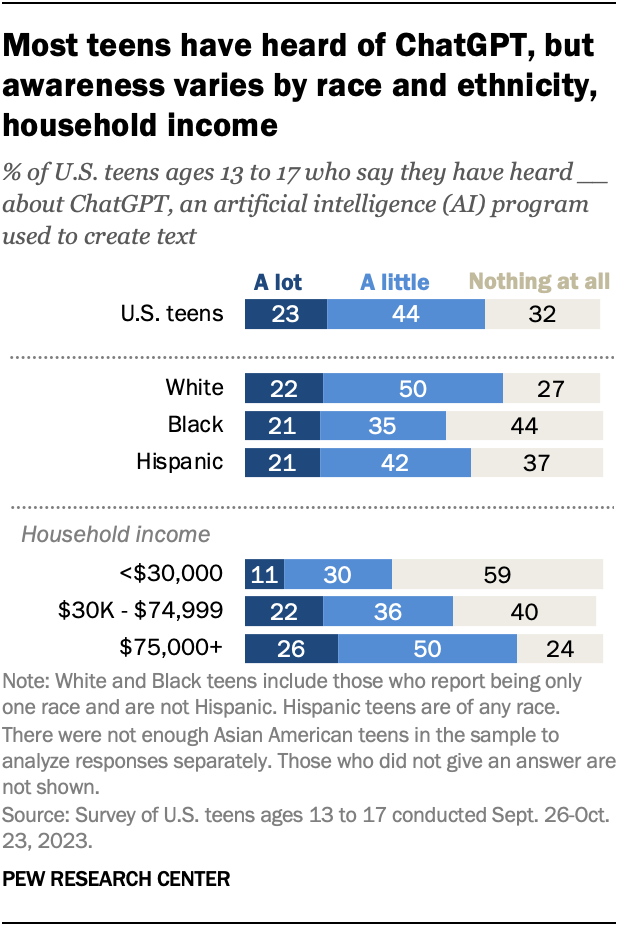

Overall, two-thirds of U.S. teens say they have heard of ChatGPT, including 23% who have heard a lot about it. But awareness varies by race and ethnicity, as well as by household income:

- 72% of White teens say they’ve heard at least a little about ChatGPT, compared with 63% of Hispanic teens and 56% of Black teens.

- 75% of teens living in households that make $75,000 or more annually have heard of ChatGPT. Much smaller shares in households with incomes between $30,000 and $74,999 (58%) and less than $30,000 (41%) say the same.

Teens who are more aware of ChatGPT are more likely to use it for schoolwork. Roughly a third of teens who have heard a lot about ChatGPT (36%) have used it for schoolwork, far higher than the 10% among those who have heard a little about it.

When do teens think it’s OK for students to use ChatGPT?

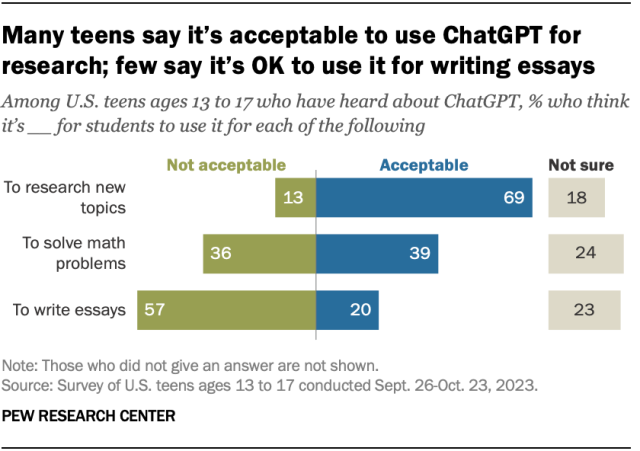

For teens, whether it is – or is not – acceptable for students to use ChatGPT depends on what it is being used for.

There is a fair amount of support for using the chatbot to explore a topic. Roughly seven-in-ten teens who have heard of ChatGPT say it’s acceptable to use when they are researching something new, while 13% say it is not acceptable.

However, there is much less support for using ChatGPT to do the work itself. Just one-in-five teens who have heard of ChatGPT say it’s acceptable to use it to write essays, while 57% say it is not acceptable. And 39% say it’s acceptable to use ChatGPT to solve math problems, while a similar share of teens (36%) say it’s not acceptable.

Some teens are uncertain about whether it’s acceptable to use ChatGPT for these tasks. Between 18% and 24% say they aren’t sure whether these are acceptable use cases for ChatGPT.

Those who have heard a lot about ChatGPT are more likely than those who have only heard a little about it to say it’s acceptable to use the chatbot to research topics, solve math problems and write essays. For instance, 54% of teens who have heard a lot about ChatGPT say it’s acceptable to use it to solve math problems, compared with 32% among those who have heard a little about it.



Olivia Sidoti is a research assistant focusing on internet and technology research at Pew Research Center.

Jeffrey Gottfried is an associate director focusing on internet and technology research at Pew Research Center.


ABOUT PEW RESEARCH CENTER Pew Research Center is a nonpartisan, nonadvocacy fact tank that informs the public about the issues, attitudes and trends shaping the world. It does not take policy positions. The Center conducts public opinion polling, demographic research, computational social science research and other data-driven research. Pew Research Center is a subsidiary of The Pew Charitable Trusts, its primary funder.

© 2024 Pew Research Center


Don’t Ban ChatGPT in Schools. Teach With It.

OpenAI’s new chatbot is raising fears of cheating on homework, but its potential as an educational tool outweighs its risks.


By Kevin Roose

Recently, I gave a talk to a group of K-12 teachers and public school administrators in New York. The topic was artificial intelligence, and how schools would need to adapt to prepare students for a future filled with all kinds of capable A.I. tools.

But it turned out that my audience cared about only one A.I. tool: ChatGPT, the buzzy chatbot developed by OpenAI that is capable of writing cogent essays, solving science and math problems and producing working computer code.

ChatGPT is new — it was released in late November — but it has already sent many educators into a panic. Students are using it to write their assignments, passing off A.I.-generated essays and problem sets as their own. Teachers and school administrators have been scrambling to catch students using the tool to cheat, and they are fretting about the havoc ChatGPT could wreak on their lesson plans. (Some publications have declared, perhaps a bit prematurely, that ChatGPT has killed homework altogether.)

Cheating is the immediate, practical fear, along with the bot’s propensity to spit out wrong or misleading answers. But there are existential worries, too. One high school teacher told me that he used ChatGPT to evaluate a few of his students’ papers, and that the app had provided more detailed and useful feedback on them than he would have, in a tiny fraction of the time.

“Am I even necessary now?” he asked me, only half joking.

Some schools have responded to ChatGPT by cracking down. New York City public schools, for example, recently blocked ChatGPT access on school computers and networks, citing “concerns about negative impacts on student learning, and concerns regarding the safety and accuracy of content.” Schools in other cities, including Seattle, have also restricted access. (Tim Robinson, a spokesman for Seattle Public Schools, told me that ChatGPT was blocked on school devices in December, “along with five other cheating tools.”)

It’s easy to understand why educators feel threatened. ChatGPT is a freakishly capable tool that landed in their midst with no warning, and it performs reasonably well across a wide variety of tasks and academic subjects. There are legitimate questions about the ethics of A.I.-generated writing, and concerns about whether the answers ChatGPT gives are accurate. (Often, they’re not.) And I’m sympathetic to teachers who feel that they have enough to worry about, without adding A.I.-generated homework to the mix.

But after talking with dozens of educators over the past few weeks, I’ve come around to the view that banning ChatGPT from the classroom is the wrong move.

Instead, I believe schools should thoughtfully embrace ChatGPT as a teaching aid — one that could unlock student creativity, offer personalized tutoring, and better prepare students to work alongside A.I. systems as adults. Here’s why.

It won’t work

The first reason not to ban ChatGPT in schools is that, to be blunt, it’s not going to work.

Sure, a school can block the ChatGPT website on school networks and school-owned devices. But students have phones, laptops and any number of other ways of accessing it outside of class. (Just for kicks, I asked ChatGPT how a student who was intent on using the app might evade a schoolwide ban. It came up with five answers, all totally plausible, including using a VPN to disguise the student’s web traffic.)

Some teachers have high hopes for tools such as GPTZero, a program built by a Princeton student that claims to be able to detect A.I.-generated writing. But these tools aren’t reliably accurate, and it’s relatively easy to fool them by changing a few words, or using a different A.I. program to paraphrase certain passages.

A.I. chatbots could be programmed to watermark their outputs in some way, so teachers would have an easier time spotting A.I.-generated text. But this, too, is a flimsy defense. Right now, ChatGPT is the only free, easy-to-use chatbot of its caliber. But there will be others, and students will soon be able to take their pick, probably including apps with no A.I. fingerprints.

Even if it were technically possible to block ChatGPT, do teachers want to spend their nights and weekends keeping up with the latest A.I. detection software? Several educators I spoke with said that while they found the idea of ChatGPT-assisted cheating annoying, policing it sounded even worse.

“I don’t want to be in an adversarial relationship with my students,” said Gina Parnaby, the chair of the English department at the Marist School, an independent school for grades seven through 12 outside Atlanta. “If our mind-set approaching this is that we have to build a better mousetrap to catch kids cheating, I just think that’s the wrong approach, because the kids are going to figure something out.”

Instead of starting an endless game of whack-a-mole against an ever-expanding army of A.I. chatbots, here’s a suggestion: For the rest of the academic year, schools should treat ChatGPT the way they treat calculators — allowing it for some assignments, but not others, and assuming that unless students are being supervised in person with their devices stashed away, they’re probably using one.

Then, over the summer, teachers can modify their lesson plans — replacing take-home exams with in-class tests or group discussions, for example — to try to keep cheaters at bay.

ChatGPT can be a teacher’s best friend

The second reason not to ban ChatGPT from the classroom is that, with the right approach, it can be an effective teaching tool.

Cherie Shields, a high school English teacher in Oregon, told me that she had recently assigned students in one of her classes to use ChatGPT to create outlines for their essays comparing and contrasting two 19th-century short stories that touch on themes of gender and mental health: “The Story of an Hour,” by Kate Chopin, and “The Yellow Wallpaper,” by Charlotte Perkins Gilman. Once the outlines were generated, her students put their laptops away and wrote their essays longhand.

The process, she said, had not only deepened students’ understanding of the stories. It had also taught them about interacting with A.I. models, and how to coax a helpful response out of one.

“They have to understand, ‘I need this to produce an outline about X, Y and Z,’ and they have to think very carefully about it,” Ms. Shields said. “And if they don’t get the result that they want, they can always revise it.”

Creating outlines is just one of the many ways that ChatGPT could be used in class. It could write personalized lesson plans for each student (“explain Newton’s laws of motion to a visual-spatial learner”) and generate ideas for classroom activities (“write a script for a ‘Friends’ episode that takes place at the Constitutional Convention”). It could serve as an after-hours tutor (“explain the Doppler effect, using language an eighth grader could understand”) or a debate sparring partner (“convince me that animal testing should be banned”). It could be used as a starting point for in-class exercises, or a tool for English language learners to improve their basic writing skills. (The teaching blog Ditch That Textbook has a long list of possible classroom uses for ChatGPT.)

Even ChatGPT’s flaws — such as the fact that its answers to factual questions are often wrong — can become fodder for a critical thinking exercise. Several teachers told me that they had instructed students to try to trip up ChatGPT, or evaluate its responses the way a teacher would evaluate a student’s.

ChatGPT can also help teachers save time preparing for class. Jon Gold, an eighth grade history teacher at Moses Brown School, a pre-K through 12th grade Quaker school in Providence, R.I., said that he had experimented with using ChatGPT to generate quizzes. He fed the bot an article about Ukraine, for example, and asked it to generate 10 multiple-choice questions that could be used to test students’ understanding of the article. (Of those 10 questions, he said, six were usable.)

Ultimately, Mr. Gold said, ChatGPT wasn’t a threat to student learning as long as teachers paired it with substantive, in-class discussions.

“Any tool that lets students refine their thinking before they come to class, and practice their ideas, is only going to make our discussions richer,” he said.

ChatGPT teaches students about the world they’ll inhabit

Now, I’ll take off my tech columnist hat for a second, and confess that writing this piece has made me a little sad. I loved school, and it pains me, on some level, to think that instead of sharpening their skills by writing essays about “The Sun Also Rises” or straining to factor a trigonometric expression, today’s students might simply ask an A.I. chatbot to do it for them.

I also don’t believe that educators who are reflexively opposed to ChatGPT are being irrational. This type of A.I. really is (if you’ll excuse the buzzword) disruptive — to classroom routines, to longstanding pedagogical practices, and to the basic principle that the work students turn in should reflect cogitation happening inside their brains, rather than in the latent space of a machine learning model hosted on a distant supercomputer.

But the barricade has fallen. Tools like ChatGPT aren’t going anywhere; they’re only going to improve, and barring some major regulatory intervention, this particular form of machine intelligence is now a fixture of our society.

“Large language models aren’t going to get less capable in the next few years,” said Ethan Mollick, a professor at the Wharton School of the University of Pennsylvania. “We need to figure out a way to adjust to these tools, and not just ban them.”

That’s the biggest reason not to ban it from the classroom, in fact — because today’s students will graduate into a world full of generative A.I. programs. They’ll need to know their way around these tools — their strengths and weaknesses, their hallmarks and blind spots — in order to work alongside them. To be good citizens, they’ll need hands-on experience to understand how this type of A.I. works, what types of bias it contains, and how it can be misused and weaponized.

This adjustment won’t be easy. Sudden technological shifts rarely are. But who better to guide students into this strange new world than their teachers?

Kevin Roose is a technology columnist and the author of “Futureproof: 9 Rules for Humans in the Age of Automation.”



15 November 2023

Why teachers should explore ChatGPT’s potential — despite the risks


Experiments to harness ChatGPT in education are under way in many universities. Credit: Riccardo Venturi/Contrasto/eyevine

Teachers were spooked when ChatGPT was launched a year ago. The artificial-intelligence (AI) chatbot can write lucid, apparently well-researched essays in response to assignment questions, forcing educators around the world to rethink their evaluation methods. A few countries brought back pen-and-paper exams. And some schools are ‘flipping’ the classroom model: students do their assignments at school, after learning about a subject at home.

But after that initial shock, educators have started studying the chatbots’ potential benefits. As we report in a News feature, experiments to harness the use of ChatGPT in education are under way in many schools and universities. There are risks, but some educators think that ChatGPT and other large language models (LLMs) can be powerful learning tools. They could help students by providing a personalized tutoring experience that is available at any time and might be accessible to more students than human tutors would be. Or they could help teachers and students by making information and concepts normally restricted to textbooks much easier to find and digest.


There are still problems to be ironed out. Questions remain about whether LLMs can be made accurate and reliable enough to be trusted as learning assistants. It’s too soon to know what their ultimate effect on education will be, but more institutions need to explore ChatGPT’s advantages and pitfalls, and share what they are learning, or their students might miss out on a valuable tool.

Many students are already using ChatGPT. Within months of its launch, reports surfaced of students using the chatbot to do their homework and essays for them. Teachers were often unimpressed by the quality of the output. Crucially, the chatbot was inventing fictitious references or citations. And although it excelled in some mathematical tests¹, it didn’t do as well in others. That’s because ChatGPT has not been trained specifically to solve mathematical problems — rather, it finds plausible words to finish a sentence or respond to a query on the basis of billions of pieces of text it has seen.
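The point that ChatGPT picks plausible words rather than calculating can be illustrated with a deliberately tiny model. The Python sketch below is a toy bigram counter, not how GPT-4 works (real LLMs are neural networks conditioned on long contexts), and its corpus and function names are invented for illustration. It completes an arithmetic prompt with whichever token it has most often seen after "=", whether or not that token is the correct answer.

```python
from collections import Counter, defaultdict

# Hypothetical training text: the toy model only ever sees these sentences.
CORPUS = [
    "2 + 2 = 4",
    "2 + 2 = 4",
    "2 + 3 = 5",
]


def train_bigrams(sentences):
    """Count, for every token, which tokens have followed it."""
    follows = defaultdict(Counter)
    for sentence in sentences:
        tokens = sentence.split()
        for prev, nxt in zip(tokens, tokens[1:]):
            follows[prev][nxt] += 1
    return follows


def complete(follows, prompt):
    """Return the continuation most often seen after the prompt's last token.

    No arithmetic happens here: the answer is whatever was most frequent
    in the training text, or None if the last token was never seen.
    """
    last = prompt.split()[-1]
    if last not in follows:
        return None
    return follows[last].most_common(1)[0][0]


model = train_bigrams(CORPUS)
print(complete(model, "17 + 25 ="))  # the toy model answers "4"
print(complete(model, "2 + 3 ="))    # still "4", although it saw "2 + 3 = 5"
```

The toy model answers "4" to both prompts because "4" is the most frequent token after "=" in its training text. Real LLMs are vastly more capable, but the same basic objective of predicting likely text, rather than executing a calculation, helps explain why their arithmetic can be unreliable.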

In a February preprint, researchers described how, in a benchmark set of relatively simple mathematical problems usually answered by students aged 12–17, ChatGPT answered about half of the questions correctly². If the problems were more complex — requiring ChatGPT to do four or more additions or subtractions in the same calculation — it was particularly likely to fail.


And the authors of a preprint study published in July found that the mathematical skills of the LLM that underlies ChatGPT might have worsened³. In March 2023, the GPT-4 version of the chatbot correctly differentiated between prime and composite numbers 84% of the time. By June, it did so in only 51% of cases. The study’s authors note that “improving the model’s performance on some tasks, for example with fine-tuning on additional data, can have unexpected side effects on its behavior in other tasks”.

Despite these risks, educators should not avoid using LLMs. Rather, they need to teach students the chatbots’ strengths and weaknesses and support institutions’ efforts to improve the models for education-specific purposes. This could mean building task-specific versions of LLMs that harness their strengths in dialogue and summarization and minimize the risks of a chatbot providing students with inaccurate information or enabling them to cheat.

Arizona State University (ASU), for example, is rolling out a platform that enables faculty members to use generative AI models, including GPT-4 and Google’s Bard — another LLM-powered chatbot. In ASU courses, the platform uses a technique called retrieval-augmented generation: ChatGPT or Bard is instructed to seek answers to users’ questions in specific data sets, such as scientific papers or lecture notes. This approach not only harnesses the chatbots’ conversational power, but also reduces the chance of errors.
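Retrieval-augmented generation can be sketched in a few lines. The Python below is a minimal illustration under stated assumptions, not ASU’s platform: the document collection and the helpers `retrieve` and `build_prompt` are hypothetical, ranking uses simple word overlap where production systems typically use vector embeddings, and the final call to ChatGPT or Bard is left out. It shows the two steps described above: find the relevant passages in a specific data set, then instruct the model to answer only from them and to cite their sources.

```python
import re
from collections import Counter

# Hypothetical course materials standing in for lecture notes or papers.
DOCUMENTS = {
    "lecture-03": "The French Revolution began in 1789 with the storming of the Bastille.",
    "lecture-07": "Photosynthesis converts light energy into chemical energy stored in glucose.",
    "paper-12": "Mitochondria release energy from glucose through cellular respiration.",
}


def tokenize(text):
    """Lowercase the text and keep only alphabetic words."""
    return re.findall(r"[a-z]+", text.lower())


def retrieve(question, documents, k=2):
    """Rank documents by shared words with the question (a crude stand-in for embedding search)."""
    q = Counter(tokenize(question))
    scores = []
    for doc_id, text in documents.items():
        overlap = sum((q & Counter(tokenize(text))).values())
        scores.append((overlap, doc_id))
    # Highest overlap first; ties broken alphabetically by document ID.
    scores.sort(key=lambda pair: (-pair[0], pair[1]))
    return [doc_id for score, doc_id in scores[:k] if score > 0]


def build_prompt(question, documents, k=2):
    """Assemble a prompt telling the model to answer only from the retrieved, labeled sources."""
    ids = retrieve(question, documents, k)
    context = "\n".join(f"[{doc_id}] {documents[doc_id]}" for doc_id in ids)
    return (
        "Answer using only the sources below and cite their IDs. "
        "If the sources do not contain the answer, say so.\n"
        f"{context}\n\nQuestion: {question}"
    )


question = "How does photosynthesis store energy?"
print(retrieve(question, DOCUMENTS))  # ['lecture-07', 'paper-12']
print(build_prompt(question, DOCUMENTS))
```

Labeling each passage with its ID is what lets the model name the sources behind an answer, which is the property ASU points to when it says students can check whose ideas the chatbot presents.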

One of the greatest risks is that LLMs might perpetuate or worsen long-standing societal concerns, such as biases and discrimination. For example, when summarizing existing literature, LLMs probably take cues from their training data and give less weight to the viewpoints of people from under-represented groups. ASU says that its platform helps to address such concerns by ensuring that the LLMs provide the sources that they used to generate answers, allowing students to think critically about whose ideas the chatbots present.


Vanderbilt University in Nashville, Tennessee, has an initiative called the Future of Learning and Generative AI. Students who need to use ChatGPT, for courses such as computer science, get access to a paid version. This variant of the chatbot can use other programs to execute computer code, augmenting the bot’s mathematical capabilities.
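Executing code sidesteps the weakness noted earlier, where ChatGPT struggles with calculations that chain four or more additions or subtractions: instead of predicting the digits of an answer, the chatbot can hand the expression to a program. The Python sketch below illustrates that idea only; it is not OpenAI’s implementation, `evaluate` is a hypothetical helper, and it assumes the model has already extracted a plain arithmetic expression from the student’s question. It uses Python’s `ast` module to accept only numbers and basic arithmetic operators, rejecting anything else.

```python
import ast
import operator

# Only these arithmetic operators are allowed; anything else is rejected.
OPS = {
    ast.Add: operator.add,
    ast.Sub: operator.sub,
    ast.Mult: operator.mul,
    ast.Div: operator.truediv,
    ast.USub: operator.neg,
}


def evaluate(expression):
    """Compute an arithmetic expression exactly instead of predicting its answer."""

    def walk(node):
        if isinstance(node, ast.Expression):
            return walk(node.body)
        if isinstance(node, ast.Constant) and isinstance(node.value, (int, float)):
            return node.value
        if isinstance(node, ast.BinOp) and type(node.op) in OPS:
            return OPS[type(node.op)](walk(node.left), walk(node.right))
        if isinstance(node, ast.UnaryOp) and type(node.op) in OPS:
            return OPS[type(node.op)](walk(node.operand))
        raise ValueError(f"unsupported expression: {expression!r}")

    return walk(ast.parse(expression, mode="eval"))


# Four additions/subtractions in one calculation, computed rather than guessed.
print(evaluate("17 + 25 - 8 + 31 - 4"))  # 61
```

Whitelisting node types, rather than calling Python’s `eval` directly, keeps a model-generated expression from running arbitrary code.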

As understanding of the LLMs’ power and limitations increases, more university-wide initiatives will no doubt emerge. Using LLMs without considering their downsides is counterproductive. For many educational purposes, error-prone tools are unhelpful at best and, at worst, damage students’ ability to learn. But some institutes, such as ASU, are trying to reduce the LLMs’ weaknesses — even aiming to turn those into strengths by, for example, using them to improve students’ critical-thinking skills. Educators must be bold to avoid missing a huge opportunity — and vigilant to ensure that institutions everywhere use LLMs in a way that makes the world better, not worse.

Nature 623, 457–458 (2023)

doi: https://doi.org/10.1038/d41586-023-03505-5

1. OpenAI. Preprint at https://arxiv.org/abs/2303.08774 (2023).

2. Shakarian, P. et al. Preprint at https://arxiv.org/abs/2302.13814 (2023).

3. Chen, L. et al. Preprint at https://arxiv.org/abs/2307.09009 (2023).


A new AI chatbot might do your homework for you. But it's still not an A+ student. December 19, 2022, 5:00 AM ET. Emma Bowman. Enter a prompt into ChatGPT, and it becomes your ...

ChatGPT is impressive and can be quite useful. It can help people write text, for instance, and code. However, “it’s not magic,” says Casey Fiesler. In fact, it often seems intelligent and confident while making mistakes — and sometimes parroting biases. By Kathryn Hulick. February 16, 2023 at 6:30 am.

About 1 in 5 U.S. teens who’ve heard of ChatGPT have used it for schoolwork. By Olivia Sidoti and Jeffrey Gottfried. Roughly one-in-five teenagers who have heard of ChatGPT say they have used it to help them do their schoolwork, according to a new Pew Research Center survey of U.S. teens ages 13 to 17.

ChatGPT doesn’t allow for an accurate assessment of understanding. But when used on homework, something usually meant for learning and practice, it can allow a student to more clearly grasp the ...

A large majority of students are already using ChatGPT for homework assignments, creating challenges around plagiarism, cheating, and learning. According to Wharton MBA Professor Christian ...

Cathelin and Cordier both note that students haven’t waited for ChatGPT to try to escape the chore of homework - whether by photocopying the library encyclopaedia, copy-pasting content from ...
