
2.1: Types of Data Representation


- Page ID 5696

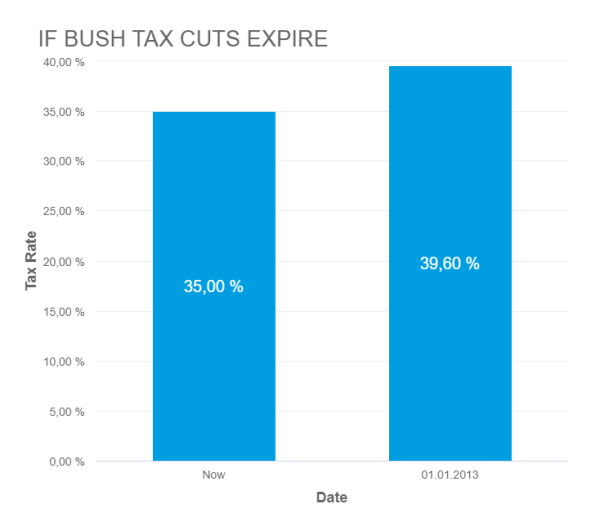

Two common types of graphic displays are bar charts and histograms. Both use vertical or horizontal bars to represent the number of data points in each category or interval. The main graphical difference is that a bar chart has spaces between the bars and a histogram does not. Why does this subtle difference exist, and what does it imply about graphic displays in general?

Displaying Data

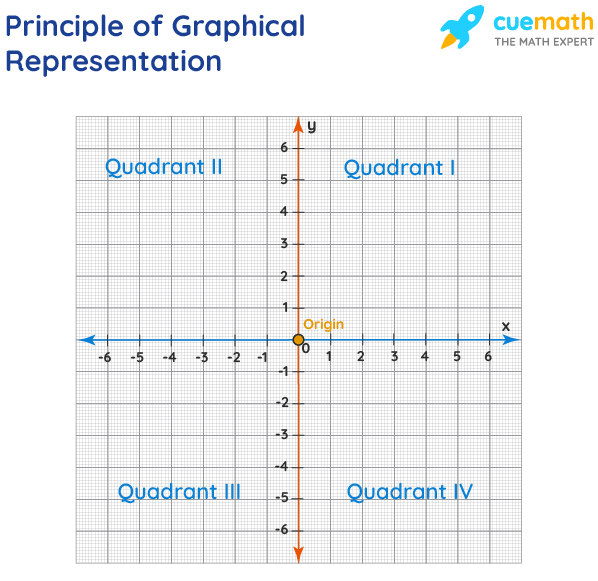

It is often easier for people to interpret relative sizes of data when that data is displayed graphically. Note that a categorical variable is a variable that can take on one of a limited number of values, and a quantitative variable is a variable that takes on numerical values that represent a measurable quantity. Examples of categorical variables are TV stations, the state someone lives in, and eye color, while examples of quantitative variables are the height of students or the population of a city. There are a few common ways of displaying data graphically that you should be familiar with.

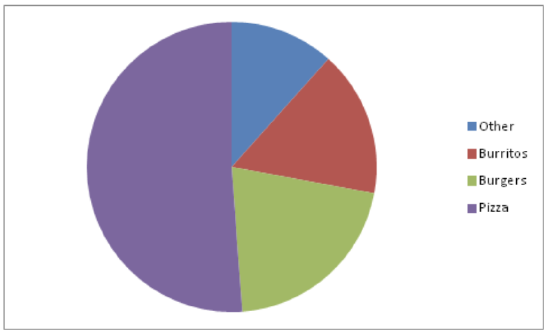

A pie chart shows the relative proportions of data in different categories. Pie charts are an excellent way to display categorical data with easily separable groups. The following pie chart shows six categories labeled A−F. The size of each pie slice is determined by its central angle. Since there are 360° in a circle, the central angle θ_A of category A is the fraction of the data that falls in category A multiplied by 360°: θ_A = (number of data points in A ÷ total number of data points) × 360°.
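The central-angle calculation can be sketched in a few lines of Python. This is only an illustration: the function name `central_angles` and the six counts are made up for the example and are not read from the chart.

```python
def central_angles(counts):
    """Return the central angle, in degrees, of each category's pie slice."""
    total = sum(counts.values())
    # Each slice gets the same fraction of 360 degrees as its share of the data.
    return {category: count / total * 360 for category, count in counts.items()}


# Hypothetical counts for six categories A-F (assumed, not taken from the chart).
counts = {"A": 10, "B": 20, "C": 30, "D": 15, "E": 5, "F": 10}
angles = central_angles(counts)

for category, angle in angles.items():
    print(f"{category}: {angle:.1f} degrees")
```

Whatever counts are used, the angles should add up to 360°, which is a quick way to check a hand calculation.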

CK-12 Foundation - https://www.flickr.com/photos/slgc/16173880801 - CCSA

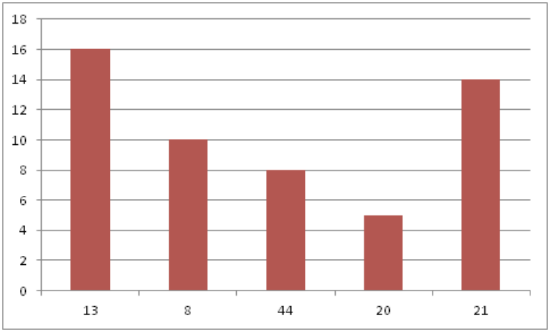

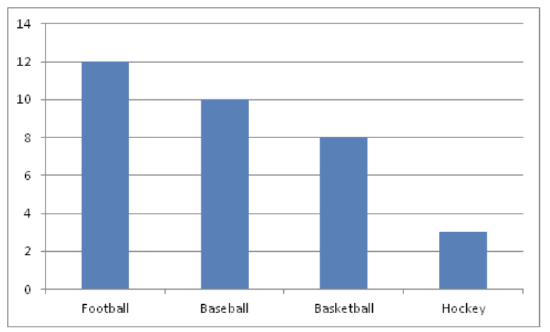

A bar chart displays frequencies of categories of data. The bar chart below has 5 categories, and shows the TV channel preferences for 53 adults. The horizontal axis could have also been labeled News, Sports, Local News, Comedy, Action Movies. The reason why the bars are separated by spaces is to emphasize the fact that they are categories and not continuous numbers. For example, just because you split your time between channel 8 and channel 44 does not mean on average you watch channel 26. Categories can be numbers so you need to be very careful.

CK-12 Foundation - https://www.flickr.com/photos/slgc/16173880801 - CCSA

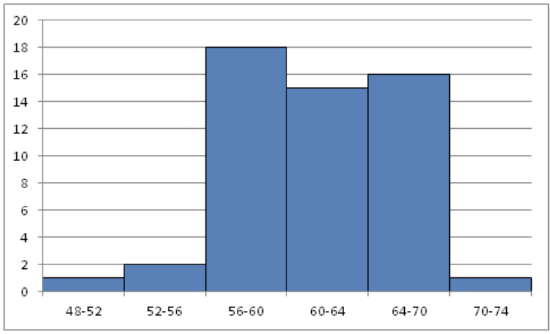

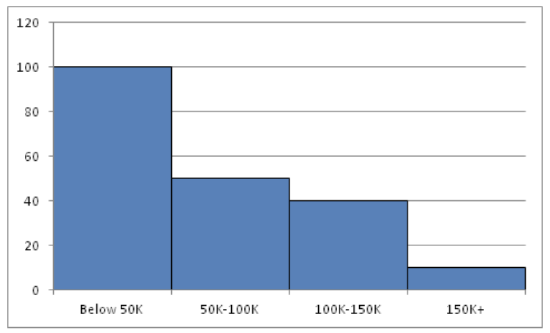

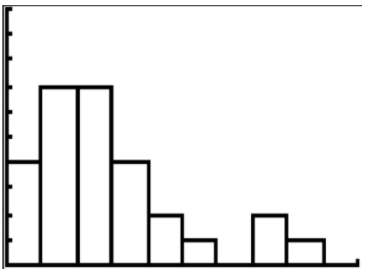

A histogram displays frequencies of quantitative data that has been sorted into intervals. The following is a histogram that shows the heights of a class of 53 students. Notice the largest category is 56-60 inches with 18 people.
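Sorting quantitative data into intervals can be sketched as follows. The heights, the starting value of 51, and the interval width of 5 are assumptions chosen so that one interval is 56-60 inches; they are not the data behind the histogram above, and `bin_counts` is a hypothetical helper name.

```python
from collections import Counter


def bin_counts(values, start, width):
    """Count how many values fall in each interval [low, low + width)."""
    index_counts = Counter(int((v - start) // width) for v in values)
    return {
        (start + i * width, start + (i + 1) * width): n
        for i, n in sorted(index_counts.items())
    }


# Hypothetical whole-number heights in inches (assumed for illustration).
heights = [52, 57, 58, 61, 63, 56, 59, 66, 70, 64]
table = bin_counts(heights, start=51, width=5)

for (low, high), n in table.items():
    # For whole-number data, [56, 61) is the same as the label "56-60".
    print(f"{low}-{high - 1}: {'#' * n}")
```

Because the intervals touch each other with no gaps, the bars of the resulting histogram are drawn without spaces between them.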

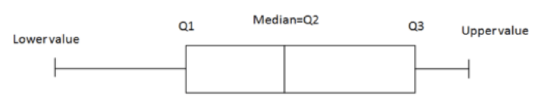

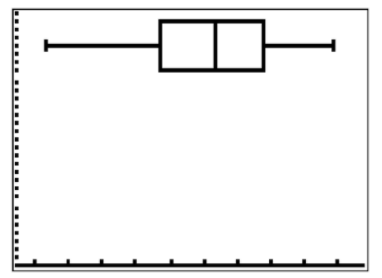

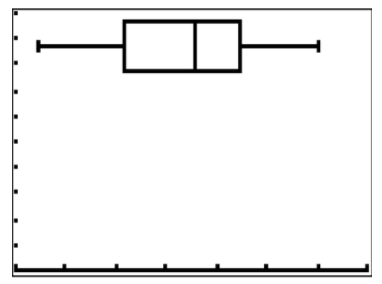

A boxplot (also known as a box-and-whiskers plot) is another way to display quantitative data. It displays the five-number summary (minimum, Q1, median, Q3, maximum). The box can be displayed either vertically or horizontally, depending on the labeling of the axis. The box does not need to be perfectly symmetrical because it represents data that might not be perfectly symmetrical.

Earlier, you were asked about the difference between histograms and bar charts. Bar charts have spaces between the bars and histograms do not because bar charts graph categorical variables while histograms graph quantitative variables. It would be improper to omit the spaces in a bar chart because you would run the risk of implying a spectrum from one side of the chart to the other. Note that in the bar chart where TV stations were shown, the station numbers were not listed horizontally in order by size. This was to emphasize the fact that the stations were categories.

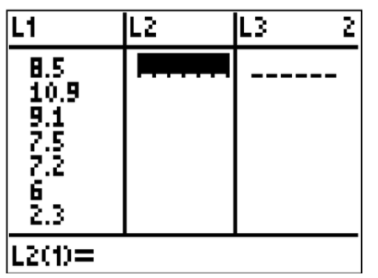

Create a boxplot of the following numbers in your calculator.

8.5, 10.9, 9.1, 7.5, 7.2, 6, 2.3, 5.5

Enter the data into L1 by going into the Stat menu.

CK-12 Foundation - CCSA

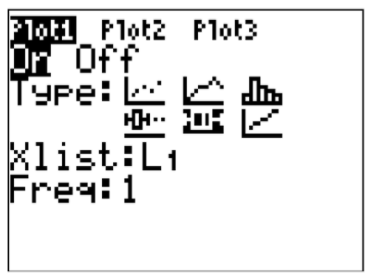

Then turn the statplot on and choose boxplot.

Use Zoomstat to automatically center the window on the boxplot.
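The five-number summary that the boxplot is drawn from can also be computed directly. The sketch below assumes the common convention of taking Q1 and Q3 as the medians of the lower and upper halves of the sorted data (leaving out the middle value when the count is odd); other software may use a slightly different quartile rule, and `five_number_summary` is a name chosen for this example.

```python
from statistics import median


def five_number_summary(data):
    """Return (minimum, Q1, median, Q3, maximum) using the median-of-halves rule."""
    values = sorted(data)
    n = len(values)
    half = n // 2
    lower = values[:half]
    # For an odd count, the middle value belongs to neither half.
    upper = values[half + 1:] if n % 2 else values[half:]
    return (values[0], median(lower), median(values), median(upper), values[-1])


data = [8.5, 10.9, 9.1, 7.5, 7.2, 6, 2.3, 5.5]
summary = five_number_summary(data)
print(summary)
```

For these eight numbers the summary is approximately 2.3, 5.75, 7.35, 8.8, 10.9, which can be compared with the values the calculator reports when tracing the boxplot.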

Create a pie chart to represent the preferences of 43 hungry students.

- Other – 5

- Burritos – 7

- Burgers – 9

- Pizza – 22

Create a bar chart representing the sports preferences of a group of 33 people.

- Football – 12

- Baseball – 10

- Basketball – 8

- Hockey – 3

Create a histogram for the income distribution of 200 million people.

- Below $50,000 is 100 million people

- Between $50,000 and $100,000 is 50 million people

- Between $100,000 and $150,000 is 40 million people

- Above $150,000 is 10 million people

1. What types of graphs show categorical data?

2. What types of graphs show quantitative data?

A math class of 30 students had the following grades:

3. Create a bar chart for this data.

4. Create a pie chart for this data.

5. Which graph do you think makes a better visual representation of the data?

A set of 20 exam scores is 67, 94, 88, 76, 85, 93, 55, 87, 80, 81, 80, 61, 90, 84, 75, 93, 75, 68, 100, 98

6. Create a histogram for this data. Use your best judgment to decide what the intervals should be.

7. Find the five number summary for this data.

8. Use the five number summary to create a boxplot for this data.

9. Describe the data shown in the boxplot below.

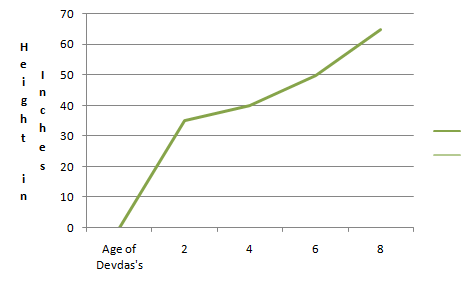

10. Describe the data shown in the histogram below.

A math class of 30 students has the following eye colors:

11. Create a bar chart for this data.

12. Create a pie chart for this data.

13. Which graph do you think makes a better visual representation of the data?

14. Suppose you have data that shows the breakdown of registered republicans by state. What types of graphs could you use to display this data?

15. From which types of graphs could you obtain information about the spread of the data? Note that spread is a measure of how spread out all of the data is.

Review (Answers)

To see the Review answers, open this PDF file and look for section 15.4.

Additional Resources

PLIX: Play, Learn, Interact, eXplore - Baby Due Date Histogram

Practice: Types of Data Representation

Real World: Prepare for Impact

- Reviews / Why join our community?

- For companies

- Frequently asked questions

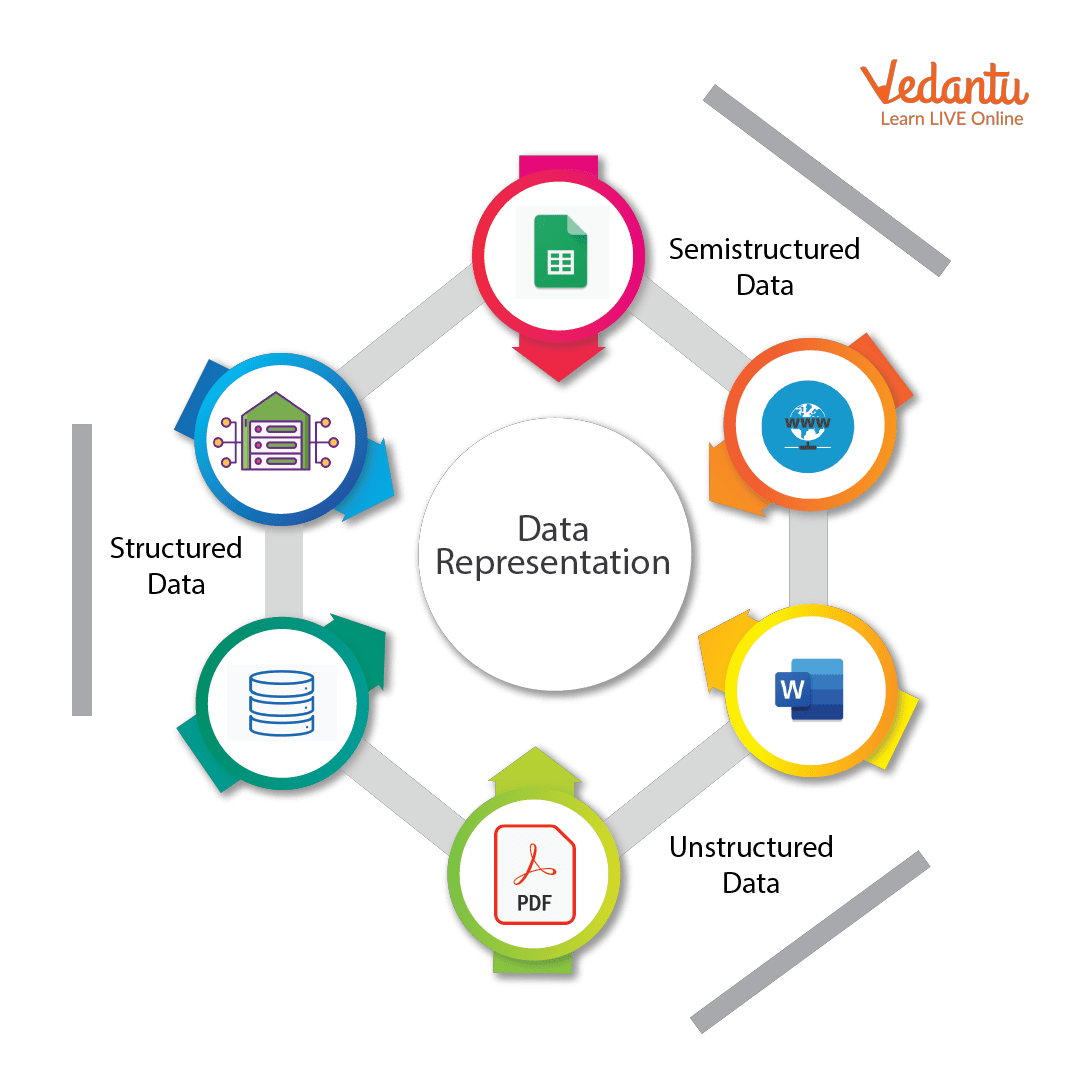

Data Representation

Literature on data representation.

Here’s the entire UX literature on Data Representation by the Interaction Design Foundation, collated in one place:

Learn more about Data Representation

Take a deep dive into Data Representation with our course AI for Designers .

In an era where technology is rapidly reshaping the way we interact with the world, understanding the intricacies of AI is not just a skill, but a necessity for designers . The AI for Designers course delves into the heart of this game-changing field, empowering you to navigate the complexities of designing in the age of AI. Why is this knowledge vital? AI is not just a tool; it's a paradigm shift, revolutionizing the design landscape. As a designer, make sure that you not only keep pace with the ever-evolving tech landscape but also lead the way in creating user experiences that are intuitive, intelligent, and ethical.

AI for Designers is taught by Ioana Teleanu, a seasoned AI Product Designer and Design Educator who has established a community of over 250,000 UX enthusiasts through her social channel UX Goodies. She imparts her extensive expertise to this course from her experience at renowned companies like UiPath and ING Bank, and now works on pioneering AI projects at Miro.

In this course, you’ll explore how to work with AI in harmony and incorporate it into your design process to elevate your career to new heights. Welcome to a course that doesn’t just teach design; it shapes the future of design innovation.

In lesson 1, you’ll explore AI's significance, understand key terms like Machine Learning, Deep Learning, and Generative AI, discover AI's impact on design, and master the art of creating effective text prompts for design.

In lesson 2, you’ll learn how to enhance your design workflow using AI tools for UX research, including market analysis, persona interviews, and data processing. You’ll dive into problem-solving with AI, mastering problem definition and production ideation.

In lesson 3, you’ll discover how to incorporate AI tools for prototyping, wireframing, visual design, and UX writing into your design process. You’ll learn how AI can assist to evaluate your designs and automate tasks, and ensure your product is launch-ready.

In lesson 4, you’ll explore the designer's role in AI-driven solutions, how to address challenges, analyze concerns, and deliver ethical solutions for real-world design applications.

Throughout the course, you'll receive practical tips for real-life projects. In the Build Your Portfolio exercises, you’ll practise how to integrate AI tools into your workflow and design for AI products, enabling you to create a compelling portfolio case study to attract potential employers or collaborators.

All open-source articles on Data Representation

Visual mapping – the elements of information visualization.

- 3 years ago

Rating Scales in UX Research: The Ultimate Guide

Open Access—Link to us!

We believe in Open Access and the democratization of knowledge . Unfortunately, world-class educational materials such as this page are normally hidden behind paywalls or in expensive textbooks.

If you want this to change , cite this page , link to us, or join us to help us democratize design knowledge !



Digital Humanities Data Curation

This is the first stop on your way to mastering the essentials of data curation for the humanities. The Guide offers concise, expert introductions to key topics, including annotated links to important standards, articles, projects, and other resources.

The best place to start is the Table of Contents grid. To find out more about the project, visit the About This Site page. Please browse, read, and contribute. We’re still expanding the site, but take a look around. Happy browsing!

— The Editors


More about the DH Curation Guide

Data curation is an emerging problem for the humanities as both data and analytical practices become increasingly digital. Research groups working with cultural content as well as libraries, museums, archives, and other institutions are all in need of new expertise. This Guide is a first step to understanding the essentials of data curation for the humanities. The expert-written introductions to key topics include links to important standards, documentation, articles, and projects in the field, annotated with enough context from expert editors and the research community to indicate to newcomers how these resources might help them with data curation challenges.

A Community Resource

Intended to help students and those new to the field, the DH Curation Guide also provides a quick reference for teachers, administrators, and anyone seeking an orientation in the issues and practicalities of data curation.

As indicated by the name, this community resource guide is intended to be a living, participatory document. Readers are encouraged to review and comment on every part of this guide, to suggest additional resources, and to contribute to stub articles. Contributions from readers are incorporated at intervals to keep the Guide at the cutting edge. Read more about how to contribute

Browse, comment, contribute! The table of contents provides a road map to the Guide’s current topics and those to be added soon. Read more about this site

What Is Data Visualization? Definition, Examples, And Learning Resources

In our increasingly data-driven world, it’s more important than ever to have accessible ways to view and understand data. After all, the demand for data skills in employees is steadily increasing each year. Employees and business owners at every level need to have an understanding of data and of its impact.

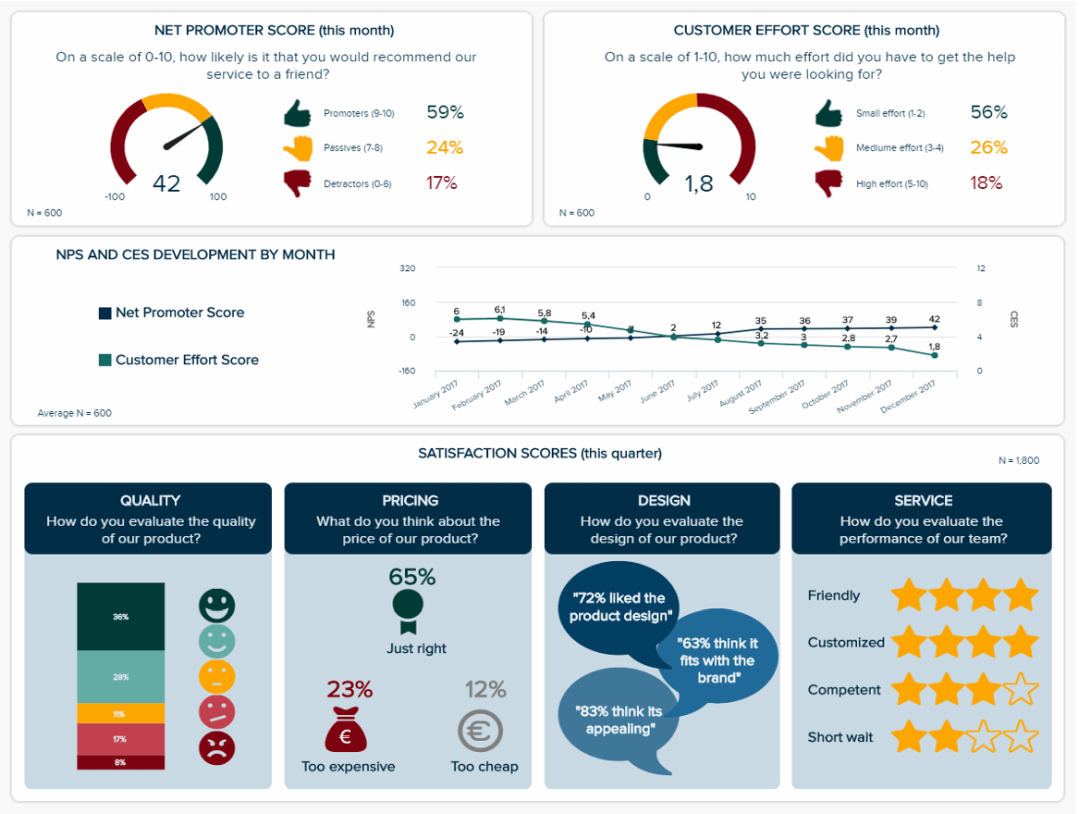

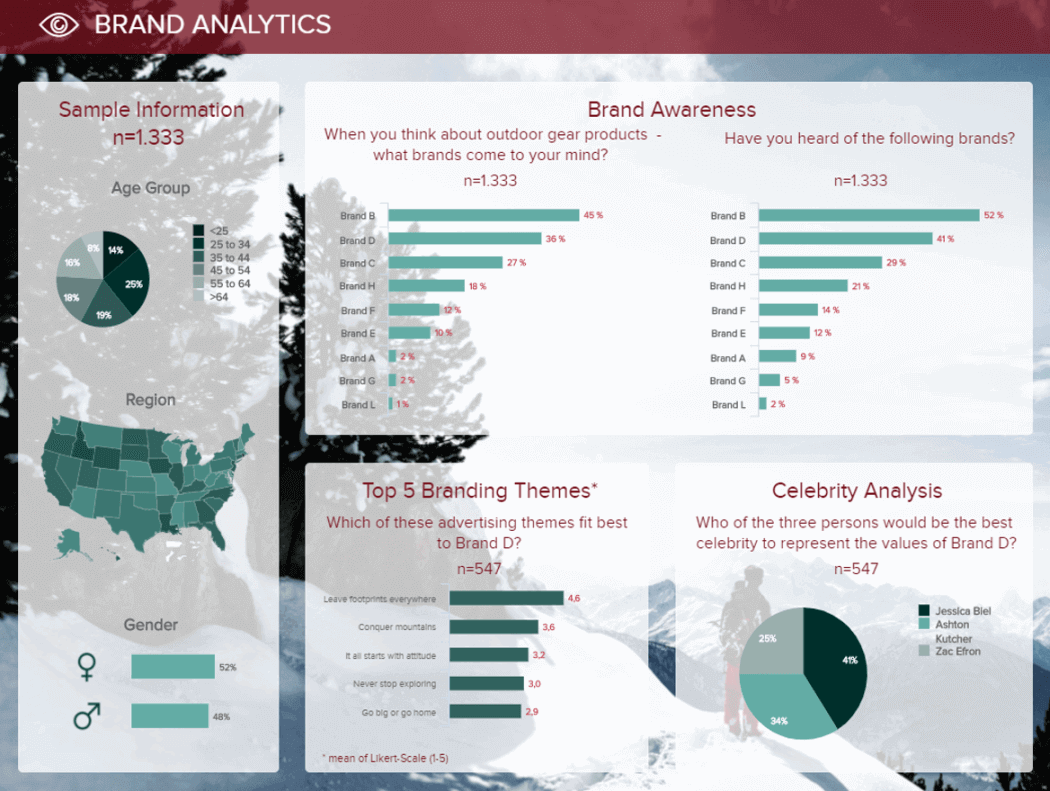

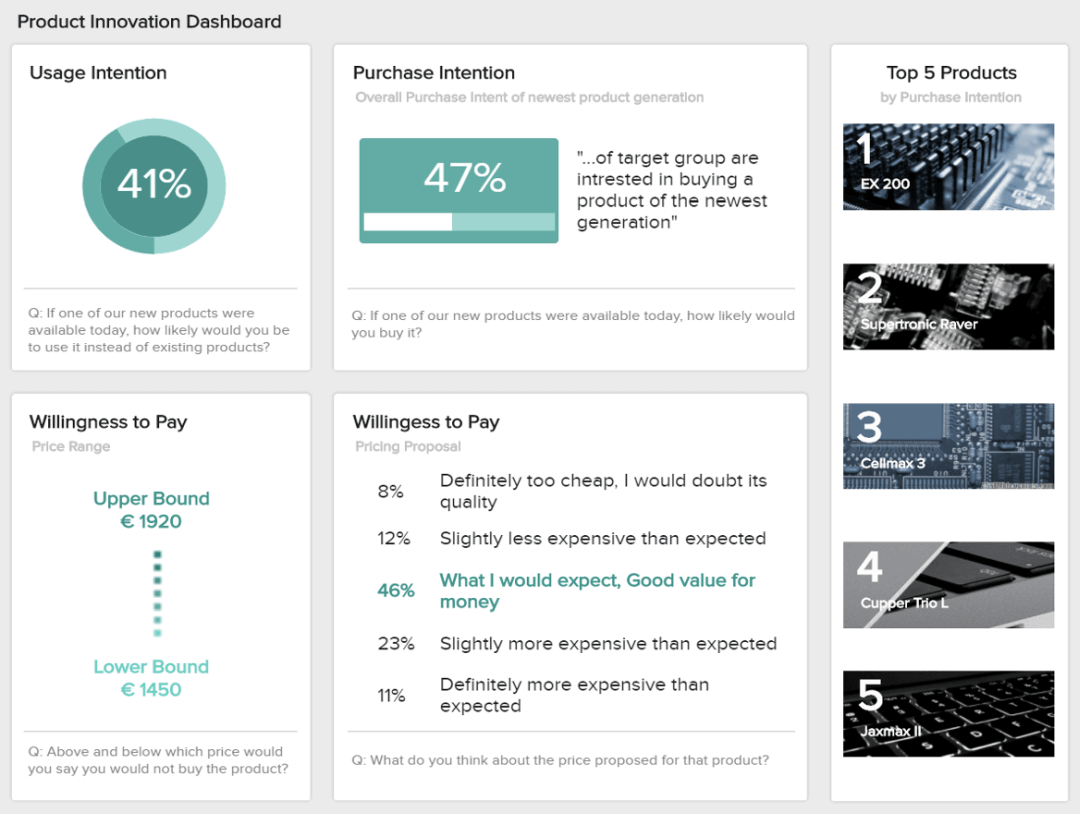

That’s where data visualization comes in handy. With the goal of making data more accessible and understandable, data visualization in the form of dashboards is the go-to tool for many businesses to analyze and share information.

In this article, we'll cover:

- The definition of data visualization

- Advantages and disadvantages of data visualization

- Why data visualization is important

- Data visualization and big data

- Data visualization examples

- Tools and software of data visualization

- More about data visualization

What is data visualization?

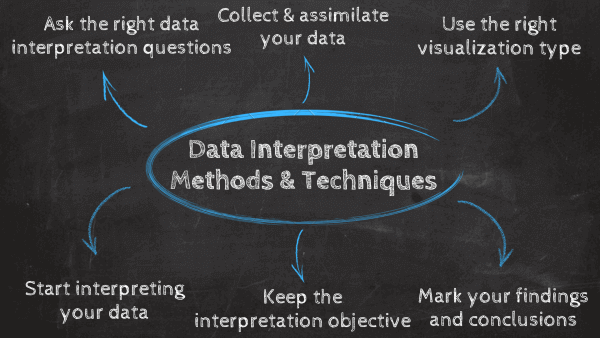

Data visualization is the graphical representation of information and data. By using visual elements like charts, graphs, and maps, data visualization tools provide an accessible way to see and understand trends, outliers, and patterns in data. Additionally, it provides an excellent way for employees or business owners to present data to non-technical audiences without confusion.

In the world of Big Data, data visualization tools and technologies are essential to analyze massive amounts of information and make data-driven decisions.

What are the advantages and disadvantages of data visualization?

Something as simple as presenting data in graphic format may seem to have no downsides. But sometimes data can be misrepresented or misinterpreted when placed in the wrong style of data visualization. When choosing to create a data visualization, it’s best to keep both the advantages and disadvantages in mind.

Advantages

Our eyes are drawn to colors and patterns. We can quickly tell red from blue, and squares from circles. Our culture is visual, including everything from art and advertisements to TV and movies. Data visualization is another form of visual art that grabs our interest and keeps our eyes on the message. When we see a chart, we quickly see trends and outliers. If we can see something, we internalize it quickly. It’s storytelling with a purpose. If you’ve ever stared at a massive spreadsheet of data and couldn’t see a trend, you know how much more effective a visualization can be.

Some other advantages of data visualization include:

- Easily share information.

- Interactively explore opportunities.

- Visualize patterns and relationships.

Disadvantages

While there are many advantages, some of the disadvantages may seem less obvious. For example, when viewing a visualization with many different datapoints, it’s easy to make an inaccurate assumption. Or sometimes the visualization is just designed wrong so that it’s biased or confusing.

Some other disadvantages include:

- Biased or inaccurate information.

- Correlation doesn’t always mean causation.

- Core messages can get lost in translation.

Why data visualization is important

The importance of data visualization is simple: it helps people see, interact with, and better understand data. Whether simple or complex, the right visualization can bring everyone onto the same page, regardless of their level of expertise.

It’s hard to think of a professional industry that doesn’t benefit from making data more understandable. Every STEM field benefits from understanding data, and so do fields in government, finance, marketing, history, consumer goods, service industries, education, sports, and so on.

While we’ll always wax poetic about data visualization (you’re on the Tableau website, after all), there are practical, real-life applications that are undeniable. And, since visualization is so widespread, it’s also one of the most useful professional skills to develop. The better you can convey your points visually, whether in a dashboard or a slide deck, the better you can leverage that information. The concept of the citizen data scientist is on the rise. Skill sets are changing to accommodate a data-driven world. It is increasingly valuable for professionals to be able to use data to make decisions and to use visuals to tell stories in which data informs the who, what, when, where, and how.

While traditional education typically draws a distinct line between creative storytelling and technical analysis, the modern professional world also values those who can cross between the two: data visualization sits right in the middle of analysis and visual storytelling.

Data visualization and big data

As the “age of Big Data” kicks into high gear, visualization is an increasingly key tool to make sense of the trillions of rows of data generated every day. Data visualization helps to tell stories by curating data into a form that is easier to understand, highlighting the trends and outliers. A good visualization tells a story, removing the noise from data and highlighting useful information.

However, it’s not as easy as dressing up a graph to make it look better or slapping on the “info” part of an infographic. Effective data visualization is a delicate balancing act between form and function. The plainest graph could be too boring to catch any notice, or it could make a powerful point; the most stunning visualization could utterly fail at conveying the right message, or it could speak volumes. The data and the visuals need to work together, and there’s an art to combining great analysis with great storytelling.


Examples of data visualization

Different types of visualizations.

When you think of data visualization, your first thought probably goes to simple bar graphs or pie charts. While these may be an integral part of visualizing data and a common baseline for many data graphics, the right visualization must be paired with the right set of information. Simple graphs are only the tip of the iceberg. There’s a whole selection of visualization methods to present data in effective and interesting ways.

General Types of Visualizations:

- Chart: Information presented in a tabular, graphical form with data displayed along two axes. Can be in the form of a graph, diagram, or map.

- Table: A set of figures displayed in rows and columns.

- Graph: A diagram of points, lines, segments, curves, or areas that represents certain variables in comparison to each other, usually along two axes at a right angle.

- Geospatial: A visualization that shows data in map form using different shapes and colors to show the relationship between pieces of data and specific locations.

- Infographic: A combination of visuals and words that represent data. Usually uses charts or diagrams.

- Dashboards: A collection of visualizations and data displayed in one place to help with analyzing and presenting data.

More specific examples

- Area Map: A form of geospatial visualization, area maps are used to show specific values set over a map of a country, state, county, or any other geographic location. Two common types of area maps are choropleths and isopleths.

- Bar Chart: Bar charts represent numerical values compared to each other. The length of the bar represents the value of each variable.

- Box-and-whisker Plots: These show a selection of ranges (the box) across a set measure (the bar).

- Bullet Graph: A bar marked against a background to show progress or performance against a goal, denoted by a line on the graph.

- Gantt Chart: Typically used in project management, Gantt charts are a bar chart depiction of timelines and tasks.

- Heat Map: A type of geospatial visualization in map form which displays specific data values as different colors (this doesn’t need to be temperatures, but that is a common use).

- Highlight Table: A form of table that uses color to categorize similar data, allowing the viewer to read it more easily and intuitively.

- Histogram: A type of bar chart that splits a continuous measure into different bins to help analyze the distribution.

- Pie Chart: A circular chart with wedge-shaped segments that shows data as a percentage of a whole.

- Treemap: A type of chart that shows different, related values in the form of rectangles nested together.

Visualization tools and software

There are dozens of tools for data visualization and data analysis. These range from simple to complex, from intuitive to obscure. Not every tool is right for every person looking to learn visualization techniques, and not every tool can scale to industry or enterprise purposes. If you’d like to learn more about the options, you can read up on them or dive into detailed third-party analysis like the Gartner Magic Quadrant.

Also, remember that good data visualization theory and skills will transcend specific tools and products. When you’re learning this skill, focus on best practices and explore your own personal style when it comes to visualizations and dashboards. Data visualization isn’t going away any time soon, so it’s important to build a foundation of analysis and storytelling and exploration that you can carry with you regardless of the tools or software you end up using.

Learn more about data visualizations

If you’re feeling inspired or want to learn more, there are tons of resources to tap into. Data visualization and data journalism are full of enthusiastic practitioners eager to share their tips, tricks, theory, and more.

Blogs about data visualization

See our list of great data visualization blogs full of examples, inspiration, and educational resources. The experts who write books and teach classes about the theory behind data visualization also tend to keep blogs where they analyze the latest trends in the field and discuss new vizzes. Many will offer critiques on modern graphics or write tutorials to create effective visualizations. Others will collect many different data visualizations from around the web in order to highlight the most intriguing ones. Blogs are a great way to learn more about specific subsets of data visualization or to look for relatable inspiration from well-done projects.


Books about data visualization

Read our list of great books about data visualization theory and practice. While blogs can keep up with the changing field of data visualization, books focus on where the theory stays constant. Humans have been trying to present data in a visual form throughout our entire existence. One of the earlier books about data visualization, originally published in 1983, set the stage for data visualization to come and still remains relevant to this day. More current books still deal with theory and techniques, offering up timeless examples and practical tips. Some even take completed projects and present the visual graphics in book form as an archival display.


Courses and Training

There are plenty of great paid and free courses and resources on data visualization out there, including right here on the Tableau website. There are videos, articles, and whitepapers for everyone from beginners to data rockstars. When it comes to third-party courses, however, we won’t provide specific suggestions in this article at this time.


Additional Resources

10 interactive map and data visualization examples, tips for creating effective, engaging data visualizations.

Data representation: A Comprehensive Overview (2021)

Introduction

The computer is incapable of comprehending human language. Any data fed to a computer, such as letters, symbols, images, audio, videos, and so on, should be converted to machine language first. In this article, we will discuss data representation, binary number representation, pictorial representation of data, graph representation in the data structure, and the importance of graphical representation of data.

- What exactly is Braille?

- Numbers in data representation

- Text in data representation

- Images and Colours in data representation

- Program Instructions in data representation

1. What exactly is Braille?

Braille is a touch-reading and writing system for the blind that uses raised dots to represent the letters of the alphabet. It also includes punctuation mark equivalents as well as symbols that denote letter groupings. Braille is read by moving the hand or hands along each line from left to right. Reading is usually performed with both hands, with the index fingers doing the bulk of the work. Reading pace is about 125 words per minute on average, although higher speeds of up to 200 words per minute can be reached. People who are blind can read and study the written word using the braille alphabet. They can also learn about writing conventions like spelling, punctuation, paragraphing, and footnotes.

2. Numbers in data representation

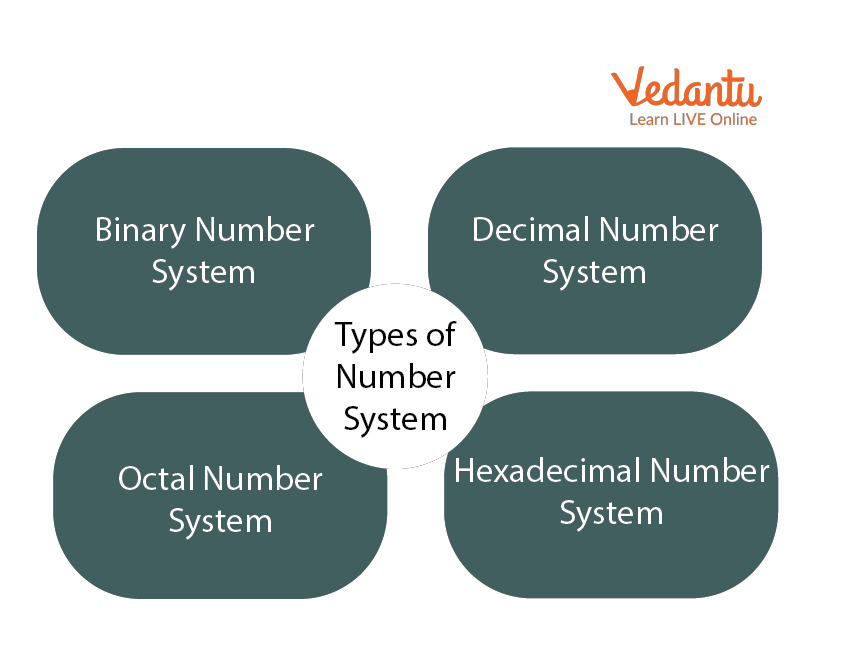

We are taught the idea of numbers from a young age. To a machine, everything is a number, including letters, images, sounds, and so on. There are four common number systems:

- The binary number system uses only two digits: 0 and 1.

- The octal number system uses eight digits (0 to 7) to represent values.

- The decimal number system uses ten digits (0 to 9) to represent values.

- The hexadecimal number system uses 16 digits (0 to 9 and A to F) to represent values.

Bits: A bit is the smallest unit of data that a machine can understand or use. Bits are typically handled in groups in computers.

A byte is a group of eight bits. A nibble is half a byte (four bits).

- Decimal (Base 10): The decimal number system has ten symbols. It’s written in positional notation. The least-significant digit (rightmost digit) is of the order of 10^0 (units or ones).

- Binary (Base 2): The binary number system has two symbols, 0 and 1, which are referred to as bits. It also uses positional notation. A binary number will be denoted by the suffix B. Some programming languages represent binary numbers with the prefix 0b or 0B (e.g., 0b1001000) or with the prefix b and the bits quoted (e.g., b'10001111').

- Hexadecimal (Base 16): The hexadecimal number system employs 16 symbols referred to as hex digits. It also uses positional notation. A hexadecimal number (abbreviated as hex) will be denoted by the suffix H.
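Positional notation works the same way in every base, which a short Python sketch can make concrete. The helper `from_digits` is a hypothetical name written for this illustration; `int`, `bin`, `oct`, and `hex` are Python built-ins.

```python
def from_digits(digits, base):
    """Evaluate a digit string in the given base using positional notation."""
    total = 0
    for digit in digits:
        # Shift the running total one place to the left, then add the new digit.
        total = total * base + int(digit, 16)
    return total


value = 0b1001000  # the binary literal quoted in the text

print(value)       # the same value in decimal
print(bin(value))  # binary, with the 0b prefix
print(oct(value))  # octal, with the 0o prefix
print(hex(value))  # hexadecimal, with the 0x prefix

# The built-in int() parses a digit string in a chosen base.
assert int("1001000", 2) == from_digits("1001000", 2) == value
```

The same quantity, 72, is written 1001000 in binary, 110 in octal, and 48 in hexadecimal; only the notation changes, not the value.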

3. Text in data representation

Text code is a standard format for representing letters, punctuation marks, and other symbols. The four most common text code systems are:

EBCDIC: EBCDIC is an IBM-developed data-encoding scheme that uses a specific eight-bit binary code for each number, alphabetic character, punctuation mark, accented letter, and non-alphabetic character. EBCDIC differs from ASCII, the most commonly used text encoding scheme, in that it divides each character’s eight bits into two four-bit zones.

ASCII: ASCII stands for American Standard Code for Information Interchange. It is a standard for assigning letters, numbers, and other characters to the 128 slots available in a 7-bit code. Each character's ASCII decimal (Dec) number is stored in binary, which is the language of all computers. For example, the lowercase “h” character (Char) has a decimal value of 104, which is “01101000” in binary, as seen in the table below. ASCII was first established and published in 1963 by the X3 committee, which is part of the ASA.
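The lowercase “h” example can be checked with Python's built-in `ord`, `chr`, and `format` functions. This is a small sketch for illustration; the variable names are arbitrary.

```python
char = "h"

code = ord(char)            # decimal code of the character
bits = format(code, "08b")  # the same code written as eight binary digits

print(char, code, bits)

# Going the other way: binary digits -> number -> character.
assert chr(int(bits, 2)) == char

# Encoding a whole string as ASCII yields one byte per character.
encoded = "hi".encode("ascii")
print(list(encoded))
```

Here `code` is 104 and `bits` is "01101000", matching the values quoted in the text.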

Extended ASCII: To represent text in a computer system, we assign a unique number to each character. This number is referred to as its code. We can then use binary ones and zeros to store this code on the computer. ASCII tells computers how to represent text: each character (letter, number, symbol, or control character) is represented by a binary value. Extended ASCII is a variant of ASCII that supports 256 different characters, because it uses eight bits to represent a character instead of the seven used in standard ASCII.

Unicode: Many of these schemes used code pages with only 256 characters, each of which needed 8 bits of storage. Although this was relatively lightweight, it was insufficient to hold ideographic character sets with thousands of characters, such as Chinese and Japanese. It also prevented the coexistence of character sets from multiple languages. Unicode is an attempt to unify all of the various text-encoding systems into a single universal standard.
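The limit of a 256-character code page, and the way Unicode gets around it, can be sketched in Python. The sample characters, the choice of Latin-1 as the example code page, and the use of UTF-8 as the Unicode encoding are assumptions made for this illustration.

```python
text = "A中"  # a Latin letter and a Chinese ideograph

for ch in text:
    code_point = ord(ch)          # the character's Unicode code point
    utf8 = ch.encode("utf-8")     # its bytes in the UTF-8 encoding
    print(ch, f"U+{code_point:04X}", utf8.hex(" "), f"({len(utf8)} bytes)")

# A single-byte code page such as Latin-1 has only 256 slots,
# so it cannot represent the ideograph at all.
try:
    "中".encode("latin-1")
    fits_in_code_page = True
except UnicodeEncodeError:
    fits_in_code_page = False

print("Fits in a 256-character code page:", fits_in_code_page)
```

In UTF-8 the letter "A" still takes one byte, while the ideograph takes three, which is how a single standard can cover both small alphabets and character sets with thousands of symbols.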

4. Images and Colours in data representation

Computer screens and similar devices often use three colours, but they need a different set of primary colours because they are additive: they start with a black screen and add coloured light to it. The additive primary colours used on computers are red, green, and blue (RGB). On a computer screen, each pixel comprises three tiny “lights”: one red, one green, and one blue. All of the different colours can be created by varying the amount of light coming from each of these three.
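A minimal sketch of how one pixel's colour might be stored, assuming 8 bits (values 0 to 255) per channel. That bit depth is a common convention rather than something stated above, and the function names are illustrative.

```python
def pack_rgb(r: int, g: int, b: int) -> int:
    """Pack three 8-bit channel values into one 24-bit integer."""
    for value in (r, g, b):
        if not 0 <= value <= 255:
            raise ValueError("each channel must be between 0 and 255")
    return (r << 16) | (g << 8) | b


def rgb_to_hex(r: int, g: int, b: int) -> str:
    """Format the packed colour as the familiar #RRGGBB string."""
    return f"#{pack_rgb(r, g, b):06X}"


print(rgb_to_hex(255, 255, 0))  # red + green light gives yellow: #FFFF00
```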

5. Program Instructions in data representation

We can represent an entire program in binary in the same way that we represent text or numbers. Since a program is nothing more than a set of instructions, we must first decide how many bits to use for each instruction and how to interpret those bits. A machine-code instruction usually combines two parts: an operation and an operand. Because bits can represent program instructions as well as data such as text, numbers, and images, entire programs can be handled in the same binary format. This makes it possible to store programs on disc or in memory and to transfer them over the internet just like any other file.
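To make the operation/operand idea concrete, here is a sketch of a made-up 8-bit instruction format: the top four bits hold the operation and the bottom four bits hold the operand. The opcode table is entirely hypothetical and does not correspond to any real processor.

```python
# Hypothetical 4-bit opcodes for an imaginary machine.
OPCODES = {"LOAD": 0b0001, "ADD": 0b0010, "STORE": 0b0011}
NAMES = {code: name for name, code in OPCODES.items()}


def encode(operation: str, operand: int) -> int:
    """Combine a 4-bit opcode and a 4-bit operand into one byte."""
    if not 0 <= operand <= 15:
        raise ValueError("operand must fit in 4 bits")
    return (OPCODES[operation] << 4) | operand


def decode(instruction: int) -> tuple[str, int]:
    """Split a byte back into its operation name and operand."""
    return NAMES[instruction >> 4], instruction & 0b1111


word = encode("ADD", 5)
print(format(word, "08b"), decode(word))  # 00100101 ('ADD', 5)
```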

Computers are information-processing devices. You will use them to view, listen to, create, and edit data in documents, photographs, videos, sound, spreadsheets, and databases. They allow you to compute and measure numerical data, as well as send and receive data over networks. For all of this to function, the computer must represent the information in some way within its memory, as well as store it on disc or send it over a network.

For counting and calculating, humans use the decimal (base 10) and duodecimal (base 12) number systems (probably because we have ten fingers and two big toes). Since computers are made up of binary digital components (known as transistors) that operate in two states – on and off – they use the binary (base 2) number system.


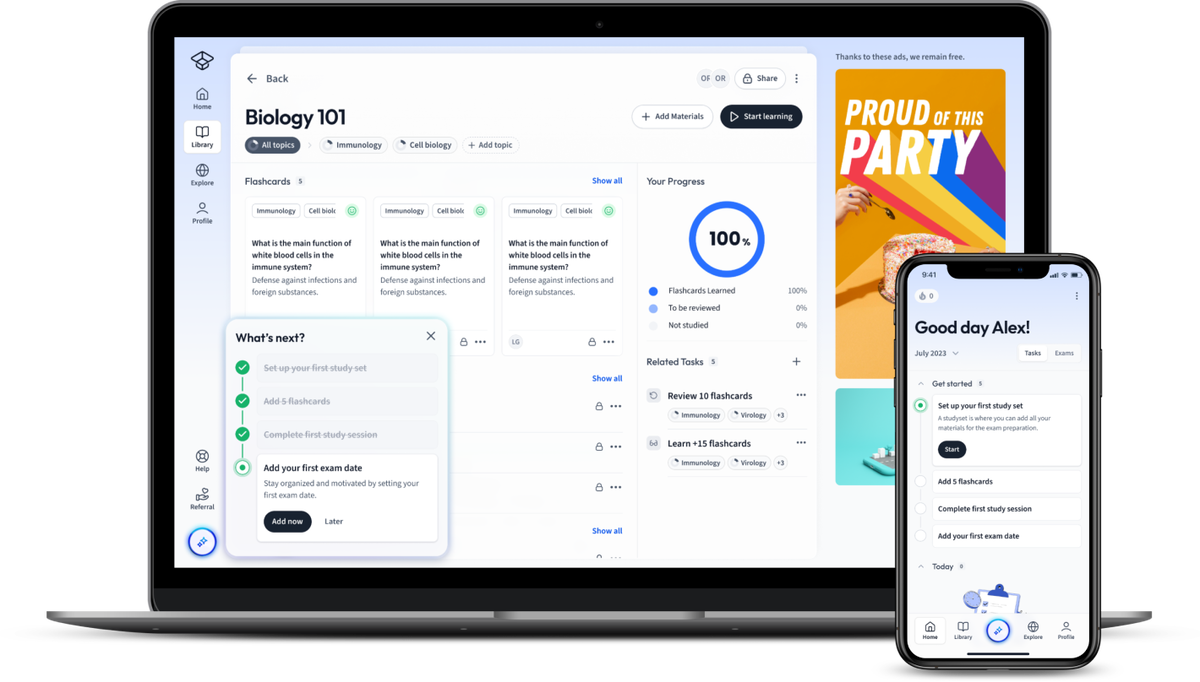

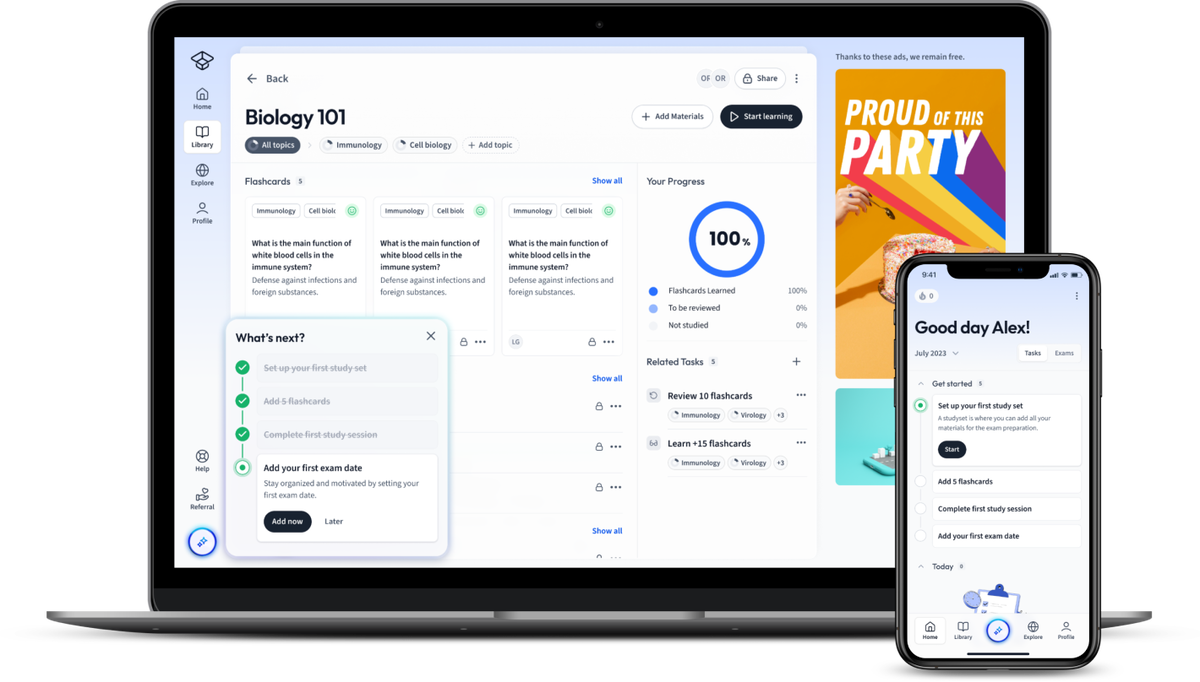

Dive deep into the realm of Computer Science with this comprehensive guide about data representation. Data representation, a fundamental concept in computing, refers to the various ways that information can be expressed digitally. The interpretation of this data plays a critical role in decision-making procedures in businesses and scientific research. Gain an understanding of binary data representation, the backbone of digital computing.

Data Representation in Computer Science


Binary data representation uses a system of numerical notation that has just two possible states represented by 0 and 1 (also known as 'binary digits' or 'bits'). Grasp the practical applications of binary data representation and explore its benefits.

Finally, explore the vast world of data model representation. Different types of data models offer a variety of ways to organise data in databases. Understand the strategic role of data models in data representation, and explore how they are used to design efficient database systems. This comprehensive guide positions you at the heart of data representation in Computer Science.

Understanding Data Representation in Computer Science

In the realm of Computer Science, data representation plays a paramount role. It refers to the methods or techniques used to represent, or express information in a computer system. This encompasses everything from text and numbers to images, audio, and beyond.

Basic Concepts of Data Representation

Data representation in computer science is about how a computer interprets and functions with different types of information. Different information types require different representation techniques. For instance, a video will be represented differently than a text document.

When working with various forms of data, it is important to grasp a fundamental understanding of:

- Binary system

- Bits and Bytes

- Number systems: decimal, hexadecimal

- Character encoding: ASCII, Unicode

Data in a computer system is represented in binary format, as a sequence of 0s and 1s, denoting 'off' and 'on' states respectively. The smallest component of this binary representation is known as a bit, which stands for 'binary digit'.

A byte, on the other hand, generally consists of 8 bits. The decimal and hexadecimal number systems, and character encodings like ASCII and Unicode, are essential for expressing numbers and text in a computer system.

Role of Data Representation in Computer Science

Data Representation is the foundation of computing systems and affects both hardware and software designs. It enables both logic and arithmetic operations to be performed in the binary number system, on which computers are based.

An illustrative example of the importance of data representation is when you write a text document. The characters you type are represented in ASCII code - a set of binary numbers. Each number is sent to the memory, represented as electrical signals; everything you see on your screen is a representation of the underlying binary data.

Computing operations and functions, like searching, sorting or adding, rely heavily on appropriate data representation for efficient execution. Also, computer programming languages and compilers require a deep understanding of data representation to successfully interpret and execute commands.

As technology evolves, so too do our data representation techniques. Quantum computing, for example, uses quantum bits or "qubits". A qubit can represent a 0, a 1, or a combination of both at the same time, thanks to the phenomenon of quantum superposition.

Types of Data Representation

In computer systems, various types of data representation techniques are utilized:

Numbers can be represented in real, integer, and rational formats. Text is represented by using different types of encodings, such as ASCII or Unicode. Images can be represented in various formats like JPG, PNG, or GIF, each having its specific rendering algorithm and compression techniques.

Tables are another important way of data representation, especially in the realm of databases.

This approach is particularly effective in storing structured data, making information readily accessible and easy to handle. By understanding the principles of data representation, you can better appreciate the complexity and sophistication behind our everyday interactions with technology.

Data Representation and Interpretation

To delve deeper into the world of Computer Science, it is essential to study the intricacies of data representation and interpretation. While data representation is about the techniques through which data are expressed or encoded in a computer system, data interpretation refers to the computing machines' ability to understand and work with these encoded data.

Basics of Data Representation and Interpretation

The core of data representation and interpretation is founded on the binary system. Represented by 0s and 1s, the binary system signifies the 'off' and 'on' states of electric current, seamlessly translating them into a language comprehensible to computing hardware.

For instance, the binary number \(1101\) is equivalent to \(13\) in decimal, because \(1 \times 2^3 + 1 \times 2^2 + 0 \times 2^1 + 1 \times 2^0 = 13\). This interpretation happens consistently in the background during all of your interactions with a computer system.

Now, try imagining a vast array of these binary numbers. It could get overwhelming swiftly. To bring order and efficiency to this chaos, binary digits (or bits) are grouped into larger sets like bytes, kilobytes, and so on. A single byte, the most commonly used set, contains eight bits. Here's a simplified representation of how bits are grouped:

- 1 bit = Binary Digit

- 8 bits = 1 byte

- 1024 bytes = 1 kilobyte (KB)

- 1024 KB = 1 megabyte (MB)

- 1024 MB = 1 gigabyte (GB)

- 1024 GB = 1 terabyte (TB)
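The grouping above can be turned into a small converter. This is a sketch that follows the 1024-based steps in the list; the function name `human_size` is made up for illustration.

```python
UNITS = ["bytes", "KB", "MB", "GB", "TB"]


def human_size(num_bytes: int) -> str:
    """Express a byte count in the largest unit from the list that keeps the value below 1024."""
    size = float(num_bytes)
    for unit in UNITS:
        # Stop when the value fits in this unit, or when no larger unit is left.
        if size < 1024 or unit == UNITS[-1]:
            return f"{size:g} {unit}"
        size /= 1024


print(human_size(1536))       # 1.5 KB
print(human_size(1024 ** 3))  # 1 GB
```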

However, the binary system isn't the only number system pivotal for data interpretation. Both decimal (base 10) and hexadecimal (base 16) systems play significant roles in processing numbers and text data. Moreover, translating human-readable language into computer interpretable format involves character encodings like ASCII (American Standard Code for Information Interchange) and Unicode.

These systems interpret alphabetic characters, numerals, punctuation marks, and other common symbols into binary code. For example, the ASCII value for capital 'A' is 65, which corresponds to \(01000001\) in binary.

In the world of images, different encoding schemes interpret pixel data; JPG, PNG, and GIF are common examples of such encoded formats. Similarly, audio files utilise encoding formats like MP3 and WAV to store sound data.

Importance of Data Interpretation in Computer Science

Understanding data interpretation in computer science is integral to unlocking the potential of any computing process or system. When coded data is input into a system, your computer must interpret this data accurately to make it usable.

Consider typing a document in a word processor like Microsoft Word. As you type, each keystroke is converted to an ASCII code by your keyboard. Stored as binary, these codes are transmitted to the active word processing software. The word processor interprets these codes back into alphabetic characters, enabling the correct letters to appear on your screen, as per your keystrokes.

Data interpretation is not just an isolated occurrence, but a recurring necessity - needed every time a computing process must deal with data. This is no different when you're watching a video, browsing a website, or even when the computer boots up.

Rendering images and videos is an ideal illustration of the importance of data interpretation.

Digital photos and videos are composed of tiny dots, or pixels, each encoded with specific numbers to denote colour composition and intensity. Every time you view a photo or play a video, your computer interprets the underlying data and reassembles the pixels to form a comprehensible image or video sequence on your screen.

Data interpretation further extends to more complex territories like facial recognition, bioinformatics, data mining, and even artificial intelligence. In these applications, data from various sources is collected, converted into machine-acceptable format, processed, and interpreted to provide meaningful outputs.

In summary, data interpretation is vital for the functionality, efficiency, and progress of computer systems and the services they provide. Understanding the basics of data representation and interpretation, thereby, forms the backbone of computer science studies.

Delving into Binary Data Representation

Binary data representation is the most fundamental and elementary form of data representation in computing systems. At the lowermost level, every piece of information processed by a computer is converted into a binary format.

Understanding Binary Data Representation

Binary data representation is based on the binary numeral system. This system, also known as the base-2 system, uses only two digits, 0 and 1, to represent all kinds of data. The concept dates back to early 18th-century mathematics and has since found its place as the bedrock of modern computers. In computing, the binary system's digits are called bits (short for 'binary digit'), and they are the smallest indivisible unit of data.

Each bit can be in one of two states representing 0 ('off') or 1 ('on'). Formally, the binary number \( b_n b_{n-1} ... b_2 b_1 b_0 \), is interpreted using the formula: \[ B = b_n \times 2^n + b_{n-1} \times 2^{n-1} + ... + b_2 \times 2^2 + b_1 \times 2^1 + b_0 \times 2^0 \] Where \( b_i \) are the binary digits and \( B \) is the corresponding decimal number.

For example, for the binary number 1011, the process will look like this: \[ B = 1 \times 2^3 + 0 \times 2^2 + 1 \times 2^1 + 1 \times 2^0 = 8 + 0 + 2 + 1 = 11 \]
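The positional formula can be written out directly in code. The sketch below evaluates each digit against its power of two; the function name `binary_to_decimal` is illustrative, and Python's built-in `int(bits, 2)` performs the same conversion.

```python
def binary_to_decimal(bits: str) -> int:
    """Sum b_i * 2**i over the digits of a binary string, most significant digit first."""
    total = 0
    highest_power = len(bits) - 1
    for position, digit in enumerate(bits):
        if digit not in ("0", "1"):
            raise ValueError("binary strings may only contain 0 and 1")
        total += int(digit) * 2 ** (highest_power - position)
    return total


print(binary_to_decimal("1011"))  # 11
```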

This mathematical translation makes it possible for computing machines to perform complex operations even though they understand only the simple language of 'on' and 'off' signals.

When representing character data, computing systems use binary-encoded formats. ASCII and Unicode are common examples. In ASCII, each character is assigned a unique 7-bit binary code. For example, the binary representation for the uppercase letter 'A' is 1000001 (decimal 65), which is usually stored in an 8-bit byte as 01000001. Interpreting such encoded data back to a human-readable format is a core responsibility of computing systems and forms the basis for the exchange of digital information globally.

Practical Application of Binary Data Representation

Binary data representation is used across every single aspect of digital computing. From simple calculations performed by a digital calculator to the complex animations rendered in a high-definition video game, binary data representation is at play in the background.

Consider a simple calculation like 7+5. When you input this into a digital calculator, the numbers and the operation get converted into their binary equivalents. The microcontroller inside the calculator processes these binary inputs, performs the sum operation in binary, and finally, returns the result as a binary output. This binary output is then converted back into a decimal number which you see displayed on the calculator screen.
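The calculation can be traced bit by bit. The sketch below adds two binary strings with a carry, the way pencil-and-paper binary addition works; it illustrates the idea and is not a description of how any particular calculator chip is built.

```python
def add_binary(x: str, y: str) -> str:
    """Add two binary strings digit by digit, carrying into the next column."""
    width = max(len(x), len(y))
    x, y = x.zfill(width), y.zfill(width)  # pad the shorter number with leading zeros
    carry = 0
    digits = []
    for bit_x, bit_y in zip(reversed(x), reversed(y)):
        column = int(bit_x) + int(bit_y) + carry
        digits.append(str(column % 2))  # digit written in this column
        carry = column // 2             # carry passed to the next column
    if carry:
        digits.append("1")
    return "".join(reversed(digits))


result = add_binary(format(7, "b"), format(5, "b"))  # "111" + "101"
print(result, int(result, 2))  # 1100 12
```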

When it comes to text files, every character typed into the document is converted to its binary equivalent using a character encoding system, typically ASCII or Unicode. It is then saved onto your storage device as a sequence of binary digits.

Similarly, for image files, every pixel is represented as a binary number that specifies its colour and intensity. The full grid of these pixel values is called a 'bitmap'. When you open the image file, the computer reads the binary data and presents it on your screen as a colourful, coherent image. The concept extends even further into the internet and network communications, data encryption, data compression, and more.

When you are downloading a file over the internet, it is sent to your system as a stream of binary data. The web browser on your system receives this data, recognizes the type of file and accordingly interprets the binary data back into the intended format.

In essence, every operation that you can perform on a computer system, no matter how simple or complex, essentially boils down to large-scale manipulation of binary data. And that sums up the practical application and universal significance of binary data representation in digital computing.

Binary Tree Representation in Data Structures

Binary trees occupy a central position in data structures, especially in algorithms and database designs. As a non-linear data structure, a binary tree is essentially a tree-like model where each node has a maximum of two children, often distinguished as 'left child' and 'right child'.

Fundamentals of Binary Tree Representation

A binary tree is a tree data structure where each parent node has no more than two children, typically referred to as the left child and the right child. Each node in the binary tree contains:

- A data element

- Pointer or link to the left child

- Pointer or link to the right child

The topmost node of the tree is known as the root. The nodes without any children, usually dwelling at the tree's last level, are known as leaf nodes or external nodes. Binary trees are fundamentally differentiated by their properties and the relationships among the elements. Some types include:

- Full Binary Tree: A binary tree where every node has 0 or 2 children.

- Complete Binary Tree: A binary tree where all levels are completely filled except possibly the last level, which is filled from left to right.

- Perfect Binary Tree: A binary tree where all internal nodes have two children and all leaves are at the same level.

- Skewed Binary Tree: A binary tree where every node has only left child or only right child.

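In a binary tree, the maximum number of nodes \( N \) at any level \( L \) (counting the root as level 1) can be calculated using the formula \( N = 2^{L-1} \). Conversely, a tree with \( N \) nodes needs at least \( \lceil \log_2(N+1) \rceil \) levels, which is its minimum height. The maximum height is \( N \) levels, reached by a skewed tree in which every node has only one child.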

Binary tree representation employs arrays and linked lists. Sometimes, an implicit array-based representation suffices, especially for complete binary trees. The root is stored at index 0, while for each node at index \( i \), the left child is stored at index \( 2i + 1 \), and the right child at \( 2i + 2 \).
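The index arithmetic for the array-based layout can be sketched as follows. The sample tree and the helper name `children` are made up for illustration.

```python
# A complete binary tree stored level by level:
#         A
#       /   \
#      B     C
#     / \   /
#    D   E F
tree = ["A", "B", "C", "D", "E", "F"]


def children(nodes: list, i: int) -> tuple:
    """Return (left, right) children of the node at index i, or None where a child is missing."""
    left, right = 2 * i + 1, 2 * i + 2
    return (
        nodes[left] if left < len(nodes) else None,
        nodes[right] if right < len(nodes) else None,
    )


print(children(tree, 0))  # ('B', 'C')
print(children(tree, 2))  # ('F', None)
```

No pointers are stored at all; the position of a node in the array is enough to locate its children.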

However, the most common representation is the linked-node representation that utilises a node-based structure. Each node in the binary tree is a data structure that contains a data field and two pointers pointing to its left and right child nodes.
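A minimal sketch of the linked-node representation, assuming integer data. The class name `Node` and the in-order traversal helper are illustrative choices, not a fixed convention.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Node:
    data: int
    left: Optional["Node"] = None   # link to the left child, if any
    right: Optional["Node"] = None  # link to the right child, if any


def inorder(node: Optional[Node]) -> list:
    """Visit the left subtree, then the node itself, then the right subtree."""
    if node is None:
        return []
    return inorder(node.left) + [node.data] + inorder(node.right)


root = Node(2, left=Node(1), right=Node(3))
print(inorder(root))  # [1, 2, 3]
```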

Usage of Binary Tree in Data Structures

Binary trees are typically used for expressing hierarchical relationships, and thus find application across various areas in computer science. In mathematical applications, binary trees are ideal for expressing certain elements' relationships.

For example, binary trees are used to represent expressions in arithmetic and Boolean algebra.

Consider an arithmetic expression like (4 + 5) * 6. This can be represented using a binary tree where the operators are parent nodes, and the operands are children. The expression gets evaluated by performing operations in a specific tree traversal order.
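The expression above can be sketched as a tree of nested tuples and evaluated bottom-up (a post-order traversal: both children first, then the operator). The tuple layout `(operator, left, right)` is an assumption made for this example.

```python
import operator

OPERATORS = {
    "+": operator.add,
    "-": operator.sub,
    "*": operator.mul,
    "/": operator.truediv,
}


def evaluate(node):
    """Evaluate an expression tree; leaves are numbers, inner nodes are (op, left, right)."""
    if isinstance(node, (int, float)):
        return node
    symbol, left, right = node
    # Evaluate both subtrees before applying the operator at this node.
    return OPERATORS[symbol](evaluate(left), evaluate(right))


# (4 + 5) * 6: '*' is the root, '+' is its left child, 6 is its right child.
expression = ("*", ("+", 4, 5), 6)
print(evaluate(expression))  # 54
```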

Among the more complex usages, binary search trees, a variant of binary trees, are employed in database engines and file systems.

- Binary Heaps, a type of binary tree, are used as an efficient priority queue in many algorithms like Dijkstra's algorithm and the Heap Sort algorithm.

- Binary trees are also used in creating binary space partition trees, which are used for quickly finding objects in games and 3D computer graphics.

- Syntax trees used in compilers are a direct application of binary trees. They help translate high-level language expressions into machine code.

- Huffman Coding Trees, which are used in data compression algorithms, are another variant of binary trees.
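As one example from the list, a binary heap used as a priority queue can be sketched with Python's standard `heapq` module, which keeps a binary min-heap inside an ordinary list. The job names and priorities below are invented for illustration.

```python
import heapq


def pop_in_priority_order(items):
    """Push (priority, name) pairs onto a heap, then pop them lowest priority number first."""
    heap = []
    for priority, name in items:
        heapq.heappush(heap, (priority, name))
    ordered = []
    while heap:
        ordered.append(heapq.heappop(heap)[1])
    return ordered


jobs = [(3, "archive logs"), (1, "serve request"), (2, "refresh cache")]
print(pop_in_priority_order(jobs))  # ['serve request', 'refresh cache', 'archive logs']
```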

The theoretical underpinnings of all these binary tree applications are the traversal methods and operations, such as insertion and deletion, which are intrinsic to the data structure.

Binary trees are also used in advanced machine-learning algorithms. A decision tree uses a tree-like model of decisions and is often built as a binary tree, with each internal node splitting the data in two. It is one of the most successful forms of supervised learning algorithms in data mining and machine learning.

The advantages of binary trees lie in their efficient organisation and quick data access, making them a cornerstone of many complex data structures and algorithms. Understanding the workings and fundamentals of binary tree representation will give you a stronger footing in the world of data structures and computer science in general.

Grasping Data Model Representation

When dealing with vast amounts of data, organising and understanding the relationships between different pieces of data is of utmost importance. This is where data model representation comes into play in computer science. A data model provides an abstract, simplified view of real-world data. It defines the data elements and the relationships among them, providing an organised and consistent representation of data.

Exploring Different Types of Data Models

Understanding the intricacies of data models will equip you with a solid foundation in making sense of complex data relationships. Some of the most commonly used data models include:

- Hierarchical Model

- Network Model

- Relational Model

- Entity-Relationship Model

- Object-Oriented Model

- Semantic Model

The Hierarchical Model presents data in a tree-like structure, where each record has one parent record and many children. This model is largely applied in file systems and XML documents. The limitations are that this model does not allow a child to have multiple parents, thus limiting its real-world applications.

The Network Model, an enhancement of the hierarchical model, allows a child node to have multiple parent nodes, resulting in a graph structure. This model is suitable for representing complex relationships but comes with its own challenges such as iteration and navigation, which can be intricate.

The Relational Model, created by E.F. Codd, uses a tabular structure to depict data and their relationships. Each row represents a collection of related data values, and each column represents a particular attribute. This is the most widely used model due to its simplicity and flexibility.
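A small sketch of the tabular idea using Python's built-in `sqlite3` module with an in-memory database. The `book` table, its columns, and the sample rows are hypothetical.

```python
import sqlite3

conn = sqlite3.connect(":memory:")

# Each column is an attribute; each row is one collection of related values.
conn.execute("CREATE TABLE book (id INTEGER PRIMARY KEY, title TEXT, year INTEGER)")
conn.executemany(
    "INSERT INTO book (title, year) VALUES (?, ?)",
    [("Example Title A", 1990), ("Example Title B", 2005)],
)

# Query the table by attribute value.
rows = conn.execute(
    "SELECT title FROM book WHERE year > ? ORDER BY title", (2000,)
).fetchall()
print(rows)  # [('Example Title B',)]
```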

The Entity-Relationship Model illustrates the conceptual view of a database. It uses three basic concepts: Entities, Attributes (the properties of these entities), and Relationships among entities. This model is most commonly used in database design.

The Object-Oriented Model goes a step further and adds methods (functions) to the entities besides attributes. This data model integrates the data and the operations applicable to the data into a single component known as an object. Such an approach enables encapsulation, a significant characteristic of object-oriented programming.

The Semantic Model aims to capture more meaning of data by defining the nature of data and the relationships that exist between them. This model is beneficial in representing complex data interrelations and is used in expert systems and artificial intelligence fields.

The Role of Data Models in Data Representation

Data models provide a method for the efficient representation and interaction of data elements, thus forming an integral part of any database system. They provide the theoretical foundation for designing databases, thereby playing an essential role in the development of applications.

A data model is a set of concepts and rules for formally describing and representing real-world data. It serves as a blueprint for designing and implementing databases and assists communication between system developers and end-users.

Databases serve as vast repositories, storing a plethora of data. Such vast data needs effective organisation and management for optimal access and usage. Here, data models come into play, providing a structural view of data, thereby enabling the efficient organisation, storage and retrieval of data.

Consider a library system. The system needs to record data about books, authors, publishers, members, and loans. All these items represent different entities. Relationships exist between these entities. For example, a book is published by a publisher, an author writes a book, or a member borrows a book. Using an Entity-Relationship Model, we can effectively represent all these entities and relationships, aiding the library system's development process.
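Part of the library example can be sketched in code, with entities as small classes and the "borrows" relationship as a class that links two entities. All class names, fields, and sample values here are assumptions made for illustration; a real design would usually start from an ER diagram rather than code.

```python
from dataclasses import dataclass
from datetime import date


@dataclass(frozen=True)
class Book:            # entity
    isbn: str          # attribute
    title: str         # attribute


@dataclass(frozen=True)
class Member:          # entity
    member_id: int
    name: str


@dataclass(frozen=True)
class Loan:            # relationship: a member borrows a book
    book: Book
    member: Member
    due: date


def books_borrowed_by(loans, member):
    """Follow the Loan relationship from a member to the titles they have borrowed."""
    return [loan.book.title for loan in loans if loan.member == member]


book = Book("000-0", "Example Title")
alice = Member(1, "Alice")
loans = [Loan(book, alice, date(2024, 1, 31))]
print(books_borrowed_by(loans, alice))  # ['Example Title']
```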

Designing such a model requires careful consideration of what data is required to be stored and how different data elements relate to each other. Depending on their specific requirements, database developers can select the most suitable data model representation. This choice can significantly affect the functionality, performance, and scalability of the resulting databases.

From decision-support systems and expert systems to distributed databases and data warehouses, data models find a place in various applications.

Modern NoSQL databases often use several models simultaneously to meet their needs, for example a document-based model for unstructured data and a column-based model for analysing large data sets. In this way, data models continue to evolve and adapt to the burgeoning needs of the digital world.

Therefore, acquiring a strong understanding of data model representations and their roles forms an integral part of the database management and design process. It empowers you with the ability to handle large volumes of diverse data efficiently and effectively.

Data Representation - Key takeaways

- Data representation refers to techniques used to express information in computer systems, encompassing text, numbers, images, audio, and more.

- Data Representation is about how computers interpret and function with different information types, including binary systems, bits and bytes, number systems (decimal, hexadecimal) and character encoding (ASCII, Unicode).

- Binary Data Representation is the conversion of all kinds of information processed by a computer into binary format.

- Binary Tree Representation is used to express hierarchical relationships across various areas in computer science, to represent relationships in mathematical applications, and in database engines, file systems, and priority queues in algorithms.

- Data Model Representation is an abstract, simplified view of real-world data that defines the data elements, and their relationships and provides a consistently organised way of representing data.

Frequently Asked Questions about Data Representation in Computer Science

What is data representation?

Data representation is the method used to encode information into a format that can be used and understood by computer systems. It involves the conversion of real-world data, such as text, images, sounds, numbers, into forms like binary or hexadecimal which computers can process. The choice of representation can affect the quality, accuracy and efficiency of data processing. Precisely, it's how computer systems interpret and manipulate data.

What does data representation mean?

Data representation refers to the methods or techniques used to express, display or encode data in a readable format for a computer or a user. This could be in different forms such as binary, decimal, or alphabetic forms. It's crucial in computer science since it links the abstract world of thought and concept to the concrete domain of signals, signs and symbols. It forms the basis of information processing and storage in contemporary digital computing systems.

Why is data representation important?

Data representation is crucial as it allows information to be processed, transferred, and interpreted in a meaningful way. It helps in organising and analysing data effectively, providing insights for decision-making processes. Moreover, it facilitates communication between the computer system and the real world, enabling computing outcomes to be understood by users. Finally, accurate data representation ensures integrity and reliability of the data, which is vital for effective problem solving.

How to make a graphical representation of data?

To create a graphical representation of data, first collect and organise your data. Choose a suitable form of data representation such as bar graphs, pie charts, line graphs, or histograms depending on the type of data and the information you want to display. Use a data visualisation tool or software such as Excel or Tableau to help you generate the graph. Always remember to label your axes and provide a title and legend if necessary.

What is data representation in statistics?

Data representation in statistics refers to the various methods used to display or present data in meaningful ways. This often includes the use of graphs, charts, tables, histograms or other visual tools that can help in the interpretation and analysis of data. It enables efficient communication of information and helps in drawing statistical conclusions. Essentially, it's a way of providing a visual context to complex datasets, making the data easily understandable.

Test your knowledge with multiple choice flashcards

What is data representation in computer science?

What are some of the fundamental concepts to understand when dealing with data representation?

Why is data representation crucial in computer science?

Your score:

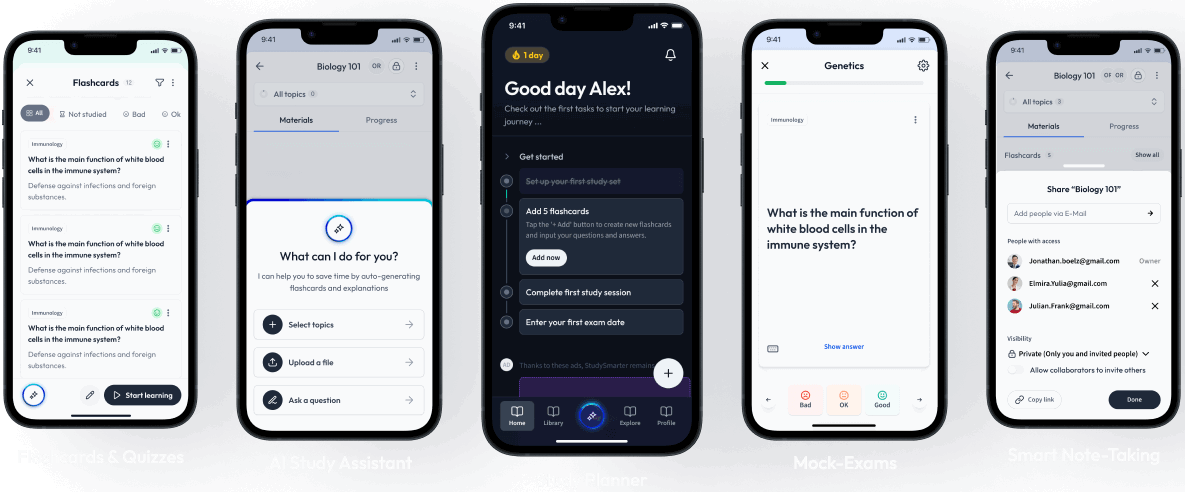

Join the StudySmarter App and learn efficiently with millions of flashcards and more!

Learn with 441 data representation in computer science flashcards in the free studysmarter app.

Already have an account? Log in

Data representation in computer science refers to the methods used to express information in a computer system. It's how a computer interprets and functions with different information types, ranging from text and numbers to images, audio, and beyond.

When dealing with data representation, one should understand the binary system, bits and bytes, number systems like decimal and hexadecimal, and character encoding such as ASCII and Unicode.

Data representation forms the foundation of computer systems and affects hardware and software designs. It enables logic and arithmetic operations to be performed in the binary number system, and is integral to computer programming languages and compilers.

What is the relationship between data representation and the binary system in computer systems?

The core of data representation in computer systems is based on the binary system, which uses 0s and 1s, representing 'off' and 'on' states of electric current. These translate into a language that computer hardware can understand.

What are the larger sets into which binary digits or bits are grouped for efficiency and order?

Binary digits or bits are grouped into larger sets like bytes, kilobytes, MB, GB and TB. For instance, 8 bits make up a byte, and 1024 bytes make up a kilobyte.

How does data interpretation contribute to the functionality of computer systems and services?

Data interpretation is vital as it allows coded data to be accurately translated into a usable format for any computer process or system. It is a recurring necessity whenever a computing process has to deal with data.



About Data Representation

Data can be anything, including a number, a name, musical notes, or the colour of an image. Data representation refers to the way data is stored, processed, and transmitted. Any device, including computers, smartphones, and iPads, can store data in digital format, and the stored data is handled by electronic circuitry. A bit, the basic unit of digital data representation, is a single 0 or 1.

Data Representation Techniques

Classification of Computers

Computers are classified broadly based on their speed and computing power.

1. Microcomputers or PCs (Personal Computers): It is a single-user computer system with a medium-power microprocessor. It is referred to as a computer with a microprocessor as its central processing unit.

Microcomputer

2. Mini-Computer: It is a multi-user computer system that can support hundreds of users at the same time.

Types of Mini Computers

3. Mainframe Computer: It is a multi-user computer system that can also support hundreds of users at the same time. Its software technology is distinct from that of minicomputers.

Mainframe Computer

4. Super-Computer: It is a very fast computer, able to process hundreds of millions of instructions per second. Supercomputers are used for specialised applications requiring enormous amounts of mathematical computation, but they are very expensive.

Supercomputer

Types of Computer Number System

Every value saved to or obtained from computer memory uses a specific number system, which is the method used to represent numbers in the computer system architecture. One needs to be familiar with number systems in order to read computer language or interact with the system.

Types of Number System

1. Binary Number System

There are only two digits in a binary number system: 0 and 1. In this number system, every number (value) is represented using only 0 and 1. Because the binary number system only has two digits, its base is 2.

Each binary digit is also called a bit. The binary number system is also a positional value system, where each digit's value is expressed in powers of 2.

Characteristics of Binary Number System

The following are the primary characteristics of the binary system:

It only has two digits, zero and one.

Depending on its position, each digit has a different value.

Each position corresponds to a power of the base, 2.

It is used in all types of computers because their circuits work with two distinct voltage levels, which map naturally onto 0 and 1.
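The positional rule in the characteristics above can be sketched in a few lines of Python. This is only an illustration: the function name `binary_to_decimal` is hypothetical, and Python's built-in `int(text, 2)` performs the same conversion.

```python
def binary_to_decimal(bits: str) -> int:
    """Sum each binary digit multiplied by the power of 2 for its position."""
    total = 0
    # The rightmost digit is position 0, so walk the string from right to left.
    for position, digit in enumerate(reversed(bits)):
        if digit not in "01":
            raise ValueError(f"not a binary digit: {digit!r}")
        total += int(digit) * 2**position
    return total


print(binary_to_decimal("1011"))                    # 8 + 0 + 2 + 1 = 11
print(binary_to_decimal("1011") == int("1011", 2))  # True
```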

Binary Number System

2. Decimal Number System

The decimal number system is a base ten number system with ten digits ranging from 0 to 9. This means that these ten digits can represent any numerical quantity. The decimal number system is also a positional value system. This means that the value of each digit is determined by its position.

Characteristics of Decimal Number System

Ten units of a given order equal one unit of the higher order, making it a decimal system.

The number 10 serves as the foundation for the decimal number system.

The value of each digit or number will depend on where it is located within the numeric figure because it is a positional system.

The value of a number is the sum of each digit multiplied by the power of 10 for its position.

Decimal Number System

Decimal Binary Conversion Table

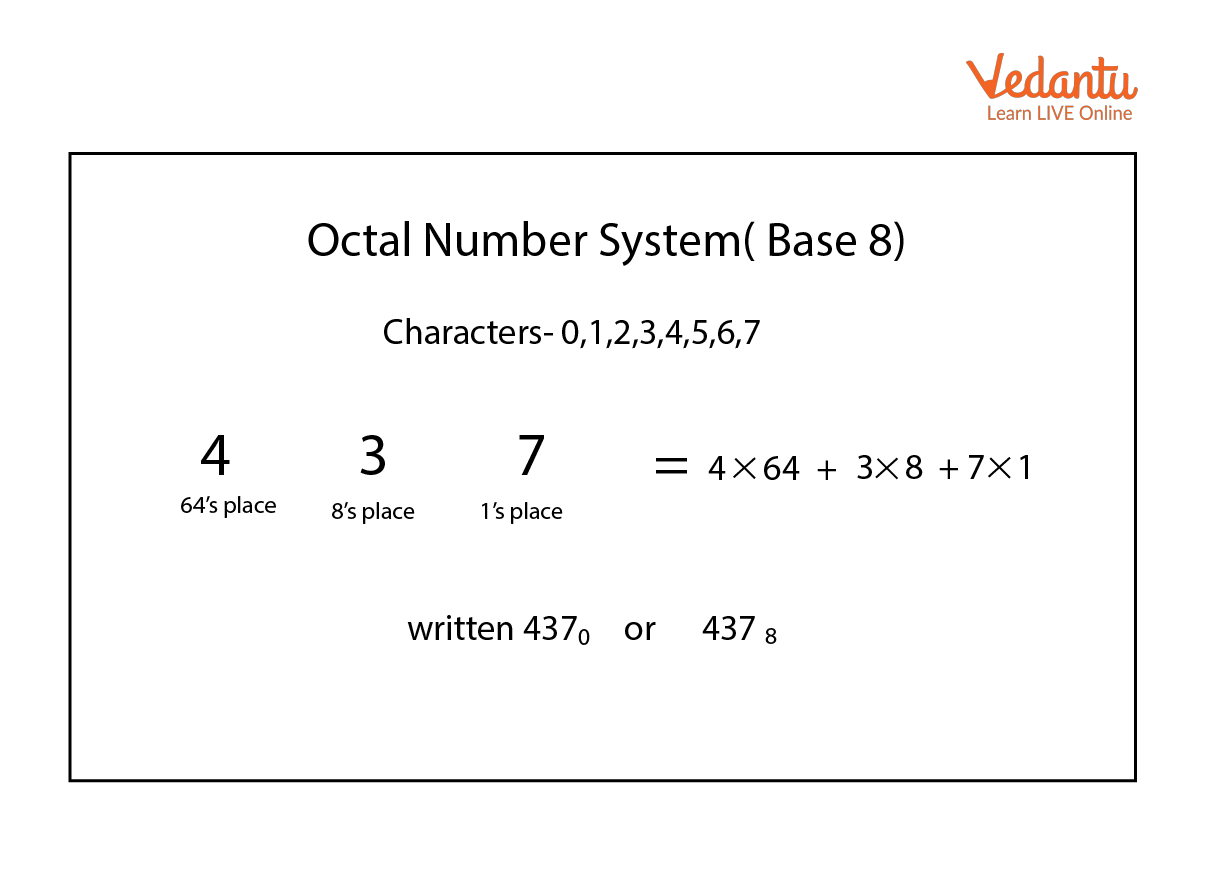

3. Octal Number System

There are only eight (8) digits in the octal number system, from 0 to 7. In this number system, each number (value) is represented by the digits 0, 1, 2, 3, 4, 5, 6, and 7. Since the octal number system only has 8 digits, its base is 8.

Characteristics of Octal Number System:

Contains eight digits: 0,1,2,3,4,5,6,7.

Also known as the base 8 number system.

Each position in an octal number represents a power of the base (8).

The rightmost position corresponds to 8 to the power 0, and the position x places to its left corresponds to 8 to the power x.
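To make the positional weights concrete, here is a small sketch that expands an octal number by hand and then checks the result against Python's built-in `int` and `oct`. The sample value `157` is an arbitrary choice for illustration.

```python
number = "157"  # an octal number chosen only as an example

# Weights for each position, leftmost first: 8**2, 8**1, 8**0.
weights = [8**power for power in range(len(number) - 1, -1, -1)]
value = sum(int(digit) * weight for digit, weight in zip(number, weights))

print(weights)         # [64, 8, 1]
print(value)           # 1*64 + 5*8 + 7*1 = 111
print(int(number, 8))  # 111, the same conversion using the built-in
print(oct(value))      # 0o157, converting back to octal
```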

Octal Number System

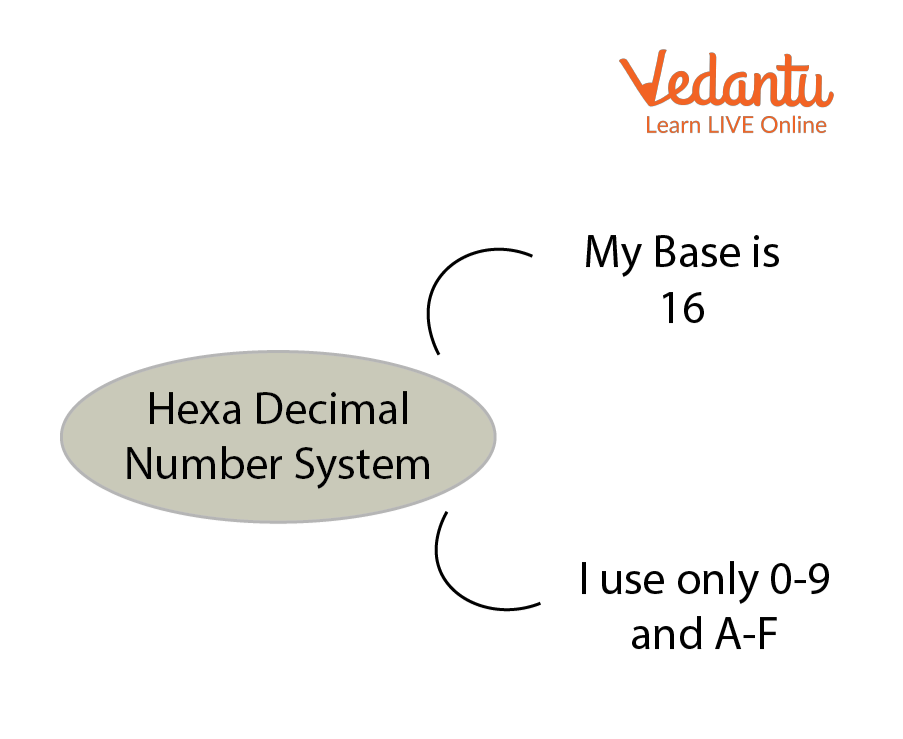

4. Hexadecimal Number System

There are sixteen (16) alphanumeric values in the hexadecimal number system, ranging from 0 to 9 and A to F. In this number system, each number (value) is represented by 0, 1, 2, 3, 4, 5, 6, 7, 8, 9, A, B, C, D, E, and F. Because the hexadecimal number system has 16 alphanumeric values, its base is 16. Here, the letters stand for A = 10, B = 11, C = 12, D = 13, E = 14, and F = 15.

Characteristics of Hexadecimal Number System:

A system of positional numbers.

Has 16 symbols or digits overall (0, 1, 2, 3, 4, 5, 6, 7, 8, 9, A, B, C, D, E, F). Its base is, therefore, 16.

Decimal values 10, 11, 12, 13, 14, and 15 are represented by the letters A, B, C, D, E, and F, respectively.

A single digit may have a maximum value of 15.

Each digit position corresponds to a different base power (16).

Since there are only 16 digits, any single hexadecimal digit can be represented in binary with 4 bits.
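The link between hexadecimal digits and 4-bit groups can be sketched as follows. The helper name `hex_to_binary` is hypothetical, and the groups are separated by spaces only to make them easier to read.

```python
def hex_to_binary(hex_string: str) -> str:
    """Replace each hexadecimal digit with its 4-bit binary group."""
    # int(digit, 16) gives the digit's value (0 to 15); "04b" pads it to 4 bits.
    return " ".join(format(int(digit, 16), "04b") for digit in hex_string)


print(hex_to_binary("2F"))  # 0010 1111
print(int("2F", 16))        # 2*16 + 15 = 47
```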

Hexadecimal Number System

So far, we have seen how number systems are used to represent numerical data in a computer. In addition to numerical data, the computer must support the full character set of the English language, which includes all letters, punctuation marks, mathematical operators, special symbols, etc.

Learning By Doing

Choose the correct answer:

1. Which computer is the largest in terms of size?

Minicomputer

Micro Computer

2. The binary number 11011001 is converted to what decimal value?

Solved Questions

1. Give some examples where Supercomputers are used.

Ans: Weather Prediction, Scientific simulations, graphics, fluid dynamic calculations, Nuclear energy research, electronic engineering and analysis of geological data.

2. Which of these is the most costly?

Mainframe computer

Ans: C) Supercomputer

FAQs on Introduction to Data Representation

1. What is the distinction between the Hexadecimal and Octal Number System?

The octal number system is a base-8 number system in which the digits 0 through 7 are used to represent numbers. The hexadecimal number system is a base-16 number system that employs the digits 0 through 9 as well as the letters A through F to represent numbers.

2. What is the smallest data representation?

The smallest unit of data is the bit, a single 0 or 1. The smallest addressable unit of storage in most computers' memory is the byte, which comprises 8 bits.

3. What is the largest data unit?

The largest commonly available data storage unit is a terabyte or TB. A terabyte equals 1,000 gigabytes, while a tebibyte equals 1,024 gibibytes.
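The difference between the two units in this answer is easy to check numerically. The sketch below assumes the usual definitions: decimal prefixes are powers of 1000 and binary prefixes are powers of 1024. The constant names are chosen only for this example.

```python
# Decimal prefixes are powers of 1000; binary prefixes are powers of 1024.
GIGABYTE = 1000**3
TERABYTE = 1000 * GIGABYTE  # 10**12 bytes
GIBIBYTE = 1024**3
TEBIBYTE = 1024 * GIBIBYTE  # 2**40 bytes

print(TERABYTE)             # 1000000000000
print(TEBIBYTE)             # 1099511627776
print(TEBIBYTE - TERABYTE)  # extra bytes in a tebibyte
```

A tebibyte is therefore roughly 10% larger than a terabyte.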

Data Representation in Computer: Number Systems, Characters, Audio, Image and Video

- Post author: Anuj Kumar

- Post published: 16 July 2021

- Post category: Computer Science


Table of Contents

- 1 What is Data Representation in Computer?

- 2.1 Binary Number System

- 2.2 Octal Number System

- 2.3 Decimal Number System

- 2.4 Hexadecimal Number System

- 3.4 Unicode

- 4 Data Representation of Audio, Image and Video

- 5.1 What is number system with example?

What is Data Representation in Computer?

A computer uses a fixed number of bits to represent a piece of data which could be a number, a character, image, sound, video, etc. Data representation is the method used internally to represent data in a computer. Let us see how various types of data can be represented in computer memory.

Before discussing data representation of numbers, let us see what a number system is.

Number Systems

Number systems are the techniques used to represent numbers in the computer system architecture. Every value that you save to or read from computer memory has a defined number system.

A number is a mathematical object used to count, label, and measure. A number system is a systematic way to represent numbers. The number system we use in our day-to-day life is the decimal number system that uses 10 symbols or digits.

The number 289 is pronounced as two hundred and eighty-nine and it consists of the symbols 2, 8, and 9. Similarly, there are other number systems. Each has its own symbols and method for constructing a number.

A number system has a unique base, which depends upon the number of symbols. The number of symbols used in a number system is called the base or radix of a number system.

Let us discuss some of the number systems. Computer architecture supports the following number systems:

- Binary number system

- Octal number system

- Decimal number system

- Hexadecimal number system

Binary Number System

A binary number system has only two digits, 0 and 1. Every number (value) is represented with 0 and 1 in this number system. The base of the binary number system is 2 because it has only two digits.

Octal Number System

The octal number system has only eight (8) digits, from 0 to 7. Every number (value) is represented with 0, 1, 2, 3, 4, 5, 6 and 7 in this number system. The base of the octal number system is 8 because it has only 8 digits.

Decimal Number System

The decimal number system has only ten (10) digits, from 0 to 9. Every number (value) is represented with 0, 1, 2, 3, 4, 5, 6, 7, 8 and 9 in this number system. The base of the decimal number system is 10 because it has only 10 digits.

Hexadecimal Number System

A hexadecimal number system has sixteen (16) alphanumeric values, from 0 to 9 and A to F. Every number (value) is represented with 0, 1, 2, 3, 4, 5, 6, 7, 8, 9, A, B, C, D, E and F in this number system. The base of the hexadecimal number system is 16 because it has 16 alphanumeric values.

Here A is 10, B is 11, C is 12, D is 13, E is 14 and F is 15.
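The four systems described above differ only in their base, so a single routine can write a number in any of them. The following is a minimal sketch using repeated division by the base. The names `to_base` and `DIGITS` are hypothetical, and the sample value 289 is the number used earlier in this section.

```python
DIGITS = "0123456789ABCDEF"  # symbols available for bases up to 16


def to_base(value: int, base: int) -> str:
    """Write a non-negative integer using the symbols of the given base (2 to 16)."""
    if not 2 <= base <= len(DIGITS):
        raise ValueError("base must be between 2 and 16")
    if value < 0:
        raise ValueError("value must be non-negative")
    if value == 0:
        return "0"
    symbols = []
    while value > 0:
        # The remainder is the next digit, starting from the rightmost position.
        value, remainder = divmod(value, base)
        symbols.append(DIGITS[remainder])
    return "".join(reversed(symbols))


for base in (2, 8, 10, 16):
    print(base, to_base(289, base))
# 2 100100001
# 8 441
# 10 289
# 16 121
```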

Data Representation of Characters

There are different methods to represent characters. Some of them are discussed below:

The code called ASCII (pronounced "ASK-ee"), which stands for American Standard Code for Information Interchange, uses 7 bits to represent each character in computer memory. The ASCII representation has been adopted as a standard by the U.S. government and is widely accepted.

A unique integer number is assigned to each character. This number, called the ASCII code of that character, is converted into binary for storing in memory. For example, the ASCII code of A is 65, and its 7-bit binary equivalent is 1000001.

Since there are exactly 128 unique combinations of 7 bits, this 7-bit code can represent only 128 characters. Another version is ASCII-8, also called extended ASCII, which uses 8 bits for each character and can represent 256 different characters.

For example, the letter A is represented by 01000001, B by 01000010 and so on. ASCII code is enough to represent all of the standard keyboard characters.
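The examples above can be reproduced with Python's built-in `ord`, `chr` and `format`. This is only an illustrative sketch, and the helper name `ascii_bits` is hypothetical.

```python
def ascii_bits(character: str, width: int = 7) -> str:
    """Return the ASCII code of a character as a binary string of the given width."""
    code = ord(character)
    if code > 127:
        raise ValueError("not a 7-bit ASCII character")
    return format(code, f"0{width}b")


print(ord("A"))                  # 65
print(ascii_bits("A"))           # 1000001  (7-bit form)
print(ascii_bits("A", width=8))  # 01000001 (8-bit form)
print(ascii_bits("B", width=8))  # 01000010
print(chr(0b1000001))            # A
print(2**7, 2**8)                # 128 256 (number of distinct codes)
```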

EBCDIC stands for Extended Binary Coded Decimal Interchange Code. This is similar to ASCII and is an 8-bit code used in computers manufactured by International Business Machines (IBM). It is capable of encoding 256 characters.

If ASCII-coded data is to be used in a computer that uses EBCDIC representation, it is necessary to transform ASCII code to EBCDIC code. Similarly, if EBCDIC coded data is to be used in an ASCII computer, EBCDIC code has to be transformed to ASCII.
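One way to sketch this transformation is with Python's standard codecs. The example assumes the `cp037` code page, which is one of several EBCDIC variants. Real systems may use a different variant, and the function names here are hypothetical.

```python
# Assumption: cp037 is used as the EBCDIC variant; other EBCDIC code pages exist.
EBCDIC_CODEC = "cp037"


def ascii_to_ebcdic(data: bytes) -> bytes:
    """Decode ASCII bytes to text, then encode that text as EBCDIC."""
    return data.decode("ascii").encode(EBCDIC_CODEC)


def ebcdic_to_ascii(data: bytes) -> bytes:
    """Decode EBCDIC bytes to text, then encode that text as ASCII."""
    return data.decode(EBCDIC_CODEC).encode("ascii")


ascii_bytes = b"A"
ebcdic_bytes = ascii_to_ebcdic(ascii_bytes)
print(ascii_bytes.hex())              # 41 (ASCII code 65)
print(ebcdic_bytes.hex())             # c1 (the same letter in EBCDIC)
print(ebcdic_to_ascii(ebcdic_bytes))  # b'A'
```

The same character has a different numeric code in each scheme, which is why data must be converted when it moves between the two kinds of system.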