Effect of Speaking Environment on Speech Production and Perception

Peter Howell

1) Division for Psychology and Language Sciences, Faculty of Life Sciences, University College, London

Environments affect speaking and listening performance. This contribution reviews some of the main ways in which all sounds are affected by the environment they propagate into. These influences are used to assess how environments affect speakers and listeners. The article concludes with a brief consideration of factors that designers may wish to take into account to address the effects.

1. Introduction

This work considers how environments affect speech behavior. The principal effect environments have on produced speech is that they modify its acoustic structure and, when a speaker hears this altered speech, it can influence the properties of the speech that is uttered. The changes to the speech sound and noise sources that are present in the environment can affect how listeners process the speech sounds. This contribution starts with a brief review of the way environments alter sounds. It then considers how psychologists have determined how the environment influences speech production and perception. Where speech production and/or perception are adversely affected, designers may wish to modify the environment to reduce the impact of these influences.

2. How environments affect sounds

In free field conditions there are no walls or objects to affect sound as there are in enclosed spaces. When a sound enters an enclosed space or a space with obstacles, it is affected in several ways. Three ways in which sounds are affected by environments are discussed: timing, frequency and intensity structure.

2.1 Timing

The temporal properties of sounds are altered in different ways which depend on the dimensions of the room, its contents and the material on the walls. Perhaps the most obvious effect is when an echo occurs. This is a single repetition of the sound source caused by the sound wave meeting an opposing surface which reflects it. Echoes occur when there is more than one repetition. Some buildings (such as mosques) have been specifically designed to enhance echoes.

The time at which echoes arrive after the direct sound depends on the room’s dimensions. The delay between the original sound and the first echo heard back at the source can be calculated as twice the distance between the sound source and the reflecting object (the sound travels to the object and back), divided by the speed of sound. For example, if a wall is 17 meters from a sound source and the speed of sound is 340 meters per second, the echo will be heard at the origin after a delay of 0.1 seconds (2 × 17/340 seconds). The strength of an echo depends on room contents and dimensions. Strength is specified in dB sound pressure level relative to the directly transmitted wave.
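The round-trip calculation in the worked example can be sketched in a few lines of Python. The function name and its default speed of sound are illustrative choices, not taken from any cited study.

```python
def echo_delay_s(distance_m: float, speed_of_sound_m_s: float = 340.0) -> float:
    """Delay of a single reflection heard back at the source.

    The sound travels to the reflecting surface and back, so the path
    length is twice the source-to-surface distance.
    """
    if distance_m < 0 or speed_of_sound_m_s <= 0:
        raise ValueError("distance must be >= 0 and speed of sound > 0")
    return 2.0 * distance_m / speed_of_sound_m_s


# The example from the text: a wall 17 m away, sound travelling at 340 m/s.
print(echo_delay_s(17.0))
```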

Psychologists often use a procedure where a speaker hears the sound of his or her voice after a delay (commonly called delayed auditory feedback, DAF, which is discussed further in section 3.1). It is often assumed that the delayed signal is the only sound the speaker hears. Although the direct voice may be heavily attenuated, it is unlikely that the speaker hears none of it (i.e. the sound at zero delay). Thus the speaker hears a mixture of sound with no delay and sound after one or more echoes.
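The mixture of an attenuated direct sound and a single delayed copy can be illustrated with a simple delay line. This is a minimal sketch only: the function name and the gain values are hypothetical, and real DAF equipment and real rooms are considerably more complex.

```python
def mix_direct_and_delayed(samples, delay_samples, direct_gain=0.3, delayed_gain=1.0):
    """Return direct_gain * x[n] + delayed_gain * x[n - delay_samples].

    direct_gain models the attenuated voice heard at zero delay and
    delayed_gain the delayed copy; both values are illustrative assumptions.
    """
    if delay_samples < 0:
        raise ValueError("delay_samples must be >= 0")
    out = []
    for n in range(len(samples) + delay_samples):
        direct = samples[n] if n < len(samples) else 0.0
        k = n - delay_samples
        delayed = samples[k] if 0 <= k < len(samples) else 0.0
        out.append(direct_gain * direct + delayed_gain * delayed)
    return out


# A single click followed by silence: the output contains the weak direct
# click at n = 0 and the full-strength delayed click two samples later.
click = [1.0, 0.0, 0.0, 0.0]
print(mix_direct_and_delayed(click, delay_samples=2))
```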

2.2 Frequency

Rooms are resonant structures, though the material in the room damps these resonances to different extents. Each room has its particular frequency response. Sounds are filtered in their passage through a room space (some frequencies pass easily into the room and others do not). For speakers using amplification, the equipment itself can alter the frequency content.

2.3 Intensity

Effects such as echoes, discussed earlier, and amplifiers affect the intensity of any perceived or produced sound. A sound traveling directly from its source to a receiver will be attenuated as distance increases, which will be affected by the transmission medium. In real environments, sound sources will enter environments where there may be other noises (including echoes from past sounds). Speech production is affected when there are sounds in the environment other than that which the speaker makes. These extraneous sounds disrupt the speaker’s intensity control. Section 3.3 describes how a speaker’s vocal intensity depends on whether they are speaking while hearing their own speech or other noises. The behavior of a reception device (including a listener) that needs to detect or identify a speech sound will be affected when there are extraneous sound sources (in psychoacoustics, the extra sounds are said to mask the signal; see section 4.1). Another example of how extraneous sounds affect listeners is the cocktail party phenomenon where a listener needs to distinguish one voice so as to be able to follow a conversation. Extraneous sounds can be other voices or non-speech noises.

3. How environments affect speech production

The effects environments have on speech production are considered before the effects on perception. This is because if the speaker is present in the environment, listening performance will be affected by how speech is changed by the environment as well as by the way the environment affects listening. The listener will receive the altered sound the speaker makes and the environment will affect processing by the listener too.

3.1 Timing

During the 1950s, the rapid growth in phone use raised interest in how hearing a delayed sound affected speech control (CCITT, 1989a, 1989b). Speaking along with a delayed version of the voice (DAF) is an on-going problem in telephony with the introduction of cellular phones and satellite technology. Many of the findings have relevance for speaking in rooms with echo. It was found that DAF caused drawling (usually on the medial vowels), led to a Lombard effect (increased voice level), made pitch monotone, gave rise to speech errors and made messages take longer to complete than messages produced in normal listening conditions (Fairbanks, 1955). It should be noted that, in addition to time alterations, telephones transmit only a limited range of frequencies, and the voice can be masked by noise on the equipment.

One question that arises is whether the delayed sound during DAF has to be speech to disrupt the speech of fluent speakers. Howell and Archer (1984) addressed this question by transforming speech into a noise that had the same temporal structure as speech, but none of the phonetic content. They then delayed the noise sound and compared performance in this condition with performance under standard DAF. The two conditions produced equivalent disruption over a range of delays. This suggests that the delayed signal does not need to be a speech sound to affect control in the same way as observed under standard DAF. It appears from these results that the delayed speech does not go through the speech comprehension system where its message content is determined and which is then used as feedback to the linguistic processes. The disruption could arise instead if asynchronous inputs affect operation of lower level mechanisms involved in motor control.

Not all speakers are adversely affected by DAF. For instance, fluent speakers vary in their susceptibility to DAF. Howell and Archer (1984) went on to show that susceptibility depended on loudness of the voice, which determined level of feedback (in their experiments feedback could be speech or non-speech noises). A second surprising finding was that the fluency of people who stutter improved when they were played DAF. Researchers who investigated the fluency-enhancing effects of DAF on people who stutter in the 1950s and 1960s include Nessel (1958), Soderberg (1960), Chase et al. (1961), Lotzmann (1961), Neelley (1961), Goldiamond (1965), Ham and Steer (1967) and Curlee and Perkins (1969).

A further important claim made at this time, and embraced by several eminent workers, was that DAF produces similar effects in fluent speakers to those that people who stutter ordinarily experience, in particular drawling and speech errors. This prompted Lee (1951) to refer to DAF as a form of ‘simulated’ stutter. In an extension of this point of view, Cherry and Sayers (1956) used DAF as a way of simulating stuttering in fluent speakers to establish the basis of the problem. They generated two different sources of sound that are heard whilst speaking normally (the sound transmitted over air and that transmitted through bone). They then examined separately which of these ‘feedback’ components led to increased stuttering rates in fluent speakers when each of them was delayed. The bone-conducted component seemed to be particularly effective in increasing ‘simulated’ stuttering, and they proposed that this source of feedback also led to the problem in speakers who stutter. They then designed a therapy that involved playing noise to speakers who stutter that was intended to mask out the problematic bone-conducted component of vocal ‘feedback’. They reported that fluency improved when the voice was masked in this way. Although there is some disagreement about whether speakers who stutter have problems processing bone-conducted sounds (Howell & Powell, 1984), the effects of masking sounds on the fluency of speakers who stutter have not been disputed. In summary, altering speech timing affects the behavior of fluent speakers adversely but can improve the speech of speakers who stutter.

3.2 Frequency

There are relatively few studies on the effects that altering the frequency content of speech has on speech control. This is unfortunate, as the findings of those that exist can be extrapolated to environments that affect the frequency content of sound. Early studies examined the effect of filtering speech (Garber & Moller, 1979). Elman (1981) examined the effects of shifting the speech spectrum (frequency shifted feedback, FSF) on voice pitch, and reported that speakers partially compensate for these shifts. That is, speakers shifted their voice pitch in the opposite direction to the shift made by the experimenter. Subsequent studies have shown that FSF also has little effect on voice level (Howell, 1990). The incomplete compensation for shifts in frequency of voice pitch in fluent speakers has also been confirmed (Burnett et al., 1997), and has been reported for upward shifts in speakers who stutter, although no compensation occurs for downward shifts in people who stutter (Natke et al., 2001).

Howell et al. (1987) created a frequency-shifted version of the speaker’s voice that was synchronous with the speaker’s voice, and assessed its effects on speakers who stutter. These authors used a speed-changing method (that produces a frequency shift in the same way that playing a tape recorder at different speeds does). The method they developed produces a virtually synchronous frequency shift. Other features to note about FSF are that the signal level in the shifted version varies with speech level (when speakers produce low intensity sounds, the FSF is also low in intensity, and vice versa). Also, no sound occurs when the speaker is silent (the latter is a feature that is shared with the Edinburgh masker). The two preceding factors limit the noise dose the speaker receives.
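The principle behind a speed-changing frequency shift (that playing a recording faster raises every frequency by the same factor) can be illustrated offline. The sketch below is not the near-synchronous method of Howell et al. (1987): it resamples a stored test tone with linear interpolation, which also shortens the signal, and all function names and parameter values are hypothetical.

```python
import math


def play_at_speed(samples, speed):
    """Read the input at `speed` times the normal rate (linear interpolation)."""
    if speed <= 0:
        raise ValueError("speed must be > 0")
    out = []
    pos = 0.0
    while pos < len(samples) - 1:
        i = int(pos)
        frac = pos - i
        out.append((1.0 - frac) * samples[i] + frac * samples[i + 1])
        pos += speed
    return out


def zero_crossing_frequency(samples, sample_rate):
    """Rough frequency estimate from the spacing of upward zero crossings."""
    crossings = [n for n in range(1, len(samples))
                 if samples[n - 1] < 0.0 <= samples[n]]
    if len(crossings) < 2:
        raise ValueError("need at least two upward zero crossings")
    periods = len(crossings) - 1
    return periods * sample_rate / (crossings[-1] - crossings[0])


sample_rate = 16000
# One second of a 200 Hz tone standing in for a voiced sound.
tone = [math.sin(2 * math.pi * 200 * n / sample_rate) for n in range(sample_rate)]
shifted = play_at_speed(tone, speed=1.5)

print(round(zero_crossing_frequency(tone, sample_rate)))     # original pitch
print(round(zero_crossing_frequency(shifted, sample_rate)))  # pitch after speed-up
```

A speed factor above 1 shifts frequencies upward and a factor below 1 shifts them downward, in the same way as changing the speed of a tape recorder.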

The effect of this (almost real-time) alteration was a marked improvement in fluency in people who stutter, even when speakers were instructed to speak at normal rate. The first study reported by Howell et al. (1987) showed that FSF resulted in more fluent speech than DAF or a portable device called the Edinburgh masker (Dewar et al., 1979). Later studies have argued that FSF does not produce speech that is superior to DAF speech at short delays (Kalinowski et al., 1993; Macleod et al., 1995). However, these studies have used fast Fourier transform (FFT) techniques to produce frequency shifts. FFT techniques produce significant delays that are somewhat variable (Howell & Sackin, 2002). Therefore, the studies that claim FSF has the same effect on fluency as DAF have compared FSF plus a short delay with short-delay DAF. Thus the delay they include under FSF may account for why these studies failed to find a difference between it and DAF, whereas Howell et al. (1987) did. Kalinowski’s group claims that the paucity of secondary effects on aspects of speech control other than fluency makes FSF acoustically ‘invisible’ to speakers who stutter (and they maintain that the same applies to short-duration DAF). They also claim that the minimal changes in speech control under these two forms of altered sound lead speakers to produce fluent, or near fluent, speech (Kalinowski & Dayalu, 2002).

A second important point about the Howell et al. (1987) study was that, as mentioned, the effects on fluency were observed even though speakers were told to speak at a normal rate. Therefore, to the extent to which they obeyed instructions, the effects of FSF seem to be independent of rate. This argues against Costello-Ingham’s (1993) view that altered feedback techniques (DAF in particular) work on people who stutter because they slow overall speech rate. Direct tests of whether fluency-enhancing effects occur when speech rate is varied were made by Kalinowski et al. (1996) for DAF, and by Hargrave et al. (1994) and Natke et al. (2001) for FSF. These studies reported that fluency was enhanced whether or not rate was slow (relative to normal speaking conditions). One proviso about the Kalinowski studies is that a global measure of speech rate was taken. It is possible for speakers to speed up global (mean) speech rate while, at the same time, reducing rate locally within an utterance. See Howell and Sackin (2000) for an empirical study that shows fluent speakers display local slowing in singing and local and global slowing under FSF. Until local measures are taken under FSF in people who stutter, it cannot be firmly concluded whether fluency changes are associated with rate change or not, since the speakers might have increased global rate but reduced local rate around the points where disfluencies would have occurred (Howell & Sackin, 2000).

In Howell et al.’s (1987) fourth experiment, the effects of presenting FSF at sound onset only (where speakers who stutter have most problems) were compared with those in continuous FSF speech. The effects on fluency did not differ significantly between the two conditions, suggesting that having FSF at sound onset only was as effective as having it on throughout the utterance. This shows that it may be possible to get as much enhancement in fluency when alteration is made to selected areas in an utterance as when alteration is made to the whole utterance.

Another factor of interest is that Kalinowski’s group has investigated how FSF operates in more natural environments such as over the telephone (Zimmerman et al., 1997), or when speakers have to speak in front of audiences (Armson et al., 1997). They reported that, in both these environments, there are marked improvements in fluency and, therefore, that these procedures may operate in natural environments.

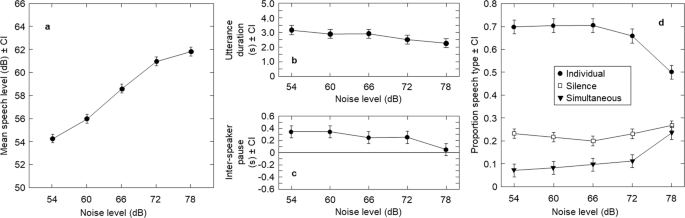

3.3 Intensity

Speaking is affected when the voice is amplified (Fletcher et al., 1918) or when noise is present (Lombard, 1911). Laboratory studies have shown that when voice level is amplified, speakers reduce voice level and when voice level is reduced, they increase it (called the Fletcher effect). Conversely, when noise level increases, speakers increase their voice level and when noise level reduces, speakers reduce their voice level (called the Lombard effect). It is possible that these compensations could be the result of a negative feedback mechanism for regulating voice level. If speakers need to hear their voice to control it but cannot do so, either because noise level is high or voice level is low, they compensate by increasing level. Speakers would compensate in the opposite way if their speech is too loud (low noise level or when the voice is amplified). Note, however, that explanations other than a feedback account are also possible. For instance, Lane and Tranel (1971) discuss the view that voice level changes are made so that the audience, rather than the speaker himself or herself, does not receive speech at too high or too low a level.
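The negative feedback account can be made concrete with a toy model in which the speaker adjusts voice level until the voice is heard at a fixed margin above the noise. This is only a sketch of the idea: the function name, the target margin, the starting level and the update rate are all hypothetical, and the model compensates fully, which is an idealization.

```python
def settle_voice_level(noise_db, feedback_gain_db, target_margin_db=10.0,
                       start_db=60.0, rate=0.5, steps=50):
    """Iterate a simple negative feedback loop for voice level (in dB).

    heard_margin_db is how far the speaker's own voice, after any
    amplification or attenuation (feedback_gain_db), sits above the noise.
    The speaker raises or lowers voice level to remove the error between
    this margin and the target. All values are illustrative assumptions.
    """
    voice_db = start_db
    for _ in range(steps):
        heard_margin_db = (voice_db + feedback_gain_db) - noise_db
        voice_db += rate * (target_margin_db - heard_margin_db)
    return voice_db


quiet = settle_voice_level(noise_db=50.0, feedback_gain_db=0.0)
noisy = settle_voice_level(noise_db=70.0, feedback_gain_db=0.0)       # Lombard direction
amplified = settle_voice_level(noise_db=50.0, feedback_gain_db=10.0)  # Fletcher direction

print(round(quiet, 1), round(noisy, 1), round(amplified, 1))
```

In this sketch, raising the noise level raises the settled voice level and amplifying the voice lowers it, which matches the directions of the Lombard and Fletcher effects. The audience-oriented account discussed by Lane and Tranel (1971) is not captured by a loop of this kind.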

Speakers who stutter change their voice level in the same direction as fluent speakers when noise is present and when their voice is amplified or attenuated (Howell, 1990). The effects of non-speech noises on the fluency of speakers who stutter have been examined. In one imaginative study, Sutton and Chase (1961) controlled whether noise was on or off using a voice-activated relay while subjects read aloud. They compared the fluency-enhancing effects of noise that was on continuously, noise that was presented only while the speaker was speaking and noise presented only during the silent periods between speech. They found that all these conditions were equally effective. It appears from this that the operative effect is not simply masking as there is no sound to mask when noise is presented during silent periods. However, Webster and Lubker (1968) pointed out that voice-activated relays take time to operate and so some noise would have been present at the onset of words. Therefore a masking effect cannot be ruled out. Portable masking devices such as the Edinburgh masker (Dewar et al., 1979) have been developed for treating stuttering.

3.4 Cognitive influences of speaking environment

Non-auditory influences can affect a speaker’s behavior too. The view that speakers can adapt their speech when they know something about the audience has been discussed in connection with Lane and Tranel’s interpretation of the Lombard effect. Another practically important example of non-auditory effects is the clear speech phenomenon. When producing clear speech, speakers can make a conscious effort about how to control their voice, overriding environmental influences. It has been shown that if speakers speak clearly, there are substantial intelligibility gains relative to conversational speech for hearing impaired individuals (Picheny et al., 1989). Clear speech differs from conversational speech in a variety of ways, including speaking rate, consonant power and the occurrence of phonological phenomena (Picheny et al., 1989). Speakers frequently make attempts to speak clearly in auditoria, although the influences of this have not been studied much outside the hearing impaired field. An exception is the work of Lindblom (1990), whose H and H theory embodies the idea that speakers place themselves on a continuum between clear and casual speech based on the perceived importance of getting the message across.

4. How environments affect speech perception

Topics were arranged in parallel ways in the two previous sections. Perceptual studies have been conducted under perceptual themes rather than parameters that have been manipulated to simulate the effects which occur in real environments. It should be borne in mind that in the case where the speaker, as well as listener, is in the same space, the sound the perceptual system is dealing with will also have been changed by the environment. In this section, a selection of the perceptual factors that affect listeners is outlined.

4.1 Masking

When there are noises (speech or non-speech) in the environment these sounds act as maskers. The effects of masking on listeners’ performance have been studied extensively and would take many volumes to describe fully. Here the important effects of masking on listeners’ performance are merely noted.

4.2 Clear speech

The studies on clear speech described in the preceding section were undertaken with the intention of establishing what benefit these would have to listeners (in the MIT group’s work, specifically what effect they would have on hearing impaired listeners). Intelligibility tests suggest around 10% more test words can be identified when speech is spoken clearly.

4.3 Location of an object in space

Listeners can localize the origin of a sound in a room. Researchers have mainly examined cues to location in controlled environments (not room specific), although some important influences that operate in room environments have been studied. A brief description of binaural and monaural cues is given and then some effects that operate in rooms are described.

Sound localization is a listener’s ability to identify the location or origin of a detected sound usually in a three-dimensional space (although localization has also been studied in virtual environments). Binaural cues (using both ears) are important in localization ability. The time of arrival at the two ears is different for a sound which is not directly in front of the listener because the length of the path to the near ear is less than that to the far ear. This time delay is the primary binaural cue to sound localization and is called the interaural time difference (ITD).
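The size of the ITD can be estimated with Woodworth's spherical-head approximation, ITD = (a/c)(θ + sin θ), where a is the head radius, c the speed of sound and θ the azimuth. This formula is not taken from the studies cited here; it assumes a rigid spherical head and a distant source, and the default head radius below is an illustrative value.

```python
import math


def interaural_time_difference_s(azimuth_deg, head_radius_m=0.0875,
                                 speed_of_sound_m_s=340.0):
    """Woodworth's spherical-head estimate of the interaural time difference.

    azimuth_deg is measured from straight ahead (0) to directly opposite
    one ear (90). The head radius default is an assumed typical value.
    """
    if not -90.0 <= azimuth_deg <= 90.0:
        raise ValueError("azimuth must lie between -90 and 90 degrees")
    theta = math.radians(azimuth_deg)
    return head_radius_m / speed_of_sound_m_s * (theta + math.sin(theta))


for azimuth in (0, 30, 60, 90):
    itd_us = interaural_time_difference_s(azimuth) * 1e6
    print(azimuth, round(itd_us), "microseconds")
```

A source straight ahead gives no time difference, and the difference grows to roughly two thirds of a millisecond for a source directly opposite one ear under these assumptions.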

A secondary binaural cue is the reduction in loudness when the sound reaches the far ear. This is called the interaural intensity difference (IID). IID is frequency dependent. Low frequency sounds bend round the head and reach the far ear with little loss of level, so IID provides little information at these frequencies. High frequencies are blocked by the head, which casts an acoustic shadow over the far ear, so IID cues operate at these frequencies. Note that these cues will only aid in localizing the sound source’s azimuth (the angle between the source and the sagittal plane), not its elevation (the angle between the source and the horizontal plane through both ears).

Monaural localization depends primarily on the filtering effects of external structures like the head, shoulders, torso, and outer ear or pinna. The sound frequencies are filtered depending on the angle from which they strike the various external structures. The main such effect arises from the pinna notch, which arises when the pinna attenuates frequencies in a narrow frequency band. The band of frequencies in the notch depends on the angle from which the sound strikes the outer ear and provides information about the direction of the source.

It has already been mentioned that sound intensity decays with increasing distance from the source. Thus intensity provides a cue to distance but, generally speaking, this is not a reliable cue, because it is not known how loud the sound source is. However, in the case of a familiar sound such as speech, there is an implicit knowledge of how loud the sound source should be, which enables a rough distance judgment to be made.

Echoes provide reasonable cues to the distance of a sound source, in particular because the strength of echoes does not depend on the distance of the source, while the strength of the sound that arrives directly from the sound source becomes weaker with distance. As a result, the ratio of direct-to-echo strength alters the quality of the sound. In this way consistent, although not very accurate, distance judgments are possible.
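The way the direct-to-echo ratio changes with distance can be sketched numerically. The sketch assumes the free-field inverse-square law for the direct sound (a fall of about 6 dB per doubling of distance) and, following the text, an echo level that does not vary with source distance. The function names and the two reference levels are hypothetical.

```python
import math


def direct_level_db(distance_m, level_at_1m_db=60.0):
    """Direct sound level under the inverse-square law (free-field assumption)."""
    if distance_m <= 0:
        raise ValueError("distance must be > 0")
    return level_at_1m_db - 20.0 * math.log10(distance_m)


def direct_to_echo_ratio_db(distance_m, level_at_1m_db=60.0, echo_level_db=50.0):
    """Direct level minus a distance-independent echo level (assumed constant)."""
    return direct_level_db(distance_m, level_at_1m_db) - echo_level_db


for distance in (1.0, 2.0, 4.0, 8.0):
    print(distance, "m:", round(direct_to_echo_ratio_db(distance), 1), "dB")
```

The ratio falls steadily as the source moves away, which is the property that makes it usable as a distance cue even when the loudness of the source is unknown.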

The final topic discussed is the precedence effect (Wallach et al., 1949). This states that only the first of multiple identical sounds is used to determine the sound’s location. Echoes, which otherwise could cause confusion about locale, are effectively ignored by the listener’s perceptual system.

4.4 Auditory stream segregation

A large amount of work has been done on auditory stream segregation since the publication of Bregman’s (1990) book. Obviously, one cannot hope to do justice to this in a short paper like this. The main difference between this approach and classic psychoacoustics is in the emphasis placed on top-down cognitive influences. Listeners use a lot of stored information on what they know about the structure of sounds to interpret incoming sounds.

One example is the harmonic sieve model which collects together those frequency components that belong to a particular sound source (Duifhuis et al., 1982). Voiced speech has a harmonic structure. The basic idea behind a harmonic sieve is that if the auditory system performs a spectral analysis and only those frequencies near to harmonics are taken, only the components from a single speaker would be obtained and other sounds would be sieved out. In this case, listeners make use of information about harmonic structure to segregate sounds.
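The sieving step can be sketched as follows. This is a simplified illustration rather than the model of Duifhuis et al. (1982): the fundamental frequency is supplied rather than estimated, and the function name and the tolerance value are hypothetical.

```python
def harmonic_sieve(component_hz, f0_hz, tolerance=0.04):
    """Keep components lying close to an integer multiple of f0_hz.

    A component passes if it falls within `tolerance` (as a proportion of
    the harmonic frequency) of its nearest harmonic. The tolerance value
    is an illustrative assumption.
    """
    if f0_hz <= 0:
        raise ValueError("f0_hz must be > 0")
    kept = []
    for f in component_hz:
        harmonic = round(f / f0_hz)
        if harmonic >= 1 and abs(f - harmonic * f0_hz) <= tolerance * harmonic * f0_hz:
            kept.append(f)
    return kept


# Components from two voices, one with a 100 Hz and one with a 125 Hz fundamental.
mixture = [100.0, 125.0, 200.0, 250.0, 300.0, 375.0, 400.0]

print(harmonic_sieve(mixture, f0_hz=100.0))
print(harmonic_sieve(mixture, f0_hz=125.0))
```

Each sieve passes only the components of the voice whose fundamental it is set to, so the two sources are separated. Harmonics shared by both voices (500 Hz in this example, had it been present) would pass through both sieves.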

The auditory stream segregation approach makes extensive use of Gestalt notions. Two related examples from Darwin (1984) and Nakajima et al. (2000) that involve the Gestalt notion of capture are discussed briefly. Darwin’s studies showed that a tone that starts or stops at a different time from a steady state vowel was less likely to be heard as part of that vowel than if it was simultaneous with it. In Nakajima et al.’s study, the stimuli consisted of two glides that crossed each other at a point in time (one of which started before and finished after the shorter glide). The shorter glide was continuous, but the longer one was interrupted at the crossover point. However listeners perceived this in the opposite way (the longer one was perceived as continuous and the shorter one as interrupted). This powerful illusion again points to the importance of the Gestalt grouping notions.

4.5 Cognitive influences on listeners

As with speech production, there are also cognitive influences in the environment that affect listeners. The ideas stemming from Bregman’s work include cognitive influences. The linguistic context is one factor that affects speech dysfluencies produced by fluent speakers (Shriberg, 2001). Judgments about sounds are also affected by what the listener sees. One example of this is the well-known McGurk effect (McGurk & MacDonald, 1976). This is an illusion in which a listener sees a video of a speaker saying the syllable /ga/ whilst hearing the syllable /ba/. The initial plosives in these sounds have different places of articulation (velar and bilabial respectively). Listeners do not report either of these sounds, but report hearing /da/ which has a place of articulation intermediate between /ga/ and /ba/. Another example is where a visual object that moves in synchrony with the sound source (e.g. a ventriloquist’s dummy) biases a sound’s judged location. The ventriloquism effect has been studied by asking for judgments about sound source location when dummy loudspeakers are visible (Radeau & Bertelson, 1976).

5. Conclusion including considerations about room design

This short review does not claim to be comprehensive but, hopefully, raises some considerations about how speakers will be affected by environments with different acoustic properties, and some of the perceptual mechanisms that listeners have available to offset the deleterious effects of some of these influences. Alterations to delay and intensity disrupt fluent speakers, but frequency alterations do so less. Speakers who stutter show similar responses to fluent speakers in these environments although the manipulations alleviate their fluency problem (FSF, DAF and masking of the voice). This shows that the way in which environments affect sound is not necessarily bad for all types of speaker.

Studies are needed which examine together the changes a speaker makes in an environment and how a listener in that same environment processes the altered speech. Speaking clearly can potentially offset poor acoustic characteristics in environments, although this needs to be checked. The precedence effect suggests that the disruptive effects of echoes can be reduced by listeners’ perceptual mechanisms. Listeners may use harmonic sieves to help them track a single voice in noisy environments. Besides these mechanisms that offset problems, there are cases where listeners can be misled (McGurk and ventriloquism effects).

Acknowledgements

This work was supported by grant 072639 from the Wellcome Trust to Peter Howell.

- Armson J, Foote S, Witt C, Kalinowski J, Stuart A. Effect of frequency altered feedback and audience size on stuttering. Europ. J. Disord. Comm. 1997;32:359–366.

- Bregman A. Auditory scene analysis. Cambridge MA: MIT Press; 1990.

- Burnett TA, Senner JE, Larson CR. Voice F0 responses to pitch-shifted auditory feedback: A preliminary study. J. Voice. 1997;11:202–211.

- CCITT (1989a) Interactions between sidetone and echo. CCITT - International Telegraph and Telephone Consultative Committee, Contribution, com XII, no BB.

- CCITT (1989b) Experiments on short-term delay and echo in conversation. CCITT - International Telegraph and Telephone Consultative Committee, Contribution, com XII, no AA.

- Chase RA, Sutton S, Rapin I. Sensory feedback influences on motor performance. J. Aud. Res. 1961;1:212–223.

- Cherry C, Sayers B. Experiments upon the total inhibition of stammering by external control and some clinical results. J. Psychosomat. Res. 1956;1:233–246.

- Costello-Ingham JC. Current status of stuttering and behavior modification - 1. Recent trends in the application of behavior modification in children and adults. J. Fluency Disord. 1993;18:27–44.

- Curlee RF, Perkins WH. Conversational rate control for stuttering. J. Speech Hearing Disord. 1969;34:245–250.

- Darwin CJ. Perceiving vowels in the presence of another sound: Constraints on formant perception. J. Acoust. Soc. Amer. 1984;70:1636–1651.

- Dewar A, Dewar AW, Austin WTS, Brash HM. The long-term use of an automatically triggered auditory feedback masking device in the treatment of stammering. Brit. J. Disord. Comm. 1979;14:219–229.

- Duifhuis H, Willems LF, Sluyter RJ. Measurement of pitch in speech: An implementation of Goldstein’s theory of pitch perception. J. Acoust. Soc. Amer. 1982;71:1568–1580.

- Elman JL. Effects of frequency-shifted feedback on the pitch of vocal productions. J. Acoust. Soc. Amer. 1981;70:45–50.

- Fairbanks G. Selected vocal effects of delayed auditory feedback. J. Speech Hear. Disord. 1955;20:333–345.

- Fletcher H, Raff GM, Parmley F. Study of the effects of different sidetones in the telephone set. Western Electric Company; 1918. Report no. 19412, Case no. 120622.

- Garber S, Moller K. The effects of feedback filtering on nasalization in normal and hypernasal speakers. J. Speech Hear. Res. 1979;22:321–333.

- Goldiamond I. Stuttering and fluency as manipulatable operant response classes. In: Krasner L, Ullman L, editors. Research in behavior modification. New York: Holt, Rinehart and Winston; 1965. pp. 106–156.

- Ham R, Steer MD. Certain effects of alterations in auditory feedback. Folia Phoniat. 1967; 19 :53–62. [ PubMed ] [ Google Scholar ]

- Hargrave S, Kalinowski J, Stuart A, Armson J, Jones K. Effect of frequency-altered feedback on stuttering frequency at normal and fast speech rates. J. Speech Hear. Res. 1994; 37 :1313–1319. [ PubMed ] [ Google Scholar ]

- Howell P. Changes in voice level caused by several forms of altered feedback in normal speakers and stutterers. Lang. Speech. 1990; 33 :325–338. [ PubMed ] [ Google Scholar ]

- Howell P, Archer A. Susceptibility to the effects of delayed auditory feedback. Percep. Psychophys. 1984; 36 :296–302. [ PubMed ] [ Google Scholar ]

- Howell P, El-Yaniv N, Powell DJ. Factors affecting fluency in stutterers when speaking under altered auditory feedback. In: Peters H, Hulstijn W, editors. Speech Motor Dynamics in Stuttering. New York: Springer Press; 1987. pp. 361–369. [ Google Scholar ]

- Howell P, Powell DJ. Hearing your voice through bone and air: Implications for explanations of stuttering behaviour from studies of normal speakers. J. Fluency Disord. 1984; 9 :247–264. [ Google Scholar ]

- Howell P, Sackin S. Speech rate manipulation and its effects on fluency reversal in children who stutter. J. Developmental Phys. Disab. 2000; 12 :291–315. [ PMC free article ] [ PubMed ] [ Google Scholar ]

- Howell P, Sackin S. Timing interference to speech in altered listening conditions. J. Acoust. Soc. Amer. 2002; 111 :2842–2852. [ PMC free article ] [ PubMed ] [ Google Scholar ]

- Kalinowski J, Armson J, Roland-Mieszkowski M, Stuart A, Gracco V. Effects of alterations in auditory feedback and speech rate on stuttering frequency. Lang. Speech. 1993; 36 :1–16. [ PubMed ] [ Google Scholar ]

- Kalinowski J, Dayalu V. A common element in the immediate inducement of effortless, natural-sounding, fluent speech in stutterers: “The Second Speech Signal” Med. Hypoth. 2002; 58 :61–66. [ PubMed ] [ Google Scholar ]

- Kalinowski J, Stuart A, Sark S, Armson J. Stuttering amelioration at various auditory feedback delays and speech rates. Eu. J. Disord. Comm. 1996; 31 :259–269. [ PubMed ] [ Google Scholar ]

- Lane HL, Tranel B. The Lombard sign and the role of hearing in speech. J. Speech Hear. Res. 1971; 14 :677–709. [ Google Scholar ]

- Lee BS. Artificial stutter. J. Speech Hearing Disord. 1951; 15 :53–55. [ PubMed ] [ Google Scholar ]

- Lindblom BB. Explaining phonetic variation: A sketch of the H and H theory. In: Hardcastle WJ, Marchal A, editors. Speech production and speech modeling. Dordrecht: Kluwer; 1990. pp. 403–439. [ Google Scholar ]

- Lombard E. Le signe de l’elevation de la voix. Annales des Maladies de l’Oreille, du Larynx, du Nez et du Pharynx. 1911; 37 :101–119. [ Google Scholar ]

- Lotzmann G. Zur Anwendung variierter verzogerungszeiten bei balbuties. Folia Phoniat. Logoped. 1961; 13 :276–312. [ Google Scholar ]

- Macleod J, Kalinowski J, Stuart A, Armson J. Effect of single and combined altered auditory feedback on stuttering frequency at two speech rates. J. Comm. Disord. 1995; 28 :217–228. [ PubMed ] [ Google Scholar ]

- McGurk H, MacDonald J. Hearing lips and seeing voices. Nature. 1976; 264 :746–748. [ PubMed ] [ Google Scholar ]

- Nakajima Y, Sasaki T, Kanafuka K, Miyamoto A, Remijn G, ten Hoopen G. Illusory recouplings of onsets and terminations of glide tone components. Percept. Psychophys. 2000; 62 :1413–1425. [ PubMed ] [ Google Scholar ]

- Natke U, Grosser J, Kalveram KT. Fluency, fundamental frequency, and speech rate under frequency shifted auditory feedback in stuttering and nonstuttering persons. J. Fluency Disord. 2001; 26 :227–241. [ Google Scholar ]

- Neelley JN. A study of the speech behaviors of stutterers and nonstutterers under normal and delayed auditory feedback. J. Speech Hearing Disord. Monog. 1961; 7 :63–82. [ PubMed ] [ Google Scholar ]

- Nessel E. Die verzogerte Sprachruckkopplung (Lee Effect) bei Stotteren. Folia Phoniat. 1958; 10 :199–204. [ PubMed ] [ Google Scholar ]

- Picheny MA, Durlach NI, Braida LD. Speaking clearly for the hard of hearing II: Acoustic characteristics of clear and conversational speech. J. Speech Hearing Res. 1989; 29 :434–446. [ PubMed ] [ Google Scholar ]

- Radeau M, Bertelson P. The effects of a textured visual field on modality dominance in a ventriloquism situation. Percept Psychophy. 1976; 20 :227–235. [ Google Scholar ]

- Shriberg E. To ‘errrr’ is human: Ecology and acoustics of speech disfluencies. J. Int. Phonetic Assoc. 2001; 31 :153–169. [ Google Scholar ]

- Soderberg GA. A study of the effects of delayed side-tone on four aspects of stutterers’ speech during oral reading and spontaneous speech. Speech Monog. 1960; 27 :252–253. [ Google Scholar ]

- Sutton S, Chase RA. White noise and stuttering. J. Speech Hear. Res. 1961; 4 :72. [ Google Scholar ]

- Wallach H, Newman EB, Rosenzweig MR. The precedence effect in binaural unmasking. Amer. J. Psych. 1949; 62 :315–336. [ PubMed ] [ Google Scholar ]

- Webster RL, Lubker B. Masking of auditory feedback in stutterers’ speech. J. Speech Hear. Res. 1968; 11 :221–222. [ PubMed ] [ Google Scholar ]

- Zimmerman S, Kalinowski J, Stuart A, Rastatter MP. Effect of altered auditory feedback on people who stutter during scripted telephone conversations. J. Speech, Lang. Hear. Res. 1997; 40 :1130–1134. [ PubMed ] [ Google Scholar ]

Gaze and speech behavior in parent–child interactions: The role of conflict and cooperation

- Open access

- Published: 02 December 2021

- Volume 42 , pages 12129–12150, ( 2023 )


- Gijs A. Holleman ORCID: orcid.org/0000-0002-6443-6212 1 ,

- Ignace T. C. Hooge 1 ,

- Jorg Huijding 2 ,

- Maja Deković 2 ,

- Chantal Kemner 1 &

- Roy S. Hessels 1


A primary mode of human social behavior is face-to-face interaction. In this study, we investigated the characteristics of gaze and its relation to speech behavior during video-mediated face-to-face interactions between parents and their preadolescent children. 81 parent–child dyads engaged in conversations about cooperative and conflictive family topics. We used a dual-eye tracking setup that is capable of concurrently recording eye movements, frontal video, and audio from two conversational partners. Our results show that children spoke more in the cooperation-scenario whereas parents spoke more in the conflict-scenario. Parents gazed slightly more at the eyes of their children in the conflict-scenario compared to the cooperation-scenario. Both parents and children looked more at the other's mouth region while listening compared to while speaking. Results are discussed in terms of the role that parents and children take during cooperative and conflictive interactions and how gaze behavior may support and coordinate such interactions.


Introduction

A primary mode of human social behavior is face-to-face interaction. This is the “central ecological niche” where languages are learned and most language use occurs (Holler & Levinson, 2019 , p. 639). Face-to-face interactions are characterized by a variety of verbal and nonverbal behaviors, such as speech, gazing, facial displays, and gestures. Since the 1960s, researchers have extensively investigated the coordination and regulation of these behaviors (Duncan & Fiske, 2015 ; Kelly et al., 2010 ; Kendon, 1967 ). A paramount discovery is that gaze and speech behavior are closely coupled during face-to-face interactions. Although some patterns of speech behavior during face-to-face interactions, such as in turn-taking, are common across different languages and cultures (Stivers et al., 2009 ), the role of gaze behavior in interaction seems to be culturally- as well as contextually-dependent (Foddy, 1978 ; Haensel et al., 2017 , 2020 ; Hessels, 2020 ; Kleinke, 1986 ; Patterson, 1982 ; Rossano et al., 2009 ; Schofield et al., 2008 ). Observational studies on gaze behavior during interaction have been conducted in many different interpersonal contexts, such as interactions between adults, parents and infants, parents and children, as well as clinical interviews and conversations with typically and atypically developing children (Argyle & Cook, 1976 ; Arnold et al., 2000 ; Ashear & Snortum, 1971 ; Berger & Cunningham, 1981 ; Cipolli et al., 1989 ; Kendon, 1967 ; Levine & Sutton-Smith, 1973 ; Mirenda et al., 1983 ). More recently, new eye-tracking techniques have been developed to measure gaze behavior of individuals during face-to-face interactions with higher spatial and temporal resolution (Hessels et al., 2019 ; Ho et al., 2015 ; Rogers et al., 2018 ). However, these techniques have not been used to study the relation between speech and gaze in parent–child conversations.

Parent–child interactions provide a rich social context to investigate various aspects of social interaction, such as patterns of verbal and nonverbal behavior during face-to-face communication. Parent–child interactions are crucial for children’s social, emotional, and cognitive development (Branje, 2018; Carpendale & Lewis, 2004; Gauvain, 2001). Also, the ways in which parents and children interact change significantly from childhood to adolescence. In infancy and childhood, parent–child interactions play an important role in children’s socialization, which involves the acquisition of language and social skills, as well as the internalization of social norms and values (Dunn & Slomkowski, 1992; Gauvain, 2001). In adolescence, parent–child interactions are often centered around relational changes in the hierarchical nature of the parent–child relationship, which typically consist of frequent conflicts about parental authority, child autonomy, responsibilities, and appropriate behavior (Laursen & Collins, 2009; Smetana, 2011). According to Branje (2018, p. 171), “parent-adolescent conflicts are adaptive for relational development when parents and adolescents can switch flexibly between a range of positive and negative emotions.” As children move through adolescence, they become more independent from their parents and start to challenge parents’ authority and decisions. In turn, parents need to react to these changes and renegotiate their role as a parent. How parents adapt to these changes (e.g. permissive, supporting, or authoritarian parenting styles) may have a significant impact on the social and emotional well-being of the child (Smokowski et al., 2015; Tucker et al., 2003). Children in this period become progressively aware of the perspectives and opinions of other people in their social environment (e.g. peers, classmates, teachers) and relationships with peers become more important to their social identity. In turn, parents’ authority and control over the decisions and actions of the child change as the child moves from childhood to adolescence (Steinberg, 2001).

In this study, we investigated gaze behavior and its relation to speech in the context of parent–child interactions. We focus on the role of conflict and cooperation between parents and their preadolescent children (age range: 8–11 years) and how these interpersonal dynamics may be reflected in patterns of gaze and speech behavior. We chose this period because it marks the beginning of the transition from middle childhood to early adolescence. In this period, parents still hold sway over their children’s decisions and actions; however, the relational changes between children and parents start to become increasingly prominent (e.g. striving for autonomy, disengagement from parental control), which is highly relevant to the study of conflict and cooperation in parent–child relationships (Branje, 2018; De Goede et al., 2009; Dunn & Slomkowski, 1992; Steinberg, 2001). Specifically, we are interested in patterns of gaze and speech behavior as a function of cooperative and conflicting conversation topics, to which parent–child interactions are ideally suited. We focus primarily on gaze behavior because of its importance for perception – does one need to look at another person’s face in order to perceive certain aspects of it? – and its relation to speech in face-to-face interactions (Hessels, 2020; Holler & Levinson, 2019; Kendon, 1967). Furthermore, the role of gaze in face-to-face interactions has previously been linked with various interpersonal dynamics, such as intimacy and affiliation, but also with social control, dominance, and authority (for a review, see Kleinke, 1986), which is relevant to the social context of the parent–child relationship. Although no eye-tracking studies to our knowledge have investigated the role of gaze behavior and its relation to speech in parent–child conversations, several studies have addressed the role of gaze behavior in face and speech perception and functions of gaze during conversational exchanges. Because these lines of research are directly relevant to our current study, we will briefly review important findings from this literature.

Where Do People Look at Each Other’s Faces?

Faces carry information that is crucial for social interaction. By looking at other people’s faces one may explore and detect certain aspects of those faces such as facial identity, emotional expression, gaze direction, and cognitive state (Hessels, 2020 ; Jack & Schyns, 2017 ). A well-established finding, ever since the classic eye-tracking studies by Buswell ( 1935 ) and Yarbus ( 1967 ), is that humans have a bias for looking at human faces and especially the eyes (see e.g. Birmingham et al., 2009 ; Hessels, 2020 ; Itier et al., 2007 ). This bias already seems to be present in early infancy (Farroni et al., 2002 ; Frank et al., 2009 ; Gliga et al., 2009 ). In a recent review, Hessels ( 2020 ) describes that where humans look at faces differs between, for example, when the face is moving, talking, expressing emotion, or when particular tasks or viewing conditions are imposed by researchers (e.g. face or emotion recognition, speech perception, restricted viewing). Moreover, recent eye-tracking research has shown that individuals exhibit large but stable differences in gaze behavior to faces (Arizpe et al., 2017 ; Kanan et al., 2015 ; Mehoudar et al., 2014 ; Peterson & Eckstein, 2013 ; Peterson et al., 2016 ). That is, some people tend to fixate mainly on the eye or brow region while others tend to fixate the nose or mouth area. Thus, what region of the face is looked at by an observer will likely depend on the conditions of the experimental context and on particular characteristics of the individual observer.

Previous eye-tracking research on gaze behavior to faces has mostly been conducted using static images or videos of faces presented to participants on a computer screen. However, some researchers have questioned whether gaze behavior under such conditions adequately reflects how people look at others in social situations, e.g. when there is a potential for interaction (Laidlaw et al., 2011 ; Risko et al., 2012 , 2016 ). For example, Laidlaw et al. ( 2011 ) showed that when participants were seated in a waiting room, they looked less at a confederate who was physically present compared to when that person was displayed on a video monitor. While this discovery has led researchers to question the presumed ‘automaticity’ of humans to look at faces and eyes, this situation may primarily pertain to potential interactions . That is, situations where social interaction is possible but can be avoided, for example, in some public spaces and on the street (see also Foulsham et al., 2011 ; Hessels, et al., 2020a , 2020b ; Rubo et al., 2020 ). Other studies have shown that, once engaged in actual interaction , such as in conversation, people tend to look at other people’s faces and its features (Freeth et al., 2013 ; Hessels et al., 2019 ; Rogers et al., 2018 ). One may thus expect that parents and children will also primarily look at each other’s faces during conversational interactions, but where on the face they will mostly look likely differs between individuals and may be closely related to what the face is doing (e.g. speaking, moving, expressing emotion) and to the social context of the interaction.

Where Do People Look at Each Other’s Faces During Conversations?

In (early) observational work (e.g., Argyle & Cook, 1976; Beattie & Bogle, 1982; Foddy, 1978; Kendon, 1967), researchers have often studied gaze behavior in face-to-face conversations by manually coding interactants’ gaze behavior from video recordings. These observational studies (i.e. not using an eye tracker) of two-person conversations have shown that speakers tend to gaze at and away from listeners about equally, whereas listeners gaze longer at speakers with only occasional glances away from the speaker in between (Duncan & Fiske, 2015; Kendon, 1967; Rossano et al., 2009). Yet, the observational techniques used in these studies have limited reliability and validity to distinguish gaze direction at different regions of the face (Beattie & Bogle, 1982), which is of crucial importance for research on face-scanning behavior (see also Hessels, 2020, p. 869). Conversely, eye-tracking studies on gaze behavior to faces have used videos of talking faces, instead of actual interactions (e.g. Foulsham & Sanderson, 2013; Vatikiotis-Bateson et al., 1998; Võ et al., 2012). Most eye-tracking studies have therefore only been concerned with where people look at faces while listening to another person speak. Võ et al. (2012), for example, presented observers with close-up video clips of people being interviewed. They found that overall, participants gazed at the eyes, nose, and mouth equally often. However, more fixations to the mouth and fewer to the eyes occurred when the face was talking than when the face was not talking. This finding converges with the well-established finding that visual information from the human face may influence or enhance how speech is perceived (Sumby & Pollack, 1954). A well-known example is the McGurk effect (McGurk & MacDonald, 1976), where one’s perception of auditory speech syllables can be modulated by the mouth and lip movements from a talking face.

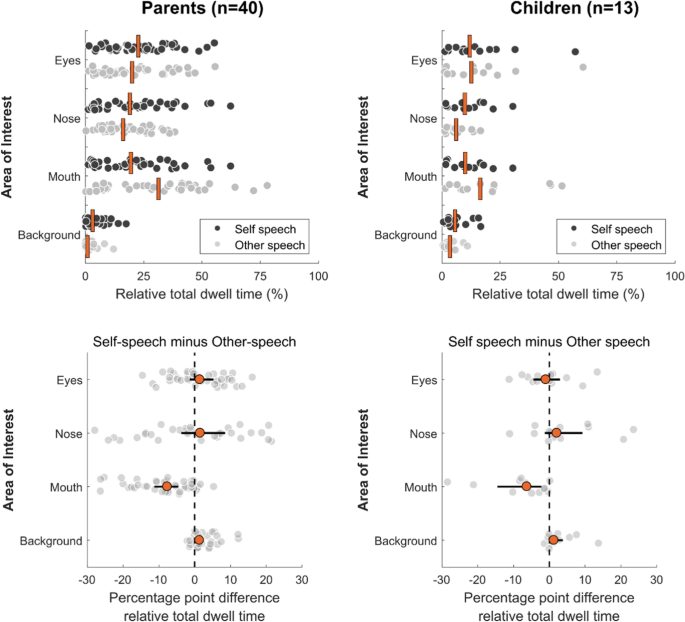

Only recently have researchers begun to use eye-tracking technology to measure where people look at each other’s faces when engaged in interactive conversational exchanges (Hessels et al., 2019; Ho et al., 2015; Rogers et al., 2018). Rogers et al. (2018), for example, used wearable eye trackers to measure where two people looked at each other while engaged in short “getting acquainted” conversations. They found that participants gazed away from the face of their partner for about 10% of the total conversation duration when listening, and about 29% when speaking (cf. Kendon, 1967). When participants gazed at their partner’s face, they looked primarily at the eyes and mouth region. Specifically, Rogers et al. (2018) reported that, on average, participants looked slightly more at the mouth area while listening compared to when they were speaking; a difference of approximately 5 percentage points of the time that they were looking at the other person’s face. In a different eye-tracking study, Hessels et al. (2019) investigated gaze behavior of participants engaged in a face-to-face interaction with a confederate. They observed that when participants listened to a confederate’s story, their gaze was directed at the facial features (e.g. eyes, nose, and mouth regions) for a longer total duration, as well as more often per second, compared to when speaking themselves. However, they did not find that participants looked proportionally longer at the mouth while listening compared to speaking, as in Rogers et al. (2018). One reason for this difference could be that participants in the Hessels et al. (2019) study did not need to exchange speaking turns as they were specifically tasked to wait for the confederate to end his story. The small differences in these two studies may then be explained if turn-transitions are associated with looking at the mouth.

In sum, it has been well established that gaze to faces during conversations is dependent on speaker-state: who is speaking or who is being addressed. Based on eye-tracking studies with videos of talking faces, it has often been suggested that gaze will be directed more at the mouth while listening to someone speak, as looking at the mouth area may be beneficial (but not necessary) for speech perception (see e.g. Buchan et al., 2007 ; Vatikiotis-Bateson et al., 1998 ; Võ et al., 2012 ). Recent dual eye-tracking studies on the role of gaze behavior in two-person conversations (Hessels et al., 2019 ; Rogers et al., 2018 ) have found no, or only small, differences in gaze to specific facial features (e.g. eyes, mouth) during episodes of speaking and listening. We expect to observe a similar pattern for parents and children as well.

Present study

In this study, we investigated speech and gaze behavior during conversational interactions between a parent and their child. Parent–child dyads engaged in two conversations about potential disagreements (conflict) and agreements (cooperation) on common family topics, given their importance and frequent occurrence within the social context of the parent–child relationship (Branje, 2018; Dixon et al., 2008; Laursen & Collins, 2004; Steinberg, 2001). We investigated (1) the similarities and differences between parents and children’s speech and gaze behavior during face-to-face interaction, (2) whether patterns of speech and gaze behavior in parent–child conversations are related to the nature of the conversation (conflictive versus cooperative topics), and (3) whether gaze behavior to faces is related to whether someone is speaking or listening. To engage parents and children in conflictive and cooperative conversations, we used two age-appropriate semi-structured conversation-scenarios. This method, which is considered a ‘gold standard’ in the field, has extensively been used by researchers to assess various aspects of the parent–child relationship, e.g. attachment, interpersonal affect, relational quality, parental style, and child compliance (Aspland & Gardner, 2003; Ehrlich et al., 2016; Scott et al., 2011). To investigate the relation between speech and gaze in parent–child conversations, we needed a setup capable of concurrently recording eye movements and audio from two conversational partners with enough spatial accuracy to distinguish gaze to regions of the face. To this end, we used a video-based dual eye-tracking setup by Hessels et al. (2017) that fulfills these criteria. Based on previous literature, we expected that parents and children would on average look predominantly at each other's faces, but that participants would exhibit substantial individual differences in what region of the face they looked at most (eyes, nose, mouth). Moreover, we expected that gaze behavior would be related to whether subjects were speaking or listening. We expected that, when listening, gaze would be directed more at the mouth region, given its potential benefits for speech perception and turn-taking. Regarding the conflict and cooperative scenarios, we had no prior expectations.

Participants

Eighty-one parent–child dyads (total n = 162) participated in this study. All participants were also part of the YOUth study, a prospective cohort study about social and cognitive development with two entry points: Baby & Child and Child & Adolescent (Onland-Moret et al., 2020). The YOUth study recruits participants who live in Utrecht and its neighboring communities. The YOUth study commenced in 2015 and is still ongoing. To be eligible for participation in our study, children needed to be aged between 8 and 11 years at the moment of the first visit (which was the same as the general inclusion criteria of the Child & Adolescent cohort). Participants also had to have a good understanding of the Dutch language. Parents needed to sign the informed consent form for the general cohort study, and for this additional eye-tracking study. Participants of the YOUth study received an additional information letter and informed consent form for this study prior to the first visit to the lab. Participants were not eligible to participate if the child was mentally or physically unable to perform the tasks, if parents did not sign the informed consent forms, or if a sibling was already participating in the same cohort. A complete overview of the inclusion and exclusion criteria for the YOUth study is given in Onland-Moret et al. (2020).

For this study, a subset of participants from the first wave of the Child & Adolescent cohort were recruited. Children’s mean age was 9.34 (age range: 8–10 years) and 55 children were female (67%). Parents’ mean age was 42.11 (age range: 33–56) and 64 were female (79%). A complete overview with descriptive statistics of the participants’ age and gender is given in the results section (Table 1 ). We also acquired additional information about the families’ households, which is based on demographic data from seventy-six families. For five families, household demographics were not (yet) available (e.g., parents did not complete the demographics survey of the YOUth study). The average family/household size in our sample was 4.27 residents (sd = 0.71). Seventy children from our sample lived with two parents or caregivers (92.1%). Seven children had no siblings (9.2%), forty-two children had one sibling (55.3%), twenty-three children had two siblings (30.2%), and four children had three siblings (5.3%). Two parents/caregivers lived together with the children of their partner and one family/household lived together with an au pair.

We also checked how our sample compared to the rest of the YOUth study’s sample in terms of parents’ educational level, used here as a simplified proxy of social-economic status (SES). In our subset of participants, we found that most parents achieved at least middle-to-higher educational levels, which is representative of the general YOUth study population. For a detailed discussion of SES in the YOUth study population, see Fakkel et al. ( 2020 ). All participants received an information brochure at home in which this study was explained. Participants could then decide whether they wanted to participate in this additional study aside from the general testing program. All participants were included at their first visit to the lab and parents provided written informed consent for themselves as well as on behalf of their children. This study was approved by the Medical Research Ethics Committee of the University Medical Center Utrecht and is registered under protocol number 19–051/M.

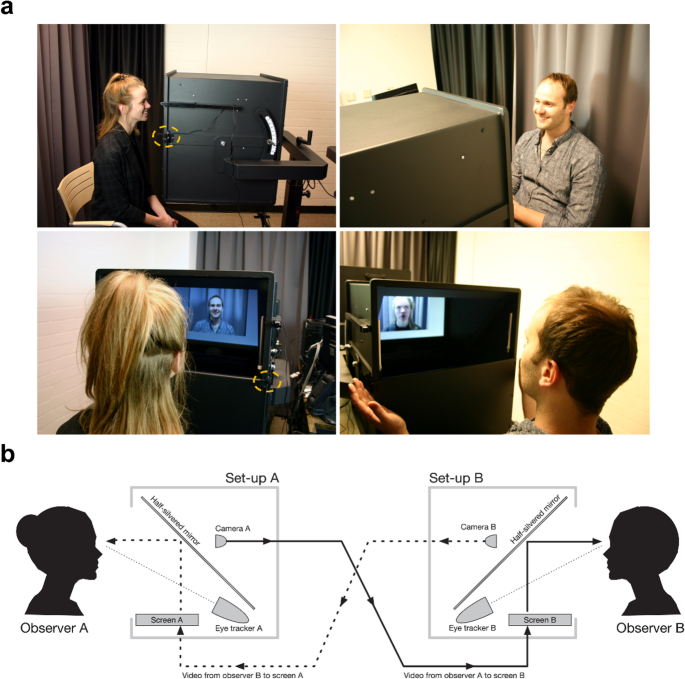

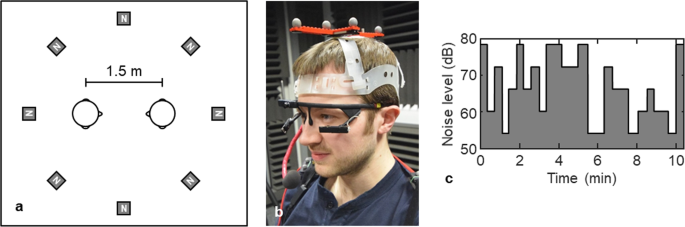

A dual eye-tracking setup (see Fig. 1 a) was used to record gaze of two interactors simultaneously. Each person was displayed to the other by means of a monitor and a half-silvered mirror (see Fig. 1 b). The cameras behind the half-silvered mirrors were Logitech webcams (recording at 30 Hz at a resolution of 800 by 600 pixels). The live video-feeds were presented at a resolution of 1024 by 768 pixels in the center of a 1680 by 1050 pixels computer screen and concurrently recorded to disk. Two SMI RED eye trackers running at 120 Hz recorded participants’ eye movements (see Fig. 1 b). A stimulus computer running Ubuntu 12.04 LTS handled the live video-connection and signaled to the eye-tracker computers to start and stop recording eye movements (for a more detailed explanation of this setup, see Hessels et al., 2017 ).

Fig. 1 Overview of the dual eye-tracking setup. (a) Staged photographs of two interactors in the dual eye-tracking setup. (b) A schematic overview of the setup, reproduced from Hessels et al. (2018b).
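The screen geometry described above implies a simple relation between gaze positions in screen pixels and positions in the recorded video frame. The text does not describe this conversion, so the following is only an illustrative sketch. It assumes that the live feed is exactly centered on the screen, that the origin is the top-left corner, and that the displayed image is a uniformly scaled copy of the recorded frame. The function name `screen_to_video` is hypothetical.

```python
from typing import Optional, Tuple

SCREEN_W, SCREEN_H = 1680, 1050  # screen resolution (from the text)
SHOWN_W, SHOWN_H = 1024, 768     # size at which the live feed was displayed
REC_W, REC_H = 800, 600          # resolution of the recorded webcam video


def screen_to_video(x: float, y: float) -> Optional[Tuple[float, float]]:
    """Map a gaze point in screen pixels to recorded-video pixels.

    Assumption (not stated in the text): the feed is exactly centered and
    the origin is the top-left corner. Returns None when the gaze point
    falls outside the displayed feed.
    """
    left = (SCREEN_W - SHOWN_W) / 2  # 328 px horizontal margin
    top = (SCREEN_H - SHOWN_H) / 2   # 141 px vertical margin
    u, v = x - left, y - top
    if not (0 <= u < SHOWN_W and 0 <= v < SHOWN_H):
        return None
    # Rescale from displayed size to recorded size (factor 800/1024 = 600/768).
    return u * REC_W / SHOWN_W, v * REC_H / SHOWN_H
```

Under these assumptions, the center of the screen maps to the center of the recorded frame, and gaze on the margins around the feed is reported as outside the video.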

Audio was recorded using a set of AKG C417-PP Lavalier-microphones which were connected to a Behringer Xenyx 1204-USB audio panel. Each microphone was attached to the front of each setup (see the dashed orange circles on the left panels in Fig. 1 a). We used Audacity v. 2.3.3 running on a separate computer (Ubuntu 18.04.2 LTS) to record audio. In a stereo recording the signal of the parent was panned to the left channel and the signal of the child was panned to the right channel. Upon recording start, a 100 ms pulse was sent from the parallel port of the stimulus computer to the audio panel to be recorded. This resulted in a two-peak signal we used to synchronize the audio recordings to the beginning and end of the video and eye-tracking recordings. Audio recordings were saved to disk as 44,100 Hz 32-bit stereo WAVE files. We describe in detail how the data were processed for the final analyses in the Signal processing section below.
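Locating a synchronization pulse in a recorded channel can be sketched as a threshold-crossing search. This is not the authors' procedure, which is described in the Signal processing section. It is a minimal illustration that assumes the pulse peaks are clearly larger in amplitude than the surrounding signal. The names `pulse_onsets` and `onset_times`, the threshold, and the gap parameter are all hypothetical, and how the detected events map onto the start and end of a recording is left to the caller.

```python
from typing import List, Optional, Sequence

SAMPLE_RATE_HZ = 44_100  # audio sampling rate (from the text)


def pulse_onsets(samples: Sequence[float], threshold: float, min_gap: int) -> List[int]:
    """Return sample indices at which a new above-threshold event begins.

    A new event starts when |sample| >= threshold and the previous
    above-threshold sample lies more than `min_gap` samples back.
    """
    onsets: List[int] = []
    last_above: Optional[int] = None
    for i, s in enumerate(samples):
        if abs(s) >= threshold:
            if last_above is None or i - last_above > min_gap:
                onsets.append(i)
            last_above = i
    return onsets


def onset_times(samples: Sequence[float], threshold: float, min_gap: int) -> List[float]:
    """Convert detected onsets from sample indices to seconds."""
    return [i / SAMPLE_RATE_HZ for i in pulse_onsets(samples, threshold, min_gap)]
```

Tracking the last above-threshold sample, rather than the last onset, keeps a long or oscillating pulse from being counted more than once.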

Upon entering the laboratory, a general instruction was read out by the experimenter (author GAH). This instruction consisted of a brief explanation of the two conversation-scenarios and the general experimental procedure. Participants were asked not to touch any equipment during the experiment (e.g. the screen, microphones, eye trackers). Because the experimenter needed to start and stop the video-feed after approximately five minutes for each conversation, he explained that he would remain present during the measurements to operate the computers. After the general instruction, participants were positioned in the dual eye-tracking setup. Participants were seated in front of one of the metal boxes at either end of the setup (containing the screens and eye trackers) such that their eyes were at the same height as the webcams behind the half-silvered mirrors using height-adjustable chairs. The distance of participants’ eyes to the eye tracker was approximately 70 cm and the distance from eyes to the screen was approximately 81 cm. After positioning, the experimenter briefly explained the calibration procedure. The eye tracker of the parent was calibrated first using a 5-point calibration sequence followed by a 4-point calibration validation. We aimed for a systematic error (validation-accuracy) below 1° in both the horizontal and vertical direction (iViewX returns these separately). However, if a sufficiently low systematic error could not be obtained, the experimenter continued anyway (see Section 11 for how these recordings were handled). After calibrating the parent’s eye tracker, we continued with the child’s eye tracker. After the calibration procedure, the experimenter briefly repeated the task-instructions and explained that he would initiate the video-feed after a countdown. The experimenter repeated that he would stop recording the conversation after approximately five minutes. The experimenter did not speak or intervene during the conversation, except when participants questioned him directly or had changed their position too much. Such position changes were readily visible in the iViewX software, which graphically and numerically displays the online gaze position signals of the eye trackers. If participants slouched too much, the incoming gaze position signals would disappear or show abnormal values. In such instances, the experimenter would ask the participants to sit more upright until the gaze position signals were being recorded properly again.
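To give a sense of scale for the 1° accuracy target, a visual angle can be converted to a distance on the screen using the viewing distance reported above. The sketch below is standard trigonometry, not part of the authors' pipeline, and it assumes the angle is centered on the line of sight and the screen is perpendicular to it. The function name `angle_to_size_cm` is hypothetical.

```python
import math


def angle_to_size_cm(angle_deg: float, distance_cm: float) -> float:
    """On-screen extent (cm) subtended by a visual angle.

    Assumes the angle is centered on the line of sight and the screen
    is perpendicular to it.
    """
    return 2.0 * distance_cm * math.tan(math.radians(angle_deg) / 2.0)


# 1 degree at the reported eye-to-screen distance of about 81 cm
one_degree_cm = angle_to_size_cm(1.0, 81.0)  # roughly 1.4 cm
```

Expressing this in screen pixels would additionally require the physical dimensions of the display, which the text does not give.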

Conflict-Scenario

For the first conversation, children and their parents were instructed to discuss a family issue about which they have had a recent disagreement. The goal of the conflict-scenario was to discuss the topic of disagreement and to try to agree on possible solutions for the future. To assist the participants in finding a suitable topic, the experimenter provided a list with common topics of disagreements between parents and children. The list included topics such as screen time, bedtime, homework, and household chores (see Appendix 1 for a complete overview). The main criterion for the conflict-scenario was that the topic should concern a recent disagreement, preferably from the last month. If no suitable topic could be found on the list or could be agreed upon, the parent and child were asked to come up with a topic of their own. Some parent–child dyads could not decide at all or requested to skip the conflict-task altogether. Note that for the final analyses, we only included dyads that completed both scenarios (see Fig. 1 and Table 1). After participants had agreed on a topic, the experimenter explained that they should try to talk solely about the chosen topic for approximately 5 min and not digress.

Cooperation-Scenario

For the second conversation, participants were instructed to plan a party together (e.g. birthday or family gathering). The goal of the cooperation-scenario was to encourage a cooperative interaction between the parent and child. Participants were instructed to discuss for what occasion they want to organize a party and what kinds of activities they want to do. Importantly, participants had to negotiate the details and thus needed to collaborate to come up with a suitable party plan. Participants were instructed to discuss the party plan for approximately 5 min. Prior to the second conversation, the experimenter checked the participants’ positioning in front of the eye trackers and whether the eye trackers and microphones were still recording properly. In some cases, the experimenter re-calibrated the eye trackers if participants had changed position, or if the eye tracker did not work for whatever reason. Note that we always started with the conflict-scenario and ended with the cooperation-scenario because we reasoned this would be more pleasant for the children.

After the cooperation-scenario, the experimenter thanked the parents and children for their participation and the child received a small gift. The experimenter also asked how the participants had experienced the experiment, and if they were left with any questions about the goal of the experiment.

Signal Processing

To prepare the eye-tracking, audio, and video signals for the main analyses, we conducted several signal processing steps (e.g. synchronization, classification). In the following sections, we describe these separate steps. Readers who do not wish to consider all the technical and methodological details of the present study may wish to proceed to the Results, Section 12.

Synchronization of eye-tracking signals and video recordings. By using timestamps produced by the stimulus computer, the eye-tracking signal was automatically trimmed to the start and end of the experimental trial. Next, the eye-tracking signal was downsampled from 120 to 30 Hz to correspond to the frame rate of the video. In the downsampling procedure, we averaged the position signals of four consecutive samples to produce each new sample. Provided that the noise is uncorrelated across samples, this increases the signal-to-noise ratio by a factor of 2 (√4), following the square root law.
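The block-averaging step can be illustrated with a minimal sketch. The function name and the example values are hypothetical, and the handling of an incomplete final block (dropped here) and of missing samples is an assumption that the text does not specify.

```python
from statistics import mean

def downsample_by_averaging(samples, factor=4):
    """Average each block of `factor` consecutive samples into one sample.

    With factor=4, a 120 Hz signal becomes a 30 Hz signal.
    An incomplete trailing block is dropped (an assumption).
    """
    n_blocks = len(samples) // factor
    return [mean(samples[i * factor:(i + 1) * factor]) for i in range(n_blocks)]

# Hypothetical horizontal gaze positions recorded at 120 Hz
gaze_x_120hz = [10.0, 12.0, 11.0, 11.0, 20.0, 22.0, 21.0, 21.0]
gaze_x_30hz = downsample_by_averaging(gaze_x_120hz)
print(gaze_x_30hz)  # [11.0, 21.0]
```

Averaging N samples with independent noise reduces the noise standard deviation by a factor of √N, which is where the factor of 2 for N = 4 comes from.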

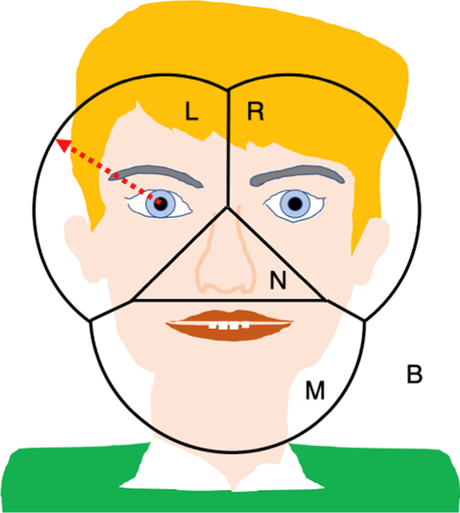

Construction of Areas of Interest (AOI) and AOI assignment of gaze position. To determine where and when participants looked at each other’s faces, we mapped gaze coordinates onto the frontal video-recordings. Because participants moved and rotated their faces and bodies during the conversations, we used an AOI construction method that can efficiently and effectively deal with an enormous number of images, namely the thousands of video frames produced in this experiment. This method consists of the fully automated Limited Radius Voronoi Tessellation (LRVT) procedure to construct AOIs for facial features in dynamic videos (Hessels et al., 2016). Briefly, this procedure assigns each gaze position to one of the four facial features (left eye, right eye, nose, or mouth) based on the closest distance to the facial feature. If this minimal distance exceeds the limited radius, the gaze position is assigned to the background AOI (see Fig. 2). This background area consists of the background and small parts of the upper body of the participant visible in the video. In our study, the LRVT-radius was set to 4° (200 pixels; see Footnote 1). The LRVT method is partly data-driven, resulting in smaller AOIs on the children’s faces compared to AOIs on the parents’ faces. We quantified the AOI size by the AOI span. The AOI span is defined as the mean distance from each AOI cell center to the cell center of its closest neighbor (see Hessels et al., 2016, p. 1701). The average AOI span was 1.76° for parents’ faces and 1.6° for children’s faces.

An example of computer-generated AOIs (Hessels et al., 2018a) for the left eye (L), right eye (R), nose (N), and mouth (M). The AOI for the left eye, for example, is the area closest to the left eye center but not further away from the center than the limited radius of 4° (denoted with a red arrow). The background AOI (B) encompasses the background, the upper body of the participant and a small part of the top of the head.
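The assignment rule described above (nearest facial feature, unless it lies beyond the limited radius) can be sketched as follows. This is only an illustration of the rule, not the implementation of Hessels et al. (2016). The feature coordinates are hypothetical pixel positions, and the automatic detection of facial features in each video frame is not shown.

```python
import math

def assign_aoi(gaze, feature_centers, radius):
    """Return the label of the nearest facial feature, or 'background'
    if even the nearest feature is farther away than `radius`."""
    label, center = min(feature_centers.items(),
                        key=lambda item: math.dist(gaze, item[1]))
    return label if math.dist(gaze, center) <= radius else "background"

# Hypothetical feature centers (pixels) for one video frame
centers = {
    "left_eye": (400, 300),
    "right_eye": (560, 300),
    "nose": (480, 380),
    "mouth": (480, 460),
}

print(assign_aoi((410, 310), centers, radius=200))   # left_eye
print(assign_aoi((1000, 900), centers, radius=200))  # background
```

Because the feature centers are re-estimated for every frame, the AOIs follow the face as it moves, which is why the AOI size depends on the size of the face in the image.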

From gaze data to dwells. After individual gaze samples were mapped onto AOIs (see previous section), we computed ‘dwells’, defined here as the time spent looking at a particular face AOI (e.g., eyes, mouth). We operationalized a single dwell as the period from the moment the participant’s gaze position entered the AOI until it exited the AOI, provided that the duration was at least 120 ms (i.e., four consecutive video frames). For further details, see Hessels et al. (2018b, p. 7).
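As a rough sketch of this operationalization, per-frame AOI labels can be collapsed into runs, keeping only runs of at least four consecutive frames. The function name, the tuple layout, and the use of `None` for frames without a valid AOI label are assumptions made for illustration.

```python
from itertools import groupby

def compute_dwells(aoi_labels, min_frames=4):
    """Collapse per-frame AOI labels into dwells.

    Returns (label, start_frame, n_frames) for every run of identical
    labels lasting at least `min_frames` frames. Frames labelled None
    (no valid gaze or AOI) never form a dwell.
    """
    dwells = []
    start = 0
    for label, run in groupby(aoi_labels):
        n = len(list(run))
        if label is not None and n >= min_frames:
            dwells.append((label, start, n))
        start += n
    return dwells

# Hypothetical 30 Hz label sequence
labels = ["eyes"] * 5 + ["mouth"] * 2 + ["eyes"] * 4 + [None] * 6
print(compute_dwells(labels))  # [('eyes', 0, 5), ('eyes', 7, 4)]
```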

From raw audio recordings to speaker categories.

Trimming to prepare audio for synchronization with video and eye-tracking. In a self-written audio visualization script in MATLAB, we manually marked the timepoints of the characteristic two-peak synchronization pulse sent by the stimulus computer (see Section 7), which indicated the start and stop of the two conversations at high temporal resolution (timing accuracy < 1 ms). Then we trimmed the audio files based on these start-and-stop timepoints. Next, the trimmed stereo files (left channel: the parent; right channel: the child) were split into two mono signals, and the first conversation (conflict) and the second conversation (cooperation) were separated. Finally, the audio signal was downsampled to 1000 Hz and rectified by taking its absolute value. As a result, we produced four audio files per parent–child dyad.

Determination of speech samples. Speech episodes (as an estimator for who was speaking) were operationalized as follows. First, the absolute audio signal was smoothed with a Savitzky-Golay filter (order 4; window 500 ms). Then, samples were labelled silent when the amplitude was smaller than 1.2 times the median amplitude of the whole filtered signal. Next, we computed the standard deviation of the amplitude of the silent samples. Finally, a sample was labelled as a speech sample if its amplitude exceeded the mean plus 2 times the standard deviation of the amplitude of the silent samples.
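The two-stage threshold can be sketched as below. The sketch assumes that the rectified signal has already been smoothed (in Python this could be done with, for example, `scipy.signal.savgol_filter`), that "the mean" refers to the mean amplitude of the silent samples, and that the population standard deviation is used. None of these details are confirmed by the text, and the function name and example values are hypothetical.

```python
from statistics import mean, median, pstdev

def label_speech_samples(smoothed, silence_factor=1.2, sd_factor=2.0):
    """Label each sample of a smoothed, rectified audio signal as speech.

    Step 1: samples below silence_factor * median are taken as silent.
    Step 2: speech threshold = mean + sd_factor * SD of the silent samples.
    """
    silent = [a for a in smoothed if a < silence_factor * median(smoothed)]
    threshold = mean(silent) + sd_factor * pstdev(silent)
    return [a > threshold for a in smoothed]

# Hypothetical smoothed amplitudes: background noise with one loud burst
signal = [1.0, 1.1, 0.9, 1.0, 1.1, 0.9, 9.0, 10.0, 11.0, 1.0]
print(label_speech_samples(signal))
# [False, False, False, False, False, False, True, True, True, False]
```

Using the median to find the silent baseline keeps the first threshold robust against loud speech, as long as speech does not dominate the recording.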

Removing crosstalk. Because the microphones were in the same room, we observed crosstalk in the audio channels. That is, we sometimes heard that parent speech was present in the audio recording channel of the child and vice versa. The child’s channel suffered more from crosstalk than the parent’s channel. To deal with this, we first equalized the speech signals of the parent and child by making them on average equally loud (by using the average speech amplitudes of the single episodes). Then, we identified episodes of potential crosstalk by selecting the episodes that contained a signal in the channel of both speakers. We removed crosstalk with the following rule: we assigned a crosstalk episode to speaker X if the amplitude of the signal in X’s channel was at least 3.33 times larger than in Y’s channel. The value 3.33 was derived empirically. If a crosstalk episode was assigned to the child, it was removed from the parent’s channel and vice versa.
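A minimal sketch of the assignment rule for a single episode of potential crosstalk is given below. It assumes that the amplitudes are mean episode amplitudes after loudness equalization, and it interprets the rule as a ratio threshold. The text does not state what happens when neither channel dominates, so the sketch leaves such episodes unassigned (returning `None`), which is an assumption.

```python
def assign_crosstalk(parent_amp, child_amp, ratio=3.33):
    """Decide which speaker a potential crosstalk episode belongs to.

    Returns 'parent' or 'child' when one channel is at least `ratio`
    times as loud as the other, otherwise None (episode left as is).
    """
    if parent_amp >= ratio * child_amp:
        return "parent"
    if child_amp >= ratio * parent_amp:
        return "child"
    return None

print(assign_crosstalk(10.0, 2.0))  # parent: remove the episode from the child's channel
print(assign_crosstalk(1.0, 5.0))   # child: remove the episode from the parent's channel
print(assign_crosstalk(3.0, 2.0))   # None: neither channel dominates
```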

Determination of speech episodes. From the labelled samples, we determined speech episodes. Each speech episode is characterized by an onset and offset time, mean amplitude and duration.

Removing short speech and short silence episodes. We removed speech episodes shorter than 400 ms followed by the removal of silence episodes shorter than 100 ms.
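The order of the two removal steps matters, as the following sketch on per-sample labels at 1000 Hz shows. It interprets "removal" as relabelling: short speech runs become silence, and short silences are then filled in as speech so that neighbouring speech episodes merge. That interpretation, and the function names, are assumptions.

```python
from itertools import groupby

def remove_short_runs(labels, value, min_len):
    """Flip every run of `value` shorter than `min_len` samples."""
    out = []
    for v, run in groupby(labels):
        run = list(run)
        if v == value and len(run) < min_len:
            run = [not value] * len(run)
        out.extend(run)
    return out

def clean_speech_labels(is_speech, fs=1000, min_speech_ms=400, min_silence_ms=100):
    """First drop short speech episodes, then fill short silences."""
    step1 = remove_short_runs(is_speech, True, min_speech_ms * fs // 1000)
    return remove_short_runs(step1, False, min_silence_ms * fs // 1000)

# 500 ms speech, 50 ms pause, 450 ms speech, 300 ms silence,
# 200 ms speech (too short), 300 ms silence
raw = ([True] * 500 + [False] * 50 + [True] * 450 +
       [False] * 300 + [True] * 200 + [False] * 300)
cleaned = clean_speech_labels(raw)
print(sum(cleaned[:1000]), sum(cleaned[1000:]))  # 1000 0
```

In this example the 200 ms burst is discarded and the 50 ms pause is bridged, leaving one speech episode of 1000 ms.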

Assigning speech labels to single samples. To link the speech signal to the eye-tracking signal in a later stage, we assigned speech labels to each sample of the speech signal of the parent–child dyad. For each timestamp, we produced a label for one of the following categories: parent speech, child speech, speech overlap and silence (no one speaks).
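Given one boolean speech signal per speaker, the four categories follow directly from the combination of the two channels at each timestamp. The sketch below illustrates this; the label strings and function name are hypothetical.

```python
def combine_speech_labels(parent_speech, child_speech):
    """Map two per-sample boolean speech signals onto four categories."""
    names = {
        (True, False): "parent",
        (False, True): "child",
        (True, True): "overlap",
        (False, False): "silence",
    }
    return [names[(bool(p), bool(c))] for p, c in zip(parent_speech, child_speech)]

parent = [True, True, False, False]
child = [False, True, True, False]
print(combine_speech_labels(parent, child))
# ['parent', 'overlap', 'child', 'silence']
```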

Combining speech and gaze behavior. The speech signal was combined with the gaze signal as follows. First, we upsampled the gaze signal (with AOI labels) from 30 to 1000 Hz by interpolation to match the sampling frequency of the audio signal. Each sample in the combined signal contained a classification of speaker categories (child speaks, parent speaks, both are speaking, no one speaks) and gaze location on the face for both child and parent (eyes, nose, mouth, background). Not all recordings produced valid eye-tracking data (eye-tracking data loss), valid AOIs for all video frames (e.g. AOIs cannot be constructed during extreme head rotations), or valid dwells (i.e. dwells longer than 120 ms). These invalid cases were marked in our combined audio/gaze database. This is not necessarily problematic for data analysis, because we also conducted analyses on parts or combinations of parts of the data (e.g. speech analysis only).
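Because AOI labels are categorical, one plausible way to upsample them is to repeat each 30 Hz label for every 1000 Hz sample that falls within its frame (a zero-order hold). The text only says "by interpolation", so this particular scheme is an assumption, as are the function name and example labels.

```python
def upsample_labels(labels, fs_in=30, fs_out=1000):
    """Repeat each label so that the sequence runs at fs_out instead of fs_in.

    Output sample i takes the label of the input frame that contains it.
    """
    n_out = len(labels) * fs_out // fs_in
    return [labels[i * fs_in // fs_out] for i in range(n_out)]

aoi_30hz = ["eyes", "mouth", "nose"]  # three video frames (100 ms)
aoi_1000hz = upsample_labels(aoi_30hz)
print(len(aoi_1000hz))                 # 100
print(aoi_1000hz[33], aoi_1000hz[34])  # eyes mouth
```

After this step the gaze labels and the per-sample speech labels share one time base and can be paired sample by sample.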

Measures of speech and gaze. In this study, we mainly report relative measures of speech and gaze behavior because the total recording durations differed across dyads and conversations. We computed relative total speech durations as a descriptor of speech behavior and relative total dwell times as a descriptor of gaze behavior. To obtain relative measures, we determined the total duration for each speaker category (i.e. parent speech, child speech, overlap, no one speaks) and the total duration of dwells (with AOI labels eyes, nose, mouth, background) and then divided these durations by the total duration of the recording.
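For equally spaced samples, dividing a category's total duration by the recording duration is the same as dividing its sample count by the total number of samples. A minimal sketch, with a hypothetical function name and example data:

```python
from collections import Counter

def relative_durations(labels):
    """Proportion of the recording spent in each category."""
    counts = Counter(labels)
    total = len(labels)
    return {label: n / total for label, n in counts.items()}

speech = ["parent"] * 5 + ["child"] * 3 + ["overlap"] * 1 + ["silence"] * 1
print(relative_durations(speech))
# {'parent': 0.5, 'child': 0.3, 'overlap': 0.1, 'silence': 0.1}
```

For relative total dwell times the same division applies, but only samples that belong to valid dwells would contribute to the counts, so the proportions need not sum to 1.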

Eye-Tracking Data Quality and Exclusion

We first assessed the quality of the eye-tracking data, which is crucial for the validity of an eye-tracking study (Holmqvist et al., 2012). High-quality eye-tracking data is typically obtained when subjects are restrained with a chinrest/headrest to maintain equal viewing distance and minimize head movements. However, in the context of our face-to-face conversations, subjects could talk, gesture, move their face, head, and upper body. Although the dual eye-tracking setup used was specifically designed to allow for these behaviors, other eye-tracking studies have also demonstrated that such behaviors may negatively affect eye-tracking data quality (Hessels et al., 2015; Holleman et al., 2019; Niehorster et al., 2018). Moreover, young children may pose additional problems, such as excessive movement or noncompliance (Hessels & Hooge, 2019). We computed several commonly used eye-tracking data quality estimates, namely: accuracy (or systematic error), precision (or variable error), and data loss (or missing data).

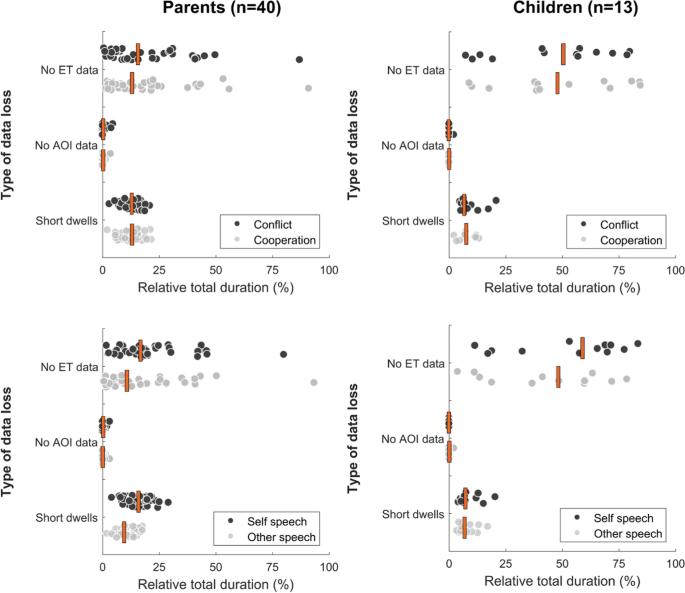

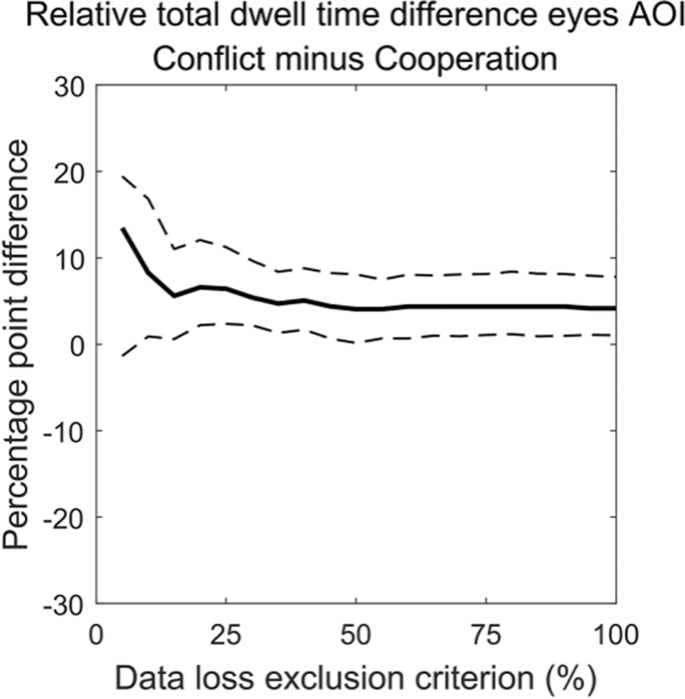

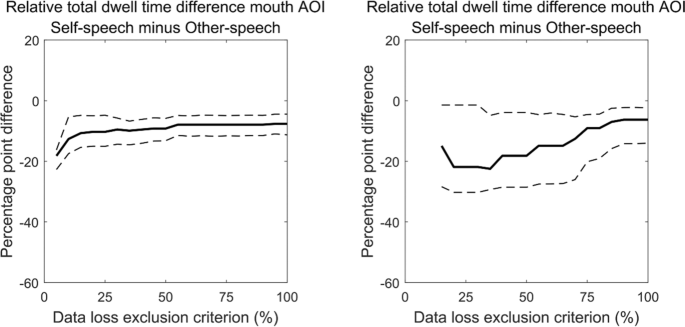

First, we assessed accuracy. The average validation accuracy was 0.98° for the parents’ recordings and 1.48° for the children’s recordings. We set an exclusion criterion of 1° for the 2D validation accuracy. Second, we determined precision by computing the sample-to-sample root mean square deviation (s2s-RMS) of the gaze-position signal. We then divided the s2s-RMS values for every participant by the AOI span (see Section Signal Processing). This measure can range from 0 to infinity. A precision/AOI-span value of 1 means that precision is equal to the AOI span. In other words, a value of 1 means that the sample-to-sample variation of the gaze-position signal is equal to the average distance between AOIs. If the precision/AOI-span value is larger than 1, one cannot reliably map gaze position to an AOI. Therefore, we decided to exclude measurements in which the average precision/AOI-span value exceeded 1. This measure also accounts for differences between the recordings in the magnitude of the AOI spans in relation to the recorded gaze position. Third, we calculated periods of data loss, i.e. periods in which the eye tracker did not report gaze position coordinates. Data loss is a slightly more complicated measure in the context of our study, given that data loss may coincide with talking and movement (Holleman et al., 2019). For example, it is well-known that some people gaze away more when speaking compared to when listening (Hessels et al., 2019; Kendon, 1967). Therefore, any exclusion based on data loss may selectively remove participants that spoke relatively more. For that reason, we did not exclude participants based on data loss but conducted separate sensitivity analyses for all our main findings as a function of a data loss exclusion criterion (see Appendix 3).
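The precision criterion described above can be sketched as follows. The sketch computes the s2s-RMS from two-dimensional distances between successive gaze samples, which is one common definition. Whether the study used 2D distances or per-axis values, and how missing samples were treated, is not stated, so both are assumptions. The function names and example values are hypothetical.

```python
import math

def rms_s2s(x, y):
    """Root mean square of the distances between successive gaze samples."""
    squared = [(x[i + 1] - x[i]) ** 2 + (y[i + 1] - y[i]) ** 2
               for i in range(len(x) - 1)]
    return math.sqrt(sum(squared) / len(squared))

def passes_precision_criterion(x, y, aoi_span):
    """Keep a recording only if precision / AOI span does not exceed 1."""
    return rms_s2s(x, y) / aoi_span <= 1.0

# Hypothetical gaze positions in degrees; every step has length 0.5 deg
x = [0.0, 0.3, 0.0, 0.3]
y = [0.0, 0.4, 0.0, 0.4]
print(passes_precision_criterion(x, y, aoi_span=1.6))  # True (ratio about 0.31)
```

Normalizing by the AOI span makes the criterion stricter for small faces: the same s2s-RMS value is more harmful when the AOIs lie closer together.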

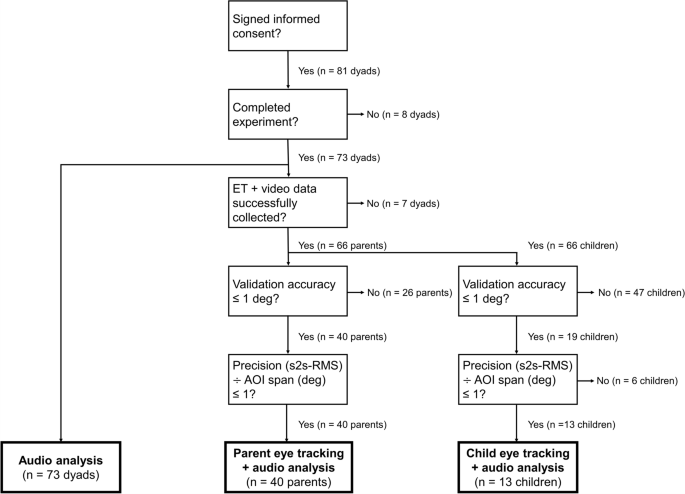

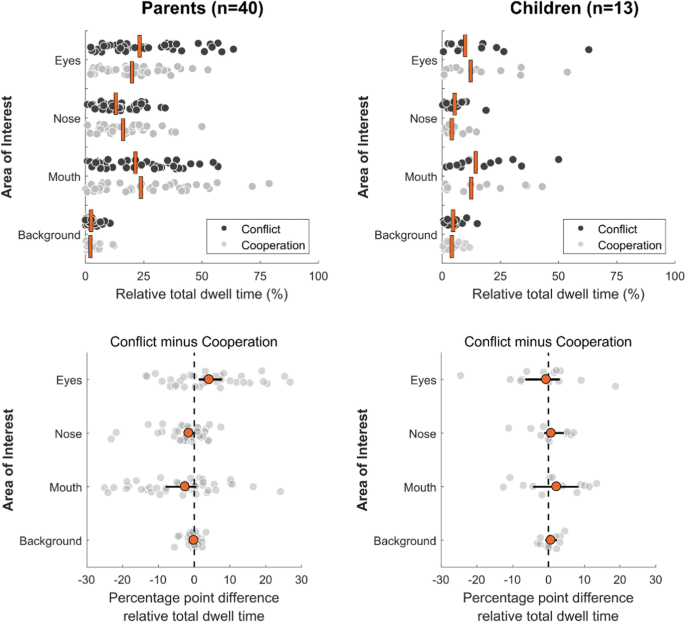

Based on the criteria for accuracy and precision, we determined how many measurements were suitable for further analyses. Figure 3 and Table 1 depict an overview of the eye-tracking data quality assessment and how many participants were excluded from further analyses based on the exclusion criteria described above. Out of 81 parent–child dyads who participated, 73 dyads completed the experiment (i.e. participated in both conversation-scenarios). Out of this set, we had eye-tracking data of sufficient quality for 40 parents and 13 children. Descriptive statistics of the participants are given in Table 1. Note that although the quality of the eye-tracking data is known to be worse for children, it was particularly problematic in our study as we needed data of sufficient quality for both conversations to answer our research questions. Although many more participants had at least one good measurement, applying our data quality criteria to both conversations for every parent–child dyad resulted in these substantial exclusion rates (Fig. 3).

Flowchart of eye-tracking data quality assessment and exclusion criteria

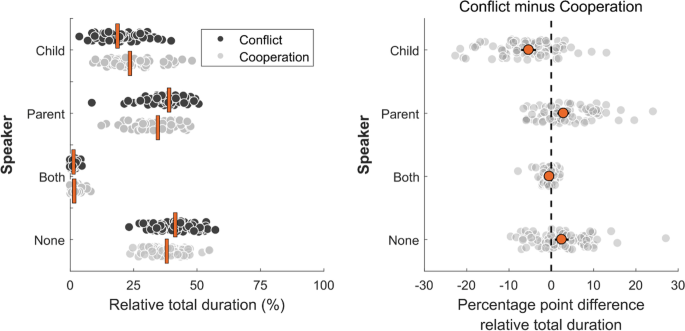

Main Analyses