
Article Contents

- 1. INTRODUCTION

- 2. BRAIN TUMOUR

- 3. MAGNETIC RESONANCE IMAGING (MRI)

- 5. BRAIN TUMOUR DETECTION MECHANISM

- 6. DISCUSSION AND ANALYSIS

- 7. CONCLUSION

- 8. FUTURE SCOPE

- ETHICS APPROVAL

- DATA AVAILABILITY


A Comprehensive Review of Brain Tumour Detection Mechanisms


Praveen Kumar Ramtekkar, Anjana Pandey, Mahesh Kumar Pawar, A Comprehensive Review of Brain Tumour Detection Mechanisms, The Computer Journal, Volume 67, Issue 3, March 2024, Pages 1126–1152, https://doi.org/10.1093/comjnl/bxad047


The brain is regarded as the central part of the human body and has a very complicated structure. The abnormal growth of tissue inside the brain is called a brain tumour. Detecting tumours at an early stage is among the most difficult tasks in healthcare. In this review article, the authors analyse and review in depth the brain tumour detection mechanisms, which include manual, semi-automated, and fully automated techniques. Today, fully automated mechanisms apply deep learning (DL) methods to detect tumours in brain magnetic resonance images (MRIs). This paper covers previously published research articles on various brain tumour detection techniques. It reviews types of tumours, MRI modalities, datasets, filters, segmentation methods, and DL techniques such as long short-term memory, gated recurrent unit networks, convolutional neural networks, autoencoders, deep belief networks, recurrent neural networks, generative adversarial networks, and deep stacking networks. The analysis shows that the use of DL techniques in brain tumour detection improves accuracy. Finally, this paper identifies research gaps, limitations of existing methods, challenges in tumour detection, and the contributions of the article.


- Online ISSN 1460-2067

- Print ISSN 0010-4620

- Copyright © 2024 British Computer Society


Brain tumor detection and classification using machine learning: a comprehensive survey

- Original Article

- Open access

- Published: 08 November 2021

- Volume 8, pages 3161–3183 (2022)


- Javaria Amin (ORCID: orcid.org/0000-0003-1080-5446)

- Muhammad Sharif

- Anandakumar Haldorai

- Mussarat Yasmin

- Ramesh Sundar Nayak


A brain tumor arises from the uncontrolled and rapid growth of cells. If not treated at an early stage, it may lead to death. Despite many significant efforts and promising outcomes in this domain, accurate segmentation and classification remain challenging. A major challenge for brain tumor detection arises from variations in tumor location, shape, and size. The objective of this survey is to provide researchers with a comprehensive review of the literature on brain tumor detection through magnetic resonance imaging. The survey covers the anatomy of brain tumors, publicly available datasets, enhancement techniques, segmentation, feature extraction, classification, and deep learning, transfer learning, and quantum machine learning for brain tumor analysis. Finally, it summarizes the important literature on brain tumor detection, with its advantages, limitations, developments, and future trends.


Introduction

The central nervous system disseminates sensory information and the corresponding actions throughout the body [1, 2, 3]. The brain, along with the spinal cord, assists in this dissemination. The brain's anatomy [4] comprises three main parts: the brain stem, the cerebrum, and the cerebellum. A normal human brain weighs approximately 1.2–1.4 kg, with a volume of 1260 cm³ (male brain) and 1130 cm³ (female brain) [5]. The frontal lobe assists in problem-solving, motor control, and judgment. The parietal lobe manages body position. The temporal lobe controls memory and hearing, and the occipital lobe supervises the brain's visual processing. The outer part of the cerebrum, known as the cerebral cortex, is a greyish material composed of cortical neurons [6]. The cerebellum is relatively smaller than the cerebrum. It is responsible for motor control, i.e., the systematic regulation of voluntary movements in organisms with a nervous system. Due to variable lesion size and stroke territory, the ALI, lesionGnb, and LINDA methods fail to detect small lesion regions. The cerebellum is well structured and well developed in human beings compared with other species [7]. It has three lobes: anterior, posterior, and flocculonodular. A round-shaped structure named the vermis connects the anterior and posterior lobes. The cerebellum consists of an inner area of white matter (WM) and an outer greyish cortex, which is slightly thinner than that of the cerebrum. The anterior and posterior lobes assist in the coordination of complex motor movements, and the flocculonodular lobe maintains the body's balance [4, 8]. The brain stem, as the name states, is a 7–10 cm-long stem-like structure. It contains cranial and peripheral nerve bundles and assists in eye movement, balance, and essential activities such as breathing.
The nerve tracts originating from the thalamus pass through the brain stem to reach the spinal cord, from which they spread throughout the body. The main parts of the brain stem are the midbrain, the pons, and the medulla oblongata. The midbrain assists in motor, auditory, and visual processing, as well as eye movement. The pons assists in breathing, intra-brain communication, and sensation, and the medulla oblongata helps regulate blood pressure, swallowing, sneezing, etc. [9].

Brain tumor and stroke lesions

Brain tumors are graded as slow-growing or aggressive [2, 10–20]. A benign (slow-growing) tumor does not invade neighboring tissues; in contrast, a malignant (aggressive) tumor spreads from its initial site to secondary sites [16, 17, 21–27]. According to the World Health Organization (WHO), brain tumors are categorized into grades I–IV. Grade I and II tumors are considered slow-growing, whereas grade III and IV tumors are more aggressive and have a poorer prognosis [28]. The grades are detailed as follows.

Grade I: These tumors grow slowly and do not spread rapidly. They are associated with better odds of long-term survival and can be removed almost completely by surgery. An example is grade I pilocytic astrocytoma.

Grade II: These tumors also grow slowly but can spread to neighboring tissues and progress to higher-grade tumors. They can recur after surgery. Oligodendroglioma is an example.

Grade III: These tumors grow faster than grade II tumors and can invade neighboring tissues. Surgery alone is insufficient, and post-surgical radiotherapy or chemotherapy is recommended. An example is anaplastic astrocytoma.

Grade IV: These tumors are the most aggressive and spread readily. They may even use blood vessels to sustain rapid growth. Glioblastoma multiforme is an example [29].

Ischemic stroke: Ischemic stroke is an aggressive brain disease and a major cause of disability and death worldwide [30]. It occurs when the blood supply to the brain is cut off, resulting in underperfusion and tissue hypoxia that kill the affected tissue within hours [31]. By time since onset, stroke lesions are categorized into stages: acute (0–24 h), sub-acute (24 h–2 weeks), and chronic (> 2 weeks) [32].
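The time-based staging above amounts to a small lookup. This is a minimal sketch; the function name `stroke_stage` is hypothetical, and how the shared endpoints (exactly 24 h and exactly 2 weeks) are assigned is our assumption, since the quoted ranges overlap at their boundaries.

```python
HOURS_PER_WEEK = 7 * 24


def stroke_stage(hours_since_onset: float) -> str:
    """Return the lesion stage for a given time since stroke onset (hours).

    Assumption: exactly 24 h counts as sub-acute, exactly 2 weeks also
    counts as sub-acute; only times beyond 2 weeks are chronic.
    """
    if hours_since_onset < 0:
        raise ValueError("time since onset must be non-negative")
    if hours_since_onset < 24:
        return "acute"
    if hours_since_onset <= 2 * HOURS_PER_WEEK:
        return "sub-acute"
    return "chronic"


if __name__ == "__main__":
    for hours in (6, 72, 30 * 24):
        print(hours, stroke_stage(hours))
```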

Brain imaging modalities

Four major modalities (PET, CT, DWI, and MRI) are widely used to analyze brain structure for tumor detection.

Positron emission tomography

Positron emission tomography (PET) uses special radioactive tracers. Metabolic features of brain tumors, such as blood flow, glucose metabolism, lipid synthesis, oxygen consumption, and amino acid metabolism, are analyzed through PET. It is still considered one of the most powerful metabolic imaging techniques, and its most widely used tracer in brain imaging is fluorodeoxyglucose (FDG) [33]. Nevertheless, FDG-PET images have limitations, e.g., an inability to differentiate between radiation necrosis and a recurrent high-grade (HG) tumor [34]. Moreover, the radioactive tracers used during a PET scan can have harmful effects on the human body, such as a post-scan allergic reaction; some patients are allergic to aspartame and iodine. In addition, PET does not localize anatomical structures accurately, because its spatial resolution is relatively poor compared with MRI [35].

Computed tomography

Computed tomography (CT) images provide more detailed information than conventional X-rays. CT has been widely recommended and adopted since its inception: one study [36] determined that in the USA alone, about 62 million CT scans are performed annually, 4 million of them on children. CT scans show the soft tissues, blood vessels, and bones of different body parts. However, CT uses more radiation than conventional X-rays, which may increase the risk of cancer when multiple scans are performed; the associated cancer risks have been quantified according to CT radiation dose [37, 38]. MRI can help evaluate structures obscured in a CT scan and provides high contrast among soft tissues, giving a clearer view of anatomical structure [39].

Magnetic resonance imaging

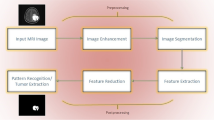

An MRI scan is used to analyze different body parts in detail, and it can detect brain abnormalities at earlier stages than other imaging modalities [40]. However, the complex structure of the brain makes tumor segmentation challenging [41–47]. This review discusses preprocessing approaches, segmentation techniques [48, 49], feature extraction and reduction methods, classification methods, and deep learning approaches. Finally, benchmark datasets and performance measures are presented.

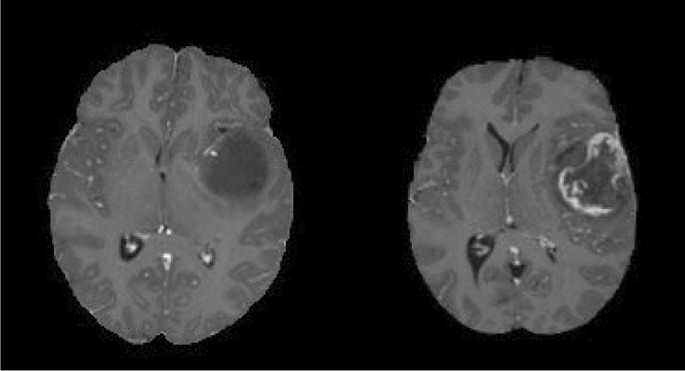

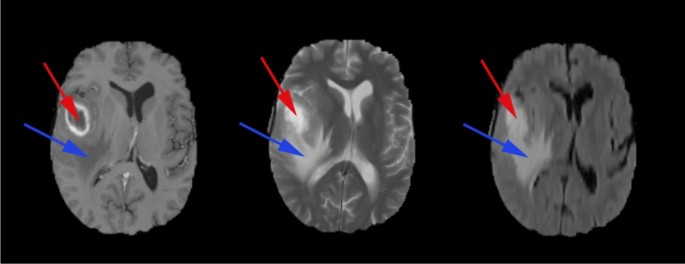

Diffusion-weighted imaging

MRI sequences are used to analyze stroke lesions based on several parameters, such as age, location, and extent [50]. In the context of treatment, a computerized method may be used to diagnose the rate of disease progression accurately [51]. Cognitive neuroscientists, who frequently conduct research linking cerebral impairments to cognitive function, have observed that segmenting stroke lesions is vital for analyzing the total affected region of the brain, which helps the treatment process [52]. However, stroke lesion segmentation is difficult because the appearance of a stroke changes over time. MRI sequences such as diffusion-weighted imaging (DWI) and FLAIR are used for stroke lesion detection. In the acute stroke stage, the DWI sequence highlights the affected part as a hyperintensity, while the underperfused region is represented by the magnitude of the perfusion maps [53]. The mismatch between these two regions may be considered penumbra tissue. Stroke lesions appear in distinct locations and shapes: they vary in size, they are not aligned with vascular patterns, and more than one lesion may appear at the same time. Their size ranges from a few millimetres in radius to a full hemisphere. The hemispheres are structurally dissimilar, and intensity may vary significantly within the affected region. Furthermore, automated stroke segmentation is difficult because pathologies such as white matter hyperintensities and chronic stroke lesions look similar [54].
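Once the DWI lesion and the perfusion deficit are available as masks, the mismatch described above reduces to a voxel-wise set difference. A minimal sketch, assuming both regions have already been segmented into binary masks on the same co-registered grid; `penumbra_mask` is a hypothetical helper, and producing the masks themselves (the hard part discussed above) is out of scope.

```python
import numpy as np


def penumbra_mask(dwi_lesion, perfusion_deficit):
    """Voxels inside the perfusion deficit that are not (yet) DWI-hyperintense.

    Assumes both inputs are binary masks of the same shape on a shared grid.
    """
    dwi = np.asarray(dwi_lesion, dtype=bool)
    pwi = np.asarray(perfusion_deficit, dtype=bool)
    if dwi.shape != pwi.shape:
        raise ValueError("masks must be co-registered and have the same shape")
    return pwi & ~dwi


if __name__ == "__main__":
    dwi = np.array([0, 1, 1, 0, 0])
    pwi = np.array([0, 1, 1, 1, 1])
    print(penumbra_mask(dwi, pwi).astype(int))  # [0 0 0 1 1]
```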

Evaluation and validation

In the existing literature, experimental results are evaluated on publicly available datasets to verify the robustness of algorithms.

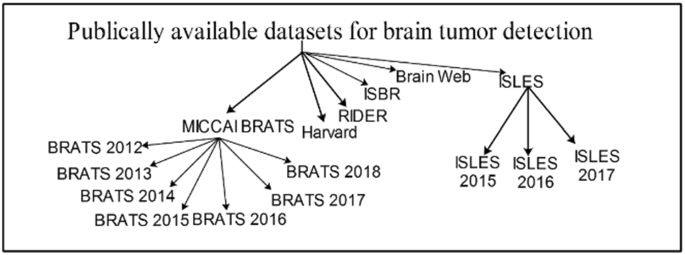

Publicly available datasets

Several publicly available datasets are used by researchers to evaluate proposed methods, and some important and challenging ones are discussed in this section. The BRATS datasets are among the most challenging MRI datasets [55–57]. The BRATS challenge has been held in different years with increasingly difficult tasks, and its data have a voxel resolution of 1 mm³. Details of the datasets are given in Fig. 1 and Table 1.

Datasets for brain tumor detection

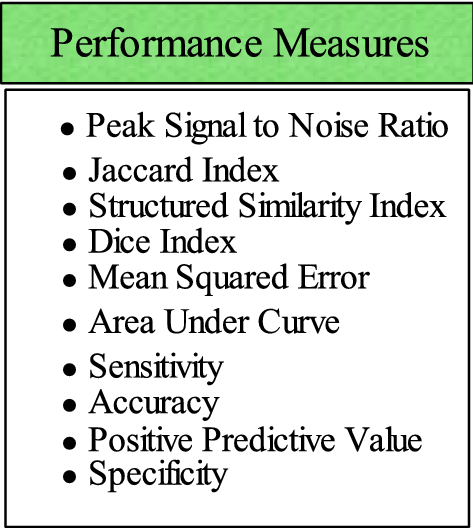

Performance metrics

Performance measures play a significant role in assessing a method's effectiveness. A list of performance metrics is provided in Fig. 2.

List of performance measures for evaluation of brain tumor

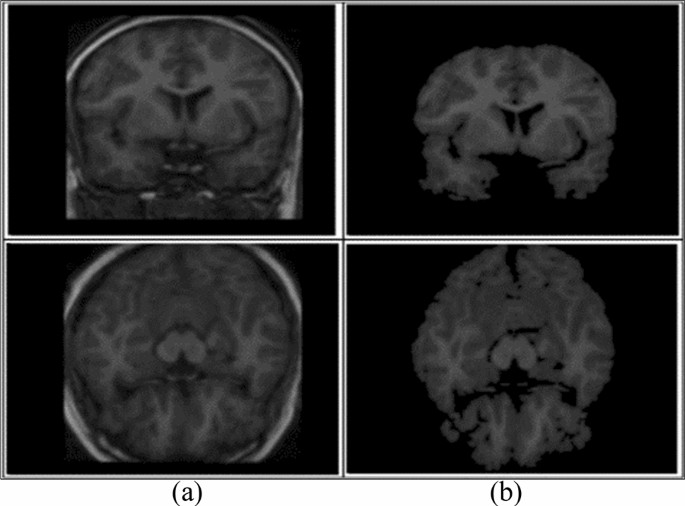
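Many overlap and classification measures used in this literature derive from the four confusion-matrix counts. The sketch below computes the Dice score, Jaccard index (IoU), sensitivity, specificity, and accuracy for binary masks. It assumes these are among the measures listed in Fig. 2, and the function names are hypothetical.

```python
import numpy as np


def confusion_counts(pred, truth):
    """Return (TP, TN, FP, FN) for two binary masks of equal shape."""
    p = np.asarray(pred, dtype=bool)
    t = np.asarray(truth, dtype=bool)
    if p.shape != t.shape:
        raise ValueError("masks must have the same shape")
    tp = int(np.sum(p & t))
    tn = int(np.sum(~p & ~t))
    fp = int(np.sum(p & ~t))
    fn = int(np.sum(~p & t))
    return tp, tn, fp, fn


def _ratio(num, den):
    # undefined ratios (e.g. Dice of two empty masks) are reported as NaN
    return num / den if den else float("nan")


def segmentation_metrics(pred, truth):
    tp, tn, fp, fn = confusion_counts(pred, truth)
    return {
        "dice": _ratio(2 * tp, 2 * tp + fp + fn),
        "jaccard": _ratio(tp, tp + fp + fn),      # also called IoU
        "sensitivity": _ratio(tp, tp + fn),       # recall / true positive rate
        "specificity": _ratio(tn, tn + fp),
        "accuracy": _ratio(tp + tn, tp + tn + fp + fn),
    }


if __name__ == "__main__":
    print(segmentation_metrics([1, 1, 0, 0, 1, 0], [1, 0, 0, 0, 1, 1]))
```

Reporting NaN for empty denominators is a design choice; some challenge evaluations instead define the Dice of two empty masks as 1.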

Preprocessing

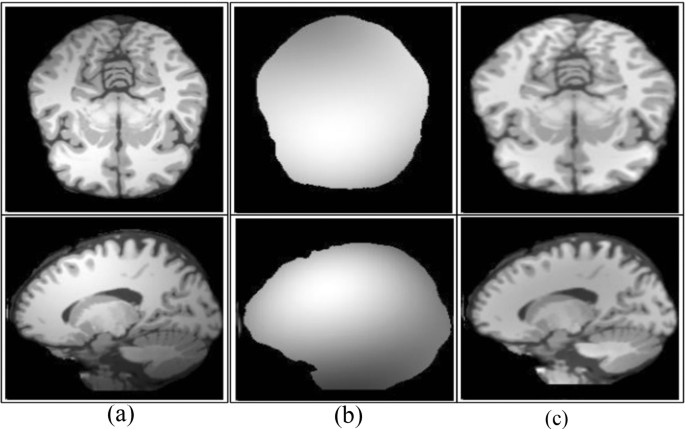

Preprocessing is a critical step [61] for extracting the required region. The 2D brain extraction algorithm (BEA) [62], the FMRIB software library [63], and BSE [64] are used to remove non-brain tissue, as shown in Fig. 3. The bias field, also called intensity inhomogeneity, is a key problem in MRI that arises from imperfections of the radio-frequency coil [65, 66]; its correction is shown in Fig. 4 [67]. Preprocessing methods such as linear, nonlinear [68], fixed, multi-scale, and pixel-based methods are used in different circumstances [69–72]. Small differences between normal and abnormal tissues, combined with noise [68] and artifacts, often make direct image analysis difficult [73, 74]. Consequently, automated techniques are adopted, in which computer software performs segmentation and eliminates the need for manual interaction [75, 76]; fully and semi-automated techniques are widely used [77, 78], and AFINITI, for example, is used for brain tumor segmentation [63]. Brain tumor segmentation results are listed in Table 2. The segmentation methods are divided into the following categories.

Conventional methods.

Machine learning methods.

MRI inhomogeneities related to noise include shading artifacts and partial volume effects.

Skull removal: (a) input, (b) skull removed [1]

Bias field correction: (a) input, (b) estimated, (c) corrected [67]

When different tissue types [61] occupy the same pixel, the result is called the partial volume effect [92]. The random noise in MRI [19, 93, 94] follows a Rician distribution [95]. In the literature, different filters, such as wavelet, anisotropic diffusion, and adaptive filters, have been presented to enhance edges [96]. An anisotropic diffusion filter is well suited to practical applications because of its low computational cost [97, 98]. When the noise level in the image is high, it is difficult to recover edges [99]. Intensity normalization is another part of the preprocessing phase [2, 100, 101], and the modified curvature diffusion equation (MCDE) [102] has been applied for this purpose. The Wiener filter is used to enhance local and spatial information in medical imaging [103]. Widely used preprocessing methods include N4ITK [104] for bias field correction, the median filter [104] for image smoothing, the anisotropic diffusion filter [105], image registration [106], sharpening [107], and skull stripping with the brain extraction tool (BET) [108].
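As an illustration of the anisotropic diffusion filtering mentioned above, the sketch below implements the classic Perona–Malik scheme on a 2D slice with NumPy. It is not necessarily the filter used in [97, 98, 105]; the parameter names and defaults (`n_iter`, `kappa`, `gamma`) are our own assumptions, and `gamma` is kept at or below 0.25 so this explicit four-neighbour scheme stays stable.

```python
import numpy as np


def anisotropic_diffusion(image, n_iter=10, kappa=20.0, gamma=0.2):
    """Perona-Malik diffusion of a 2D image with exponential conductance.

    kappa: gradient scale; differences much larger than kappa are treated
           as edges and barely diffused.
    gamma: step size; <= 0.25 keeps the 4-neighbour explicit scheme stable.
    """
    img = np.asarray(image, dtype=float).copy()
    for _ in range(n_iter):
        # differences to the four neighbours; zero at the border (no flux out)
        dn = np.zeros_like(img)
        ds = np.zeros_like(img)
        de = np.zeros_like(img)
        dw = np.zeros_like(img)
        dn[1:, :] = img[:-1, :] - img[1:, :]
        ds[:-1, :] = img[1:, :] - img[:-1, :]
        de[:, :-1] = img[:, 1:] - img[:, :-1]
        dw[:, 1:] = img[:, :-1] - img[:, 1:]
        # conductance g(d) = exp(-(d/kappa)^2): ~1 in flat areas, ~0 across edges
        flux = sum(np.exp(-((d / kappa) ** 2)) * d for d in (dn, ds, de, dw))
        img += gamma * flux
    return img
```

Because each neighbour exchange is symmetric, the scheme smooths noise inside homogeneous regions while preserving the mean intensity and leaving strong edges largely intact.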

Conventional methods

The conventional methods [46] are further categorized as follows:

Thresholding methods.

Region growing methods.

Watershed methods.

Segmentation

Segmentation extracts the required region from input images, so accurate segmentation of lesion regions is crucial [109]. Because manual segmentation is error-prone [110], semi- and fully automated methods are used [46]. Semi-automated segmentation of the tumor region achieves better results than manual segmentation [111, 112]. Semi-automated methods are further divided into three forms: initialization, evaluation, and feedback response [113, 114].

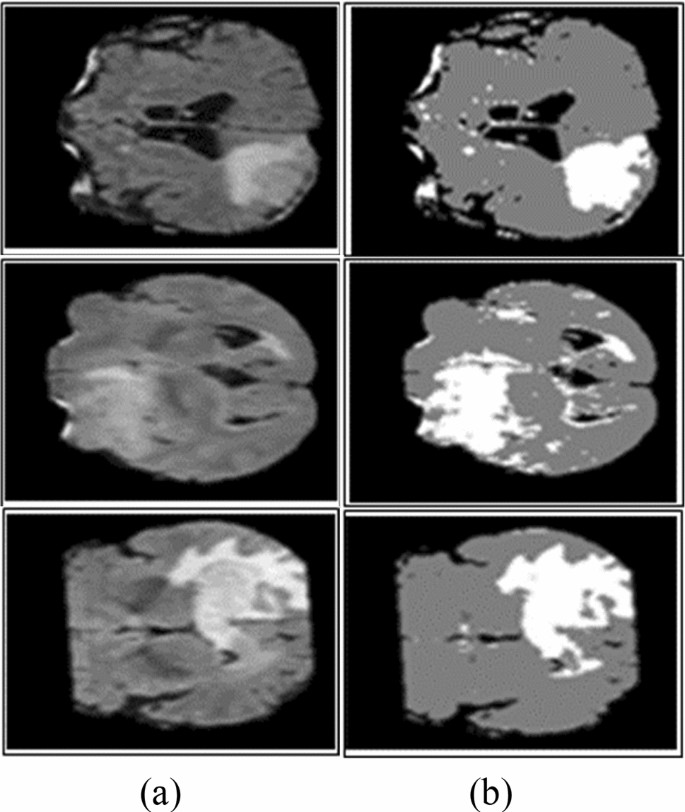

Thresholding methods

Thresholding is a basic yet powerful method for segmenting objects [18], but selecting an optimal threshold is difficult in low-contrast images. Histogram analysis is used to select threshold values based on image intensity [115]. Thresholding methods are classified as global or local. If high, homogeneous contrast or intensity difference exists between the objects and the background, global thresholding is the best option for segmentation; the optimal threshold value can be determined with a Gaussian distribution method [116]. Local thresholding methods are used when the threshold cannot be estimated from the whole-image histogram or a single threshold does not yield good segmentation [117]. In most cases, thresholding is applied at the first stage of segmentation, and multiple distinct regions are segmented within gray-level images, as shown in Fig. 5.

Segmentation using Otsu thresholding: (a) original images, (b) Otsu thresholding [82]
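Otsu's method, used for Fig. 5, picks the global threshold that maximizes the between-class variance of the intensity histogram. A minimal NumPy sketch, assuming a single-channel slice and a 256-bin histogram; `otsu_threshold` is a hypothetical name, not the implementation used in [82]. Working with integer pixel counts rather than normalized probabilities avoids division by a near-zero class weight.

```python
import numpy as np


def otsu_threshold(image, bins=256):
    """Return a threshold t such that foreground = image >= t (Otsu's criterion)."""
    values = np.asarray(image, dtype=float).ravel()
    hist, edges = np.histogram(values, bins=bins)
    centers = (edges[:-1] + edges[1:]) / 2
    w0 = np.cumsum(hist)               # pixels in bins 0..k (background class)
    w1 = w0[-1] - w0                   # pixels above bin k (foreground class)
    s0 = np.cumsum(hist * centers)     # intensity sums of each class
    s1 = s0[-1] - s0
    valid = (w0 > 0) & (w1 > 0)        # both classes must be non-empty
    between = np.zeros_like(centers)
    m0 = s0[valid] / w0[valid]
    m1 = s1[valid] / w1[valid]
    # between-class variance (up to a constant factor)
    between[valid] = w0[valid] * w1[valid] * (m0 - m1) ** 2
    k = int(np.argmax(between))
    return edges[k + 1]                # upper edge of the last background bin


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    slice_2d = np.concatenate([rng.normal(50, 10, 5000), rng.normal(150, 10, 5000)])
    t = otsu_threshold(slice_2d)
    mask = slice_2d >= t               # binary segmentation
    print(round(float(t), 1), int(mask.sum()))
```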

Region growing (RG) methods

In RG approaches, the image is partitioned into disjoint regions by examining the neighbors of each region's pixels and merging those that are homogeneous according to a predefined similarity criterion. Region growing may fail to provide good accuracy because of the partial volume effect [118, 119]; to overcome this effect, MRGM is preferred [86, 120]. Region growing combined with BA methods has also been introduced [87].
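A minimal seeded region-growing sketch, assuming a single seed, 4-connectivity, and a simple similarity criterion (absolute intensity difference from the seed within a tolerance `tol`). Methods such as MRGM use richer criteria; the names here are hypothetical.

```python
from collections import deque

import numpy as np


def region_grow(image, seed, tol):
    """Grow a 4-connected region from `seed` = (row, col).

    A neighbour joins the region if |I(neighbour) - I(seed)| <= tol.
    """
    img = np.asarray(image, dtype=float)
    rows, cols = img.shape
    ref = img[seed]
    mask = np.zeros(img.shape, dtype=bool)
    mask[seed] = True
    queue = deque([seed])              # breadth-first flood from the seed
    while queue:
        r, c = queue.popleft()
        for dr, dc in ((1, 0), (-1, 0), (0, 1), (0, -1)):
            nr, nc = r + dr, c + dc
            if 0 <= nr < rows and 0 <= nc < cols and not mask[nr, nc]:
                if abs(img[nr, nc] - ref) <= tol:
                    mask[nr, nc] = True
                    queue.append((nr, nc))
    return mask
```

Pixels with a similar intensity that are not connected to the seed are left out, which is what distinguishes region growing from plain thresholding.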

Watershed methods

Because MR images contain high proteinaceous-fluid intensity, watershed methods are used to analyze image intensity [114, 121, 122]. In the presence of noise [123], the watershed method leads to over-segmentation [124]. Accurate segmentation [125] can be obtained by combining the watershed transform with statistical merging methods [126, 127]. Watershed algorithms include the topological watershed [128], the image foresting transform (IFT) watershed [129], and the marker-based watershed [130].
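Marker-based watershed limits over-segmentation by flooding only from chosen seeds. The sketch below is a generic priority-flood implementation on a 2D elevation image (for example, a gradient magnitude), assuming the markers are supplied. It does not draw watershed lines and is not any of the specific algorithms cited in [128–130]; the function name is hypothetical.

```python
import heapq

import numpy as np


def marker_watershed(elevation, markers):
    """Marker-controlled watershed by priority flooding (4-connectivity).

    elevation: 2D array, e.g. a gradient-magnitude image.
    markers:   2D int array, 0 = unlabeled, > 0 = seed label.
    Every pixel reachable from a marker receives the label of the basin
    that floods it first (lowest elevation first).
    """
    elev = np.asarray(elevation, dtype=float)
    labels = np.array(markers, dtype=int, copy=True)
    rows, cols = elev.shape
    heap = []
    counter = 0  # tie-breaker: equal elevations are processed in FIFO order
    for r, c in zip(*np.nonzero(labels)):
        heapq.heappush(heap, (elev[r, c], counter, r, c))
        counter += 1
    while heap:
        _, _, r, c = heapq.heappop(heap)
        for dr, dc in ((1, 0), (-1, 0), (0, 1), (0, -1)):
            nr, nc = r + dr, c + dc
            if 0 <= nr < rows and 0 <= nc < cols and labels[nr, nc] == 0:
                labels[nr, nc] = labels[r, c]
                heapq.heappush(heap, (elev[nr, nc], counter, nr, nc))
                counter += 1
    return labels
```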

A comprehensive literature review [131] on brain tumor detection shows that there is room for improvement [72]. Because brain tumors appear in variable sizes and shapes, existing segmentation approaches require further improvement. To overcome the limitations of existing methods, enhancement [132–134] and segmentation [135–137] are significant in tumor detection.

Feature extraction methods

Feature extraction approaches [12, 138–140] used for classification include GLCM [15, 141, 142], geometrical features (area, perimeter, and circularity) [15], first-order statistical (FOS) features, GWT [11, 143, 144], Hu moment invariants (HMI) [145], multifractal features [146], 3D Haralick features [147], LBP [148], HOG [14, 137], texture and shape features [82, 143, 149, 150], co-occurrence matrix, gradient, and run-length matrix features [151], SFTA, curvature features [152, 153], Gabor-like multi-scale texton features [154], and Gabor wavelet and statistical features [142, 143]. Table 3 summarizes the feature extraction methods.
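To make the GLCM entry concrete, the sketch below builds a symmetric, normalized co-occurrence matrix for a single pixel offset and derives three common Haralick-style statistics. The quantization to 8 grey levels, the single offset, and the function names are assumptions for illustration; published pipelines typically combine several offsets and angles.

```python
import numpy as np


def glcm(image, levels=8, offset=(0, 1)):
    """Symmetric, normalised grey-level co-occurrence matrix for one offset (dr, dc)."""
    img = np.asarray(image, dtype=float)
    lo, hi = img.min(), img.max()
    if hi > lo:
        # quantise intensities to integer levels 0 .. levels-1
        q = np.clip(np.floor((img - lo) / (hi - lo) * levels).astype(int), 0, levels - 1)
    else:
        q = np.zeros(img.shape, dtype=int)
    dr, dc = offset
    rows, cols = q.shape
    # a[i, j] and b[i, j] are pixel pairs separated by (dr, dc), both inside the image
    a = q[max(0, -dr):rows - max(0, dr), max(0, -dc):cols - max(0, dc)]
    b = q[max(0, dr):rows - max(0, -dr), max(0, dc):cols - max(0, -dc)]
    counts = np.zeros((levels, levels), dtype=float)
    np.add.at(counts, (a.ravel(), b.ravel()), 1.0)
    counts = counts + counts.T  # count each pair in both directions
    return counts / counts.sum()


def glcm_features(p):
    """A few Haralick-style statistics of a normalised GLCM p."""
    i, j = np.indices(p.shape)
    return {
        "contrast": float(np.sum(p * (i - j) ** 2)),
        "asm": float(np.sum(p ** 2)),  # angular second moment
        "homogeneity": float(np.sum(p / (1.0 + (i - j) ** 2))),
    }
```

A uniform region gives zero contrast and maximal homogeneity, while a fine checkerboard texture gives high contrast; the resulting feature dictionary is what would be passed on to a classifier.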

Feature selection/reduction methods

In machine learning and computer vision applications, high-dimensional features increase system execution time and memory requirements. Therefore, feature selection methods are required to distinguish relevant from non-relevant features and minimize redundant information [168]. Optimal feature extraction is still a challenging task [47]. The single-point heuristic search method, ILS, genetic algorithm (GA) [169], GA + fuzzy rough set [170], hybrid wrapper-filter [171], TRSFFQR, tolerance rough set (TRS), firefly algorithm (FA) [172], minimum redundancy maximum relevance (mRMR) [152], Kullback–Leibler divergence measure [173], iterative sparse representation [174], recursive feature elimination (RFE) [175], CSO-SIFT [176], entropy [11, 177, 178], PCA [179], and LDA [180] are used to remove redundant features. A summary of classification methods is shown in Table 4.
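PCA, one of the reduction methods listed above, can be sketched with a singular value decomposition of the mean-centred feature matrix. The sketch assumes one feature vector per row; `pca_reduce` is a hypothetical helper that returns the projected features and the fraction of variance each kept component explains.

```python
import numpy as np


def pca_reduce(features, n_components):
    """Project row-wise feature vectors onto the top principal components."""
    x = np.asarray(features, dtype=float)
    centered = x - x.mean(axis=0)
    # rows of vt are principal directions, ordered by decreasing variance
    _, s, vt = np.linalg.svd(centered, full_matrices=False)
    components = vt[:n_components]
    explained = (s ** 2) / np.sum(s ** 2)
    return centered @ components.T, explained[:n_components]


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    feats = rng.normal(size=(50, 10))      # e.g. 50 images x 10 texture features
    reduced, ratio = pca_reduce(feats, 3)
    print(reduced.shape, ratio.round(3))
```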

Classification methods

Classification approaches categorize input data into different classes; training is performed on known samples and testing on unknown samples [16, 24, 25, 181–192]. Machine learning is widely used to classify tumors into appropriate classes, e.g., tumor substructures (complete/non-enhanced/enhanced) [193], tumor and non-tumor [26], and benign and malignant tumors [15, 47, 163, 194, 195]. KNN [196], SVM, the nearest subspace classifier, and the representation classifier [143] are supervised methods, whereas FCM [197, 198], hidden Markov random fields [199], self-organizing maps [101], and SSAE [200] are unsupervised.
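As a minimal example of a supervised classifier from the list above, here is a k-nearest-neighbour (KNN) sketch with Euclidean distance and majority voting. The feature vectors and the "tumor"/"non-tumor" labels in the usage example are made-up placeholders, not data from any cited study.

```python
from collections import Counter

import numpy as np


def knn_predict(train_x, train_y, query_x, k=3):
    """Label each query row by majority vote among its k nearest training rows."""
    train_x = np.asarray(train_x, dtype=float)
    queries = np.atleast_2d(np.asarray(query_x, dtype=float))
    predictions = []
    for q in queries:
        dist = np.linalg.norm(train_x - q, axis=1)     # Euclidean distances
        nearest = np.argsort(dist, kind="stable")[:k]  # indices of the k closest
        votes = Counter(train_y[i] for i in nearest)
        predictions.append(votes.most_common(1)[0][0])
    return predictions


if __name__ == "__main__":
    x = [[0, 0], [0, 1], [1, 0], [5, 5], [5, 6], [6, 5]]   # hypothetical features
    y = ["non-tumor"] * 3 + ["tumor"] * 3
    print(knn_predict(x, y, [[0.2, 0.2], [5.5, 5.5]]))
```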

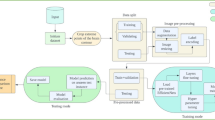

Recent trends in medical imaging to detect malignancy

Deep learning and quantum machine learning methodologies are widely used for tumor localization and classification [201]. In these techniques, automatic feature learning helps discriminate complicated patterns [186, 202–213].

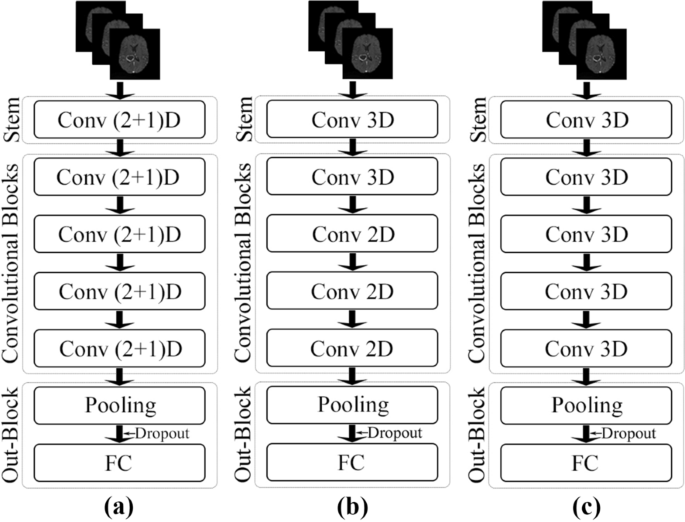

Deep learning methods

A variety of state-of-the-art deep learning methods are used to learn from data in the medical domain [214], including CNN [215, 216], deep CNN, cascaded CNN [217], 3D-CNN [218], convolutional encoder networks, LSTM, CRF [218], U-Net CNN [219], dual-force CNN [220], and WRN-PPNet [221].

The brain tumor classification problem has been addressed with an LSTM model. In this method, input MRI images are preprocessed with N4ITK, smoothed with a 5 × 5 Gaussian filter, and passed to a four-layer LSTM model whose layers have 200, 225, 200, and 225 hidden units, respectively. The model was tested on the BRATS (2012–2015 and 2018) series and the SISS-2015 benchmark dataset [222]. Another framework is based on the fusion of different MRI sequences: the fused sequence provides more information than a single sequence and is supplied to a 23-layer CNN model trained on the BRATS series for glioma detection [16]. A 14-layer CNN model has been trained from scratch on six BRATS series datasets to detect glioma and stroke lesions [25]. In another method, classification is performed with ELM and RELM classifiers and tested on the BRATS 2012–2015 series [189]. A 9-layer CNN model trained from scratch to classify tumor types such as pituitary tumor, glioma, and meningioma achieved a classification accuracy of 98.71% [223]. Another model, trained from scratch on 696 publicly available T1-weighted sequences, provides an accuracy greater than 99% for tumor classification [224]. The existing methods are summarized in Table 5.
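To show how four stacked LSTM layers with 200, 225, 200, and 225 hidden units pass data along, the NumPy sketch below runs a forward pass with random, untrained weights. Treating each image row as one time step is our assumption, not a detail given in [222], and the helper names are hypothetical; a real model would be trained and followed by a classification layer.

```python
import numpy as np


def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))


def lstm_layer(inputs, hidden_size, rng):
    """Run one LSTM layer over a (time_steps, features) sequence.

    Weights are random and untrained: this only illustrates the data flow.
    """
    _, input_size = inputs.shape
    scale = 1.0 / np.sqrt(hidden_size)
    # one weight matrix for all four gates, applied to [x_t, h_{t-1}]
    w = rng.uniform(-scale, scale, size=(4 * hidden_size, input_size + hidden_size))
    b = np.zeros(4 * hidden_size)
    h = np.zeros(hidden_size)
    c = np.zeros(hidden_size)
    outputs = []
    for x_t in inputs:
        z = w @ np.concatenate([x_t, h]) + b
        i, f, g, o = np.split(z, 4)        # input, forget, candidate, output
        i, f, o = sigmoid(i), sigmoid(f), sigmoid(o)
        c = f * c + i * np.tanh(g)         # update the cell state
        h = o * np.tanh(c)                 # new hidden state
        outputs.append(h)
    return np.stack(outputs)


def stacked_lstm(sequence, hidden_sizes=(200, 225, 200, 225), seed=0):
    """Feed a sequence through LSTM layers of the given sizes; return the last hidden state."""
    rng = np.random.default_rng(seed)
    out = np.asarray(sequence, dtype=float)
    for size in hidden_sizes:
        out = lstm_layer(out, size, rng)
    return out[-1]


if __name__ == "__main__":
    slice_2d = np.random.default_rng(1).normal(size=(32, 32))  # stand-in for a smoothed slice
    print(stacked_lstm(slice_2d).shape)  # (225,)
```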

Although much work has been done on deep learning methods, many challenges remain. Current methods do not achieve the best results on every substructure of the tumor region: for example, when the accuracy for the complete tumor increases, the accuracy for the core and the enhanced tumor decreases (as shown in Table 5).

Brain tumor detection using transfer learning

Manual detection of brain tumors is difficult because of asymmetrical lesion shapes, variable locations, and unclear boundaries. Therefore, a superpixel-based transfer learning model has been suggested, in which the pre-trained VGG-19 model classifies glioma grades (high/low); the method achieved an AUC of 0.99 on the BRATS 2019 series [232]. Three pre-trained models, i.e., VGG, GoogLeNet, and AlexNet, have been applied to brain datasets to classify glioma, pituitary tumor, and meningioma. In this method, data augmentation is applied to MRI slices to generalize the outcomes and reduce overfitting by increasing the quantity of input data; after experimental analysis with the different pre-trained models, the authors concluded that VGG-16 provides greater than 98% classification accuracy [233]. Brain tumors have also been classified into glioma, pituitary tumor, and meningioma using two types of networks, i.e., a visual attention network and a CNN [234]. Pre-trained VGG-16, AlexNet, and GoogLeNet have been investigated for brain tumor analysis: frequency-domain techniques are first applied to the input slices to improve contrast, the contrast-enhanced images are passed to the next phase, and pre-trained VGG-16 provides the best classification results [235]. A Laplacian filter with a multi-layered dictionary model has been used to recognize brain tumors, and the model performed better than existing works [236]. Another method consists of three major steps: preprocessing, data augmentation, and segmentation and classification with transfer learning models, in which ResNet-50, DenseNet-201, MobileNet-v2, and Inception-v3 classify brain lesions with 0.95 IoU [237].
Deep features have been extracted from the pre-trained AlexNet model, which has eight layers, five convolutional and three fully connected, and a softmax layer classifies the different types of brain lesions [238]. The pre-trained ResNet-50 model with global average pooling is used to reduce vanishing-gradient and overfitting issues. Its performance was evaluated on three distinct types of brain imaging benchmark samples containing 3064 input images, and it achieved an accuracy of 97.08%, the highest compared with the latest existing works [239]. A deep CNN based on transfer learning, in which ResNet, Xception, and MobileNet-v2 extract deep features for tumor classification from MRI images, achieved an accuracy of up to 98% [240]. In another method, the GrabCut method is used to segment brain lesions. Hand-crafted LBP features (dimension 1 × 20) and HOG features (dimension 1 × 100) are then extracted and serially fused with deep features (dimension 1 × 1000) extracted from the pre-trained VGG-19 model, and the final fused feature vector of length 1 × is supplied to different kinds of classifiers. The experimental analysis shows that the fused feature vector gives better results than existing work in this domain [16, 187]. The global thresholding method has been applied to segment the actual lesion region; texture features such as LBP and GWF are then extracted from the segmented images and fused into a single feature vector, which is provided to classifiers to distinguish healthy from unhealthy images [26]. Another procedure has two key stages.
In the first stage, brain lesions are enhanced and segmented using spatial-domain approaches; deep features are then extracted with the pre-trained AlexNet and GoogLeNet models, and the score vector obtained from the softmax layer is supplied to classifiers to discriminate between glioma and non-glioma brain images. The BRATS series datasets were used to test this technique's efficiency [241]. A superpixel approach has been suggested for brain tumor segmentation, in which Gabor wavelet features are extracted from the segmented images and given to SVM and CRF to discriminate between healthy and unhealthy MRI images [242]. In another approach, features extracted from transfer learning models such as Inception-v3 and DenseNet-201 are merged serially into a single vector and passed to softmax for tumor classification; in addition, features from different dense blocks of DenseNet-201 are extracted and classified with softmax. The approach achieved a 99% accuracy rate, and the evaluation shows that the fused vector outperformed the single vector [243]. A novel U-Net model combined with a ResNet model has been trained on input MRI images, and the salient features derived from the images are fed to classifiers; this method was tested on the BRATS 2017, 2018, and 2019 datasets [244]. Elsewhere, the tumor region is localized on FLAIR sequences of the BRATS 2012 series: the skull is removed from the input images, a bilateral noise-reduction filter is applied, texton features are extracted during segmentation using the superpixel approach, and a leave-out validation technique is used for brain tumor classification. This strategy yielded an 88% Dice score [245]. A deep segmentation model has been designed with two major parts, an encoder and a decoder, in which a CNN in the encoder extracts spatial information.
To obtain a full-resolution probability map, the semantic information is passed to the decoder. Based on U-Net, different CNNs such as ResNet, DenseNet, and NASNet are used for feature extraction. This model was tested successfully on the BRATS 2019 series and achieved a Dice score of 0.84 [246]. In another work, a wavelet homomorphic filter has been used for noise removal, and the tumor-infected region has been localized with an improved YOLOv2 model [230]. The transfer learning methods are summarized in Table 6.
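Several of the transfer-learning pipelines above end with global average pooling and a softmax layer. The sketch below shows only that head: it averages each channel of a (channels, height, width) feature map and applies a linear layer plus softmax. The weights, bias, and feature maps in the example are hypothetical stand-ins for what a pre-trained backbone such as ResNet-50 and a trained classifier would provide.

```python
import numpy as np


def global_average_pool(feature_maps):
    """Average each channel of a (channels, height, width) array -> (channels,)."""
    return np.asarray(feature_maps, dtype=float).mean(axis=(1, 2))


def softmax(logits):
    z = np.asarray(logits, dtype=float)
    z = z - z.max()          # shift for numerical stability; result is unchanged
    e = np.exp(z)
    return e / e.sum()


def classify(feature_maps, weights, bias, class_names):
    """Pooled features -> linear layer -> softmax -> most probable class name."""
    pooled = global_average_pool(feature_maps)
    probs = softmax(weights @ pooled + bias)
    return class_names[int(np.argmax(probs))], probs


if __name__ == "__main__":
    maps = np.stack([np.full((4, 4), 1.0), np.full((4, 4), 3.0)])  # 2 channels
    w = np.array([[1.0, 0.0], [0.0, 1.0], [0.0, 0.0]])             # hypothetical weights
    label, probs = classify(maps, w, np.zeros(3), ["glioma", "meningioma", "pituitary"])
    print(label)  # meningioma
```

Pooling each channel to a single number keeps the head small regardless of input resolution, which is one reason it is paired with pre-trained backbones.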

Brain tumor detection using quantum machine learning

Superposition of quantum states, quantum parallelism and entanglement can all be used to establish quantum computational supremacy [ 258 ]. However, exploiting the entanglement of quantum features for efficient computation is a difficult undertaking because computational resources for executing quantum algorithms are scarce. Despite the progress of quantum techniques, quantum-inspired models that are built on qubits but run on classical computers cannot fully exploit the benefits of quantum states and entanglement. Quantum artificial neural networks (QANNs) have been found to be effective in a variety of computing tasks, including classification and pattern recognition, owing to the intrinsic properties supplied by quantum physics [ 259 ]. On the other hand, quantum models based on genuine quantum computers use quantum bits (qubits) as a simple representation of matrices and linear functions. However, the computational complexity of quantum-inspired neural network (QINN) designs increases several fold because of the complicated and time-consuming quantum back-propagation algorithm [ 260 ]. Automatic segmentation of brain lesions from magnetic resonance imaging (MRI) removes the onerous manual work of human specialists or radiologists and greatly aids brain tumor detection. Brain tumor diagnosis, however, is complicated by large variations in size, shape, orientation and illumination, overlapping grey levels, and heterogeneity. Researchers in the computer vision field have therefore placed considerable emphasis in recent years on building robust and efficient automated segmentation approaches. One such line of research focuses on a quantum fully self-supervised learning process, defined using qutrits, for timely and effective lesion segmentation. Its main goal is to speed up the convergence of QFS-Net and make it suitable for computerized segmentation of brain lesions without the need for any supervision. 
To leverage the properties of quantum correlation, the suggested quantum fully self-supervised neural network (QFS-Net) model uses qutrits (three-level quantum states) for segmentation of brain lesions [ 261 ]. QFS-Net replaces the sophisticated quantum back-propagation method used in supervised QINN networks with a novel fully self-supervised, qutrit-based counter-propagation method. This approach allows quantum states to propagate iteratively between the layers of the network.
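To make the qutrit idea concrete: a qutrit is a unit vector in a three-dimensional complex space spanned by the basis states |0>, |1> and |2>, and the probability of each measurement outcome is the squared magnitude of the corresponding amplitude. The NumPy sketch below shows only this generic representation and a cyclic shift gate; it is not the QFS-Net architecture of [ 261 ], and the function names are hypothetical.

```python
import numpy as np

def qutrit(amplitudes):
    """Return a normalized qutrit state over the basis |0>, |1>, |2>."""
    psi = np.asarray(amplitudes, dtype=complex)
    return psi / np.linalg.norm(psi)

def probabilities(psi):
    """Born rule: probability of each basis outcome is |amplitude|^2."""
    return np.abs(psi) ** 2

# Cyclic shift gate X3: |0> -> |1>, |1> -> |2>, |2> -> |0> (a unitary permutation matrix)
X3 = np.array([[0, 0, 1],
               [1, 0, 0],
               [0, 1, 0]], dtype=complex)

# Equal-weight superposition of the three levels (with different phases)
psi = qutrit([1, 1j, -1])
p = probabilities(psi)            # each outcome has probability 1/3

shifted = X3 @ qutrit([1, 0, 0])  # basis state |0> becomes |1>
```

Compared with a qubit, which has two basis states, each qutrit carries three amplitudes; this extra level is what qutrit-based models use to encode richer per-unit state.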

Limitations of existing machine/deep learning methods

In this survey, recent literature on the detection of brain tumors is reviewed, and the review indicates that there is still room for improvement. Noise is introduced into MR images during acquisition, and removing it is an intricate task [ 2 , 262 , 263 , 264 ]. Accurate segmentation is difficult [ 265 ], as brain tumors have tentacle-like and diffuse structures [ 43 , 193 , 220 , 266 ]. Selecting and extracting optimal features and choosing an appropriate number of training/testing samples for better classification are also important tasks [ 191 , 192 ]. Deep learning models are gaining attention because they learn features automatically; however, they require high computing power and large memory. Therefore, there is still a need to design a lightweight model that provides high accuracy (ACC) in less computation time. Some existing machine learning methods and their limitations are listed in Table 7 .

The following are the main challenges of brain tumor detection.

Glioma and stroke lesions are not well contrasted. They have tentacle-like and diffuse structures that make the segmentation and classification processes more challenging [ 270 ].

Detecting small-volume tumors is still a challenge, as they can be mistaken for normal regions [ 269 , 273 ].

Some existing methods work well only for the complete tumor region and do not give good results for the other regions (enhanced and non-enhanced), and vice versa [ 267 , 271 , 274 ].
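Because a method can score well on the complete tumor yet poorly on its sub-regions, segmentation results are usually reported per region with the Dice similarity coefficient, DSC = 2|A ∩ B| / (|A| + |B|), the metric behind the Dice scores quoted earlier in this section. The sketch below assumes BraTS-style integer label maps (1 = necrotic/non-enhancing tumor core, 2 = edema, 4 = enhancing tumor, as in BraTS 2017 to 2020); the region grouping and function names are illustrative assumptions, not taken from the cited works.

```python
import numpy as np

# Assumed BraTS-style label convention; each region is a set of integer labels
REGIONS = {
    "whole_tumor": (1, 2, 4),
    "enhancing": (4,),
    "non_enhancing_core": (1,),
}

def dice(pred_mask, true_mask, eps=1e-8):
    """Dice similarity coefficient between two boolean masks."""
    intersection = np.logical_and(pred_mask, true_mask).sum()
    return (2.0 * intersection + eps) / (pred_mask.sum() + true_mask.sum() + eps)

def per_region_dice(pred_labels, true_labels):
    """Dice score for each tumor region defined in REGIONS."""
    return {
        name: dice(np.isin(pred_labels, labels), np.isin(true_labels, labels))
        for name, labels in REGIONS.items()
    }

# Toy 1-D label maps: the prediction finds the tumor extent but swaps the sub-regions
truth = np.array([0, 2, 2, 1, 1, 4, 4, 0])
pred = np.array([0, 2, 2, 4, 4, 1, 1, 0])
scores = per_region_dice(pred, truth)
```

In this toy case the whole-tumor Dice is perfect while the enhancing and non-enhancing scores are essentially zero, which is exactly the kind of imbalance described in the challenge above.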

Research findings and discussion

After a comprehensive review of state-of-the-art existing methods, the following challenges were identified:

Brain tumors can grow rapidly; therefore, diagnosing a tumor at an early stage is a demanding task.

Brain tumor segmentation is difficult owing to the following factors.

Intensity inhomogeneity in the MRI image owing to magnetic field fluctuations in the coil.

Gliomas are infiltrative and have fuzzy borders, which makes them more difficult to segment [ 43 ].

Stroke lesion segmentation is a very intricate task, as stroke lesions appear in complex shapes and with ambiguous boundaries and intensity variations.

Extracting and selecting optimal features is another difficult step in the accurate classification of brain tumors.
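The difficulty caused by magnetic field fluctuations in the coil is commonly modelled as a smooth multiplicative bias field, I = B · T, where T is the true tissue intensity. A crude homomorphic correction estimates log B by heavily low-pass filtering log I and subtracting it. The NumPy sketch below illustrates only this idea, using a box blur, an illustrative radius and hypothetical function names; practical pipelines use dedicated methods such as N4 bias field correction.

```python
import numpy as np

def box_blur(img, radius):
    """Separable mean filter with reflective borders; output has img's shape."""
    kernel = np.ones(2 * radius + 1) / (2 * radius + 1)
    padded = np.pad(img, radius, mode="reflect")
    # Blur along rows (axis 1), then along columns (axis 0); 'valid' trims the padding
    rows = np.apply_along_axis(lambda r: np.convolve(r, kernel, mode="valid"), 1, padded)
    return np.apply_along_axis(lambda c: np.convolve(c, kernel, mode="valid"), 0, rows)

def correct_bias(img, radius=8, eps=1e-6):
    """Crude homomorphic bias correction assuming I = B * T with B smooth."""
    log_img = np.log(img + eps)
    log_bias = box_blur(log_img, radius)   # low-pass estimate of log B (plus mean tissue level)
    # Remove the smooth component, then restore the global intensity level
    return np.exp(log_img - log_bias + log_bias.mean())

# Toy example: uniform tissue multiplied by a smooth left-to-right bias ramp
tissue = np.full((64, 64), 100.0)
bias = np.exp(np.linspace(-0.5, 0.5, 64))[None, :]
observed = tissue * bias
corrected = correct_bias(observed, radius=8)
```

The radius trades off following the bias against erasing genuine anatomy: too small a window treats real tissue contrast as bias and flattens it.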

Accurate brain tumor detection is still very demanding because of variations in tumor appearance, size, shape and structure. Although tumor segmentation methods have shown high potential for analyzing and detecting tumors in MR images, many improvements are still required to accurately segment and classify the tumor region. Existing work still has limitations in identifying the substructures of the tumor region and in classifying healthy and unhealthy images.

In short, this survey covers the important aspects of, and the latest work on, brain tumor detection, together with their limitations and challenges. It should help researchers quickly develop an understanding of the field and pursue new research in the right direction.

Deep learning methods have contributed significantly, but a generic technique is still needed. These methods give better results when training and testing images share similar acquisition characteristics (intensity range and resolution); however, even a slight variation between training and testing images directly affects their robustness. In future work, research can be conducted to detect brain tumors more accurately using real patient data from any source, i.e., images acquired with different scanners. Handcrafted and deep features can be fused to improve the classification results. Similarly, lightweight methods such as quantum machine learning may play a significant role in improving accuracy and efficiency, which would save radiologists' time and could help increase patient survival rates.
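One common way to reduce the sensitivity to intensity-range differences between scanners noted above is to standardize each MR volume to zero mean and unit variance within the brain region before training and testing. The sketch below is a generic z-score normalization, not a method from the reviewed papers; the default brain mask (nonzero voxels after skull stripping) and the function name are assumptions, and it does not address differences in resolution.

```python
import numpy as np

def zscore_normalize(volume, brain_mask=None):
    """Standardize intensities to zero mean and unit variance over the brain mask."""
    volume = volume.astype(np.float64)
    if brain_mask is None:
        brain_mask = volume > 0          # assumption: background is zero after skull stripping
    voxels = volume[brain_mask]
    std = voxels.std()
    normalized = np.zeros_like(volume)
    normalized[brain_mask] = (voxels - voxels.mean()) / (std if std > 0 else 1.0)
    return normalized

# Two toy "scans" of the same anatomy with different scanner gain and offset
rng = np.random.default_rng(1)
anatomy = rng.uniform(1.0, 2.0, size=(8, 8, 8))
scan_a = 300.0 * anatomy
scan_b = 1200.0 * anatomy + 50.0
norm_a = zscore_normalize(scan_a)
norm_b = zscore_normalize(scan_b)
```

Because z-scoring removes any positive linear gain and offset, the two toy scans map to identical normalized volumes; nonlinear differences between scanners are not removed by this step.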

Park JG, Lee C (2009) Skull stripping based on region growing for magnetic resonance brain images. Neuroimage 47:1394–1407

Khan MA, Lali IU, Rehman A, Ishaq M, Sharif M, Saba T et al (2019) Brain tumor detection and classification: A framework of marker-based watershed algorithm and multilevel priority features selection. Microsc Res Tech 82:909–922

Raza M, Sharif M, Yasmin M, Masood S, Mohsin S (2012) Brain image representation and rendering: a survey. Res J Appl Sci Eng Technol 4:3274–3282

Watson C, Kirkcaldie M, Paxinos G (2010) The brain: an introduction to functional neuroanatomy. Academic Press, New York

Wikipedia (2015) Brain size. https://en.wikipedia.org/wiki/Brain_size. Accessed 19 Oct 2019

Dubin MW (2013) How the brain works. Wiley, New York

Koziol LF, Budding DE, Chidekel D (2012) From movement to thought: executive function, embodied cognition, and the cerebellum. Cerebellum 11:505–525

Knierim J (1997) Neuroscience Online Chapter 5: Cerebellum. The University of Texas Health Science Center, Houston

Nuñez MA, Miranda JCF, de Oliveira E, Rubino PA, Voscoboinik S, Recalde R et al (2019) Brain stem anatomy and surgical approaches. Comprehensive overview of modern surgical approaches to intrinsic brain tumors. Elsevier, Amsterdam, pp 53–105

DeAngelis LM (2001) Brain tumors. N Engl J Med 344:114–123

Amin J, Sharif M, Raza M, Saba T, Sial R, Shad SA (2020) Brain tumor detection: a long short-term memory (LSTM)-based learning model. Neural Comput Appl 32:15965–15973

Sajjad S, Hanan Abdullah A, Sharif M, Mohsin S (2014) Psychotherapy through video game to target illness related problematic behaviors of children with brain tumor. Curr Med Imaging 10:62–72

Yasmin M, Sharif M, Masood S, Raza M, Mohsin S (2012) Brain image reconstruction: a short survey. World Appl Sci J 19:52–62

Amin J, Sharif M, Raza M, Yasmin M (2018) Detection of brain tumor based on features fusion and machine learning. J Ambient Intell Human Comput:1–17

Amin J, Sharif M, Yasmin M, Fernandes SL (2020) A distinctive approach in brain tumor detection and classification using MRI. Pattern Recogn Lett 139:118–127

Saba T, Mohamed AS, El-Affendi M, Amin J, Sharif M (2020) Brain tumor detection using fusion of hand crafted and deep learning features. Cogn Syst Res 59:221–230

Sharif M, Amin J, Nisar MW, Anjum MA, Muhammad N, Shad SA (2020) A unified patch based method for brain tumor detection using features fusion. Cogn Syst Res 59:273–286

Sharif M, Tanvir U, Munir EU, Khan MA, Yasmin M (2018) Brain tumor segmentation and classification by improved binomial thresholding and multi-features selection. J Ambient Intell Human Comput:1–20

Sharif MI, Li JP, Khan MA, Saleem MA (2020) Active deep neural network features selection for segmentation and recognition of brain tumors using MRI images. Pattern Recogn Lett 129:181–189

Sharif MI, Li JP, Naz J, Rashid I (2020) A comprehensive review on multi-organs tumor detection based on machine learning. Pattern Recogn Lett 131:30–37

Ohgaki H, Kleihues P (2013) The definition of primary and secondary glioblastoma. Clin Cancer Res 19:764–772

Cachia D, Kamiya-Matsuoka C, Mandel JJ, Olar A, Cykowski MD, Armstrong TS et al (2015) Primary and secondary gliosarcomas: clinical, molecular and survival characteristics. J Neurooncol 125:401–410

Amin J, Sharif M, Gul N, Yasmin M, Shad SA (2020) Brain tumor classification based on DWT fusion of MRI sequences using convolutional neural network. Pattern Recogn Lett 129:115–122

Sharif M, Amin J, Raza M, Yasmin M, Satapathy SC (2020) An integrated design of particle swarm optimization (PSO) with fusion of features for detection of brain tumor. Pattern Recogn Lett 129:150–157

Amin J, Sharif M, Anjum MA, Raza M, Bukhari SAC (2020) Convolutional neural network with batch normalization for glioma and stroke lesion detection using MRI. Cogn Syst Res 59:304–311

Amin J, Sharif M, Raza M, Saba T, Anjum MA (2019) Brain tumor detection using statistical and machine learning method. Comput Methods Progr Biomed 177:69–79

Amin J, Sharif M, Gul N, Raza M, Anjum MA, Nisar MW et al (2020) Brain tumor detection by using stacked autoencoders in deep learning. J Med Syst 44:32

Johnson DR, Guerin JB, Giannini C, Morris JM, Eckel LJ, Kaufmann TJ (2017) 2016 updates to the WHO brain tumor classification system: what the radiologist needs to know. Radiographics 37:2164–2180

Wright E, Amankwah EK, Winesett SP, Tuite GF, Jallo G, Carey C et al (2019) Incidentally found brain tumors in the pediatric population: a case series and proposed treatment algorithm. J Neurooncol 141:355–361

Pellegrino MP, Moreira F, Conforto AB (2021) Ischemic stroke. Neurocritical care for neurosurgeons. Springer, New York, pp 517–534

Garrick R, Rotundo E, Chugh SS, Brevik TA (2021) Acute kidney injury in the elderly surgical patient. Emergency general surgery in geriatrics. Springer, New York, pp 205–227

Lehmann ALCF, Alfieri DF, de Araújo MCM, Trevisani ER, Nagao MR, Pesente FS, Gelinski JR, de Freitas LB, Flauzino T, Lehmann MF, Lozovoy MAB (2021) Carotid intima media thickness measurements coupled with stroke severity strongly predict short-term outcome in patients with acute ischemic stroke: a machine learning study. Metab Brain Dis 36:1747–1761

Scott AM (2005) PET imaging in oncology. In: Bailey DL, Townsend DW, Valk PE, Maisey MN (eds) Positron emission tomography. Springer, London, pp 311–325

Wong TZ, van der Westhuizen GJ, Coleman RE (2002) Positron emission tomography imaging of brain tumors. Neuroimaging Clin 12:615–626

Wong KP, Feng D, Meikle SR, Fulham MJ (2002) Segmentation of dynamic PET images using cluster analysis. IEEE Trans Nuclear Sci 49:200–207

Brenner DJ, Hall EJ (2007) Computed tomography—an increasing source of radiation exposure. N Engl J Med 357:2277–2284

Smith-Bindman R, Lipson J, Marcus R, Kim K-P, Mahesh M, Gould R et al (2009) Radiation dose associated with common computed tomography examinations and the associated lifetime attributable risk of cancer. Arch Intern Med 169:2078–2086

Fink JR, Muzi M, Peck M, Krohn KA (2015) Multimodality brain tumor imaging: MR imaging, PET, and PET/MR imaging. J Nucl Med 56:1554–1561

Hess CP, Purcell D (2012) Exploring the brain: Is CT or MRI better for brain imaging. UCSF Dep Radiol Biomed Imaging 11:1–11

Saad NM, Bakar SARSA, Muda AS, Mokji MM (2015) Review of brain lesion detection and classification using neuroimaging analysis techniques. J Teknol 74:1–13

Huang M, Yang W, Wu Y, Jiang J, Chen W, Feng Q (2014) Brain tumor segmentation based on local independent projection-based classification. IEEE Trans Biomed Eng 61:2633–2645

Khan MA, Arshad H, Nisar W, Javed MY, Sharif M (2021) An integrated design of Fuzzy C-means and NCA-based multi-properties feature reduction for brain tumor recognition. Signal and image processing techniques for the development of intelligent healthcare systems. Springer, New York, pp 1–28

Rewari R (2021) Automatic tumor segmentation from MRI scans. Stanford University, Stanford

Tandel GS, Biswas M, Kakde OG, Tiwari A, Suri HS, Turk M et al (2019) A review on a deep learning perspective in brain cancer classification. Cancers 11:1–32

El-Dahshan E-SA, Mohsen HM, Revett K, Salem A-BM (2014) Computer-aided diagnosis of human brain tumor through MRI: A survey and a new algorithm. Expert Syst Appl 41:5526–5545

Gordillo N, Montseny E, Sobrevilla P (2013) State of the art survey on MRI brain tumor segmentation. Magn Reson Imaging 31:1426–1438

Mohan G, Subashini MM (2018) MRI based medical image analysis: Survey on brain tumor grade classification. Biomed Signal Process Control 39:139–161

Amin J, Sharif M, Yasmin M (2016) Segmentation and classification of lung cancer: a review. Immunol Endocr Metab Agents Med Chem 16:82–99

Shahzad A, Sharif M, Raza M, Hussain K (2008) Enhanced watershed image processing segmentation. J Inf Commun Technol 2:9

Joo L, Jung SC, Lee H, Park SY, Kim M, Park JE et al (2021) Stability of MRI radiomic features according to various imaging parameters in fast scanned T2-FLAIR for acute ischemic stroke patients. Sci Rep 11:1–11

Chen H, Zou Q, Wang Q (2021) Clinical manifestations of ultrasonic virtual reality in the diagnosis and treatment of cardiovascular diseases. J Healthc Eng 2021:1–12

Henneghan AM, Van Dyk K, Kaufmann T, Harrison R, Gibbons C, Heijnen C, Kesler SR (2021) Measuring self-reported cancer-related cognitive impairment: recommendations from the Cancer Neuroscience Initiative Working Group. JNCI:1–9

Drake-Pérez M, Boto J, Fitsiori A, Lovblad K, Vargas MI (2018) Clinical applications of diffusion weighted imaging in neuroradiology. Insights Imaging 9:535–547

Okorie CK, Ogbole GI, Owolabi MO, Ogun O, Adeyinka A, Ogunniyi A (2015) Role of diffusion-weighted imaging in acute stroke management using low-field magnetic resonance imaging in resource-limited settings. West Afr J Radiol 22:61

Menze BH, Jakab A, Bauer S, Kalpathy-Cramer J, Farahani K, Kirby J et al (2014) The multimodal brain tumor image segmentation benchmark (BRATS). IEEE Trans Med Imaging 34:1993–2024

Bakas S, Akbari H, Sotiras A, Bilello M, Rozycki M, Kirby JS et al (2017) Advancing the cancer genome atlas glioma MRI collections with expert segmentation labels and radiomic features. Sci Data 4:170117

Kistler M, Bonaretti S, Pfahrer M, Niklaus R, Büchler P (2013) The virtual skeleton database: an open access repository for biomedical research and collaboration. J Med Internet Res 15:e245

Summers D (2003) Harvard whole brain atlas: www.med.harvard.edu/AANLIB/home.html. J Neurol Neurosurg Psychiatry 74:288–288

Armato S, Beichel R, Bidaut L, Clarke L, Croft B, Fenimore C, Gavrielides M et al (2008) RIDER (Reference Database to Evaluate Response) Committee Combined Report, 9/25/2008 Sponsored by NIH, NCI, CIP, ITDB Causes of and Methods for Estimating/Ameliorating variance in the evaluation of tumor change in response-to therapy. https://wiki.cancerimagingarchive.net/display/Public/Collections

Kistler M, Bonaretti S, Pfahrer M, Niklaus R, Büchler P (2013) The virtual skeleton database: an open access repository for biomedical research and collaboration. J Med Internet Res 15:1–14

Yasmin M, Mohsin S, Sharif M, Raza M, Masood S (2012) Brain image analysis: a survey. World Appl Sci J 19:1484–1494

Somasundaram K, Kalaiselvi T (2010) Fully automatic brain extraction algorithm for axial T2-weighted magnetic resonance images. Comput Biol Med 40:811–822

Zhu Y, Young GS, Xue Z, Huang RY, You H, Setayesh K et al (2012) Semi-automatic segmentation software for quantitative clinical brain glioblastoma evaluation. Acad Radiol 19:977–985

Prabhu LAJ, Jayachandran A (2018) Mixture model segmentation system for parasagittal meningioma brain tumor classification based on hybrid feature vector. J Med Syst 42:1–6

Park CR, Kim K, Lee Y (2019) Development of a bias field-based uniformity correction in magnetic resonance imaging with various standard pulse sequences. Optik 178:161–166

Patel P, Bhandari A (2019) A review on image contrast enhancement techniques. Int J Online Sci 5:14–18

Zhang Z, Song J (2019) A robust brain MRI segmentation and bias field correction method integrating local contextual information into a clustering model. Appl Sci 9:1332

Irum I, Sharif M, Yasmin M, Raza M, Azam F (2014) A noise adaptive approach to impulse noise detection and reduction. Nepal J Sci Technol 15:67–76

Robb RA (2000) 3-dimensional visualization in medicine and biology. Handb Med Imaging Process Anal:685–712

Mehmood I, Ejaz N, Sajjad M, Baik SW (2013) Prioritization of brain MRI volumes using medical image perception model and tumor region segmentation. Comput Biol Med 43:1471–1483

Lu X, Huang Z, Yuan Y (2015) MR image super-resolution via manifold regularized sparse learning. Neurocomputing 162:96–104

Irum I, Sharif M, Raza M, Mohsin S (2015) A nonlinear hybrid filter for salt & pepper noise removal from color images. J Appl Res Technol 13:79–85

Stadler A, Schima W, Ba-Ssalamah A, Kettenbach J, Eisenhuber E (2007) Artifacts in body MR imaging: their appearance and how to eliminate them. Eur Radiol 17:1242–1255

Masood S, Sharif M, Masood A, Yasmin M, Raza M (2015) A survey on medical image segmentation. Curr Med Imaging 11:3–14

Irum I, Sharif M, Raza M, Yasmin M (2014) Salt and pepper noise removal filter for 8-bit images based on local and global occurrences of grey levels as selection indicator. Nepal J Sci Technol 15:123–132

Sharif M, Irum I, Yasmin M, Raza M (2017) Salt & pepper noise removal from digital color images based on mathematical morphology and fuzzy decision. Nepal J Sci Technol 18:1–7

Prastawa M, Bullitt E, Moon N, Van Leemput K, Gerig G (2003) Automatic brain tumor segmentation by subject specific modification of atlas priors. Acad Radiol 10:1341–1348

Wu Y, Yang W, Jiang J, Li S, Feng Q, Chen W (2013) Semi-automatic segmentation of brain tumors using population and individual information. J Digit Imaging 26:786–796

Xie K, Yang J, Zhang Z, Zhu Y (2005) Semi-automated brain tumor and edema segmentation using MRI. Eur J Radiol 56:12–19

Agn M, Puonti O, af Rosenschöld PM, Law I, Van Leemput K (2015) Brain tumor segmentation using a generative model with an RBM prior on tumor shape. In: BrainLes vol 2015, pp 168–180

Haeck T, Maes F, Suetens P (2015) ISLES challenge 2015: Automated model-based segmentation of ischemic stroke in MR images. BrainLes 2015:246–253

Abbasi S, Tajeripour F (2017) Detection of brain tumor in 3D MRI images using local binary patterns and histogram orientation gradient. Neurocomputing 219:526–535

Sauwen N, Acou M, Sima DM, Veraart J, Maes F, Himmelreich U et al (2017) Semi-automated brain tumor segmentation on multi-parametric MRI using regularized non-negative matrix factorization. BMC Med Imaging 17:29

Ilunga-Mbuyamba E, Avina–Cervantes JG, Garcia-Perez A, de Jesus Romero–Troncoso R, Aguirre–Ramos H, Cruz–Aceves I et al (2017) Localized active contour model with background intensity compensation applied on automatic MR brain tumor segmentation. Neurocomputing 220:84–97

Akbar S, Akram MU, Sharif M, Tariq A, Khan SA (2018) Decision support system for detection of hypertensive retinopathy using arteriovenous ratio. Artif Intell Med 90:15–24

Banerjee S, Mitra S, Shankar BU (2018) Automated 3D segmentation of brain tumor using visual saliency. Inf Sci 424:337–353

Raja NSM, Fernandes SL, Dey N, Satapathy SC, Rajinikanth V (2018) Contrast enhanced medical MRI evaluation using Tsallis entropy and region growing segmentation. J Ambient Intell Human Comput:1–12

Subudhi A, Dash M, Sabut S (2020) Automated segmentation and classification of brain stroke using expectation-maximization and random forest classifier. Biocybern Biomed Eng 40:277–289

Gupta N, Bhatele P, Khanna P (2019) Glioma detection on brain MRIs using texture and morphological features with ensemble learning. Biomed Signal Process Control 47:115–125

Myronenko A, Hatamizadeh A (2020) Robust semantic segmentation of brain tumor regions from 3D MRIs. arXiv:2001.02040

Karayegen G, Aksahin MF (2021) Brain tumor prediction on MR images with semantic segmentation by using deep learning network and 3D imaging of tumor region. Biomed Signal Process Control 66:102458

Prima S, Ayache N, Barrick T, Roberts N (2001) Maximum likelihood estimation of the bias field in MR brain images: Investigating different modelings of the imaging process. In: International conference on medical image computing and computer-assisted intervention, pp 811–819

Haider W, Sharif M, Raza M (2011) Achieving accuracy in early stage tumor identification systems based on image segmentation and 3D structure analysis. Comput Eng Intell Syst 2:96–102

Irum I, Shahid MA, Sharif M, Raza M (2015) A review of image denoising methods. J Eng Sci Technol Rev 8:1–11

Kumar SS, Dharun VS (2016) A study of MRI segmentation methods in automatic brain tumor detection. Int J Eng Technol 8:609–614

Dhas A, Madheswaran M (2018) An improved classification system for brain tumours using wavelet transform and neural network. West Indian Med J 67:243–247

Krissian K, Aja-Fernández S (2009) Noise-driven anisotropic diffusion filtering of MRI. IEEE Trans Image Process 18:2265–2274

Tahir B, Iqbal S, Usman Ghani Khan M, Saba T, Mehmood Z, Anjum A et al (2019) Feature enhancement framework for brain tumor segmentation and classification. Microsc Res Tech 82:803–811

Said AB, Hadjidj R, Foufou S (2019) Total variation for image denoising based on a novel smart edge detector: an application to medical images. J Math Imaging Vision 61:106–121

Bojorquez JAZ, Jodoin P-M, Bricq S, Walker PM, Brunotte F, Lalande A (2019) Automatic classification of tissues on pelvic MRI based on relaxation times and support vector machine. PLoS ONE 14:1–17

Sandhya G, Kande GB, Satya ST (2019) An efficient MRI brain tumor segmentation by the fusion of active contour model and self-organizing-map. J Biomim Biomater Biomed Eng 40:79–91

Yang Y, Huang S (2006) Novel statistical approach for segmentation of brain magnetic resonance imaging using an improved expectation maximization algorithm. Opt Appl 36:125–136

Mittal M, Goyal LM, Kaur S, Kaur I, Verma A, Hemanth DJ (2019) Deep learning based enhanced tumor segmentation approach for MR brain images. Appl Soft Comput 78:346–354

Roy S, Bandyopadhyay SK (2012) Detection and quantification of brain tumor from MRI of brain and it’s symmetric analysis. Int J Inf Commun Technol Res 2:1–7

Gao J, Xie M (2009) Skull-stripping MR brain images using anisotropic diffusion filtering and morphological processing. In: 2009 IEEE international symposium on computer network and multimedia technology, pp 1–4

Maintz JA, Viergever MA (1998) A survey of medical image registration. Med Image Anal 2:1–36

Sharma P, Diwakar M, Choudhary S (2012) Application of edge detection for brain tumor detection. Int J Comput Appl 58:1–6

Popescu V, Battaglini M, Hoogstrate W, Verfaillie SC, Sluimer I, van Schijndel RA et al (2012) Optimizing parameter choice for FSL-brain extraction tool (BET) on 3D T1 images in multiple sclerosis. Neuroimage 61:1484–1494

Despotović I, Goossens B, Philips W (2015) MRI segmentation of the human brain: challenges, methods, and applications. Comput Math Methods Med 2015:1–23

Wong KP (2005) Medical image segmentation: methods and applications in functional imaging. In: Handbook of biomedical image analysis, pp 111–182

Chae SY, Suh S, Ryoo I, Park A, Noh KJ, Shim H et al (2017) A semi-automated volumetric software for segmentation and perfusion parameter quantification of brain tumors using 320-row multidetector computed tomography: a validation study. Neuroradiology 59:461–469

Sauwen N, Acou M, Sima DM, Veraart J, Maes F, Himmelreich U et al (2017) Semi-automated brain tumor segmentation on multi-parametric MRI using regularized non-negative matrix factorization. BMC Med Imaging 17:1–14

Foo JL (2006) A survey of user interaction and automation in medical image segmentation methods. In: Tech rep ISUHCI20062, Human Computer Interaction Department, Iowa State Univ, pp 1–11

Işın A, Direkoğlu C, Şah M (2016) Review of MRI-based brain tumor image segmentation using deep learning methods. Procedia Comput Sci 102:317–324

Shanthi KJ, Kumar MS (2007) Skull stripping and automatic segmentation of brain MRI using seed growth and threshold techniques. In: 2007 International conference on intelligent and advanced systems, pp 422–426

Yao J (2006) Image processing in tumor imaging, new techniques in oncologic imaging. Zhang, F., & Hancock, ER Zhang. New Riemannian techniques for directional and tensorial image data. Pattern Recogn 43:1590–1606

Stadlbauer A, Moser E, Gruber S, Buslei R, Nimsky C, Fahlbusch R et al (2004) Improved delineation of brain tumors: an automated method for segmentation based on pathologic changes of 1H-MRSI metabolites in gliomas. Neuroimage 23:454–461

Lakare S, Kaufman A (2000) 3D segmentation techniques for medical volumes, Center for Visual Computing. Department of Computer Science, State University of New York, New York, pp 59–68

Sato M, Lakare S, Wan M, Kaufman A, Nakajima M (2000) A gradient magnitude based region growing algorithm for accurate segmentation. In: Proceedings 2000 International Conference on Image Processing, vol 3, pp 448–451

Salman YM (2009) Modified technique for volumetric brain tumor measurements. J Biomed Sci Eng 2:16

Singh NP, Dixit S, Akshaya AS, Khodanpur BI (2017) Gradient magnitude based watershed segmentation for brain tumor segmentation and classification. In: Proceedings of the 5th international conference on frontiers in intelligent computing: theory and applications, pp 611–619

Husain RA, Zayed AS, Ahmed WM, Elhaji HS (2015) Image segmentation with improved watershed algorithm using radial bases function neural networks. In: 2015 16th International conference on sciences and techniques of automatic control and computer engineering (STA), pp 121–126

Masood S, Sharif M, Yasmin M, Raza M, Mohsin S (2013) Brain image compression: a brief survey. Res J Appl Sci Eng Technol 5:49–59

Mughal B, Muhammad N, Sharif M (2018) Deviation analysis for texture segmentation of breast lesions in mammographic images. Eur Phys J Plus 133:1–15

Anjum MA, Amin J, Sharif M, Khan HU, Malik MSA, Kadry S (2020) Deep semantic segmentation and multi-class skin lesion classification based on convolutional neural network. IEEE Access 8:129668–129678

Gies V, Bernard TM (2004) Statistical solution to watershed over-segmentation. In: 2004 International Conference on Image Processing. ICIP'04, vol 3, pp 1863–1866

Kong J, Wang J, Lu Y, Zhang J, Li Y, Zhang B (2006) A novel approach for segmentation of MRI brain images. In: MELECON 2006-2006 IEEE Mediterranean Electrotechnical Conference, pp 525–528

Couprie M, Bertrand G (1997) Topological gray-scale watershed transformation. In: Vision Geometry VI International Society for Optics and Photonics, vol 3168, pp 136–146

Lotufo RA, Falcão AX, Zampirolli FA (2002) IFT-watershed from gray-scale marker. In: Proceedings. XV Brazilian Symposium on Computer Graphics and Image Processing, pp 146–152

Benson CC, Lajish VL, Rajamani K (2015) Brain tumor extraction from MRI brain images using marker based watershed algorithm. In: 2015 International Conference on advances in computing, communications and informatics (ICACCI), pp 318–323

Nasir M, Attique Khan M, Sharif M, Lali IU, Saba T, Iqbal T (2018) An improved strategy for skin lesion detection and classification using uniform segmentation and feature selection based approach. Microsc Res Tech 81:528–543

Yasmin M, Sharif M, Masood S, Raza M, Mohsin S (2012) Brain image enhancement—a survey. World Appl Sci J 17:1192–1204

Shah GA, Khan A, Shah AA, Raza M, Sharif M (2015) A review on image contrast enhancement techniques using histogram equalization. Sci Int 27:1–10

Khan MA, Akram T, Sharif M, Shahzad A, Aurangzeb K, Alhussein M et al (2018) An implementation of normal distribution based segmentation and entropy controlled features selection for skin lesion detection and classification. BMC Cancer 18:1–20

Khan MA, Akram T, Sharif M, Saba T, Javed K, Lali IU et al (2019) Construction of saliency map and hybrid set of features for efficient segmentation and classification of skin lesion. Microsc Res Tech 82:741–763

Akram T, Khan MA, Sharif M, Yasmin M (2018) Skin lesion segmentation and recognition using multichannel saliency estimation and M-SVM on selected serially fused features. J Ambient Intell Human Comput:1–20

Yasmin M, Sharif M, Mohsin S, Azam F (2014) Pathological brain image segmentation and classification: a survey. Curr Med Imaging 10:163–177

Mughal B, Muhammad N, Sharif M (2018) Deviation analysis for texture segmentation of breast lesions in mammographic images. Eur Phys J Plus 133:455

Hameed M, Sharif M, Raza M, Haider SW, Iqbal M (2012) Framework for the comparison of classifiers for medical image segmentation with transform and moment based features. Res J Recent Sci 2277:2502

Irum I, Raza M, Sharif M (2012) Morphological techniques for medical images: a review. Res J Appl Sci Eng Technol 4:2948–2962

Jafarpour S, Sedghi Z, Amirani MC (2012) A robust brain MRI classification with GLCM features. Int J Comput Appl 37:1–5

Mughal B, Muhammad N, Sharif M (2019) Adaptive hysteresis thresholding segmentation technique for localizing the breast masses in the curve stitching domain. Int J Med Inf 126:26–34

Nabizadeh N, Kubat M (2015) Brain tumors detection and segmentation in MR images: Gabor wavelet vs. statistical features. Comput Electr Eng 45:286–301

Tiwari P, Sachdeva J, Ahuja CK, Khandelwal N (2017) Computer aided diagnosis system—a decision support system for clinical diagnosis of brain tumours. Int J Comput Intell Syst 10:104–119

Zhang Y, Yang J, Wang S, Dong Z, Phillips P (2017) Pathological brain detection in MRI scanning via Hu moment invariants and machine learning. J Exp Theor Artif Intell 29:299–312

Lahmiri S (2017) Glioma detection based on multi-fractal features of segmented brain MRI by particle swarm optimization techniques. Biomed Signal Process Control 31:148–155

Xu X, Zhang X, Tian Q, Zhang G, Liu Y, Cui G et al (2017) Three-dimensional texture features from intensity and high-order derivative maps for the discrimination between bladder tumors and wall tissues via MRI. Int J Comput Assist Radiol Surg 12:645–656

Shanthakumar P, Ganeshkumar P (2015) Performance analysis of classifier for brain tumor detection and diagnosis. Comput Electr Eng 45:302–311

Zhang B, Chang K, Ramkissoon S, Tanguturi S, Bi WL, Reardon DA et al (2017) Multimodal MRI features predict isocitrate dehydrogenase genotype in high-grade gliomas. Neuro Oncol 19:109–117

Srinivas B, Rao GS (2019) Performance evaluation of fuzzy C means segmentation and support vector machine classification for MRI brain tumor. In: Soft computing for problem solving. Springer, New York, pp 355–367

Herlidou-Meme S, Constans J, Carsin B, Olivie D, Eliat P, Nadal-Desbarats L et al (2003) MRI texture analysis on texture test objects, normal brain and intracranial tumors. Magn Reson Imaging 21:989–993

Soltaninejad M, Yang G, Lambrou T, Allinson N, Jones TL, Barrick TR et al (2017) Automated brain tumour detection and segmentation using superpixel-based extremely randomized trees in FLAIR MRI. Int J Comput Assist Radiol Surg 12:183–203

Yang D, Rao G, Martinez J, Veeraraghavan A, Rao A (2015) Evaluation of tumor-derived MRI-texture features for discrimination of molecular subtypes and prediction of 12-month survival status in glioblastoma. Med Phys 42:6725–6735

Islam A, Reza SM, Iftekharuddin KM (2013) Multifractal texture estimation for detection and segmentation of brain tumors. IEEE Trans Biomed Eng 60:3204–3215

Pei L, Bakas S, Vossough A, Reza SM, Davatzikos C, Iftekharuddin KM (2020) Longitudinal brain tumor segmentation prediction in MRI using feature and label fusion. Biomed Signal Process Control 55:101648

Khan H, Shah PM, Shah MA, ul Islam S, Rodrigues JJ (2020) Cascading handcrafted features and convolutional neural network for IoT-enabled brain tumor segmentation. Comput Commun 153:196–207

Dixit A, Nanda A (2021) An improved whale optimization algorithm-based radial neural network for multi-grade brain tumor classification. Visual Comput:1–16

Zhang Y, Dong Z, Wu L, Wang S (2011) A hybrid method for MRI brain image classification. Expert Syst Appl 38:10049–10053

Zöllner FG, Emblem KE, Schad LR (2012) SVM-based glioma grading: optimization by feature reduction analysis. Z Med Phys 22:205–214

Arakeri MP, Reddy GRM (2015) Computer-aided diagnosis system for tissue characterization of brain tumor on magnetic resonance images. SIViP 9:409–425

Nachimuthu DS, Baladhandapani A (2014) Multidimensional texture characterization: on analysis for brain tumor tissues using MRS and MRI. J Digit Imaging 27:496–506

Pinto A, Pereira S, Dinis H, Silva CA, Rasteiro DM (2015) Random decision forests for automatic brain tumor segmentation on multi-modal MRI images. In: 2015 IEEE 4th Portuguese meeting on bioengineering (ENBENG), pp 1–5

Tustison NJ, Shrinidhi K, Wintermark M, Durst CR, Kandel BM, Gee JC et al (2015) Optimal symmetric multimodal templates and concatenated random forests for supervised brain tumor segmentation (simplified) with ANTsR. Neuroinformatics 13:209–225

Yang G, Zhang Y, Yang J, Ji G, Dong Z, Wang S et al (2016) Automated classification of brain images using wavelet-energy and biogeography-based optimization. Multimedia Tools Appl 75:15601–15617

Wang S, Du S, Atangana A, Liu A, Lu Z (2018) Application of stationary wavelet entropy in pathological brain detection. Multimedia Tools Appl 77:3701–3714

Padlia M, Sharma J (2019) Fractional Sobel filter based brain tumor detection and segmentation using statistical features and SVM. In: Nanoelectronics, circuits and communication systems, pp 161–175

Ayadi W, Elhamzi W, Charfi I, Atri M (2021) Deep CNN for brain tumor classification. Neural Process Lett 53:671–700

Afza F, Khan MA, Sharif M, Rehman A (2019) Microscopic skin laceration segmentation and classification: a framework of statistical normal distribution and optimal feature selection. Microsc Res Tech 82:1471–1488

Adair J, Brownlee A, Ochoa G (2017) Evolutionary algorithms with linkage information for feature selection in brain computer interfaces. In: Advances in computational intelligence systems. Springer, New York, pp 287–307

Sharma M, Mukharjee S (2012) Brain tumor segmentation using hybrid genetic algorithm and artificial neural network fuzzy inference system (ANFIS). Int J Fuzzy Logic Syst 2:31–42

Huda S, Yearwood J, Jelinek HF, Hassan MM, Fortino G, Buckland M (2016) A hybrid feature selection with ensemble classification for imbalanced healthcare data: a case study for brain tumor diagnosis. IEEE Access 4:9145–9154

Jothi G (2016) Hybrid tolerance rough set-firefly based supervised feature selection for MRI brain tumor image classification. Appl Soft Comput 46:639–651

Ahmed S, Iftekharuddin KM, Vossough A (2011) Efficacy of texture, shape, and intensity feature fusion for posterior-fossa tumor segmentation in MRI. IEEE Trans Inf Technol Biomed 15:206–213

Wu G, Chen Y, Wang Y, Yu J, Lv X, Ju X et al (2018) Sparse representation-based radiomics for the diagnosis of brain tumors. IEEE Trans Med Imaging 37:893–905

Fernandez-Lozano C, Seoane JA, Gestal M, Gaunt TR, Dorado J, Campbell C (2015) Texture classification using feature selection and kernel-based techniques. Soft Comput 19:2469–2480

Dandu JR, Thiyagarajan AP, Murugan PR, Govindaraj V (2019) Brain and pancreatic tumor segmentation using SRM and BPNN classification. Health Technol:1–9

Saritha M, Joseph KP, Mathew AT (2013) Classification of MRI brain images using combined wavelet entropy based spider web plots and probabilistic neural network. Pattern Recogn Lett 34:2151–2156

Sharif M, Khan MA, Akram T, Javed MY, Saba T, Rehman A (2017) A framework of human detection and action recognition based on uniform segmentation and combination of Euclidean distance and joint entropy-based features selection. EURASIP J Image Video Process 2017:1–18

Lakshmi A, Arivoli T, Rajasekaran MP (2018) A Novel M-ACA-based tumor segmentation and DAPP feature extraction with PPCSO-PKC-based MRI classification. Arab J Sci Eng 43:7095–7111

Rathi V, Palani S (2012) Brain tumor MRI image classification with feature selection and extraction using linear discriminant analysis. arXiv:1208.2128

Naqi SM, Sharif M, Yasmin M (2018) Multistage segmentation model and SVM-ensemble for precise lung nodule detection. Int J Comput Assist Radiol Surg 13:1083–1095

Amin J, Anjum MA, Sharif M, Kadry S, Nam Y, Wang S (2021) Convolutional bi-LSTM based human gait recognition using video sequences. Comput Mater Contin 68:2693–2709

Amin J, Sharif M, Anjum MA, Nam Y, Kadry S, Taniar D (2021) Diagnosis of COVID-19 infection using three-dimensional semantic segmentation and classification of computed tomography images. Comput Mater Contin:2451–2467

Amin J, Sharif M, Raza M, Saba T, Rehman A (2019) Brain tumor classification: feature fusion. In: 2019 international conference on computer and information sciences (ICCIS), pp 1–6

Amin J, Sharif M, Yasmin M, Ali H, Fernandes SL (2017) A method for the detection and classification of diabetic retinopathy using structural predictors of bright lesions. J Comput Sci 19:153–164

Amin J, Sharif M, Yasmin M, Fernandes SL (2018) Big data analysis for brain tumor detection: deep convolutional neural networks. Future Gener Comput Syst 87:290–297

Muhammad N, Sharif M, Amin J, Mehboob R, Gilani SA, Bibi N et al (2018) Neurochemical alterations in sudden unexplained perinatal deaths—a review. Front Pediatr 6:6

Sharif M, Amin J, Raza M, Anjum MA, Afzal H, Shad SA (2020) Brain tumor detection based on extreme learning. Neural Comput Appl:1–13

Umer MJ, Amin J, Sharif M, Anjum MA, Azam F, Shah JH (2021) An integrated framework for COVID-19 classification based on classical and quantum transfer learning from a chest radiograph. Concurr Comput Pract Exp 19:153–164

Jiang J, Wu Y, Huang M, Yang W, Chen W, Feng Q (2013) Brain tumor segmentation in multimodal MR images based on learning population-and patient-specific feature sets. Comput Med Imaging Graph 37:512–521

Ortiz A, Gorriz JM, Ramírez J, Salas-Gonzalez D, Alzheimer’s Disease Neuroimaging Initiative (2013) Improving MRI segmentation with probabilistic GHSOM and multiobjective optimization 114:118–131

Havaei M, Davy A, Warde-Farley D, Biard A, Courville A, Bengio Y, Pal C, Jodoin PM, Larochelle H (2017) Brain tumor segmentation with deep neural networks. Med Image Anal 35:18–31

Zhang N, Ruan S, Lebonvallet S, Liao Q, Zhu Y (2011) Kernel feature selection to fuse multi-spectral MRI images for brain tumor segmentation. Comput Vis Image Underst 115:256–269

Ortega-Martorell S, Lisboa PJ, Vellido A, Simoes RV, Pumarola M, Julià-Sapé M et al (2012) Convex non-negative matrix factorization for brain tumor delimitation from MRSI data. PLoS ONE 7:e47824

Ali AH, Al-hadi SA, Naeemah MR, Mazher AN (2018) Classification of brain lesion using K-nearest neighbor technique and texture analysis. J Phys Conf Ser:012018

Supot S, Thanapong C, Chuchart P, Manas S (2007) Segmentation of magnetic resonance images using discrete curve evolution and fuzzy clustering. In: 2007 IEEE International Conference on Integration Technology, pp 697–700

Fletcher-Heath LM, Hall LO, Goldgof DB, Murtagh FR (2001) Automatic segmentation of non-enhancing brain tumors in magnetic resonance images. Artif Intell Med 21:43–63

Abdulbaqi HS, Mat MZ, Omar AF, Mustafa ISB, Abood LK (2014) Detecting brain tumor in magnetic resonance images using hidden Markov random fields and threshold techniques. In: 2014 IEEE student conference on research and development, pp 1–5

Parekh VS, Laterra J, Bettegowda C, Bocchieri AE, Pillai JJ, Jacobs MA (2019) Multiparametric deep learning and radiomics for tumor grading and treatment response assessment of brain cancer: preliminary results, pp 1–6. arXiv:1906.04049

Zadeh Shirazi A, Fornaciari E, McDonnell MD, Yaghoobi M, Cevallos Y, Tello-Oquendo L et al (2020) The application of deep convolutional neural networks to brain cancer images: a survey. J Pers Med 10:224

Guan B, Yao J, Zhang G, Wang X (2019) Thigh fracture detection using deep learning method based on new dilated convolutional feature pyramid network. Pattern Recogn Lett 125:521–526

Raza M, Sharif M, Yasmin M, Khan MA, Saba T, Fernandes SL (2018) Appearance based pedestrians’ gender recognition by employing stacked auto encoders in deep learning. Future Gener Comput Syst 88:28–39

Liaqat A, Khan MA, Shah JH, Sharif M, Yasmin M, Fernandes SL (2018) Automated ulcer and bleeding classification from WCE images using multiple features fusion and selection. J Mech Med Biol 18:1850038

Naqi S, Sharif M, Yasmin M, Fernandes SL (2018) Lung nodule detection using polygon approximation and hybrid features from CT images. Curr Med Imaging 14:108–117

Ansari GJ, Shah JH, Yasmin M, Sharif M, Fernandes SL (2018) A novel machine learning approach for scene text extraction. Future Gener Comput Syst 87:328–340

Fatima Bokhari ST, Sharif M, Yasmin M, Fernandes SL (2018) Fundus image segmentation and feature extraction for the detection of glaucoma: a new approach. Curr Med Imaging 14:77–87

Jain VK, Kumar S, Fernandes SL (2017) Extraction of emotions from multilingual text using intelligent text processing and computational linguistics. J Comput Sci 21:316–326

Fernandes SL, Gurupur VP, Lin H, Martis RJ (2017) A novel fusion approach for early lung cancer detection using computer aided diagnosis techniques. J Med Imaging Health Inf 7:1841–1850

Raja N, Rajinikanth V, Fernandes SL, Satapathy SC (2017) Segmentation of breast thermal images using Kapur’s entropy and hidden Markov random field. J Med Imaging Health Inf 7:1825–1829

Rajinikanth V, Madhavaraja N, Satapathy SC, Fernandes SL (2017) Otsu’s multi-thresholding and active contour snake model to segment dermoscopy images. J Med Imaging Health Inf 7:1837–1840

Shah JH, Chen Z, Sharif M, Yasmin M, Fernandes SL (2017) A novel biomechanics-based approach for person re-identification by generating dense color SIFT salience features. J Mech Med Biol 17:1740011

Fernandes SL, Bala GJ (2017) A comparative study on various state of the art face recognition techniques under varying facial expressions. Int Arab J Inf Technol 14:254–259

Yasmin M, Sharif M, Mohsin S (2013) Neural networks in medical imaging applications: a survey. World Appl Sci J 22:85–96

Chen L, Bentley P, Rueckert D (2017) Fully automatic acute ischemic lesion segmentation in DWI using convolutional neural networks. NeuroImage Clin 15:633–643

Abd-Ellah MK, Awad AI, Khalaf AA, Hamed HF (2018) Two-phase multi-model automatic brain tumour diagnosis system from magnetic resonance images using convolutional neural networks. EURASIP J Image Video Process 2018:97

Larochelle H, Jodoin P-M (2016) A convolutional neural network approach to brain tumor segmentation. In: Brainlesion: glioma, multiple sclerosis, stroke and traumatic brain injuries: first international workshop, BrainLes 2015, held in conjunction with MICCAI 2015, Munich, Germany, 5 Oct 2015, revised selected papers

Kamnitsas K, Ledig C, Newcombe VF, Simpson JP, Kane AD, Menon DK, Rueckert D, Glocker B (2017) Efficient multi-scale 3D CNN with fully connected CRF for accurate brain lesion segmentation. Med Image Anal 36:61–78

Dong H, Yang G, Liu F, Mo Y, Guo Y (2017) Automatic brain tumor detection and segmentation using U-Net based fully convolutional networks. In: Annual conference on medical image understanding and analysis, pp 506–517

Chen S, Ding C, Liu M (2019) Dual-force convolutional neural networks for accurate brain tumor segmentation. Pattern Recogn 88:90–100

Wang Y, Li C, Zhu T, Zhang J (2019) Multimodal brain tumor image segmentation using WRN-PPNet. Comput Med Imaging Graph 75:56–65

Chelghoum R, Ikhlef A, Hameurlaine A, Jacquir S (2020) Transfer learning using convolutional neural network architectures for brain tumor classification from MRI images. In: IFIP International Conference on Artificial Intelligence Applications and Innovations, pp 189–200

Mehrotra R, Ansari MA, Agrawal R, Anand RS (2020) A transfer learning approach for AI-based classification of brain tumors. Mach Learn Appl 2:1–12

Zikic D, Ioannou Y, Brown M, Criminisi A (2014) Segmentation of brain tumor tissues with convolutional neural networks. In: Proceedings MICCAI-BRATS, pp 36–39

Dvořák P, Menze B (2015) Local structure prediction with convolutional neural networks for multimodal brain tumor segmentation. In: International MICCAI workshop on medical computer vision, pp 59–71