Scientific Research – Types, Purpose and Guide

Scientific Research

Definition:

Scientific research is the systematic and empirical investigation of phenomena, theories, or hypotheses, using various methods and techniques in order to acquire new knowledge or to validate existing knowledge.

It involves the collection, analysis, interpretation, and presentation of data, as well as the formulation and testing of hypotheses. Scientific research can be conducted in various fields, such as natural sciences, social sciences, and engineering, and may involve experiments, observations, surveys, or other forms of data collection. The goal of scientific research is to advance knowledge, improve understanding, and contribute to the development of solutions to practical problems.

Types of Scientific Research

There are different types of scientific research, which can be classified based on their purpose, method, and application. This section discusses four main types of scientific research.

Descriptive Research

Descriptive research aims to describe or document a particular phenomenon or situation, without altering it in any way. This type of research is usually done through observation, surveys, or case studies. Descriptive research is useful in generating ideas, understanding complex phenomena, and providing a foundation for future research. However, it does not provide explanations or causal relationships between variables.

Exploratory Research

Exploratory research aims to explore a new area of inquiry or develop initial ideas for future research. This type of research is usually conducted through observation, interviews, or focus groups. Exploratory research is useful in generating hypotheses, identifying research questions, and determining the feasibility of a larger study. However, it does not provide conclusive evidence or establish cause-and-effect relationships.

Experimental Research

Experimental research aims to test cause-and-effect relationships between variables by manipulating one variable and observing the effects on another variable. This type of research involves the use of an experimental group, which receives a treatment, and a control group, which does not receive the treatment. Experimental research is useful in establishing causal relationships, replicating results, and controlling extraneous variables. However, it may not be feasible or ethical to manipulate certain variables in some contexts.
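The logic of random assignment followed by a treatment-versus-control comparison can be sketched in Python. This is a minimal illustration rather than a complete experimental analysis. The participant IDs, the simulated outcomes, and the helper names `randomly_assign` and `treatment_effect` are all hypothetical. A real study would also test whether the estimated difference is statistically significant.

```python
import random
from statistics import mean


def randomly_assign(participants, seed=None):
    """Shuffle participants and split them into treatment and control groups.

    Random assignment makes the groups comparable on average, so a later
    difference in outcomes can be attributed to the treatment.
    """
    rng = random.Random(seed)
    shuffled = list(participants)
    rng.shuffle(shuffled)
    half = len(shuffled) // 2
    return shuffled[:half], shuffled[half:]


def treatment_effect(outcomes, treatment, control):
    """Difference in mean outcome: treatment group minus control group."""
    return mean(outcomes[p] for p in treatment) - mean(outcomes[p] for p in control)


# Hypothetical study with 10 participants identified by ID.
participants = [f"P{i}" for i in range(10)]
treatment, control = randomly_assign(participants, seed=42)

# Simulated outcomes (not real data): baseline noise plus +5 for treated people.
rng = random.Random(0)
treated = set(treatment)
outcomes = {p: rng.gauss(50, 5) + (5 if p in treated else 0) for p in participants}

print("treatment:", treatment)
print("control:  ", control)
print("estimated effect:", round(treatment_effect(outcomes, treatment, control), 2))
```

The treatment is the only systematic difference between the groups. The gap in group means is therefore an estimate of its effect, blurred by the random noise in the simulated outcomes.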

Correlational Research

Correlational research aims to examine the relationship between two or more variables without manipulating them. This type of research involves the use of statistical techniques to determine the strength and direction of the relationship between variables. Correlational research is useful in identifying patterns, predicting outcomes, and testing theories. However, it does not establish causation or control for confounding variables.
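Researchers commonly summarize the strength and direction of a linear relationship with Pearson's correlation coefficient r. The sketch below computes r from its definition: the covariance divided by the product of the standard deviations. The hours-and-scores data are made up for illustration. Pearson's r is only one of several correlation measures; Spearman's rank correlation is a common alternative when a relationship is monotonic but not linear.

```python
from math import sqrt


def pearson_r(xs, ys):
    """Pearson correlation coefficient between two equal-length sequences.

    The result lies in [-1, 1]: its sign gives the direction of the linear
    relationship and its magnitude gives the strength.
    """
    if len(xs) != len(ys) or len(xs) < 2:
        raise ValueError("need two sequences of equal length, at least 2 items")
    n = len(xs)
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    # Sums of cross-products and squares; the 1/(n - 1) factors cancel in the ratio.
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    sxx = sum((x - mean_x) ** 2 for x in xs)
    syy = sum((y - mean_y) ** 2 for y in ys)
    if sxx == 0 or syy == 0:
        raise ValueError("r is undefined when one variable is constant")
    return sxy / sqrt(sxx * syy)


# Hypothetical data: weekly study hours and exam scores for five students.
hours = [1, 2, 3, 4, 5]
scores = [52, 55, 61, 64, 70]
print(f"r = {pearson_r(hours, scores):.3f}")
```

A value near +1 or -1 indicates a strong linear relationship. Even a strong r says nothing about which variable, if either, causes the other.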

Scientific Research Methods

Scientific research methods are the procedures researchers use to investigate phenomena, acquire knowledge, and answer questions using empirical evidence. Here are some commonly used scientific research methods:

Observational Studies

This method involves observing and recording phenomena as they occur in their natural setting. It can be done through direct observation or by using tools such as cameras, microscopes, or sensors.

Experimental Studies

This method involves manipulating one or more variables to determine the effect on the outcome. This type of study is often used to establish cause-and-effect relationships.

Survey Research

This method involves collecting data from a large number of people by asking them a set of standardized questions. Surveys can be conducted in person, over the phone, or online.

Case Studies

This method involves in-depth analysis of a single individual, group, or organization. Case studies are often used to gain insights into complex or unusual phenomena.

Meta-analysis

This method involves combining data from multiple studies to arrive at a more reliable conclusion. This technique can be used to identify patterns and trends across a large number of studies.
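One common way to combine studies is a fixed-effect, inverse-variance meta-analysis. In this model, each study's effect size is weighted by the inverse of its squared standard error. The sketch below assumes that model, and the three studies are hypothetical. When studies differ substantially in their true effects, a random-effects model is usually preferred instead.

```python
from math import sqrt


def fixed_effect_meta(effects, std_errors):
    """Pool effect sizes with inverse-variance weights (fixed-effect model).

    Each study gets weight 1 / SE**2, so more precise studies count more.
    Returns (pooled_effect, pooled_standard_error).
    """
    if not effects or len(effects) != len(std_errors):
        raise ValueError("need one standard error per effect size")
    weights = [1.0 / se ** 2 for se in std_errors]
    total_weight = sum(weights)
    pooled = sum(w * e for w, e in zip(weights, effects)) / total_weight
    return pooled, sqrt(1.0 / total_weight)


# Hypothetical effect sizes (e.g. standardized mean differences) from three studies.
effects = [0.30, 0.45, 0.20]
std_errors = [0.10, 0.15, 0.08]

estimate, se = fixed_effect_meta(effects, std_errors)
# Approximate 95% confidence interval using the normal critical value 1.96.
low, high = estimate - 1.96 * se, estimate + 1.96 * se
print(f"pooled effect = {estimate:.3f}, 95% CI [{low:.3f}, {high:.3f}]")
```

The pooled standard error comes from the sum of all the weights, so it is smaller than any single study's standard error. That is the sense in which the combined conclusion is more reliable.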

Qualitative Research

This method involves collecting and analyzing non-numerical data, such as interviews, focus groups, or observations. This type of research is often used to explore complex phenomena and to gain an understanding of people’s experiences and perspectives.

Quantitative Research

This method involves collecting and analyzing numerical data using statistical techniques. This type of research is often used to test hypotheses and, when combined with an experimental design, to establish cause-and-effect relationships.

Longitudinal Studies

This method involves following a group of individuals over a period of time to observe changes and to identify patterns and trends. This type of study can be used to investigate the long-term effects of a particular intervention or exposure.

Data Analysis Methods

There are many different data analysis methods used in scientific research, and the choice of method depends on the type of data being collected and the research question. Here are some commonly used data analysis methods:

- Descriptive statistics: This involves using summary statistics such as mean, median, mode, standard deviation, and range to describe the basic features of the data.

- Inferential statistics: This involves using statistical tests to make inferences about a population based on a sample of data. Examples of inferential statistics include t-tests, ANOVA, and regression analysis.

- Qualitative analysis: This involves analyzing non-numerical data such as interviews, focus groups, and observations. Qualitative analysis may involve identifying themes, patterns, or categories in the data.

- Content analysis: This involves analyzing the content of written or visual materials such as articles, speeches, or images. Content analysis may involve identifying themes, patterns, or categories in the content.

- Data mining: This involves using automated methods to analyze large datasets to identify patterns, trends, or relationships in the data.

- Machine learning: This involves using algorithms to analyze data and make predictions or classifications based on the patterns identified in the data.
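The first two bullets can be illustrated with Python's standard-library `statistics` module. The sketch computes the summary statistics listed above and Welch's t statistic, which is one variant of the t-test. The two groups of scores are hypothetical, and the function names `describe` and `welch_t` are illustrative rather than part of any library. For a ready-made version with a p-value, SciPy's `scipy.stats.ttest_ind(a, b, equal_var=False)` performs Welch's test.

```python
import statistics
from math import sqrt


def describe(data):
    """Summary statistics from the descriptive-statistics bullet."""
    return {
        "mean": statistics.mean(data),
        "median": statistics.median(data),
        "mode": statistics.mode(data),  # first mode encountered if several (Python 3.8+)
        "stdev": statistics.stdev(data),  # sample standard deviation (n - 1 denominator)
        "range": max(data) - min(data),
    }


def welch_t(a, b):
    """Welch's t statistic and its approximate degrees of freedom.

    Unlike Student's t-test, Welch's version does not assume equal variances.
    Turning t into a p-value needs the t distribution (e.g. scipy.stats.t),
    which is left out to keep this sketch dependency-free.
    """
    var_a, var_b = statistics.variance(a), statistics.variance(b)
    n_a, n_b = len(a), len(b)
    se_sq = var_a / n_a + var_b / n_b
    t = (statistics.mean(a) - statistics.mean(b)) / sqrt(se_sq)
    # Welch-Satterthwaite approximation for the degrees of freedom.
    df = se_sq ** 2 / ((var_a / n_a) ** 2 / (n_a - 1) + (var_b / n_b) ** 2 / (n_b - 1))
    return t, df


# Hypothetical test scores for two groups.
group_a = [12, 15, 15, 18, 20]
group_b = [10, 11, 11, 13, 14]
print(describe(group_a))
t, df = welch_t(group_a, group_b)
print(f"Welch t = {t:.2f} with about {df:.1f} degrees of freedom")
```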

Application of Scientific Research

Scientific research has numerous applications in many fields, including:

- Medicine and healthcare: Scientific research is used to develop new drugs, medical treatments, and vaccines. It is also used to understand the causes and risk factors of diseases, as well as to develop new diagnostic tools and medical devices.

- Agriculture: Scientific research is used to develop new crop varieties, to improve crop yields, and to develop more sustainable farming practices.

- Technology and engineering: Scientific research is used to develop new technologies and engineering solutions, such as renewable energy systems, new materials, and advanced manufacturing techniques.

- Environmental science: Scientific research is used to understand the impacts of human activity on the environment and to develop solutions for mitigating those impacts. It is also used to monitor and manage natural resources, such as water and air quality.

- Education: Scientific research is used to develop new teaching methods and educational materials, as well as to understand how people learn and develop.

- Business and economics: Scientific research is used to understand consumer behavior, to develop new products and services, and to analyze economic trends and policies.

- Social sciences: Scientific research is used to understand human behavior, attitudes, and social dynamics. It is also used to develop interventions to improve social welfare and to inform public policy.

How to Conduct Scientific Research

Conducting scientific research involves several steps, including:

- Identify a research question: Start by identifying a question or problem that you want to investigate. This question should be clear, specific, and relevant to your field of study.

- Conduct a literature review: Before starting your research, conduct a thorough review of existing research in your field. This will help you identify gaps in knowledge and develop hypotheses or research questions.

- Develop a research plan: Once you have a research question, develop a plan for how you will collect and analyze data to answer that question. This plan should include a detailed methodology, a timeline, and a budget.

- Collect data: Depending on your research question and methodology, you may collect data through surveys, experiments, observations, or other methods.

- Analyze data: Once you have collected your data, analyze it using appropriate statistical or qualitative methods. This will help you draw conclusions about your research question.

- Interpret results: Based on your analysis, interpret your results and draw conclusions about your research question. Discuss any limitations or implications of your findings.

- Communicate results: Finally, communicate your findings to others in your field through presentations, publications, or other means.
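The collect, analyze, and interpret steps can be sketched end to end with a permutation test. This is a simple hypothesis test that needs no distributional tables. Everything in the sketch is hypothetical: the research question, the scores, the 0.05 significance level, and the helper `permutation_test`. A real study would fix its analysis plan in advance and choose a test suited to its design.

```python
import random
from statistics import mean


def permutation_test(a, b, n_permutations=10_000, seed=None):
    """Two-sided permutation test for a difference in group means.

    If the null hypothesis is true (group labels don't matter), randomly
    relabelling the pooled data should often give a difference at least as
    large as the observed one. The p-value estimates how often that happens.
    """
    rng = random.Random(seed)
    observed = mean(a) - mean(b)
    pooled = list(a) + list(b)
    extreme = 0
    for _ in range(n_permutations):
        rng.shuffle(pooled)
        diff = mean(pooled[:len(a)]) - mean(pooled[len(a):])
        if abs(diff) >= abs(observed):
            extreme += 1
    # The +1 terms count the observed labelling itself, so p is never exactly 0.
    return observed, (extreme + 1) / (n_permutations + 1)


# Research question (hypothetical): does a new study technique change quiz scores?
# The significance level is fixed in the research plan before seeing the data.
ALPHA = 0.05

# Collect data: hypothetical scores, not results from a real study.
new_technique = [78, 85, 82, 88, 90, 84]
usual_method = [72, 75, 80, 70, 77, 74]

# Analyze data.
difference, p_value = permutation_test(new_technique, usual_method, seed=1)

# Interpret results.
decision = "reject" if p_value < ALPHA else "do not reject"
print(f"mean difference = {difference:.2f}, p = {p_value:.4f}: {decision} the null hypothesis")
```

The comments map each part of the script to a step in the list. The final step, communicating results, would report the difference, the p-value, and the limitations of the design.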

Purpose of Scientific Research

The purpose of scientific research is to systematically investigate phenomena, acquire new knowledge, and advance our understanding of the world around us. Scientific research has several key goals, including:

- Exploring the unknown: Scientific research is often driven by curiosity and the desire to explore uncharted territory. Scientists investigate phenomena that are not well understood, in order to discover new insights and develop new theories.

- Testing hypotheses: Scientific research involves developing hypotheses or research questions, and then testing them through observation and experimentation. This allows scientists to evaluate the validity of their ideas and refine their understanding of the phenomena they are studying.

- Solving problems: Scientific research is often motivated by the desire to solve practical problems or address real-world challenges. For example, researchers may investigate the causes of a disease in order to develop new treatments, or explore ways to make renewable energy more affordable and accessible.

- Advancing knowledge: Scientific research is a collective effort to advance our understanding of the world around us. By building on existing knowledge and developing new insights, scientists contribute to a growing body of knowledge that can be used to inform decision-making, solve problems, and improve our lives.

Examples of Scientific Research

Here are some examples of scientific research that are currently ongoing or have recently been completed:

- Clinical trials for new treatments: Scientific research in the medical field often involves clinical trials to test new treatments for diseases and conditions. For example, clinical trials may be conducted to evaluate the safety and efficacy of new drugs or medical devices.

- Genomics research: Scientists are conducting research to better understand the human genome and its role in health and disease. This includes research on genetic mutations that can cause diseases such as cancer, as well as the development of personalized medicine based on an individual’s genetic makeup.

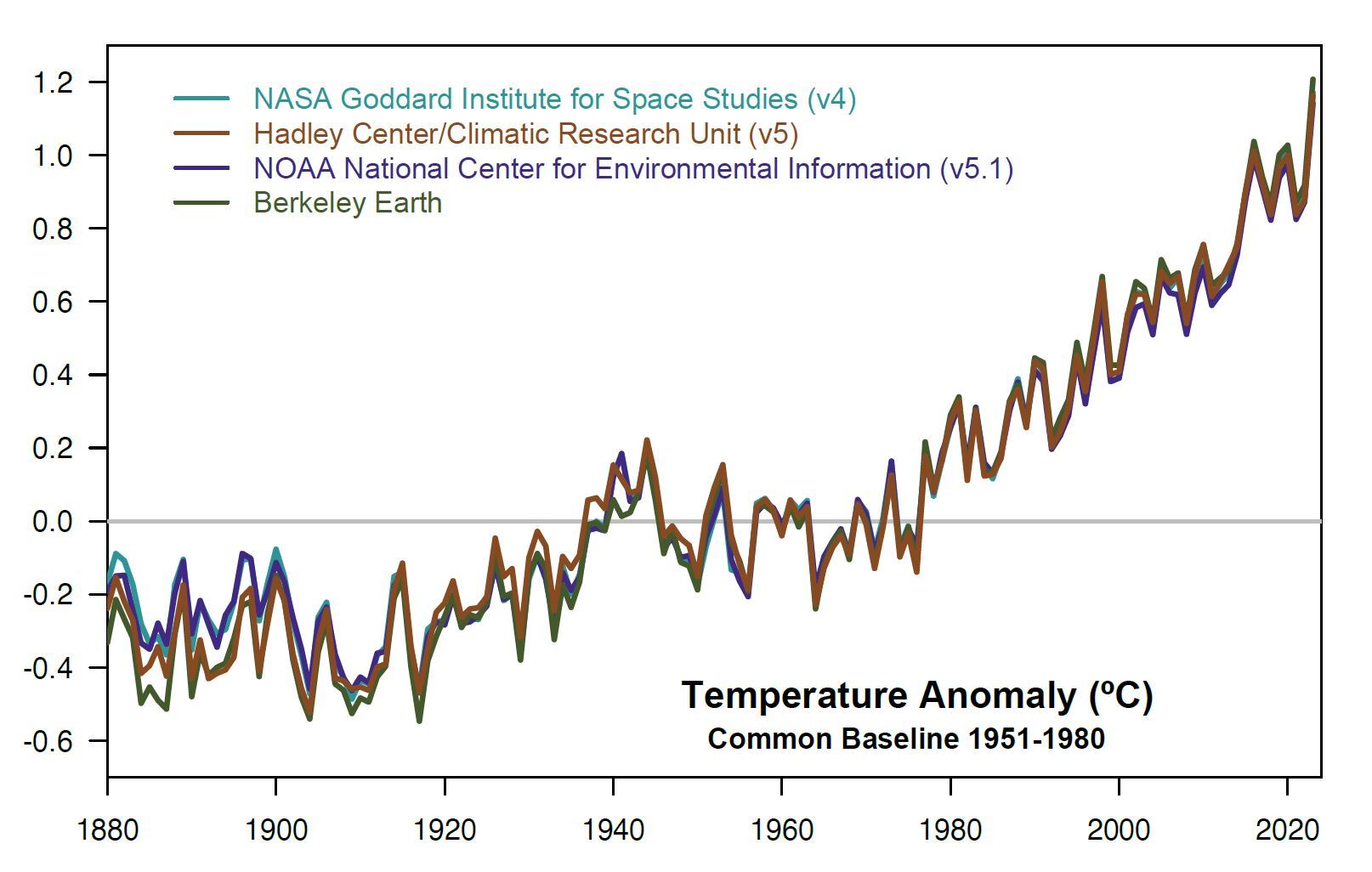

- Climate change: Scientific research is being conducted to understand the causes and impacts of climate change, as well as to develop solutions for mitigating its effects. This includes research on renewable energy technologies, carbon capture and storage, and sustainable land use practices.

- Neuroscience: Scientists are conducting research to understand the workings of the brain and the nervous system, with the goal of developing new treatments for neurological disorders such as Alzheimer’s disease and Parkinson’s disease.

- Artificial intelligence: Researchers are working to develop new algorithms and technologies to improve the capabilities of artificial intelligence systems. This includes research on machine learning, computer vision, and natural language processing.

- Space exploration: Scientific research is being conducted to explore the cosmos and learn more about the origins of the universe. This includes research on exoplanets, black holes, and the search for extraterrestrial life.

When to use Scientific Research

Some specific situations where scientific research may be particularly useful include:

- Solving problems: Scientific research can be used to investigate practical problems or address real-world challenges, such as identifying the causes of a disease so that effective treatments can be developed.

- Decision-making: Scientific research can provide evidence-based information to inform decision-making. For example, policymakers may use scientific research to evaluate the effectiveness of different policy options or to make decisions about public health and safety.

- Innovation: Scientific research can be used to develop new technologies, products, and processes. For example, research on materials science can lead to the development of new materials with unique properties that can be used in a range of applications.

- Knowledge creation: Scientific research is an important way of generating new knowledge and advancing our understanding of the world around us. This can lead to new theories, insights, and discoveries that can benefit society.

Advantages of Scientific Research

There are many advantages of scientific research, including:

- Improved understanding: Scientific research allows us to gain a deeper understanding of the world around us, from the smallest subatomic particles to the largest celestial bodies.

- Evidence-based decision making: Scientific research provides evidence-based information that can inform decision-making in many fields, from public policy to medicine.

- Technological advancements: Scientific research drives technological advancements in fields such as medicine, engineering, and materials science. These advancements can improve quality of life, increase efficiency, and reduce costs.

- New discoveries: Scientific research can lead to new discoveries and breakthroughs that can advance our knowledge in many fields. These discoveries can lead to new theories, technologies, and products.

- Economic benefits: Scientific research can stimulate economic growth by creating new industries and jobs, and by generating new technologies and products.

- Improved health outcomes: Scientific research can lead to the development of new medical treatments and technologies that can improve health outcomes and quality of life for people around the world.

- Increased innovation: Scientific research encourages innovation by promoting collaboration, creativity, and curiosity. This can lead to new and unexpected discoveries that can benefit society.

Limitations of Scientific Research

Scientific research has some limitations that researchers should be aware of. These limitations can include:

- Research design limitations: The design of a research study can impact the reliability and validity of the results. Poorly designed studies can lead to inaccurate or inconclusive results. Researchers must carefully consider the study design to ensure that it is appropriate for the research question and the population being studied.

- Sample size limitations: The size of the sample being studied can impact the generalizability of the results. Small samples may not be representative of the larger population, yield imprecise estimates, and lack the statistical power to detect real effects, all of which may lead to incorrect conclusions.

- Time and resource limitations: Scientific research can be costly and time-consuming. Researchers may not have the resources necessary to conduct a large-scale study, or may not have sufficient time to complete a study with appropriate controls and analysis.

- Ethical limitations: Certain types of research may raise ethical concerns, such as studies involving human or animal subjects. Ethical concerns may limit the scope of the research that can be conducted, or require additional protocols and procedures to ensure the safety and well-being of participants.

- Limitations of technology: Technology may limit the types of research that can be conducted, or the accuracy of the data collected. For example, certain types of research may require advanced technology that is not yet available, or may be limited by the accuracy of current measurement tools.

- Limitations of existing knowledge: Existing knowledge may limit the types of research that can be conducted. For example, if there is limited knowledge in a particular field, it may be difficult to design a study that can provide meaningful results.
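The sample-size limitation can be made concrete with a small simulation. The idea is to draw many hypothetical studies of size n from the same population and see how much their sample means vary. The population (mean 100, standard deviation 15) and the helper `spread_of_sample_means` are assumptions for illustration. The theoretical reference value is the standard error of the mean, σ/√n.

```python
import random
from statistics import stdev


def spread_of_sample_means(pop_mean, pop_sd, n, trials=1000, seed=None):
    """Simulate `trials` studies of size n; return the SD of their sample means.

    This empirical spread approximates the standard error of the mean,
    which theory puts at pop_sd / sqrt(n).
    """
    rng = random.Random(seed)
    sample_means = []
    for _ in range(trials):
        sample = [rng.gauss(pop_mean, pop_sd) for _ in range(n)]
        sample_means.append(sum(sample) / n)
    return stdev(sample_means)


# Hypothetical population: mean 100, standard deviation 15.
for n in (5, 50, 500):
    simulated = spread_of_sample_means(100, 15, n, seed=n)
    print(f"n = {n:>3}: simulated SE = {simulated:.2f}, theory = {15 / n ** 0.5:.2f}")
```

The simulated spread tracks σ/√n. A tenfold increase in sample size cuts the variability of the estimate by a factor of about √10 ≈ 3.16, which is why small studies can land far from the true value.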

About the author

Muhammad Hassan

Researcher, Academic Writer, Web developer

Research methods--quantitative, qualitative, and more: overview.

- Quantitative Research

- Qualitative Research

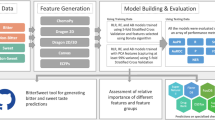

- Data Science Methods (Machine Learning, AI, Big Data)

- Text Mining and Computational Text Analysis

- Evidence Synthesis/Systematic Reviews

- Get Data, Get Help!

About Research Methods

This guide provides an overview of research methods, how to choose and use them, and supports and resources at UC Berkeley.

As Patten and Newhart note in the book Understanding Research Methods, "Research methods are the building blocks of the scientific enterprise. They are the 'how' for building systematic knowledge. The accumulation of knowledge through research is by its nature a collective endeavor. Each well-designed study provides evidence that may support, amend, refute, or deepen the understanding of existing knowledge...Decisions are important throughout the practice of research and are designed to help researchers collect evidence that includes the full spectrum of the phenomenon under study, to maintain logical rules, and to mitigate or account for possible sources of bias. In many ways, learning research methods is learning how to see and make these decisions."

The choice of methods varies by discipline, by the kind of phenomenon being studied and the data being used to study it, by the technology available, and more. This guide is an introduction, but if you don't see what you need here, always contact your subject librarian, and/or take a look to see if there's a library research guide that will answer your question.

Suggestions for changes and additions to this guide are welcome!

START HERE: SAGE Research Methods

Without question, the most comprehensive resource available from the library is SAGE Research Methods. An online guide to this one-stop shopping collection is available, and some helpful links are below:

- SAGE Research Methods

- Little Green Books (Quantitative Methods)

- Little Blue Books (Qualitative Methods)

- Dictionaries and Encyclopedias

- Case studies of real research projects

- Sample datasets for hands-on practice

- Streaming video--see methods come to life

- Methodspace: a community for researchers

- SAGE Research Methods Course Mapping

Library Data Services at UC Berkeley

Library Data Services Program and Digital Scholarship Services

The LDSP offers a variety of services and tools. From this link, check out pages for each of the following topics: discovering data, managing data, collecting data, GIS data, text data mining, publishing data, digital scholarship, open science, and the Research Data Management Program.

Be sure also to check out the visual guide to where to seek assistance on campus with any research question you may have!

Library GIS Services

Other Data Services at Berkeley

- D-Lab: Supports Berkeley faculty, staff, and graduate students with research in data-intensive social science, including a wide range of training and workshop offerings.

- Dryad: A simple self-service tool for researchers to use in publishing their datasets. It provides tools for the effective publication of and access to research data.

- Geospatial Innovation Facility (GIF): Provides leadership and training across a broad array of integrated mapping technologies on campus.

- Research Data Management: A UC Berkeley guide and consulting service for research data management issues.

General Research Methods Resources

Here are some general resources for assistance:

- Assistance from ICPSR (must create an account to access): Getting Help with Data, and Resources for Students

- Wiley Stats Ref for background information on statistics topics

- Survey Documentation and Analysis (SDA): a program for easy web-based analysis of survey data.

Consultants

- D-Lab/Data Science Discovery Consultants: Request help with your research project from peer consultants.

- Research data (RDM) consulting: Meet with RDM consultants before designing the data security, storage, and sharing aspects of your qualitative project.

- Statistics Department Consulting Services: A service in which advanced graduate students, under faculty supervision, are available to consult during specified hours in the Fall and Spring semesters.

Related Resources

- IRB / CPHS: Qualitative research projects with human subjects often require that you go through an ethics review.

- OURS (Office of Undergraduate Research and Scholarships): OURS supports undergraduates who want to embark on research projects and assistantships. In particular, check out their "Getting Started in Research" workshops.

- Sponsored Projects: Sponsored Projects works with researchers applying for major external grants.

- Last Updated: Apr 3, 2023 3:14 PM

- URL: https://guides.lib.berkeley.edu/researchmethods

ScienceDaily features breaking news about the latest discoveries in science, health, the environment, technology, and more -- from leading universities, scientific journals, and research organizations.

Visitors can browse more than 500 individual topics, grouped into 12 main sections (listed under the top navigational menu), covering: the medical sciences and health; physical sciences and technology; biological sciences and the environment; and social sciences, business and education. Headlines and summaries of relevant news stories are provided on each topic page.

Stories are posted daily, selected from press materials provided by hundreds of sources from around the world. Links to sources and relevant journal citations (where available) are included at the end of each post.

Chapter 1 Science and Scientific Research

What is research? Depending on who you ask, you will likely get very different answers to this seemingly innocuous question. Some people will say that they routinely research different online websites to find the best place to buy goods or services they want. Television news channels supposedly conduct research in the form of viewer polls on topics of public interest such as forthcoming elections or government-funded projects. Undergraduate students research the Internet to find the information they need to complete assigned projects or term papers. Graduate students working on research projects for a professor may see research as collecting or analyzing data related to their project. Businesses and consultants research different potential solutions to remedy organizational problems such as a supply chain bottleneck or to identify customer purchase patterns. However, none of the above can be considered “scientific research” unless: (1) it contributes to a body of science, and (2) it follows the scientific method. This chapter will examine what these terms mean.

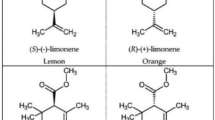

What is science? To some, science refers to difficult high school or college-level courses such as physics, chemistry, and biology meant only for the brightest students. To others, science is a craft practiced by scientists in white coats using specialized equipment in their laboratories. Etymologically, the word “science” is derived from the Latin word scientia meaning knowledge. Science refers to a systematic and organized body of knowledge in any area of inquiry that is acquired using “the scientific method” (the scientific method is described further below). Science can be grouped into two broad categories: natural science and social science. Natural science is the science of naturally occurring objects or phenomena, such as light, objects, matter, earth, celestial bodies, or the human body. Natural sciences can be further classified into physical sciences, earth sciences, life sciences, and others. Physical sciences consist of disciplines such as physics (the science of physical objects), chemistry (the science of matter), and astronomy (the science of celestial objects). Earth sciences consist of disciplines such as geology (the science of the earth). Life sciences include disciplines such as biology (the science of living organisms) and botany (the science of plants). In contrast, social science is the science of people or collections of people, such as groups, firms, societies, or economies, and their individual or collective behaviors. Social sciences can be classified into disciplines such as psychology (the science of human behaviors), sociology (the science of social groups), and economics (the science of firms, markets, and economies).

The natural sciences are different from the social sciences in several respects. The natural sciences are very precise, accurate, deterministic, and independent of the person making the scientific observations. For instance, a scientific experiment in physics, such as measuring the speed of sound through a certain medium or the refractive index of water, should yield the same result, within measurement error, irrespective of the time or place of the experiment, or the person conducting the experiment. If two students conducting the same physics experiment obtain two different values of these physical properties, then it generally means that one or both of those students must be in error. However, the same cannot be said for the social sciences, which tend to be less accurate, deterministic, or unambiguous. For instance, if you measure a person’s happiness using a hypothetical instrument, you may find that the same person is happier or less happy (or sad) on different days and sometimes at different times on the same day. One’s happiness may vary depending on the news that person received that day or on the events that transpired earlier during that day. Furthermore, there is not a single instrument or metric that can accurately measure a person’s happiness. Hence, one instrument may calibrate a person as being “more happy” while a second instrument may find that the same person is “less happy” at the same instant in time. In other words, there is a high degree of measurement error in the social sciences, and there is considerable uncertainty and little agreement on social science policy decisions. For instance, you will not find many disagreements among natural scientists on the speed of light or the speed of the earth around the sun, but you will find numerous disagreements among social scientists on how to solve a social problem, such as reducing global terrorism or rescuing an economy from a recession. Any student studying the social sciences must be cognizant of and comfortable with handling the higher levels of ambiguity, uncertainty, and error that come with such sciences; these merely reflect the high variability of social objects.

Sciences can also be classified based on their purpose. Basic sciences, also called pure sciences, are those that explain the most basic objects and forces, the relationships between them, and the laws governing them. Examples include physics, mathematics, and biology. Applied sciences, also called practical sciences, apply scientific knowledge from basic sciences in a physical environment. For instance, engineering is an applied science that applies the laws of physics and chemistry for practical applications such as building stronger bridges or fuel-efficient combustion engines, while medicine is an applied science that applies the laws of biology to treat human ailments. Both basic and applied sciences are required for human development. However, applied sciences cannot stand on their own; they rely on basic sciences for their progress. Industry and private enterprises tend to focus more on applied sciences given their practical value, while universities study both basic and applied sciences.

Scientific Knowledge

The purpose of science is to create scientific knowledge. Scientific knowledge refers to a generalized body of laws and theories, acquired using the scientific method, that explain a phenomenon or behavior of interest. Laws are observed patterns of phenomena or behaviors, while theories are systematic explanations of the underlying phenomenon or behavior. For instance, in physics, Newton’s laws of motion describe the following:

- What happens to an object at rest or in motion when no net force acts on it (Newton’s First Law).
- How much force is needed to accelerate a stationary object or stop a moving one (Newton’s Second Law).
- How two interacting objects, for example in a collision, exert equal and opposite forces on each other (Newton’s Third Law).

Collectively, the three laws constitute the basis of classical mechanics, a theory of moving objects. Likewise, the theory of optics explains the properties of light and how it behaves in different media. Electromagnetic theory explains the properties of electricity and magnetism and how to generate electricity. Quantum mechanics explains the properties of subatomic particles, and thermodynamics explains the properties of energy and mechanical work. An introductory college-level physics textbook will likely contain separate chapters devoted to each of these theories. Similar theories are also available in the social sciences. For instance, cognitive dissonance theory in psychology explains how people react when their observations of an event differ from what they expected of that event. General deterrence theory explains why some people engage in improper or criminal behaviors, such as illegally downloading music or committing software piracy. The theory of planned behavior explains how people make conscious, reasoned choices in their everyday lives.

The goal of scientific research is to discover laws and postulate theories that can explain natural or social phenomena, or in other words, build scientific knowledge. It is important to understand that this knowledge may be imperfect or even quite far from the truth. Sometimes, there may not be a single universal truth, but rather an equilibrium of “multiple truths.” We must understand that the theories, upon which scientific knowledge is based, are only explanations of a particular phenomenon, as suggested by a scientist. As such, there may be good or poor explanations, depending on the extent to which those explanations fit well with reality, and consequently, there may be good or poor theories. The progress of science is marked by our progression over time from poorer theories to better theories, through better observations using more accurate instruments and more informed logical reasoning.

We arrive at scientific laws or theories through a process of logic and evidence. Logic (theory) and evidence (observations) are the two, and only two, pillars upon which scientific knowledge is based. In science, theories and observations are interrelated and cannot exist without each other. Theories provide meaning and significance to what we observe, and observations help validate or refine existing theory or construct new theory. Any other means of knowledge acquisition, such as faith or authority, cannot be considered science.

Scientific Research

Given that theories and observations are the two pillars of science, scientific research operates at two levels: a theoretical level and an empirical level. The theoretical level is concerned with developing abstract concepts about a natural or social phenomenon and relationships between those concepts (i.e., build “theories”), while the empirical level is concerned with testing the theoretical concepts and relationships to see how well they reflect our observations of reality, with the goal of ultimately building better theories. Over time, a theory becomes more and more refined (i.e., fits the observed reality better), and the science gains maturity. Scientific research involves continually moving back and forth between theory and observations. Both theory and observations are essential components of scientific research. For instance, relying solely on observations for making inferences and ignoring theory is not considered valid scientific research.

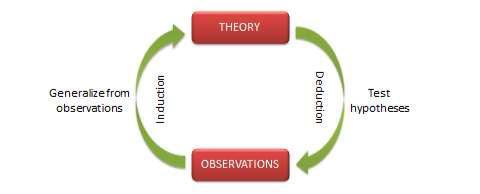

Depending on a researcher’s training and interest, scientific inquiry may take one of two possible forms: inductive or deductive. In inductive research, the goal of a researcher is to infer theoretical concepts and patterns from observed data. In deductive research, the goal of the researcher is to test concepts and patterns known from theory using new empirical data. Hence, inductive research is also called theory-building research, and deductive research is theory-testing research. Note here that the goal of theory-testing is not just to test a theory, but possibly to refine, improve, and extend it. Figure 1.1 depicts the complementary nature of inductive and deductive research. Note that inductive and deductive research are two halves of the research cycle that constantly iterates between theory and observations. You cannot do inductive or deductive research if you are not familiar with both the theory and data components of research. Naturally, a complete researcher is one who can traverse the entire research cycle and can handle both inductive and deductive research.

It is important to understand that theory-building (inductive research) and theory-testing (deductive research) are both critical for the advancement of science. Elegant theories are not valuable if they do not match reality. Likewise, mountains of data are useless until they can contribute to the construction of meaningful theories. Rather than viewing these two processes in a circular relationship, as shown in Figure 1.1, it may be better to view them as a helix, with each iteration between theory and data contributing to better explanations of the phenomenon of interest and better theories. Though both inductive and deductive research are important for the advancement of science, inductive (theory-building) research appears to be more valuable when there are few prior theories or explanations. Deductive (theory-testing) research is more productive when there are many competing theories of the same phenomenon and researchers want to know which theory works best and under what circumstances.

Figure 1.1. The Cycle of Research

Theory building and theory testing are particularly difficult in the social sciences, given the imprecise nature of the theoretical concepts, inadequate tools to measure them, and the presence of many unaccounted factors that can also influence the phenomenon of interest. It is also very difficult to refute theories that do not work. For instance, Karl Marx’s theory of communism as an effective means of economic production endured for decades before it was finally discredited as being inferior to capitalism in promoting economic growth and social welfare. Erstwhile communist economies like the Soviet Union and China eventually moved toward more capitalistic economies characterized by profit-maximizing private enterprises. However, the collapse of the mortgage and financial industries in the United States in 2007–2008 demonstrates that capitalism also has its flaws and is not as effective in fostering economic growth and social welfare as previously presumed. Unlike theories in the natural sciences, social science theories are rarely perfect, which provides numerous opportunities for researchers to improve those theories or build their own alternative theories.

Conducting scientific research, therefore, requires two sets of skills, theoretical and methodological, needed to operate at the theoretical and empirical levels, respectively. Methodological skills (“know-how”) are relatively standard, invariant across disciplines, and easily acquired through doctoral programs. However, theoretical skills (“know-what”) are considerably harder to master and require years of observation and reflection. They are tacit skills that cannot be “taught” but are instead learned through experience. All of the greatest scientists in the history of mankind, such as Galileo, Newton, Einstein, Niels Bohr, Adam Smith, Charles Darwin, and Herbert Simon, were master theoreticians, and they are remembered for the theories they postulated that transformed the course of science. Methodological skills are needed to be an ordinary researcher, but theoretical skills are needed to be an extraordinary researcher!

Scientific Method

In the preceding sections, we described science as knowledge acquired through a scientific method. So what exactly is the “scientific method”? Scientific method refers to a standardized set of techniques for building scientific knowledge, such as how to make valid observations, how to interpret results, and how to generalize those results. The scientific method allows researchers to independently and impartially test preexisting theories and prior findings, and subject them to open debate, modifications, or enhancements. The scientific method must satisfy four characteristics:

- Replicability: Others should be able to independently replicate or repeat a scientific study and obtain similar, if not identical, results.

- Precision: Theoretical concepts, which are often hard to measure, must be defined with such precision that others can use those definitions to measure those concepts and test that theory.

- Falsifiability: A theory must be stated in a way that it can be disproven. Theories that cannot be tested or falsified are not scientific theories, and any such knowledge is not scientific knowledge. A theory that is specified in imprecise terms or whose concepts are not accurately measurable cannot be tested, and is therefore not scientific. Sigmund Freud’s ideas on psychoanalysis fall into this category and are therefore not considered a “theory,” even though psychoanalysis may have practical utility in treating certain types of ailments.

- Parsimony: When there are multiple explanations of a phenomenon, scientists must always accept the simplest or logically most economical explanation. This concept is called parsimony or “Occam’s razor.” Parsimony prevents scientists from pursuing overly complex or outlandish theories with an endless number of concepts and relationships that may explain a little bit of everything but nothing in particular.

Any branch of inquiry that does not allow the scientific method to test its basic laws or theories cannot be called “science.” For instance, theology (the study of religion) is not science because theological ideas (such as the presence of God) cannot be tested by independent observers using a replicable, precise, falsifiable, and parsimonious method. Similarly, arts, music, literature, humanities, and law are also not considered science, even though they are creative and worthwhile endeavors in their own right.

The scientific method, as applied to social sciences, includes a variety of research approaches, tools, and techniques, such as qualitative and quantitative data, statistical analysis, experiments, field surveys, case research, and so forth. Most of this book is devoted to learning about these different methods. However, recognize that the scientific method operates primarily at the empirical level of research, i.e., how to make observations and analyze and interpret these observations. Very little of this method is directly pertinent to the theoretical level, which is really the more challenging part of scientific research.

Types of Scientific Research

Depending on the purpose of research, scientific research projects can be grouped into three types: exploratory, descriptive, and explanatory. Exploratory research is often conducted in new areas of inquiry, where the goals of the research are: (1) to scope out the magnitude or extent of a particular phenomenon, problem, or behavior, (2) to generate some initial ideas (or “hunches”) about that phenomenon, or (3) to test the feasibility of undertaking a more extensive study regarding that phenomenon. For instance, suppose the citizens of a country are generally dissatisfied with governmental policies during an economic recession. Exploratory research may be directed at three goals. It may measure the extent of citizens’ dissatisfaction. It may examine how that dissatisfaction is manifested, such as in the frequency of public protests. It may also look at the presumed causes of the dissatisfaction, such as ineffective government policies in dealing with inflation, interest rates, unemployment, or higher taxes. Such research may include examining publicly reported figures, such as estimates of gross domestic product (GDP), unemployment, and the consumer price index. These figures may be archived by third-party sources, obtained through interviews of experts, eminent economists, or key government officials, or derived from studying historical examples of dealing with similar problems. This research may not lead to a very accurate understanding of the target problem, but it may be worthwhile in scoping out the nature and extent of the problem and serve as a useful precursor to more in-depth research.

Descriptive research is directed at making careful observations and detailed documentation of a phenomenon of interest. These observations must be based on the scientific method (i.e., must be replicable, precise, etc.), and are therefore more reliable than casual observations by untrained people. Examples of descriptive research include demographic statistics tabulated by the United States Census Bureau and employment statistics tabulated by the Bureau of Labor Statistics. These agencies use the same or similar instruments to estimate employment by sector or population growth by ethnicity across multiple employment surveys or censuses. If any changes are made to the measuring instruments, estimates are provided with and without the changed instrumentation so that readers can make a fair before-and-after comparison of population or employment trends. Other descriptive research may include ethnographic reports chronicling gang activities among adolescent youth in urban populations, the persistence or evolution of religious, cultural, or ethnic practices in select communities, and the role of technologies such as Twitter and instant messaging in the spread of democracy movements in Middle Eastern countries.

Explanatory research seeks explanations of observed phenomena, problems, or behaviors. While descriptive research examines the what, where, and when of a phenomenon, explanatory research seeks answers to why and how types of questions. It attempts to “connect the dots” in research by identifying causal factors and outcomes of the target phenomenon. Examples include understanding the reasons behind adolescent crime or gang violence, with the goal of prescribing strategies to overcome such societal ailments. Most academic or doctoral research belongs to the explanatory category, though some amount of exploratory and/or descriptive research may also be needed during the initial phases of academic research. Seeking explanations for observed events requires strong theoretical and interpretation skills, along with intuition, insights, and personal experience. Those who can do it well are also the most prized scientists in their disciplines.

History of Scientific Thought

Before closing this chapter, it may be interesting to go back in history, see how science has evolved over time, and identify the key scientific minds in this evolution. Although instances of scientific progress have been documented over many centuries, the terms “science,” “scientists,” and the “scientific method” acquired their modern meanings only in the 19th century. Prior to this time, science was viewed as a part of philosophy and coexisted with other branches of philosophy such as logic, metaphysics, ethics, and aesthetics, although the boundaries between some of these branches were blurred.

In the earliest days of human inquiry, knowledge was usually recognized in terms of theological precepts based on faith. This was challenged by Greek philosophers such as Socrates, Plato, and Aristotle during the 5th and 4th centuries BC. They suggested that the fundamental nature of being and the world can be understood more accurately through a process of systematic logical reasoning called rationalism. In particular, Aristotle’s classic work Metaphysics separated theology (the study of gods) from ontology (the study of being and existence) and universal science (the study of first principles, upon which logic is based). The title derives from the Greek for “after the Physics,” though it is often glossed as “beyond physical [existence].” Rationalism (not to be confused with “rationality”) views reason as the source of knowledge or justification. It suggests that the criterion of truth is not sensory but intellectual and deductive, often derived from a set of first principles or axioms (such as Aristotle’s “law of non-contradiction”).

The next major shift in scientific thought occurred around the turn of the 17th century, when British philosopher Francis Bacon (1561-1626) suggested that knowledge can only be derived from observations in the real world. Based on this premise, Bacon emphasized knowledge acquisition as an empirical activity (rather than as a reasoning activity) and developed empiricism as an influential branch of philosophy. Bacon’s works led to the popularization of inductive methods of scientific inquiry. They also led to the development of the “scientific method” (originally called the “Baconian method”), consisting of systematic observation, measurement, and experimentation. They may even have sown the seeds of atheism, or the rejection of theological precepts as “unobservable.”

Empiricism continued to clash with rationalism throughout the 17th and 18th centuries, as philosophers sought the most effective way of gaining valid knowledge. French philosopher René Descartes sided with the rationalists, while British philosophers John Locke and David Hume sided with the empiricists. Other scientists, such as Galileo Galilei and Sir Isaac Newton, attempted to fuse the two ideas into natural philosophy (the philosophy of nature). Natural philosophy focused specifically on understanding nature and the physical universe and is considered the precursor of the natural sciences. Galileo (1564-1642) was perhaps the first to state that the laws of nature are mathematical, and he contributed to the field of astronomy through an innovative combination of experimentation and mathematics.

In the 18th century, German philosopher Immanuel Kant sought to resolve the dispute between empiricism and rationalism in his book Critique of Pure Reason. He argued that experience is purely subjective, and that processing it with pure reason, without first examining the subjective nature of experience, leads to theoretical illusions. Kant’s ideas led to the development of German idealism, which inspired the later development of interpretive techniques such as phenomenology, hermeneutics, and critical social theory.

In the 19th century, French philosopher Auguste Comte (1798–1857), founder of the discipline of sociology, attempted to blend rationalism and empiricism in a new doctrine called positivism. He suggested that theory and observations have a circular dependence on each other. While theories may be created via reasoning, they are only authentic if they can be verified through observations. The emphasis on verification started the separation of modern science from philosophy and metaphysics. It also furthered the development of the “scientific method” as the primary means of validating scientific claims. Emile Durkheim expanded Comte’s ideas into sociological positivism (positivism as a foundation for social research). The logical positivists, who drew on the work of Ludwig Wittgenstein, expanded them further.

In the early 20th century, strong accounts of positivism were rejected by interpretive sociologists (antipositivists) belonging to the German idealism school of thought. Positivism was typically equated with quantitative research methods, such as experiments and surveys, and was often practiced without any explicit philosophical commitments. Antipositivism, by contrast, employed qualitative methods such as unstructured interviews and participant observation. Even practitioners of positivism acknowledged potential problems of observer bias and structural limitations in positivist inquiry. One example is American sociologist Paul Lazarsfeld, who pioneered large-scale survey research and statistical techniques for analyzing survey data. In response, antipositivists emphasized that social actions must be studied through interpretive means, based on an understanding of the meaning and purpose that individuals attach to their personal actions. This view inspired Georg Simmel’s work on symbolic interactionism, Max Weber’s work on ideal types, and Edmund Husserl’s work on phenomenology.

In the mid-to-late 20th century, both positivist and antipositivist schools of thought were subjected to criticisms and modifications. British philosopher Sir Karl Popper suggested that human knowledge is based not on unchallengeable, rock-solid foundations, but rather on a set of tentative conjectures that can never be proven conclusively, but only disproven. Empirical evidence is the basis for disproving these conjectures or “theories.” This metatheoretical stance is called postpositivism (or postempiricism). It amends positivism by suggesting that it is impossible to verify the truth, although it is possible to reject false beliefs. It still retains the positivist notion of an objective truth and its emphasis on the scientific method.

Likewise, antipositivists have been criticized for trying only to understand society rather than critiquing and changing it for the better. The roots of this thought lie in Das Kapital, written by German philosopher Karl Marx (with later volumes edited by Friedrich Engels). The work critiqued capitalistic societies as socially inequitable and inefficient, and it recommended resolving this inequity through class conflict and proletarian revolutions. Marxism inspired social revolutions in countries such as Germany, Italy, Russia, and China, but it generally failed to accomplish the social equality to which it aspired. Critical research (also called critical theory) was propounded by Max Horkheimer and Jürgen Habermas in the 20th century. It retains similar ideas of critiquing and resolving social inequality, and it adds that people can and should consciously act to change their social and economic circumstances. Their ability to do so, however, is constrained by various forms of social, cultural, and political domination. Critical research attempts to uncover and critique the restrictive and alienating conditions of the status quo by analyzing the oppositions, conflicts, and contradictions in contemporary society. It seeks to eliminate the causes of alienation and domination (i.e., to emancipate the oppressed class). More on these different research philosophies and approaches will be covered in future chapters of this book.

- Social Science Research: Principles, Methods, and Practices. Authored by : Anol Bhattacherjee. Provided by : University of South Florida. Located at : http://scholarcommons.usf.edu/oa_textbooks/3/ . License : CC BY-NC-SA: Attribution-NonCommercial-ShareAlike


Research Process

- Select a Topic

- Find Background Info

- Focus Topic

- List Keywords

- Search for Sources

- Evaluate & Integrate Sources

- Cite and Track Sources

What is Scientific Research?


Thank you to Julie Miller, reference intern, for helping to create this page.

Some people use the term research loosely, for example:

- People will say they are researching different online websites to find the best place to buy a new appliance or locate a lawn care service.

- TV news programs may talk about conducting research when they conduct a viewer poll on a current-event topic, such as an upcoming election.

- Undergraduate students working on a term paper or project may say they are researching the internet to find information.

- Private sector companies may say they are conducting research to find a solution for a supply chain holdup.

However, none of the above is considered “scientific research” unless:

- The research contributes to a body of science by providing new information through ethical study design or

- The research follows the scientific method, an iterative process of observation and inquiry.

The Scientific Method

- Make an observation: notice a phenomenon in your life or in society or find a gap in the already published literature.

- Ask a question about what you have observed.

- Hypothesize about a potential answer or explanation.

- Make predictions about what you would observe if your hypothesis is correct.

- Design an experiment or study that will test your prediction.

- Test the prediction by conducting an experiment or study; report the outcomes of your study.

- Iterate! Was your prediction correct? Was the outcome unexpected? Did it lead to new observations?

The scientific method is not separate from the Research Process described in the rest of this guide; in fact, the Research Process is directly related to the observation stage of the scientific method. Understanding what other scientists and researchers have already studied will help you focus your area of study and build on their knowledge.

Designing your experiment or study is important for both natural and social scientists. Sage Research Methods (SRM) has an excellent "Project Planner" that guides you through the basic stages of research design. SRM also has excellent explanations of qualitative and quantitative research methods for the social sciences.

For the natural sciences, Springer Nature Experiments and Protocol Exchange have guidance on quantitative research methods.

Books, journals, reference books, videos, podcasts, data-sets, and case studies on social science research methods.

Sage Research Methods includes over 2,000 books, reference books, journal articles, videos, datasets, and case studies on all aspects of social science research methodology. Browse the methods map or the list of methods to identify a social science method to pursue further. Includes a project planning tool and the "Which Stats Test" tool to identify the best statistical method for your project. Includes the notable "little green book" series (Quantitative Applications in the Social Sciences) and the "little blue book" series (Qualitative Research Methods).

Platform connecting researchers with protocols and methods.

Springer Nature Experiments has been designed to help users/researchers find and evaluate relevant protocols and methods across the whole Springer Nature protocols and methods portfolio using one search. This database includes:

- Nature Protocols

- Nature Reviews Methods Primers

- Nature Methods

- Springer Protocols

Open repository for sharing scientific research protocols. These protocols are posted directly on the Protocol Exchange by authors and are made freely available to the scientific community for use and comment.

Includes these topics:

- Biochemistry

- Biological techniques

- Chemical biology

- Chemical engineering

- Cheminformatics

- Climate science

- Computational biology and bioinformatics

- Drug discovery

- Electronics

- Energy sciences

- Environmental sciences

- Materials science

- Molecular biology

- Molecular medicine

- Neuroscience

- Organic chemistry

- Planetary science

Qualitative research is primarily exploratory. It is used to gain an understanding of underlying reasons, opinions, and motivations. Qualitative research is also used to uncover trends in thought and opinions and to dive deeper into a problem by studying an individual or a group.

Qualitative methods usually use unstructured or semi-structured techniques. The sample size is typically smaller than in quantitative research.

Example: interviews and focus groups.

Quantitative research is characterized by the gathering of data with the aim of testing a hypothesis. The data generated are numerical or, if not numerical, can be transformed into usable statistics.

Quantitative data collection methods are more structured than qualitative data collection methods and sample sizes are usually larger.

Example: survey
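Non-numerical survey answers can be turned into statistics by coding them as numbers. The Python below is a minimal sketch of one way to do this. It codes hypothetical five-point Likert responses as the integers 1 to 5 and then summarizes them. The coding scheme and the names `LIKERT`, `code_responses`, and `summarize` are assumptions for illustration, not a standard from the resources listed in this guide.

```python
from statistics import mean, median

# Hypothetical five-point Likert coding scheme (an illustrative assumption).
LIKERT = {
    "strongly disagree": 1,
    "disagree": 2,
    "neutral": 3,
    "agree": 4,
    "strongly agree": 5,
}


def code_responses(responses: list[str]) -> list[int]:
    """Convert text answers to numeric codes.
    Answers outside the coding scheme raise KeyError rather than being guessed."""
    return [LIKERT[r.strip().lower()] for r in responses]


def summarize(responses: list[str]) -> dict[str, float]:
    """Return the sample size, mean, and median of the coded responses."""
    scores = code_responses(responses)
    return {"n": len(scores), "mean": mean(scores), "median": median(scores)}


answers = ["Agree", "strongly agree", "Neutral", "agree"]  # hypothetical data
print(code_responses(answers))  # [4, 5, 3, 4]
print(summarize(answers))
```

Likert codes are ordinal, so some researchers prefer the median over the mean. The sketch reports both.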

Note: The above descriptions of qualitative and quantitative research apply mainly to research in the social sciences rather than the natural sciences, as most natural sciences rely on quantitative methods for their experiments.

Qualitative research approaches the world in its natural setting, in a way that reveals its particularities, rather than conducting studies in a controlled setting. It aims to understand, describe, and sometimes explain social phenomena in a number of different ways:

- Experiences of individuals or groups

- Interactions and communications

- Documents (texts, images, film, or sounds, and digital documents) or similar traces of experiences or interactions

Qualitative researchers seek to understand how people conceptualize the world around them, what they are doing, how they are doing it, or what is happening to them, in terms that are meaningful and that offer useful insights.

Qualitative researchers develop and refine concepts (or hypotheses, if they are used) in the process of research and of collecting data. Cases (their history and complexity) are an important context for understanding the issue that is studied. A major part of qualitative research is based on text and writing, from field notes and transcripts to descriptions and interpretations and finally to the presentation of the findings and of the research as a whole.



- Last Updated: Mar 1, 2024 1:02 PM

- URL: https://libguides.umflint.edu/research

Graduate Research, pp. 55–78

Principles of Scientific Research

- Robert V. Smith


Scientific research has provided knowledge and understanding that has freed humankind from the ignorance that once promoted fear, mysticism, superstition, and illness. Developments in science and scientific methods, however, did not come easily. Many of our ancestors had to face persecution, even death, from religious and political groups because they dared to advance the notion that knowledge and understanding could be gained through systematic study and practice. Today, the benefits of scientific research are understood. We appreciate the advances in the biological and physical sciences that allow the control of environment, the probing of the universe, and communications around the globe. We also appreciate the advances in biochemistry and molecular biology that have led to curative drugs, to genetic counseling, and to an unparalleled understanding of structure-function relationships in living organisms. We look hopefully to the development of life itself and, in concert with social-behavioral scientists, to the unraveling of the relationship between mind and brain. Despite the potential moral issues raised by the latter advances, the history of science gives us faith that knowledge and understanding can be advanced for the benefit of humanity.


... ever since the dawn of civilization, people have not been content to see events as unconnected and inexplicable. They have craved an understanding of the underlying order in the world.... Humanity’s deepest desire for knowledge is justification enough for our continuing quest. — Stephen Hawking


A. Agresti and B. Finlay, Statistical Methods for Social Scientists (San Francisco: Macmillan, 1986).

N. C. Barford, Experimental Measurements: Precision, Error and Truth, 2nd ed. (New York: Wiley, 1985).

W. I. B. Beveridge, The Art of Scientific Investigation (New York: Vintage Books, 1960).

G. E. Box, W. G. Hunter, and J. S. Hunter, Statistics for Experimenters: An Introduction to Design, Data Analysis and Model Building (New York: Wiley, 1978).

K. A. Brownlee, Statistical Theory and Methodology: In Science and Engineering (New York: Wiley, 1984).

W. G. Cochran and G. W. Snedecor, Statistical Methods, 7th ed. (Ames: Iowa State University Press, 1980).

D. R. Cox, Applied Statistics: Principles and Examples (New York: Chapman and Hall, 1981).

R. A. Fisher, Statistical Methods for Research Workers, 14th ed. (New York: Hafner, 1973).

M. Kendall and A. Stuart, The Advanced Theory of Statistics, 3 vols., 4th ed. (New York: Hafner, 1977).

R. E. Kirk, Experimental Design: Procedures for the Behavioral Sciences, 2nd ed. (Monterey, CA: Brooks/Cole, 1982).

R. Remington and M. A. Schork, Statistics with Applications to the Biological and Health Sciences, 2nd ed. (Englewood Cliffs, NJ: Prentice-Hall, 1985).

R. R. Sokal and F. J. Rohlf, Biometry: The Principles and Practice of Statistics in Biological Research, 2nd ed. (New York: Freeman, 1981).

J. W. Tukey, Exploratory Data Analysis (Reading, MA: Addison-Wesley, 1977).

P. R. Bevington, Data Reduction and Error Analysis for the Physical Sciences (New York: McGraw-Hill, 1969).

BMD: Biomedical Computer Programs, rev. ed., W. J. Dixon and M. B. Brown, eds. (Berkeley: University of California Press, 1983).

S. Brandt, Statistical and Computational Methods in Data Analysis, 2nd rev. ed. (Amsterdam: Elsevier, 1976).

N. H. Nie, C. H. Hull, J. G. Jenkins, K. Steinbrenner, and D. H. Bent, Statistical Package for the Social Sciences (SPSS), 2nd ed. (New York: McGraw-Hill, 1975).

T. A. Ryan, B. L. Joiner, and B. F. Ryan, Minitab Student Handbook (North Scituate, MA: Duxbury Press, 1976).

SYSTAT, Mainframe Statistics Package for Microcomputers (Evanston, IL: SYSTAT Inc., 1986).

P. B. Medawar, Advice to a Young Scientist (New York: Harper and Row, 1979).

J. Kitfield, Laureates—Linus Pauling, Northwest Orient 17 (1) (1986), pp. 37–39.

H. C. Brown, Adventures in research, Chemical and Engineering News 59 (14) (1981), pp. 24–29.

Rosalyn S. Yalow, Melange: Commencement 1988, The Chronicle of Higher Education 34 (39) (1988), p. B-3.

E. B. Wilson, An Introduction to Scientific Research (New York: McGraw-Hill, 1952).

W. Thomson (Lord Kelvin), Popular Lectures and Addresses by Sir William Thomson, 1891–1894 (New York: Macmillan, 1894).

R. W. Hamming, The unreasonable effectiveness of mathematics, American Mathematical Monthly 87 (2) (1980), pp. 81–90.

Commission on Physical Sciences, Mathematics and Resources, National Academy of Sciences, Improving the Treatment of Scientific and Engineering Data Through Education (Washington, DC: National Academy Press, 1986).

Stephen W. Hawking, A Brief History of Time (New York: Bantam Books, 1988).

A. H. Corwin, in Proceedings of the Robert A. Welch Conference on Chemical Research. XX. American Chemistry Bicentennial, W. O. Milligan, ed. (Houston, TX: Robert A. Welch Foundation, 1977), pp. 45–69.

M. Gardner, Aha! Insight (New York: Scientific American, 1978).

J. Jaynes, The Origin of Consciousness in the Breakdown of the Bicameral Mind (Boston: Houghton Mifflin, 1976).

W. W. Rostow, The Barbaric Counterrevolution: Cause and Cure (Austin: University of Texas Press, 1983).

E. A. Eschbach, Fostering creativity, PNL Profile Fall (1986), pp. 9–10; Battelle Pacific Northwest Laboratories, Document BN-FA 530, Updated 3–88, Creativity, discovery, invention and the put-down.

J. C. Sheehan, The Enchanted Ring: The Untold Story of Penicillin (Cambridge, MA: MIT Press, 1982).

C. W. Ceram, Gods, Graves, and Scholars, 2nd ed. (New York: Knopf, 1982).

Author information

Robert V. Smith, Pullman, Washington, USA


Copyright information

© 1990 Robert V. Smith

About this chapter

Cite this chapter.

Smith, R.V. (1990). Principles of Scientific Research. In: Graduate Research. Springer, Boston, MA. https://doi.org/10.1007/978-1-4899-7410-5_5


DOI: https://doi.org/10.1007/978-1-4899-7410-5_5

Publisher Name: Springer, Boston, MA

Print ISBN: 978-0-306-43465-5

Online ISBN: 978-1-4899-7410-5

eBook Packages: Springer Book Archive


Articles on Scientific research

Displaying 1 - 20 of 88 articles.

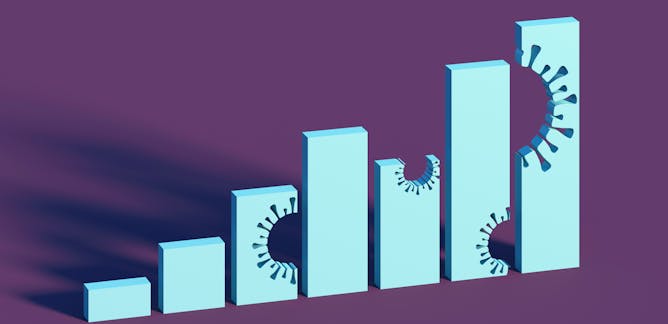

Early COVID-19 research is riddled with poor methods and low-quality results − a problem for science the pandemic worsened but didn’t create

Dennis M. Gorman, Texas A&M University

Netflix’s You Are What You Eat uses a twin study. Here’s why studying twins is so important for science

Nathan Kettlewell, University of Technology Sydney

Fact-bombing by experts doesn’t change hearts and minds. But good science communication can

Tom Carruthers, The University of Western Australia; Heather Bray, The University of Western Australia, and Matthew Nurse, Australian National University

Talking about science and technology has positive impacts on research and society

Ashley Rose Mehlenbacher, University of Waterloo; Donna Strickland, University of Waterloo, and Mary Wells, University of Waterloo

Tenacious curiosity in the lab can lead to a Nobel Prize – mRNA research exemplifies the unpredictable value of basic scientific research

André O. Hudson, Rochester Institute of Technology

Pigs with human brain cells and biological chips: how lab-grown hybrid lifeforms bamboozle scientific ethics

Julian Koplin, Monash University

When Greenland was green: Ancient soil from beneath a mile of ice offers warnings for the future

Paul Bierman, University of Vermont and Tammy Rittenour, Utah State University

10 reasons humans kill animals – and why we can’t avoid it

Benjamin Allen, University of Southern Queensland

Hurricanes push heat deeper into the ocean than scientists realized, boosting long-term ocean warming, new research shows

Noel Gutiérrez Brizuela, University of California, San Diego and Sally Warner, Brandeis University

Colonialism has shaped scientific plant collections around the world – here’s why that matters

Daniel Park, Purdue University

You shed DNA everywhere you go – trace samples in the water, sand and air are enough to identify who you are, raising ethical questions about privacy

Jenny Whilde, University of Florida and Jessica Alice Farrell, University of Florida

Nigeria needs to take science more seriously - an agenda for the new president

Oyewale Tomori, Nigerian Academy of Science

Two decades of stagnant funding have rendered Canada uncompetitive in biomedical research. Here’s why it matters, and how to fix it.

Stephen L Archer, Queen's University, Ontario

How tracking technology is transforming our understanding of animal behaviour

Louise Gentle, Nottingham Trent University

What the world would lose with the demise of Twitter: Valuable eyewitness accounts and raw data on human behavior, as well as a habitat for trolls

Anjana Susarla, Michigan State University

There are 8 years left to meet the UN Sustainable Development Goals, but is it enough time?

Rees Kassen, L’Université d’Ottawa/University of Ottawa and Ruth Morgan, UCL

‘Gain of function’ research can create experimental viruses. In light of COVID, it should be more strictly regulated – or banned

Colin D. Butler, Australian National University

By fact-checking Thoreau’s observations at Walden Pond, we showed how old diaries and specimens can inform modern research

Tara K. Miller, Boston University; Abe Miller-Rushing, National Park Service, and Richard B. Primack, Boston University

New ‘ethics guidance’ for top science journals aims to root out harmful research – but can it succeed?

Cordelia Fine, The University of Melbourne

Expanding Alzheimer’s research with primates could overcome the problem with treatments that show promise in mice but don’t help humans

Agnès Lacreuse, UMass Amherst; Allyson J. Bennett, University of Wisconsin-Madison, and Amanda M. Dettmer, Yale University

