Frequency Distribution | Tables, Types & Examples

Published on June 7, 2022 by Shaun Turney . Revised on June 21, 2023.

A frequency distribution describes the number of observations for each possible value of a variable. Frequency distributions are depicted using graphs and frequency tables.


The frequency of a value is the number of times it occurs in a dataset. A frequency distribution is the pattern of frequencies of a variable. It’s the number of times each possible value of a variable occurs in a dataset.

Types of frequency distributions

There are four types of frequency distributions:

- Ungrouped frequency distributions: You can use this type of frequency distribution for categorical variables.

- Grouped frequency distributions: You can use this type of frequency distribution for quantitative variables.

- Relative frequency distributions: You can use this type of frequency distribution for any type of variable when you’re more interested in comparing frequencies than the actual number of observations.

- Cumulative frequency distributions: You can use this type of frequency distribution for ordinal or quantitative variables when you want to understand how often observations fall below certain values.


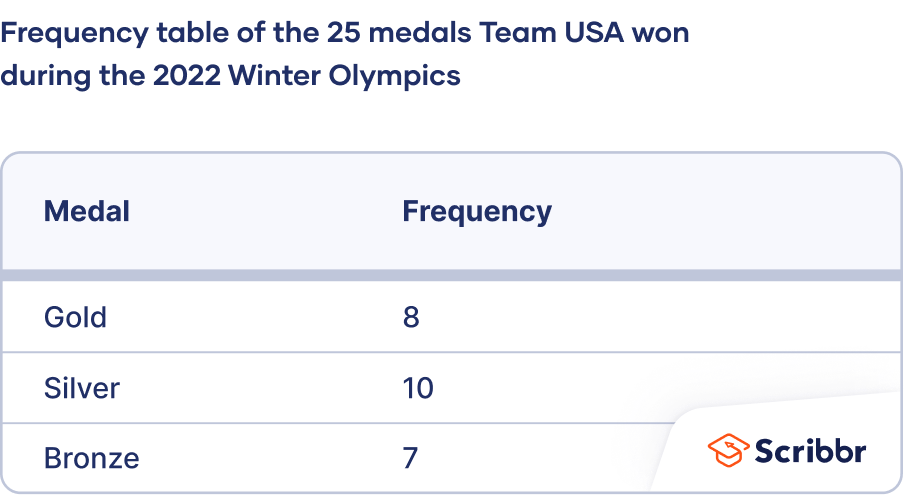

Frequency distributions are often displayed using frequency tables. A frequency table is an effective way to summarize or organize a dataset. It’s usually composed of two columns:

- The values or class intervals

- Their frequencies

The method for making a frequency table differs between the four types of frequency distributions. You can follow the guides below or use software such as Excel, SPSS, or R to make a frequency table.

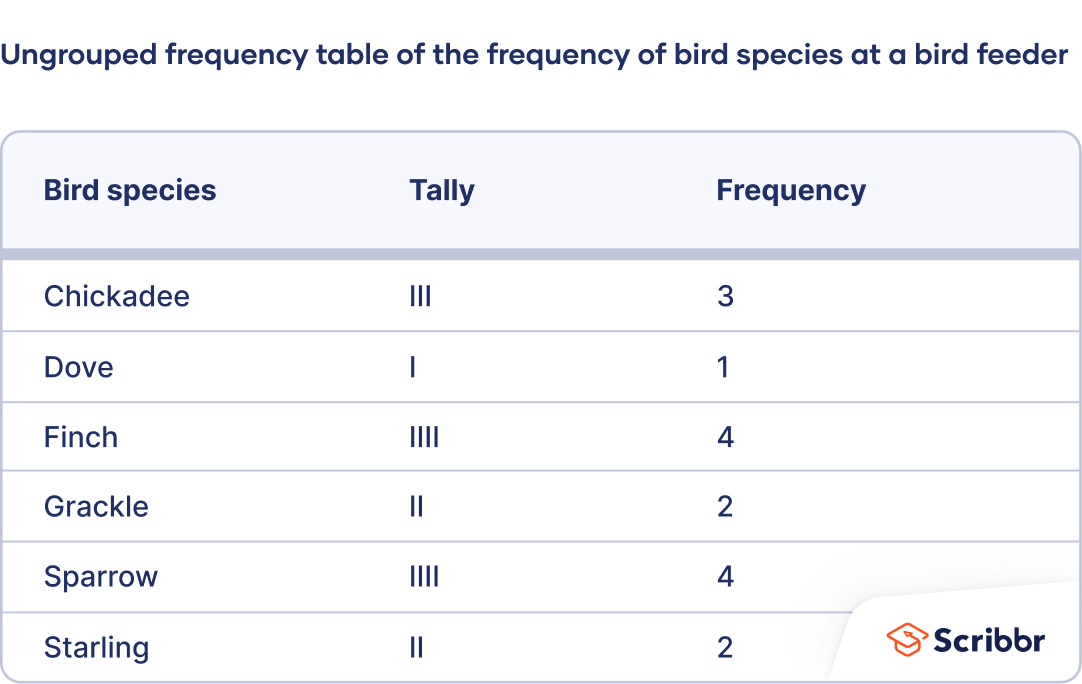

How to make an ungrouped frequency table

- For ordinal variables, the values should be ordered from smallest to largest in the table rows.

- For nominal variables, the values can be in any order in the table. You may wish to order them alphabetically or in some other logical order.

- Especially if your dataset is large, it may help to count the frequencies by tallying. Add a third column called “Tally.” As you read the observations, make a tick mark in the appropriate row of the tally column for each observation. Count the tally marks to determine the frequency.

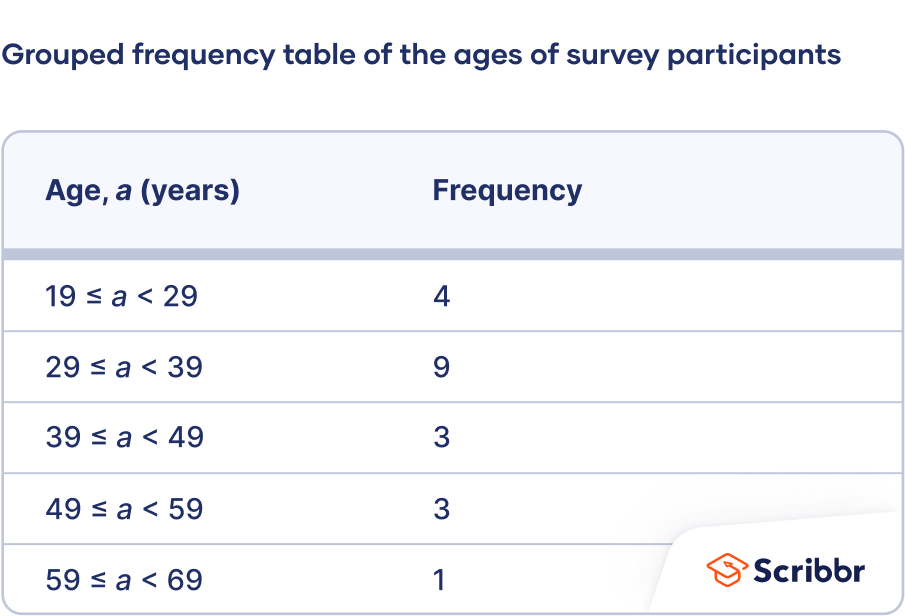

How to make a grouped frequency table

- Calculate the range. Subtract the lowest value in the dataset from the highest.

- Create a table with two columns and as many rows as there are class intervals. Label the first column using the variable name and label the second column “Frequency.” Enter the class intervals in the first column.

- Count the frequencies. The frequencies are the number of observations in each class interval. You can count by tallying if you find it helpful. Enter the frequencies in the second column of the table beside their corresponding class intervals.

Round the class interval width to 10.

The class intervals are 19 ≤ a < 29, 29 ≤ a < 39, 39 ≤ a < 49, 49 ≤ a < 59, and 59 ≤ a < 69.
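The counting step for a grouped table can be sketched in a few lines of Python. This is a minimal illustration, not a prescribed method: the function name `grouped_frequency` and the list of ages are hypothetical, and the classes are assumed to be left-closed (lower ≤ a < upper) with a starting value of 19 and a width of 10, as in the intervals above.

```python
from collections import Counter

def grouped_frequency(values, start, width, n_classes):
    """Count observations in left-closed classes [lower, lower + width)."""
    counts = Counter()
    for v in values:
        index = int((v - start) // width)  # which class the value falls into
        if 0 <= index < n_classes:
            counts[index] += 1
    table = []
    for i in range(n_classes):
        lower = start + i * width
        table.append((lower, lower + width, counts[i]))
    return table

# Hypothetical ages, invented for illustration only
ages = [19, 22, 25, 31, 34, 38, 41, 47, 52, 58, 60, 68]
table = grouped_frequency(ages, start=19, width=10, n_classes=5)
for lower, upper, freq in table:
    print(f"{lower} <= a < {upper}: {freq}")
```

Values outside the five classes are simply ignored in this sketch; in practice you would choose the classes so that every observation is covered.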

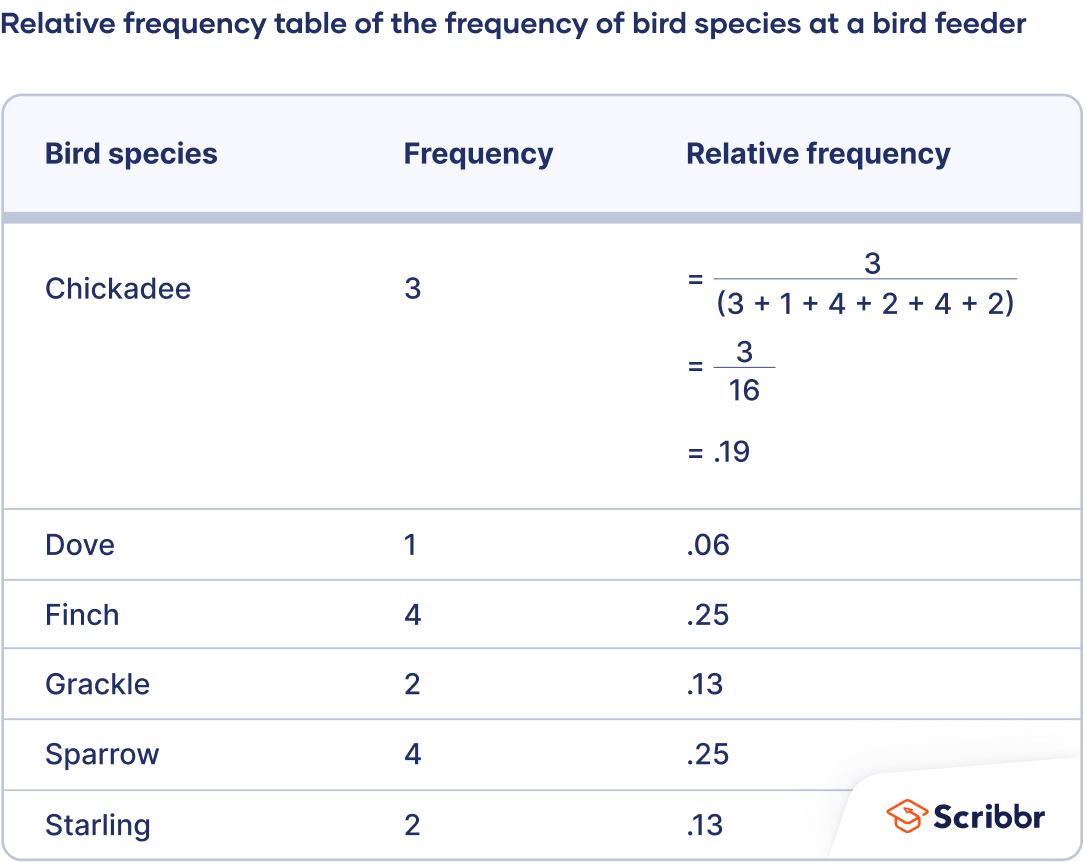

How to make a relative frequency table

- Create an ungrouped or grouped frequency table.

- Add a third column to the table for the relative frequencies. To calculate the relative frequencies, divide each frequency by the sample size. The sample size is the sum of the frequencies.
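As a rough sketch of the division step, the snippet below turns a frequency table into relative frequencies. The function name and the example counts are hypothetical and only meant to show the arithmetic.

```python
def relative_frequencies(freqs):
    """Divide each frequency by the sample size (the sum of all frequencies)."""
    n = sum(freqs.values())
    return {value: count / n for value, count in freqs.items()}

# Hypothetical frequency table, invented for illustration only
freqs = {"A": 5, "B": 3, "C": 2}
rel = relative_frequencies(freqs)
for value, share in rel.items():
    print(f"{value}: {share:.2f}")
```

The relative frequencies always sum to 1, which is a quick way to check the table.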

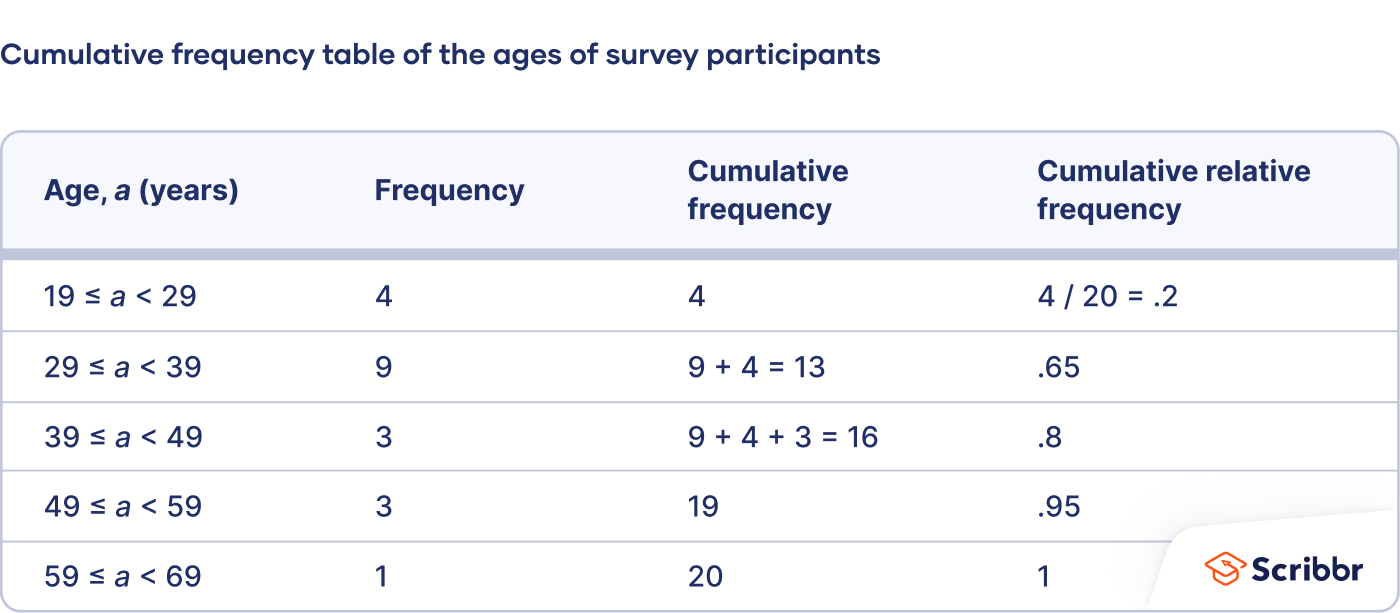

How to make a cumulative frequency table

- Create an ungrouped or grouped frequency table for an ordinal or quantitative variable. Cumulative frequencies don’t make sense for nominal variables because the values have no order—one value isn’t more than or less than another value.

- Add a third column to the table for the cumulative frequencies. The cumulative frequency is the number of observations less than or equal to a certain value or class interval. To calculate the cumulative frequencies, add each frequency to the frequencies in the previous rows.

- Optional: If you want to calculate the cumulative relative frequency, add another column and divide each cumulative frequency by the sample size.
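The running total described in these steps can be sketched with `itertools.accumulate`. The function name `cumulative_table` and the rating counts are hypothetical; the input is assumed to be already sorted from the smallest value to the largest.

```python
from itertools import accumulate

def cumulative_table(freqs):
    """freqs: list of (value, frequency) pairs in ascending order of value."""
    n = sum(f for _, f in freqs)
    running = accumulate(f for _, f in freqs)  # running total of frequencies
    return [(value, f, cum, cum / n) for (value, f), cum in zip(freqs, running)]

# Hypothetical ordinal ratings (1 to 4) and their frequencies
ratings = [(1, 2), (2, 5), (3, 8), (4, 5)]
rows = cumulative_table(ratings)
for value, freq, cum, cum_rel in rows:
    print(value, freq, cum, f"{cum_rel:.2f}")
```

The last cumulative frequency equals the sample size, so the last cumulative relative frequency is 1.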

Pie charts, bar charts, and histograms are all ways of graphing frequency distributions. The best choice depends on the type of variable and what you’re trying to communicate.

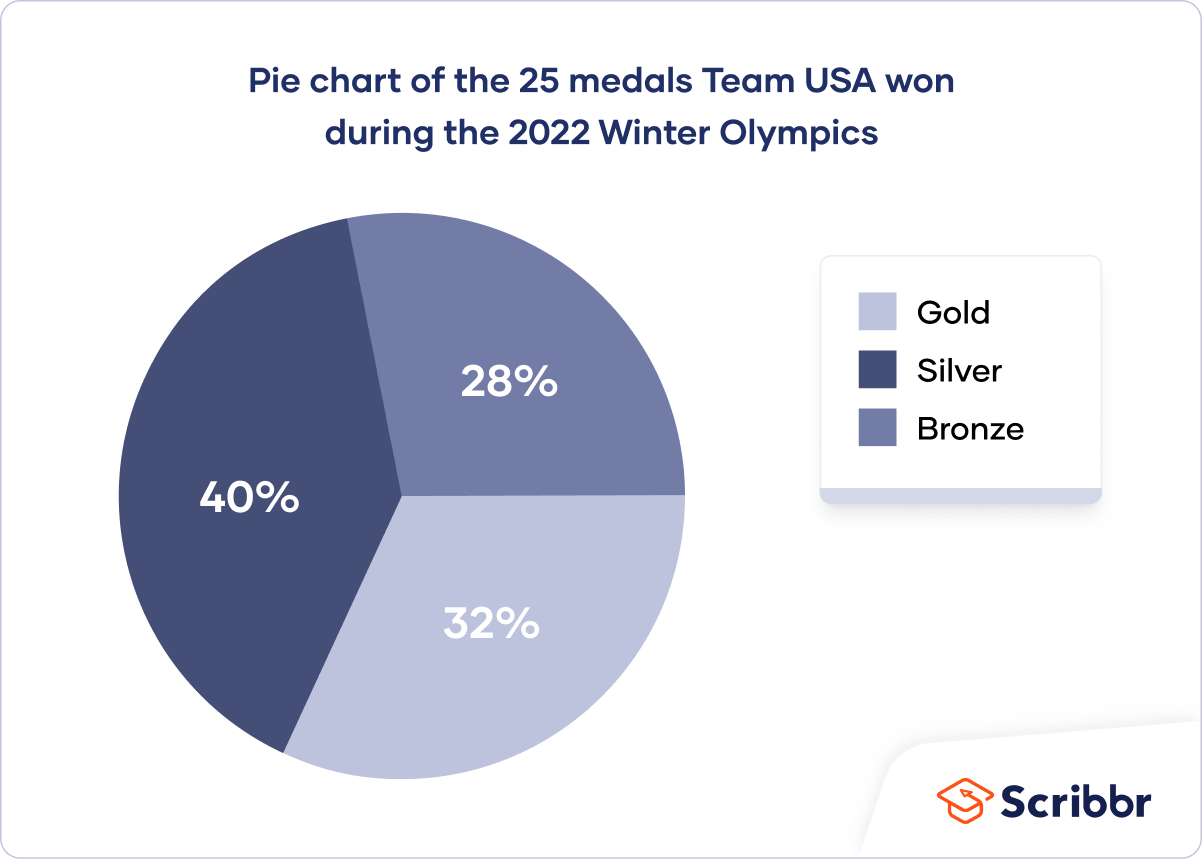

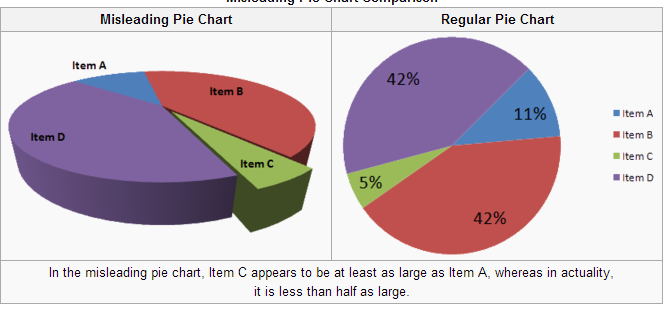

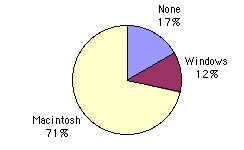

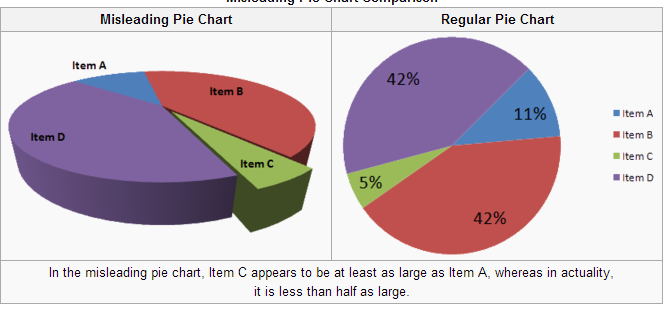

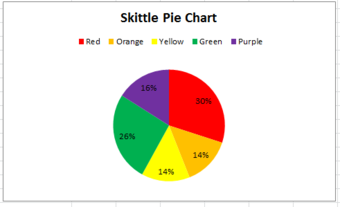

A pie chart is a graph that shows the relative frequency distribution of a nominal variable.

A pie chart is a circle that’s divided into one slice for each value. The size of the slices shows their relative frequency.

This type of graph can be a good choice when you want to emphasize that one value is especially frequent or infrequent, or you want to present the overall composition of a variable.

A disadvantage of pie charts is that it’s difficult to see small differences between frequencies. As a result, it’s also not a good option if you want to compare the frequencies of different values.

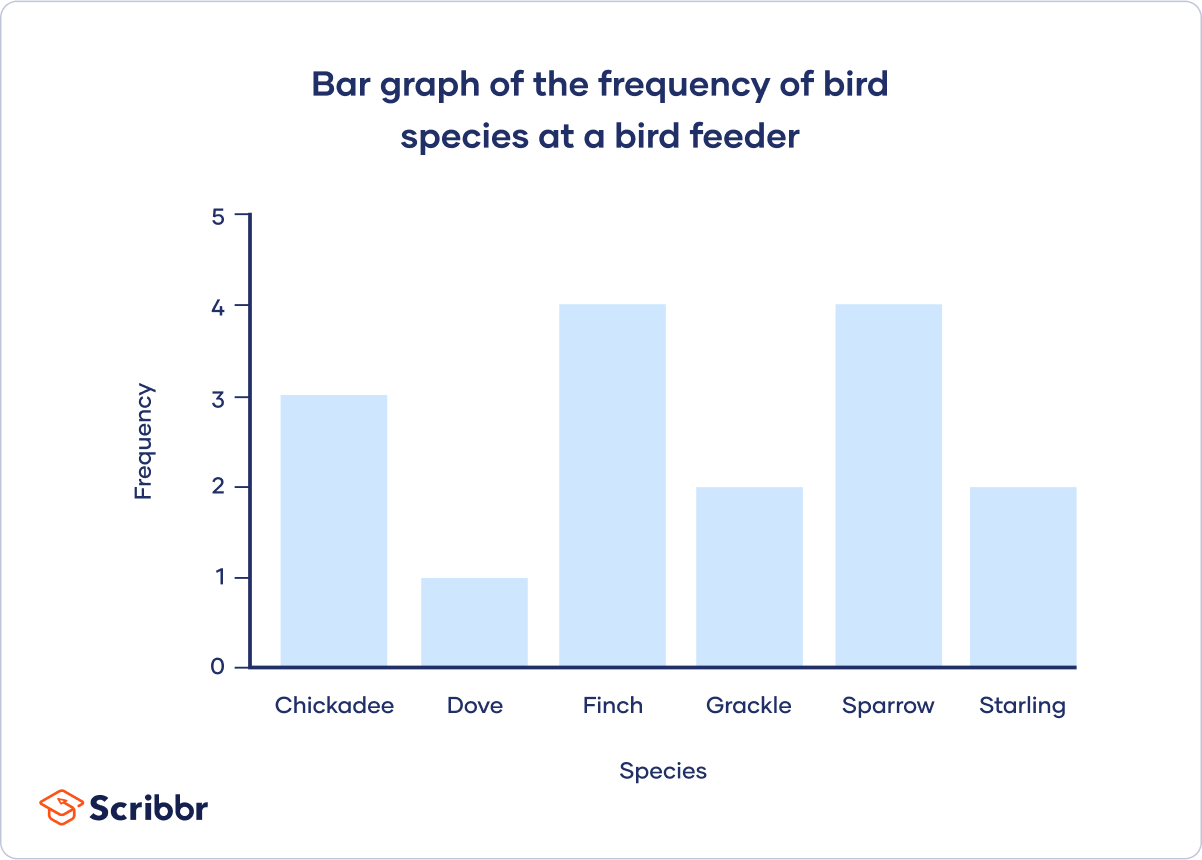

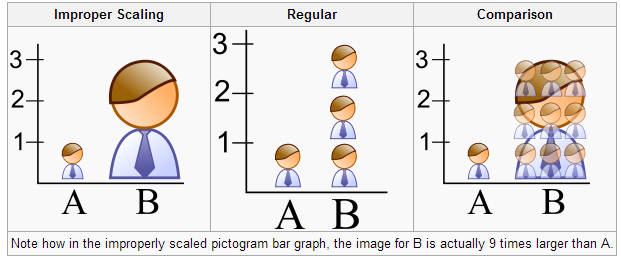

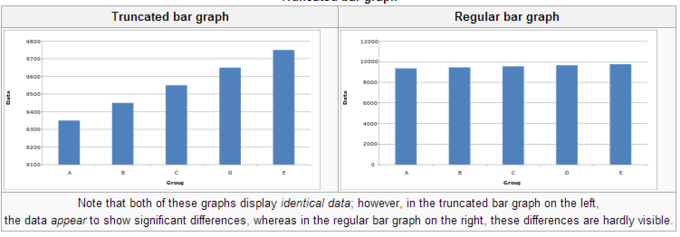

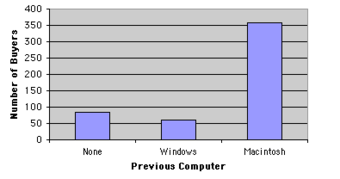

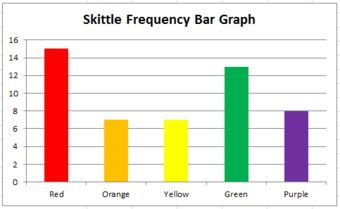

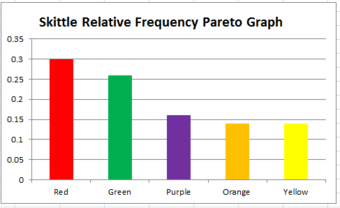

A bar chart is a graph that shows the frequency or relative frequency distribution of a categorical variable (nominal or ordinal).

The y-axis shows the frequencies or relative frequencies, and the x-axis shows the values. Each value is represented by a bar, and the length or height of the bar shows the frequency of the value.

A bar chart is a good choice when you want to compare the frequencies of different values. It’s much easier to compare the heights of bars than the angles of pie chart slices.

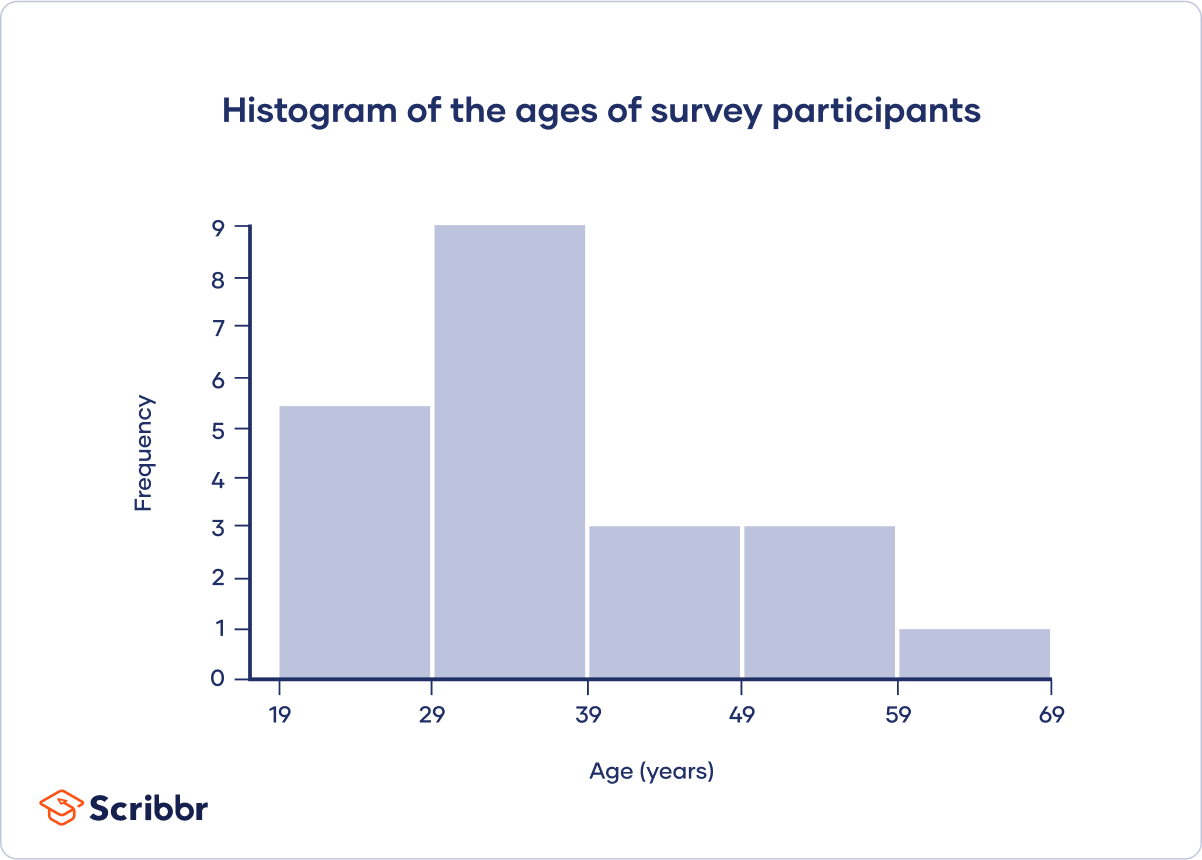

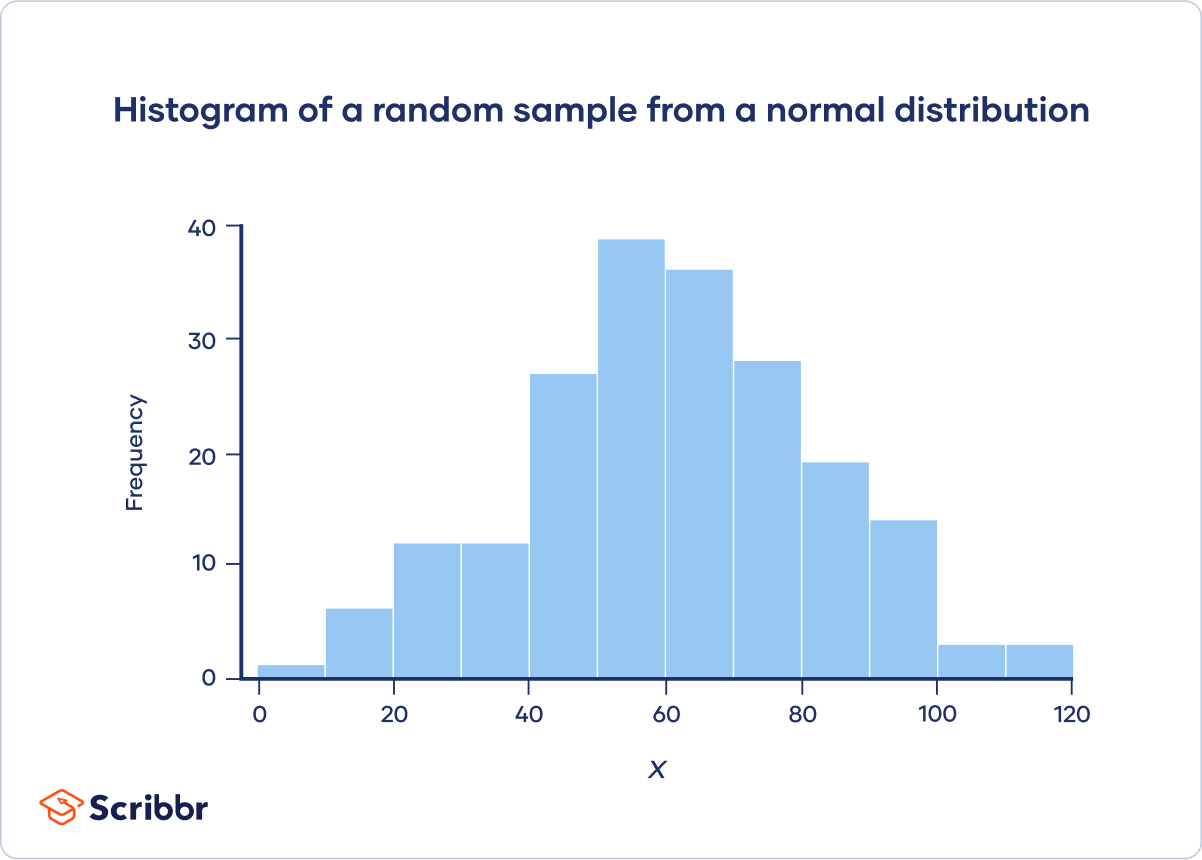

A histogram is a graph that shows the frequency or relative frequency distribution of a quantitative variable. It looks similar to a bar chart.

The continuous variable is grouped into interval classes, just like a grouped frequency table. The y-axis shows the frequencies or relative frequencies, and the x-axis shows the interval classes. Each interval class is represented by a bar, and the height of the bar shows the frequency or relative frequency of the interval class.

Although bar charts and histograms are similar, there are important differences:

A histogram is an effective visual summary of several important characteristics of a variable. At a glance, you can see a variable’s central tendency and variability, as well as what probability distribution it appears to follow, such as a normal, Poisson, or uniform distribution.


A histogram is an effective way to tell if a frequency distribution appears to have a normal distribution.

Plot a histogram and look at the shape of the bars. If the bars roughly follow a symmetrical bell or hill shape, like the example below, then the distribution is approximately normally distributed.

Categorical variables can be described by a frequency distribution. Quantitative variables can also be described by a frequency distribution, but first they need to be grouped into interval classes.

Probability is the relative frequency over an infinite number of trials.

For example, the probability of a coin landing on heads is .5, meaning that if you flip the coin an infinite number of times, it will land on heads half the time.

Since doing something an infinite number of times is impossible, relative frequency is often used as an estimate of probability. If you flip a coin 1000 times and get 507 heads, the relative frequency, .507, is a good estimate of the probability.
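To see how relative frequency settles toward probability as the number of trials grows, here is a small simulation sketch. It assumes a fair coin modeled with Python's pseudo-random generator; the function name and the seed are arbitrary choices for illustration, and the exact numbers printed depend on the seed.

```python
import random

def relative_frequency_of_heads(n_flips, seed=0):
    """Simulate n_flips fair coin flips and return the share that were heads."""
    rng = random.Random(seed)  # seeded so the run is repeatable
    heads = sum(rng.random() < 0.5 for _ in range(n_flips))
    return heads / n_flips

for n in (10, 1000, 100000):
    print(n, relative_frequency_of_heads(n))
```

With only 10 flips the relative frequency can be far from .5, while with many flips it is usually very close.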

Cite this Scribbr article


Turney, S. (2023, June 21). Frequency Distribution | Tables, Types & Examples. Scribbr. Retrieved April 10, 2024, from https://www.scribbr.com/statistics/frequency-distributions/


Frequency Distribution

A frequency distribution shows the frequency of repeated items in a graphical form or tabular form. It gives a visual display of the frequency of items or shows the number of times they occurred. Let's learn about frequency distribution in this article in detail.

What is Frequency Distribution?

Frequency distribution is used to organize the collected data in table form. The data could be marks scored by students, temperatures of different towns, points scored in a volleyball match, etc. After data collection, we have to show data in a meaningful manner for better understanding. Organize the data in such a way that all its features are summarized in a table. This is known as frequency distribution.

Let's consider an example to understand this better. The following are the scores of 10 students in the G.K. quiz released by Mr. Chris: 15, 17, 20, 15, 20, 17, 17, 14, 14, 20. Let's represent this data in a frequency distribution and find out the number of students who got the same marks.

We can see that all the collected data is organized under two columns: quiz marks and the number of students. This makes the given information easier to understand, and we can see the number of students who obtained the same marks. Thus, frequency distribution in statistics helps us to organize the data in an easy way to understand its features at a glance.
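The counting for the quiz example can be checked with a short Python sketch. It uses the ten scores listed above and `collections.Counter`; the column labels are only one possible layout.

```python
from collections import Counter

# Scores of the 10 students from the quiz example
marks = [15, 17, 20, 15, 20, 17, 17, 14, 14, 20]

frequency = Counter(marks)  # maps each mark to the number of students

print("Quiz marks | Number of students")
for mark in sorted(frequency):
    print(f"{mark:>10} | {frequency[mark]}")
```

Two students scored 14, two scored 15, three scored 17, and three scored 20, which adds up to the 10 students.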

Frequency Distribution Graphs

There is another way to show data that is in the form of graphs and it can be done by using a frequency distribution graph. The graphs help us to understand the collected data in an easy way. The graphical representation of a frequency distribution can be shown using the following:

- Bar Graphs: Bar graphs represent data using rectangular bars of uniform width along with equal spacing between the rectangular bars.

- Histograms: A histogram is a graphical presentation of data using rectangular bars of different heights. In a histogram, there is no space between the rectangular bars.

- Pie Chart: A pie chart is a type of graph that visually displays data in a circular chart. The circle is divided into sectors, and each sector shows a particular part of the data out of the whole.

- Frequency Polygon: A frequency polygon is drawn by joining the mid-points of the bars in a histogram.

Types of Frequency Distribution

There are four types of frequency distribution under statistics which are explained below:

- Ungrouped frequency distribution: It shows the frequency of each separate data value rather than of groups of data values.

- Grouped frequency distribution: In this type, the data is arranged and separated into groups called class intervals. The frequency of data belonging to each class interval is noted in a frequency distribution table. The grouped frequency table shows the distribution of frequencies in class intervals.

- Relative frequency distribution: It tells the proportion of the total number of observations associated with each category.

- Cumulative frequency distribution: Each cumulative frequency is the sum of a frequency and all the frequencies that come before it in the frequency distribution. Add the first frequency to the next one, then add that sum to the following frequency, and so on until the last. The last cumulative frequency will be the total sum of all frequencies.

Frequency Distribution Table

A frequency distribution table is a chart that shows the frequency of each of the items in a data set. Let's consider an example to understand how to make a frequency distribution table using tally marks. A jar contains beads of different colors: red, green, blue, black, red, green, blue, yellow, red, red, green, green, green, yellow, red, green, yellow. To know the exact number of beads of each particular color, we need to classify the beads into categories. An easy way to find the number of beads of each color is to use tally marks. Pick the beads one by one and enter the tally marks in the respective row and column. Then, indicate the frequency for each item in the table.

Thus, the table so obtained is called a frequency distribution table.
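The tallying procedure for the beads can be mimicked in Python, as a rough sketch. The bead list is the one given above; the function name `tally_table` and the use of `|` characters as tally marks are illustrative choices.

```python
# Beads in the order they are picked from the jar
beads = ["red", "green", "blue", "black", "red", "green", "blue", "yellow",
         "red", "red", "green", "green", "green", "yellow", "red", "green",
         "yellow"]

def tally_table(items):
    """Add one tally per item; rows appear in first-seen order."""
    counts = {}
    for item in items:
        counts[item] = counts.get(item, 0) + 1
    return counts

bead_counts = tally_table(beads)
for color, count in bead_counts.items():
    print(f"{color:<7} {'|' * count:<7} {count}")
```

The frequencies come out as 5 red, 6 green, 2 blue, 1 black, and 3 yellow, for 17 beads in total.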

Types of Frequency Distribution Table

There are two types of frequency distribution tables: Grouped and ungrouped frequency distribution tables.

Grouped Frequency Distribution Table: To arrange a large number of observations or data, we use a grouped frequency distribution table. In this, we form class intervals and tally the frequency of the data that belong to each class interval.

For example, the marks obtained by 20 students in a test are as follows: 5, 10, 20, 15, 5, 20, 20, 15, 15, 15, 10, 10, 10, 20, 15, 5, 18, 18, 18, 18. To arrange the data in a grouped table, we have to make class intervals. Thus, we will make class intervals of marks like 0 – 5, 6 – 10, and so on. The table below shows two columns: one for the class intervals (marks obtained in the test) and the second for the frequency (number of students). Here, we have not used tally marks, as we counted the marks directly.

Ungrouped Frequency Distribution Table: In the ungrouped frequency distribution table, we don't make class intervals; we write the exact frequency of each individual data value. Considering the above example, the ungrouped table will be like this. The table below shows two columns: one for the marks obtained in the test and the second for the frequency (number of students).
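Both tables for the marks example can be sketched in Python. The marks are the 20 values given above. The text only names the intervals 0 – 5 and 6 – 10 "and so on", so the remaining intervals 11 – 15 and 16 – 20 used here are an assumption that continues the same pattern.

```python
from collections import Counter

marks = [5, 10, 20, 15, 5, 20, 20, 15, 15, 15,
         10, 10, 10, 20, 15, 5, 18, 18, 18, 18]

# Ungrouped table: one row per distinct mark
ungrouped = Counter(marks)

# Grouped table: inclusive class intervals (the last two are assumed)
classes = [(0, 5), (6, 10), (11, 15), (16, 20)]
grouped = {f"{lo} - {hi}": sum(lo <= m <= hi for m in marks)
           for lo, hi in classes}

for mark in sorted(ungrouped):
    print(mark, ungrouped[mark])
for interval, freq in grouped.items():
    print(interval, freq)
```

Note how the grouped table merges the marks 18 and 20 into a single row, which is shorter to read but hides the individual values.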

Important Notes:

Following are the important points related to frequency distribution.

- Figures or numbers collected for some definite purpose are called data.

- Frequency is the value in numbers that shows how often a particular item occurs in the given data set.

- There are two types of frequency table - Grouped Frequency Distribution and Ungrouped Frequency Distribution.

- Data can be shown using graphs like histograms, bar graphs, frequency polygons, and so on.


Frequency Distribution Examples

Example 1: There are 20 students in a class. The teacher, Ms. Jolly, asked the students to tell their favorite subject. The results are as follows - Mathematics, English, Science, Science, Mathematics, Science, English, Art, Mathematics, Mathematics, Science, Art, Art, Science, Mathematics, Art, Mathematics, English, English, Mathematics.

Represent this data in the form of a frequency distribution and identify the most-liked subject.

Solution: 20 students have indicated their choices of preferred subjects. Let us represent this data using tally marks. The tally marks are showing the frequency of each subject.

According to the above frequency distribution, mathematics is the most liked subject.
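The result of Example 1 can be verified with a few lines of Python. The list holds the 20 responses given in the example; `Counter.most_common` returns the values ordered from most to least frequent.

```python
from collections import Counter

responses = ["Mathematics", "English", "Science", "Science", "Mathematics",
             "Science", "English", "Art", "Mathematics", "Mathematics",
             "Science", "Art", "Art", "Science", "Mathematics", "Art",
             "Mathematics", "English", "English", "Mathematics"]

subject_counts = Counter(responses)
favorite, votes = subject_counts.most_common(1)[0]

for subject, count in subject_counts.most_common():
    print(f"{subject:<12} {count}")
print("Most liked:", favorite, votes)
```

Mathematics is chosen by 7 students, Science by 5, and English and Art by 4 each.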

Example 2: 100 schools decided to plant 100 tree saplings in their gardens on World Environment Day. Represent the given data in the form of a frequency distribution and find the number of schools that were able to retain 50% or more of the plants. 95, 67, 28, 32, 65, 65, 69, 33, 98, 96, 76, 42, 32, 38, 42, 40, 40, 69, 95, 92, 75, 83, 76, 83, 85, 62, 37, 65, 63, 42, 89, 65, 73, 81, 49, 52, 64, 76, 83, 92, 93, 68, 52, 79, 81, 83, 59, 82, 75, 82, 86, 90, 44, 62, 31, 36, 38, 42, 39, 83, 87, 56, 58, 23, 35, 76, 83, 85, 30, 68, 69, 83, 86, 43, 45, 39, 83, 75, 66, 83, 92, 75, 89, 66, 91, 27, 88, 89, 93, 42, 53, 69, 90, 55, 66, 49, 52, 83, 34, 36

Solution: To include all the observations in groups, we will create various groups of equal intervals. These intervals are called class intervals. In the frequency distribution, the class intervals show the number of plants that survived, the tally marks show the frequency, and the number of schools is the frequency in numbers.

So, adding the frequencies of the class intervals from 50 – 59 to 90 – 99, the number of schools able to retain 50% or more of the plants is 8 + 18 + 10 + 23 + 12 = 71. Thus, 71 schools are able to retain 50% or more plants in their garden.
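The grouping in Example 2 can be reproduced with a short Python sketch. The list contains the 100 values from the example, and the class width of 10 is taken from the intervals named in the solution (50 – 59 up to 90 – 99); the helper name `class_label` is hypothetical.

```python
from collections import Counter

survived = [
    95, 67, 28, 32, 65, 65, 69, 33, 98, 96,
    76, 42, 32, 38, 42, 40, 40, 69, 95, 92,
    75, 83, 76, 83, 85, 62, 37, 65, 63, 42,
    89, 65, 73, 81, 49, 52, 64, 76, 83, 92,
    93, 68, 52, 79, 81, 83, 59, 82, 75, 82,
    86, 90, 44, 62, 31, 36, 38, 42, 39, 83,
    87, 56, 58, 23, 35, 76, 83, 85, 30, 68,
    69, 83, 86, 43, 45, 39, 83, 75, 66, 83,
    92, 75, 89, 66, 91, 27, 88, 89, 93, 42,
    53, 69, 90, 55, 66, 49, 52, 83, 34, 36,
]

def class_label(value, width=10):
    """Return the inclusive class interval, e.g. 57 -> '50 - 59'."""
    lower = (value // width) * width
    return f"{lower} - {lower + width - 1}"

class_counts = Counter(class_label(v) for v in survived)
at_least_half = sum(1 for v in survived if v >= 50)

for label in sorted(class_counts):
    print(label, class_counts[label])
print("Schools with 50 or more plants:", at_least_half)
```

The counts for the intervals from 50 – 59 upward are 8, 18, 10, 23, and 12, which gives the same total of 71 schools.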


FAQs on Frequency Distribution

What is a Frequency Distribution in Math?

In statistics, the frequency distribution is a graph or data set organized to represent the frequency of occurrence of each possible outcome of an event that is observed a specific number of times. Frequency distribution is a tabular or graphical representation of the data that shows the frequency of all the observations.

What are the 2 Types of Frequency Distribution Table?

The 2 types of frequency distributions are:

- Ungrouped frequency distribution

- Grouped frequency distribution

Why are Frequency Distributions Important?

Frequency charts are an effective way to organize data. Doctors use them to understand the frequency of diseases. Sports analysts use them to understand the performance of a sportsperson. Wherever you have a large amount of data, a frequency distribution makes it easy to analyze the data.

How do you find Frequency Distribution?

Follow the steps to find frequency distribution:

- Step 1: To make a frequency chart, first, write the categories in the first column.

- Step 2: In the next step, tally the score in the second column.

- Step 3: And finally, count the tally to write the frequency of each category in the third column.

Thus, in this way, we can find the frequency distribution of an event.

What is the Difference Between Frequency Table and Frequency Distribution?

The frequency table is a tabular method where the frequency is assigned to its respective category. Whereas, a frequency distribution is known as the graphical representation of the frequency table.

What is Grouped Frequency Distribution?

A grouped frequency distribution shows the scores by grouping the observations into intervals and then lists these intervals in the frequency distribution table. The intervals in a grouped frequency distribution are called class intervals, and the endpoints of each interval are its class limits.

What is Ungrouped Frequency Distribution?

The ungrouped frequency distribution is a type of frequency distribution that displays the frequency of each individual data value instead of groups of data values. In this type of frequency distribution, we can directly see how often different values occurred in the table.

What are the Components of Frequency Distribution?

The components of the frequency distribution are as follows:

- Class interval

- Types of class interval

- Class boundaries

- Midpoint or classmark

- Width or size of class interval

- Class frequency

- Frequency class width


Frequency Distribution: Definition in Statistics and Trading


A frequency distribution is a representation, either in a graphical or tabular format, that displays the number of observations within a given interval. The frequency is how often a value occurs in an interval while the distribution is the pattern of frequency of the variable.

The interval size depends on the data being analyzed and the goals of the analyst. The intervals must be mutually exclusive and exhaustive. Frequency distributions are typically used within a statistical context. Generally, frequency distributions can be associated with the charting of a normal distribution.

Key Takeaways

- A frequency distribution in statistics is a representation that displays the number of observations within a given interval.

- The representation of a frequency distribution can be graphical or tabular so that it is easier to understand.

- Frequency distributions are particularly useful for normal distributions, which show the observations of probabilities divided among standard deviations.

- In finance, traders use frequency distributions to take note of price action and identify trends.

Understanding a Frequency Distribution

As a statistical tool, a frequency distribution provides a visual representation of the distribution of observations within a particular test. Analysts often use a frequency distribution to visualize or illustrate the data collected in a sample. For example, the height of children can be split into several different categories or ranges.

In measuring the height of 50 children, some are tall and some are short, but there is a high probability of a higher frequency or concentration in the middle range. The most important factors for gathering data are that the intervals used must not overlap and must contain all of the possible observations.

Visual Representation of a Frequency Distribution

Both histograms and bar charts provide a visual display using columns, with the y-axis representing the frequency count, and the x-axis representing the variable to be measured. In the height of children, for example, the y-axis is the number of children, and the x-axis is the height. The columns represent the number of children observed with heights measured in each interval.

A histogram of such data will often show an approximately normal distribution, which means that the majority of occurrences fall in the middle columns. Frequency distributions can be a key aspect of charting normal distributions, which show observation probabilities divided among standard deviations.

Frequency distributions can be presented as a frequency table, a histogram, or a bar chart. Below is an example of a frequency distribution as a table.

Frequency distributions are not commonly used in the world of investments; however, traders who follow Richard D. Wyckoff, a pioneering early 20th-century trader, use an approach to trading that involves frequency distribution.

Investment houses still use the approach, which requires considerable practice, to teach traders. The frequency chart is referred to as a point-and-figure chart and was created out of a need for floor traders to take note of price action and to identify trends.

The y-axis is the variable measured, and the x-axis is the frequency count. Each change in price action is denoted in Xs and Os. Traders interpret it as an uptrend when three X's emerge; in this case, demand has overcome supply. In the reverse situation, when the chart shows three O's, it indicates that supply has overcome demand.

What Are the Types of Frequency Distribution?

The types of frequency distribution are grouped frequency distribution, ungrouped frequency distribution, cumulative frequency distribution, relative frequency distribution, and relative cumulative frequency distribution.

What Is the Importance of a Frequency Distribution?

A frequency distribution is a means to organize a large amount of data. It takes data from a population based on certain characteristics and organizes the data in a way that is comprehensible to an individual that wants to make assumptions about a given population.

How Can I Construct a Frequency Distribution?

To construct a frequency distribution, first, note the specific classes determined by intervals in one column then sum the numbers in each isolated category based on how many times it shows up. The frequency can then be noted in the second column.

A frequency distribution is used to display the number of observations within a particular interval. This method, while not always commonly used in investing, is still used by some traders. In this case, the frequency chart is called a point-and-figure chart and is used to identify trends through the observation of price action.


NCBI Bookshelf. A service of the National Library of Medicine, National Institutes of Health.

StatPearls [Internet]. Treasure Island (FL): StatPearls Publishing; 2024 Jan-.

Exploratory data analysis: frequencies, descriptive statistics, histograms, and boxplots.

Jacob Shreffler; Martin R. Huecker.

Last Update: November 3, 2023.

Definition/Introduction

Researchers must utilize exploratory data techniques to present findings to a target audience and create appropriate graphs and figures. Researchers can determine if outliers exist, data are missing, and statistical assumptions will be upheld by understanding data. Additionally, it is essential to comprehend these data when describing them in conclusions of a paper, in a meeting with colleagues invested in the findings, or while reading others’ work.

Issues of Concern

This comprehension begins with exploring these data through the outputs discussed in this article. Individuals who do not conduct research must still comprehend new studies, and knowledge of fundamentals in analyzing data and interpretation of histograms and boxplots facilitates the ability to appraise recent publications accurately. Without this familiarity, decisions could be implemented based on inaccurate delivery or interpretation of medical studies.

Frequencies and Descriptive Statistics

Effective presentation of study results, in presentation or manuscript form, typically starts with frequencies and descriptive statistics (ie, mean, medians, standard deviations). One can get a better sense of the variables by examining these data to determine whether a balanced and sufficient research design exists. Frequencies also reveal missing data and give a sense of outliers (discussed below).

Luckily, software programs are available to conduct exploratory data analysis. For this chapter, we will be examining the following research question.

RQ: Are there differences in drug life (length of effect) for Drug 23 based on the administration site?

A more precise hypothesis could be: Is drug 23 longer-lasting when administered via site A compared to site B?

To address this research question, exploratory data analysis is conducted. First, it is essential to start with the frequencies of the variables. To keep things simple, only variables of minutes (drug life effect) and administration site (A vs B) are included. See Figure 1 for the frequency outputs.

Figure 1 shows that the administration site appears to be a balanced design with 50 individuals in each group. The excerpt for minutes frequencies is the bottom portion of Figure 1 and shows how many cases fell into each time frame with the cumulative percent on the right-hand side. In examining Figure 1, one suspiciously low measurement (135) was observed, considering time variables. If a data point seems inaccurate, a researcher should find this case and confirm if this was an entry error. For the sake of this review, the authors state that this was an entry error and should have been entered 535 and not 135. Had the analysis occurred without checking this, the data analysis, results, and conclusions would have been invalid. When finding any entry errors and determining how groups are balanced, potential missing data is explored. If not responsibly evaluated, missing values can nullify results.

After replacing the incorrect 135 with 535, descriptive statistics, including the mean, median, mode, minimum/maximum scores, and standard deviation were examined. Output for the research example for the variable of minutes can be seen in Figure 2. Observe each variable to ensure that the mean seems reasonable and that the minimum and maximum are within an appropriate range based on medical competence or an available codebook. One assumption common in statistical analyses is a normal distribution. Figure 2 shows that the mode differs from the mean and the median. We have visualization tools such as histograms to examine these scores for normality and outliers before making decisions.

Histograms are useful in assessing normality, as many statistical tests (eg, ANOVA and regression) assume the data have a normal distribution. When data deviate from a normal distribution, the deviation is quantified using skewness and kurtosis. [1] Skewness occurs when one tail of the curve is longer. If the tail is lengthier on the left side of the curve (more cases on the higher values), this would be negatively skewed, whereas if the tail is longer on the right side, it would be positively skewed. Kurtosis is another facet of normality. Positive kurtosis occurs when the distribution has a sharper peak and heavier tails than a normal distribution, whereas negative kurtosis occurs when the distribution is flatter with lighter tails. [2]
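Skewness and kurtosis can be computed from the central moments of the data. The sketch below uses the population (biased) moment formulas, which is one common convention; statistical packages often apply small-sample corrections, so their output may differ slightly. The function name and the sample values are hypothetical.

```python
def skewness_and_excess_kurtosis(values):
    """Moment-based skewness and excess kurtosis (normal distribution -> 0, 0)."""
    n = len(values)
    mean = sum(values) / n
    m2 = sum((v - mean) ** 2 for v in values) / n  # variance (population)
    m3 = sum((v - mean) ** 3 for v in values) / n
    m4 = sum((v - mean) ** 4 for v in values) / n
    skew = m3 / m2 ** 1.5
    excess_kurtosis = m4 / m2 ** 2 - 3
    return skew, excess_kurtosis

# Hypothetical samples, invented for illustration only
symmetric = [1, 2, 3, 4, 5]
right_tailed = [1, 1, 1, 2, 10]
print(skewness_and_excess_kurtosis(symmetric))
print(skewness_and_excess_kurtosis(right_tailed))
```

A symmetric sample gives a skewness of zero, and a sample with a long right tail gives a positive skewness.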

Additionally, histograms reveal outliers: data points either entered incorrectly or truly very different from the rest of the sample. When there are outliers, one must determine accuracy based on random chance or the error in the experiment and provide strong justification if the decision is to exclude them. [3] Outliers require attention to ensure the data analysis accurately reflects the majority of the data and is not influenced by extreme values; cleaning these outliers can result in better quality decision-making in clinical practice. [4] A common approach to determining if a variable is approximately normally distributed is converting values to z scores and determining if any scores are less than -3 or greater than 3. For a normal distribution, about 99.7% of scores should lie within three standard deviations of the mean. [5] Importantly, one should not automatically throw out any values outside of this range but consider it in corroboration with the other factors aforementioned. Outliers are relatively common, so when these are prevalent, one must assess the risks and benefits of exclusion. [6]
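The z score screening can be sketched as follows. The minutes values are hypothetical and were invented for illustration, apart from the 135 entry, which echoes the entry error discussed earlier; the function names and the use of the sample standard deviation are also assumptions. The snippet only flags values for review and does not remove anything.

```python
from statistics import mean, stdev

def z_scores(values):
    """Convert each value to (value - mean) / sample standard deviation."""
    m = mean(values)
    s = stdev(values)
    return [(v - m) / s for v in values]

def flag_extreme(values, threshold=3.0):
    """Return the values whose z score is below -threshold or above +threshold."""
    return [v for v, z in zip(values, z_scores(values)) if abs(z) > threshold]

# Hypothetical drug-life measurements in minutes, plus one suspicious entry
minutes = [520, 525, 530, 535, 540, 545, 550, 555, 560, 565] * 2 + [135]
flagged = flag_extreme(minutes)
print(flagged)
```

Note that in very small samples a single extreme value inflates the standard deviation so much that its z score may never exceed 3, so the threshold works best with a reasonable number of observations.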

Figure 3 provides examples of histograms. In Figure 3A, 2 possible outliers causing kurtosis are observed. If values within 3 standard deviations are used, the result in Figure 3B is observed. This histogram appears much closer to an approximately normal distribution with the kurtosis being treated. Remember, all evidence should be considered before eliminating outliers. When reporting outliers in scientific paper outputs, account for the number of outliers excluded and justify why they were excluded.

Boxplots can be used to check for outliers, assess the range of data, and show differences among groups. Boxplots provide a visual representation of ranges and medians, illustrating differences among groups, and are useful in various settings, including evidence-based medicine. [7] Boxplots provide a picture of data distribution when there are numerous values and not every value can be displayed individually (ie, as in a scatterplot). [8] Figure 4 illustrates the differences between drug administration sites and the length of drug life from the above example.

Figure 4 shows differences with potential clinical impact. Had any outliers existed (data from the histogram were cleaned), they would appear outside the line endpoints. The red boxes represent the middle 50% of scores, so the edges of each box are the 25th and 75th percentiles. The lines within each red box represent the median number of minutes for each administration site. The lines extending from each red box end in horizontal lines that mark the lowest and highest values that are not outliers. In examining the boxplots, an overlap in minutes between the 2 administration sites was observed: approximately the top 25 percent from site B had the same times as the bottom 25 percent at site A. Site B had a median of under 525 minutes, whereas site A had a median greater than 550 minutes. If there were no differences in adverse reactions at site A, analysis of this figure provides evidence that healthcare providers should administer the drug via site A. Researchers could follow up by testing a third administration site, site C. Figure 5 shows what would happen if site C led to a longer drug life compared to site A.

Figure 5 displays the same site A data as Figure 4, but something looks different. The large variance at site C makes site A’s variance appear smaller. In other words, patients who were administered the drug via site C had a larger range of scores. Thus, some patients experience a longer half-life when the drug is administered via site C than the median of site A; however, the broad range (lack of precision) and lower median should be the focus. The minutes are much more tightly clustered at site A: its median is higher, and its range is narrower. One may conclude that this makes site A a more desirable site.
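The comparison a boxplot makes visually can also be read from a few summary numbers. The sketch below uses invented values for sites A and C (not the data behind Figures 4 and 5) and a hypothetical helper, `box_summary`, to compute the median, quartiles, and range that a boxplot draws.

```python
from statistics import quantiles

def box_summary(values):
    """Return the numbers a boxplot displays for one group."""
    q1, q2, q3 = quantiles(values, n=4)  # quartile cut points
    return {
        "min": min(values), "q1": q1, "median": q2, "q3": q3, "max": max(values),
        "iqr": q3 - q1, "range": max(values) - min(values),
    }

# Hypothetical drug-life values in minutes for two administration sites.
site_a = [545, 550, 555, 558, 560, 562, 565, 570, 575]
site_c = [430, 470, 500, 520, 540, 560, 600, 640, 700]

summary_a = box_summary(site_a)
summary_c = box_summary(site_c)
print("Site A:", summary_a)
print("Site C:", summary_c)
```

In this invented example, site C reaches higher maximum values than site A, yet its median is lower and its spread is far wider, which is the pattern the text describes.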

- Clinical Significance

Ultimately, by understanding basic exploratory data methods, medical researchers and consumers of research can make quality, data-informed decisions. These data-informed decisions make it possible to appraise the clinical significance of research outputs. Overlooking these fundamentals of statistics can lead to critical errors in judgment.

- Nursing, Allied Health, and Interprofessional Team Interventions

All interprofessional healthcare team members need to be at least familiar with, if not well-versed in, these statistical analyses so they can read and interpret study data and apply the data implications in their everyday practice. This approach allows all practitioners to remain abreast of the latest developments and provides valuable data for evidence-based medicine, ultimately leading to improved patient outcomes.


Exploratory Data Analysis Figure 1 Contributed by Martin Huecker, MD and Jacob Shreffler, PhD

Exploratory Data Analysis Figure 2 Contributed by Martin Huecker, MD and Jacob Shreffler, PhD

Exploratory Data Analysis Figure 3 Contributed by Martin Huecker, MD and Jacob Shreffler, PhD

Exploratory Data Analysis Figure 4 Contributed by Martin Huecker, MD and Jacob Shreffler, PhD

Exploratory Data Analysis Figure 5 Contributed by Martin Huecker, MD and Jacob Shreffler, PhD

Disclosure: Jacob Shreffler declares no relevant financial relationships with ineligible companies.

Disclosure: Martin Huecker declares no relevant financial relationships with ineligible companies.

This book is distributed under the terms of the Creative Commons Attribution-NonCommercial-NoDerivatives 4.0 International (CC BY-NC-ND 4.0) ( http://creativecommons.org/licenses/by-nc-nd/4.0/ ), which permits others to distribute the work, provided that the article is not altered or used commercially. You are not required to obtain permission to distribute this article, provided that you credit the author and journal.

- Cite this Page Shreffler J, Huecker MR. Exploratory Data Analysis: Frequencies, Descriptive Statistics, Histograms, and Boxplots. [Updated 2023 Nov 3]. In: StatPearls [Internet]. Treasure Island (FL): StatPearls Publishing; 2024 Jan-.


Goodness of Fit and Related Chi-Square Tests

13 Frequency Distributions

13.1 Analyzing distributions of data

Throughout this text, we will focus on using frequency analysis and descriptive statistics. These simple but powerful analyses enable you to examine your data and identify patterns, including the shapes and distributions of data, missing values, and outliers. Frequencies and distributions are important concepts in the quantitative analysis of data, and they underlie the overall statistical approach covered in this book. In fact, this approach is often referred to as “frequentist statistics” because it relies on frequencies to make inferences about the data. An alternative approach is Bayesian statistical analysis, which treats probabilities as degrees of belief that are updated as data are observed. While we won’t go into detail about the differences between frequentist and Bayesian statistical approaches, it is important to recognize that frequencies play a key role in the approach that we are demonstrating here, but that a frequentist approach is not the only way to analyze your data.

A frequency is simply the number of times something happens. It could be, for example, the number of people with brown hair, the number of children in a family, the number of deaths in a hospital. It could also be the number of times an electrical signal with a given level of energy-intensity is recorded.

A distribution shows the relative frequencies of each possible value or category for a variable. Distributions are used to describe the organization or shape of a set of scores or values for a particular variable. If you studied statistics previously, you are most likely familiar with the normal distribution or bell curve. What you may not realize is that distributions other than the normal distribution are also used in statistical analyses and that datasets can take the shape of these other distributions. For example, datasets that include only discrete scores ranging from 1 to 5 would not be expected to fit a normal distribution curve but would rather be compared to a distribution suited to categories or counts, like the chi-square distribution or the Poisson distribution.

Distributions can be obtained by counting the number of events that occur or how many participants in a sample have a specific score on a questionnaire or measure (i.e., counting frequencies). For example, you might look at the number of patients presenting to the Emergency Department for different reasons: cardiovascular concerns, accidents, infections, reported symptoms. You might also consider responses to an anxiety questionnaire scored on a Likert scale (using discrete scaled scores) ranging from 1 to 5. You may consider reviewing how many respondents in your sample had each possible score (i.e., 1, 2, 3, 4, or 5); in other words, how frequently each score appeared within the total set of scores.
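Counting frequencies for a 1-to-5 scale can be sketched as follows. This is a Python illustration rather than the chapter's SAS, and the fifteen responses are invented for the example.

```python
from collections import Counter

# Hypothetical Likert responses (1 to 5) from fifteen respondents.
responses = [3, 4, 2, 5, 3, 3, 1, 4, 2, 3, 5, 4, 3, 2, 4]

counts = Counter(responses)
# List every possible score, including any that nobody chose.
table = [(score, counts.get(score, 0)) for score in range(1, 6)]

for score, frequency in table:
    print(score, frequency)
```

Listing every possible score, rather than only those observed, keeps an unused category visible in the table with a frequency of 0.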

The following is an example of a table showing a frequency distribution for a set of responses to a categorical variable ranging from 1 to 5, and a graphical representation of the frequency of responses in each category. The Category Label is presented on the x-axis, and the number of responses (the frequency for each category) is presented on the y-axis.

Table 13.1 Frequency Distribution For Categorical Responses

The PROC FREQ Procedure

SAS Code to produce the Frequency Distribution and Corresponding Figure

Figure 13.1 Frequency Distribution For a Categorical Response Variable

Frequency distributions are useful for describing variables, identifying errors (impossible values) and outliers, assessing how well a continuous variable fits the normal distribution, and testing hypotheses with specific statistical tests, such as a chi-square test for categorical variables.

Frequency & Distribution of a Count Variable

Count variables refer to those that simply tally the number of items or events that occur. For example, you might want to count the number of adverse events that occur when people take a medication, the number of times nurses wash their hands during their shift or the number of babies born in each month of the year. In health research, there are many items or events that can be counted!

Note that for a count variable, the values are arithmetically meaningful and represent the number of events or items for a specific variable; the count variable is quite literally storing the count of items of interest. Therefore, differences in magnitude between values are meaningful. For example, 4 adverse events are twice as many as 2 adverse events.

Count variables are different from categorical variables.

Categorical variables are used when the researcher wishes to use numbers to represent different kinds of items or events. In the categorical variable the numbers are arbitrary. For example, hair colour could be coded as 1 = blonde, 2 = brown, 3 = gray, 4 = red, and 5 = other but it could also be coded as 11 = blonde, 22 = brown, 33 = gray, 44 = red, and 55 = other. The numbers representing a category label are not mathematically meaningful and do not represent the number of people with a specific hair colour. Of course, you can analyze the frequency of people with each response which we will cover later when we talk about categorical variables in more detail.

Working example to process a “count” variable

Let’s say we would like to take a sample of 50 families from a population of 1000 households in a small town and record the number of children in each household. Here we will create two variables: the first we will call “NKIDS” and the second we will call “HOUSEHOLDS”. The variable NKIDS is the categorical variable for the number of children in each household that we sampled, while the variable HOUSEHOLDS represents the number of responding households that report having a given number of children.

The Scenario

We arrive at the small town and knock on the front door of the first house. Below is the dialogue between the researchers and the respondents.

“Good day, we are Biostatisticians and we are conducting a study of the number of children in your family.”

“Oh we don’t have any children.”

“Okay, thank you.”

We note that for Household #1 there are 0 children. We then knock on the front door of the second house.

“We have 7 children. Would you like some?”

“No, thank you, but have a nice day.”

We note that for Household #2 there are 7 children. We then knock on the front door of the third house, and continue our process for each of 50 houses in the town.

In this example, the categorical variable is NKIDS and is considered the independent variable, while the discrete count variable is HOUSEHOLDS and is considered the dependent variable, that is, the measure of interest.

Since there can only be whole numbers for the variable NKIDS (i.e., you can’t actually have 1.2 children), the variable NKIDS is a discrete categorical variable. Likewise, because we are counting households in whole numbers (i.e., not partial households), the variable HOUSEHOLDS is a discrete random variable.

The frequency distribution recording sheet for this example is shown below. Notice that, as a rule, we keep our variable names to 8 characters or fewer, so HOUSEHOLDS is shortened to HSEHLD.

Table 13.2 Tally Sheet to produce the Frequency Distribution for Number of Children in Each Household Sampled

Counts of events, such as the number of children in a family, the number of needles found on the ground near a safe injection site, or the number of patients readmitted to the hospital after discharge, typically fall on the whole number line. Frequency tables are often used to show how many times an event has occurred.

In our example, we can say that the variable HOUSEHOLDS is a discrete random variable because in a given sample of 50 families the variable can take on (contain) any value between 0 and 50 (the total sample) on the whole number line.

Table 13.2 shows how we can determine the frequency and relative frequency (percentage out of 100) for the number of children in each of the families in our sample. Of the 50 families in our sample, 9 families did not have children, 7 families had 1 child, 12 families had 2 children, 9 families had 3 children, 5 families had 4 children, 6 families had 5 children, no families had 6 children, and 2 families had 7 children. Notice here that the variable of interest is the number of families reporting each possible number of children.

Relative frequency refers to the proportion of the entire sample that had a particular value. In this example, the relative frequency tells us what percentage of the sample had a specific number of children. To calculate the relative frequency, simply divide each frequency by the total number of families and then multiply the result by 100 to calculate the percentage value. For example, from the data in Table 13.2 we see that in this sample, 24% of the families had 2 children (12 ÷ 50 × 100) while only 4% had 7 children (2 ÷ 50 × 100).
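The relative-frequency calculation can be checked with a short Python sketch. It uses the household counts reported in the text; the cumulative percentage column is an addition for illustration, and the variable names are our own.

```python
# Number of households (frequency) for each number of children, from the text.
households = {0: 9, 1: 7, 2: 12, 3: 9, 4: 5, 5: 6, 6: 0, 7: 2}

total = sum(households.values())
rows = []
cumulative = 0
for nkids, freq in households.items():
    cumulative += freq
    percent = 100 * freq / total               # relative frequency as a percentage
    cumulative_percent = 100 * cumulative / total
    rows.append((nkids, freq, percent, cumulative_percent))

print("NKIDS  FREQ  PERCENT  CUM_PERCENT")
for nkids, freq, percent, cumulative_percent in rows:
    print(f"{nkids:>5}  {freq:>4}  {percent:>7.1f}  {cumulative_percent:>11.1f}")
```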

Creating the SAS Program to compute a frequency distribution for a discrete random variable

Below are the SAS commands to produce the frequency distribution table of the data recorded for the number of children in our sample of 50 families.

SAS Code to produce a Frequency Distribution Table For Number of Children in Each Household sampled

In this SAS program, we use PROC FREQ with the TABLES statement to produce a frequency distribution for the data recorded for our sample of 50 households. Notice that within PROC FREQ we included the statement WEIGHT HSEHLD. In this example, the independent or categorical variable is NKIDS and the dependent discrete random variable is HSEHLD. The WEIGHT statement enables us to enter the summary data for the dependent variable HSEHLD as the count related to each level of the categorical variable NKIDS.

Notice the table indicates that 9 households reported no children, while no households reported having 6 children. The table also indicates that most households reported having 2 children.
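To see why the weighting step matters, the sketch below tallies the same summary rows twice in Python: once counting each row as a single observation, and once letting each row contribute its HSEHLD value. It illustrates the idea of weighting by a count variable under our own naming and is not a reproduction of SAS internals.

```python
from collections import Counter

# Summary rows from the text: (NKIDS, HSEHLD).
rows = [(0, 9), (1, 7), (2, 12), (3, 9), (4, 5), (5, 6), (6, 0), (7, 2)]

# Without weighting, every row counts once, so each NKIDS level has frequency 1.
unweighted = Counter(nkids for nkids, _ in rows)

# With weighting, every row contributes its HSEHLD value to the tally.
weighted = Counter()
for nkids, hsehld in rows:
    weighted[nkids] += hsehld

most_common_nkids, most_common_count = weighted.most_common(1)[0]
print("Most common number of children:", most_common_nkids, "in", most_common_count, "households")
```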

TABLE 13.3 Frequency distribution for number of children in each household

The FREQ Procedure

The following is a SAS program to compute elements of PROC FREQ for frequency distributions. The data are fictitious and are used here to enable you to work through the various options and features of the PROC FREQ command with relevant options.

As you work through the SAS program take note of the specific features that are identified, therein. The scenario is based on a public health study in which a group of researchers intended to determine the number of discarded needles left on the ground within a 100-metre radius of safe injection sites. We begin the program first by reading the data set and then using the essential SAS statistical processing commands with relevant options for PROC FREQ.

The program begins with the statement DATA FREQ13_4; which names the dataset that the program creates in the present SAS work session.

The second line is the listing of variables to be read within the sample data set. The SAS command begins with the SAS keyword INPUT which is followed by the names of each variable. Notice that the variable names are kept to eight characters and each variable name begins with an alphabetic character rather than a number or a special character. In this example the variables SITE, NDLCNT and INCREG are used to indicate that we have a variable to list the various sites from which the data were collected (SITE), the number of needles found on the ground within a 100-metre radius of the exit door of the safe injection site (NDLCNT), and the estimated average household income reported in thousands of dollars for the region in which the injection site is located (INCREG).

We also use simple IF-THEN logic statements to create summary groups for the number of needles recorded at each site (NDLCNT, grouped as NDLGRP) and for the average household income (INCREG, grouped as INCGRP).

SAS Code to Demonstrate Features of PROC FREQ

FREQUENCY DISTRIBUTION FOR THE NUMBER NEEDLES FOUND ACROSS ALL SITES

Sorting the data and then running PROC FREQ with an asterisk (*) between two variables summarizes the data in a 2-way frequency distribution table. In this way, we can see a summary of the dataset at a glance.

In the sequence of SAS processing commands, we first sort the data using PROC SORT, followed by the SAS commands PROC FREQ with the keyword TABLES and then the two variables that we wish to include in the 2-way table – NDLGRP * INCGRP.

Code Snippet for SAS Code to Demonstrate PROC SORT code added to PROC FREQ

PROC SORT; BY INCGRP;
PROC FREQ; TABLES NDLGRP*INCGRP;
TITLE1 'FREQ DIST FOR GROUP NEEDLES BY INCOME GROUP';
RUN;

This sequence of commands produces the 2-way SAS table of needle groups arranged by income group. The problem with this table is that the information is not optimized for a reader who does not know what an income group of 5 or an NDLGRP of 3 refers to.
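The logic of a 2-way frequency table is simply a count for every combination of the two variables. The Python sketch below shows this with ten invented (NDLGRP, INCGRP) pairs; the codes are placeholders and do not come from the chapter's dataset.

```python
from collections import Counter

# Hypothetical (NDLGRP, INCGRP) codes for ten sites; not the chapter's data.
sites = [(1, 5), (1, 4), (2, 3), (3, 1), (3, 1),
         (2, 2), (3, 2), (1, 5), (2, 3), (3, 1)]

cell_counts = Counter(sites)                     # one count per (row, column) cell
ndl_levels = sorted({ndl for ndl, _ in sites})   # row levels
inc_levels = sorted({inc for _, inc in sites})   # column levels

print("NDLGRP by INCGRP; columns are INCGRP", *inc_levels)
for ndl in ndl_levels:
    print("NDLGRP", ndl, *[cell_counts[(ndl, inc)] for inc in inc_levels])
```

The printed grid carries only numeric codes, which is exactly the readability problem the text goes on to solve with PROC FORMAT.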

Using the PROC FORMAT command enables us to explain the categories within each variable. The code to explain the levels of each category uses the following two-step approach.

1.) At the start of the program add the PROC FORMAT statement and the VALUE for each categorical variable.

In our example, we have two categorical variables: INCGRP and NDLGRP. The variable INCGRP has 5 levels, while the variable NDLGRP has three groups.

2.) Later in the program, after calling a SAS procedure (in this case, PROC FREQ), add a FORMAT statement to assign the predefined format to each variable used by the procedure.

Notice we first call the variable – in this example the variable of interest is NDLGRP and this is followed by the PROC FORMAT VALUE name NDL. Notice also that when we include the VALUE name we follow it with a period(.). This command will place the full text for the variable category in the frequency distribution.
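Conceptually, a format is a lookup from stored codes to display labels. The Python sketch below mimics that idea with a dictionary; the label text and the coded values are hypothetical, since the chapter's VALUE definitions are not reproduced here.

```python
from collections import Counter

# Hypothetical labels for the three NDLGRP codes (the chapter's own labels are not shown).
NDL_LABELS = {1: "FEW NEEDLES", 2: "SOME NEEDLES", 3: "MANY NEEDLES"}

ndlgrp_codes = [1, 3, 2, 3, 3, 1, 2, 3]   # hypothetical coded data
coded_counts = Counter(ndlgrp_codes)

# The stored codes are untouched; only the label shown in the table changes.
labelled = {NDL_LABELS[code]: n for code, n in sorted(coded_counts.items())}

for label, n in labelled.items():
    print(f"{label:<14} {n}")
```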

The results of this analysis show that more needles tended to be found near safe injection sites in low-income neighborhoods than near safe injection sites located in more affluent areas.

Figure 13.5 Features of Proc Freq: Adding Proc Format to the Frequency Procedure for a block chart

The SAS syntax for this output is shown below.

At the top of the program add:

13.2 Distribution for a categorical variable

As previously discussed, categorical variables involve grouping items, persons, or attributes, whereby the assignment of numbers to each group is arbitrary. For example, you might be interested in looking at the employment status of nursing home workers. The variable employment status would be a categorical or grouping variable and might contain the following categories: full-time, part-time, casual, and temporary. You could assign any number you wish to represent the group label because the number is merely a label when applied to represent the category and doesn’t hold any mathematical significance; the number simply enables you to group persons based on that variable (in this case, employment status).

It is important to remember that with categorical data our interest is not to compute measures of centrality or variance like means and standard deviations, and therefore we won’t compare the distribution of items or persons to a normal distribution (i.e., the bell curve). Rather, the data held in the categories are counts, and so our evaluation approach is to use statistical methods based on frequencies and ranks.

In the following steps, we calculate frequencies, relative frequencies, proportions, and percentages for categorical variables. Consider this simple data set.

We add up how many participants are in each employment status group and transfer the information to our chart:

Better yet, here we will use SAS to produce a frequency distribution table.

SAS code to produce a frequency distribution for employment status

This program produces the basic frequency distribution table for a set of categorical data and since we included the PROC FORMAT commands we can explain the data output clearly.

Features of Proc Freq: Distribution for a Categorical Variable

FREQUENCY DISTRIBUTION OF EMPLOYMENT STATUS

The FREQ Procedure for Employment Status

Distribution for a continuous variable

Now let’s talk about analyzing data for continuous variables.

Suppose we recorded the heights (in inches) of 200 students. In this example, height is a continuous variable since the possible values include decimals (not just whole numbers), there are equal intervals between each line on a tape measure, and there is a meaningful 0.

While we can examine frequencies and create a histogram for a continuous variable, it is likely that we will have many different values in our dataset because each student will have a slightly different height. For example, Tom might be 61.5 inches tall while Cara is 61.6 inches tall. As a result, few students will record the exact same height. It may be, therefore, more meaningful to group these data and create categories. In other words, you can transform a continuous variable into a categorical variable simply by grouping the data with the IF-THEN logic statements.

In the following example, we will use the grouping approach so that we can create a more comprehensive frequency distribution.

Let’s start with a dataset that includes two variables for our sample of 200 students (ID and HEIGHT). For each participant, we assign an ID and then record the height in inches. (Note: although in Canada we use the metric system for most of our measurements, we continue to refer to our heights in feet and inches; old habits die hard!)

Here we can use SAS to produce the frequency distribution table based on our grouping strategy for the data. We start by naming the working file and then include the appropriate SYNTAX to describe the variables and add the simple logic statements.

Raw Dataset 13.1 Two-hundred Height Measurements (inches)

Below is the SAS code required for the frequency analysis for the dataset above:

PROC FORMAT;
  VALUE HT 1='LESS THAN 66.0'
           2='66.1 TO 68.0'
           3='68.1 TO 70.0'
           4='70.1 TO 72.0'
           5='MORE THAN 72.0';
DATA HEIGHTS;
  LABEL ID = 'PARTICIPANT ID'
        HEIGHT = 'PARTICIPANT HEIGHT'
        HTGRP = 'HEIGHT GROUP';
  INPUT ID HEIGHT @@;

Notice some specific features of the INPUT statement above. Here we list two variables: ID and HEIGHT, followed by two @ symbols at the end of the list of variables. When two @ symbols are presented together SAS does not skip to a new line after reading the list of variables (in this case ID and HEIGHT), but rather reads across the page. This format enables us to read the data as a constant stream across the page for as many rows as is required to present the entire dataset. The computer reads the data in the order of the variables listed. That is, the computer reads through the dataset assigning the first value as the ID and the second value as the HEIGHT until all data are read.
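The way a continuous stream of values is split into records can be sketched in Python. The snippet below takes the first six ID and HEIGHT pairs from the chapter's data listing and pairs them off in order; it illustrates the reading order only and is not a model of how SAS parses input.

```python
# First six records from the chapter's data listing, written as one stream.
stream = "001 58.5 002 58.8 003 60.1 004 61.3 005 61.75 006 61.96"

tokens = stream.split()
# Values are consumed in the order of the variable list: ID first, then HEIGHT.
records = [(int(tokens[i]), float(tokens[i + 1])) for i in range(0, len(tokens), 2)]

for participant_id, height in records:
    print(participant_id, height)
```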

Below is the block of simple logic statements that follows the INPUT statement. With these simple IF-THEN logic statements we organize the large, unwieldy dataset into five manageable groups.

  IF HEIGHT <= 66.0 THEN HTGRP=1;
  IF HEIGHT > 66.0 AND HEIGHT <= 68.0 THEN HTGRP=2;
  IF HEIGHT > 68.0 AND HEIGHT <= 70.0 THEN HTGRP=3;
  IF HEIGHT > 70.0 AND HEIGHT <= 72.0 THEN HTGRP=4;
  IF HEIGHT > 72.0 THEN HTGRP=5;
DATALINES;
001 58.5 002 58.8 003 60.1 004 61.3 005 61.75 006 61.96
. . .

199 79.40 200 79.47
;
PROC FREQ; TABLES HEIGHT; RUN;
PROC FREQ; TABLES HTGRP; FORMAT HTGRP HT.; RUN;
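The grouping rules in the IF-THEN statements can be mirrored in Python to check the cut-offs. The sketch below uses the eight heights visible in the data listing plus four invented values (marked as hypothetical) so that every group is represented; the function name `height_group` is our own.

```python
from collections import Counter

def height_group(height):
    """Map a height in inches to the HTGRP codes 1 to 5 used in the SAS program."""
    if height <= 66.0:
        return 1
    if height <= 68.0:
        return 2
    if height <= 70.0:
        return 3
    if height <= 72.0:
        return 4
    return 5

listed = [58.5, 58.8, 60.1, 61.3, 61.75, 61.96, 79.40, 79.47]  # shown in the listing
extra = [66.0, 67.2, 69.5, 71.0]                               # hypothetical values

group_counts = Counter(height_group(h) for h in listed + extra)
for group in range(1, 6):
    print("HTGRP", group, group_counts[group])
```

Note that a height of exactly 66.0 falls in group 1 under these rules, even though the format label for that group reads 'LESS THAN 66.0'.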

As you see in the partial output presented in Table 13.4 below, when we run the SAS command: PROC FREQ; TABLES HEIGHT; RUN; most of the values occur only once because height is a continuous variable, which allows greater variation than categorical or count type variables. When reading this output, make sure that you screen for outliers by looking at the high and low values for the variable. SAS will also indicate the number of missing values, which is also important when you are cleaning and screening your data.

Table 13.4 The Output from PROC FREQ Applied to Continuous Data: Participant Height

13.3 Creating a Histogram in SAS

Producing graphs in SAS enables us to examine the distribution of the data visually rather than in a table. The SAS code shown here includes the option to produce a histogram. A histogram is more than a vertical bar chart. Histograms use rectangles to illustrate the frequency and interval, whereby the height of the rectangle is relative to the frequency (y axis) and the width of the rectangle is relative to the interval (x axis).

The SAS code used here provides the analysis for our sample of 200 measures of height within a cohort of students. In order to establish the appropriate number of intervals in our sample, we calculate the range of our set of scores. The range refers to the spread of scores between the lowest value in our sample and the highest value in our sample. We can estimate the range a priori by running PROC UNIVARIATE. When we include the MIDPOINTS= option we can customize the output. Here we include the statement HISTOGRAM / MIDPOINTS = 55 TO 85 BY 0.5 to cover the expected range of lowest and highest values and then plot the midpoints for all categories within the range.

PROC UNIVARIATE; VAR HEIGHT; HISTOGRAM / MIDPOINTS=55 TO 85 BY 0.5 NORMAL; RUN;

Notice in Figure 13.6 that the x-axis is a continuous variable. An overlay of the shape of the distribution is represented by the blue BELL-SHAPED normal curve.

Figure 13.6 Histogram for Heights of Students in Sample of 200 Participants
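To make the idea of midpoints concrete, the Python sketch below assigns each height to the nearest midpoint on a grid running from 55 in steps of 0.5. It is only an approximation of what a histogram routine does: the helper `nearest_midpoint` is hypothetical, and values that fall exactly on a boundary are placed in the upper bin here, which may not match SAS's own rule.

```python
import math
from collections import Counter

def nearest_midpoint(value, start=55.0, step=0.5):
    """Return the midpoint of the bin (step wide) that contains value."""
    index = math.floor((value - start) / step + 0.5)
    return start + index * step

# The eight heights visible in the chapter's data listing.
heights = [58.5, 58.8, 60.1, 61.3, 61.75, 61.96, 79.40, 79.47]

bins = Counter(nearest_midpoint(h) for h in heights)
for midpoint in sorted(bins):
    print(midpoint, bins[midpoint])
```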

Use the following SAS code to group the data into wider categories and overlay a normal curve (the NORMAL option) when plotting the histogram. The CTEXT = BLUE option sets the colour of the text on the plot.

proc univariate; var HEIGHT; histogram HEIGHT / normal midpoints = 55 60 65 70 75 80 85 90 CTEXT = BLUE; run;

Or you can use: proc sgplot; histogram HEIGHT; density HEIGHT; run;

Figure 13.7 Histogram for Heights of Students N= 200 Grouped Data

Dividing a continuous variable into categories

In the example shown above, it is easy to see how helpful it is to arrange these continuous data into categories that represent a range of values rather than individual values on a continuum.

In our height example, we can optimize these data by creating height categories rather than exact heights because there is so much variance in exact heights. However, in this process of arbitrarily categorizing our response variable by grouping the data together we recognize that there will be a loss of information. When grouping data we are essentially saying that the responses are exactly the same even though differences are observed. For example, when you group a student who is 61.5 inches tall with a student who is 64 inches tall, the difference between the two individuals will be ignored within the category. There is absolutely nothing wrong with using categories that represent a range of continuous values but if you are planning to collect your own data, it is usually best to collect continuous data from the source and group the data later. Generally speaking, you can always convert data from continuous data to categories but without the original estimates, you cannot go the other way!

Once you deem it helpful to transform a continuous variable into categories, you next need to decide how to chop up your data. Ideally, each group should cover an equal range of values. In our example, in which you can see that the would-be participants are quite tall, we decided to transform the continuous height data into categories that are each 2 inches wide. Group 1 includes students with a height of 66 inches or less, Group 2 starts at 66.1 and tops out at 68 inches, Group 3 starts at 68.1 and tops out at 70 inches … and so on.

Alternatively, we could have decided to group students in intervals of 5 inches or even 1 inch. As the researcher, you decide how to group the data based on what will make meaningful groupings. This might be based on past literature, clinical reference values, logic, or a combination of these factors. Once we create our categories we can sort students into each group based on their height. Then we can count how many students fall into each group and create a frequency distribution table (Table 13.5) and a histogram. Notice that the shape (distribution) of this histogram differs from the one we created using the continuous version of the height variable (Figure 13.6). This is because in the first histogram exact values are included and the data is divided into quintiles based on the mean. On the other hand, in the second histogram students are grouped together in 2-inch height intervals; depending on the cut-off values that we chose, the distribution would be different.

Using the grouping routines with simple logic statements helps to simplify the organization of the data. The output for the grouped data is invoked with the SAS command: PROC FREQ; TABLES HTGRP; FORMAT HTGRP HT. ; RUN;

Table 13.5 Grouped PROC FREQ Output for Participant Height

Figure 13.7 Histogram for Heights of Students using Height Group (N=200)

In addition to the histogram, SAS also includes a number of tables in the output of PROC UNIVARIATE. The data in these tables provide important information about our variable (height, in this example). As you can see in Table 13.8, the Moments table provides descriptive statistics about our variable, including the mean, standard deviation, and standard error, as well as the overall variance. Skewness and kurtosis are also provided, and these give valuable information about how well the variable fits the normal distribution. Keep your critical thinking hat on when looking at these data because some of this information is not relevant for categorical variables. For example, an average is not meaningful for a categorical variable, and comparing it to the normal distribution doesn’t make sense.
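The quantities in a moments table can be computed by hand, which helps in interpreting them. The Python sketch below uses ten invented heights and the simple moment-based formulas for skewness and excess kurtosis; SAS applies bias-adjusted versions by default, so its figures can differ slightly from these. The function name `moments` is our own.

```python
from math import sqrt
from statistics import mean, stdev

def moments(values):
    """Descriptive statistics using simple (unadjusted) moment formulas for shape."""
    n = len(values)
    m = mean(values)
    m2 = sum((v - m) ** 2 for v in values) / n   # second central moment
    m3 = sum((v - m) ** 3 for v in values) / n   # third central moment
    m4 = sum((v - m) ** 4 for v in values) / n   # fourth central moment
    sd = stdev(values)                           # sample standard deviation
    return {
        "n": n, "mean": m, "std_dev": sd, "variance": sd ** 2,
        "std_error": sd / sqrt(n),
        "skewness": m3 / m2 ** 1.5,
        "kurtosis": m4 / m2 ** 2 - 3,            # excess kurtosis: 0 for a normal curve
    }

# Hypothetical heights in inches, for illustration only.
heights = [61.5, 64.0, 66.2, 67.8, 68.4, 69.0, 69.9, 71.3, 73.0, 79.4]
result = moments(heights)
for name, value in result.items():
    print(f"{name:<10} {value:.3f}")
```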

Table 13.8 Descriptive Statistics for the Dataset of Heights
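To make the quantities in the Moments table concrete, here is a rough Python sketch of how they can be computed by hand. The function name `moments` and the sample data are hypothetical. The skewness and kurtosis below are the simple moment-based versions; SAS applies small-sample adjustments by default, so its reported values may differ somewhat from these.

```python
import math
import statistics


def moments(values):
    """Return basic moment statistics for a list of numbers."""
    n = len(values)
    mean = statistics.mean(values)
    variance = statistics.variance(values)  # sample variance (divides by n - 1)
    sd = math.sqrt(variance)
    se = sd / math.sqrt(n)                  # standard error of the mean

    # Central moments (dividing by n) for the shape statistics.
    m2 = sum((x - mean) ** 2 for x in values) / n
    m3 = sum((x - mean) ** 3 for x in values) / n
    m4 = sum((x - mean) ** 4 for x in values) / n

    return {
        "n": n,
        "mean": mean,
        "variance": variance,
        "std_dev": sd,
        "std_error": se,
        "skewness": m3 / m2 ** 1.5,         # 0 for a symmetric sample
        "kurtosis": m4 / m2 ** 2 - 3,       # excess kurtosis, 0 for a normal curve
    }


# Hypothetical, perfectly symmetric heights in inches.
result = moments([60.0, 62.0, 64.0, 66.0, 68.0])
print(result)
```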

The next table presents the Basic Statistical Measures (Table 13.9). In addition to the mean, standard deviation, and variance, this table also provides the median, mode, range, and interquartile range for the variable.

Table 13.9 Basic Statistical Measures Table
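The location and spread measures listed in this table can be reproduced with Python's standard `statistics` module, as in the sketch below. The function name `basic_measures` and the data are assumptions for illustration. Quartiles can be defined in several ways; this sketch uses the module's default method, which may not match the percentile definition SAS uses, so the interquartile range can differ slightly between tools.

```python
import statistics


def basic_measures(values):
    """Median, mode, range and interquartile range of a list of numbers."""
    q1, _, q3 = statistics.quantiles(values, n=4)  # default 'exclusive' method
    return {
        "median": statistics.median(values),
        "mode": statistics.mode(values),
        "range": max(values) - min(values),
        "iqr": q3 - q1,
    }


# Hypothetical heights in inches.
measures = basic_measures([60, 62, 64, 64, 66, 68, 70])
print(measures)
```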

Finally, Table 13.10 is the Extreme Observations Table which identifies the highest and lowest values of the variable. Here the data do not differ from what we would expect but when datasets contain outliers, this table is one way to identify the outlier data points easily. Notice that the table provides the case number along with the data point value, making it easy to revisit the original dataset and verify original values or consider adjustments to extreme values if needed.

Table 13.10 Extreme Observations Table
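The idea behind the Extreme Observations table, listing the lowest and highest values together with their case numbers, can be sketched in a few lines of Python. The function name `extreme_observations` and the sample heights are hypothetical; case numbers are assumed to start at 1 and follow the order of the data.

```python
def extreme_observations(values, k=5):
    """Return the k lowest and k highest values as (value, case_number) pairs."""
    # Pair every value with its 1-based case number, then sort by value.
    indexed = sorted(enumerate(values, start=1), key=lambda pair: pair[1])
    lowest = [(value, case) for case, value in indexed[:k]]
    highest = [(value, case) for case, value in indexed[-k:]]
    return lowest, highest


# Hypothetical heights in inches; case 5 (78) is the tallest participant.
heights = [66, 59, 71, 64, 78, 68, 62, 70, 65, 73, 67, 69]
lowest, highest = extreme_observations(heights)

print("Lowest :", lowest)
print("Highest:", highest)
```

Keeping the case number next to each value is what makes it easy to go back to the original record and verify it.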

Below is a SAS program to produce a graphical presentation of the data while also creating an organized frequency distribution table.

OPTIONS PAGESIZE=55 LINESIZE=120 CENTER DATE;
DATA RPRT1;
INPUT DIVISION $ 1-12 CASES 14-16;
LABEL DIVISION='CATEGORIES';
DATALINES;
58.5-61.5    4
61.5-64.5    12
64.5-67.5    44
67.5-70.5    64
70.5-73.5    56
73.5-76.5    16
76.5-79.5    4
;
RUN;
PROC GCHART DATA=REPORTS.RPRT1;
VBAR DIVISION/SUMVAR=CASES;
RUN;

Figure 13.8. Vertical Bar Chart of height categories produced in SAS.

PROC FREQ DATA=REPORTS.RPRT1;
WEIGHT CASES;
TABLES DIVISION;
RUN;

Table 13.11 Frequency table of height categories produced in SAS
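For readers who want to check the arithmetic behind a weighted frequency table, the Python sketch below starts from the same seven height categories and case counts listed in the SAS data lines and derives the frequency, percent, cumulative frequency, and cumulative percent for each category. The function name `frequency_table` is an assumption for this example, and the printed layout is only an approximation of what a statistics package would show.

```python
# Category labels and case counts copied from the SAS DATALINES above.
divisions = [
    ("58.5-61.5", 4),
    ("61.5-64.5", 12),
    ("64.5-67.5", 44),
    ("67.5-70.5", 64),
    ("70.5-73.5", 56),
    ("73.5-76.5", 16),
    ("76.5-79.5", 4),
]


def frequency_table(rows):
    """Return (label, freq, percent, cum_freq, cum_percent) for each row."""
    total = sum(count for _, count in rows)
    table = []
    running = 0
    for label, count in rows:
        running += count
        table.append(
            (label, count, 100 * count / total, running, 100 * running / total)
        )
    return table


table = frequency_table(divisions)
for label, freq, pct, cum_freq, cum_pct in table:
    print(f"{label:<10} {freq:>4} {pct:>6.1f} {cum_freq:>4} {cum_pct:>6.1f}")
```

The counts sum to 200, which matches the sample size reported for the height histogram.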

13.4 Outliers

As depicted in Figure 13.12 below, outliers can be defined as cases with extreme values on one variable (univariate) or on several variables (multivariate) (Tabachnick & Fidell, 2013). Outliers can be either error outliers (incorrect values) or “interesting” outliers (correct but unusual) (Orr, Sackett, & Dubois, 1991). Error outliers need to be checked against the original data for verification and then either corrected or removed from the data set. Interesting outliers are less easy to deal with (see Tabachnick and Fidell, 2013, for recommended strategies), but they are important to think about because they pull the mean towards them and have a stronger influence on the data than other values. The bottom line is that before you move on to further analysis or data transformation, it is essential to run a frequency analysis and screen your data for outliers.

Error outliers can be detected using PROC FREQ and checking for values that don’t make sense. For example, if you had 976 as someone’s age, that would be a red flag and you would investigate further.
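The same screening idea can be sketched in Python: tabulate the values, then flag any that fall outside a plausible range. The function name `flag_implausible`, the sample ages, and the 0 to 120 limits are all assumptions chosen for illustration; sensible limits depend on the variable and the study population.

```python
from collections import Counter


def flag_implausible(values, low, high):
    """Return {value: count} for values outside the range [low, high]."""
    counts = Counter(values)  # the frequency table of observed values
    return {v: n for v, n in sorted(counts.items()) if v < low or v > high}


# Hypothetical ages; 976 and -3 are data-entry errors.
ages = [34, 51, 976, 29, 51, 47, -3]
flagged = flag_implausible(ages, low=0, high=120)
print(flagged)
```

Flagged values are candidates for checking against the original records, not automatic deletions.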

Interesting outliers, while unusual, are still within the realm of possibility. They can be identified graphically using the PROC GPLOT procedure, or with the Extreme Observations table from PROC UNIVARIATE (Table 13.10), which lists the five highest and five lowest values for a particular variable.

In the graph below, one person’s data doesn’t follow the same pattern as the rest of the sample. This is an example of an outlier.

Figure 13.12. Example of an Outlier within a Distribution

Applied Statistics in Healthcare Research Copyright © 2020 by William J. Montelpare, Ph.D., Emily Read, Ph.D., Teri McComber, Alyson Mahar, Ph.D., and Krista Ritchie, Ph.D. is licensed under a Creative Commons Attribution-NonCommercial-NoDerivatives 4.0 International License , except where otherwise noted.

Encyclopedia of Mathematical Geosciences pp 1–4

Frequency Distribution

- Ricardo A. Olea

- Living reference work entry

- First Online: 22 September 2021

Part of the book series: Encyclopedia of Earth Sciences Series ((EESS))

Given a numerical dataset, a frequency distribution is a summary displaying fluctuations of an attribute within the range of values. In contrast to an analytical probability distribution, a frequency distribution always deals with empirically observed values (Everitt and Skrondal 2010). In general, the larger the number of values, the more useful the frequency distribution is relative to listing all values. Today, multiple software packages allow easy display of a frequency distribution.

The Merriam-Webster Dictionary (2020) states that the first use of the term with the meaning observed here was in 1895. However, it provides no reference.

The dataset may be univariate or multivariate. Here, for simplicity, the entry deals with univariate datasets.

Different Forms

In the broadest sense, a frequency distribution may be tabular or graphical. In either case, the most common practice is to classify the data into classes with the same interval length. Table 1 and Fig. 1 provide...

Bibliography

de Gruijter J, Brus DJ, Bierkens MFP, Knotters M (2006) Sampling for natural resource monitoring: statistics and methodology of sampling and data analysis. Springer, 348 pp

Deutsch CV (1989) DECLUS: a FORTRAN 77 program for determining optimum spatial declustering weights. Comput Geosci 15(3):325–332

Everitt BS, Skrondal A (2010) The Cambridge dictionary of statistics, 4th edn. Cambridge University Press, 431 pp

Merriam-Webster Dictionary (2020) Frequency distribution. https://www.merriam-webster.com/dictionary/frequency%20distribution . Accessed 15 Sept 2020

Olea RA (2017) Resampling of spatially correlated data with preferential sampling for the estimation of frequency distributions and semivariograms. Stoch Env Res Risk A 31(2):481–491

Scott DC, Shaffer BN, Haacke JE, Pierce PE, Kinney SA (2019) Coal geology and assessment of coal resources and reserves in the Little Snake River coal field and Red Desert assessment area, Greater Green River Basin, Wyoming: U.S. Geological Survey Professional Paper 1836, 169 pp. https://doi.org/10.3133/pp1836. Accessed 15 Sept 2019

Author information

Authors and affiliations.

Geology, Energy and Minerals Science Center, U.S. Geological Survey, Reston, VA, USA

Ricardo A. Olea

Corresponding author

Correspondence to Ricardo A. Olea .

Editor information

Editors and affiliations.

System Science & Informatics Unit, Indian Statistical Institute- Bangalore Centre, Bangalore, India

B.S. Daya Sagar

Institute of Earth Sciences, China University of Geosciences, Beijing, China

Qiuming Cheng

School of Natural and Built Environment, Queen's University Belfast, Belfast, UK

Jennifer McKinley

Canada Geological Survey, Ottawa, ON, Canada

Frits Agterberg

Copyright information

© 2021 This is a U.S. Government work and not under copyright protection in the U.S.; foreign copyright protection may apply

About this entry

Cite this entry.

Olea, R.A. (2021). Frequency Distribution. In: Daya Sagar, B., Cheng, Q., McKinley, J., Agterberg, F. (eds) Encyclopedia of Mathematical Geosciences. Encyclopedia of Earth Sciences Series. Springer, Cham. https://doi.org/10.1007/978-3-030-26050-7_125-1

DOI : https://doi.org/10.1007/978-3-030-26050-7_125-1

Received : 04 October 2020

Accepted : 19 May 2021

Published : 22 September 2021

Publisher Name : Springer, Cham

Print ISBN : 978-3-030-26050-7

Online ISBN : 978-3-030-26050-7

eBook Packages : Springer Reference Earth and Environm. Science Reference Module Physical and Materials Science Reference Module Earth and Environmental Sciences

Chapter history

DOI: https://doi.org/10.1007/978-3-030-26050-7_125-2

DOI: https://doi.org/10.1007/978-3-030-26050-7_125-1

Quickonomics

Frequency Distribution

Definition of frequency distribution.

A frequency distribution is a representation of how often different values or intervals occur in a dataset. It organizes data into groups or intervals, along with a count of how many times each value or interval appears. Frequency distributions are commonly used to analyze and understand the distribution of data.

Let’s consider a dataset of students’ test scores in a class. The scores range from 60 to 100. We want to create a frequency distribution to understand how many students scored within certain ranges. We decide to group the scores into intervals of 10 (e.g., 60-69, 70-79, etc.).

After analyzing the dataset, we find the following frequency distribution:

- 60-69: 4 students

- 70-79: 8 students

- 80-89: 10 students

- 90-99: 5 students

- 100: 2 students

This frequency distribution tells us that there are 4 students who scored between 60 and 69, 8 students who scored between 70 and 79, and so on. We can use this information to identify the most common score range and understand the distribution of scores in the class.
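As a small illustration of how this table can be put to work, the Python sketch below takes the five counts from the example and derives the class size, the most common score range, and each range's share of the class. The variable names are assumptions for this example.

```python
# Counts taken from the test-score example above.
score_counts = {
    "60-69": 4,
    "70-79": 8,
    "80-89": 10,
    "90-99": 5,
    "100": 2,
}

total_students = sum(score_counts.values())

# The modal class is the score range with the highest frequency.
modal_range = max(score_counts, key=score_counts.get)

# Relative frequency: each count as a share of the whole class.
relative = {rng: count / total_students for rng, count in score_counts.items()}

print(f"Students: {total_students}, most common range: {modal_range}")
for rng, share in relative.items():
    print(f"{rng}: {share:.1%}")
```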

Why Frequency Distribution Matters

Frequency distributions are important for analyzing and summarizing data. They provide an organized and concise representation of how values are distributed within a dataset. By visualizing the frequency distribution, patterns and trends in the data can be identified, such as the presence of outliers or the shape of the distribution (e.g., normal, skewed, etc.). This helps in making informed decisions, identifying areas of improvement, and understanding the characteristics of the data. Frequency distributions are widely used in statistics, research, and data analysis across various fields and industries.

11 Frequency Distributions

Jenna Lehmann

In statistics, many tests are run on large numbers of data points, and it’s important to understand how those data are spread out and how individual values compare with one another. A frequency distribution is just that: an outline of what the data look like as a unit. A frequency table is one way to present it. It’s an organized tabulation showing the number of individuals located in each category on the scale of measurement. The table lists each score from highest to lowest (X) and, next to it, the number of times that score appears in the data (f), so you can read off which scores occur in a data set and how often each one occurs.

Organizing Data into a Frequency Distribution

- Find the range

- Order the table from highest score to lowest score, not skipping scores that might not have shown up in the data set

- In the next column, document how many times this score shows up in the data set
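The three steps above can be sketched in Python as follows. This is a minimal illustration that assumes whole-number scores; the function name `frequency_table` and the sample scores are hypothetical. Scores inside the range that never occur are kept in the table with a frequency of 0, as the second step requires.

```python
from collections import Counter


def frequency_table(scores):
    """Return (X, f) rows from the highest score to the lowest.

    Assumes integer scores. Every value in the range is listed,
    including values that do not appear in the data (f = 0).
    """
    counts = Counter(scores)
    lowest, highest = min(scores), max(scores)  # step 1: find the range
    return [(x, counts[x]) for x in range(highest, lowest - 1, -1)]


# Hypothetical quiz scores.
scores = [8, 10, 7, 8, 5, 10, 8]
rows = frequency_table(scores)

print(" X  f")
for x, f in rows:
    print(f"{x:>2} {f:>2}")
```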

Organizing Data into a Grouped Frequency Table

1. The grouped frequency table should have about 10 intervals. A good strategy is to pick a few candidate widths according to Guideline 2 and divide the total range of scores by each width to see which one gives close to 10 intervals.

2. The width of the interval should be a relatively simple number (like 2, 5, or 10).

3. The bottom score in each class interval should be a multiple of the width (0-9, 10-19, 20-29, etc.).

4. All intervals should be the same width.
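One way to apply these guidelines mechanically is sketched below in Python. It assumes whole-number scores, and the function names `choose_width` and `class_intervals`, the list of candidate widths, and the example range of 53 to 94 are all assumptions made for illustration. The sketch tries each simple width, counts how many equal-width intervals it would produce when the bottom of the first interval is a multiple of the width, and keeps the width that lands closest to 10 intervals.

```python
def first_lower_bound(low, width):
    """Largest multiple of `width` that is not above the lowest score."""
    return (low // width) * width


def choose_width(low, high, candidates=(1, 2, 5, 10, 20, 50, 100)):
    """Pick the simple width that gives closest to 10 intervals."""
    def n_intervals(width):
        return (high - first_lower_bound(low, width)) // width + 1

    return min(candidates, key=lambda w: abs(n_intervals(w) - 10))


def class_intervals(low, high, width):
    """Equal-width intervals (bottom, top) covering low..high."""
    start = first_lower_bound(low, width)
    return [(b, b + width - 1) for b in range(start, high + 1, width)]


# Hypothetical exam scores ranging from 53 to 94.
width = choose_width(53, 94)
intervals = class_intervals(53, 94, width)

print(f"width = {width}, {len(intervals)} intervals")
for bottom, top in intervals:
    print(f"{bottom}-{top}")
```

For this range a width of 5 gives nine intervals starting at 50, which is closer to the target of about 10 than a width of 2 or 10 would be.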

Proportions and Percentages

Proportions measure the fraction of the total group that is associated with each score (they’re called relative frequencies because they describe the frequency in relation to the total number of scores). For example, if I have 10 pieces of fruit and 3 of them are oranges, 3/10 is the proportion of oranges. Percentages, on the other hand, express relative frequency out of 100 but report essentially the same information. Keeping in line with our fruit example, 30% of my fruit is oranges.
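The fruit example can be written out as a short Python sketch. Only the 3 oranges out of 10 pieces of fruit come from the text; the split of the remaining 7 pieces into apples and bananas is invented here so that the table has more than one row.

```python
from fractions import Fraction

# 3 oranges out of 10 pieces is from the example above;
# the apple and banana counts are hypothetical.
fruit_counts = {"orange": 3, "apple": 5, "banana": 2}

total = sum(fruit_counts.values())

# Proportion: frequency divided by the total number of observations.
proportions = {name: Fraction(f, total) for name, f in fruit_counts.items()}

# Percentage: the same relative frequency expressed out of 100.
percentages = {name: 100 * f / total for name, f in fruit_counts.items()}

for name in fruit_counts:
    print(f"{name}: proportion {proportions[name]}, {percentages[name]:.0f}%")
```

Proportions always sum to 1 and percentages to 100, which is a quick check that no category was missed.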

Real Limits