
Hypothesis to Be Tested: Definition and 4 Steps for Testing with Example


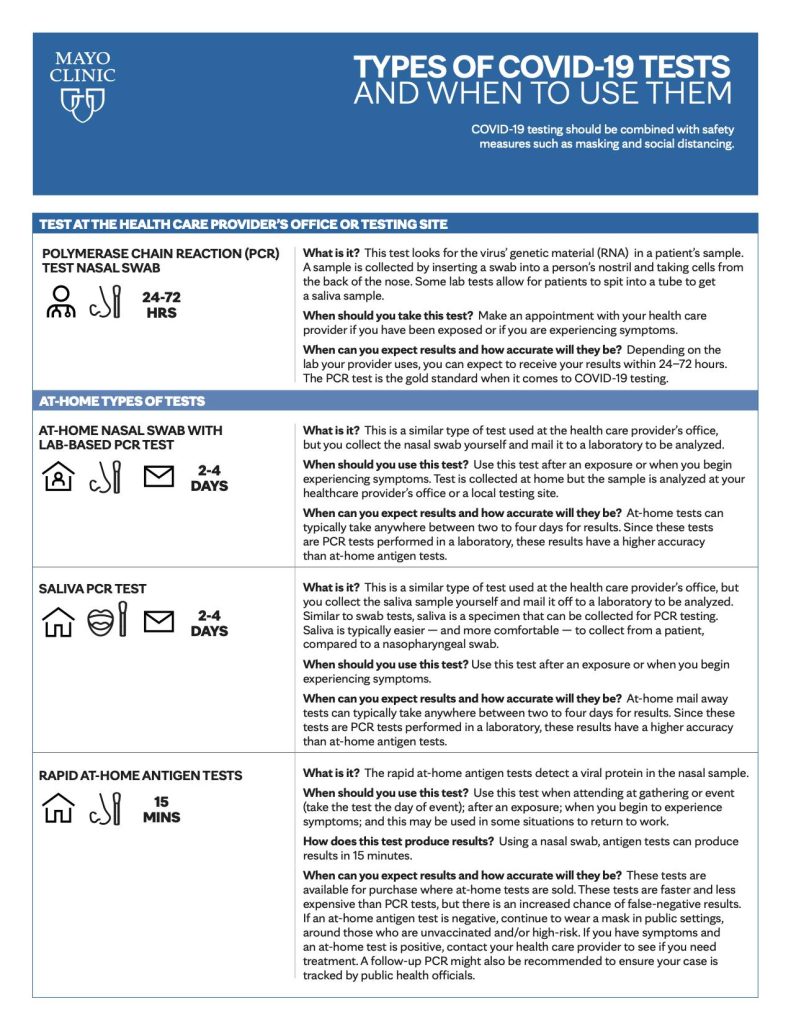

What Is Hypothesis Testing?

Hypothesis testing, sometimes called significance testing, is a statistical procedure in which an analyst tests an assumption about a population parameter. The methodology the analyst uses depends on the nature of the data and the purpose of the analysis.

Hypothesis testing is used to assess the plausibility of a hypothesis by using sample data. Such data may come from a larger population, or from a data-generating process. The word "population" will be used for both of these cases in the following descriptions.

Key Takeaways

- Hypothesis testing is used to assess the plausibility of a hypothesis by using sample data.

- The test provides evidence concerning the plausibility of the hypothesis, given the data.

- Statistical analysts test a hypothesis by measuring and examining a random sample of the population being analyzed.

- The four steps of hypothesis testing include stating the hypotheses, formulating an analysis plan, analyzing the sample data, and analyzing the result.

How Hypothesis Testing Works

In hypothesis testing, an analyst tests a statistical sample, with the goal of providing evidence on the plausibility of the null hypothesis.

Statistical analysts test a hypothesis by measuring and examining a random sample of the population being analyzed. The random sample is used to test two competing hypotheses: the null hypothesis and the alternative hypothesis.

The null hypothesis is usually a hypothesis of equality between population parameters; e.g., a null hypothesis may state that the population mean return is equal to zero. The alternative hypothesis is effectively the opposite of the null hypothesis (e.g., the population mean return is not equal to zero). Thus, they are mutually exclusive, and only one can be true. However, one of the two hypotheses will always be true.

The null hypothesis is a statement about a population parameter, such as the population mean, that is assumed to be true.

4 Steps of Hypothesis Testing

All hypotheses are tested using a four-step process:

- The first step is for the analyst to state the hypotheses.

- The second step is to formulate an analysis plan, which outlines how the data will be evaluated.

- The third step is to carry out the plan and analyze the sample data.

- The final step is to analyze the results and either reject the null hypothesis, or state that the null hypothesis is plausible, given the data.

Real-World Example of Hypothesis Testing

If, for example, a person wants to test that a penny has exactly a 50% chance of landing on heads, the null hypothesis would be that 50% is correct, and the alternative hypothesis would be that 50% is not correct.

Mathematically, the null hypothesis would be represented as H0: p = 0.5. The alternative hypothesis would be denoted "Ha" and be identical to the null hypothesis, except that the equal sign is replaced by a not-equal sign (Ha: p ≠ 0.5), meaning that the probability of heads does not equal 50%.

A random sample of 100 coin flips is taken, and the null hypothesis is then tested. If the 100 coin flips come up 40 heads and 60 tails, the result is far enough from the expected 50/50 split that the analyst would typically conclude that a penny does not have a 50% chance of landing on heads, rejecting the null hypothesis in favor of the alternative hypothesis (although at a 5% significance level this particular result sits close to the cutoff).

If, on the other hand, there were 48 heads and 52 tails, then it is plausible that the coin could be fair and still produce such a result. In cases such as this where the null hypothesis is "accepted," the analyst states that the difference between the expected results (50 heads and 50 tails) and the observed results (48 heads and 52 tails) is "explainable by chance alone."
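The coin example can be checked numerically. The following is a minimal sketch, not part of the original article, that computes an exact two-sided binomial p-value for a given number of heads; the function name `binomial_two_sided_p` is hypothetical. Under these assumptions, 40 heads in 100 flips gives a p-value of roughly 0.057 (borderline at the 5% level; a normal approximation lands slightly below 0.05), while 48 heads gives roughly 0.76, which is easily explainable by chance.

```python
from math import comb


def binomial_two_sided_p(heads: int, flips: int, p0: float = 0.5) -> float:
    """Exact two-sided p-value under H0: P(heads) = p0.

    Sums the probability of every outcome that is no more likely
    than the observed one (hypothetical helper for illustration).
    """
    pmf = [comb(flips, k) * p0**k * (1 - p0) ** (flips - k)
           for k in range(flips + 1)]
    observed = pmf[heads]
    # The small tolerance guards against floating-point ties.
    total = sum(p for p in pmf if p <= observed * (1 + 1e-9))
    return min(1.0, total)


for heads in (40, 48):
    p_value = binomial_two_sided_p(heads, 100)
    print(f"{heads} heads out of 100: p-value = {p_value:.4f}")
```

The smaller the p-value, the harder it is to attribute the gap between observed and expected counts to chance alone.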

Some statisticians attribute the first hypothesis tests to satirical writer John Arbuthnot in 1710, who studied male and female births in England after observing that in nearly every year, male births exceeded female births by a slight proportion. Arbuthnot calculated that the probability of this happening by chance was small, and therefore it was due to “divine providence.”

What is Hypothesis Testing?

Hypothesis testing refers to a process used by analysts to assess the plausibility of a hypothesis by using sample data. In hypothesis testing, statisticians formulate two hypotheses: the null hypothesis and the alternative hypothesis. A null hypothesis states that there is no difference between two groups or conditions, while the alternative hypothesis states that there is a difference. Researchers evaluate the statistical significance of the test based on how probable the observed data would be if the null hypothesis were true.

What are the Four Key Steps Involved in Hypothesis Testing?

Hypothesis testing begins with an analyst stating two hypotheses, with only one that can be right. The analyst then formulates an analysis plan, which outlines how the data will be evaluated. Next, they move to the testing phase and analyze the sample data. Finally, the analyst analyzes the results and either rejects the null hypothesis or states that the null hypothesis is plausible, given the data.

What are the Benefits of Hypothesis Testing?

Hypothesis testing helps assess the accuracy of new ideas or theories by testing them against data. This allows researchers to determine whether the evidence supports their hypothesis, helping to avoid false claims and conclusions. Hypothesis testing also provides a framework for decision-making based on data rather than personal opinions or biases. By relying on statistical analysis, hypothesis testing helps to reduce the effects of chance and confounding variables, providing a robust framework for making informed conclusions.

What are the Limitations of Hypothesis Testing?

Hypothesis testing relies exclusively on data and doesn’t provide a comprehensive understanding of the subject being studied. Additionally, the accuracy of the results depends on the quality of the available data and the statistical methods used. Inaccurate data or inappropriate hypothesis formulation may lead to incorrect conclusions or failed tests. Hypothesis testing can also lead to errors, such as analysts either accepting or rejecting a null hypothesis when they shouldn’t have. These errors may result in false conclusions or missed opportunities to identify significant patterns or relationships in the data.

The Bottom Line

Hypothesis testing refers to a statistical process that helps researchers and analysts determine the reliability of a study. By using a well-formulated hypothesis and a set of statistical tests, individuals or businesses can make inferences about the population that they are studying and draw conclusions based on the data presented. There are different types of hypothesis testing, each with its own set of rules and procedures. However, all hypothesis testing methods share the same four-step process, which includes stating the hypotheses, formulating an analysis plan, analyzing the sample data, and analyzing the result. Hypothesis testing is a vital part of the scientific process, helping to test assumptions and make better data-based decisions.


How to Write a Strong Hypothesis | Steps & Examples

Published on May 6, 2022 by Shona McCombes . Revised on November 20, 2023.

A hypothesis is a statement that can be tested by scientific research. If you want to test a relationship between two or more variables, you need to write hypotheses before you start your experiment or data collection.

Example: Hypothesis

Daily apple consumption leads to fewer doctor’s visits.


A hypothesis states your predictions about what your research will find. It is a tentative answer to your research question that has not yet been tested. For some research projects, you might have to write several hypotheses that address different aspects of your research question.

A hypothesis is not just a guess – it should be based on existing theories and knowledge. It also has to be testable, which means you can support or refute it through scientific research methods (such as experiments, observations and statistical analysis of data).

Variables in hypotheses

Hypotheses propose a relationship between two or more types of variables.

- An independent variable is something the researcher changes or controls.

- A dependent variable is something the researcher observes and measures.

If there are any control variables, extraneous variables, or confounding variables, be sure to jot those down as you go to minimize the chances that research bias will affect your results.

In this example, the independent variable is exposure to the sun (the assumed cause). The dependent variable is the level of happiness (the assumed effect).


Step 1. Ask a question

Writing a hypothesis begins with a research question that you want to answer. The question should be focused, specific, and researchable within the constraints of your project.

Step 2. Do some preliminary research

Your initial answer to the question should be based on what is already known about the topic. Look for theories and previous studies to help you form educated assumptions about what your research will find.

At this stage, you might construct a conceptual framework to ensure that you’re embarking on a relevant topic. This can also help you identify which variables you will study and what you think the relationships are between them. Sometimes, you’ll have to operationalize more complex constructs.

Step 3. Formulate your hypothesis

Now you should have some idea of what you expect to find. Write your initial answer to the question in a clear, concise sentence.

Step 4. Refine your hypothesis

You need to make sure your hypothesis is specific and testable. There are various ways of phrasing a hypothesis, but all the terms you use should have clear definitions, and the hypothesis should contain:

- The relevant variables

- The specific group being studied

- The predicted outcome of the experiment or analysis

Step 5. Phrase your hypothesis in three ways

To identify the variables, you can write a simple prediction in if…then form. The first part of the sentence states the independent variable and the second part states the dependent variable.

In academic research, hypotheses are more commonly phrased in terms of correlations or effects, where you directly state the predicted relationship between variables.

If you are comparing two groups, the hypothesis can state what difference you expect to find between them.

Step 6. Write a null hypothesis

If your research involves statistical hypothesis testing, you will also have to write a null hypothesis. The null hypothesis is the default position that there is no association between the variables. The null hypothesis is written as H0, while the alternative hypothesis is H1 or Ha.

- H0: The number of lectures attended by first-year students has no effect on their final exam scores.

- H1: The number of lectures attended by first-year students has a positive effect on their final exam scores.


Null and alternative hypotheses are used in statistical hypothesis testing. The null hypothesis of a test always predicts no effect or no relationship between variables, while the alternative hypothesis states your research prediction of an effect or relationship.

Hypothesis testing is a formal procedure for investigating our ideas about the world using statistics. It is used by scientists to test specific predictions, called hypotheses, by calculating how likely it is that a pattern or relationship between variables could have arisen by chance.


McCombes, S. (2023, November 20). How to Write a Strong Hypothesis | Steps & Examples. Scribbr. Retrieved March 12, 2024, from https://www.scribbr.com/methodology/hypothesis/



Introduction to Hypothesis Testing

A statistical hypothesis is an assumption about a population parameter.

For example, we may assume that the mean height of a male in the U.S. is 70 inches.

The assumption about the height is the statistical hypothesis and the true mean height of a male in the U.S. is the population parameter.

A hypothesis test is a formal statistical test we use to reject or fail to reject a statistical hypothesis.

The Two Types of Statistical Hypotheses

To test whether a statistical hypothesis about a population parameter is true, we obtain a random sample from the population and perform a hypothesis test on the sample data.

There are two types of statistical hypotheses:

The null hypothesis, denoted as H0, is the hypothesis that the sample data occurs purely from chance.

The alternative hypothesis, denoted as H1 or Ha, is the hypothesis that the sample data is influenced by some non-random cause.

Hypothesis Tests

A hypothesis test consists of five steps:

1. State the hypotheses.

State the null and alternative hypotheses. These two hypotheses need to be mutually exclusive, so if one is true then the other must be false.

2. Determine a significance level to use for the hypothesis.

Decide on a significance level. Common choices are .01, .05, and .1.

3. Find the test statistic.

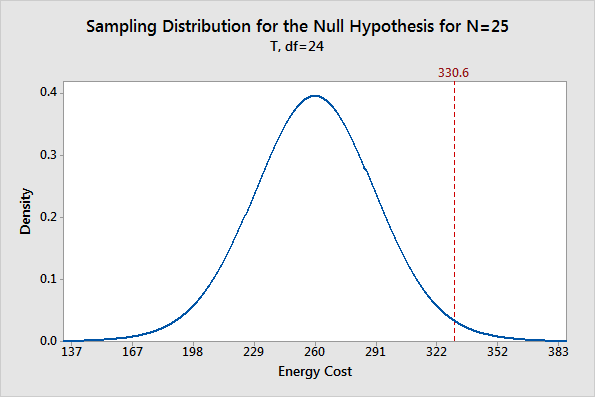

Find the test statistic and the corresponding p-value. Often we are analyzing a population mean or proportion and the general formula to find the test statistic is: (sample statistic – population parameter) / (standard deviation of statistic)

4. Reject or fail to reject the null hypothesis.

Using the test statistic or the p-value, determine if you can reject or fail to reject the null hypothesis based on the significance level.

The p-value tells us the strength of the evidence against the null hypothesis: the smaller the p-value, the stronger the evidence. If the p-value is less than the significance level, we reject the null hypothesis.

5. Interpret the results.

Interpret the results of the hypothesis test in the context of the question being asked.
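The five steps above can be sketched in a few lines of code. This is a minimal illustration, not the article's own method: it assumes a one-sample z-test with a known population standard deviation, and the sample figures (a mean of 71 inches from 36 men, a standard deviation of 3 inches) are hypothetical values chosen only to make the arithmetic visible. The function name `one_sample_z_test` is also an assumption.

```python
from math import sqrt
from statistics import NormalDist


def one_sample_z_test(sample_mean, mu0, sigma, n, alpha=0.05):
    """Two-sided z-test of H0: mu = mu0 against Ha: mu != mu0."""
    # Step 3: (sample statistic - hypothesized parameter) / standard error
    z = (sample_mean - mu0) / (sigma / sqrt(n))
    # Two-sided p-value from the standard normal distribution
    p_value = 2 * (1 - NormalDist().cdf(abs(z)))
    # Step 4: reject H0 when the p-value is below the significance level
    reject = p_value < alpha
    return z, p_value, reject


# Steps 1 and 2: H0: mu = 70, Ha: mu != 70, significance level 0.05.
# All sample values below are hypothetical.
z, p_value, reject = one_sample_z_test(sample_mean=71.0, mu0=70.0,
                                       sigma=3.0, n=36, alpha=0.05)

# Step 5: interpret the result in context
print(f"z = {z:.2f}, p-value = {p_value:.4f}")
print("Reject H0" if reject else "Fail to reject H0")
```

With a small sample and an unknown population standard deviation, a t-test would usually replace the z-test; the structure of the steps stays the same.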

The Two Types of Decision Errors

There are two types of decision errors that one can make when doing a hypothesis test:

Type I error: You reject the null hypothesis when it is actually true. The probability of committing a Type I error is equal to the significance level, often called alpha , and denoted as α.

Type II error: You fail to reject the null hypothesis when it is actually false. The probability of committing a Type II error is called beta, denoted as β. The power of the test, which is the probability of correctly rejecting a false null hypothesis, is 1 − β.
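To make the two error rates concrete, here is a rough sketch that computes the power of an upper-tailed z-test, where power is one minus the Type II error probability. The function name `z_test_power` and all numeric inputs are assumptions for illustration. When the true mean equals the null value, the rejection probability collapses to α, the Type I error rate.

```python
from math import sqrt
from statistics import NormalDist


def z_test_power(mu0, mu1, sigma, n, alpha=0.05):
    """Power of an upper-tailed z-test of H0: mu = mu0 when the true mean is mu1."""
    std_normal = NormalDist()
    z_crit = std_normal.inv_cdf(1 - alpha)      # rejection cutoff
    shift = (mu1 - mu0) / (sigma / sqrt(n))     # true effect in standard-error units
    beta = std_normal.cdf(z_crit - shift)       # P(fail to reject | true mean is mu1)
    return 1 - beta


# Hypothetical inputs: null mean 70, true mean 71, sigma 3
for n in (36, 100):
    print(f"n = {n}: power = {z_test_power(70, 71, 3, n):.3f}")
```

Increasing the sample size shrinks the standard error, which lowers β and raises power without changing α.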

One-Tailed and Two-Tailed Tests

A statistical hypothesis can be one-tailed or two-tailed.

A one-tailed hypothesis involves making a “greater than” or “less than” statement.

For example, suppose we assume the mean height of a male in the U.S. is greater than or equal to 70 inches. The null hypothesis would be H0: µ ≥ 70 inches and the alternative hypothesis would be Ha: µ < 70 inches.

A two-tailed hypothesis involves making an “equal to” or “not equal to” statement.

For example, suppose we assume the mean height of a male in the U.S. is equal to 70 inches. The null hypothesis would be H0: µ = 70 inches and the alternative hypothesis would be Ha: µ ≠ 70 inches.

Note: The “equal” sign is always included in the null hypothesis, whether it is =, ≥, or ≤.
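The difference between one-tailed and two-tailed tests shows up in how the p-value is computed from the same test statistic. The sketch below assumes a z statistic that follows a standard normal distribution under the null hypothesis; the function name `p_value_from_z` and the `alternative` labels are hypothetical.

```python
from statistics import NormalDist


def p_value_from_z(z, alternative="two-sided"):
    """p-value for a z statistic under a standard normal null distribution."""
    cdf = NormalDist().cdf
    if alternative == "less":        # Ha: mu < mu0 (lower tail only)
        return cdf(z)
    if alternative == "greater":     # Ha: mu > mu0 (upper tail only)
        return 1 - cdf(z)
    if alternative == "two-sided":   # Ha: mu != mu0 (both tails)
        return 2 * (1 - cdf(abs(z)))
    raise ValueError(f"unknown alternative: {alternative!r}")


z = -2.0  # hypothetical test statistic
for alt in ("less", "greater", "two-sided"):
    print(f"{alt:>9}: p-value = {p_value_from_z(z, alt):.4f}")
```

For a statistic that falls in the direction of the alternative, the two-tailed p-value is twice the one-tailed value, so a one-tailed test rejects more readily in that direction.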


Types of Hypothesis Tests

There are many different types of hypothesis tests you can perform depending on the type of data you’re working with and the goal of your analysis.

The following tutorials provide an explanation of the most common types of hypothesis tests:

- Introduction to the One Sample t-test

- Introduction to the Two Sample t-test

- Introduction to the Paired Samples t-test

- Introduction to the One Proportion Z-Test

- Introduction to the Two Proportion Z-Test

Published by Zach


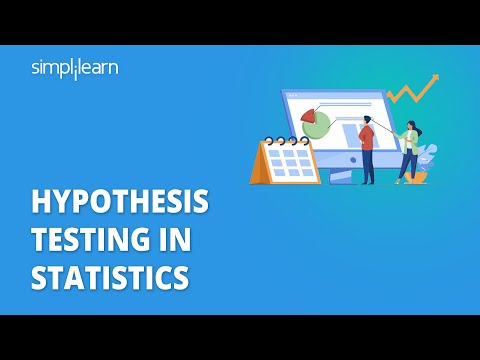

Understanding Hypothesis Tests: Why We Need to Use Hypothesis Tests in Statistics


Hypothesis testing is an essential procedure in statistics. A hypothesis test evaluates two mutually exclusive statements about a population to determine which statement is best supported by the sample data. When we say that a finding is statistically significant, it’s thanks to a hypothesis test. How do these tests really work and what does statistical significance actually mean?

In this series of three posts, I’ll help you intuitively understand how hypothesis tests work by focusing on concepts and graphs rather than equations and numbers. After all, a key reason to use statistical software like Minitab is so you don’t get bogged down in the calculations and can instead focus on understanding your results.

To kick things off in this post, I highlight the rationale for using hypothesis tests with an example.

The Scenario

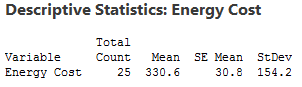

An economist wants to determine whether the monthly energy cost for families has changed from the previous year, when the mean cost per month was $260. The economist randomly samples 25 families and records their energy costs for the current year. (The data for this example is FamilyEnergyCost and it is just one of the many data set examples that can be found in Minitab’s Data Set Library.)

I’ll use these descriptive statistics to create a probability distribution plot that shows you the importance of hypothesis tests. Read on!

The Need for Hypothesis Tests

Why do we even need hypothesis tests? After all, we took a random sample and our sample mean of 330.6 is different from 260. That is different, right? Unfortunately, the picture is muddied because we’re looking at a sample rather than the entire population.

Sampling error is the difference between a sample and the entire population. Thanks to sampling error, it’s entirely possible that while our sample mean is 330.6, the population mean could still be 260. Or, to put it another way, if we repeated the experiment, it’s possible that the second sample mean could be close to 260. A hypothesis test helps assess the likelihood of this possibility!

Use the Sampling Distribution to See If Our Sample Mean is Unlikely

For any given random sample, the mean of the sample almost certainly doesn’t equal the true mean of the population due to sampling error. For our example, it’s unlikely that the mean cost for the entire population is exactly 330.6. In fact, if we took multiple random samples of the same size from the same population, we could plot a distribution of the sample means.

A sampling distribution is the distribution of a statistic, such as the mean, that is obtained by repeatedly drawing a large number of samples from a specific population. This distribution allows you to determine the probability of obtaining the sample statistic.

Fortunately, I can create a plot of sample means without collecting many different random samples! Instead, I’ll create a probability distribution plot using the t-distribution, the sample size, and the variability in our sample to graph the sampling distribution.

Our goal is to determine whether our sample mean is significantly different from the null hypothesis mean. Therefore, we’ll use the graph to see whether our sample mean of 330.6 is unlikely assuming that the population mean is 260. The graph below shows the expected distribution of sample means.

You can see that the most probable sample mean is 260, which makes sense because we’re assuming that the null hypothesis is true. However, there is a reasonable probability of obtaining a sample mean that ranges from 167 to 352, and even beyond! The takeaway from this graph is that while our sample mean of 330.6 is not the most probable, it’s also not outside the realm of possibility.
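The idea of a sampling distribution can also be explored by simulation. The sketch below is not how the graph in this post was made (that uses the t-distribution and the sample's own variability); it draws many samples of 25 from a normal population centered on the null value of 260 and counts how often a sample mean lands at least as far from 260 as 330.6 does. The population standard deviation of 150 is purely an assumed value for illustration, as are the variable and function names.

```python
import random
from statistics import fmean

random.seed(0)            # fixed seed so the run is repeatable

NULL_MEAN = 260.0         # population mean under the null hypothesis
OBSERVED_MEAN = 330.6     # sample mean from the scenario
SAMPLE_SIZE = 25
ASSUMED_SD = 150.0        # hypothetical population standard deviation
N_SAMPLES = 10_000


def simulate_sample_means(mu, sd, n, reps):
    """Draw `reps` random samples of size `n` and return their means."""
    return [fmean([random.gauss(mu, sd) for _ in range(n)])
            for _ in range(reps)]


means = simulate_sample_means(NULL_MEAN, ASSUMED_SD, SAMPLE_SIZE, N_SAMPLES)
gap = abs(OBSERVED_MEAN - NULL_MEAN)
share_extreme = sum(abs(m - NULL_MEAN) >= gap for m in means) / N_SAMPLES

print(f"Average of simulated sample means: {fmean(means):.1f}")
print(f"Share at least as far from 260 as 330.6: {share_extreme:.4f}")
```

The share printed at the end depends heavily on the assumed standard deviation, which is why a real analysis estimates variability from the sample itself.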

The Role of Hypothesis Tests

We’ve placed our sample mean in the context of all possible sample means while assuming that the null hypothesis is true. Are these results statistically significant?

As you can see, there is no magic place on the distribution curve to make this determination. Instead, we have a continual decrease in the probability of obtaining sample means that are further from the null hypothesis value. Where do we draw the line?

This is where hypothesis tests are useful. A hypothesis test allows us to quantify the probability that our sample mean is unusual.

For this series of posts, I’ll continue to use this graphical framework and add in the significance level, P value, and confidence interval to show how hypothesis tests work and what statistical significance really means.

- Part Two: Significance Levels (alpha) and P values

- Part Three: Confidence Intervals and Confidence Levels

If you'd like to see how I made these graphs, please read: How to Create a Graphical Version of the 1-sample t-Test.


© 2023 Minitab, LLC. All Rights Reserved.


Hypothesis Testing: Definition, Uses, Limitations + Examples

Hypothesis testing is as old as the scientific method and is at the heart of the research process.

Research exists to validate or disprove assumptions about various phenomena. The process of validation involves testing and it is in this context that we will explore hypothesis testing.

What is a Hypothesis?

A hypothesis is a calculated prediction or assumption about a population parameter based on limited evidence. The whole idea behind hypothesis formulation is testing: the researcher subjects the calculated assumption to a series of evaluations to find out whether it is true or false.

Typically, research starts with a hypothesis: the investigator makes a claim and runs experiments to determine whether this claim is true or false. For instance, if you predict that students who drink milk before class perform better than those who don’t, then this becomes a hypothesis that can be confirmed or refuted using an experiment.


What are the Types of Hypotheses?

1. Simple Hypothesis

Also known as a basic hypothesis, a simple hypothesis suggests that an independent variable is responsible for a corresponding dependent variable. In other words, an occurrence of the independent variable inevitably leads to an occurrence of the dependent variable.

Typically, simple hypotheses are considered as generally true, and they establish a causal relationship between two variables.

Examples of Simple Hypothesis

- Drinking soda and other sugary drinks can cause obesity.

- Smoking cigarettes daily leads to lung cancer.

2. Complex Hypothesis

A complex hypothesis is also known as a modal. It accounts for the causal relationship between two independent variables and the resulting dependent variables. This means that the combination of the independent variables leads to the occurrence of the dependent variables.

Examples of Complex Hypotheses

- Adults who do not smoke and drink are less likely to develop liver-related conditions.

- Global warming causes icebergs to melt which in turn causes major changes in weather patterns.

3. Null Hypothesis

As the name suggests, a null hypothesis is formed when a researcher suspects that there’s no relationship between the variables in an observation. In this case, the purpose of the research is to support or reject this assumption.

Examples of Null Hypothesis

- There is no significant change in a student’s performance if they drink coffee or tea before classes.

- There’s no significant change in the growth of a plant if one uses distilled water only or vitamin-rich water.


4. Alternative Hypothesis

To reject a null hypothesis, the researcher has to come up with an opposite assumption, known as the alternative hypothesis. This means that if the null hypothesis says that A is false, the alternative hypothesis assumes that A is true.

An alternative hypothesis can be directional or non-directional depending on the direction of the difference. A directional alternative hypothesis specifies the direction of the tested relationship, stating that one variable is predicted to be larger or smaller than the null value while a non-directional hypothesis only validates the existence of a difference without stating its direction.

Examples of Alternative Hypotheses

- Starting your day with a cup of tea instead of a cup of coffee can make you more alert in the morning.

- The growth of a plant improves significantly when it receives distilled water instead of vitamin-rich water.

5. Logical Hypothesis

Logical hypotheses are some of the most common types of calculated assumptions in systematic investigations. It is an attempt to use your reasoning to connect different pieces in research and build a theory using little evidence. In this case, the researcher uses any data available to him, to form a plausible assumption that can be tested.

Examples of Logical Hypothesis

- Waking up early helps you to have a more productive day.

- Beings from Mars would not be able to breathe the air in the atmosphere of the Earth.

6. Empirical Hypothesis

After forming a logical hypothesis, the next step is to create an empirical or working hypothesis. At this stage, your logical hypothesis undergoes systematic testing to prove or disprove the assumption. An empirical hypothesis is subject to several variables that can trigger changes and lead to specific outcomes.

Examples of Empirical Hypothesis

- People who eat more fish run faster than people who eat meat.

- Women taking vitamin E grow hair faster than those taking vitamin K.

7. Statistical Hypothesis

When forming a statistical hypothesis, the researcher examines the portion of a population of interest and makes a calculated assumption based on the data from this sample. A statistical hypothesis is most common with systematic investigations involving a large target audience. Here, it’s impossible to collect responses from every member of the population so you have to depend on data from your sample and extrapolate the results to the wider population.

Examples of Statistical Hypothesis

- 45% of students in Louisiana have middle-income parents.

- 80% of the UK’s population gets a divorce because of irreconcilable differences.

What is Hypothesis Testing?

Hypothesis testing is an assessment method that allows researchers to determine the plausibility of a hypothesis. It involves testing an assumption about a specific population parameter to know whether it’s true or false. These population parameters include variance, standard deviation, and median.

Typically, hypothesis testing starts with developing a null hypothesis and then performing several tests that support or reject the null hypothesis. The researcher uses test statistics to compare the association or relationship between two or more variables.


Researchers also use hypothesis testing to calculate the coefficient of variation and determine if the regression relationship and the correlation coefficient are statistically significant.

How Hypothesis Testing Works

The basis of hypothesis testing is to examine and analyze the null hypothesis and alternative hypothesis to know which one is the most plausible assumption. Since both assumptions are mutually exclusive, only one can be true. In other words, if the null hypothesis is true, the alternative hypothesis must be false, and vice versa.


What Are The Stages of Hypothesis Testing?

To successfully confirm or refute an assumption, the researcher goes through five (5) stages of hypothesis testing:

- Determine the null hypothesis

- Specify the alternative hypothesis

- Set the significance level

- Calculate the test statistics and corresponding P-value

- Draw your conclusion

- Determine the Null Hypothesis

As mentioned earlier, hypothesis testing starts with creating a null hypothesis, which stands as the default assumption that there is no effect or difference. For example, the null hypothesis (H0) could suggest that different subgroups in the research population react to a variable in the same way.

- Specify the Alternative Hypothesis

Once you know the variables for the null hypothesis, the next step is to determine the alternative hypothesis. The alternative hypothesis counters the null assumption by suggesting that an effect or difference does exist. Depending on the purpose of your research, the alternative hypothesis can be one-sided or two-sided.

Using the example we established earlier, the alternative hypothesis may argue that the different sub-groups react differently to the same variable based on several internal and external factors.

- Set the Significance Level

Many researchers set the significance level at 5%. This means accepting a 0.05 probability of rejecting the null hypothesis, and going with the alternative hypothesis, when the null hypothesis is in fact true.

Something to note here is that the smaller the significance level, the greater the burden of proof needed to reject the null hypothesis and support the alternative hypothesis.


- Calculate the Test Statistics and Corresponding P-Value

The test statistic summarizes how far your sample data departs from what the null hypothesis predicts, for example when comparing groups, while the p-value is the probability of obtaining a sample statistic at least as extreme as the one observed if your null hypothesis is true. Test statistics are typically built from sample measures such as the mean, the median and similar quantities.

If your p-value is 0.65, for example, then results at least as extreme as yours would occur about 65 in 100 times by pure chance if the null hypothesis were true. Use this formula to determine the p-value for your data:

- Draw Your Conclusions

After conducting the test, you should be able to reject or fail to reject the null hypothesis based on the evidence from your sample data.

Applications of Hypothesis Testing in Research

Hypothesis testing isn’t only confined to numbers and calculations; it also has several real-life applications in business, manufacturing, advertising, and medicine.

In a factory or other manufacturing plants, hypothesis testing is an important part of quality and production control before the final products are approved and sent out to the consumer.

During ideation and strategy development, C-level executives use hypothesis testing to evaluate their theories and assumptions before any form of implementation. For example, they could leverage hypothesis testing to determine whether or not some new advertising campaign, marketing technique, etc. causes increased sales.

In addition, hypothesis testing is used during clinical trials to evaluate the efficacy of a drug or new medical method before it is approved for widespread human use.

What is an Example of Hypothesis Testing?

An employer claims that her workers are of above-average intelligence. She takes a random sample of 20 of them and gets the following results:

Mean IQ Scores: 110

Standard Deviation: 15

Mean Population IQ: 100

Step 1: Using the value of the mean population IQ, we establish the null hypothesis that the workers' mean IQ is 100 (H0: μ = 100).

Step 2: State the alternative hypothesis that the workers' mean IQ is greater than 100 (H1: μ > 100).

Step 3: State the alpha level as 0.05 or 5%.

Step 4: Find the critical value that marks the rejection region (given by your alpha level above) from the z-table. For this one-sided test, an upper-tail area of .05 corresponds to a z-score of 1.645.

Step 5: Calculate the test statistic using this formula:

Z = (110 – 100) ÷ (15 ÷ √20)

Z = 10 ÷ 3.354 ≈ 2.98

If the value of the test statistic is higher than the critical value that marks the rejection region, then you should reject the null hypothesis. If it is less, then you cannot reject the null.

In this case, 2.98 > 1.645, so we reject the null.
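The arithmetic in this example can be reproduced with Python's standard library. The sketch below is a minimal illustration, not a general-purpose routine: it follows the example in treating the standard deviation of 15 as known and using a one-sided z-test, and the variable names are chosen only for this illustration. It computes the test statistic without rounding the standard error, looks up the critical value, and also reports the one-sided p-value.

```python
from math import sqrt
from statistics import NormalDist

sample_mean = 110.0  # mean IQ of the 20 sampled workers
pop_mean = 100.0     # value stated in the null hypothesis
std_dev = 15.0       # standard deviation given in the example
n = 20               # sample size
alpha = 0.05         # significance level

# Standard error of the mean and the z test statistic.
std_error = std_dev / sqrt(n)
z = (sample_mean - pop_mean) / std_error

# Upper-tail critical value and p-value from the standard normal distribution.
z_critical = NormalDist().inv_cdf(1 - alpha)
p_value = 1 - NormalDist().cdf(z)
reject_null = z > z_critical

print(f"standard error = {std_error:.3f}")
print(f"z = {z:.2f}, critical value = {z_critical:.3f}")
print(f"one-sided p-value = {p_value:.4f}")
print("reject H0" if reject_null else "fail to reject H0")
```

With only 20 observations and a standard deviation estimated from the sample, many analysts would use a t-test instead; the z-test is kept here to match the example.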

Importance/Benefits of Hypothesis Testing

The most significant benefit of hypothesis testing is that it allows you to evaluate the strength of your claim or assumption against sample data before acting on it. Hypothesis testing does not prove that something “is or is not”; it quantifies how strongly the data contradict the null hypothesis. Other benefits include:

- Hypothesis testing provides a reliable framework for making any data decisions for your population of interest.

- It helps the researcher to successfully extrapolate data from the sample to the larger population.

- Hypothesis testing allows the researcher to determine whether the data from the sample is statistically significant.

- Hypothesis testing is one of the most important processes for measuring the validity and reliability of outcomes in any systematic investigation.

- It helps to provide links to the underlying theory and specific research questions.

Criticism and Limitations of Hypothesis Testing

Several limitations of hypothesis testing can affect the quality of data you get from this process. Some of these limitations include:

- The interpretation of a p-value depends on the stopping rule and on how multiple comparisons are defined. This makes the p-value difficult to calculate, since the stopping rule is open to several interpretations and “multiple comparisons” is unavoidably ambiguous.

- Conceptual issues often arise in hypothesis testing, especially if the researcher merges Fisher and Neyman-Pearson’s methods which are conceptually distinct.

- In an attempt to focus on the statistical significance of the data, the researcher might ignore the estimation and confirmation by repeated experiments.

- Hypothesis testing can trigger publication bias, especially when it requires statistical significance as a criterion for publication.

- When used to detect whether a difference exists between groups, hypothesis testing can trigger absurd assumptions that affect the reliability of your observation.

- busayo.longe

Business Insights

Harvard Business School Online's Business Insights Blog provides the career insights you need to achieve your goals and gain confidence in your business skills.

A Beginner’s Guide to Hypothesis Testing in Business

- 30 Mar 2021

Becoming a more data-driven decision-maker can bring several benefits to your organization, enabling you to identify new opportunities to pursue and threats to abate. Rather than allowing subjective thinking to guide your business strategy, backing your decisions with data can empower your company to become more innovative and, ultimately, profitable.

If you’re new to data-driven decision-making, you might be wondering how data translates into business strategy. The answer lies in generating a hypothesis and verifying or rejecting it based on what various forms of data tell you.

Below is a look at hypothesis testing and the role it plays in helping businesses become more data-driven.

What Is Hypothesis Testing?

To understand what hypothesis testing is, it’s important first to understand what a hypothesis is.

A hypothesis or hypothesis statement seeks to explain why something has happened, or what might happen, under certain conditions. It can also be used to understand how different variables relate to each other. Hypotheses are often written as if-then statements; for example, “If this happens, then this will happen.”

Hypothesis testing, then, is a statistical means of testing an assumption stated in a hypothesis. While the specific methodology leveraged depends on the nature of the hypothesis and data available, hypothesis testing typically uses sample data to extrapolate insights about a larger population.

Hypothesis Testing in Business

When it comes to data-driven decision-making, there’s a certain amount of risk that can mislead a professional. This could be due to flawed thinking or observations, incomplete or inaccurate data, or the presence of unknown variables. The danger in this is that, if major strategic decisions are made based on flawed insights, it can lead to wasted resources, missed opportunities, and catastrophic outcomes.

The real value of hypothesis testing in business is that it allows professionals to test their theories and assumptions before putting them into action. This essentially allows an organization to verify its analysis is correct before committing resources to implement a broader strategy.

As one example, consider a company that wishes to launch a new marketing campaign to revitalize sales during a slow period. Doing so could be an incredibly expensive endeavor, depending on the campaign’s size and complexity. The company, therefore, may wish to test the campaign on a smaller scale to understand how it will perform.

In this example, the hypothesis that’s being tested would fall along the lines of: “If the company launches a new marketing campaign, then it will translate into an increase in sales.” It may even be possible to quantify how much of a lift in sales the company expects to see from the effort. Pending the results of the pilot campaign, the business would then know whether it makes sense to roll it out more broadly.

Key Considerations for Hypothesis Testing

1. Alternative Hypothesis and Null Hypothesis

In hypothesis testing, the hypothesis that’s being tested is known as the alternative hypothesis. Often, it’s expressed as a correlation or statistical relationship between variables. The null hypothesis, on the other hand, is a statement that’s meant to show there’s no statistical relationship between the variables being tested. It’s typically the exact opposite of whatever is stated in the alternative hypothesis.

For example, consider a company’s leadership team that historically and reliably sees $12 million in monthly revenue. They want to understand if reducing the price of their services will attract more customers and, in turn, increase revenue.

In this case, the alternative hypothesis may take the form of a statement such as: “If we reduce the price of our flagship service by five percent, then we’ll see an increase in sales and realize revenues greater than $12 million in the next month.”

The null hypothesis, on the other hand, would indicate that revenues wouldn’t increase from the base of $12 million, or might even decrease.

2. Significance Level and P-Value

Statistically speaking, if you were to run the same scenario 100 times, you’d likely receive somewhat different results each time. If you were to plot these results in a distribution plot, you’d see the most likely outcome is at the tallest point in the graph, with less likely outcomes falling to the right and left of that point.

With this in mind, imagine you’ve completed your hypothesis test and have your results, which indicate there may be a correlation between the variables you were testing. To understand your results' significance, you’ll need to identify a p-value for the test, which indicates how compatible your data are with the null hypothesis.

In statistics, the p-value is the probability that, assuming the null hypothesis is correct, you would observe results at least as extreme as the results of your hypothesis test. The smaller the p-value, the stronger the evidence against the null hypothesis and the greater the statistical significance of your results. The p-value is not, however, the probability that the alternative hypothesis is correct.

3. One-Sided vs. Two-Sided Testing

When it’s time to test your hypothesis, it’s important to leverage the correct testing method. The two most common hypothesis testing methods are one-sided and two-sided tests, also called one-tailed and two-tailed tests, respectively.

Typically, you’d leverage a one-sided test when you have a strong conviction about the direction of change you expect to see due to your hypothesis test. You’d leverage a two-sided test when you’re less confident in the direction of change.
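The practical difference between the two methods shows up in the p-value. The sketch below is a rough illustration that assumes a test statistic following the standard normal distribution; the helper `p_values` and the z-score of 1.8 are made up for the example. It computes the upper-tail, lower-tail, and two-sided p-values for the same test statistic.

```python
from statistics import NormalDist


def p_values(z):
    """One-sided and two-sided p-values for a standard normal test statistic."""
    std_normal = NormalDist()
    upper = 1 - std_normal.cdf(z)  # evidence for "greater than"
    lower = std_normal.cdf(z)      # evidence for "less than"
    two_sided = 2 * min(upper, lower)  # evidence for "different from"
    return {"upper": upper, "lower": lower, "two_sided": two_sided}


alpha = 0.05
result = p_values(1.8)  # hypothetical z-score
for name, p in result.items():
    decision = "reject H0" if p < alpha else "fail to reject H0"
    print(f"{name}: p = {p:.4f} -> {decision}")
```

For this made-up z-score, the upper-tail test rejects the null hypothesis at the 5% level while the two-sided test does not, which is why the direction of the test should be chosen before looking at the data.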

4. Sampling

To perform hypothesis testing in the first place, you need to collect a sample of data to be analyzed. Depending on the question you’re seeking to answer or investigate, you might collect samples through surveys, observational studies, or experiments.

A survey involves asking a series of questions to a random population sample and recording self-reported responses.

Observational studies involve a researcher observing a sample population and collecting data as it occurs naturally, without intervention.

Finally, an experiment involves dividing a sample into multiple groups, one of which acts as the control group. For each non-control group, the variable being studied is manipulated to determine how the data collected differs from that of the control group.
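Dividing a sample into a control group and one or more test groups is usually done at random, so that the groups differ only by chance. The sketch below shows one simple way to do this under stated assumptions: the function `assign_groups`, the 20 participant IDs, and the fixed seed are all hypothetical and serve only to illustrate the idea.

```python
import random


def assign_groups(unit_ids, n_groups=2, seed=42):
    """Shuffle the units, then deal them out into n_groups of near-equal size."""
    rng = random.Random(seed)  # fixed seed so the assignment is reproducible
    shuffled = list(unit_ids)
    rng.shuffle(shuffled)
    # Every n_groups-th unit goes to the same group.
    return [shuffled[i::n_groups] for i in range(n_groups)]


participants = range(1, 21)  # hypothetical participant IDs 1..20
control, treatment = assign_groups(participants)
print("control:  ", sorted(control))
print("treatment:", sorted(treatment))
```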

Learn How to Perform Hypothesis Testing

Hypothesis testing is a complex process involving different moving pieces that can allow an organization to effectively leverage its data and inform strategic decisions.

If you’re interested in better understanding hypothesis testing and the role it can play within your organization, one option is to complete a course that focuses on the process. Doing so can lay the statistical and analytical foundation you need to succeed.

Teach yourself statistics

How to Test Statistical Hypotheses

This lesson describes a general procedure that can be used to test statistical hypotheses.

How to Conduct Hypothesis Tests

All hypothesis tests are conducted the same way. The researcher states a hypothesis to be tested, formulates an analysis plan, analyzes sample data according to the plan, and rejects or fails to reject the null hypothesis, based on results of the analysis.

- State the hypotheses. Every hypothesis test requires the analyst to state a null hypothesis and an alternative hypothesis. The hypotheses are stated in such a way that they are mutually exclusive. That is, if one is true, the other must be false; and vice versa.

- Significance level. Often, researchers choose significance levels equal to 0.01, 0.05, or 0.10; but any value between 0 and 1 can be used.

- Test method. Typically, the test method involves a test statistic and a sampling distribution. Computed from sample data, the test statistic might be a mean score, proportion, difference between means, difference between proportions, z-score, t statistic, chi-square, etc. Given a test statistic and its sampling distribution, a researcher can assess probabilities associated with the test statistic. If the test statistic probability is less than the significance level, the null hypothesis is rejected.

- Test statistic. When the null hypothesis involves a mean or proportion, the test statistic takes one of the following forms, depending on whether the standard deviation of the statistic is known or has to be estimated by its standard error:

Test statistic = (Statistic - Parameter) / (Standard deviation of statistic)

Test statistic = (Statistic - Parameter) / (Standard error of statistic)

Here, Parameter is the value appearing in the null hypothesis, and Statistic is the point estimate of Parameter.

- P-value. The P-value is the probability of observing a sample statistic at least as extreme as the test statistic, assuming the null hypothesis is true.

- Interpret the results. If the sample findings are unlikely, given the null hypothesis, the researcher rejects the null hypothesis. Typically, this involves comparing the P-value to the significance level, and rejecting the null hypothesis when the P-value is less than the significance level.
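The general procedure above can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not a definitive implementation: it assumes the test statistic follows a standard normal sampling distribution (a z-test), the names `ZTestResult` and `z_test` are hypothetical, and the data (224 successes in 400 trials, tested against a null proportion of 0.50) are made up.

```python
from dataclasses import dataclass
from math import sqrt
from statistics import NormalDist


@dataclass
class ZTestResult:
    test_statistic: float
    p_value: float
    reject_null: bool


def z_test(statistic, parameter, std_error, alpha=0.05, tail="two-sided"):
    """Hypothetical helper: test statistic, P-value, and decision for a z-test."""
    # Test statistic = (Statistic - Parameter) / (Standard error of statistic)
    z = (statistic - parameter) / std_error
    std_normal = NormalDist()
    if tail == "upper":
        p_value = 1 - std_normal.cdf(z)
    elif tail == "lower":
        p_value = std_normal.cdf(z)
    elif tail == "two-sided":
        p_value = 2 * (1 - std_normal.cdf(abs(z)))
    else:
        raise ValueError("tail must be 'upper', 'lower', or 'two-sided'")
    # Reject the null hypothesis when the P-value is below the significance level.
    return ZTestResult(z, p_value, p_value < alpha)


# Made-up data: 224 successes in 400 trials; null hypothesis P = 0.50.
n = 400
sample_proportion = 224 / n
null_proportion = 0.50
std_error = sqrt(null_proportion * (1 - null_proportion) / n)

result = z_test(sample_proportion, null_proportion, std_error)
print(result)
```

The significance level and the tail are passed in as arguments because, in the procedure described above, both belong to the analysis plan and are fixed before the sample data are analyzed.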

Applications of the General Hypothesis Testing Procedure

The next few lessons show how to apply the general hypothesis testing procedure to different kinds of statistical problems.

- Proportions

- Difference between proportions

- Regression slope

- Difference between means

- Difference between matched pairs

- Goodness of fit

- Homogeneity

- Independence

At this point, don't worry if the general procedure for testing hypotheses seems a little bit unclear. The procedure will be clearer as you see it applied in the next few lessons.

Test Your Understanding

In hypothesis testing, which of the following statements is always true?

I. The P-value is greater than the significance level.

II. The P-value is computed from the significance level.

III. The P-value is the parameter in the null hypothesis.

IV. The P-value is a test statistic.

V. The P-value is a probability.

(A) I only (B) II only (C) III only (D) IV only (E) V only

The correct answer is (E). The P-value is the probability of observing a sample statistic at least as extreme as the test statistic, assuming the null hypothesis is true. It can be greater than the significance level, but it can also be smaller than the significance level. It is not computed from the significance level, it is not the parameter in the null hypothesis, and it is not a test statistic.

- R Soc Open Sci

- v.10(8); 2023 Aug

- PMC10465209

On the scope of scientific hypotheses

William Hedley Thompson

1 Department of Applied Information Technology, University of Gothenburg, Gothenburg, Sweden

2 Institute of Neuroscience and Physiology, Sahlgrenska Academy, University of Gothenburg, Gothenburg, Sweden

3 Department of Pedagogical, Curricular and Professional Studies, Faculty of Education, University of Gothenburg, Gothenburg, Sweden

4 Department of Clinical Neuroscience, Karolinska Institutet, Stockholm, Sweden

Associated Data

This article has no additional data.

Hypotheses are frequently the starting point when undertaking the empirical portion of the scientific process. They state something that the scientific process will attempt to evaluate, corroborate, verify or falsify. Their purpose is to guide the types of data we collect, analyses we conduct, and inferences we would like to make. Over the last decade, metascience has advocated for hypotheses being in preregistrations or registered reports, but how to formulate these hypotheses has received less attention. Here, we argue that hypotheses can vary in specificity along at least three independent dimensions: the relationship, the variables, and the pipeline. Together, these dimensions form the scope of the hypothesis. We demonstrate how narrowing the scope of a hypothesis in any of these three ways reduces the hypothesis space and that this reduction is a type of novelty. Finally, we discuss how this formulation of hypotheses can guide researchers to formulate the appropriate scope for their hypotheses and should aim for neither too broad nor too narrow a scope. This framework can guide hypothesis-makers when formulating their hypotheses by helping clarify what is being tested, chaining results to previous known findings, and demarcating what is explicitly tested in the hypothesis.

1. Introduction

Hypotheses are an important part of the scientific process. However, surprisingly little attention is given to hypothesis-making compared to other skills in the scientist's skillset within current discussions aimed at improving scientific practice. Perhaps this lack of emphasis is because the formulation of the hypothesis is often considered less relevant, as it is ultimately the scientific process that will eventually decide the veracity of the hypothesis. However, there are more hypotheses than scientific studies as selection occurs at various stages: from funder selection and researcher's interests. So which hypotheses are worthwhile to pursue? Which hypotheses are the most effective or pragmatic for extending or enhancing our collective knowledge? We consider the answer to these questions by discussing how broad or narrow a hypothesis can or should be (i.e. its scope).

We begin by considering that the two statements below are both hypotheses and vary in scope:

- H1: For every 1 mg decrease of x, y will increase by, on average, 2.5 points.

- H2: Changes in x1 or x2 correlate with y levels in some way.

Clearly, the specificity of the two hypotheses is very different. H1 states a precise relationship between two variables (x and y), while H2 specifies a vaguer relationship and does not specify which variables will show the relationship. However, they are both still hypotheses about how x and y relate to each other. This claim of various degrees of the broadness of hypotheses is, in and of itself, not novel. In Epistemetrics, Rescher [1], while drawing upon the physicist Duhem's work, develops what he calls Duhem's Law. This law considers a trade-off between certainty or precision in statements about physics when evaluating them. Duhem's Law states that narrower hypotheses, such as H1 above, are more precise but less likely to be evaluated as true than broader ones, such as H2 above. Similarly, Popper, when discussing theories, describes the reverse relationship between content and probability of a theory being true, i.e. with increased content, there is a decrease in probability and vice versa [2]. Here we will argue that it is important that both H1 and H2 are still valid scientific hypotheses, and their appropriateness depends on certain scientific questions.

The question of hypothesis scope is relevant since there are multiple recent prescriptions to improve science, ranging from topics about preregistrations [ 3 ], registered reports [ 4 ], open science [ 5 ], standardization [ 6 ], generalizability [ 7 ], multiverse analyses [ 8 ], dataset reuse [ 9 ] and general questionable research practices [ 10 ]. Within each of these issues, there are arguments to demarcate between confirmatory and exploratory research or normative prescriptions about how science should be done (e.g. science is ‘bad’ or ‘worse’ if code/data are not open). Despite all these discussions and improvements, much can still be done to improve hypothesis-making. A recent evaluation of preregistered studies in psychology found that over half excluded the preregistered hypotheses [ 11 ]. Further, evaluations of hypotheses in ecology showed that most hypotheses are not explicitly stated [ 12 , 13 ]. Other research has shown that obfuscated hypotheses are more prevalent in retracted research [ 14 ]. There have been recommendations for simpler hypotheses in psychology to avoid misinterpretations and misspecifications [ 15 ]. Finally, several evaluations of preregistration practices have found that a significant proportion of articles do not abide by their stated hypothesis or add additional hypotheses [ 11 , 16 – 18 ]. In sum, while multiple efforts exist to improve scientific practice, our hypothesis-making could improve.

One of our intentions is to provide hypothesis-makers with tools to assist them when making hypotheses. We consider this useful and timely as, with preregistrations becoming more frequent, the hypothesis-making process is now open and explicit. However, preregistrations are difficult to write [19], and preregistered articles can change or omit hypotheses [11], or their hypotheses can be vague, leaving certain degrees of freedom hard to control for [16–18]. One suggestion has been to do less confirmatory research [7, 20]. While we agree that not all research needs to be confirmatory, we also believe that not all preregistrations of confirmatory work must test narrow hypotheses. We think there is a possible point of confusion that the specificity in preregistrations, where researcher degrees of freedom should be stated, necessitates the requirement that the hypothesis be narrow. Our belief that this confusion is occurring is supported by the study by Akker et al. [11], which found that 18% of published psychology studies changed their preregistered hypothesis (e.g. its direction), and 60% of studies selectively reported hypotheses in some way. It is along these lines that we feel the framework below can be useful to help formulate appropriate hypotheses to mitigate these identified issues.

We consider this article to be a discussion of the researcher's different choices when formulating hypotheses and to help link hypotheses over time. Here we aim to deconstruct what aspects there are in the hypothesis about their specificity. Throughout this article, we intend to be neutral to many different philosophies of science relating to the scientific method (i.e. how one determines the veracity of a hypothesis). Our idea of neutrality here is that whether a researcher adheres to falsification, verification, pragmatism, or some other philosophy of science, then this framework can be used when formulating hypotheses. 1

The framework this article advocates for is that there are (at least) three dimensions that hypotheses vary along regarding their narrowness and broadness: the selection of relationships, variables, and pipelines. We believe this discussion is fruitful for the current debate regarding normative practices as some positions make, sometimes implicit, commitments about which set of hypotheses the scientific community ought to consider good or permissible. We proceed by outlining a working definition of ‘scientific hypothesis' and then discuss how it relates to theory. Then, we justify how hypotheses can vary along the three dimensions. Using this framework, we then discuss the scopes in relation to appropriate hypothesis-making and an argument about what constitutes a scientifically novel hypothesis. We end the article with practical advice for researchers who wish to use this framework.

2. The scientific hypothesis

In this section, we will describe a functional and descriptive role regarding how scientists use hypotheses. Jeong & Kwon [21] investigated and summarized the different uses the concept of ‘hypothesis’ had in philosophical and scientific texts. They identified five meanings: assumption, tentative explanation, tentative cause, tentative law, and prediction. Jeong & Kwon [21] further found that researchers in science and philosophy used all the different definitions of hypotheses, although there was some variance in frequency between fields. Here we see, descriptively, that the way researchers use the word ‘hypothesis’ is diverse and has a wide range in specificity and function. However, whichever meaning a hypothesis has, it aims to be true, adequate, accurate or useful in some way.

Not all hypotheses are ‘scientific hypotheses'. For example, consider the detective trying to solve a crime and hypothesizing about the perpetrator. Such a hypothesis still aims to be true and is a tentative explanation but differs from the scientific hypothesis. The difference is that the researcher, unlike the detective, evaluates the hypothesis with the scientific method and submits the work for evaluation by the scientific community. Thus a scientific hypothesis entails a commitment to evaluate the statement with the scientific process. 2 Additionally, other types of hypotheses can exist. As discussed in more detail below, scientific theories generate not only scientific hypotheses but also contain auxiliary hypotheses. The latter refers to additional assumptions considered to be true and not explicitly evaluated. 3

Next, the scientific hypothesis is generally made antecedent to the evaluation. This does not necessitate that the hypothesis be made before the event occurs (e.g. in archaeology) or before the data are collected (e.g. with open data reuse), but that the evaluation of the hypothesis cannot happen before its formulation. This claim does not deny the utility of exploratory hypothesis testing of post hoc hypotheses (see [25]). However, previous results and exploration can generate new hypotheses (e.g. via abduction [22, 26–28], which is the process of creating hypotheses from evidence), which is an important part of science [29–32], but crucially, while these hypotheses are important and can be the conclusion of exploratory work, they have yet to be evaluated (by whichever method of choice). Hence, they still conform to the antecedency requirement. A further way to justify the antecedency requirement is that formulating a post hoc hypothesis and considering it to have been evaluated is seen as a questionable research practice (known as ‘hypotheses after results are known’ or HARKing [33]). 4

While there is a varying range of specificity, is the hypothesis a critical part of all scientific work, or is it reserved for some subset of investigations? There are different opinions regarding this. Glass and Hall, for example, argue that the term only refers to falsifiable research, and model-based research uses verification [ 36 ]. However, this opinion does not appear to be the consensus. Osimo and Rumiati argue that any model based on or using data is never wholly free from hypotheses, as hypotheses can, even implicitly, infiltrate the data collection [ 37 ]. For our definition, we will consider hypotheses that can be involved in different forms of scientific evaluation (i.e. not just falsification), but we do not exclude the possibility of hypothesis-free scientific work.

Finally, there is a debate about whether theories or hypotheses should be linguistic or formal [ 38 – 40 ]. Neither side in this debate argues that verbal or formal hypotheses are not possible, but instead, they discuss normative practices. Thus, for our definition, both linguistic and formal hypotheses are considered viable.

Considering the above discussion, let us summarize the scientific process and the scientific hypothesis: a hypothesis guides what type of data are sampled and what analysis will be done. With the new observations, evidence is analysed or quantified in some way (often using inferential statistics) to judge the hypothesis's truth value, utility, credibility, or likelihood. The following working definition captures the above:

- Scientific hypothesis: an implicit or explicit statement that can be verbal or formal. The hypothesis makes a statement about some natural phenomena (via an assumption, explanation, cause, law or prediction). The scientific hypothesis is made antecedent to performing a scientific process where there is a commitment to evaluate it.

For simplicity, we will only use the term ‘hypothesis’ for ‘scientific hypothesis' to refer to the above definition for the rest of the article except when it is necessary to distinguish between other types of hypotheses. Finally, this definition could further be restrained in multiple ways (e.g. only explicit hypotheses are allowed, or assumptions are never hypotheses). However, if the definition is more (or less) restrictive, it has little implication for the argument below.

3. The hypothesis, theory and auxiliary assumptions

While we have a definition of the scientific hypothesis, we have yet to link it with how it relates to scientific theory, where there is frequently some interconnection (i.e. a hypothesis tests a scientific theory). Generally, for this paper, we believe our argument applies regardless of how scientific theory is defined. Further, some research lacks theory, sometimes called convenience or atheoretical studies [ 41 ]. Here a hypothesis can be made without a wider theory—and our framework fits here too. However, since many consider hypotheses to be defined or deducible from scientific theory, there is an important connection between the two. Therefore, we will briefly clarify how hypotheses relate to common formulations of scientific theory.

A scientific theory is generally a set of axioms or statements about some objects, properties and their relations relating to some phenomena. Hypotheses can often be deduced from the theory. Additionally, a theory has boundary conditions. The boundary conditions specify the domain of the theory stating under what conditions it applies (e.g. all things with a central neural system, humans, women, university teachers) [ 42 ]. Boundary conditions of a theory will consequently limit all hypotheses deduced from the theory. For example, with a boundary condition ‘applies to all humans’, then the subsequent hypotheses deduced from the theory are limited to being about humans. While this limitation of the hypothesis by the theory's boundary condition exists, all the considerations about a hypothesis scope detailed below still apply within the boundary conditions. Finally, it is also possible (depending on the definition of scientific theory) for a hypothesis to test the same theory under different boundary conditions. 5

The final consideration relating scientific theory to scientific hypotheses is auxiliary hypotheses. These hypotheses are theories or assumptions that are considered true simultaneously with the theory. Most philosophies of science have their own version of this auxiliary or background information that is required for the hypothesis to test the theory, including Popper's background knowledge [ 24 ], Kuhn's paradigms during normal science [ 44 ] and Lakatos' protective belt [ 45 ]. For example, following Meehl [ 46 ], auxiliary theories/assumptions are needed to go from theoretical terms to empirical terms (e.g. that neural activity can be inferred from blood oxygenation in fMRI research, or that reaction time is an indicator of cognition), as are auxiliary theories about instruments (e.g. that the experimental apparatus works as intended) and more (see also Other approaches to categorizing hypotheses below). As noted in the previous section, there is a difference between these auxiliary hypotheses, regardless of their definition, and the scientific hypothesis defined above. Recall that our definition of the scientific hypothesis included a commitment to evaluate it. There is no such commitment with auxiliary hypotheses; rather, they are assumed to be correct so that the theory can be tested adequately. This distinction proves to be important, as auxiliary hypotheses are still part of testing a theory but are separate from the hypothesis to be evaluated (discussed in more detail below).

4. The scope of hypotheses

In the scientific hypothesis section, we defined the hypothesis and discussed how it relates back to the theory. In this section, we want to defend two claims about hypotheses:

- (A1) Hypotheses can have different scopes . Some hypotheses are narrower in their formulation, and some are broader.

- (A2) The scope of hypotheses can vary along three dimensions relating to relationship selection , variable selection , and pipeline selection .

A1 may seem obvious, but it is important to establish what is meant by narrower and broader scope. When a hypothesis is very narrow, it is specific. For example, it might be specific about the type of relationship between some variables. In figure 1 , we make four different statements regarding the relationship between x and y . The narrowest hypothesis here states ‘there is a positive linear relationship with a magnitude of 0.5 between x and y ’ ( figure 1 a ), and the broadest hypothesis states ‘there is a relationship between x and y ’ ( figure 1 d ). Note that many other hypotheses are possible that are not included in this example (such as there being no relationship).

Figure 1. Examples of narrow and broad hypotheses between x and y . Circles indicate a set of possible relationships with varying slopes that can pivot or bend.

We see that the narrowest of these hypotheses claims a type of relationship (linear), a direction of the relationship (positive) and a magnitude of the relationship (0.5). As the hypothesis becomes broader, the specific magnitude disappears ( figure 1 b ), the relationship has options other than being linear ( figure 1 c ), and finally, the direction of the relationship disappears ( figure 1 d ). Crucially, all the examples in figure 1 can meet the above definition of scientific hypotheses. They are all statements that can be evaluated with the same scientific method. There is a difference between these statements, though: they differ in the scope of the hypothesis . Here we have justified A1.

Within this framework, when we discuss whether a hypothesis is narrower or broader in scope, this is a relation between two hypotheses where one is a subset of the other. This means that if H 1 is narrower than H 2 , and if H 1 is true, then H 2 is also true. This can be seen in figure 1 a–d . Suppose figure 1 a , the narrowest of all the hypotheses, is true. In that case, all the other broader statements are also true (i.e. a linear correlation of 0.5 necessarily entails that there is also a positive linear correlation, a linear correlation, and some relationship). While this property may appear trivial, it entails that it is only possible to directly compare the hypothesis scope between two hypotheses (i.e. their broadness or narrowness) where one is the subset of the other. 6

4.1. Sets, disjunctions and conjunctions of elements

The above restriction defines the scope as a relation between sets. This property helps formalize the framework of this article. Below, when we discuss the different dimensions that can impact the scope, each dimension is represented as a set. Each set contains elements. Each element is a permissible situation that allows the hypothesis to be accepted. We denote elements as lower case with italics (e.g. e 1 , e 2 , e 3 ) and sets as bold upper case (e.g. S ). Each of the three different dimensions discussed below will be formalized as a set, and the total number of elements in that set specifies its scope.

Let us reconsider the above restriction about comparing hypotheses as narrower or broader. This can be formally shown if:

- e 1 , e 2 , e 3 are elements of S 1 ; and

- e 1 and e 2 are elements of S 2 ,

then S 2 is narrower than S 1 .
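The comparison above can be sketched with ordinary set operations. The Python fragment below is only an illustration under our own assumptions: the helper `is_narrower`, the string labels for the elements and the extra set `S3` are hypothetical and are not part of the article's notation.

```python
def is_narrower(a: frozenset, b: frozenset) -> bool:
    """Return True when set `a` is a proper subset of set `b`."""
    return a < b  # `<` on frozensets tests for a proper subset

S1 = frozenset({"e1", "e2", "e3"})
S2 = frozenset({"e1", "e2"})
S3 = frozenset({"e3", "e4"})  # hypothetical set that only partly overlaps S1

print(is_narrower(S2, S1))  # True: S2 is narrower than S1
print(is_narrower(S1, S2))  # False
# Neither set contains the other, so their scopes cannot be compared directly.
print(is_narrower(S3, S1), is_narrower(S1, S3))  # False False
```

The last line reflects the restriction stated earlier: broadness and narrowness are only defined between two sets where one is a subset of the other.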

Each element represents a specific proposition that, if corroborated, would support the hypothesis. Returning to figure 1 a , b , the following statement applies to both:

- ‘There is a positive linear relationship between x and y with a slope of 0.5’.

Whereas the following two apply to figure 1 b but not figure 1 a :

- ‘There is a positive linear relationship between x and y with a slope of 0.4’ ( figure 1 b ).

- ‘There is a positive linear relationship between x and y with a slope of 0.3’ ( figure 1 b ).

Figure 1 b allows for a considerably larger number of permissible situations (which is obvious as it allows for any positive linear relationship). When formulating the hypothesis in figure 1 b , we do not need to specify every single one of these permissible relationships. We can simply specify all possible positive slopes, which entails the set of permissible elements it includes.
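One way to picture this is to describe a hypothesis by a membership rule instead of an explicit list of elements. The sketch below is a hedged illustration only: the function names `h_1a` and `h_1b` and the list of candidate slopes are our own assumptions, chosen to mirror the three slopes mentioned above.

```python
def h_1a(slope: float) -> bool:
    """Figure 1a: positive linear relationship with a slope of exactly 0.5."""
    return slope == 0.5

def h_1b(slope: float) -> bool:
    """Figure 1b: any positive linear relationship."""
    return slope > 0

# Hypothetical candidate slopes taken from the statements above.
candidates = [0.3, 0.4, 0.5]
permitted_by_1a = [s for s in candidates if h_1a(s)]
permitted_by_1b = [s for s in candidates if h_1b(s)]

print(permitted_by_1a)  # [0.5]
print(permitted_by_1b)  # [0.3, 0.4, 0.5]
```

The rule for figure 1 b never enumerates its elements, yet every slope permitted by figure 1 a is also permitted by it.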

That broader hypotheses have more elements in their sets entails some important properties. When we say S contains the elements e 1 , e 2 , and e 3 , the hypothesis is corroborated if e 1 or e 2 or e 3 is the case. This means that only one of the elements in the set needs to be corroborated for the hypothesis to be considered correct (i.e. the positive linear relationship needs to be 0.3 or 0.4 or 0.5). In contrast, we will later see cases where conjunctions of elements occur (i.e. both e 1 and e 2 are the case). When a conjunction occurs, in this formulation, the conjunction itself becomes an element in the set (i.e. ‘ e 1 and e 2 ’ is a single element). Figure 2 illustrates how ‘ e 1 and e 2 ’ is narrower than ‘ e 1 ’, and ‘ e 1 ’ is narrower than ‘ e 1 or e 2 ’. 7 This property, that a conjunction is narrower than its individual elements, is explained in more detail in the pipeline selection section below.
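The ordering of conjunction, single element and disjunction can be checked with a small enumeration. The sketch below rests on an assumption of ours that is not the article's own formalization: each statement is represented by the set of truth assignments to e 1 and e 2 under which it holds, and ‘narrower’ is read as a proper subset of those assignments. The names `WORLDS` and `situations` are hypothetical.

```python
from itertools import product

# All truth assignments for the two elementary propositions (e1, e2).
WORLDS = list(product([False, True], repeat=2))

def situations(proposition):
    """Set of truth assignments under which `proposition` holds."""
    return frozenset(w for w in WORLDS if proposition(*w))

conj = situations(lambda e1, e2: e1 and e2)  # 'e1 and e2'
single = situations(lambda e1, e2: e1)       # 'e1'
disj = situations(lambda e1, e2: e1 or e2)   # 'e1 or e2'

print(len(conj), len(single), len(disj))  # 1 2 3
print(conj < single < disj)               # True: each is a proper subset of the next
```

Under this reading, the conjunction is satisfied in the fewest situations and the disjunction in the most, which matches the ordering described above.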

Figure 2. Scope as sets. Left : four different sets (grey, red, blue and purple) showing different elements which they contain. Right : a list of each colour explaining which set is a subset of the other (thereby being ‘narrower’).

4.2. Relationship selection

We move to A2, which is to show the different dimensions that a hypothesis scope can vary along. We have already seen an example of the first dimension of a hypothesis in figure 1 , the relationship selection . Let R denote the set of all possible configurations of relationships that are permissible for the hypothesis to be considered true. For example, in the narrowest formulation above, there was one allowed relationship for the hypothesis to be true. Consequently, the size of R (denoted | R |) is one. As discussed above, in the second narrowest formulation ( figure 1 b ), R has more possible relationships where it can still be considered true:

- r 1 = ‘a positive linear relationship of 0.1’

- r 2 = ‘a positive linear relationship of 0.2’

- r 3 = ‘a positive linear relationship of 0.3’.

Additionally, even broader hypotheses will be compatible with more types of relationships. In figure 1 c , d , nonlinear and negative relationships are also possible relationships included in R . For this broader statement to be affirmed, more elements can be true. Thus if | R | is greater (i.e. R contains more possible configurations for the hypothesis to be true), then the hypothesis is broader. The scope relating to the relationship selection is therefore specified by | R |. Finally, if | R H1 | > | R H2 |, then H 1 is broader than H 2 regarding the relationship selection.
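As a rough illustration of how | R | grows across figure 1 a–d , the sketch below counts elements on a small discretized grid. The grid itself is entirely our assumption: magnitudes are restricted to steps of 0.1 between -1.0 and 1.0 (stored as integer tenths), and only two functional forms are distinguished. The real sets are not limited in this way.

```python
# Hypothetical discretization: signed magnitudes in tenths and two functional forms.
FORMS = ("linear", "nonlinear")
MAGNITUDES = range(-10, 11)  # tenths, so 5 stands for 0.5; 0 means no relationship
ALL = frozenset((f, m) for f in FORMS for m in MAGNITUDES if m != 0)

R_a = frozenset({("linear", 5)})                                   # positive, linear, 0.5
R_b = frozenset(r for r in ALL if r[0] == "linear" and r[1] > 0)   # positive, linear
R_c = frozenset(r for r in ALL if r[1] > 0)                        # positive
R_d = ALL                                                          # any relationship

sizes = [len(R) for R in (R_a, R_b, R_c, R_d)]
print(sizes)                    # [1, 10, 20, 40]
print(R_a < R_b < R_c < R_d)    # True: each set is a proper subset of the next
```

The absolute counts depend on the chosen grid and carry no meaning of their own; only the ordering, together with the subset relation, mirrors the argument in the text.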

Figure 1 is an example of the relationship narrowing. The narrowing towards a linear relationship is only an example; the relationship scope need not involve linear relationships, nor does it refer only to correlations. An alternative example of a relationship scope is a broad hypothesis where there is no knowledge about the distribution of some data. In such situations, one may assume a uniform relationship or a Cauchy distribution centred at zero. Over time the specific distribution can be hypothesized. Thereafter, the various parameters of the distribution can be hypothesized. At each step, the hypothesis about the distribution is specified further into narrower formulations in which a smaller set of possible relationships is included (see [ 47 , 48 ] for a more in-depth discussion about how specific priors relate to more narrow tests). Finally, while figure 1 was used to illustrate the point of increasingly narrow relationship hypotheses, in fields such as psychology the narrowest relationship is more likely to carry considerable uncertainty and to be formulated with confidence or credible intervals (i.e. we will rarely reach point estimates).

4.3. Variable selection

We have demonstrated that relationship selection can affect the scope of a hypothesis. Additionally, at least two other dimensions can affect the scope of a hypothesis: variable selection and pipeline selection . The variable selection in figure 1 was a single bivariate relationship (e.g. x 's relationship with y ). However, it is not always the case that we know which variables will be involved. For example, in neuroimaging, we can be confident that one or more brain regions will be processing some information following a stimulus. Still, we might not be sure which brain region(s) this will be. Consequently, our hypothesis becomes broader because we have selected more variables. The relationship selection may be identical for each chosen variable, but the variable selection becomes broader. We can consider the following three hypotheses to be increasing in their scope:

- H 1 : x relates to y with relationship R .

- H 2 : x 1 or x 2 relates to y with relationship R .

- H 3 : x 1 or x 2 or x 3 relates to y with relationship R .

For H 1 –H 3 above, we assume that R is the same. Further, we assume that there is no interaction between these variables.

In the above examples, we have multiple x ( x 1 , x 2 , x 3 , … , x n ). Again, we can symbolize the variable selection as a non-empty set XY , containing either a single variable or many variables. Our motivation for designating it XY is that the variable selection can include multiple possibilities for both the independent variable ( x ) and the dependent variable ( y ). As with relationship selection, we can quantify the broadness between two hypotheses with the size of the set XY . Consequently, | XY | denotes the total scope concerning variable selection. Thus, in the examples above | XY H1 | < | XY H2 | < | XY H3 |. As with relationship selection, hypotheses that vary in | XY | still meet the definition of a hypothesis. 8
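The ordering of | XY | for H 1 –H 3 can be sketched in the same way. Two assumptions here are ours and not the article's: XY is represented as a set of candidate (independent, dependent) variable pairs, and the x in H 1 is taken to be x 1 so that the three sets are nested. The helper `variable_selection` is a hypothetical name.

```python
def variable_selection(xs, ys):
    """Build XY as the set of candidate (independent, dependent) variable pairs."""
    xy = frozenset((x, y) for x in xs for y in ys)
    if not xy:
        raise ValueError("XY must be non-empty")
    return xy

# Assumption: the single x in H1 is x1, so the sets are nested.
XY_H1 = variable_selection(["x1"], ["y"])
XY_H2 = variable_selection(["x1", "x2"], ["y"])
XY_H3 = variable_selection(["x1", "x2", "x3"], ["y"])

print(len(XY_H1), len(XY_H2), len(XY_H3))  # 1 2 3
print(XY_H1 < XY_H2 < XY_H3)               # True: each is a proper subset of the next
```

Passing more than one name in `ys` would broaden the dependent-variable side in the same manner, which is the motivation given above for naming the set XY .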