
Type I & Type II Errors | Differences, Examples, Visualizations

Published on January 18, 2021 by Pritha Bhandari. Revised on June 22, 2023.

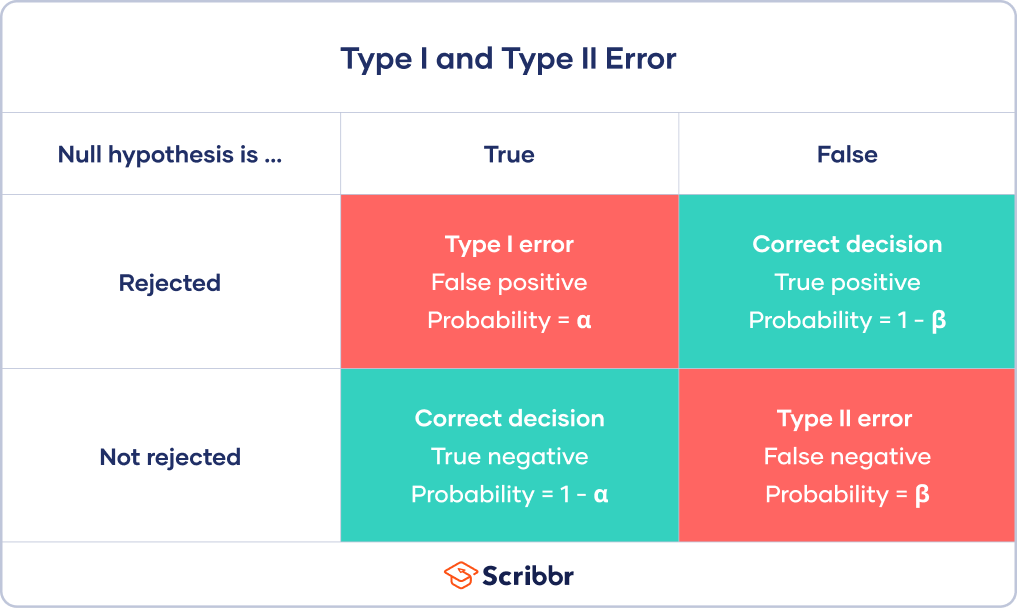

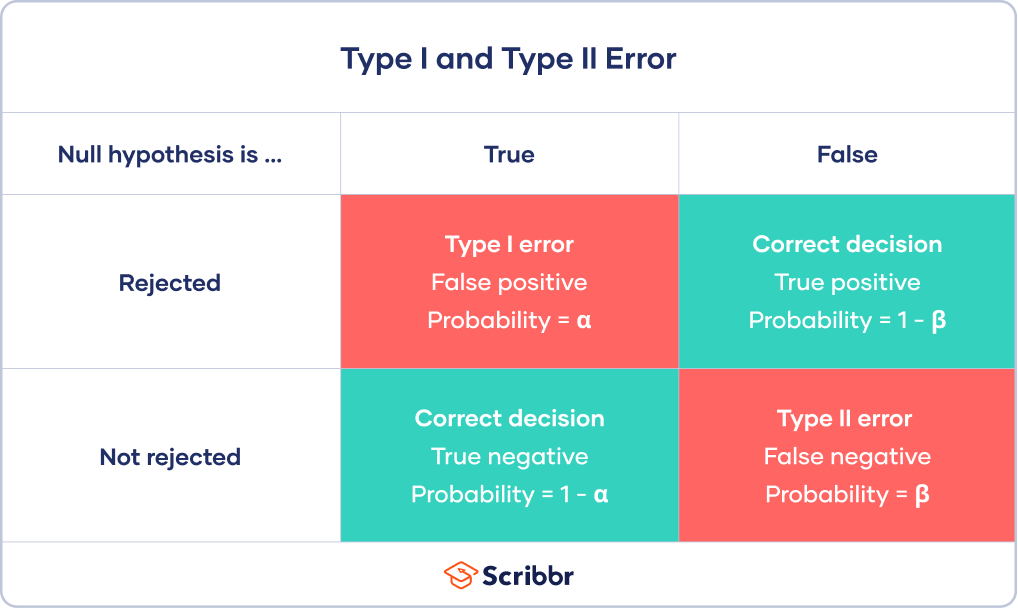

In statistics, a Type I error is a false positive conclusion, while a Type II error is a false negative conclusion.

Making a statistical decision always involves uncertainties, so the risks of making these errors are unavoidable in hypothesis testing.

The probability of making a Type I error is the significance level, or alpha (α), while the probability of making a Type II error is beta (β). These risks can be minimized through careful planning in your study design.

- Type I error (false positive) : the test result says you have coronavirus, but you actually don’t.

- Type II error (false negative) : the test result says you don’t have coronavirus, but you actually do.


Using hypothesis testing, you can make decisions about whether your data support or refute your research predictions with null and alternative hypotheses.

Hypothesis testing starts with the assumption of no difference between groups or no relationship between variables in the population. This is the null hypothesis. It’s always paired with an alternative hypothesis, which is your research prediction of an actual difference between groups or a true relationship between variables.

Suppose, for example, that you test whether a new drug alleviates the symptoms of a disease. In this case:

- The null hypothesis (H0) is that the new drug has no effect on symptoms of the disease.

- The alternative hypothesis (H1) is that the drug is effective for alleviating symptoms of the disease.

Then, you decide whether the null hypothesis can be rejected based on your data and the results of a statistical test. Since these decisions are based on probabilities, there is always a risk of making the wrong conclusion.

- If your results show statistical significance, that means they are very unlikely to occur if the null hypothesis is true. In this case, you would reject your null hypothesis. But sometimes, this may actually be a Type I error.

- If your findings do not show statistical significance, they are not unlikely enough under the null hypothesis to justify rejecting it. Therefore, you fail to reject your null hypothesis. But sometimes, this may be a Type II error.


A Type I error means rejecting the null hypothesis when it’s actually true. It means concluding that results are statistically significant when, in reality, they came about purely by chance or because of unrelated factors.

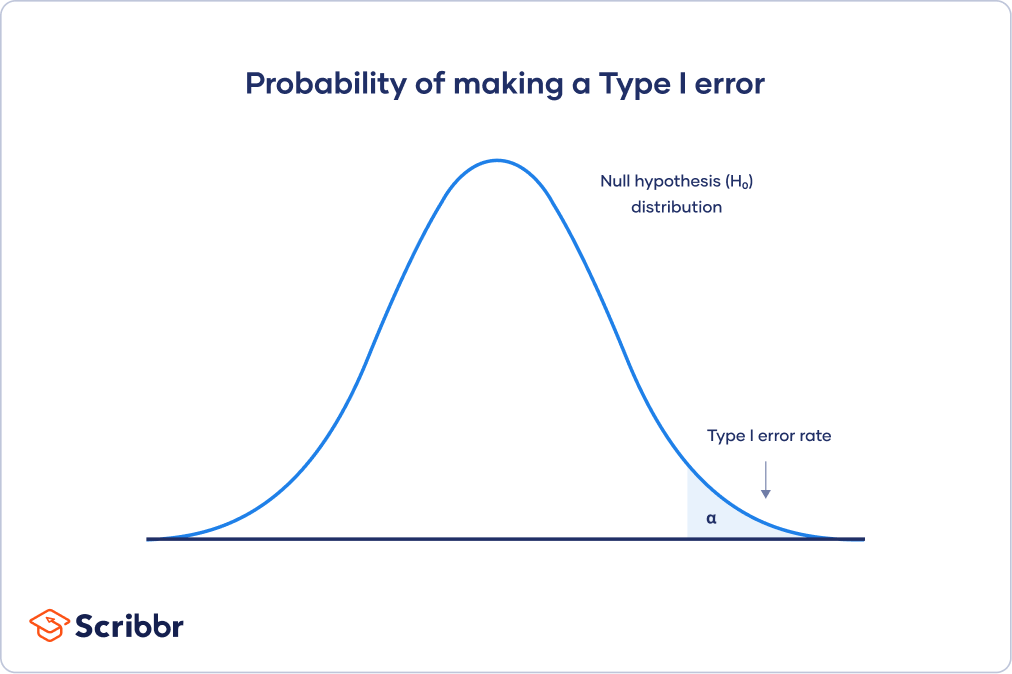

The risk of committing this error is the significance level (alpha or α) you choose. That’s a threshold you set at the beginning of your study, against which you compare the probability of obtaining your results (p value).

The significance level is usually set at 0.05 or 5%. This means you reject the null hypothesis only when results at least as extreme as yours would have a 5% chance, or less, of occurring if the null hypothesis were actually true.

If the p value of your test is lower than the significance level, it means your results are statistically significant and consistent with the alternative hypothesis. If your p value is higher than the significance level, then your results are considered statistically non-significant.
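The comparison described above can be sketched as a small Python function. This is a minimal illustration rather than anything from the original article: the function name `decide` and the returned strings are hypothetical, and it applies the strict "lower than" rule exactly as worded here.

```python
def decide(p_value: float, alpha: float = 0.05) -> str:
    """Return the test decision for a p value and a significance level."""
    if not (0.0 <= p_value <= 1.0) or not (0.0 < alpha < 1.0):
        raise ValueError("p_value must be in [0, 1] and alpha in (0, 1)")
    # Statistically significant only when the p value is lower than alpha.
    if p_value < alpha:
        return "reject H0"
    return "fail to reject H0"


print(decide(0.04))              # significant at the default alpha of 0.05
print(decide(0.04, alpha=0.01))  # not significant at a stricter alpha
```

Note that the function never returns "accept H0": a non-significant result only means the null hypothesis was not rejected.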

To reduce the Type I error probability, you can simply set a lower significance level.

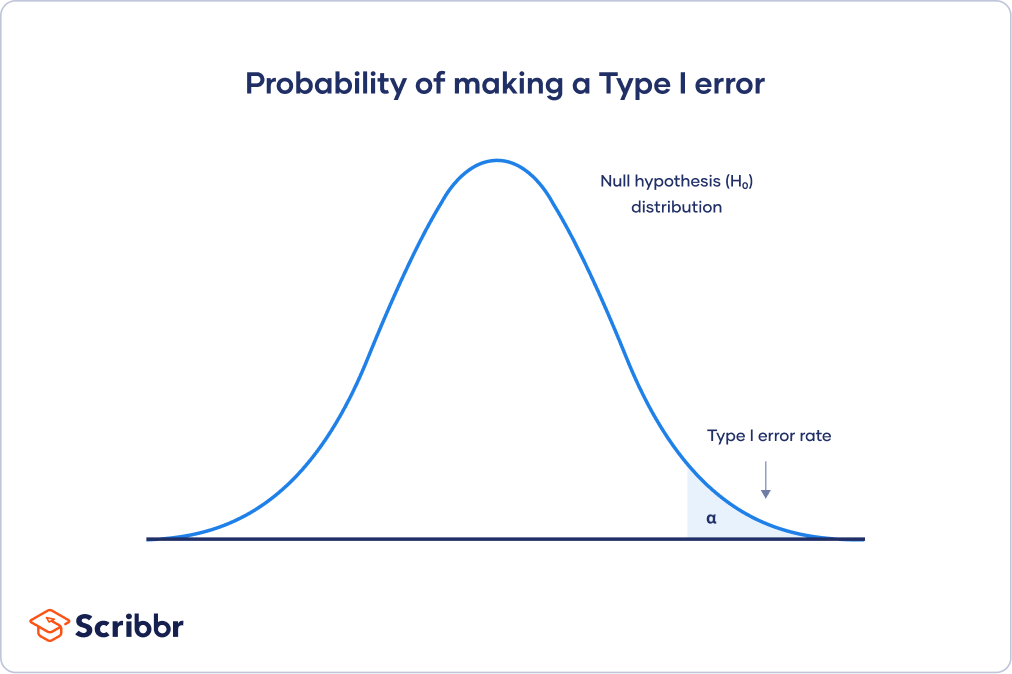

Type I error rate

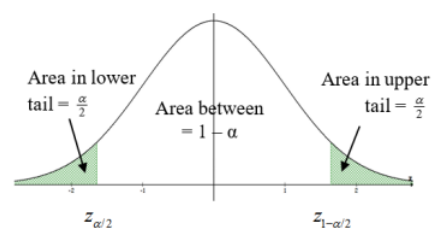

The null hypothesis distribution curve below shows the probabilities of obtaining all possible results if the study were repeated with new samples and the null hypothesis were true in the population.

At the tail end, the shaded area represents alpha. It’s also called a critical region in statistics.

If your results fall in the critical region of this curve, they are considered statistically significant and the null hypothesis is rejected. However, this is a false positive conclusion, because the null hypothesis is actually true in this case!
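The idea of repeating a study many times while the null hypothesis is true can be checked with a short simulation. The sketch below is an illustration under stated assumptions, not the article's own method: it assumes a one-sided z-test on normally distributed data with a known standard deviation of 1, and the function name and parameters are hypothetical.

```python
import random
from statistics import NormalDist, fmean


def simulate_type_i_rate(alpha=0.05, n=30, trials=20_000, seed=1):
    """Fraction of simulated studies that reject H0 even though H0 is true."""
    rng = random.Random(seed)
    z_crit = NormalDist().inv_cdf(1 - alpha)  # where the critical region starts
    rejections = 0
    for _ in range(trials):
        # H0 is true: every sample comes from a population with mean 0, sd 1.
        sample = [rng.gauss(0.0, 1.0) for _ in range(n)]
        z = fmean(sample) * n ** 0.5  # (sample mean - 0) / (1 / sqrt(n))
        if z > z_crit:
            rejections += 1  # any rejection here is a false positive
    return rejections / trials


rate = simulate_type_i_rate()
print(f"Observed Type I error rate: {rate:.3f}")
```

Under these assumptions, the observed rate should land close to the chosen alpha, which is the shaded critical region expressed as a long-run frequency.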

A Type II error means not rejecting the null hypothesis when it’s actually false. This is not quite the same as “accepting” the null hypothesis, because hypothesis testing can only tell you whether to reject the null hypothesis.

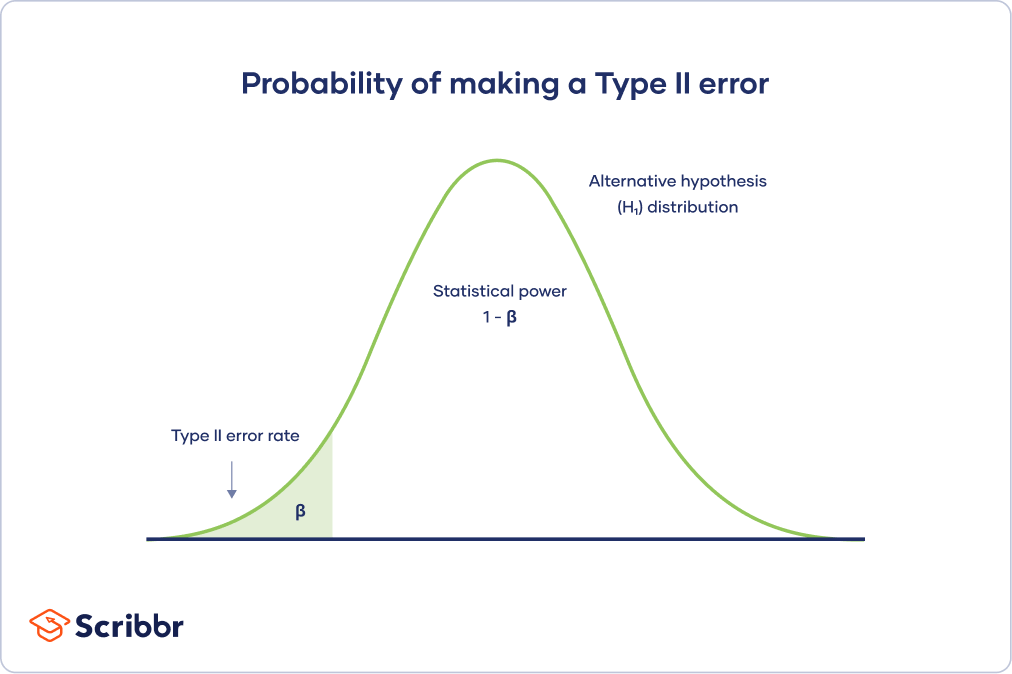

Instead, a Type II error means failing to conclude there was an effect when there actually was. In reality, your study may not have had enough statistical power to detect an effect of a certain size.

Power is the extent to which a test can correctly detect a real effect when there is one. A power level of 80% or higher is usually considered acceptable.

The risk of a Type II error is inversely related to the statistical power of a study. The higher the statistical power, the lower the probability of making a Type II error.

Statistical power is determined by:

- Size of the effect : Larger effects are more easily detected.

- Measurement error : Systematic and random errors in recorded data reduce power.

- Sample size : Larger samples reduce sampling error and increase power.

- Significance level : Increasing the significance level increases power.

To (indirectly) reduce the risk of a Type II error, you can increase the sample size or the significance level.
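The four factors listed above can be explored numerically. The sketch below assumes a one-sided, one-sample z-test with a known standard deviation, where measurement error is modeled simply as a larger standard deviation; the function name `z_test_power` and its parameters are hypothetical, chosen only for illustration.

```python
from statistics import NormalDist


def z_test_power(effect, sd, n, alpha=0.05):
    """Power of a one-sided one-sample z-test when the true mean shift is `effect`."""
    std_normal = NormalDist()
    z_crit = std_normal.inv_cdf(1 - alpha)
    shift = effect / (sd / n ** 0.5)  # true effect measured in standard errors
    return 1 - std_normal.cdf(z_crit - shift)


baseline = z_test_power(effect=0.5, sd=1.0, n=20)
print(f"baseline:           {baseline:.3f}")
print(f"larger effect:      {z_test_power(0.8, 1.0, 20):.3f}")
print(f"noisier measures:   {z_test_power(0.5, 2.0, 20):.3f}")
print(f"larger sample:      {z_test_power(0.5, 1.0, 80):.3f}")
print(f"higher alpha (0.1): {z_test_power(0.5, 1.0, 20, alpha=0.10):.3f}")
```

With a true effect of zero, the same formula returns alpha itself, which is one way to see that the significance level and power are tied together.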

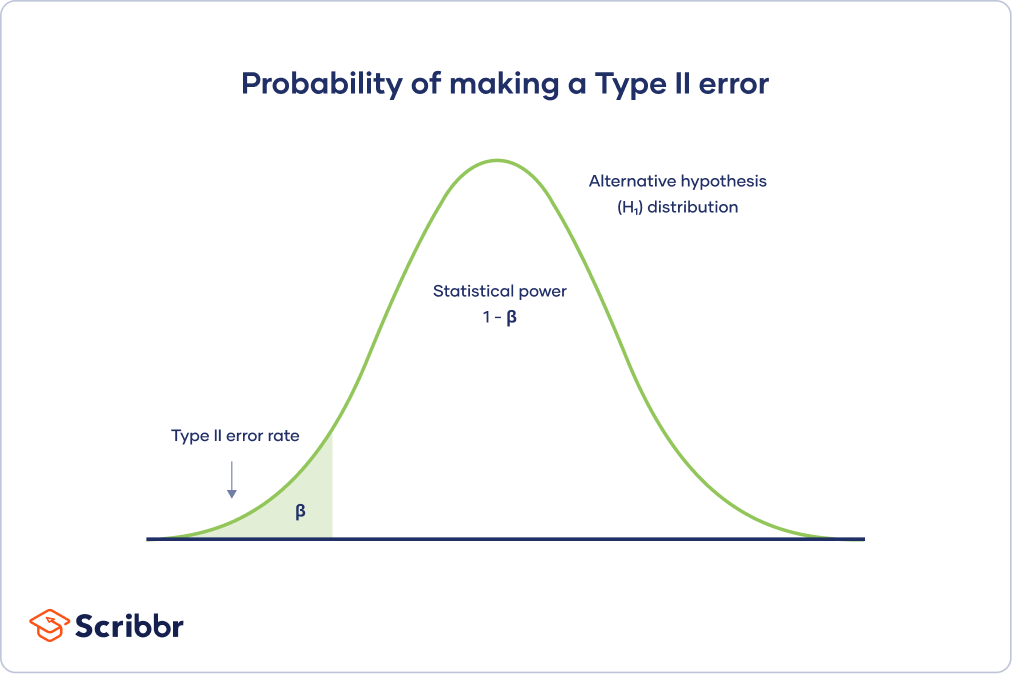

Type II error rate

The alternative hypothesis distribution curve below shows the probabilities of obtaining all possible results if the study were repeated with new samples and the alternative hypothesis were true in the population.

The Type II error rate is beta (β), represented by the shaded area on the left side. The remaining area under the curve represents statistical power, which is 1 – β.

Increasing the statistical power of your test directly decreases the risk of making a Type II error.

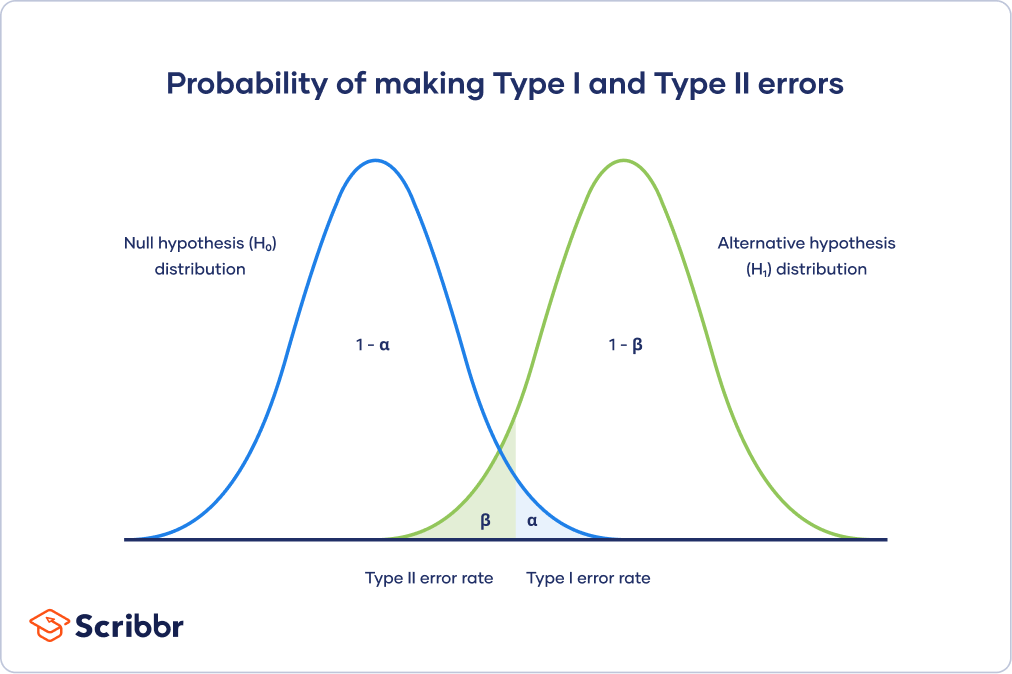

The Type I and Type II error rates influence each other. That’s because the significance level (the Type I error rate) affects statistical power, which is inversely related to the Type II error rate.

This means there’s an important tradeoff between Type I and Type II errors:

- Setting a lower significance level decreases a Type I error risk, but increases a Type II error risk.

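- Increasing the power of a test by raising the significance level decreases a Type II error risk, but increases a Type I error risk. (Increasing power through a larger sample size does not carry this cost.)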

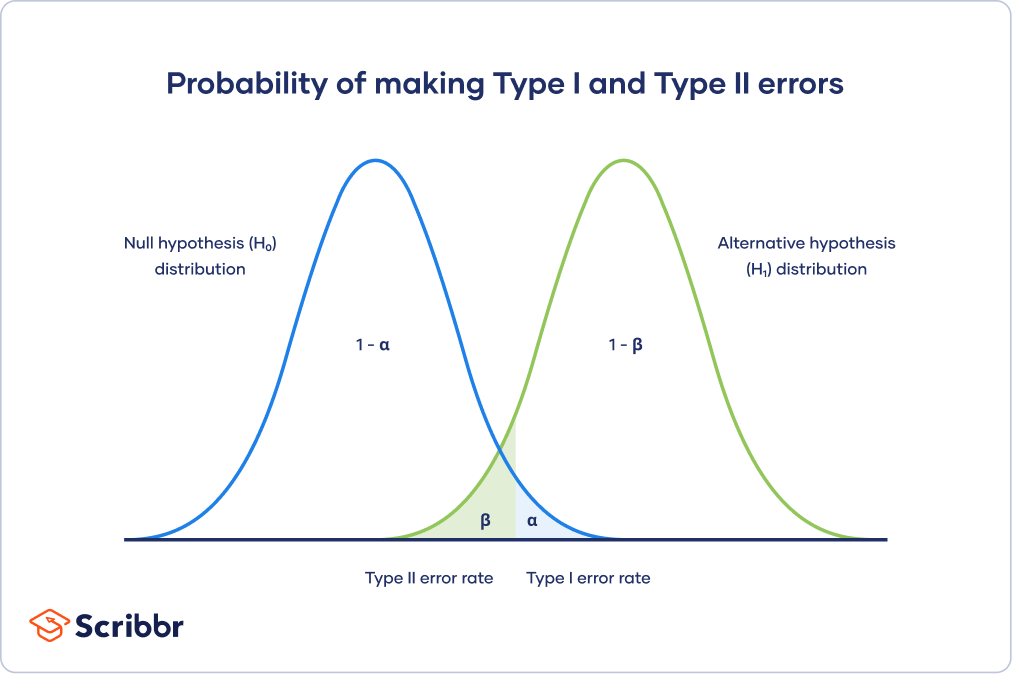

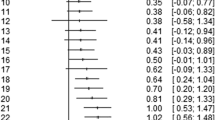

This trade-off is visualized in the graph below. It shows two curves:

- The null hypothesis distribution shows all possible results you’d obtain if the null hypothesis is true. The correct conclusion for any point on this distribution means not rejecting the null hypothesis.

- The alternative hypothesis distribution shows all possible results you’d obtain if the alternative hypothesis is true. The correct conclusion for any point on this distribution means rejecting the null hypothesis.

Type I and Type II errors occur where these two distributions overlap. The blue shaded area represents alpha, the Type I error rate, and the green shaded area represents beta, the Type II error rate.

By setting the Type I error rate, you indirectly influence the size of the Type II error rate as well.

It’s important to strike a balance between the risks of making Type I and Type II errors. For a fixed sample size and effect size, reducing alpha always comes at the cost of increasing beta, and vice versa.
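The trade-off can be tabulated for a concrete case. The sketch below assumes a one-sided z-test with a fixed sample size, a fixed true effect, and a known standard deviation; the function name `type_ii_rate` and the default values are hypothetical and only serve the illustration.

```python
from statistics import NormalDist


def type_ii_rate(alpha, effect=0.5, sd=1.0, n=20):
    """Beta for a one-sided z-test: probability of missing a true shift `effect`."""
    std_normal = NormalDist()
    z_crit = std_normal.inv_cdf(1 - alpha)
    # Beta is the part of the alternative distribution below the critical value.
    return std_normal.cdf(z_crit - effect * n ** 0.5 / sd)


for alpha in (0.10, 0.05, 0.01, 0.001):
    print(f"alpha = {alpha:<5}  beta = {type_ii_rate(alpha):.3f}")
```

Each step down in alpha moves the critical value further into the alternative distribution, so beta grows. Passing a larger `n` shrinks beta at every alpha, which is why sample size is the usual way to ease the trade-off.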


For statisticians, a Type I error is usually worse. In practical terms, however, either type of error could be worse depending on your research context.

A Type I error means mistakenly going against the main statistical assumption of a null hypothesis. This may lead to new policies, practices or treatments that are inadequate or a waste of resources.

In contrast, a Type II error means failing to reject a null hypothesis. It may only result in missed opportunities to innovate, but these can also have important practical consequences.


In statistics, a Type I error means rejecting the null hypothesis when it’s actually true, while a Type II error means failing to reject the null hypothesis when it’s actually false.

The risk of making a Type I error is the significance level (or alpha) that you choose. That’s a threshold you set at the beginning of your study, against which you compare the probability of obtaining your results (p value).

To reduce the Type I error probability, you can set a lower significance level.

The risk of making a Type II error is inversely related to the statistical power of a test. Power is the extent to which a test can correctly detect a real effect when there is one.

To (indirectly) reduce the risk of a Type II error, you can increase the sample size or the significance level to increase statistical power.

Statistical significance is a term used by researchers to state that it is unlikely their observations could have occurred under the null hypothesis of a statistical test. Significance is usually denoted by a p-value, or probability value.

Statistical significance is arbitrary – it depends on the threshold, or alpha value, chosen by the researcher. The most common threshold is p < 0.05, which means that data at least this extreme would occur less than 5% of the time under the null hypothesis.

When the p-value falls below the chosen alpha value, then we say the result of the test is statistically significant.

In statistics, power refers to the likelihood of a hypothesis test detecting a true effect if there is one. A statistically powerful test is less likely to produce a false negative (a Type II error).

If you don’t ensure enough power in your study, you may not be able to detect a statistically significant result even when it has practical significance. Your study might not have the ability to answer your research question.

Cite this Scribbr article


Bhandari, P. (2023, June 22). Type I & Type II Errors | Differences, Examples, Visualizations. Scribbr. Retrieved March 25, 2024, from https://www.scribbr.com/statistics/type-i-and-type-ii-errors/


Type 1 and Type 2 Errors in Statistics

Saul Mcleod, PhD



A statistically significant result cannot prove that a research hypothesis is correct (which implies 100% certainty). Because a p-value is based on probabilities, there is always a chance of making an incorrect conclusion regarding accepting or rejecting the null hypothesis (H0).

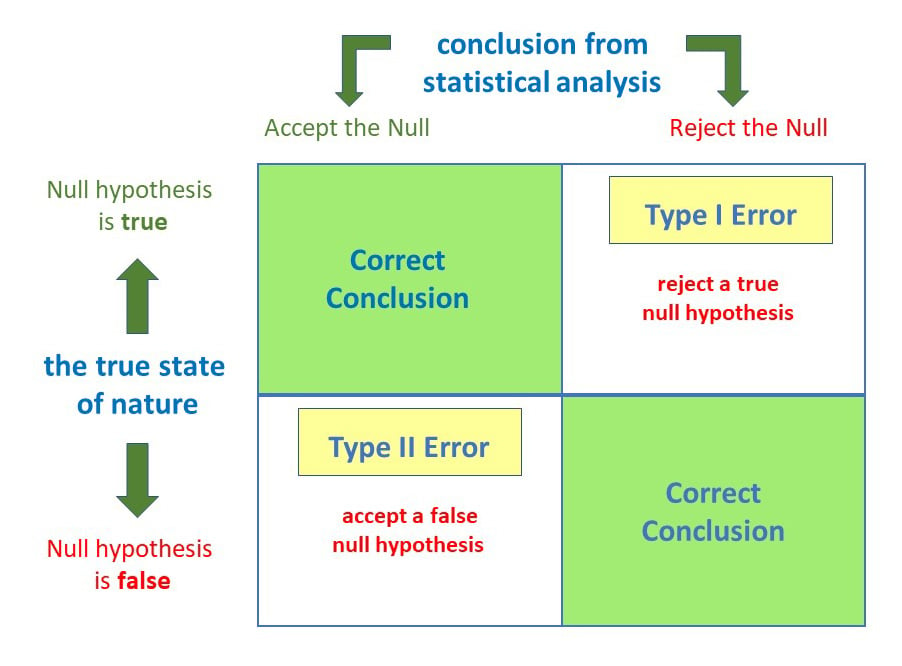

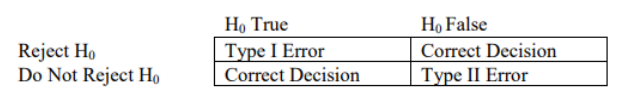

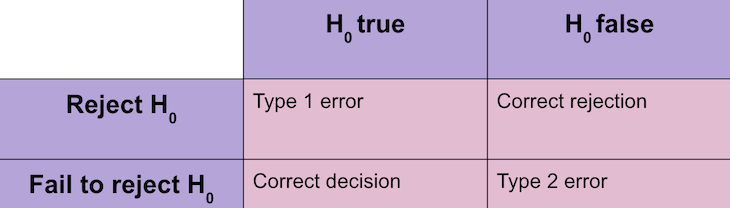

Anytime we make a decision using statistics, there are four possible outcomes, with two representing correct decisions and two representing errors.
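The four outcomes can be spelled out as a lookup on two yes/no facts: whether the null hypothesis is really true, and whether the test rejected it. The function below is a hypothetical sketch for illustration; its name and labels are not from the original article.

```python
def classify_outcome(h0_is_true: bool, rejected_h0: bool) -> str:
    """Name the outcome of a test given the truth and the decision."""
    if h0_is_true and rejected_h0:
        return "Type I error (false positive)"
    if not h0_is_true and not rejected_h0:
        return "Type II error (false negative)"
    if h0_is_true:
        return "correct decision (true negative)"
    return "correct decision (true positive)"


# Enumerate all four combinations of truth and decision.
for truth in (True, False):
    for decision in (True, False):
        print(f"H0 true={truth!s:<5} rejected={decision!s:<5} -> "
              f"{classify_outcome(truth, decision)}")
```

In real research the first argument is unknown, which is why the error rates are managed in advance through alpha and power rather than checked afterward.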

The chances of committing these two types of errors are inversely related: for a given sample size, decreasing the type I error rate increases the type II error rate, and vice versa.

As the significance level (α) increases, it becomes easier to reject the null hypothesis, decreasing the chance of missing a real effect (Type II error, β). If the significance level (α) goes down, it becomes harder to reject the null hypothesis, increasing the chance of missing an effect while reducing the risk of falsely finding one (Type I error).

Type I error

A type 1 error is also known as a false positive and occurs when a researcher incorrectly rejects a true null hypothesis. Simply put, it’s a false alarm.

This means that you report that your findings are significant when they have occurred by chance.

The probability of making a type 1 error is represented by your alpha level (α), the p-value below which you reject the null hypothesis.

An alpha level of 0.05 indicates that you are willing to accept a 5% chance of rejecting the null hypothesis when it is actually true.

You can reduce your risk of committing a type 1 error by setting a lower alpha level (like α = 0.01). For example, an alpha level of 0.01 would mean there is a 1% chance of committing a Type I error when the null hypothesis is true.

However, using a lower value for alpha means that you will be less likely to detect a true difference if one really exists (thus risking a type II error).

Scenario: Drug Efficacy Study

Imagine a pharmaceutical company is testing a new drug, named “MediCure”, to determine if it’s more effective than a placebo at reducing fever. They run an experiment with two groups: one receives MediCure, and the other receives a placebo.

- Null Hypothesis (H0) : MediCure is no more effective at reducing fever than the placebo.

- Alternative Hypothesis (H1) : MediCure is more effective at reducing fever than the placebo.

After conducting the study and analyzing the results, the researchers found a p-value of 0.04.

If they use an alpha (α) level of 0.05, this p-value is considered statistically significant, leading them to reject the null hypothesis and conclude that MediCure is more effective than the placebo.

However, suppose that MediCure has no actual effect, and the observed difference was due to random variation or some confounding factor. In this case, the researchers have incorrectly rejected a true null hypothesis.

Error : The researchers have made a Type 1 error by concluding that MediCure is more effective when it isn’t.

Implications

Resource Allocation : Making a Type I error can lead to wastage of resources. If a business believes a new strategy is effective when it’s not (based on a Type I error), they might allocate significant financial and human resources toward that ineffective strategy.

Unnecessary Interventions : In medical trials, a Type I error might lead to the belief that a new treatment is effective when it isn’t. As a result, patients might undergo unnecessary treatments, risking potential side effects without any benefit.

Reputation and Credibility : For researchers, making repeated Type I errors can harm their professional reputation. If they frequently claim groundbreaking results that are later refuted, their credibility in the scientific community might diminish.

Type II error

A type 2 error (or false negative) happens when you fail to reject the null hypothesis when it should actually be rejected.

Here, a researcher concludes there is not a significant effect when there actually is one.

The probability of making a type II error is called beta (β), which is related to the power of the statistical test (power = 1 − β). You can decrease your risk of committing a type II error by ensuring your test has enough power.

You can do this by ensuring your sample size is large enough to detect a practical difference when one truly exists.
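A rough planning calculation shows what "large enough" can mean. The sketch below uses the textbook formula for a one-sided, one-sample z-test with a known standard deviation, n = ((z_alpha + z_power) * sd / effect)^2 rounded up; that test is an assumption made here for illustration, and the function name `required_sample_size` is hypothetical. Real studies typically use a power analysis matched to their actual test.

```python
import math
from statistics import NormalDist


def required_sample_size(effect, sd, alpha=0.05, power=0.80):
    """Smallest n giving at least `power` for a one-sided one-sample z-test."""
    std_normal = NormalDist()
    z_alpha = std_normal.inv_cdf(1 - alpha)  # critical value under H0
    z_power = std_normal.inv_cdf(power)      # quantile for the target power
    return math.ceil(((z_alpha + z_power) * sd / effect) ** 2)


print(required_sample_size(effect=0.5, sd=1.0))  # → 25
print(required_sample_size(effect=0.25, sd=1.0))
```

Halving the effect size you want to detect roughly quadruples the sample needed, since the effect enters the formula squared.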

Scenario: Efficacy of a New Teaching Method

Educational psychologists are investigating the potential benefits of a new interactive teaching method, named “EduInteract”, which utilizes virtual reality (VR) technology to teach history to middle school students.

They hypothesize that this method will lead to better retention and understanding compared to the traditional textbook-based approach.

- Null Hypothesis (H0) : The EduInteract VR teaching method does not result in significantly better retention and understanding of history content than the traditional textbook method.

- Alternative Hypothesis (H1) : The EduInteract VR teaching method results in significantly better retention and understanding of history content than the traditional textbook method.

The researchers designed an experiment where one group of students learns a history module using the EduInteract VR method, while a control group learns the same module using a traditional textbook.

After a week, the students’ retention and understanding are tested using a standardized assessment.

Upon analyzing the results, the psychologists found a p-value of 0.06. Using an alpha (α) level of 0.05, this p-value isn’t statistically significant.

Therefore, they fail to reject the null hypothesis and conclude that the EduInteract VR method isn’t more effective than the traditional textbook approach.

However, let’s assume that in the real world, the EduInteract VR truly enhances retention and understanding, but the study failed to detect this benefit due to reasons like small sample size, variability in students’ prior knowledge, or perhaps the assessment wasn’t sensitive enough to detect the nuances of VR-based learning.

Error : By concluding that the EduInteract VR method isn’t more effective than the traditional method when it is, the researchers have made a Type 2 error.

This could prevent schools from adopting a potentially superior teaching method that might benefit students’ learning experiences.

Missed Opportunities : A Type II error can lead to missed opportunities for improvement or innovation. For example, in education, if a more effective teaching method is overlooked because of a Type II error, students might miss out on a better learning experience.

Potential Risks : In healthcare, a Type II error might mean overlooking a harmful side effect of a medication because the research didn’t detect its harmful impacts. As a result, patients might continue using a harmful treatment.

Stagnation : In the business world, making a Type II error can result in continued investment in outdated or less efficient methods. This can lead to stagnation and the inability to compete effectively in the marketplace.

How do Type I and Type II errors relate to psychological research and experiments?

Type I errors are like false alarms, while Type II errors are like missed opportunities. Both errors can impact the validity and reliability of psychological findings, so researchers strive to minimize them to draw accurate conclusions from their studies.

How does sample size influence the likelihood of Type I and Type II errors in psychological research?

Sample size in psychological research mainly influences the likelihood of Type II errors. The Type I error rate is fixed by the chosen significance level (α), so a larger sample does not by itself make researchers less likely to mistakenly find a significant effect when there isn’t one.

A larger sample size does, however, increase the chances of detecting true effects, reducing the likelihood of Type II errors.
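One way to check how sample size relates to each error rate is a simulation. The sketch below assumes a one-sided z-test on normal data with a known standard deviation of 1 and a true effect of 0.3 when the null hypothesis is false; the function name, effect size, and sample sizes are hypothetical choices for illustration. Under these assumptions, the false positive rate stays near alpha at every sample size, while the false negative rate falls as the sample grows.

```python
import random
from statistics import NormalDist, fmean


def rejection_rate(true_mean, n, alpha=0.05, trials=4000, seed=7):
    """Share of simulated studies that reject H0 (population mean is 0)."""
    rng = random.Random(seed)
    z_crit = NormalDist().inv_cdf(1 - alpha)
    hits = 0
    for _ in range(trials):
        sample = [rng.gauss(true_mean, 1.0) for _ in range(n)]
        if fmean(sample) * n ** 0.5 > z_crit:  # z statistic with known sd = 1
            hits += 1
    return hits / trials


for n in (10, 40, 160):
    type_i = rejection_rate(0.0, n)  # H0 true: rejections are false positives
    power = rejection_rate(0.3, n)   # H0 false: rejections are correct
    print(f"n = {n:>3}  Type I rate = {type_i:.3f}  Type II rate = {1 - power:.3f}")
```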

Are there any ethical implications associated with Type I and Type II errors in psychological research?

Yes, there are ethical implications associated with Type I and Type II errors in psychological research.

Type I errors may lead to false positive findings, resulting in misleading conclusions and potentially wasting resources on ineffective interventions. This can harm individuals who are falsely diagnosed or receive unnecessary treatments.

Type II errors, on the other hand, may result in missed opportunities to identify important effects or relationships, leading to a lack of appropriate interventions or support. This can also have negative consequences for individuals who genuinely require assistance.

Therefore, minimizing these errors is crucial for ethical research and ensuring the well-being of participants.



Published on 18 January 2021 by Pritha Bhandari . Revised on 2 February 2023.


Bhandari, P. (2023, February 02). Type I & Type II Errors | Differences, Examples, Visualizations. Scribbr. Retrieved 25 March 2024, from https://www.scribbr.co.uk/stats/type-i-and-type-ii-error/


Type 1 Error: Definition, False Positives, and Examples


In statistical research, a type 1 error occurs when a null hypothesis that is actually true is rejected, which incorrectly leads the study to state that notable differences were found in the variables when there were none. Put simply, a type I error is a false positive result.

Making a type I error often can't be avoided because of the degree of uncertainty involved. A null hypothesis is established during hypothesis testing before a test begins. In some cases, the null hypothesis assumes there's no cause-and-effect relationship between the tested item and the stimuli applied to trigger an outcome in the test.

Key Takeaways

- A type I error occurs during hypothesis testing when a null hypothesis is rejected, even though it is accurate and should not be rejected.

- Hypothesis testing is a testing process that uses sample data.

- The null hypothesis assumes no cause-and-effect relationship between the tested item and the stimuli applied during the test.

- A type I error is a false positive leading to an incorrect rejection of the null hypothesis.

- A false positive can occur if something other than the stimuli causes the outcome of the test.

How a Type I Error Works

Hypothesis testing is a testing process that uses sample data. The test is designed to provide evidence that the hypothesis or conjecture is supported by the data being tested. A null hypothesis is a belief that there is no statistical significance or effect between the two data sets, variables, or populations being considered in the hypothesis. A researcher would generally try to disprove the null hypothesis.

For example, let's say the null hypothesis states that an investment strategy doesn't perform any better than a market index like the S&P 500 . The researcher would take samples of data and test the historical performance of the investment strategy to determine if the strategy performed at a higher level than the S&P. If the test results show that the strategy performed at a higher rate than the index, the null hypothesis is rejected.

The null hypothesis is commonly denoted H0. If the result seems to indicate that the stimuli applied to the test subject caused a reaction when the test was conducted, the null hypothesis stating that the stimuli do not affect the test subject then needs to be rejected.

A null hypothesis should ideally never be rejected if it is true, and it should always be rejected if it is false. However, there are situations when errors can occur.

False Positive Type I Error

A type I error is also called a false positive result. This result leads to an incorrect rejection of the null hypothesis. It rejects an idea that shouldn't have been rejected in the first place.

Rejecting the null hypothesis, and so concluding that there is a relationship between the stimuli and the outcome, may sometimes be incorrect. If something other than the stimuli causes the outcome of the test, it can cause a false positive result.

Examples of Type I Errors

Let's look at a couple of hypothetical examples to show how type I errors occur.

Criminal Trials

Type I errors can occur in criminal trials, where juries are required to reach a verdict of either not guilty or guilty. In this case, the null hypothesis is that the person is innocent, while the alternative is that the person is guilty. A jury commits a type I error if it finds the person guilty and the person is sent to jail despite actually being innocent.

Medical Testing

In medical testing, a type I error would cause the appearance that a treatment for a disease has the effect of reducing the severity of the disease when, in fact, it does not. When a new medicine is being tested, the null hypothesis will be that the medicine does not affect the progression of the disease.

Let's say a lab is researching a new cancer drug . Their null hypothesis might be that the drug does not affect the growth rate of cancer cells.

After applying the drug to the cancer cells, the cancer cells stop growing. This would cause the researchers to reject their null hypothesis that the drug would have no effect. If the drug caused the growth stoppage, the conclusion to reject the null, in this case, would be correct.

However, if something else during the test caused the growth stoppage instead of the administered drug, this would be an example of an incorrect rejection of the null hypothesis (i.e., a type I error).

How Does a Type I Error Occur?

A type I error occurs when the null hypothesis, which is the belief that there is no statistical significance or effect between the data sets considered in the hypothesis, is mistakenly rejected. The null hypothesis should not have been rejected because it is accurate. A type I error is also known as a false positive result.

What Is the Difference Between a Type I and Type II Error?

Type I and type II errors occur during statistical hypothesis testing. While the type I error (a false positive) rejects a null hypothesis when it is, in fact, correct, the type II error (a false negative) fails to reject a false null hypothesis. For example, a type I error would convict someone of a crime when they are actually innocent. A type II error would acquit an individual who is actually guilty of a crime.

What Is a Null Hypothesis?

A null hypothesis occurs in statistical hypothesis testing. It states that no relationship exists between two data sets or populations. When a null hypothesis is accurate and rejected, the result is a false positive or a type I error. When it is false and fails to be rejected, a false negative occurs. This is also referred to as a type II error.

What's the Difference Between a Type I Error and a False Positive?

A type I error is often called a false positive; the two terms describe the same outcome. It occurs when the null hypothesis is rejected even though it's correct. The rejection leads to the conclusion that there is a relationship between the data sets and the stimuli when, in fact, there is none.

The Bottom Line

Hypothesis testing is a form of testing that uses data sets to either reject or fail to reject a null hypothesis. Although we often don't realize it, we use hypothesis testing in our everyday lives.

This comes up in many areas, such as making investment decisions or deciding the fate of a person in a criminal trial. Sometimes, the result may be a type I error. This false positive is the incorrect rejection of the null hypothesis even when it is true.


8.2: Type I and II Errors


- Rachel Webb

- Portland State University

How do you quantify really small? Is 5% or 10% or 15% really small? How do you decide? That depends on your field of study and the importance of the situation. Is this a pilot study? Is someone’s life at risk? Would you lose your job? Most industry standards use 5% as the cutoff point for how small is small enough, but 1%, 5% and 10% are frequently used depending on what the situation calls for.

Now, how small is small enough? To answer that, you really want to know the types of errors you can make in hypothesis testing.

The first error is if you say that H 0 is false, when in fact it is true. This means you reject H 0 when H 0 was true. The second error is if you say that H 0 is true, when in fact it is false. This means you fail to reject H 0 when H 0 is false.

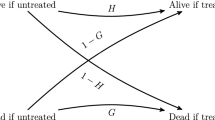

Figure 8-4 shows that if we “Reject H 0 ” when H 0 is actually true, we are committing a type I error. The probability of committing a type I error is the Greek letter \(\alpha\), pronounced alpha. This can be controlled by the researcher by choosing a specific level of significance \(\alpha\).

Figure 8-4 shows that if we “Do Not Reject H 0 ” when H 0 is actually false, we are committing a type II error. The probability of committing a type II error is denoted with the Greek letter β, pronounced beta. When we increase the sample size this will reduce β. The power of a test is 1 – β.

A jury trial is about to take place to decide if a person is guilty of committing murder. The hypotheses for this situation would be:

- \(H_0\): The defendant is innocent

- \(H_1\): The defendant is not innocent

The jury has two possible decisions to make, either acquit or convict the person on trial, based on the evidence that is presented. There are two possible ways that the jury could make a mistake. They could convict an innocent person or they could let a guilty person go free. Both are bad news, but if the convicted person were sentenced to death, the justice system could be killing an innocent person. If a murderer is let go because there is not enough evidence to convict them, then they could possibly murder again. In statistics we call these two types of mistakes type I and type II errors.

Figure 8-5 is a diagram to see the four possible jury decisions and two errors.

Type I Error is rejecting H 0 when H 0 is true, and Type II Error is failing to reject H 0 when H 0 is false.

Since these are the only two possible errors, one can define the probabilities attached to each error.

\(\alpha\) = P(Type I Error) = P(Rejecting H 0 | H 0 is true)

β = P(Type II Error) = P(Failing to reject H 0 | H 0 is false)
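To make these two conditional probabilities concrete, here is a minimal sketch for a right-tailed z-test with a known standard deviation. The function name and every number in it (null mean 100, true mean 103, standard deviation 10, sample size 30) are hypothetical values chosen for illustration, not figures from the text.

```python
from math import sqrt
from statistics import NormalDist

def type_ii_error_right_tailed(mu0, mu_true, sigma, n, alpha):
    """beta = P(fail to reject H0 | true mean is mu_true), right-tailed z-test."""
    std_normal = NormalDist()
    z_crit = std_normal.inv_cdf(1 - alpha)        # reject H0 when z >= z_crit
    shift = (mu_true - mu0) / (sigma / sqrt(n))   # true mean's distance from mu0, in standard errors
    return std_normal.cdf(z_crit - shift)

alpha = 0.05   # P(Type I error), fixed by the researcher
beta = type_ii_error_right_tailed(mu0=100, mu_true=103, sigma=10, n=30, alpha=alpha)
power = 1 - beta
print(f"alpha = {alpha}, beta = {beta:.3f}, power = {power:.3f}")
```

Note that α is set directly by the researcher, while β can only be computed once a specific alternative value for the true mean is assumed.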

An investment company wants to build a new food cart. They know from experience that food carts are successful if they have on average more than 100 people a day walk by the location. They have a potential site to build on, but before they begin, they want to see if they have enough foot traffic. They observe how many people walk by the site every day over a month. They will build if there is more than an average of 100 people who walk by the site each day. In simple terms, explain what the type I & II errors would be using context from the problem.

The hypotheses are: H 0 : μ = 100 and H 1 : μ > 100.

Sometimes it is helpful to use words next to your hypotheses instead of the formal symbols

- H 0 : μ ≤ 100 (Do not build)

- H 1 : μ > 100 (Build).

A type I error would be to reject the null when in fact it is true. Take your finger and cover up the null hypothesis (our decision is to reject the null), then what is showing? The alternative hypothesis is what action we take.

If we reject H 0 then we would build the new food cart. However, H 0 was actually true, which means that the mean was less than or equal to 100 people walking by.

In simpler terms, this would mean that our evidence showed that we have enough foot traffic to support the food cart. Once we build, though, there are not on average more than 100 people who walk by, and the food cart may fail.

A type II error would be to fail to reject the null when in fact the null is false. Evidence shows that we should not build on the site, but this actually would have been a prime location to build on.

The missed opportunity of a type II error is not as bad as possibly losing thousands of dollars on a bad investment.

Which error is more severe depends on what side of the desk you are sitting on. For instance, if a hypothesis is about miles per gallon for a new car, the hypotheses may be set up differently depending on whether you are buying the car or selling the car. For this course, the claim will be stated in the problem, and you should always set up the hypotheses to match the stated claim. In general, the research question should be set up as some type of change in the alternative hypothesis.

Controlling for Type I Error

The significance level used by the researcher should be picked prior to collecting and analyzing data. This is called “a priori,” versus picking α after you have done your analysis, which is called “post hoc.” When deciding on what significance level to pick, one needs to look at the severity of the consequences of the type I and type II errors. For example, if the type I error may cause the loss of life or large amounts of money, the researcher would want to set \(\alpha\) low.

Controlling for Type II Error

The power of a test is the complement of the probability of a type II error, that is, the probability of correctly rejecting a false null hypothesis. You can increase the power of the test, and hence decrease the probability of a type II error, by increasing the sample size. This is similar to confidence intervals, where we can reduce our margin of error when we increase the sample size. In general, we would like to have a high confidence level and a high power for our hypothesis tests. When you increase your confidence level (that is, decrease \(\alpha\)), the power of the test will in turn decrease for a fixed sample size. Calculating the probability of a type II error is a little more difficult; it is a conditional probability based on the researcher’s hypotheses and is not discussed in this course.

“‘That's right!’ shouted Vroomfondel, ‘we demand rigidly defined areas of doubt and uncertainty!’” (Adams, 2002)

Visualizing \(\alpha\) and β

If \(\alpha\) increases that means the chances of making a type I error will increase. It is more likely that a type I error will occur. It makes sense that you are less likely to make type II errors, only because you will be rejecting H 0 more often. You will be failing to reject H 0 less, and therefore, the chance of making a type II error will decrease. Thus, as α increases, β will decrease, and vice versa. That makes them seem like complements, but they are not complements. Consider one more factor – sample size.

Consider if you have a larger sample that is representative of the population, then it makes sense that you have more accuracy than with a smaller sample. Think of it this way, which would you trust more, a sample mean of 890 if you had a sample size of 35 or sample size of 350 (assuming a representative sample)? Of course, the 350 because there are more data points and so more accuracy. If you are more accurate, then there is less chance that you will make any error.

By increasing the sample size of a representative sample, you decrease β.

- For a constant sample size, n , if \(\alpha\) increases, β decreases.

- For a constant significance level, \(\alpha\), if n increases, β decreases.
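The two relationships above can be checked numerically. The sketch below tabulates β for a right-tailed z-test over a few values of α and n; the helper name `beta_right_tailed` and the assumed true effect of 0.3 standard deviations are illustrative choices, not values from the text.

```python
from math import sqrt
from statistics import NormalDist

def beta_right_tailed(effect_size: float, n: int, alpha: float) -> float:
    """Type II error probability for a right-tailed z-test.

    effect_size is (true mean - null mean) / sigma, a hypothetical input.
    """
    z = NormalDist()
    return z.cdf(z.inv_cdf(1 - alpha) - effect_size * sqrt(n))

effect_size = 0.3  # assumed true effect, in standard deviations
alphas = (0.01, 0.05, 0.10)
for n in (25, 50, 100):
    row = [round(beta_right_tailed(effect_size, n, a), 3) for a in alphas]
    print(f"n = {n:3d}  beta for alpha in {alphas}: {row}")
```

Reading across a row shows β falling as α rises for a fixed n, and reading down a column shows β falling as n grows for a fixed α.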

When the sample size becomes large, point estimates become more precise and any real differences in the mean and null value become easier to detect and recognize. Even a very small difference would likely be detected if we took a large enough sample size. Sometimes researchers will take such a large sample size that even the slightest difference is detected. While we still say that difference is statistically significant, it might not be practically significant. Statistically significant differences are sometimes so minor that they are not practically relevant. This is especially important to research: if we conduct a study, we want to focus on finding a meaningful result. We do not want to spend lots of money finding results that hold no practical value.

The role of a statistician in conducting a study often includes planning the size of the study. The statistician might first consult experts or scientific literature to learn what would be the smallest meaningful difference from the null value. They also would obtain some reasonable estimate for the standard deviation. With these important pieces of information, they would choose a sufficiently large sample size so that the power for the meaningful difference is perhaps 80% or 90%. While larger sample sizes may still be used, the statistician might advise against using them in some cases, especially in sensitive areas of research.
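The planning step described here can be sketched with the usual normal-approximation formula for a two-sided one-sample z-test, n = ((z for 1 − α/2 plus z for the target power) × σ / difference)². The function name, the smallest meaningful difference of 5, and the standard deviation estimate of 15 are all hypothetical inputs used only to show the calculation.

```python
from math import ceil
from statistics import NormalDist

def required_sample_size(min_difference, sigma, alpha=0.05, power=0.80):
    """Approximate n for a two-sided one-sample z-test (normal approximation)."""
    z = NormalDist()
    z_alpha = z.inv_cdf(1 - alpha / 2)   # critical value for the chosen alpha
    z_power = z.inv_cdf(power)           # quantile matching the target power
    return ceil(((z_alpha + z_power) * sigma / min_difference) ** 2)

n_80 = required_sample_size(min_difference=5, sigma=15, power=0.80)
n_90 = required_sample_size(min_difference=5, sigma=15, power=0.90)
print(n_80, n_90)
```

Under these assumptions, moving from 80% to 90% power requires a noticeably larger sample, which is the trade-off the statistician weighs when planning the study.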

If we look at the following two sampling distributions in Figure 8-6, the one on the left represents the sampling distribution for the true unknown mean. The curve on the right represents the sampling distribution based on the hypotheses the researcher is making. Do you remember the difference between a sampling distribution, the distribution of a sample, and the distribution of the population? Revisit the Central Limit Theorem in Chapter 6 if needed.

If we start with \(\alpha\) = 0.05, the critical value is represented by the vertical green line at \(z_{\alpha / 2}\) = 1.96. Then the blue shaded area to the right of this line represents the upper-tail rejection area (\(\alpha\)/2 = 0.025 when \(\alpha\) = 0.05 is split between two tails). The area under the curve to the left of \(z_{\alpha / 2}\) = 1.96 based on the researcher’s claim would represent β.

If we were to change \(\alpha\) from 0.05 to 0.01 then we get a critical value of \(z_{\alpha / 2}\) = 2.576. Note that when \(\alpha\) decreases, then β increases which means your power 1 – β decreases. See Figure 8-7.

This text does not go over how to calculate β. You will need to be able to write out a sentence interpreting either the type I or II errors given a set of hypotheses. You also need to know the relationship between \(\alpha\), β, confidence level, and power.

Hypothesis tests are not flawless, since we can make a wrong decision in statistical hypothesis tests based on the data. For example, in the court system, innocent people are sometimes wrongly convicted and the guilty sometimes walk free, or diagnostic tests that have false negatives or false positives. However, the difference is that in statistical hypothesis tests, we have the tools necessary to quantify how often we make such errors. A type I Error is rejecting the null hypothesis when H 0 is actually true. A type II Error is failing to reject the null hypothesis when the alternative is actually true (H 0 is false).

We use the symbols \(\alpha\) = P(Type I Error) and β = P(Type II Error). The critical value is a cutoff point on the horizontal axis of the sampling distribution that you can compare your test statistic to see if you should reject the null hypothesis. For a left-tailed test the critical value will always be on the left side of the sampling distribution, the right-tailed test will always be on the right side, and a two-tailed test will be on both tails. Use technology to find the critical values. Most of the time in this course the shortcut menus that we use will give you the critical values as part of the output.

8.2.1 Finding Critical Values

A researcher decides they want to have a 5% chance of making a type I error so they set α = 0.05. What z-score would represent that 5% area? It would depend on whether the hypotheses were a left-tailed, two-tailed or right-tailed test. This z-score is called a critical value. Figure 8-8 shows examples of critical values for the three possible sets of hypotheses.

Two-tailed Test

If we are doing a two-tailed test then the \(\alpha\) = 5% area gets divided into both tails. We denote these critical values \(z_{\alpha / 2}\) and \(z_{1-\alpha / 2}\). When the sample data finds a z-score ( test statistic ) that is either less than or equal to \(z_{\alpha / 2}\) or greater than or equal to \(z_{1-\alpha / 2}\) then we would reject H 0 . The area to the left of the critical value \(z_{\alpha / 2}\) and to the right of the critical value \(z_{1-\alpha / 2}\) is called the critical or rejection region. See Figure 8-9.

When \(\alpha\) = 0.05 then the critical values \(z_{\alpha / 2}\) and \(z_{1-\alpha / 2}\) are found using the following technology.

Excel: \(z_{\alpha / 2}\) =NORM.S.INV(0.025) = –1.96 and \(z_{1-\alpha / 2}\) =NORM.S.INV(0.975) = 1.96

TI-Calculator: \(z_{\alpha / 2}\) = invNorm(0.025,0,1) = –1.96 and \(z_{1-\alpha / 2}\) = invNorm(0.975,0,1) = 1.96

Since the normal distribution is symmetric, you only need to find one side’s z-score and we usually represent the critical values as ± \(z_{\alpha / 2}\).
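For readers working outside Excel or a TI calculator, the same two-tailed lookups can be reproduced with Python's standard library. This is an equivalent sketch rather than part of the course's toolset; `NormalDist().inv_cdf` plays the role of `NORM.S.INV`, and the extra α values are added for comparison.

```python
from statistics import NormalDist

std_normal = NormalDist()  # mean 0, standard deviation 1

for alpha in (0.10, 0.05, 0.01):
    z_lower = std_normal.inv_cdf(alpha / 2)       # left critical value
    z_upper = std_normal.inv_cdf(1 - alpha / 2)   # right critical value
    print(f"alpha = {alpha}: {z_lower:.3f} and {z_upper:.3f}")

# Critical values for the alpha = 0.05 case discussed in the text
z_lower_05 = std_normal.inv_cdf(0.025)
z_upper_05 = std_normal.inv_cdf(0.975)
```

Because of symmetry, the lower value is always the negative of the upper one, so only one lookup is strictly needed.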

Most of the time we will be finding a probability (p-value) instead of the critical values. The p-value and critical values are related and tell the same information so it is important to know what a critical value represents.

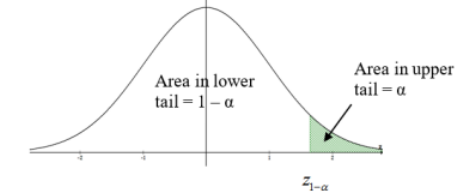

Right-tailed Test

If we are doing a right-tailed test then the \(\alpha\) = 5% area goes into the right tail. We denote this critical value \(z_{1-\alpha}\). We reject H 0 if the test statistic from the sample data is ≥ \(z_{1-\alpha}\). The area to the right of the critical value \(z_{1-\alpha}\) is called the critical region. See Figure 8-10.

Figure 8-10

When \(\alpha\) = 0.05 then the critical value \(z_{1-\alpha}\) is found using the following technology.

Excel: \(z_{1-\alpha}\) =NORM.S.INV(0.95) = 1.645

TI-Calculator: \(z_{1-\alpha}\) = invNorm(0.95,0,1) = 1.645

Left-tailed Test

If we are doing a left-tailed test then the \(\alpha\) = 5% area goes into the left tail. If the sampling distribution is a normal distribution then we can use the inverse normal function in Excel or calculator to find the corresponding z-score. We denote this critical value \(z_{\alpha}\).

We reject H 0 if the test statistic from the sample data is ≤ \(z_{\alpha}\). The area to the left of the critical value \(z_{\alpha}\) is called the critical region. See Figure 8-11.

Figure 8-11

When \(\alpha\) = 0.05 then the critical value \(z_{\alpha}\) is found using the following technology.

Excel: \(z_{\alpha}\) =NORM.S.INV(0.05) = –1.645

TI-Calculator: \(z_{\alpha}\) = invNorm(0.05,0,1) = –1.645
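The three rejection rules (left-tailed, right-tailed, and two-tailed) can be collected into one small helper. This is only a sketch: the function name `reject_null` and the string labels for the tail are conventions invented here, and the test statistics used are hypothetical.

```python
from statistics import NormalDist

def reject_null(z_stat: float, alpha: float, tail: str) -> bool:
    """Return True when z_stat falls in the rejection region.

    tail is "left", "right", or "two" (a hypothetical convention for this sketch).
    """
    z = NormalDist()
    if tail == "left":
        return z_stat <= z.inv_cdf(alpha)            # critical value z_alpha
    if tail == "right":
        return z_stat >= z.inv_cdf(1 - alpha)        # critical value z_(1 - alpha)
    if tail == "two":
        return abs(z_stat) >= z.inv_cdf(1 - alpha / 2)
    raise ValueError("tail must be 'left', 'right', or 'two'")

print(reject_null(1.8, 0.05, "right"))  # 1.8 is beyond 1.645
print(reject_null(1.8, 0.05, "two"))    # 1.8 is inside +/- 1.96
```

The same hypothetical statistic of 1.8 leads to rejection in a right-tailed test but not in a two-tailed test, because the two-tailed test splits α across both tails and so has a larger cutoff.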

The Claim and Summary

The wording on the summary statement changes depending on which hypothesis the researcher claims to be true. We really should always be setting up the claim in the alternative hypothesis since most of the time we are collecting evidence to show that a change has occurred, but occasionally a textbook will have the claim in the null hypothesis. Do not use the phrase “accept H 0 ” since this implies that H 0 is true. The lack of evidence is not evidence of nothing.

There were only two possible correct answers for the decision step.

i. Reject H 0

ii. Fail to reject H 0

Caution! If we fail to reject the null, this does not mean that there was no change; we just do not have any evidence that change has occurred. The absence of evidence is not evidence of absence. On the other hand, we need to be careful: when we reject the null hypothesis, we have not proved that there is change.

When we reject the null hypothesis, there is only evidence that a change has occurred. Our evidence could have been misleading and led to an incorrect decision. If we use the phrase “accept H 0 ,” this implies that H 0 is true, when in fact we just do not have evidence that it is false. Hence you will be marked incorrect for your decision if you use “accept H 0 ”; use instead “fail to reject H 0 ” or “do not reject H 0 .”


6.1 - Type I and Type II Errors

When conducting a hypothesis test there are two possible decisions: reject the null hypothesis or fail to reject the null hypothesis. You should remember though, hypothesis testing uses data from a sample to make an inference about a population. When conducting a hypothesis test we do not know the population parameters. In most cases, we don't know if our inference is correct or incorrect.

When we reject the null hypothesis there are two possibilities. There could really be a difference in the population, in which case we made a correct decision. Or, it is possible that there is not a difference in the population (i.e., \(H_0\) is true) but our sample was different from the hypothesized value due to random sampling variation. In that case we made an error. This is known as a Type I error.

When we fail to reject the null hypothesis there are also two possibilities. If the null hypothesis is really true, and there is not a difference in the population, then we made the correct decision. If there is a difference in the population, and we failed to reject it, then we made a Type II error.

Type I error: Rejecting \(H_0\) when \(H_0\) is really true. Its probability is denoted by \(\alpha\) ("alpha") and is commonly set at .05.

\(\alpha=P(Type\;I\;error)\)

Type II error: Failing to reject \(H_0\) when \(H_0\) is really false. Its probability is denoted by \(\beta\) ("beta").

\(\beta=P(Type\;II\;error)\)

Example: Trial

A man goes to trial where he is being tried for the murder of his wife.

We can put it in a hypothesis testing framework. The hypotheses being tested are:

- \(H_0\) : Not Guilty

- \(H_a\) : Guilty

Type I error is committed if we reject \(H_0\) when it is true. In other words, the man did not kill his wife but was found guilty and is punished for a crime he did not really commit.

Type II error is committed if we fail to reject \(H_0\) when it is false. In other words, if the man did kill his wife but was found not guilty and was not punished.

Example: Culinary Arts Study

A group of culinary arts students is comparing two methods for preparing asparagus: traditional steaming and a new frying method. They want to know if patrons of their school restaurant prefer their new frying method over the traditional steaming method. A sample of patrons are given asparagus prepared using each method and asked to select their preference. A statistical analysis is performed to determine if more than 50% of participants prefer the new frying method:

- \(H_{0}: p = .50\)

- \(H_{a}: p>.50\)

Type I error occurs if they reject the null hypothesis and conclude that their new frying method is preferred when in reality it is not. This may occur if, by random sampling error, they happen to get a sample that prefers the new frying method more than the overall population does. If this does occur, the consequence is that the students will have an incorrect belief that their new method of frying asparagus is superior to the traditional method of steaming.

Type II error occurs if they fail to reject the null hypothesis and conclude that their new method is not superior when in reality it is. If this does occur, the consequence is that the students will have an incorrect belief that their new method is not superior to the traditional method when in reality it is.
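A one-proportion z-test for these hypotheses might look like the sketch below. The sample (60 of 100 patrons preferring the fried asparagus) is invented purely for illustration, as is the function name; the example does not report actual data, and the normal approximation is assumed to be adequate at this sample size.

```python
from math import sqrt
from statistics import NormalDist

def one_proportion_z_test(successes: int, n: int, p0: float):
    """Right-tailed z-test of H0: p = p0 against Ha: p > p0 (normal approximation)."""
    p_hat = successes / n
    z_stat = (p_hat - p0) / sqrt(p0 * (1 - p0) / n)   # standard error uses the null value p0
    p_value = 1 - NormalDist().cdf(z_stat)
    return z_stat, p_value

# Hypothetical data: 60 of 100 patrons prefer the new frying method
z_stat, p_value = one_proportion_z_test(successes=60, n=100, p0=0.50)
print(f"z = {z_stat:.2f}, p-value = {p_value:.4f}")
```

With these made-up numbers the students would reject the null hypothesis at α = 0.05. If the population preference were truly 50%, that rejection would be a Type I error caused by an unrepresentative sample.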

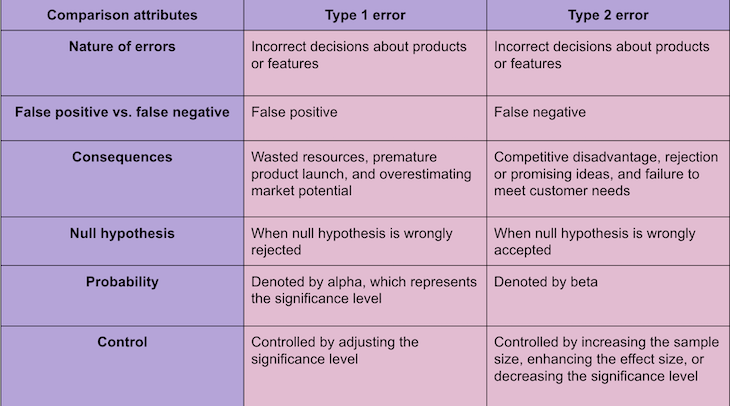

- Type I vs Type II Errors: Causes, Examples & Prevention

There are two common types of errors you’ll likely encounter when testing a statistical hypothesis: type I and type II errors. The mistaken rejection of a true null hypothesis is known as a type I error. In other words, a type I error is a false-positive finding in hypothesis testing. A type II error, on the other hand, is a false-negative finding in hypothesis testing.

To better understand the two types of errors, here’s an example:

Let’s assume you notice some flu-like symptoms and decide to go to a hospital to get tested for the presence of malaria. There is a possibility of two errors occurring:

- In type I error (False positive): The result of the test shows you have malaria but you actually don’t have it.

- Type II error (false negative): The test result indicates that you don’t have malaria when you in fact do.

Type I error and Type II error are extensively used in areas such as computer science, Engineering, Statistics, and many more.

The chance of committing a type I error is known as alpha (α), while the chance of committing a type II error is known as beta (β). If you carefully plan your study design, you can minimize the probability of committing either of the errors.


What are Type I Errors?

A Type I error is a mistake that happens when a null hypothesis is rejected during hypothesis testing even though it is actually true and should not have been rejected. So if a null hypothesis is erroneously rejected when it is true, it is called a Type I error.

What this means is that results are concluded to be significant when, in actual fact, they were obtained by chance.

When conducting hypothesis testing, a null hypothesis is determined before carrying out the actual test. The null hypothesis may presume that there is no cause-and-effect relationship between the items being tested.

When a true null hypothesis is rejected, a cause-and-effect relationship is taken to have been established between the items being tested even though the result is a false alarm or false positive. This is known as a Type I error.

It is worth noting that the statistical outcome of every test involves uncertainty, so the risk of making errors in hypothesis testing is unavoidable. A Type I error may be considered an error of commission in the sense that the producer or researcher mistakenly decides on a false outcome.


Causes of Type I Error

- When a factor other than the variable being studied affects the outcome being tested. This factor produces a result that supports the decision to reject the null hypothesis.

- When the result of a hypothesis test is caused by chance and a true null hypothesis is rejected as a result, it is a Type I error.

- Lastly, because the null hypothesis and the significance level are decided before conducting a hypothesis test, a Type I error may occur by chance at that rate regardless of the sample size.


Risk Factor and Probability of Type I Error

- The risk factor and probability of Type I error are mostly set in advance and the level of significance of the hypothesis testing is known.

- The level of significance in a test is represented by α, and it is the probability of a Type I error.

- It is possible to reduce the rate of Type I error by choosing a smaller significance level. The consequence of this, however, is that for a fixed sample size the possibility of a Type II error occurring in a test will increase.

- In a case where the Type I error rate is set at 5 percent, it means that when the null hypothesis (H0) is true, about 5 in 100 tests will still reject it.

- Another risk factor is that Type I and Type II errors cannot both be reduced simultaneously for a fixed sample size. Reducing the possibility of one error means the possibility of the other error will increase. Hence changing one error rate inherently affects the other.
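The "5 in 100" interpretation can be checked with a small simulation. The sketch below repeatedly draws samples from a population where the null hypothesis is true and counts how often a two-sided z-test rejects it anyway. The function name, the number of trials, the sample size, and the random seed are all arbitrary choices made for illustration.

```python
import random
from math import sqrt
from statistics import NormalDist, fmean

def simulate_type_i_rate(trials=2000, n=30, alpha=0.05, seed=0):
    """Fraction of simulated studies that reject a TRUE null (mean = 0, sigma = 1)."""
    rng = random.Random(seed)
    z_crit = NormalDist().inv_cdf(1 - alpha / 2)
    rejections = 0
    for _ in range(trials):
        sample = [rng.gauss(0, 1) for _ in range(n)]
        z_stat = fmean(sample) * sqrt(n)   # (sample mean - 0) / (1 / sqrt(n))
        if abs(z_stat) >= z_crit:
            rejections += 1                # a false positive: H0 was true
    return rejections / trials

rate = simulate_type_i_rate()
print(f"Observed Type I error rate: {rate:.3f}")
```

The observed rate should land close to the chosen α of 0.05, and it would stay near 0.05 if `n` were changed, since the sample size does not alter the Type I error rate.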


Consequences of a Type I Error

A type I error will result in a false alarm. The outcome of the hypothesis testing will be a false positive. This implies that the researcher decided the result of a hypothesis testing is true when in fact, it is not.

For a sales group, the consequences of a type I error may result in losing potential market and missing out on probable sales because the findings of a test are faulty.

What are Type II Errors?

A Type II error means a researcher or producer failed to reject the null hypothesis when it is in fact false. This does not mean the null hypothesis is accepted as true, as hypothesis testing only indicates whether a null hypothesis should be rejected.

A Type II error means an effect was not detected by the test when an effect truly existed. By convention, a test is usually considered adequately powered to detect a real effect when its power level is 80% or more.

This implies the statistical power of a test determines the risk of a type II error: the higher the statistical power, the lower the probability of a type II error.

Note: Null hypothesis is represented as (H0) and alternative hypothesis is represented as (H1)

Causes of Type II Error

- Type II error is mainly caused by the statistical power of a test being low. A Type II error is more likely to occur if the statistical test is not powerful enough.

- The size of the sample can also lead to a Type II error because the outcome of the test will be affected. A small sample size might hide the significance of the effect being tested.

- Another cause of Type II error is the possibility that a researcher may dismiss a real effect in the data as being due to chance.

Probability of Type II Error

- To arrive at the probability of a Type II error occurring, the power of the test must be subtracted from 1.

- The level of significance in a test is represented by α and it shows the probability of a Type I error. The probability of a Type II error is represented by β.

- It is possible to reduce the rate of Type II error if the significance level of the test is increased.

- In a case where the Type II error rate is set at 5 percent, it means that when the null hypothesis (H0) is false, about 5 in 100 tests will still fail to reject it.

- Type I error and Type II error are connected. Hence, for a fixed sample size, reducing the probability of one type of error increases the probability of the other.

- It is important to decide which error has the less serious consequences for your study.
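The relationship between power, the significance level, and the Type II error rate can be sketched numerically. The example below is a minimal illustration, assuming a one-sided z-test with a known standard deviation; the function name `type_ii_error_rate` and the numbers passed to it are hypothetical and chosen only for demonstration.

```python
from statistics import NormalDist

def type_ii_error_rate(effect, sigma, n, alpha=0.05):
    """Beta for a one-sided z-test of H0: mean = 0 against H1: mean = effect > 0.

    Assumes normally distributed data with known standard deviation sigma.
    """
    z = NormalDist()
    critical = z.inv_cdf(1 - alpha)        # reject H0 when the z statistic exceeds this
    shift = effect * n ** 0.5 / sigma      # mean of the z statistic when H1 is true
    power = 1 - z.cdf(critical - shift)    # probability of rejecting a false H0
    return 1 - power                       # beta = 1 - power

# Same study, two significance levels (illustrative values only)
beta_strict = type_ii_error_rate(effect=0.5, sigma=1.0, n=25, alpha=0.01)
beta_loose = type_ii_error_rate(effect=0.5, sigma=1.0, n=25, alpha=0.05)
print(f"alpha=0.01 -> beta={beta_strict:.3f}")
print(f"alpha=0.05 -> beta={beta_loose:.3f}")
```

With everything else held fixed, the stricter significance level gives the larger β, which is the trade-off described in the list above.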

Consequences of a Type II Error

Type II errors can also result in a wrong decision that has real-life consequences.

Note that even if your test hypothesis is correct, your conversion results can fail to show it, unintentionally invalidating the outcome. This turn of events can be discouraging, hence the need to be extra careful when conducting hypothesis testing.

How to Avoid Type I and II errors

Type I and Type II errors cannot be entirely avoided in hypothesis testing, but the researcher can reduce the probability of them occurring.

For Type I error, lower the significance level to reduce the chance of making the error. The significance level is chosen by the researcher.

To avoid Type II errors, ensure the test has high statistical power. The higher the statistical power, the higher the chance of avoiding a Type II error. Design your test for a statistical power of 80% or above.

Increasing the sample size of the test also raises its power.

The Type II error rate can also be reduced if a higher significance level is chosen, at the cost of a greater risk of Type I error.
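One way to plan for a target power is to work out the sample size before running the test. The sketch below uses the standard normal-approximation formula for a one-sided z-test with known standard deviation; the function name `required_sample_size` and the effect size are assumptions made for illustration, not values from a real study.

```python
from math import ceil
from statistics import NormalDist

def required_sample_size(effect, sigma, alpha=0.05, power=0.80):
    """Smallest n giving at least the requested power for a one-sided z-test.

    Assumes known standard deviation sigma and a true mean shift of `effect`.
    """
    z = NormalDist()
    z_alpha = z.inv_cdf(1 - alpha)   # critical value for the chosen significance level
    z_power = z.inv_cdf(power)       # quantile matching the target power
    return ceil(((z_alpha + z_power) * sigma / effect) ** 2)

n_default = required_sample_size(effect=0.5, sigma=1.0)              # alpha = 0.05
n_strict = required_sample_size(effect=0.5, sigma=1.0, alpha=0.01)   # stricter alpha
print(n_default, n_strict)
```

Lowering the significance level while keeping power at 80% requires a larger sample, so both error rates can only be reduced together by collecting more data.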

How to Detect Type I and Type II Errors in Data

After completing a study, the researcher can conduct any of the available statistical tests to decide whether to reject the null hypothesis in favor of its alternative. If the study is free of bias, there are four possible outcomes.

[Figure: the four possible outcomes of a hypothesis test. Image source: IPJ]

If the findings in the sample and reality in the population match, the researchers’ inferences will be correct. However, if in any of the situations a type I or II error has been made, the inference will be incorrect.
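The four possible outcomes can be written out as a small lookup. This is only an illustrative sketch; the function name `classify_outcome` is hypothetical, and in real research the true state of the null hypothesis is unknown, so the classification is conceptual rather than something computed from the data.

```python
def classify_outcome(null_is_true: bool, null_rejected: bool) -> str:
    """Name the outcome of a test given the (normally unknown) truth and the decision."""
    if null_is_true and null_rejected:
        return "Type I error (false positive)"
    if not null_is_true and not null_rejected:
        return "Type II error (false negative)"
    if null_is_true:
        return "correct decision (true negative)"
    return "correct decision (true positive)"

# Enumerate all four combinations of reality and decision
for truth in (True, False):
    for rejected in (True, False):
        print(f"H0 true={truth}, rejected={rejected}: {classify_outcome(truth, rejected)}")
```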

Key Differences between Type I & II Errors

- In statistical hypothesis testing, a Type I error is caused by rejecting a null hypothesis that is actually correct, while in contrast, a Type II error occurs when the null hypothesis is not rejected even though it is not true.

- Type I error is the same as a false alarm or false positive while Type II error is also referred to as a false negative.

- The probability of a Type I error is represented by α while the probability of a Type II error is represented by β.

- The level of significance determines the probability of a Type I error, while the probability of a Type II error is 1 minus the power of the test.

- You can decrease the probability of a Type I error by reducing the level of significance. In the same way, you can reduce the probability of a Type II error by increasing the significance level of the test.

- A Type I error occurs when you reject a null hypothesis that is true; in contrast, a Type II error occurs when you fail to reject a null hypothesis that is false.

Examples of Type I & II errors

Type I error examples

To understand the statistical significance of Type I error, let us look at this example.

In this example, a driver wants to determine the relationship between getting a new steering wheel and the number of passengers he carries in a week.

Now, if the number of passengers he carries in a week after getting the new steering wheel is higher than the number he carried with the old one, this driver might assume that there is a relationship between the new wheel and the increase in the number of passengers and support the alternative hypothesis.

However, the increase in the number of passengers he carried in a week might have been due to chance and not the new wheel, in which case his conclusion is a Type I error.

In that case, the driver should not have rejected the null hypothesis, because the increase in his passengers was due to chance and not to a real effect.
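A short simulation can show how often chance alone produces a "significant" result. The sketch below is an assumption-laden illustration, not the driver's actual data: it draws samples from a population where the null hypothesis is true (mean 0, standard deviation 1), runs a two-sided z-test on each, and counts the false positives. The function name `false_positive_rate` and all parameter values are hypothetical.

```python
import random
from statistics import NormalDist, fmean

def false_positive_rate(alpha=0.05, n=30, trials=4000, seed=7):
    """Share of simulated studies that reject H0 even though H0 is true."""
    rng = random.Random(seed)                        # seeded for reproducibility
    critical = NormalDist().inv_cdf(1 - alpha / 2)   # two-sided critical value
    rejections = 0
    for _ in range(trials):
        sample = [rng.gauss(0.0, 1.0) for _ in range(n)]   # no real effect present
        z_stat = fmean(sample) * n ** 0.5                  # z statistic, sigma known = 1
        if abs(z_stat) > critical:
            rejections += 1                                # a Type I error
    return rejections / trials

rate = false_positive_rate()
print(f"false positive rate: {rate:.3f}")
```

Under these assumptions the observed rate should land close to the chosen significance level, which is why α is described as the probability of a Type I error.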

Type II error examples

For Type II error and statistical power, let us assume a farmer who rears birds and whose null hypothesis is that none of his birds have bird flu. He observes his birds for four days to find out if there are symptoms of the flu.

If, after four days, the farmer sees no symptoms of the flu in his birds, he might assume his birds are indeed free from bird flu, whereas the bird flu might have infected his birds with symptoms only becoming obvious on the sixth day.

On this basis, the farmer accepts that no flu exists in his birds. This is a Type II error: he fails to reject the null hypothesis when it is in fact false.
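The effect of too little data on Type II errors can also be simulated. The sketch below is a loose analogy to the short observation period in the example above, not a model of bird flu: it assumes a real effect exists (true mean 0.5, standard deviation 1) and compares how often a one-sided z-test misses it with 4 observations versus 25. The function name `miss_rate` and every number are hypothetical.

```python
import random
from statistics import NormalDist, fmean

def miss_rate(n, true_effect=0.5, sigma=1.0, alpha=0.05, trials=4000, seed=11):
    """Share of simulated studies that fail to reject H0 although H0 is false."""
    rng = random.Random(seed)                    # seeded for reproducibility
    critical = NormalDist().inv_cdf(1 - alpha)   # one-sided critical value
    misses = 0
    for _ in range(trials):
        sample = [rng.gauss(true_effect, sigma) for _ in range(n)]  # real effect present
        z_stat = fmean(sample) * n ** 0.5 / sigma
        if z_stat <= critical:
            misses += 1                          # a Type II error
    return misses / trials

short_study = miss_rate(n=4)
long_study = miss_rate(n=25)
print(f"n=4:  beta ~ {short_study:.2f}")
print(f"n=25: beta ~ {long_study:.2f}")
```

Under these assumptions the smaller sample misses the real effect far more often, which is the sense in which low power leads to Type II errors.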

Frequently Asked Questions about Type I and II Errors

- Is a Type I or Type II error worse?

Either a Type I or a Type II error could be worse, depending on the type of research being conducted.

A Type I error means an effect has been claimed that in reality does not exist. The consequence is that a false conclusion is accepted and acted upon. A Type II error means that a false null hypothesis was not rejected, so a real effect goes unnoticed and its benefits are never realized.

A Type I error, however, may be considered worse by statisticians. It is difficult to decide which of the errors is worse than the other, but both types of errors could do enough damage to your research.

- Does sample size affect type 1 error?

Sample size, small or large, does not affect the Type I error rate, which is set by the significance level. So a change in sample size will not increase the occurrence of Type I error.

Sample size matters for Type II errors instead. If the sample size is small, the statistical power of the test will decrease.

This may lead the researcher to a false conclusion of no effect and discredit the outcome of the hypothesis testing.

- What is statistical power as it relates to Type I or Type II errors

Statistical power is the probability that a test correctly rejects a false null hypothesis, so it relates to Type II error: power is 1 minus β. Random measurement errors reduce the statistical power of hypothesis testing. Not only that, the larger the size of the effect, the easier it is to detect.

The statistical power of a test increases when the level of significance increases. The statistical power also increases when a larger sample size is being tested, thereby reducing Type II errors. If you want the risk of Type II error to reduce, increase the level of significance of the test or the sample size.

- What is statistical significance as it relates to Type I or Type II errors

Statistical significance relates to Type I error. Researchers sometimes conclude that the outcome of a test is statistically significant when the effect is not real, and the researcher then rejects the null hypothesis. The fact is, the outcome might have happened due to chance.

The risk of a Type I error decreases when a lower significance level is set.

If the p-value of your test is lower than the significance level, the outcome is statistically significant and the alternative hypothesis is supported.

In this article, we have extensively discussed Type I error and Type II error. We have also discussed their causes, the probabilities of their occurrence, and how to avoid them. We have seen that both types of errors carry their own risks. The best approach as a researcher is to know which one matters more for your study and when.

- busayo.longe
Curbing type I and type II errors

Kenneth J. Rothman

RTI Health Solutions, Research Triangle Park, NC USA

The statistical education of scientists emphasizes a flawed approach to data analysis that should have been discarded long ago. This defective method is statistical significance testing. It degrades quantitative findings into a qualitative decision about the data. Its underlying statistic, the P-value, conflates two important but distinct aspects of the data, effect size and precision [ 1 ]. It has produced countless misinterpretations of data that are often amusing for their folly, but also hair-raising in view of the serious consequences.

Significance testing maintains its hold through brilliant marketing tactics—the appeal of having a “significant” result is nearly irresistible—and through a herd mentality. Novices quickly learn that significant findings are the key to publication and promotion, and that statistical significance is the mantra of many senior scientists who will judge their efforts. Stang et al. [ 2 ], in this issue of the journal, liken the grip of statistical significance testing on the biomedical sciences to tyranny, as did Loftus in the social sciences two decades ago [ 3 ]. The tyranny depends on collaborators to maintain its stranglehold. Some collude because they do not know better. Others do so because they lack the backbone to swim against the tide.

Students of significance testing are warned about two types of errors, type I and II, also known as alpha and beta errors. A type I error is a false positive, rejecting a null hypothesis that is correct. A type II error is a false negative, a failure to reject a null hypothesis that is false. A large literature, much of it devoted to multiple comparisons, subgroup analysis, pre-specification of hypotheses, and related topics, is aimed at reducing type I errors [ 4 ]. This lopsided emphasis on type I errors comes at the expense of type II errors. The type I error, the false positive, is only possible if the null hypothesis is true. If the null hypothesis is false, a type I error is impossible, but a type II error, the false negative, can occur.

Type I and type II errors are the product of forcing the results of a quantitative analysis into the mold of a decision, which is whether to reject or not to reject the null hypothesis. Reducing interpretations to a dichotomy, however, seriously degrades the information. The consequence is often a misinterpretation of study results, stemming from a failure to separate effect size from precision. Both effect size and precision need to be assessed, but they need to be assessed separately, rather than blended into the P-value, which is then degraded into a dichotomous decision about statistical significance.