Problem Solving Using Computer (Steps)

Computer-based problem solving is a systematic process of designing, implementing, and using programming tools during the problem-solving stage. This approach lets the problem be expressed in terms closer to human logic than to machine logic. The final outcome of the process is a software tool dedicated to solving the problem under consideration. Software is a collection of computer programs, and a program is a set of instructions that guides the computer’s hardware. These instructions need to be well specified to solve the problem. Once created, the software should be error-free and well documented. Software development is the process of creating such software so that it satisfies the end user’s requirements and needs.

The following steps must be followed to solve a problem using a computer.

- Problem Analysis

- Program Design - Algorithm, Flowchart and Pseudocode

- Compilation and Execution

- Debugging and Testing

- Program Documentation

Exploring the Problem Solving Cycle in Computer Science – Strategies, Techniques, and Tools

- Post author By bicycle-u

- Post date 08.12.2023

The world of computer science is built on the foundation of problem solving. Whether it’s designing a complex algorithm or analyzing data to make informed decisions, the problem solving cycle is at the core of every computer science endeavor.

At its essence, problem solving in computer science involves breaking down a complex problem into smaller, more manageable parts. This allows for a systematic approach to finding a solution by analyzing each part individually. The process typically starts with gathering and understanding the data or information related to the problem at hand.

Once the data is collected, computer scientists use various techniques and algorithms to analyze and explore possible solutions. This involves evaluating different approaches and considering factors such as efficiency, accuracy, and scalability. During this analysis phase, it is crucial to think critically and creatively to come up with innovative solutions.

After a thorough analysis, the next step in the problem solving cycle is designing and implementing a solution. This involves creating a detailed plan of action, selecting the appropriate tools and technologies, and writing the necessary code to bring the solution to life. Attention to detail and precision are key in this stage to ensure that the solution functions as intended.

The final step in the problem solving cycle is evaluating the solution and its effectiveness. This includes testing the solution against different scenarios and data sets to ensure its reliability and performance. If any issues or limitations are discovered, adjustments and optimizations are made to improve the solution.

In conclusion, the problem solving cycle is a fundamental process in computer science, involving analysis, data exploration, algorithm development, solution implementation, and evaluation. It is through this cycle that computer scientists are able to tackle complex problems and create innovative solutions that drive progress in the field of computer science.

Understanding the Importance

In computer science, problem solving is a crucial skill, and the problem solving cycle gives it structure. The cycle is a systematic approach to analyzing and solving problems, involving stages such as problem identification, analysis, algorithm design, implementation, and evaluation. Understanding the importance of this cycle is essential for any computer scientist or programmer.

Data Analysis and Algorithm Design

The first step in the problem solving cycle is problem identification, which involves recognizing and defining the issue at hand. Once the problem is identified, the next crucial step is data analysis. This involves gathering and examining relevant data to gain insights and understand the problem better. Data analysis helps in identifying patterns, trends, and potential solutions.

After data analysis, the next step is algorithm design. An algorithm is a step-by-step procedure or set of rules to solve a problem. Designing an efficient algorithm is crucial as it determines the effectiveness and efficiency of the solution. A well-designed algorithm takes into consideration the constraints, resources, and desired outcomes while implementing the solution.

Implementation and Evaluation

Once the algorithm is designed, the next step in the problem solving cycle is implementation. This involves translating the algorithm into a computer program using a programming language. The implementation phase requires coding skills and expertise in a specific programming language.

After implementation, the solution needs to be evaluated to ensure that it solves the problem effectively. Evaluation involves testing the program and verifying its correctness and efficiency. This step is critical to identify any errors or issues and to make necessary improvements or adjustments.

In conclusion, understanding the importance of the problem solving cycle in computer science is essential for any computer scientist or programmer. It provides a systematic and structured approach to analyze and solve problems, ensuring efficient and effective solutions. By following the problem solving cycle, computer scientists can develop robust algorithms, implement them in efficient programs, and evaluate their solutions to ensure their correctness and efficiency.

Identifying the Problem

In the problem solving cycle in computer science, the first step is to identify the problem that needs to be solved. This step is crucial because without a clear understanding of the problem, it is impossible to find a solution.

Identification of the problem involves a thorough analysis of the given data and understanding the goals of the task at hand. It requires careful examination of the problem statement and any constraints or limitations that may affect the solution.

During the identification phase, the problem is broken down into smaller, more manageable parts. This can involve breaking the problem down into sub-problems or identifying the different aspects or components that need to be addressed.

Identifying the problem also involves considering the resources and tools available for solving it. This may include considering the specific tools and programming languages that are best suited for the problem at hand.

By properly identifying the problem, computer scientists can ensure that they are focused on the right goals and are better equipped to find an effective and efficient solution. It sets the stage for the rest of the problem solving cycle, including the analysis, design, implementation, and evaluation phases.

Gathering the Necessary Data

Before finding a solution to a computer science problem, it is essential to gather the necessary data. Whether it’s writing a program or developing an algorithm, data serves as the backbone of any solution. Without proper data collection and analysis, the problem-solving process can become inefficient and ineffective.

The Importance of Data

In computer science, data is crucial for a variety of reasons. First and foremost, it provides the information needed to understand and define the problem at hand. By analyzing the available data, developers and programmers can gain insights into the nature of the problem and determine the most efficient approach for solving it.

Additionally, data allows for the evaluation of potential solutions. By collecting and organizing relevant data, it becomes possible to compare different algorithms or strategies and select the most suitable one. Data also helps in tracking progress and measuring the effectiveness of the chosen solution.

Data Gathering Process

The process of gathering data involves several steps. Firstly, it is necessary to identify the type of data needed for the particular problem. This may include numerical values, textual information, or other types of data. It is important to determine the sources of data and assess their reliability.

Once the required data has been identified, it needs to be collected. This can be done through various methods, such as surveys, experiments, observations, or by accessing existing data sets. The collected data should be properly organized, ensuring its accuracy and validity.

Data cleaning and preprocessing are vital steps in the data gathering process. This involves removing any irrelevant or erroneous data and transforming it into a suitable format for analysis. Properly cleaned and preprocessed data will help in generating reliable and meaningful insights.
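As a rough illustration of cleaning, the sketch below filters a list of raw text readings down to usable numbers. The function name `clean_readings`, the sample values, and the rule that negative readings are invalid are all assumptions made for this example; a real project would define its own rules.

```python
def clean_readings(raw_readings):
    """Return the valid numeric readings from a list of raw strings."""
    cleaned = []
    for item in raw_readings:
        text = item.strip()          # remove surrounding whitespace
        if not text:
            continue                 # skip empty entries
        try:
            value = float(text)      # convert text to a number
        except ValueError:
            continue                 # skip entries that are not numbers
        if value < 0:
            continue                 # assumption: negative readings are invalid
        cleaned.append(value)
    return cleaned


raw = ["12.5", " 7 ", "", "n/a", "-3", "40"]
print(clean_readings(raw))  # [12.5, 7.0, 40.0]
```

Keeping the cleaning rules in one small function makes it easy to see, and later change, what counts as erroneous data.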

Data Analysis and Interpretation

After gathering and preprocessing the data, the next step is data analysis and interpretation. This involves applying various statistical and analytical methods to uncover patterns, trends, and relationships within the data. By analyzing the data, programmers can gain valuable insights that can inform the development of an effective solution.

During the data analysis process, it is crucial to remain objective and unbiased. The analysis should be based on sound reasoning and logical thinking. It is also important to communicate the findings effectively, using visualizations or summaries to convey the information to stakeholders or fellow developers.

In conclusion, gathering the necessary data is a fundamental step in solving computer science problems. It provides the foundation for understanding the problem, evaluating potential solutions, and tracking progress. By following a systematic and rigorous approach to data gathering and analysis, developers can ensure that their solutions are efficient, effective, and well-informed.

Analyzing the Data

Once you have collected the necessary data, the next step in the problem-solving cycle is to analyze it. Data analysis is a crucial component of computer science, as it helps us understand the problem at hand and develop effective solutions.

To analyze the data, you need to break it down into manageable pieces and examine each piece closely. This process involves identifying patterns, trends, and outliers that may be present in the data. By doing so, you can gain insights into the problem and make informed decisions about the best course of action.

There are several techniques and tools available for data analysis in computer science. Some common methods include statistical analysis, data visualization, and machine learning algorithms. Each approach has its own strengths and limitations, so it’s essential to choose the most appropriate method for the problem you are solving.

Statistical Analysis

Statistical analysis involves using mathematical models and techniques to analyze data. It helps in identifying correlations, distributions, and other statistical properties of the data. By applying statistical tests, you can determine the significance and validity of your findings.
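One common statistical property is the correlation between two variables. The sketch below computes the Pearson correlation coefficient by hand using only the standard library. The data (`hours`, `scores`) is made up for illustration and does not come from any real study.

```python
from math import sqrt
from statistics import mean


def pearson(xs, ys):
    """Return the Pearson correlation coefficient of two equal-length lists."""
    mean_x, mean_y = mean(xs), mean(ys)
    # Sum of products of deviations from the means
    covariance = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    # Square roots of the sums of squared deviations
    spread_x = sqrt(sum((x - mean_x) ** 2 for x in xs))
    spread_y = sqrt(sum((y - mean_y) ** 2 for y in ys))
    return covariance / (spread_x * spread_y)


hours = [1, 2, 3, 4, 5]          # hypothetical hours studied
scores = [52, 55, 61, 64, 70]    # hypothetical test scores
print(round(pearson(hours, scores), 3))  # a value close to 1 means a strong positive relationship
```

A coefficient near 1 or -1 suggests a strong linear relationship, while a value near 0 suggests little or none. Correlation alone does not show that one variable causes the other.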

Data Visualization

Data visualization is the process of presenting data in a visual format, such as charts, graphs, or maps. It allows for a better understanding of complex data sets and facilitates the communication of findings. Through data visualization, patterns and trends can become more apparent, making it easier to derive meaningful insights.

Machine Learning Algorithms

Machine learning algorithms are powerful tools for analyzing large and complex data sets. These algorithms can automatically detect patterns and relationships in the data, leading to the development of predictive models and solutions. When trained on a labeled dataset, a supervised learning algorithm learns from the examples and can then make predictions or classifications for new inputs.
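To make the idea of learning from labeled data concrete, here is a minimal sketch of a 1-nearest-neighbor classifier, one of the simplest supervised methods. It is chosen only for illustration; the training points and the labels "small" and "large" are invented for this example.

```python
from math import dist  # Euclidean distance, available in Python 3.8+


def predict_1nn(training, point):
    """Return the label of the training example closest to point."""
    _, nearest_label = min(training, key=lambda example: dist(example[0], point))
    return nearest_label


# Each training example is (features, label); the values are hypothetical.
training = [
    ((1.0, 1.0), "small"),
    ((1.5, 2.0), "small"),
    ((8.0, 9.0), "large"),
    ((9.0, 8.5), "large"),
]

print(predict_1nn(training, (2.0, 1.5)))  # small
print(predict_1nn(training, (8.5, 8.0)))  # large
```

The "training" here is just storing the labeled examples. More sophisticated algorithms build a model from the data, but the pattern of labeled examples in, predictions out, is the same.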

In conclusion, analyzing the data is a critical step in the problem-solving cycle in computer science. It helps us gain a deeper understanding of the problem and develop effective solutions. Whether through statistical analysis, data visualization, or machine learning algorithms, data analysis plays a vital role in transforming raw data into actionable insights.

Exploring Possible Solutions

Once you have gathered data and completed the analysis, the next step in the problem-solving cycle is to explore possible solutions. This is where the true power of computer science comes into play. With the use of algorithms and the application of scientific principles, computer scientists can develop innovative solutions to complex problems.

During this stage, it is important to consider a variety of potential solutions. This involves brainstorming different ideas and considering their feasibility and potential effectiveness. It may be helpful to consult with colleagues or experts in the field to gather additional insights and perspectives.

Developing an Algorithm

One key aspect of exploring possible solutions is the development of an algorithm. An algorithm is a step-by-step set of instructions that outlines a specific process or procedure. In the context of problem solving in computer science, an algorithm provides a clear roadmap for implementing a solution.

The development of an algorithm requires careful thought and consideration. It is important to break down the problem into smaller, manageable steps and clearly define the inputs and outputs of each step. This allows for the creation of a logical and efficient solution.
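As a small example of this approach, the sketch below writes out an algorithm for finding the largest number in a list, with the input, the output, and each step made explicit. The function name `find_largest` is chosen for this illustration.

```python
def find_largest(numbers):
    """Input: a non-empty list of numbers. Output: the largest number in it."""
    if not numbers:
        raise ValueError("numbers must not be empty")
    largest = numbers[0]           # Step 1: assume the first value is the largest
    for value in numbers[1:]:      # Step 2: look at each remaining value in turn
        if value > largest:        # Step 3: keep whichever value is larger
            largest = value
    return largest                 # Step 4: report the result


print(find_largest([7, 3, 12, 5]))  # 12
```

Python already provides `max()` for this task. Writing the steps out by hand is only meant to show how a problem is broken into small, well-defined steps.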

Evaluating the Solutions

Once you have developed potential solutions and corresponding algorithms, the next step is to evaluate them. This involves analyzing each solution to determine its strengths, weaknesses, and potential impact. Consider factors such as efficiency, scalability, and resource requirements.

It may be helpful to conduct experiments or simulations to further assess the effectiveness of each solution. This can provide valuable insights and data to support the decision-making process.
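A simple experiment of this kind is to count how many steps two candidate algorithms need on the same input. The sketch below compares linear search and binary search on a sorted list of 1,000 numbers. The function names and the test data are assumptions made for this illustration.

```python
def linear_search_steps(items, target):
    """Count the elements examined by a linear search for target."""
    steps = 0
    for value in items:
        steps += 1
        if value == target:
            break
    return steps


def binary_search_steps(items, target):
    """Count the elements examined by a binary search (items must be sorted)."""
    low, high = 0, len(items) - 1
    steps = 0
    while low <= high:
        steps += 1
        mid = (low + high) // 2
        if items[mid] == target:
            break
        if items[mid] < target:
            low = mid + 1      # target can only be in the upper half
        else:
            high = mid - 1     # target can only be in the lower half
    return steps


data = list(range(1000))                 # sorted values 0..999
print(linear_search_steps(data, 999))    # 1000
print(binary_search_steps(data, 999))    # 10
```

Counting steps instead of measuring wall-clock time keeps the experiment repeatable across machines. Note that binary search only works because the data is sorted, which is itself a resource requirement to weigh.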

Ultimately, the goal of exploring possible solutions is to find the most effective and efficient solution to the problem at hand. By leveraging the power of data, analysis, algorithms, and scientific principles, computer scientists can develop innovative solutions that drive progress and solve complex problems in the world of technology.

Evaluating the Options

Once you have identified potential solutions and algorithms for a problem, the next step in the problem-solving cycle in computer science is to evaluate the options. This evaluation process involves analyzing the potential solutions and algorithms based on various criteria to determine the best course of action.

Consider the Problem

Before evaluating the options, it is important to take a step back and consider the problem at hand. Understand the requirements, constraints, and desired outcomes of the problem. This analysis will help guide the evaluation process.

Analyze the Options

Next, it is crucial to analyze each solution or algorithm option individually. Look at factors such as efficiency, accuracy, ease of implementation, and scalability. Consider whether the solution or algorithm meets the specific requirements of the problem, and if it can be applied to related problems in the future.

Additionally, evaluate the potential risks and drawbacks associated with each option. Consider factors such as cost, time, and resources required for implementation. Assess any potential limitations or trade-offs that may impact the overall effectiveness of the solution or algorithm.

Select the Best Option

Based on the analysis, select the best option that aligns with the specific problem-solving goals. This may involve prioritizing certain criteria or making compromises based on the limitations identified during the evaluation process.

Remember that the best option may not always be the most technically complex or advanced solution. Consider the practicality and feasibility of implementation, as well as the potential impact on the overall system or project.

In conclusion, evaluating the options is a critical step in the problem-solving cycle in computer science. By carefully analyzing the potential solutions and algorithms, keeping the problem requirements in view, and weighing the limitations and trade-offs, you can select the best option to solve the problem at hand.

Making a Decision

Decision-making is a critical component in the problem-solving process in computer science. Once you have analyzed the problem, identified the relevant data, and generated potential solutions, it is important to evaluate your options and choose the best course of action.

Consider All Factors

When making a decision, it is important to consider all relevant factors. This includes evaluating the potential benefits and drawbacks of each option, as well as understanding any constraints or limitations that may impact your choice.

In computer science, this may involve analyzing the efficiency of different algorithms or considering the scalability of a proposed solution. It is important to take into account both the short-term and long-term impacts of your decision.

Weigh the Options

Once you have considered all the factors, it is important to weigh the options and determine the best approach. This may involve assigning weights or priorities to different factors based on their importance.

Using techniques such as decision matrices or cost-benefit analysis can help you systematically compare and evaluate different options. By quantifying and assessing the potential risks and rewards, you can make a more informed decision.
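A decision matrix can be sketched in a few lines: each option gets a score per criterion, each criterion gets a weight, and the weighted sums are compared. All of the criteria, weights, and scores below are invented for illustration; in practice they would come from your own analysis.

```python
# Hypothetical weights; they sum to 1.0 so scores stay on the 0-10 scale.
weights = {"efficiency": 0.5, "ease_of_implementation": 0.3, "scalability": 0.2}

# Hypothetical scores from 0 (poor) to 10 (excellent) for two candidate solutions.
options = {
    "option_a": {"efficiency": 9, "ease_of_implementation": 4, "scalability": 8},
    "option_b": {"efficiency": 6, "ease_of_implementation": 9, "scalability": 5},
}


def weighted_score(scores, weights):
    """Return the weighted sum of an option's scores."""
    return sum(weights[criterion] * scores[criterion] for criterion in weights)


for name, scores in options.items():
    print(f"{name}: {weighted_score(scores, weights):.2f}")

best = max(options, key=lambda name: weighted_score(options[name], weights))
print("best:", best)  # best: option_a
```

Changing the weights changes the outcome: if ease of implementation mattered most, option_b could win instead. Making the weights explicit is what makes the decision easy to review.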

Remember: Decision-making in computer science is not purely subjective or based on personal preference. It is crucial to use analytical and logical thinking to select the best solution.

In conclusion, making a decision is a crucial step in the problem-solving process in computer science. By considering all relevant factors and weighing the options using logical analysis, you can choose the best possible solution to a given problem.

Implementing the Solution

Once the problem has been analyzed and a solution has been proposed, the next step in the problem-solving cycle in computer science is implementing the solution. This involves turning the proposed solution into an actual computer program or algorithm that can solve the problem.

In order to implement the solution, computer science professionals need to have a strong understanding of various programming languages and data structures. They need to be able to write code that can manipulate and process data in order to solve the problem at hand.

During the implementation phase, the proposed solution is translated into a series of steps or instructions that a computer can understand and execute. This involves breaking down the problem into smaller sub-problems and designing algorithms to solve each sub-problem.

Computer scientists also need to consider the efficiency of their solution during the implementation phase. They need to ensure that the algorithm they design is able to handle large amounts of data and solve the problem in a reasonable amount of time. This often requires optimization techniques and careful consideration of the data structures used.
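The choice of data structure can change how much work a program does. As a sketch, the two functions below both check a list for duplicates: the first compares every pair of items, while the second remembers items in a set. The function names are chosen for this example.

```python
def has_duplicates_slow(items):
    """Compare every pair of items: roughly n * n / 2 comparisons for n items."""
    for i in range(len(items)):
        for j in range(i + 1, len(items)):
            if items[i] == items[j]:
                return True
    return False


def has_duplicates_fast(items):
    """Remember seen items in a set: one pass over the list."""
    seen = set()
    for item in items:
        if item in seen:       # set membership is a constant-time check on average
            return True
        seen.add(item)
    return False


print(has_duplicates_slow([3, 1, 4, 1, 5]))  # True
print(has_duplicates_fast([3, 1, 4, 1, 5]))  # True
print(has_duplicates_fast([3, 1, 4, 5]))     # False
```

Both functions give the same answers, but on large inputs the set-based version does far less work, at the cost of extra memory for the set. It also requires the items to be hashable.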

Once the code has been written and the algorithm has been implemented, it is important to test and debug the solution. This involves running test cases and checking the output to ensure that the program is working correctly. If any errors or bugs are found, they need to be fixed before the solution can be considered complete.

In conclusion, implementing the solution is a crucial step in the problem-solving cycle in computer science. It requires strong programming skills and a deep understanding of algorithms and data structures. By carefully designing and implementing the solution, computer scientists can solve problems efficiently and effectively.

Testing and Debugging

In computer science, testing and debugging are critical steps in the problem-solving cycle. Testing checks whether a program or algorithm is functioning correctly, while debugging identifies and resolves the issues or bugs that testing reveals.

Testing involves running a program with specific input data to evaluate its output. This process helps verify that the program produces the expected results and handles different scenarios correctly. It is important to test both the normal and edge cases to ensure the program’s reliability.
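A minimal sketch of testing normal and edge cases is shown below, using plain `assert` statements. The `average` function and the decision to return 0.0 for an empty list are assumptions made for this example; another program might reasonably raise an error instead.

```python
def average(numbers):
    """Return the arithmetic mean of a list of numbers."""
    if not numbers:
        return 0.0   # assumption: an empty list averages to 0.0
    return sum(numbers) / len(numbers)


# Normal cases: typical input with a known expected output
assert average([2, 4, 6]) == 4.0
assert average([1, 2]) == 1.5

# Edge cases: inputs at the boundaries of what the function accepts
assert average([5]) == 5.0       # a single value
assert average([]) == 0.0        # no values at all
assert average([-1, 1]) == 0.0   # negative and positive values cancelling out

print("all tests passed")
```

If any assertion fails, Python stops with an `AssertionError` that points to the failing line. For larger programs, a test framework such as the standard library's `unittest` organizes the same kind of checks.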

Debugging is the process of identifying and fixing errors or bugs in a program. When a program does not produce the expected results or crashes, it is necessary to go through the code to find and fix the problem. This can involve analyzing the program’s logic, checking for syntax errors, and using debugging tools to trace the flow of data and identify the source of the issue.
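As an illustration, the sketch below shows a typical logic error, an off-by-one mistake in a loop, next to a corrected version with an optional trace that prints each intermediate value. The function names and the `trace` parameter are hypothetical and exist only for this example.

```python
def sum_to_n_buggy(n):
    """Intended to return 1 + 2 + ... + n, but contains a bug."""
    total = 0
    for i in range(1, n):          # bug: range stops at n - 1, so n is never added
        total += i
    return total


def sum_to_n(n, trace=False):
    """Return 1 + 2 + ... + n. With trace=True, print each step."""
    total = 0
    for i in range(1, n + 1):      # fix: include n itself
        total += i
        if trace:
            print(f"i={i} total={total}")   # trace the flow of data through the loop
    return total


print(sum_to_n_buggy(5))  # 10, which does not match the expected 15
print(sum_to_n(5))        # 15
```

Printing intermediate values is the simplest tracing technique. An interactive debugger, such as Python's built-in `pdb`, lets you pause the program and inspect variables without editing the code.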

Data analysis plays a crucial role in both testing and debugging. It helps to identify patterns, anomalies, or inconsistencies in the program’s behavior. By analyzing the data, developers can gain insights into potential issues and make informed decisions on how to improve the program’s performance.

In conclusion, testing and debugging are integral parts of the problem-solving cycle in computer science. Through testing and data analysis, developers can verify the correctness of their programs and identify and resolve any issues that may arise. This ensures that the algorithms and programs developed in computer science are robust, reliable, and efficient.

Iterating for Improvement

In computer science, problem solving often involves iterating through multiple cycles of analysis, solution development, and evaluation. This iterative process allows for continuous improvement in finding the most effective solution to a given problem.

The problem solving cycle starts with problem analysis, where the specific problem is identified and its requirements are understood. This step involves examining the problem from various angles and gathering all relevant information.

Once the problem is properly understood, the next step is to develop an algorithm or a step-by-step plan to solve the problem. This algorithm is a set of instructions that, when followed correctly, will lead to the solution.

After the algorithm is developed, it is implemented in a computer program. This step involves translating the algorithm into a programming language that a computer can understand and execute.

Once the program is implemented, it is then tested and evaluated to ensure that it produces the correct solution. This evaluation step is crucial in identifying any errors or inefficiencies in the program and allows for further improvement.

If any issues or problems are found during testing, the cycle iterates, starting from problem analysis again. This iterative process allows for refinement and improvement of the solution until the desired results are achieved.

Iterating for improvement is a fundamental concept in computer science problem solving. By continually analyzing, developing, and evaluating solutions, computer scientists are able to find the most efficient approaches to solving problems.

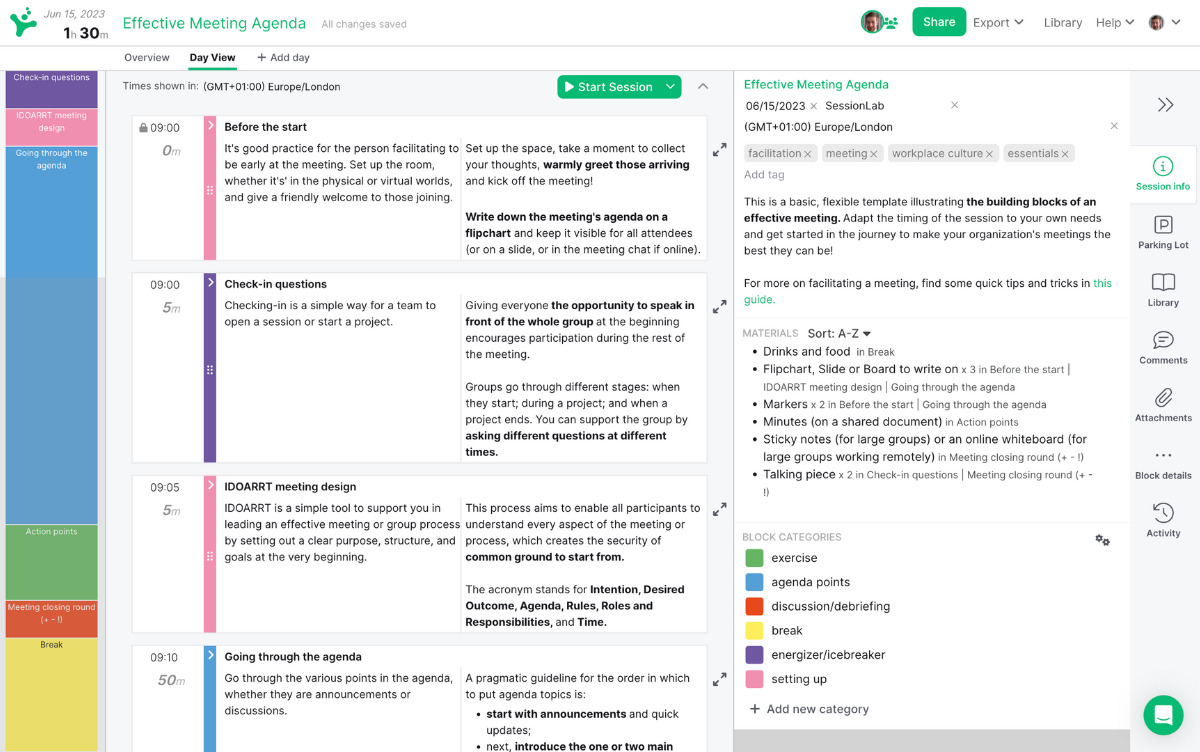

Documenting the Process

Documenting the problem-solving process in computer science is essential so that the cycle can be repeated successfully. The process being documented involves gathering information, analyzing the problem, and designing a solution.

During the analysis phase, it is crucial to identify the specific problem at hand and break it down into smaller components. This allows for a more targeted approach to finding the solution. Additionally, analyzing the data involved in the problem can provide valuable insights and help in designing an effective solution.

Once the analysis is complete, it is important to document the findings. This documentation can take various forms, such as written reports, diagrams, or even code comments. The goal is to create a record that captures the problem, the analysis, and the proposed solution.
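In code, one lightweight form of documentation is a docstring that records the problem, the chosen approach, and an example. The sketch below uses a temperature conversion function purely as an illustration; the layout of the docstring is one possible convention, not a required format.

```python
def celsius_to_fahrenheit(celsius):
    """Convert a temperature from Celsius to Fahrenheit.

    Problem:  readings arrive in Celsius but reports need Fahrenheit.
    Approach: apply the standard formula F = C * 9 / 5 + 32.
    Example:  celsius_to_fahrenheit(100) returns 212.0
    """
    return celsius * 9 / 5 + 32


print(celsius_to_fahrenheit(100))      # 212.0
print(celsius_to_fahrenheit.__doc__)   # the documentation travels with the code
```

Because the docstring lives next to the code, tools such as Python's built-in `help()` can display it, and it is more likely to be updated when the code changes.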

Documenting the process serves several purposes. Firstly, it allows for easy communication and collaboration between team members or future developers. By documenting the problem, analysis, and solution, others can easily understand the thought process behind the solution and potentially build upon it.

Secondly, documenting the process provides an opportunity for reflection and improvement. By reviewing the documentation, developers can identify areas where the problem-solving cycle can be strengthened or optimized. This continuous improvement is crucial in the field of computer science, as new challenges and technologies emerge rapidly.

In conclusion, documenting the problem-solving process is an integral part of the problem-solving cycle in computer science. It allows for effective communication, collaboration, and reflection on the solutions devised. By taking the time to document the process, developers can ensure a more efficient and successful problem-solving experience.

Communicating the Solution

Once the problem solving cycle is complete, it is important to effectively communicate the solution. This involves explaining the analysis, data, and steps taken to arrive at the solution.

Analyzing the Problem

During the problem solving cycle, a thorough analysis of the problem is conducted. This includes understanding the problem statement, gathering relevant data, and identifying any constraints or limitations. It is important to clearly communicate this analysis to ensure that others understand the problem at hand.

Presenting the Solution

The next step in communicating the solution is presenting the actual solution. This should include a detailed explanation of the steps taken to solve the problem, as well as any algorithms or data structures used. It is important to provide clear and concise descriptions of the solution, so that others can understand and reproduce the results.

Overall, effective communication of the solution in computer science is essential to ensure that others can understand and replicate the problem solving process. By clearly explaining the analysis, data, and steps taken, the solution can be communicated in a way that promotes understanding and collaboration within the field of computer science.

Reflecting and Learning

Reflecting and learning are crucial steps in the problem solving cycle in computer science. Once a problem has been solved, it is essential to reflect on the entire process and learn from the experience. This allows for continuous improvement and growth in the field of computer science.

During the reflecting phase, one must analyze and evaluate the problem solving process. This involves reviewing the initial problem statement, understanding the constraints and requirements, and assessing the effectiveness of the chosen algorithm and solution. It is important to consider the efficiency and accuracy of the solution, as well as any potential limitations or areas for optimization.

By reflecting on the problem solving cycle, computer scientists can gain valuable insights into their own strengths and weaknesses. They can identify areas where they excelled and areas where improvement is needed. This self-analysis helps in honing problem solving skills and becoming a better problem solver.

Learning from Mistakes

Mistakes are an integral part of the problem solving cycle, and they provide valuable learning opportunities. When a problem is not successfully solved, it is essential to analyze the reasons behind the failure and learn from them. This involves identifying errors in the algorithm or solution, understanding the underlying concepts or principles that were misunderstood, and finding alternative approaches or strategies.

Failure should not be seen as a setback, but rather as an opportunity for growth. By learning from mistakes, computer scientists can improve their problem solving abilities and expand their knowledge and understanding of computer science. It is through these failures and the subsequent learning process that new ideas and innovations are often born.

Continuous Improvement

Reflecting and learning should not be limited to individual problem solving experiences, but should be an ongoing practice. As computer science is a rapidly evolving field, it is crucial to stay updated with new technologies, algorithms, and problem solving techniques. Continuous learning and improvement contribute to staying competitive and relevant in the field.

Computer scientists can engage in continuous improvement by seeking feedback from peers, participating in research and development activities, attending conferences and workshops, and actively seeking new challenges and problem solving opportunities. This dedication to learning and improvement ensures that one’s problem solving skills remain sharp and effective.

In conclusion, reflecting and learning are integral parts of the problem solving cycle in computer science. They enable computer scientists to refine their problem solving abilities, learn from mistakes, and continuously improve their skills and knowledge. By embracing these steps, computer scientists can stay at the forefront of the ever-changing world of computer science and contribute to its advancements.

Applying Problem Solving in Real Life

Problem solving as practiced in computer science is not limited to the realm of programming and algorithms. It is a skill that can be applied to various aspects of our daily lives, helping us to solve problems efficiently and effectively. By following the problem-solving cycle, analyzing the problem, gathering data, designing a solution, and iterating, we can tackle real-life challenges with confidence.

The first step in problem-solving is to analyze the problem at hand. This involves breaking it down into smaller, more manageable parts and identifying the key issues or goals. By understanding the problem thoroughly, we can gain insights into its root causes and potential solutions.

For example, let’s say you’re facing a recurring issue in your daily commute – traffic congestion. By analyzing the problem, you may discover that the main causes are a lack of alternative routes and a lack of communication between drivers. This analysis helps you identify potential solutions such as using navigation apps to find alternate routes or promoting carpooling to reduce the number of vehicles on the road.

Gathering and Analyzing Data

Once we have identified the problem, it is important to gather relevant data to support our analysis. This may involve conducting surveys, collecting statistics, or reviewing existing research. By gathering data, we can make informed decisions and prioritize potential solutions based on their impact and feasibility.

Continuing with the traffic congestion example, you may gather data on the average commute time, the number of vehicles on the road, and the impact of carpooling on congestion levels. This data can help you analyze the problem more accurately and determine the most effective solutions.

Generating and Evaluating Solutions

After analyzing the problem and gathering data, the next step is to generate potential solutions. This can be done through brainstorming, researching best practices, or seeking input from experts. It is important to consider multiple options and think outside the box to find innovative and effective solutions.

For our traffic congestion problem, potential solutions can include implementing a smart traffic management system that optimizes traffic flow or investing in public transportation to incentivize people to leave their cars at home. By evaluating each solution’s potential impact, cost, and feasibility, you can make an informed decision on the best course of action.

Implementing and Iterating

Once a solution has been chosen, it is time to implement it in real life. This may involve developing a plan, allocating resources, and executing the solution. It is important to monitor the progress and collect feedback to learn from the implementation and make necessary adjustments.

For example, if the chosen solution to address traffic congestion is implementing a smart traffic management system, you would work with engineers and transportation authorities to develop and deploy the system. Regular evaluation and iteration of the system’s performance would ensure that it is effective and making a positive impact on reducing congestion.

By applying the problem-solving cycle derived from computer science to real-life situations, we can approach challenges with a systematic and analytical mindset. This can help us make better decisions, improve our problem-solving skills, and ultimately achieve more efficient and effective solutions.

Building Problem Solving Skills

In the field of computer science, problem-solving is a fundamental skill that is crucial for success. Whether you are a computer scientist, programmer, or student, developing strong problem-solving skills will greatly benefit your work and studies. It allows you to tackle challenges logically and systematically, leading to efficient and effective problem resolution.

The Problem Solving Cycle

Problem-solving in computer science involves a cyclical process known as the problem-solving cycle. This cycle consists of several stages, including problem identification, data analysis, solution development, implementation, and evaluation. By following this cycle, computer scientists are able to tackle complex problems and arrive at optimal solutions.

Importance of Data Analysis

Data analysis is a critical step in the problem-solving cycle. It involves gathering and examining relevant data to gain insights and identify patterns that can inform the development of a solution. Without proper data analysis, computer scientists may overlook important information or make unfounded assumptions, leading to subpar solutions.

To effectively analyze data, computer scientists can employ various techniques such as data visualization, statistical analysis, and machine learning algorithms. These tools enable them to extract meaningful information from large datasets and make informed decisions during the problem-solving process.

Developing Effective Solutions

Developing effective solutions requires creativity, critical thinking, and logical reasoning. Computer scientists must evaluate multiple approaches, consider various factors, and assess the feasibility of different solutions. They should also consider potential limitations and trade-offs to ensure that the chosen solution addresses the problem effectively.

Furthermore, collaboration and communication skills are vital when building problem-solving skills. Computer scientists often work in teams and need to effectively communicate their ideas, propose solutions, and address any challenges that arise during the problem-solving process. Strong interpersonal skills facilitate collaboration and enhance problem-solving outcomes.

Strategies for building problem-solving skills include:

- Mastering programming languages and algorithms

- Staying updated with technological advancements in the field

- Practicing problem solving through coding challenges and projects

- Seeking feedback and learning from mistakes

- Continuing to learn and improve problem-solving skills

By following these strategies, individuals can strengthen their problem-solving abilities and become more effective computer scientists or programmers. Problem-solving is an essential skill in computer science and plays a central role in driving innovation and advancing the field.

Questions and answers:

What is the problem solving cycle in computer science?

The problem solving cycle in computer science refers to a systematic approach that programmers use to solve problems. It involves several steps, including problem definition, algorithm design, implementation, testing, and debugging.

How important is the problem solving cycle in computer science?

The problem solving cycle is extremely important in computer science as it allows programmers to effectively tackle complex problems and develop efficient solutions. It helps in organizing the thought process and ensures that the problem is approached in a logical and systematic manner.

What are the steps involved in the problem solving cycle?

The problem solving cycle typically consists of the following steps: problem definition and analysis, algorithm design, implementation, testing, and debugging. These steps are repeated as necessary until a satisfactory solution is achieved.

Can you explain the problem definition and analysis step in the problem solving cycle?

During the problem definition and analysis step, the programmer identifies and thoroughly understands the problem that needs to be solved. This involves analyzing the requirements, constraints, and possible inputs and outputs. It is important to have a clear understanding of the problem before proceeding to the next steps.

Why is testing and debugging an important step in the problem solving cycle?

Testing and debugging are important steps in the problem solving cycle because they ensure that the implemented solution functions as intended and is free from errors. Through testing, the programmer can identify and fix any issues or bugs in the code, thereby improving the quality and reliability of the solution.

What is the problem-solving cycle in computer science?

The problem-solving cycle in computer science refers to the systematic approach that computer scientists use to solve problems. It involves various steps, including problem analysis, algorithm design, coding, testing, and debugging.


What Is Problem Solving? How Software Engineers Approach Complex Challenges

From debugging an existing system to designing an entirely new software application, a day in the life of a software engineer is filled with various challenges and complexities. The one skill that glues these disparate tasks together and makes them manageable? Problem solving.

Throughout this blog post, we’ll explore why problem-solving skills are so critical for software engineers, delve into the techniques they use to address complex challenges, and discuss how hiring managers can identify these skills during the hiring process.

What Is Problem Solving?

But what exactly is problem solving in the context of software engineering? How does it work, and why is it so important?

Problem solving, in the simplest terms, is the process of identifying a problem, analyzing it, and finding the most effective solution to overcome it. For software engineers, this process is deeply embedded in their daily workflow. It could be something as simple as figuring out why a piece of code isn’t working as expected, or something as complex as designing the architecture for a new software system.

In a world where technology is evolving at a blistering pace, the complexity and volume of problems that software engineers face are also growing. As such, the ability to tackle these issues head-on and find innovative solutions is not only a handy skill — it’s a necessity.

The Importance of Problem-Solving Skills for Software Engineers

Problem-solving isn’t just another ability that software engineers pull out of their toolkits when they encounter a bug or a system failure. It’s a constant, ongoing process that’s intrinsic to every aspect of their work. Let’s break down why this skill is so critical.

Driving Development Forward

Without problem solving, software development would hit a standstill. Every new feature, every optimization, and every bug fix is a problem that needs solving. Whether it’s a performance issue that needs diagnosing or a user interface that needs improving, the capacity to tackle and solve these problems is what keeps the wheels of development turning.

It’s estimated that 60% of software development lifecycle costs are related to maintenance tasks, including debugging and problem solving. This highlights how pivotal this skill is to the everyday functioning and advancement of software systems.

Innovation and Optimization

The importance of problem solving isn’t confined to reactive scenarios; it also plays a major role in proactive, innovative initiatives. Software engineers often need to think outside the box to come up with creative solutions, whether it’s optimizing an algorithm to run faster or designing a new feature to meet customer needs. These are all forms of problem solving.

Consider the development of the modern smartphone. It wasn’t born out of a pre-existing issue but was a solution to a problem people didn’t realize they had — a device that combined communication, entertainment, and productivity into one handheld tool.

Increasing Efficiency and Productivity

Good problem-solving skills can save a lot of time and resources. Effective problem-solvers are adept at dissecting an issue to understand its root cause, thus reducing the time spent on trial and error. This efficiency means projects move faster, releases happen sooner, and businesses stay ahead of their competition.

Improving Software Quality

Problem solving also plays a significant role in enhancing the quality of the end product. By tackling the root causes of bugs and system failures, software engineers can deliver reliable, high-performing software. This is critical because, according to the Consortium for Information and Software Quality, poor quality software in the U.S. in 2022 cost at least $2.41 trillion in operational issues, wasted developer time, and other related problems.

Problem-Solving Techniques in Software Engineering

So how do software engineers go about tackling these complex challenges? Let’s explore some of the key problem-solving techniques, theories, and processes they commonly use.

Decomposition

Breaking down a problem into smaller, manageable parts is one of the first steps in the problem-solving process. It’s like dealing with a complicated puzzle. You don’t try to solve it all at once. Instead, you separate the pieces, group them based on similarities, and then start working on the smaller sets. This method allows software engineers to handle complex issues without being overwhelmed and makes it easier to identify where things might be going wrong.
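As a minimal sketch of decomposition, the example below splits "compute the average commute time per route" into three small functions that can each be understood and checked separately. The `route,minutes` record format and all function names are assumptions made for illustration.

```python
from statistics import mean

def parse_line(line):
    """Split one 'route,minutes' record into a (route, minutes) pair."""
    route, minutes = line.split(",")
    return route.strip(), float(minutes)

def group_by_route(records):
    """Collect commute times under the route they belong to."""
    grouped = {}
    for route, minutes in records:
        grouped.setdefault(route, []).append(minutes)
    return grouped

def average_by_route(lines):
    """Compose the smaller steps: parse, group, then summarize."""
    grouped = group_by_route(parse_line(line) for line in lines)
    return {route: mean(times) for route, times in grouped.items()}

sample = ["A, 30", "B, 45", "A, 40"]
print(average_by_route(sample))  # {'A': 35.0, 'B': 45.0}
```

If the result looks wrong, each piece can be inspected on its own, which is usually easier than reading one long function.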

Abstraction

In the realm of software engineering, abstraction means focusing on the necessary information only and ignoring irrelevant details. It is a way of simplifying complex systems to make them easier to understand and manage. For instance, a software engineer might ignore the details of how a database works to focus on the information it holds and how to retrieve or modify that information.
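The database example can be sketched roughly as follows. `UserStore`, `InMemoryUserStore`, and `change_email` are hypothetical names; the calling code depends only on the small interface, not on how the data is actually stored.

```python
from typing import Optional, Protocol

class UserStore(Protocol):
    """The only operations the calling code needs to know about."""
    def get_email(self, user_id: int) -> Optional[str]: ...
    def set_email(self, user_id: int, email: str) -> None: ...

class InMemoryUserStore:
    """One possible implementation; a real database could replace it."""
    def __init__(self):
        self._emails = {}

    def get_email(self, user_id):
        return self._emails.get(user_id)

    def set_email(self, user_id, email):
        self._emails[user_id] = email

def change_email(store: UserStore, user_id: int, new_email: str) -> bool:
    """Update the email only if it changes; storage details stay hidden."""
    if store.get_email(user_id) == new_email:
        return False
    store.set_email(user_id, new_email)
    return True

store = InMemoryUserStore()
print(change_email(store, 1, "a@example.com"))  # True
print(change_email(store, 1, "a@example.com"))  # False
```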

Algorithmic Thinking

At its core, software engineering is about creating algorithms — step-by-step procedures to solve a problem or accomplish a goal. Algorithmic thinking involves conceiving and expressing these procedures clearly and accurately and viewing every problem through an algorithmic lens. A well-designed algorithm not only solves the problem at hand but also does so efficiently, saving computational resources.
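To illustrate the efficiency point, here are two ways to check whether a value is present in a sorted list. Both return the same answer, but the second needs far fewer comparisons on large inputs. The function names are illustrative; `bisect_left` comes from Python's standard library.

```python
from bisect import bisect_left

def linear_contains(items, target):
    """Check every element in turn: up to len(items) comparisons."""
    for item in items:
        if item == target:
            return True
    return False

def binary_contains(sorted_items, target):
    """Halve the search range each step; requires sorted input."""
    i = bisect_left(sorted_items, target)
    return i < len(sorted_items) and sorted_items[i] == target

data = list(range(0, 1000, 2))  # sorted even numbers
print(linear_contains(data, 998), binary_contains(data, 998))  # True True
print(linear_contains(data, 999), binary_contains(data, 999))  # False False
```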

Parallel Thinking

Parallel thinking is a structured process where team members think in the same direction at the same time, allowing for more organized discussion and collaboration. It’s an approach popularized by Edward de Bono with the “Six Thinking Hats” technique, where each “hat” represents a different style of thinking.

In the context of software engineering, parallel thinking can be highly effective for problem solving. For instance, when dealing with a complex issue, the team can use the “White Hat” to focus solely on the data and facts about the problem, then the “Black Hat” to consider potential problems with a proposed solution, and so on. This structured approach can lead to more comprehensive analysis and more effective solutions, and it ensures that everyone’s perspectives are considered.

Debugging

Debugging is the process of identifying and fixing errors in code. It involves carefully reviewing the code, reproducing and analyzing the error, and then making the necessary modifications to rectify the problem. It’s a key part of maintaining and improving software quality.
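As a small illustration of the reproduce, analyze, and fix loop described above, the sketch below uses a well-known Python pitfall (a mutable default argument). The function names are made up for the example.

```python
def add_tag_buggy(tag, tags=[]):
    # Bug: the default list is created once and shared across calls.
    tags.append(tag)
    return tags

def add_tag_fixed(tag, tags=None):
    # Fix: create a fresh list on each call when none is supplied.
    if tags is None:
        tags = []
    tags.append(tag)
    return tags

# Reproduce: the second call unexpectedly remembers the first.
first = add_tag_buggy("a")
second = add_tag_buggy("b")
print(second)  # ['a', 'b']

# Verify the fix: each call starts from an empty list.
print(add_tag_fixed("a"), add_tag_fixed("b"))  # ['a'] ['b']
```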

Testing and Validation

Testing is an essential part of problem solving in software engineering. Engineers use a variety of tests to verify that their code works as expected and to uncover any potential issues. These range from unit tests that check individual components of the code to integration tests that ensure the pieces work well together. Validation, on the other hand, ensures that the solution not only works but also fulfills the intended requirements and objectives.
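The difference between a unit test and an integration test can be sketched with Python's built-in `unittest` module. The two functions under test and the `'31 min'` string format are hypothetical examples.

```python
import unittest

def parse_minutes(text):
    """Turn a string such as '31 min' into the integer 31."""
    return int(text.split()[0])

def total_minutes(legs):
    """Sum the travel time over strings such as ['10 min', '21 min']."""
    return sum(parse_minutes(leg) for leg in legs)

class ParseMinutesUnitTest(unittest.TestCase):
    # Unit test: checks one component in isolation.
    def test_single_value(self):
        self.assertEqual(parse_minutes("31 min"), 31)

class TotalMinutesIntegrationTest(unittest.TestCase):
    # Integration test: checks that parsing and summing work together.
    def test_parsing_and_summing_together(self):
        self.assertEqual(total_minutes(["10 min", "21 min"]), 31)

def run_tests():
    """Run both test cases and return the unittest result object."""
    loader = unittest.defaultTestLoader
    suite = unittest.TestSuite([
        loader.loadTestsFromTestCase(ParseMinutesUnitTest),
        loader.loadTestsFromTestCase(TotalMinutesIntegrationTest),
    ])
    return unittest.TextTestRunner(verbosity=0).run(suite)

result = run_tests()
print(result.wasSuccessful())
```

Validation goes a step further than either test: it asks whether summing travel times is what the user actually needed in the first place.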


Evaluating Problem-Solving Skills

We’ve examined the importance of problem-solving in the work of a software engineer and explored various techniques software engineers employ to approach complex challenges. Now, let’s delve into how hiring teams can identify and evaluate problem-solving skills during the hiring process.

Recognizing Problem-Solving Skills in Candidates

How can you tell if a candidate is a good problem solver? Look for these indicators:

- Previous Experience: A history of dealing with complex, challenging projects is often a good sign. Ask the candidate to discuss a difficult problem they faced in a previous role and how they solved it.

- Problem-Solving Questions: During interviews, pose hypothetical scenarios or present real problems your company has faced. Ask candidates to explain how they would tackle these issues. You’re not just looking for a correct solution but the thought process that led them there.

- Technical Tests: Coding challenges and other technical tests can provide insight into a candidate’s problem-solving abilities. Consider leveraging a platform for assessing these skills in a realistic, job-related context.

Assessing Problem-Solving Skills

Once you’ve identified potential problem solvers, here are a few ways you can assess their skills:

- Solution Effectiveness: Did the candidate solve the problem? How efficient and effective is their solution?

- Approach and Process: Go beyond whether or not they solved the problem and examine how they arrived at their solution. Did they break the problem down into manageable parts? Did they consider different perspectives and possibilities?

- Communication: A good problem solver can explain their thought process clearly. Can the candidate effectively communicate how they arrived at their solution and why they chose it?

- Adaptability: Problem-solving often involves a degree of trial and error. How does the candidate handle roadblocks? Do they adapt their approach based on new information or feedback?

Hiring managers play a crucial role in identifying and fostering problem-solving skills within their teams. By focusing on these abilities during the hiring process, companies can build teams that are more capable, innovative, and resilient.

Key Takeaways

As you can see, problem solving plays a pivotal role in software engineering. Far from being an occasional requirement, it is the lifeblood that drives development forward, catalyzes innovation, and delivers quality software.

By leveraging problem-solving techniques, software engineers employ a powerful suite of strategies to overcome complex challenges. But mastering these techniques isn’t a simple feat. It requires a learning mindset, regular practice, collaboration, reflective thinking, resilience, and a commitment to staying updated with industry trends.

For hiring managers and team leads, recognizing these skills and fostering a culture that values and nurtures problem solving is key. It’s this emphasis on problem solving that can differentiate an average team from a high-performing one and an ordinary product from an industry-leading one.

At the end of the day, software engineering is fundamentally about solving problems — problems that matter to businesses, to users, and to the wider society. And it’s the proficient problem solvers who stand at the forefront of this dynamic field, turning challenges into opportunities, and ideas into reality.

This article was written with the help of AI. Can you tell which parts?


Computer Science

- Quantitative Finance

Take a guided, problem-solving based approach to learning Computer Science. These compilations provide unique perspectives and applications you won't find anywhere else.

Computer Science Fundamentals

What's inside.

- Tools of Computer Science

- Computational Problem Solving

- Algorithmic Thinking

Algorithm Fundamentals

- Building Blocks

- Array Algorithms

- The Speed of Algorithms

- Stable Matching

Programming with Python

- Introduction

- String Manipulation

- Loops, Functions and Arguments

Community Wiki

Browse through thousands of Computer Science wikis written by our community of experts.

Types and Data Structures

- Abstract Data Types

- Array (ADT)

- Double Ended Queues

- Associative Arrays

- Priority Queues

- Array (Data Structure)

- Disjoint-set Data Structure (Union-Find)

- Dynamic Array

- Linked List

- Unrolled Linked List

- Hash Tables

- Bloom Filter

- Cuckoo Filter

- Merkle Tree

- Recursive Backtracking

- Fenwick Tree

- Binary Search Trees

- Red-Black Tree

- Scapegoat Tree

- Binary Heap

- Binomial Heap

- Fibonacci Heap

- Pairing Heap

- Graph implementation and representation

- Adjacency Matrix

- Spanning Trees

- Social Networks

- Kruskal's Algorithm

- Regular Expressions

- Divide and Conquer

- Greedy Algorithms

- Randomized Algorithms

- Complexity Theory

- Big O Notation

- Master Theorem

- Amortized Analysis

- Complexity Classes

- P versus NP

- Dynamic Programming

- Backpack Problem

- Egg Dropping

- Fast Fibonacci Transform

- Karatsuba Algorithm

- Sorting Algorithms

- Insertion Sort

- Bubble Sort

- Counting Sort

- Median-finding Algorithm

- Binary Search

- Depth-First Search (DFS)

- Breadth-First Search (BFS)

- Shortest Path Algorithms

- Dijkstra's Shortest Path Algorithm

- Bellman-Ford Algorithm

- Floyd-Warshall Algorithm

- Johnson's Algorithm

- Matching (Graph Theory)

- Matching Algorithms (Graph Theory)

- Flow Network

- Max-flow Min-cut Algorithm

- Ford-Fulkerson Algorithm

- Edmonds-Karp Algorithm

- Shunting Yard Algorithm

- Rabin-Karp Algorithm

- Knuth-Morris-Pratt Algorithm

- Basic Shapes, Polygons, Trigonometry

- Convex Hull

- Finite State Machines

- Turing Machines

- Halting Problem

- Kolmogorov Complexity

- Traveling Salesperson Problem

- Pushdown Automata

- Regular Languages

- Context Free Grammars

- Context Free Languages

- Signals and Systems

- Linear Time Invariant Systems

- Predicting System Behavior

Programming Languages

- Subroutines

- List comprehension

- Primality Testing

- Pattern matching

- Logic Gates

- Control Flow Statements

- Object-Oriented Programming

- Classes (OOP)

- Methods (OOP)

Cryptography and Simulations

- Caesar Cipher

- Vigenère Cipher

- RSA Encryption

- Enigma Machine

- Diffie-Hellman

- Knapsack Cryptosystem

- Secure Hash Algorithms

- Entropy (Information Theory)

- Huffman Code

- Error correcting codes

- Symmetric Ciphers

- Inverse Transform Sampling

- Monte-Carlo Simulation

- Genetic Algorithms

- Programming Blackjack

- Machine Learning

- Supervised Learning

- Unsupervised Learning

- Feature Vector

- Naive Bayes Classifier

- K-nearest Neighbors

- Support Vector Machines

- Principal Component Analysis

- Ridge Regression

- k-Means Clustering

- Markov Chains

- Hidden Markov Models

- Gaussian Mixture Model

- Collaborative Filtering

- Artificial Neural Network

- Feedforward Neural Networks

- Backpropagation

- Recurrent Neural Network


COMPUTER-BASED PROBLEM SOLVING PROCESS


One side effect of the great leaps made in computing over the last few decades is an overabundance of software tools created to solve diverse problems. Problem solving with computers has, in consequence, become more demanding; instead of focusing on the problem when conceptualizing strategies to solve it, users are sidetracked by the pursuit of ever more programming tools (as available).

Computer-Based Problem Solving Process is a work intended to offer a systematic treatment of the theory and practice of designing, implementing, and using software tools during the problem solving process. This method is obtained by enabling computer systems to be more intuitive with human logic rather than machine logic. Instead of software dedicated to computer experts, the author advocates an approach dedicated to computer users in general. This approach does not require users to have an advanced computer education, though it does advocate a deeper education of the computer user in his or her problem domain logic.

This book is intended for system software teachers, designers and implementers of various aspects of system software, as well as readers who have made computers a part of their day-to-day problem solving.

- ISBN-10 9814663735

- ISBN-13 978-9814663731

- Publisher World Scientific Pub Co Inc

- Publication date March 20, 2015

- Language English

- Dimensions 6.25 x 1 x 9.25 inches

- Print length 344 pages




ORIGINAL RESEARCH article

Analysis of Process Data of PISA 2012 Computer-Based Problem Solving: Application of the Modified Multilevel Mixture IRT Model

- 1 Faculty of Psychology, Beijing Normal University, Beijing, China

- 2 Beijing Key Laboratory of Applied Experimental Psychology, Faculty of Psychology, National Demonstration Center for Experimental Psychology Education, Beijing Normal University, Beijing, China

- 3 Collaborative Innovation Center of Assessment Toward Basic Education Quality, Beijing Normal University, Beijing, China

- 4 Educational Supervision and Quality Assessment Research Center, Beijing Academy of Educational Sciences, Beijing, China

Computer-based assessments provide new insights into cognitive processes related to task completion that cannot be easily observed using paper-based instruments. In particular, such new insights may be revealed by time-stamped actions, which are recorded as computer log files in the assessments. These actions, nested within individuals, are logically interconnected. This interdependency can be modeled straightforwardly in a multilevel framework. This study draws on process data recorded in one of the complex problem-solving tasks (Traffic CP007Q02) in the Program for International Student Assessment (PISA) 2012 and proposes a modified Multilevel Mixture IRT model (MMixIRT) to explore the problem-solving strategies. It was found that the model can not only explore whether the latent classes differ in their response strategies at the process level, but also provide ability estimates at both the process level and the student level. The abilities at the two levels differ across latent classes, and they are related to operational variables such as the number of resets or clicks. The proposed method may allow for better exploration of students' specific strategies for solving a problem, and of the strengths and weaknesses of those strategies. Such findings may be further used to design targeted instructional interventions.

Introduction

Problem-solving competence is defined as the capacity to engage in cognitive processing to understand and resolve problem situations where a solution is not immediately obvious. It includes the willingness to engage in these situations in order to achieve one's potential as a constructive and reflective citizen (OECD, 2014; Kurniati and Annizar, 2017). Problem solving can be conceptualized as a sequential process where the problem solver must understand the problem, devise a plan, carry out the plan, and monitor the progress in relation to the goal (Garofalo and Lester, 1985; OECD, 2013). These problem-solving skills are key to success in all pursuits, and they can be developed in school through curricular subjects. Therefore, it is no surprise that problem-solving competency is increasingly becoming the focus of many testing programs worldwide.

Advances in technology have expanded opportunities for educational measurement. Computer-based assessments, such as simulation-, scenario-, and game-based assessments, constantly change item design, item delivery, and data collection (DiCerbo and Behrens, 2012; Mislevy et al., 2014). These assessments usually provide an interactive environment in which students can solve a problem by choosing among a set of available actions and taking one or more steps to complete a task. All student actions are automatically recorded in system logs as coded and time-stamped strings (Kerr et al., 2011). These strings, called process data, can be used for instant feedback to students, or for diagnostic and scoring purposes at a later time (DiCerbo and Behrens, 2012). For example, the problem solving assessment of PISA 2012, which is computer-based, used simulated real-life problem situations, such as a malfunctioning electronic device, to analyze students' reasoning skills, problem-solving ability, and problem-solving strategies. The computer-based assessment of problem solving not only ascertains whether students produce correct responses to the items, but also records a large amount of process data on how they answer these items. These data make it possible to understand students' strategies for reaching the solution. To evaluate students' higher-order thinking, more and more large-scale assessments of problem solving have become computer-based.
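To make the idea of process data concrete, the sketch below summarizes a time-stamped action log into simple operational variables such as the number of clicks and resets. The `timestamp, action` line format and the action labels are assumptions for illustration only; they are not the actual PISA log format.

```python
from collections import Counter

def summarize_log(lines):
    """Count actions and measure elapsed time in a 'timestamp, action' log."""
    events = []
    for line in lines:
        timestamp, action = line.split(",", 1)
        events.append((float(timestamp), action.strip()))
    events.sort(key=lambda event: event[0])  # order actions by time
    counts = Counter(action for _, action in events)
    duration = events[-1][0] - events[0][0] if events else 0.0
    return {"clicks": counts["click"], "resets": counts["reset"], "duration": duration}

# Hypothetical log for one student on one task.
log = ["0.0, click", "2.5, click", "4.0, reset", "9.5, click"]
print(summarize_log(log))  # {'clicks': 3, 'resets': 1, 'duration': 9.5}
```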

Recent research has focused on characterizing and scoring process data and using them to measure individual students' abilities. Characterizing process data can be conducted via a variety of approaches, including visualization, clustering, and classification (Romero and Ventura, 2010). DiCerbo et al. (2011) used digraphs to visualize and analyze sequential process data from assessments. Bergner et al. (2014) used cluster analysis to classify groups with similar behavior. Some other researchers used decision trees, neural networks, and Bayesian belief networks (BBNs) (Romero et al., 2008; Desmarais and Baker, 2012; Zhu et al., 2016) to classify the performance of problem solvers (Zoanetti, 2010) and to predict their success (Romero et al., 2013). Compared to characterizing process data, research on scoring process data is very limited. Hao et al. (2015) introduced “the editing distance” to score students' behavior sequences based on the process data in a scenario-based task of the National Assessment of Educational Progress (NAEP). Meanwhile, these process data have been used in psychometric studies. Researchers analyzed students' sequential response process data to estimate their ability by combining a Markov model and item response theory (IRT) (Shu et al., 2017). It is noteworthy that all these practices have examined process data that describe students' sequential actions to solve a problem.
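The general idea of comparing action sequences by edit distance can be sketched with the standard Levenshtein distance, which counts the insertions, deletions, and substitutions needed to turn one sequence into another. This is only an illustration of the concept; the exact scoring rules used by Hao et al. (2015) are not described here, and the action labels are hypothetical.

```python
def edit_distance(a, b):
    """Levenshtein distance between two sequences (strings or lists)."""
    prev = list(range(len(b) + 1))  # distances from an empty prefix of a
    for i, x in enumerate(a, start=1):
        curr = [i]
        for j, y in enumerate(b, start=1):
            cost = 0 if x == y else 1
            curr.append(min(prev[j] + 1,          # delete x
                            curr[j - 1] + 1,      # insert y
                            prev[j - 1] + cost))  # match or substitute
        prev = curr
    return prev[-1]

# Hypothetical action sequences: a reference solution and a student's attempt.
reference = ["select_A", "select_B", "select_C"]
student = ["select_A", "reset", "select_B", "select_C"]
print(edit_distance(student, reference))  # 1
```

A smaller distance means the student's sequence is closer to the reference sequence.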

All the actions, recorded as process-level data and nested within individuals, are logically interconnected. This interdependency allows for straightforward modeling in a multilevel framework (Goldstein, 1987; Raudenbush and Bryk, 2002; Hox, 2010). This framework is similar to those used in longitudinal studies, yet with some differences. In longitudinal studies, measurements are typically consistent in order to show the development pattern of certain traits. For process data, however, actions are typically different within each individual. These successive actions are used to characterize individuals' problem solving strategies.

It is common in computer-based assessments that a nested data structure exists. To appropriately analyze process data (e.g., time series actions) within a nested structure (e.g., process within individuals), the multi-level IRT model can be modified by allowing process data to be a function of the latent traits at both process and individual levels. It is noteworthy that in the modified model, the concept of “item” in IRT changed to each action in individuals' responses, which was scored based on certain rules.

With respect to the assessment of problem solving competency, the focus of this study is the ability estimate at the student level. We were not concerned with an individual's ability as reflected in each action at the process level, since the task needs to be completed by taking a series of actions. Even for individuals with high problem solving ability, the first few actions may not accurately reflect the test taker's ability. As a result, more attention was paid to the development of ability at the process level because it can reveal students' problem solving strategies. Mixture item response theory (MixIRT) models have been used in describing important effects in assessment, including the differential use of response strategies (Mislevy and Verhelst, 1990; Rost, 1990; Bolt et al., 2001). The value of MixIRT models lies in that they provide a way of detecting different latent groups which are formed by the dimensionality arising directly from the process data. These groups are substantively useful because they reflect how and why students responded the way they did.

In this study, we incorporated the multilevel structure into a mixture IRT model and used the modified multilevel mixture IRT (MMixIRT) model to detect and compare the latent groups in the data that have differential problem solving strategies. The advantage of this approach is the usage of latent groups. Although they are not immediately observable, these latent groups, which are defined by certain shared response patterns, can help explain process-level performance about how members of one latent group differ from another. The approach proposed in this study was used to estimate abilities both at process and student levels, and classify students into different latent groups according to their response strategies.

The goal of this study is to illustrate the steps involved in applying the modified MMixIRT model in a computer-based problem solving assessment, and then to present and interpret the results. Specifically, this article focuses on (a) describing and demonstrating the modified MMixIRT model using process data from a PISA 2012 problem-solving task; (b) interpreting the different action patterns; and (c) analyzing the correlation between characteristics of different strategies and task performance, as well as some other operational variables such as the number of resets or clicks. All of the following analyses were based on one sample data set.

Measurement Material and Dataset

Problem solving item and log data file.

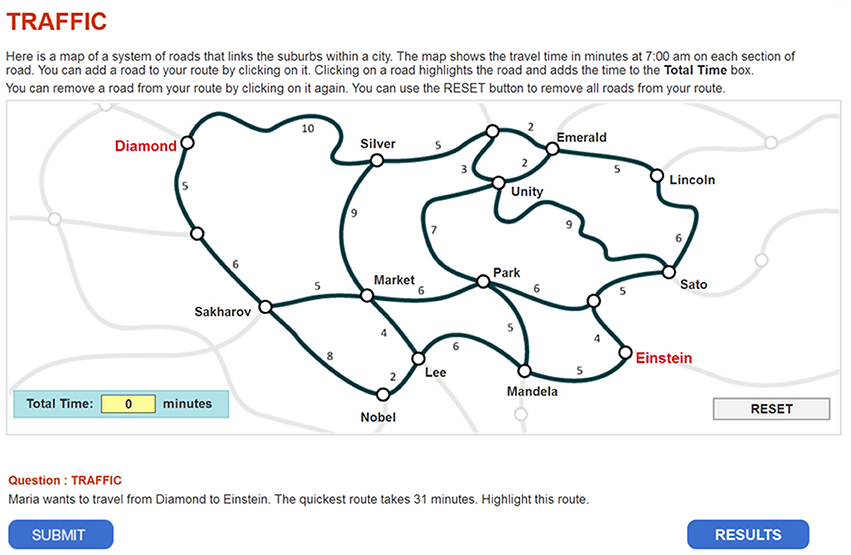

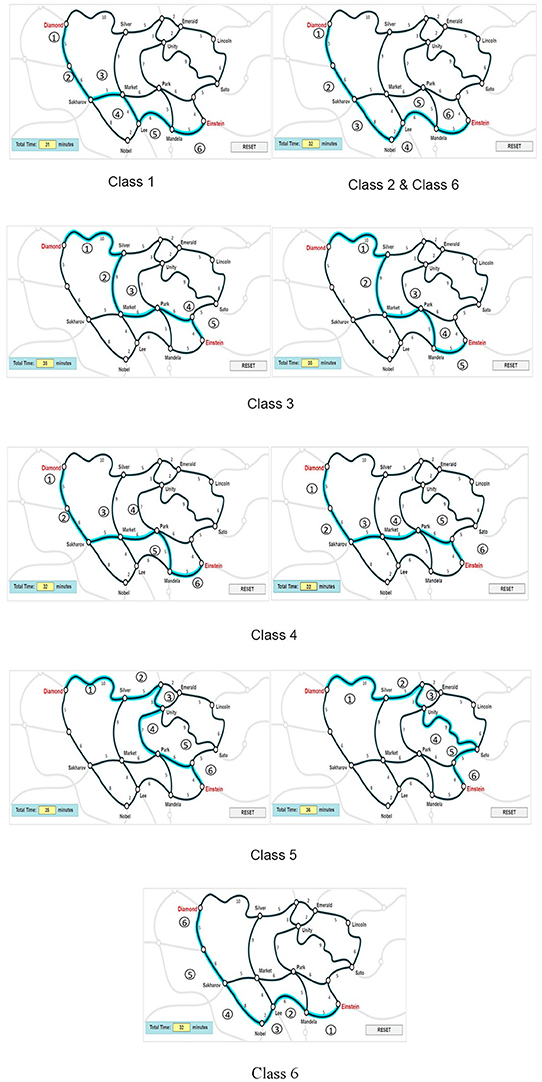

This study illustrates the use of the modified MMixIRT model in analyzing process data through one of the problem-solving tasks in PISA 2012 (Traffic CP007Q02). The task is shown in Figure 1. In this task, students were given a map and the travel time on each route, and then they were asked to find the quickest route from Diamond to Einstein, which takes 31 min.

Figure 1. Traffic.

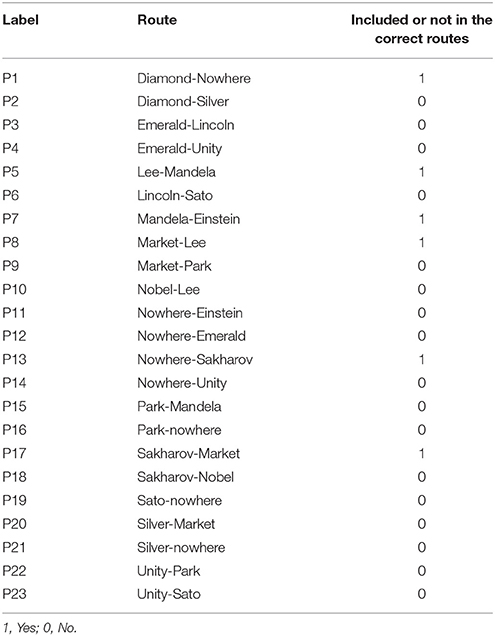

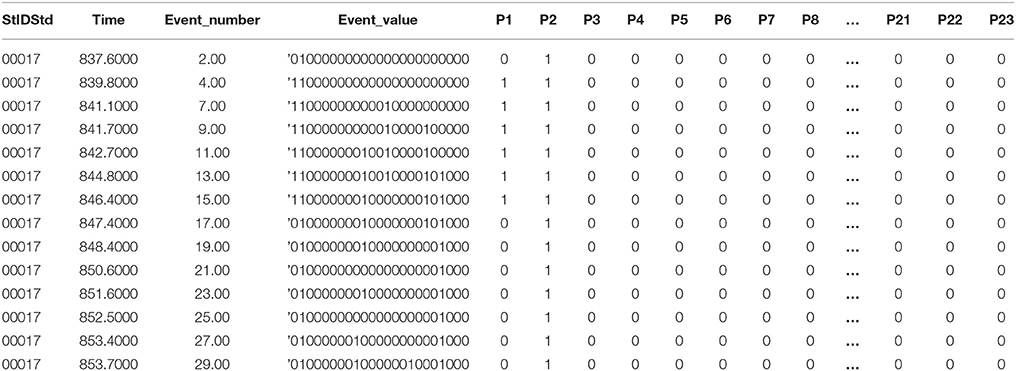

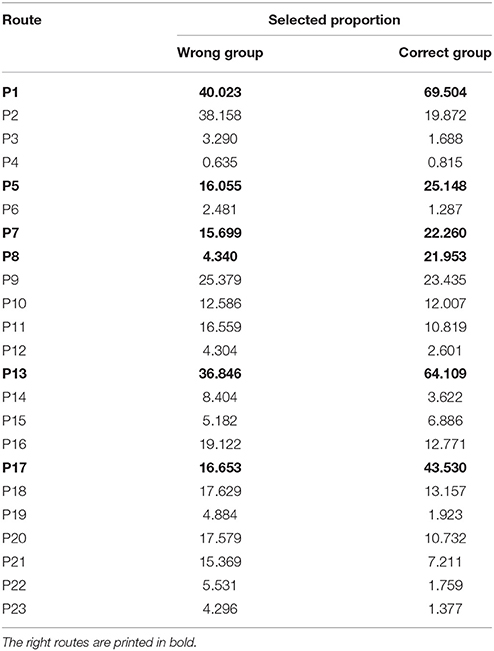

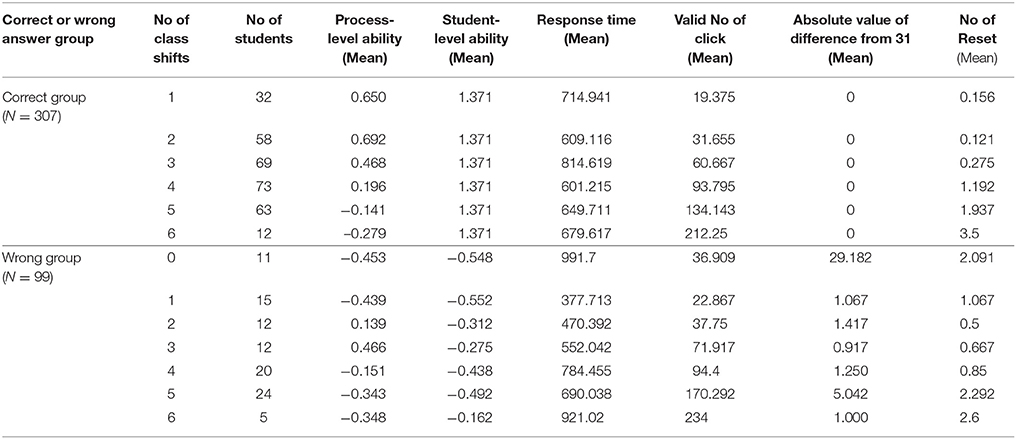

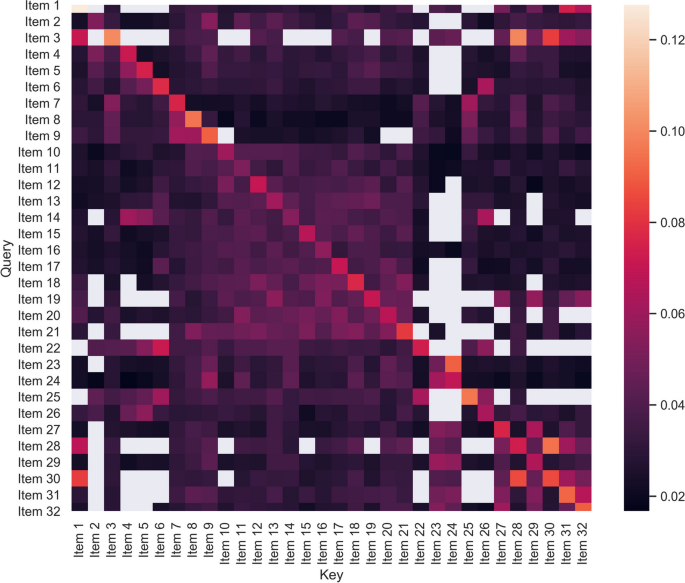

The data are from the task's log file (CBA_cp007q02_logs12_SPSS.SAV; data source: http://www.oecd.org/pisa/data/); an example of the log data file is shown in Appendix 1. The data file contains four variables associated with the process. The “event” variable refers to the type of event, which may be either system generated (start item, end item) or student generated (e.g., ACER_EVENT, Click, Dblclick). The “time” variable is the event time for this item, given in seconds since the beginning of the assessment, with all click and double-click events included. The “event_value” variable is recorded in two rows, because a click event involves selecting or de-selecting a route on the map. For example, the eleventh row gives the state of the entire map, where 1 in the sequence means that the route was selected and 0 means that it was not; the twelfth row records the highlighting or un-highlighting of a route on the map for the same click event, in the form “hit_segment name” (the notes on the log file data can be downloaded from http://www.oecd.org/pisa/data/). All “click” and “double-click” events indicate that a student performed a click action that was not related to selecting a route. Table 1 shows the label, the route, and the correct state of all the selected routes.

Table 1. The routes of the map.

The study sample was drawn from the PISA 2012 released dataset and consisted of a total of 413 students from 157 American schools who participated in the traffic problem-solving assessment (47.2% female). The average age of the students was 15.80 years (SD = 0.29 years), ranging from 15.33 to 16.33 years.

For the traffic item response, the total effective sample size under analysis was 406, after excluding seven incomplete responses. For the log file of the process record, there were 15,897 records in the final data file, and the average record number for each student was 39 ( SD = 33), ranging from 1 to 183. The average response time was 672.64 s ( SD = 518.85 s), ranging from 58.30 to 1995.20 s.

The Modified MMixIRT Model for Process Data

Process-level data coding.

In this task log file, “ACER_EVENT” is associated with “click.” However, in this study we only collected the information of ACER_EVENT and deleted the redundant click data. Then, we split and rearranged the data by routes, so that each row represents a step in the process of an individual student and each column represents a route (0 for de-selecting, and 1 for selecting). Table 2 shows part of the reorganized data file, indicating how an individual student selected each route in each step. For example, the first line shows that student 00017 selected P2 in his/her first step.

Table 2. Example of the reorganized data file.
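The reorganization described above can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the record layout `(student_id, event, event_value)`, the helper `state`, and the encoding of the map state as a 23-character string of 0s and 1s are hypothetical, and the actual PISA log format may differ.

```python
from collections import defaultdict

N_ROUTES = 23  # number of routes on the map, as stated in the text


def state(selected):
    """Hypothetical helper: build a 0/1 map-state string from selected route numbers."""
    return "".join("1" if i in selected else "0" for i in range(1, N_ROUTES + 1))


# Hypothetical log records: (student_id, event, event_value).
log = [
    ("00017", "START_ITEM", ""),
    ("00017", "ACER_EVENT", state({2})),      # first step: P2 selected
    ("00017", "click", "paragraph01"),        # redundant click row, dropped below
    ("00017", "ACER_EVENT", state({2, 5})),   # second step: P5 added
    ("00017", "END_ITEM", ""),
]


def reshape_log(records):
    """Return one row per map-state event: student, step number, P1..P23."""
    step_counter = defaultdict(int)
    rows = []
    for student, event, value in records:
        # Keep only ACER_EVENT rows that carry a full 0/1 map state.
        if event != "ACER_EVENT" or len(value) != N_ROUTES or set(value) - {"0", "1"}:
            continue
        step_counter[student] += 1
        row = {"student": student, "step": step_counter[student]}
        for i, ch in enumerate(value, start=1):
            row[f"P{i}"] = int(ch)
        rows.append(row)
    return rows


rows = reshape_log(log)
```

Each element of `rows` corresponds to one line of the reorganized file: one step of one student, with one 0/1 column per route.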

Process data were first recoded for analysis. Twenty-three variables were created to represent the total number of available routes that could be selected (analogous to 23 items). The correct solution to this problem is to select the following six routes: Diamond–Nowhere–Sakharov–Market–Lee–Mandela–Einstein (i.e., P1, P5, P7, P8, P13, and P17). For the correct routes, the scored response was 1 if the route was selected, and 0 otherwise; for the incorrect routes, the scored response was 0 if the route was selected, and 1 otherwise. Each row in the data file represents an effective step (or action) a student took during the process. In each step, the response for every route was recoded according to whether or not the route was selected. When a student finished the item, all the steps during the process had been recorded. Therefore, for the completed data set, the responses on the 23 variables were obtained and the steps were nested within students.
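The scoring rule above can be written out as a short function. This is a minimal sketch: the function name `score_step` and the representation of a step as a list of 23 selection indicators are assumptions made for illustration; the set of correct routes is taken from the text.

```python
N_ROUTES = 23
CORRECT_ROUTES = {1, 5, 7, 8, 13, 17}  # P1, P5, P7, P8, P13, P17 (from the text)


def score_step(selected):
    """Score one step.

    selected: list of 23 ints, where selected[i - 1] == 1 means route Pi
    is selected at this step (hypothetical representation).
    Returns a list of 23 scored responses (1 = consistent with the solution).
    """
    if len(selected) != N_ROUTES:
        raise ValueError("expected one selection indicator per route")
    scored = []
    for i, s in enumerate(selected, start=1):
        if i in CORRECT_ROUTES:
            scored.append(1 if s == 1 else 0)   # correct route: reward selection
        else:
            scored.append(0 if s == 1 else 1)   # incorrect route: reward non-selection
    return scored


# A step in which only P2 (an incorrect route) is selected.
only_p2 = [1 if i == 2 else 0 for i in range(1, N_ROUTES + 1)]
# The state in which exactly the six correct routes are selected.
solution = [1 if i in CORRECT_ROUTES else 0 for i in range(1, N_ROUTES + 1)]

scored_p2 = score_step(only_p2)
scored_solution = score_step(solution)
```

Under this rule the fully correct map state scores 1 on all 23 variables, while an empty map already scores 1 on the 17 incorrect routes, so a high raw sum at an early step does not by itself indicate progress.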

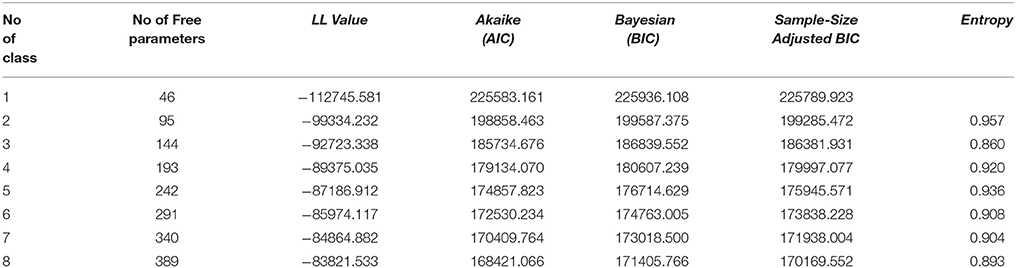

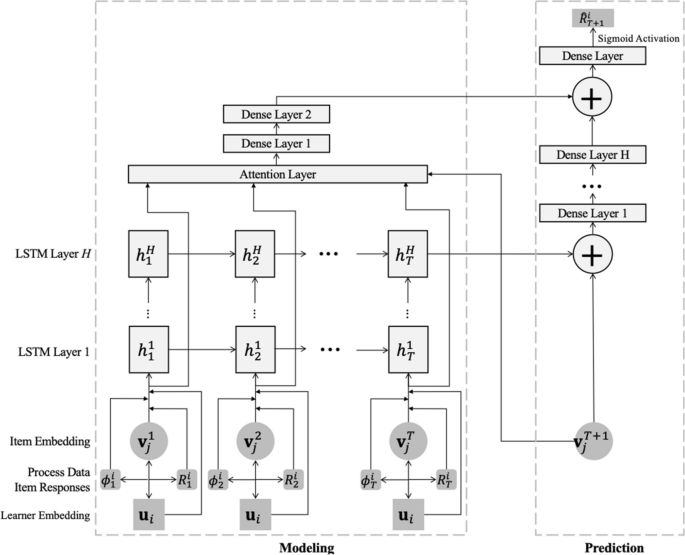

The Modified MMixIRT Model Specification

The MMixIRT model has mixtures of latent classes at the process level or at both the process and student levels. It assumes that heterogeneity may exist in response patterns at the process level and should therefore not be ignored (Mislevy and Verhelst, 1990; Rost, 1990). Latent classes can capture the interactions among the responses at the process level (Vermunt, 2003). It is interesting to note that if no process-level latent classes exist, there are no student-level latent classes either. The reason is that student-level units are clustered based on the likelihood of the processes belonging to one of the latent classes. For this reason, the main focus in this study is to explore how to classify the process-level data, and the modified MMixIRT model only focuses on latent classes at the process level.

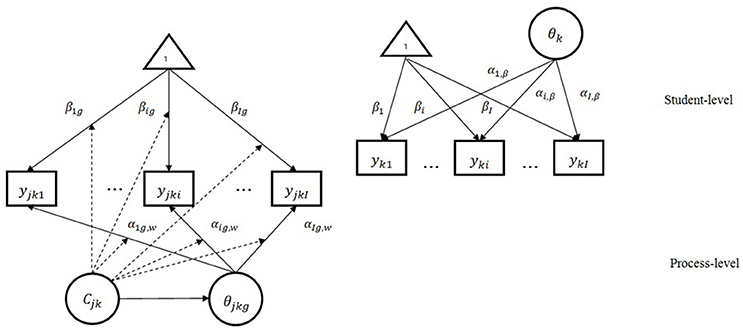

The MMixIRT model accounts for the heterogeneity by incorporating categorical or continuous latent variables at different levels. Because mixture models have categorical latent variables and item response models have continuous latent variables, latent variables at each level may be categorical or continuous. In this study, the modified MMixIRT includes both categorical (latent class estimates) and continuous latent variables at the process level and only continuous (ability estimates) latent variables at the student level.

The modified MMixIRT model for process-level data is specified as follows:

Process-Level

Student-Level

For the process level, in Equation (1), $i$ indexes the routes ($i = 1, \ldots, I$), $k$ indexes the students ($k = 1, \ldots, K$), $j$ indexes the valid steps of a student during the response process ($j = 1, \ldots, J_k$, where $J_k$ is the total number of steps of the $k$th student), and $g$ indexes the latent classes ($C_{jk} = 1, \ldots, g, \ldots, G$, where $G$ is the number of latent classes). $C_{jk}$ is a categorical latent variable at the process level for the $j$th valid step of student $k$, which captures the heterogeneity of the selections of routes in each step. $P(y_{jki} = 1 \mid \theta_{jkg}, C_{jk} = g)$ is the probability of selecting route $i$ in the $j$th step of student $k$, which is predicted by the two-parameter logistic (2PL) model. $\alpha_{ig,W}$ is the process-level discrimination parameter in class $g$ (the subscript $W$ denotes the within level), $\beta_{ig}$ is the location parameter in class $g$, and $\theta_{jkg}$ is the latent ability of examinee $k$ at a specific step $j$ during the process of selecting the route, which is called the process ability in this study ($\theta_{jkg} \sim N(\mu_{jkg}, \sigma_{jkg}^{2})$). The process abilities across different latent classes are constrained to follow a normal distribution ($\theta_{jk} \sim N(0, 1)$). In Equation (2), $P(y_{jk1} = \omega_1, y_{jk2} = \omega_2, \cdots, y_{jkI} = \omega_I)$ is the joint probability of the actions in the $j$th step of student $k$, where $\omega_i$ denotes the scored response for the $i$th route. For the correct routes, 1 represents that the route was selected, and 0 otherwise; for the incorrect routes, 0 represents that the route was selected, and 1 otherwise. $\gamma_{jkg}$ is the proportion of the $j$th step in each latent class, with $\sum_{g=1}^{G} \gamma_{jkg} = 1$. As can be seen from Equation (2), the actions ($y_{jki}$) are assumed to be independent of each other given class membership, which is known as the local independence assumption for mixture models.

For the student level, in Equation (3), $\alpha_{i,B}$ is the item discrimination parameter, where $B$ denotes the between level. $\beta_i$ is the item location parameter, which is correlated with the responses of the final step of the item. $\theta_k$ is the ability estimate at the student level based on the final step of the process, which also represents the problem-solving ability of student $k$ in this study ($\theta_k \sim N(0, 1)$).
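The displayed equations are not reproduced in this copy of the text. As a reading aid, the following sketch gives forms that are consistent with the symbol definitions above. The exact parameterization is an assumption: the slope-location form $\alpha(\theta - \beta)$ is used here, and the authors' Equations (1) to (3) may instead use a slope-intercept form or differ in other details.

```latex
% Process level (within): class-specific 2PL and mixture over latent classes
P(y_{jki} = 1 \mid \theta_{jkg}, C_{jk} = g)
  = \frac{1}{1 + \exp\left[-\alpha_{ig,W}\,(\theta_{jkg} - \beta_{ig})\right]}
  \tag{1}

P(y_{jk1} = \omega_1, \ldots, y_{jkI} = \omega_I)
  = \sum_{g=1}^{G} \gamma_{jkg} \prod_{i=1}^{I}
    P(y_{jki} = \omega_i \mid \theta_{jkg}, C_{jk} = g)
  \tag{2}

% Student level (between): 2PL on the final-step responses
P(y_{ki} = 1 \mid \theta_{k})
  = \frac{1}{1 + \exp\left[-\alpha_{i,B}\,(\theta_{k} - \beta_{i})\right]}
  \tag{3}
```

In (2), the product over routes expresses the local independence assumption, with $P(y_{jki} = 0 \mid \cdot) = 1 - P(y_{jki} = 1 \mid \cdot)$.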

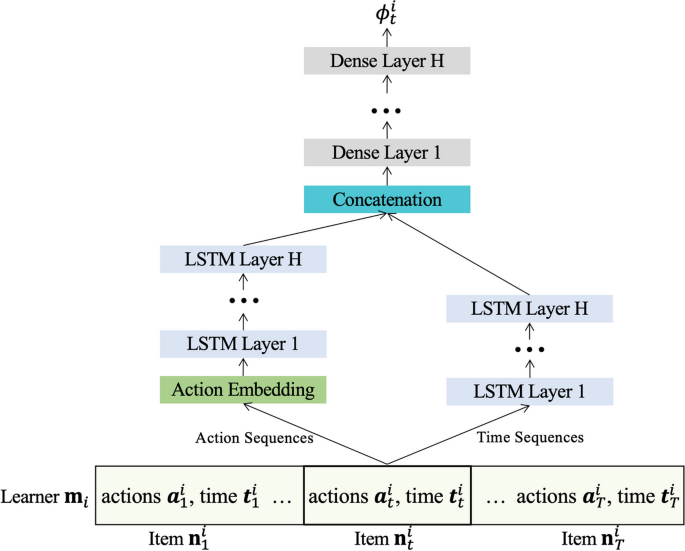

Figure 2 demonstrates a modified two-level mixture item response model with within-level latent classes. The squares in the figure represent item responses, the ellipses represent latent variables, and the 1 inside the triangle represents a vector of 1s. As shown in the figure, the response for each route at the $j$th step, $[y_{jk1}, \ldots, y_{jki}, \ldots, y_{jkI}]$, is explained by both categorical and continuous latent variables ($C_{jk}$ and $\theta_{jkg}$, respectively) at the process level, and the final response of students for each route, $[y_{k1}, \ldots, y_{ki}, \ldots, y_{kI}]$, is explained by a continuous latent variable ($\theta_k$) at the student level. The arrows from the continuous latent variables to the items (routes) represent item (route) discrimination parameters ($\alpha_{ig,W}$ at the process level and $\alpha_{i,B}$ at the student level), and the arrows from the triangle to the item responses represent item location parameters at both levels. The dotted arrows from the categorical latent variable to the other arrows indicate that all item parameters are class-specific.

Figure 2. The modified MMixIRT model for process data.
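To make the process-level part of the model concrete, the sketch below evaluates a class-specific 2PL probability, the mixture joint probability of one step, and the posterior class probabilities obtained by Bayes' rule. It is not the authors' estimation procedure (which uses Mplus). The slope-location form of the 2PL, the function names, and all parameter values are assumptions chosen for illustration, and the abilities are treated as known rather than estimated.

```python
import math


def p_2pl(theta, alpha, beta):
    """2PL probability of a scored response of 1 (slope-location form, assumed)."""
    return 1.0 / (1.0 + math.exp(-alpha * (theta - beta)))


def class_likelihoods(omega, thetas, alphas, betas):
    """Likelihood of the scored pattern omega within each latent class.

    omega: scored responses (0/1), one per route
    thetas[g]: process ability in class g
    alphas[g][i], betas[g][i]: class-specific parameters of route i
    """
    likelihoods = []
    for g in range(len(thetas)):
        lik = 1.0
        for i, w in enumerate(omega):
            p = p_2pl(thetas[g], alphas[g][i], betas[g][i])
            lik *= p if w == 1 else 1.0 - p  # local independence within class
        likelihoods.append(lik)
    return likelihoods


def joint_step_probability(omega, thetas, alphas, betas, gammas):
    """Mixture probability of one step: sum over classes of gamma_g * L_g."""
    liks = class_likelihoods(omega, thetas, alphas, betas)
    return sum(gamma * lik for gamma, lik in zip(gammas, liks))


def class_posterior(omega, thetas, alphas, betas, gammas):
    """Posterior probability of each latent class for one step (Bayes' rule)."""
    liks = class_likelihoods(omega, thetas, alphas, betas)
    weighted = [gamma * lik for gamma, lik in zip(gammas, liks)]
    total = sum(weighted)
    return [w / total for w in weighted]


# Toy example with 3 routes and 2 latent classes (all values are made up).
omega = [1, 0, 1]
thetas = [0.5, -0.5]
alphas = [[1.0, 1.2, 0.8], [1.5, 0.7, 1.0]]
betas = [[0.0, 0.3, -0.2], [0.1, -0.4, 0.5]]
gammas = [0.6, 0.4]

prob = joint_step_probability(omega, thetas, alphas, betas, gammas)
posterior = class_posterior(omega, thetas, alphas, betas, gammas)
```

Assigning a step to the class with the largest posterior probability is one common way to classify steps in a mixture model.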

It should be noted that the modified MMixIRT model differs from the traditional two-level mixture item response model in the definition of the latent variables at the between level. In the standard MMixIRT model, the between-level latent variables are generally measured by the within-level response variables $[y_{jk1}, \ldots, y_{jki}, \ldots, y_{jkI}]$ (Lee et al., 2017). In this study, the process-level data mainly reflect the strategies for problem solving, while the responses at the last step represent students' final answers on this task. Therefore, students' final responses are used to estimate their problem-solving abilities (the latent variable at the between level, i.e., ability at the student level) in the modified MMixIRT model.
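The separation between process-level and student-level responses can be illustrated with a short sketch that picks each student's last recorded step as the final response. The tuple layout `(student_id, step_number, scored_vector)` and the function name `final_responses` are hypothetical, and the vectors are shortened to three routes for readability.

```python
def final_responses(step_rows):
    """Return {student_id: scored vector of that student's last step}.

    step_rows: iterable of (student_id, step_number, scored_vector) tuples,
    in any order (hypothetical layout).
    """
    last = {}
    for student, step, scored in step_rows:
        # Keep the row with the highest step number seen so far per student.
        if student not in last or step > last[student][0]:
            last[student] = (step, scored)
    return {student: scored for student, (_, scored) in last.items()}


# Toy data: two students, shortened scored vectors (made-up values).
steps = [
    ("00017", 1, [0, 1, 1]),
    ("00017", 2, [1, 1, 1]),
    ("00023", 1, [0, 0, 1]),
]

finals = final_responses(steps)
```

In this arrangement, all rows in `steps` would feed the within-level (process) part of the model, while `finals` would feed the between-level (student) part.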

Mplus software (Muthén and Muthén, 1998-2015) was used to estimate the parameters of the modified MMixIRT model, as specified above. The detailed syntax is presented in Appendix 5.

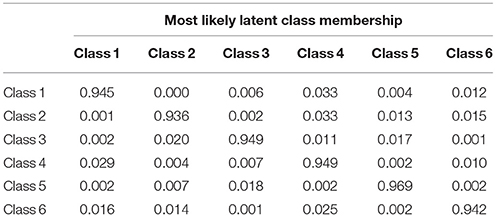

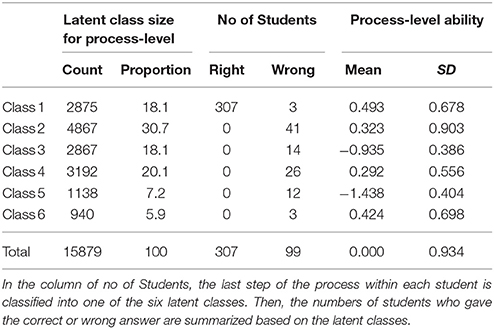

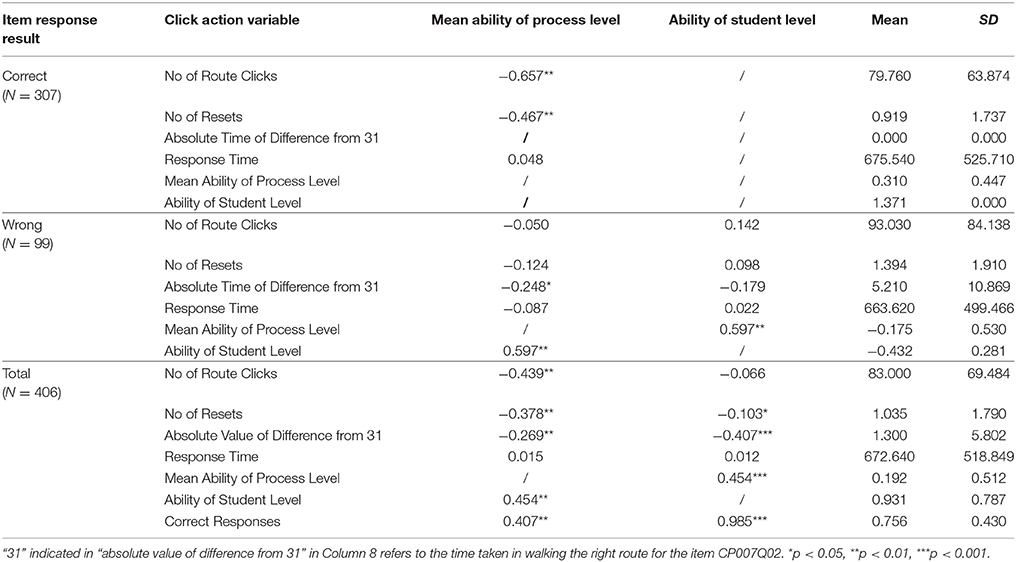

Results of Descriptive Statistics