Nonparametric Tests

Lisa Sullivan, PhD

Professor of Biostatistics

Boston University School of Public Health

Introduction

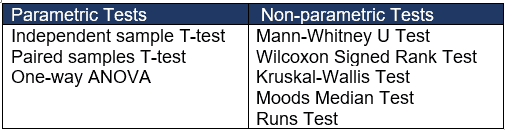

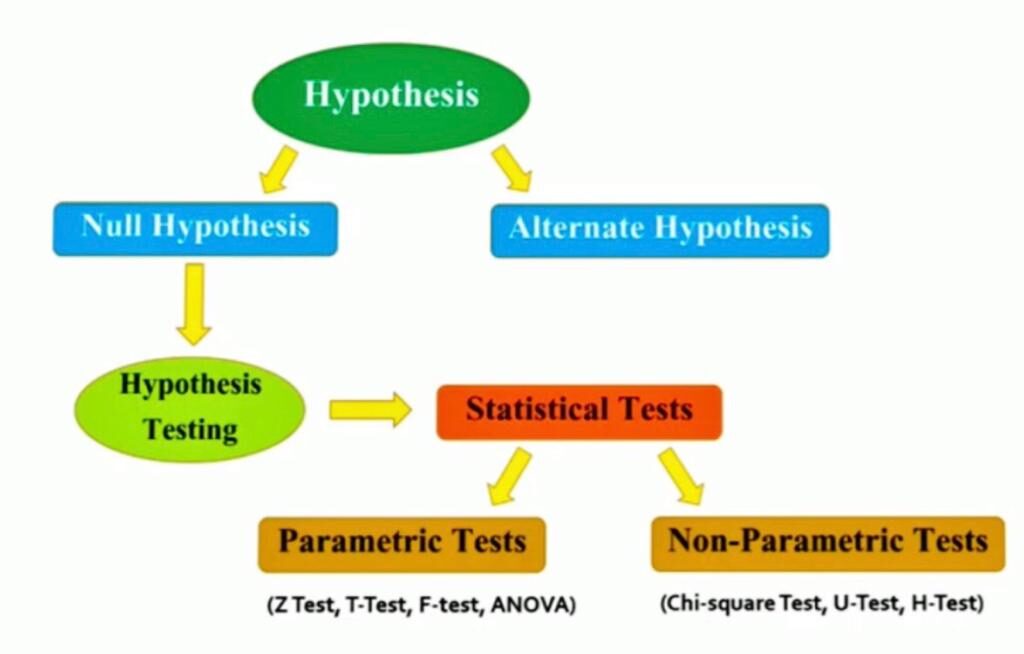

The three modules on hypothesis testing presented a number of tests of hypothesis for continuous, dichotomous and discrete outcomes. Tests for continuous outcomes focused on comparing means, while tests for dichotomous and discrete outcomes focused on comparing proportions. All of the tests presented in the modules on hypothesis testing are called parametric tests and are based on certain assumptions. For example, when running tests of hypothesis for means of continuous outcomes, all parametric tests assume that the outcome is approximately normally distributed in the population. This does not mean that the data in the observed sample follows a normal distribution, but rather that the outcome follows a normal distribution in the full population which is not observed. For many outcomes, investigators are comfortable with the normality assumption (i.e., most of the observations are in the center of the distribution while fewer are at either extreme). It also turns out that many statistical tests are robust, which means that they maintain their statistical properties even when assumptions are not entirely met. Tests are robust in the presence of violations of the normality assumption when the sample size is large based on the Central Limit Theorem (see page 11 in the module on Probability). When the sample size is small and the distribution of the outcome is not known and cannot be assumed to be approximately normally distributed, then alternative tests called nonparametric tests are appropriate.

Learning Objectives

After completing this module, the student will be able to:

- Compare and contrast parametric and nonparametric tests

- Identify multiple applications where nonparametric approaches are appropriate

- Perform and interpret the Mann Whitney U Test

- Perform and interpret the Sign test and Wilcoxon Signed Rank Test

- Compare and contrast the Sign test and Wilcoxon Signed Rank Test

- Perform and interpret the Kruskal Wallis test

- Identify the appropriate nonparametric hypothesis testing procedure based on type of outcome variable and number of samples

When to Use a Nonparametric Test

Nonparametric tests are sometimes called distribution-free tests because they are based on fewer assumptions (e.g., they do not assume that the outcome is approximately normally distributed). Parametric tests involve specific probability distributions (e.g., the normal distribution) and the tests involve estimation of the key parameters of that distribution (e.g., the mean or difference in means) from the sample data. The cost of fewer assumptions is that nonparametric tests are generally less powerful than their parametric counterparts (i.e., when the alternative is true, they may be less likely to reject H0).

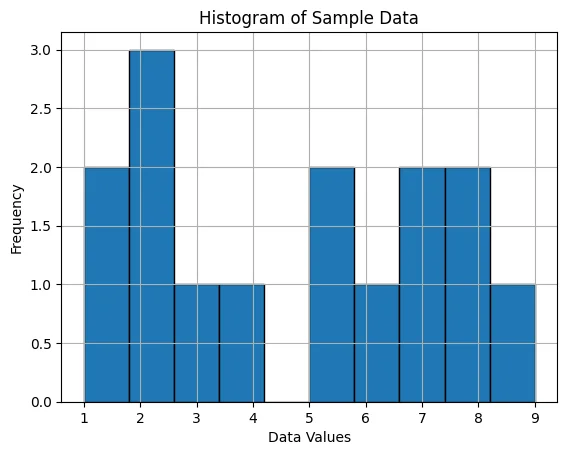

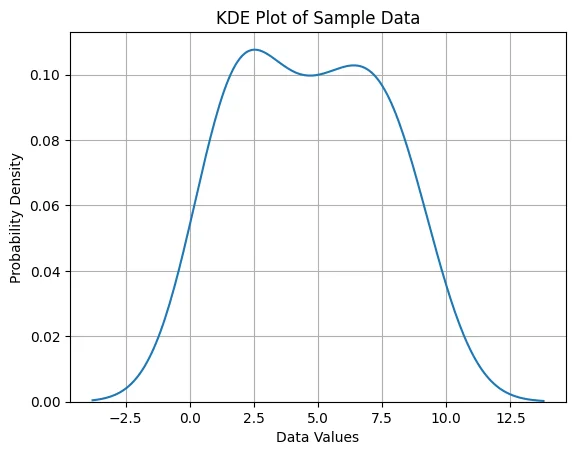

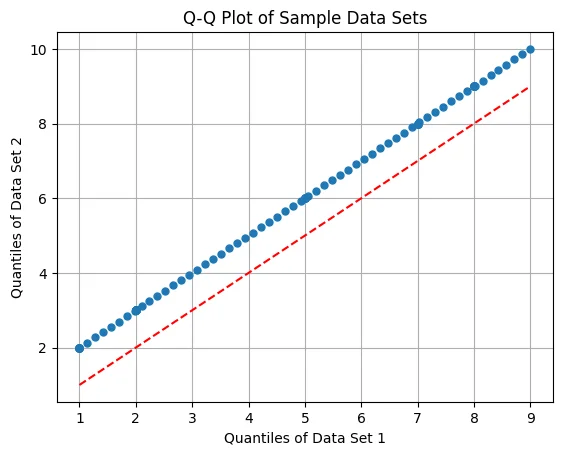

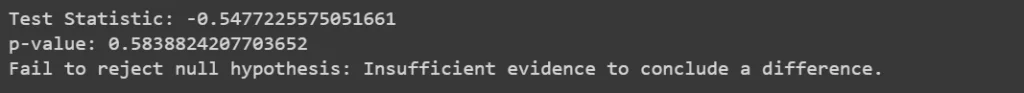

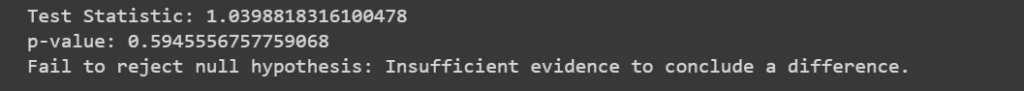

It can sometimes be difficult to assess whether a continuous outcome follows a normal distribution and, thus, whether a parametric or nonparametric test is appropriate. There are several statistical tests that can be used to assess whether data are likely from a normal distribution. The most popular are the Kolmogorov-Smirnov test, the Anderson-Darling test, and the Shapiro-Wilk test [1]. Each test is essentially a goodness of fit test and compares observed data to quantiles of the normal (or other specified) distribution. The null hypothesis for each test is H0: Data follow a normal distribution versus H1: Data do not follow a normal distribution. If the test is statistically significant (e.g., p<0.05), then there is evidence that the data do not follow a normal distribution, and a nonparametric test is warranted. It should be noted that these tests for normality can be subject to low power. Specifically, the tests may fail to reject H0: Data follow a normal distribution when in fact the data do not follow a normal distribution. Low power is a major issue when the sample size is small, which unfortunately is often when we wish to employ these tests. The most practical approach to assessing normality is to investigate the distributional form of the outcome in the sample using a histogram and to augment that with data from other studies, if available, that may indicate the likely distribution of the outcome in the population.

There are some situations when it is clear that the outcome does not follow a normal distribution. These include situations:

- when the outcome is an ordinal variable or a rank,

- when there are definite outliers or

- when the outcome has clear limits of detection.

Using an Ordinal Scale

Consider a clinical trial where study participants are asked to rate their symptom severity following 6 weeks on the assigned treatment. Symptom severity might be measured on a 5 point ordinal scale with response options: Symptoms got much worse, slightly worse, no change, slightly improved, or much improved. Suppose there are a total of n=20 participants in the trial, randomized to an experimental treatment or placebo, and the outcome data are distributed as shown in the figure below.

Distribution of Symptom Severity in Total Sample

The distribution of the outcome (symptom severity) does not appear to be normal as more participants report improvement in symptoms as opposed to worsening of symptoms.

When the Outcome is a Rank

In some studies, the outcome is a rank. For example, in obstetrical studies an APGAR score is often used to assess the health of a newborn. The score, which ranges from 0 to 10, is the sum of five component scores based on the infant's condition at birth. APGAR scores generally do not follow a normal distribution, since most newborns have scores of 7 or higher (normal range).

When There Are Outliers

In some studies, the outcome is continuous but subject to outliers or extreme values. For example, days in the hospital following a particular surgical procedure is an outcome that is often subject to outliers. Suppose in an observational study investigators wish to assess whether there is a difference in the days patients spend in the hospital following liver transplant in for-profit versus nonprofit hospitals. Suppose we measure days in the hospital following transplant in n=100 participants, 50 from for-profit and 50 from non-profit hospitals. The number of days in the hospital are summarized by the box-whisker plot below.

Distribution of Days in the Hospital Following Transplant

Note that 75% of the participants stay at most 16 days in the hospital following transplant, while at least 1 stays 35 days, which would be considered an outlier. Recall from page 8 in the module on Summarizing Data that we used Q1-1.5(Q3-Q1) as a lower limit and Q3+1.5(Q3-Q1) as an upper limit to detect outliers. In the box-whisker plot above, Q1=12 and Q3=16, thus outliers are values below 12-1.5(16-12) = 6 or above 16+1.5(16-12) = 22.
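The outlier limits can be checked with a few lines of Python. This is a minimal sketch: the helper name `outlier_limits` is hypothetical, and the only inputs are the quartiles read from the box-whisker plot (12 and 16) and the 35-day stay mentioned in the text.

```python
def outlier_limits(q1, q3, k=1.5):
    """Return (lower, upper) limits: q1 - k*(q3 - q1) and q3 + k*(q3 - q1)."""
    iqr = q3 - q1  # interquartile range
    return q1 - k * iqr, q3 + k * iqr


# Quartiles taken from the text: first quartile 12 days, third quartile 16 days.
lower, upper = outlier_limits(12, 16)
print(lower, upper)  # 6.0 22.0

# A stay of 35 days lies above the upper limit, so it is flagged as an outlier.
print(35 > upper)  # True
```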

Limits of Detection

In some studies, the outcome is a continuous variable that is measured with some imprecision (e.g., with clear limits of detection). For example, some instruments or assays cannot measure presence of specific quantities above or below certain limits. HIV viral load is a measure of the amount of virus in the body and is measured as the amount of virus per a certain volume of blood. It can range from "not detected" or "below the limit of detection" to hundreds of millions of copies. Thus, in a sample some participants may have measures like 1,254,000 or 874,050 copies and others are measured as "not detected." If a substantial number of participants have undetectable levels, the distribution of viral load is not normally distributed.

Advantages of Nonparametric Tests

Nonparametric tests have some distinct advantages. With outcomes such as those described above, nonparametric tests may be the only way to analyze these data. Outcomes that are ordinal, ranked, subject to outliers or measured imprecisely are difficult to analyze with parametric methods without making major assumptions about their distributions as well as decisions about coding some values (e.g., "not detected"). As described here, nonparametric tests can also be relatively simple to conduct.

Introduction to Nonparametric Testing

This module will describe some popular nonparametric tests for continuous outcomes. Interested readers should see Conover [3] for a more comprehensive coverage of nonparametric tests.

The techniques described here apply to outcomes that are ordinal, ranked, or continuous outcome variables that are not normally distributed. Recall that continuous outcomes are quantitative measures based on a specific measurement scale (e.g., weight in pounds, height in inches). Some investigators make the distinction between continuous, interval and ordinal scaled data. Interval data are like continuous data in that they are measured on a constant scale (i.e., there exists the same difference between adjacent scale scores across the entire spectrum of scores). Differences between interval scores are interpretable, but ratios are not. Temperature in Celsius or Fahrenheit is an example of an interval scale outcome. The difference between 30º and 40º is the same as the difference between 70º and 80º, yet 80º is not twice as warm as 40º. Ordinal outcomes can be less specific as the ordered categories need not be equally spaced. Symptom severity is an example of an ordinal outcome and it is not clear whether the difference between much worse and slightly worse is the same as the difference between no change and slightly improved. Some studies use visual scales to assess participants' self-reported signs and symptoms. Pain is often measured in this way, from 0 to 10 with 0 representing no pain and 10 representing agonizing pain. Participants are sometimes shown a visual scale such as that shown in the upper portion of the figure below and asked to choose the number that best represents their pain state. Sometimes pain scales use visual anchors as shown in the lower portion of the figure below.

Visual Pain Scale

In the upper portion of the figure, certainly 10 is worse than 9, which is worse than 8; however, the difference between adjacent scores may not necessarily be the same. It is important to understand how outcomes are measured to make appropriate inferences based on statistical analysis and, in particular, not to overstate precision.

Assigning Ranks

The nonparametric procedures that we describe here follow the same general procedure. The outcome variable (ordinal, interval or continuous) is ranked from lowest to highest and the analysis focuses on the ranks as opposed to the measured or raw values. For example, suppose we measure self-reported pain using a visual analog scale with anchors at 0 (no pain) and 10 (agonizing pain) and record the following in a sample of n=6 participants:

7 5 9 3 0 2

The ranks, which are used to perform a nonparametric test, are assigned as follows: First, the data are ordered from smallest to largest. The lowest value is then assigned a rank of 1, the next lowest a rank of 2 and so on. The largest value is assigned a rank of n (in this example, n=6). The ordered data and corresponding ranks are shown below:

Ordered Data: 0 2 3 5 7 9

Ranks: 1 2 3 4 5 6

A complicating issue that arises when assigning ranks occurs when there are ties in the sample (i.e., the same values are measured in two or more participants). For example, suppose that the following data are observed in our sample of n=6:

Observed Data: 7 7 9 3 0 2

The 4th and 5th ordered values are both equal to 7. When assigning ranks, the recommended procedure is to assign the mean rank of 4.5 to each (i.e., the mean of 4 and 5), as follows:

Ordered Data: 0 2 3 7 7 9

Ranks: 1 2 3 4.5 4.5 6

Suppose that there are three values of 7. In this case, we assign a rank of 5 (the mean of 4, 5 and 6) to the 4th, 5th and 6th values, as follows:

Ranks: 1 2 3 5 5 5

Using this approach of assigning the mean rank when there are ties ensures that the sum of the ranks is the same in each sample (for example, 1+2+3+4+5+6=21, 1+2+3+4.5+4.5+6=21 and 1+2+3+5+5+5=21). Using this approach, the sum of the ranks will always equal n(n+1)/2. When conducting nonparametric tests, it is useful to check the sum of the ranks before proceeding with the analysis.
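The ranking rule, including the mean-rank treatment of ties, can be sketched in Python as follows. The function name `average_ranks` is a hypothetical helper, and the third sample (three values of 7) is an assumed illustration; the first two samples are the ones given in the text.

```python
def average_ranks(values):
    """Rank values from smallest (1) to largest (n); tied values share the mean rank."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    pos = 0
    while pos < len(order):
        end = pos
        # Extend the block while the next ordered value ties with the current one.
        while end + 1 < len(order) and values[order[end + 1]] == values[order[pos]]:
            end += 1
        mean_rank = (pos + 1 + end + 1) / 2  # mean of positions pos+1 .. end+1
        for j in range(pos, end + 1):
            ranks[order[j]] = mean_rank
        pos = end + 1
    return ranks


no_ties = average_ranks([7, 5, 9, 3, 0, 2])
two_tied = average_ranks([7, 7, 9, 3, 0, 2])
three_tied = average_ranks([7, 7, 7, 3, 0, 2])  # assumed sample with three 7s

print(no_ties)   # [5.0, 4.0, 6.0, 3.0, 1.0, 2.0]
print(two_tied)  # [4.5, 4.5, 6.0, 3.0, 1.0, 2.0]

# Check recommended in the text: the ranks always sum to n(n+1)/2.
n = 6
for ranks in (no_ties, two_tied, three_tied):
    assert sum(ranks) == n * (n + 1) / 2
```

Ranks are returned in the order the observations were recorded, so each rank stays attached to its participant.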

To conduct nonparametric tests, we again follow the five-step approach outlined in the modules on hypothesis testing.

- Set up hypotheses and select the level of significance α. Analogous to parametric testing, the research hypothesis can be one- or two- sided (one- or two-tailed), depending on the research question of interest.

- Select the appropriate test statistic. The test statistic is a single number that summarizes the sample information. In nonparametric tests, the observed data are converted into ranks and then the ranks are summarized into a test statistic.

- Set up decision rule. The decision rule is a statement that tells under what circumstances to reject the null hypothesis. Note that in some nonparametric tests we reject H0 if the test statistic is large, while in others we reject H0 if the test statistic is small. We make the distinction as we describe the different tests.

- Compute the test statistic. Here we compute the test statistic by summarizing the ranks into the test statistic identified in Step 2.

- Conclusion. The final conclusion is made by comparing the test statistic (which is a summary of the information observed in the sample) to the decision rule. The final conclusion is either to reject the null hypothesis (because it is very unlikely to observe the sample data if the null hypothesis is true) or not to reject the null hypothesis (because the sample data are not very unlikely if the null hypothesis is true).

Mann Whitney U Test (Wilcoxon Rank Sum Test)

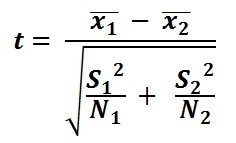

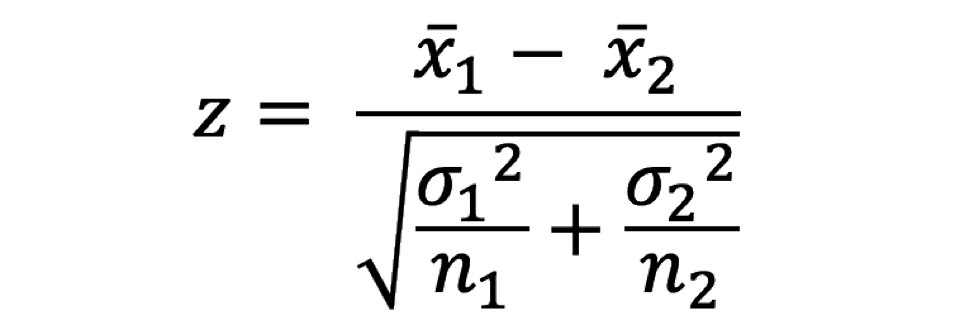

The modules on hypothesis testing presented techniques for testing the equality of means in two independent samples. An underlying assumption for appropriate use of the tests described was that the continuous outcome was approximately normally distributed or that the samples were sufficiently large (usually n1 > 30 and n2 > 30) to justify their use based on the Central Limit Theorem. When two independent samples are compared, the outcome is not normally distributed and the samples are small, a nonparametric test is appropriate.

A popular nonparametric test to compare outcomes between two independent groups is the Mann Whitney U test. The Mann Whitney U test, sometimes called the Mann Whitney Wilcoxon Test or the Wilcoxon Rank Sum Test, is used to test whether two samples are likely to derive from the same population (i.e., that the two populations have the same shape). Some investigators interpret this test as comparing the medians between the two populations. Recall that the parametric test compares the means (H0: μ1=μ2) between independent groups.

In contrast, the null and two-sided research hypotheses for the nonparametric test are stated as follows:

H0: The two populations are equal versus

H1: The two populations are not equal.

This test is often performed as a two-sided test and, thus, the research hypothesis indicates that the populations are not equal as opposed to specifying directionality. A one-sided research hypothesis is used if interest lies in detecting a positive or negative shift in one population as compared to the other. The procedure for the test involves pooling the observations from the two samples into one combined sample, keeping track of which sample each observation comes from, and then ranking the pooled observations from lowest to highest, from 1 to n1+n2.
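The pooling and ranking procedure can be sketched as below. The function `pooled_rank_sums` and the two small samples are hypothetical and chosen only to illustrate the mechanics; they are not data from any study in this module. Tied values receive the mean of the ranks they span.

```python
def pooled_rank_sums(sample1, sample2):
    """Pool two samples, rank 1..n1+n2 (mean ranks for ties), return (R1, R2)."""
    # Keep track of which sample each observation comes from.
    pooled = [(value, 1) for value in sample1] + [(value, 2) for value in sample2]
    pooled.sort(key=lambda pair: pair[0])

    sums = {1: 0.0, 2: 0.0}
    pos = 0
    while pos < len(pooled):
        end = pos
        # Find the block of observations tied with the one at position pos.
        while end + 1 < len(pooled) and pooled[end + 1][0] == pooled[pos][0]:
            end += 1
        mean_rank = (pos + end + 2) / 2  # mean of ranks pos+1 .. end+1
        for _, group in pooled[pos:end + 1]:
            sums[group] += mean_rank
        pos = end + 1
    return sums[1], sums[2]


# Hypothetical samples (illustration only).
r1, r2 = pooled_rank_sums([8, 3, 5], [1, 5, 2])
print(r1, r2)  # 13.5 7.5

# The two rank sums together must equal n(n+1)/2 with n = n1 + n2.
n = 6
assert r1 + r2 == n * (n + 1) / 2
```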

Consider a Phase II clinical trial designed to investigate the effectiveness of a new drug to reduce symptoms of asthma in children. A total of n=10 participants are randomized to receive either the new drug or a placebo. Participants are asked to record the number of episodes of shortness of breath over a 1 week period following receipt of the assigned treatment. The data are shown below.

Is there a difference in the number of episodes of shortness of breath over a 1 week period in participants receiving the new drug as compared to those receiving the placebo? By inspection, it appears that participants receiving the placebo have more episodes of shortness of breath, but is this statistically significant?

In this example, the outcome is a count and in this sample the data do not follow a normal distribution.

Frequency Histogram of Number of Episodes of Shortness of Breath

In addition, the sample size is small (n1=n2=5), so a nonparametric test is appropriate. The hypotheses are given below, and we run the test at the 5% level of significance (i.e., α=0.05).

H0: The two populations are equal versus

H1: The two populations are not equal.

Note that if the null hypothesis is true (i.e., the two populations are equal), we expect to see similar numbers of episodes of shortness of breath in each of the two treatment groups, and we would expect to see some participants reporting few episodes and some reporting more episodes in each group. This does not appear to be the case with the observed data. A test of hypothesis is needed to determine whether the observed data is evidence of a statistically significant difference in populations.

The first step is to assign ranks and to do so we order the data from smallest to largest. This is done on the combined or total sample (i.e., pooling the data from the two treatment groups (n=10)), and assigning ranks from 1 to 10, as follows. We also need to keep track of the group assignments in the total sample.

Note that the lower ranks (e.g., 1, 2 and 3) are assigned to responses in the new drug group while the higher ranks (e.g., 9, 10) are assigned to responses in the placebo group. Again, the goal of the test is to determine whether the observed data support a difference in the populations of responses. Recall that in parametric tests (discussed in the modules on hypothesis testing), when comparing means between two groups, we analyzed the difference in the sample means relative to their variability and summarized the sample information in a test statistic. A similar approach is employed here. Specifically, we produce a test statistic based on the ranks.

First, we sum the ranks in each group. In the placebo group, the sum of the ranks is 37; in the new drug group, the sum of the ranks is 18. Recall that the sum of the ranks will always equal n(n+1)/2. As a check on our assignment of ranks, we have n(n+1)/2 = 10(11)/2=55 which is equal to 37+18 = 55.

For the test, we call the placebo group 1 and the new drug group 2 (assignment of groups 1 and 2 is arbitrary). We let R1 denote the sum of the ranks in group 1 (i.e., R1=37), and R2 denote the sum of the ranks in group 2 (i.e., R2=18). If the null hypothesis is true (i.e., if the two populations are equal), we expect R1 and R2 to be similar. In this example, the lower values (lower ranks) are clustered in the new drug group (group 2), while the higher values (higher ranks) are clustered in the placebo group (group 1). This is suggestive, but is the observed difference in the sums of the ranks simply due to chance? To answer this we will compute a test statistic to summarize the sample information and look up the corresponding value in a probability distribution.

Test Statistic for the Mann Whitney U Test

The test statistic for the Mann Whitney U Test is denoted U and is the smaller of U1 and U2, defined below.

$$U_1 = n_1 n_2 + \frac{n_1(n_1+1)}{2} - R_1, \qquad U_2 = n_1 n_2 + \frac{n_2(n_2+1)}{2} - R_2$$

where R1 = sum of the ranks for group 1 and R2 = sum of the ranks for group 2.

For this example,

$$U_1 = 5(5) + \frac{5(6)}{2} - 37 = 3, \qquad U_2 = 5(5) + \frac{5(6)}{2} - 18 = 22$$

In our example, U=3. Is this evidence in support of the null or research hypothesis? Before we address this question, we consider the range of the test statistic U in two different situations.
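As a check on the arithmetic, the statistic can be computed directly from the rank sums. This is a minimal sketch that assumes the usual rank-sum form U1 = n1·n2 + n1(n1+1)/2 − R1 (and likewise for U2); the function name `mann_whitney_u` is hypothetical. With the rank sums reported above (R1=37, R2=18, n1=n2=5) it reproduces U=3.

```python
def mann_whitney_u(n1, n2, r1, r2):
    """Return (U1, U2, U) from the group sizes and rank sums; U is the smaller value."""
    u1 = n1 * n2 + n1 * (n1 + 1) / 2 - r1
    u2 = n1 * n2 + n2 * (n2 + 1) / 2 - r2
    return u1, u2, min(u1, u2)


# Placebo is group 1 (R1 = 37), new drug is group 2 (R2 = 18).
u1, u2, u = mann_whitney_u(5, 5, 37, 18)
print(u1, u2, u)  # 3.0 22.0 3.0

# U1 and U2 always add up to n1 * n2, which is a convenient arithmetic check.
assert u1 + u2 == 5 * 5
```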

Situation #1

Consider the situation where there is complete separation of the groups, supporting the research hypothesis that the two populations are not equal. If all of the higher numbers of episodes of shortness of breath (and thus all of the higher ranks) are in the placebo group, all of the lower numbers of episodes (and ranks) are in the new drug group, and there are no ties, then:

$$R_1 = 6+7+8+9+10 = 40, \qquad R_2 = 1+2+3+4+5 = 15$$

$$U_1 = 5(5) + \frac{5(6)}{2} - 40 = 0, \qquad U_2 = 5(5) + \frac{5(6)}{2} - 15 = 25$$

Therefore, when there is clearly a difference in the populations, U=0.

Situation #2

Consider a second situation where low and high scores are approximately evenly distributed in the two groups, supporting the null hypothesis that the groups are equal. If ranks of 2, 4, 6, 8 and 10 are assigned to the numbers of episodes of shortness of breath reported in the placebo group and ranks of 1, 3, 5, 7 and 9 are assigned to the numbers of episodes of shortness of breath reported in the new drug group, then:

$$R_1 = 2+4+6+8+10 = 30, \qquad R_2 = 1+3+5+7+9 = 25$$

$$U_1 = 5(5) + \frac{5(6)}{2} - 30 = 10, \qquad U_2 = 5(5) + \frac{5(6)}{2} - 25 = 15$$

When there is clearly no difference between populations, then U=10.

Thus, smaller values of U support the research hypothesis, and larger values of U support the null hypothesis.

In every test, we must determine whether the observed U supports the null or research hypothesis. This is done following the same approach used in parametric testing. Specifically, we determine a critical value of U such that if the observed value of U is less than or equal to the critical value, we reject H0 in favor of H1 and if the observed value of U exceeds the critical value we do not reject H0.

The critical value of U can be found in the table below. To determine the appropriate critical value we need the sample sizes (in this example, n1=n2=5) and our two-sided level of significance (α=0.05). For this example the critical value is 2, and the decision rule is to reject H0 if U ≤ 2. We do not reject H0 because 3 > 2. We do not have statistically significant evidence at α=0.05 to show that the two populations of numbers of episodes of shortness of breath are not equal. However, in this example, the failure to reach statistical significance may be due to low power. The sample data suggest a difference, but the sample sizes are too small to conclude that there is a statistically significant difference.

Table of Critical Values for U

A new approach to prenatal care is proposed for pregnant women living in a rural community. The new program involves in-home visits during the course of pregnancy in addition to the usual or regularly scheduled visits. A pilot randomized trial with 15 pregnant women is designed to evaluate whether women who participate in the program deliver healthier babies than women receiving usual care. The outcome is the APGAR score measured 5 minutes after birth. Recall that APGAR scores range from 0 to 10 with scores of 7 or higher considered normal (healthy), 4-6 low and 0-3 critically low. The data are shown below.

Is there statistical evidence of a difference in APGAR scores in women receiving the new and enhanced versus usual prenatal care? We run the test using the five-step approach.

- Step 1. Set up hypotheses and determine level of significance.

H0: The two populations are equal versus

H1: The two populations are not equal. α=0.05

- Step 2. Select the appropriate test statistic.

Because APGAR scores are not normally distributed and the samples are small (n1=8 and n2=7), we use the Mann Whitney U test. The test statistic is U, the smaller of

$$U_1 = n_1 n_2 + \frac{n_1(n_1+1)}{2} - R_1 \quad \text{and} \quad U_2 = n_1 n_2 + \frac{n_2(n_2+1)}{2} - R_2$$

where R1 and R2 are the sums of the ranks in groups 1 and 2, respectively.

- Step 3. Set up decision rule.

The appropriate critical value can be found in the table above. To determine the appropriate critical value we need sample sizes (n1=8 and n2=7) and our two-sided level of significance (α=0.05). The critical value for this test with n1=8, n2=7 and α=0.05 is 10 and the decision rule is as follows: Reject H0 if U ≤ 10.

- Step 4. Compute the test statistic.

The first step is to assign ranks of 1 through 15 to the smallest through largest values in the total sample, as follows:

Next, we sum the ranks in each group. In the usual care group, the sum of the ranks is R1=45.5 and in the new program group, the sum of the ranks is R2=74.5. Recall that the sum of the ranks will always equal n(n+1)/2. As a check on our assignment of ranks, we have n(n+1)/2 = 15(16)/2=120 which is equal to 45.5+74.5 = 120.

We now compute U1 and U2, as follows:

$$U_1 = 8(7) + \frac{8(9)}{2} - 45.5 = 46.5, \qquad U_2 = 8(7) + \frac{7(8)}{2} - 74.5 = 9.5$$

Thus, the test statistic is U=9.5.

- Step 5. Conclusion:

We reject H0 because 9.5 < 10. We have statistically significant evidence at α=0.05 to show that the populations of APGAR scores are not equal in women receiving usual prenatal care as compared to the new program of prenatal care.

Example:

A clinical trial is run to assess the effectiveness of a new anti-retroviral therapy for patients with HIV. Patients are randomized to receive a standard anti-retroviral therapy (usual care) or the new anti-retroviral therapy and are monitored for 3 months. The primary outcome is viral load which represents the number of HIV copies per milliliter of blood. A total of 30 participants are randomized and the data are shown below.

Is there statistical evidence of a difference in viral load in patients receiving the standard versus the new anti-retroviral therapy?

- Step 1. Set up hypotheses and determine level of significance.

H0: The two populations are equal versus

H1: The two populations are not equal. α=0.05

- Step 2. Select the appropriate test statistic.

Because viral load measures are not normally distributed (with outliers as well as limits of detection (e.g., "undetectable")), we use the Mann-Whitney U test. The test statistic is U, the smaller of

$$U_1 = n_1 n_2 + \frac{n_1(n_1+1)}{2} - R_1 \quad \text{and} \quad U_2 = n_1 n_2 + \frac{n_2(n_2+1)}{2} - R_2$$

where R1 and R2 are the sums of the ranks in groups 1 and 2, respectively.

- Step 3. Set up the decision rule.

The critical value can be found in the table of critical values based on sample sizes (n1=n2=15) and a two-sided level of significance (α=0.05). The critical value is 64 and the decision rule is as follows: Reject H0 if U ≤ 64.

- Step 4. Compute the test statistic.

The first step is to assign ranks of 1 through 30 to the smallest through largest values in the total sample. Note in the table below, that the "undetectable" measurement is listed first in the ordered values (smallest) and assigned a rank of 1.

Next, we sum the ranks in each group. In the standard anti-retroviral therapy group, the sum of the ranks is R1=245; in the new anti-retroviral therapy group, the sum of the ranks is R2=220. Recall that the sum of the ranks will always equal n(n+1)/2. As a check on our assignment of ranks, we have n(n+1)/2 = 30(31)/2=465 which is equal to 245+220 = 465. We now compute U1 and U2, as follows:

$$U_1 = 15(15) + \frac{15(16)}{2} - 245 = 100, \qquad U_2 = 15(15) + \frac{15(16)}{2} - 220 = 125$$

Thus, the test statistic is U=100.

- Step 5. Conclusion.

We do not reject H0 because 100 > 64. We do not have sufficient evidence to conclude that the treatment groups differ in viral load.
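Steps 3 through 5 of the two examples above can be sketched together in Python. The helper `mann_whitney_decision` is hypothetical; it assumes the rank-sum form of U1 and U2 and takes the critical value as an input, because the critical values (10 and 64) come from the published table rather than from a calculation.

```python
def mann_whitney_decision(n1, n2, r1, r2, critical_value):
    """Return (U, reject) where reject is True when U <= critical_value."""
    u1 = n1 * n2 + n1 * (n1 + 1) / 2 - r1
    u2 = n1 * n2 + n2 * (n2 + 1) / 2 - r2
    u = min(u1, u2)
    return u, u <= critical_value


# APGAR example: n1=8, n2=7, R1=45.5, R2=74.5, tabled critical value 10.
apgar = mann_whitney_decision(8, 7, 45.5, 74.5, critical_value=10)
print(apgar)  # (9.5, True)

# Viral load example: n1=n2=15, R1=245, R2=220, tabled critical value 64.
viral_load = mann_whitney_decision(15, 15, 245, 220, critical_value=64)
print(viral_load)  # (100.0, False)
```

Passing the critical value explicitly keeps the sketch honest about where that number comes from; it does not attempt to reproduce the table.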

Tests with Matched Samples

This section describes nonparametric tests to compare two groups with respect to a continuous outcome when the data are collected on matched or paired samples. The parametric procedure for doing this was presented in the modules on hypothesis testing for the situation in which the continuous outcome was normally distributed. This section describes procedures that should be used when the outcome cannot be assumed to follow a normal distribution. There are two popular nonparametric tests to compare outcomes between two matched or paired groups. The first is called the Sign Test and the second the Wilcoxon Signed Rank Test.

Recall that when data are matched or paired, we compute difference scores for each individual and analyze difference scores. The same approach is followed in nonparametric tests. In parametric tests, the null hypothesis is that the mean difference (μd) is zero. In nonparametric tests, the null hypothesis is that the median difference is zero.

Consider a clinical investigation to assess the effectiveness of a new drug designed to reduce repetitive behaviors in children affected with autism. If the drug is effective, children will exhibit fewer repetitive behaviors on treatment as compared to when they are untreated. A total of 8 children with autism enroll in the study. Each child is observed by the study psychologist for a period of 3 hours both before treatment and then again after taking the new drug for 1 week. The time that each child is engaged in repetitive behavior during each 3 hour observation period is measured. Repetitive behavior is scored on a scale of 0 to 100 and scores represent the percent of the observation time in which the child is engaged in repetitive behavior. For example, a score of 0 indicates that during the entire observation period the child did not engage in repetitive behavior while a score of 100 indicates that the child was constantly engaged in repetitive behavior. The data are shown below.

Looking at the data, it appears that some children improve (e.g., Child 5 scored 80 before treatment and 20 after treatment), but some got worse (e.g., Child 3 scored 40 before treatment and 50 after treatment). Is there statistically significant improvement in repetitive behavior after 1 week of treatment?

Because the before and after treatment measures are paired, we compute difference scores for each child. In this example, we subtract the assessment of repetitive behaviors after treatment from that measured before treatment so that difference scores represent improvement in repetitive behavior. The question of interest is whether there is significant improvement after treatment.

In this small sample, the observed difference (or improvement) scores vary widely and are subject to extremes (e.g., the observed difference of 60 is an outlier). Thus, a nonparametric test is appropriate to test whether there is significant improvement in repetitive behavior before versus after treatment. The hypotheses are given below.

H0: The median difference is zero versus

H1: The median difference is positive α=0.05

In this example, the null hypothesis is that there is no difference in scores before versus after treatment. If the null hypothesis is true, we expect to see some positive differences (improvement) and some negative differences (worsening). If the research hypothesis is true, we expect to see more positive differences (improvement) than negative differences.

The Sign Test

The Sign Test is the simplest nonparametric test for matched or paired data. The approach is to analyze only the signs of the difference scores, as shown below:

If the null hypothesis is true (i.e., if the median difference is zero) then we expect to see approximately half of the differences as positive and half of the differences as negative. If the research hypothesis is true, we expect to see more positive differences.

Test Statistic for the Sign Test

The test statistic for the Sign Test is the number of positive signs or number of negative signs, whichever is smaller. In this example, we observe 2 negative and 6 positive signs. Is this evidence of significant improvement or simply due to chance?
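Counting the signs is simple enough to sketch in Python. The function `sign_test_statistic` is a hypothetical helper, and the list of difference scores below is illustrative only: it was chosen to contain the two differences mentioned in the text (60 and -10) and to give 6 positive and 2 negative signs, but it should not be read as the study's actual data.

```python
def sign_test_statistic(differences):
    """Return (positives, negatives, statistic); statistic is the smaller count."""
    positives = sum(1 for d in differences if d > 0)
    negatives = sum(1 for d in differences if d < 0)
    # Differences equal to zero carry no sign and are not counted here.
    return positives, negatives, min(positives, negatives)


# Illustrative difference scores (before minus after), not the study data.
example_differences = [10, 20, -10, 25, 60, 10, 15, -5]
positives, negatives, statistic = sign_test_statistic(example_differences)
print(positives, negatives, statistic)  # 6 2 2
```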

Determining whether the observed test statistic supports the null or research hypothesis is done following the same approach used in parametric testing. Specifically, we determine a critical value such that if the smaller of the number of positive or negative signs is less than or equal to that critical value, then we reject H0 in favor of H1 and if the smaller of the number of positive or negative signs is greater than the critical value, then we do not reject H0. Notice that this is a one-sided decision rule corresponding to our one-sided research hypothesis (the two-sided situation is discussed in the next example).

Table of Critical Values for the Sign Test

The critical values for the Sign Test are in the table below.

To determine the appropriate critical value we need the sample size, which is equal to the number of matched pairs (n=8), and our one-sided level of significance α=0.05. For this example, the critical value is 1, and the decision rule is to reject H0 if the smaller of the number of positive or negative signs ≤ 1. We do not reject H0 because 2 > 1. We do not have sufficient evidence at α=0.05 to show that there is improvement in repetitive behavior after taking the drug as compared to before. In essence, we could use the critical value to decide whether to reject the null hypothesis. Another alternative would be to calculate the p-value, as described below.

Computing P-values for the Sign Test

With the Sign test we can readily compute a p-value based on our observed test statistic. The test statistic for the Sign Test is the smaller of the number of positive or negative signs and it follows a binomial distribution with n = the number of subjects in the study and p=0.5 (See the module on Probability for details on the binomial distribution). In the example above, n=8 and p=0.5 (the probability of success under H 0 ).

By using the binomial distribution formula:

$$P(x \text{ successes}) = \frac{n!}{x!\,(n-x)!}\, p^{x} (1-p)^{n-x}$$

we can compute the probability of observing different numbers of successes during 8 trials. These are shown in the table below.

Recall that a p-value is the probability of observing a test statistic as or more extreme than that observed. We observed 2 negative signs. Thus, the p-value for the test is: p-value = P(x ≤ 2). Using the table above, p-value = P(x ≤ 2) = P(0) + P(1) + P(2) = 0.0039 + 0.0313 + 0.1094 = 0.1446.

Because the p-value = 0.1446 exceeds the level of significance α=0.05, we do not have statistically significant evidence that there is improvement in repetitive behaviors after taking the drug as compared to before. Notice in the table of binomial probabilities above, that we would have had to observe at most 1 negative sign to declare statistical significance using a 5% level of significance. Recall the critical value for our test was 1 based on the table of critical values for the Sign Test (above).
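The same tail probability can be computed directly rather than read from a table. This is a minimal sketch using only the Python standard library; the helper names `binomial_pmf` and `sign_test_p_value` are illustrative.

```python
from math import comb

def binomial_pmf(x, n, p=0.5):
    """P(X = x) for X ~ Binomial(n, p)."""
    return comb(n, x) * p ** x * (1 - p) ** (n - x)

def sign_test_p_value(smaller_count, n, two_sided=False):
    """Lower-tail probability P(X <= smaller_count), doubled for a two-sided test."""
    lower_tail = sum(binomial_pmf(x, n) for x in range(smaller_count + 1))
    return min(1.0, 2 * lower_tail) if two_sided else lower_tail

p_one_sided = sign_test_p_value(2, 8)
print(f"{p_one_sided:.4f}")  # 0.1445
```

The exact value is 37/256 ≈ 0.1445. The 0.1446 quoted in the text comes from adding table entries that were already rounded to four decimal places.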

One-Sided versus Two-Sided Test

In the example looking for differences in repetitive behaviors in autistic children, we used a one-sided test (i.e., we hypothesize improvement after taking the drug). A two-sided test can be used if we hypothesize a difference in repetitive behavior after taking the drug as compared to before. From the table of critical values for the Sign Test, we can determine a two-sided critical value and again reject H 0 if the smaller of the number of positive or negative signs is less than or equal to that two-sided critical value. Alternatively, we can compute a two-sided p-value. If the research hypothesis is a two-sided alternative (i.e., H 1 : The median difference is not zero), then the p-value is computed as: p-value = 2*P(x ≤ 2). Notice that this is equivalent to p-value = P(x ≤ 2) + P(x ≥ 6), representing the situation of few or many successes. Recall that in two-sided tests, we reject the null hypothesis if the test statistic is extreme in either direction. Here we observe 2 negative signs (and thus 6 positive signs). The opposite situation would be 6 negative signs (and thus 2 positive signs, as n=8). The two-sided p-value is the probability of observing a test statistic as or more extreme in either direction (i.e., P(x ≤ 2) + P(x ≥ 6)).

When Difference Scores are Zero

There is a special circumstance that needs attention when implementing the Sign Test which arises when one or more participants have difference scores of zero (i.e., their paired measurements are identical). If there is just one difference score of zero, some investigators drop that observation and reduce the sample size by 1 (i.e., the sample size for the binomial distribution would be n-1). This is a reasonable approach if there is just one zero. However, if there are two or more zeros, an alternative approach is preferred.

- If there is an even number of zeros, we randomly assign them positive or negative signs.

- If there is an odd number of zeros, we randomly drop one and reduce the sample size by 1, and then randomly assign the remaining observations positive or negative signs.

The following example illustrates the approach.

A new chemotherapy treatment is proposed for patients with breast cancer. Investigators are concerned with patients' ability to tolerate the treatment and assess their quality of life both before and after receiving the new chemotherapy treatment. Quality of life (QOL) is measured on an ordinal scale and for analysis purposes, numbers are assigned to each response category as follows: 1=Poor, 2=Fair, 3=Good, 4=Very Good, 5=Excellent. The data are shown below.

The question of interest is whether there is a difference in QOL after chemotherapy treatment as compared to before.

- Step 1. Set up hypotheses and determine level of significance.

H 0 : The median difference is zero versus

H 1 : The median difference is not zero α=0.05

- Step 2. Select the appropriate test statistic.

The test statistic for the Sign Test is the smaller of the number of positive or negative signs.

- Step 3. Set up the decision rule.

The appropriate critical value for the Sign Test can be found in the table of critical values for the Sign Test. To determine the appropriate critical value we need the sample size (or number of matched pairs, n=12), and our two-sided level of significance α=0.05.

The critical value for this two-sided test with n=12 and α=0.05 is 2, and the decision rule is as follows: Reject H 0 if the smaller of the number of positive or negative signs ≤ 2.

- Step 4. Compute the test statistic.

Because the before and after treatment measures are paired, we compute difference scores for each patient. In this example, we subtract the QOL measured before treatment from that measured after.

We now capture the signs of the difference scores and because there are two zeros, we randomly assign one negative sign (i.e., "-" to patient 5) and one positive sign (i.e., "+" to patient 8), as follows:

The test statistic is the number of negative signs which is equal to 3.
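The zero-handling rule described earlier can be sketched as follows. The sketch assumes that zeros are split evenly between "+" and "-", as in this example where two zeros received one sign each, so only the choice of which zero receives which sign is random and the counts are unaffected. The function name and the difference scores are hypothetical, chosen only to give the same sign counts as this example.

```python
def sign_counts_with_zeros(differences):
    """Return (positives, negatives, n) after applying the zero rule."""
    positives = sum(1 for d in differences if d > 0)
    negatives = sum(1 for d in differences if d < 0)
    zeros = sum(1 for d in differences if d == 0)
    if zeros % 2 == 1:
        zeros -= 1  # odd number of zeros: drop one, reducing the sample size by 1
    # Assumption: the remaining zeros are split evenly between the two signs.
    positives += zeros // 2
    negatives += zeros // 2
    return positives, negatives, positives + negatives

# Hypothetical difference scores: 8 positive, 2 negative, 2 zero.
diffs = [1, 2, -1, 1, 0, 2, 1, 0, 1, -1, 2, 1]
print(sign_counts_with_zeros(diffs))  # (9, 3, 12)
```

With a single zero the function simply drops it, which matches the n-1 approach described for that case.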

- Step 5. Conclusion.

We do not reject H 0 because 3 > 2. We do not have statistically significant evidence at α=0.05 to show that there is a difference in QOL after chemotherapy treatment as compared to before.

We can also compute the p-value directly using the binomial distribution with n = 12 and p=0.5. The two-sided p-value for the test is p-value = 2*P(x ≤ 3) (which is equivalent to p-value = P(x ≤ 3) + P(x ≥ 9)). Again, the two-sided p-value is the probability of observing few or many positive or negative signs. Here we observe 3 negative signs (and thus 9 positive signs). The opposite situation would be 9 negative signs (and thus 3 positive signs, as n=12). The two-sided p-value is the probability of observing a test statistic as or more extreme in either direction (i.e., P(x ≤ 3) + P(x ≥ 9)). We can compute the p-value using the binomial formula or a statistical computing package, as follows:

Because the p-value = 0.1460 exceeds the level of significance (α=0.05) we do not have statistically significant evidence at α =0.05 to show that there is a difference in QOL after chemotherapy treatment as compared to before.
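As a check on the two-sided p-value, the two tails can be summed explicitly. This is a small sketch using only the standard library; the variable names are illustrative.

```python
from math import comb

n, observed = 12, 3  # 12 matched pairs, 3 negative signs

# P(X <= 3) and P(X >= 9) for X ~ Binomial(12, 0.5)
lower_tail = sum(comb(n, x) for x in range(observed + 1)) / 2 ** n
upper_tail = sum(comb(n, x) for x in range(n - observed, n + 1)) / 2 ** n

p_two_sided = lower_tail + upper_tail
print(f"{p_two_sided:.4f}")  # 0.1460
```

Because the binomial distribution with p=0.5 is symmetric, the two tails are equal, which is why doubling the lower tail gives the same result.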

Wilcoxon Signed Rank Test

Another popular nonparametric test for matched or paired data is called the Wilcoxon Signed Rank Test. Like the Sign Test, it is based on difference scores, but in addition to analyzing the signs of the differences, it also takes into account the magnitude of the observed differences.

Let's use the Wilcoxon Signed Rank Test to re-analyze the data in Example 4 on page 5 of this module. Recall that this study assessed the effectiveness of a new drug designed to reduce repetitive behaviors in children affected with autism. A total of 8 children with autism enroll in the study, and the amount of time that each child is engaged in repetitive behavior during three-hour observation periods is measured both before treatment and then again after taking the new medication for a period of 1 week. The data are shown below.

First, we compute difference scores for each child.

The next step is to rank the difference scores. We first order the absolute values of the difference scores and assign rank from 1 through n to the smallest through largest absolute values of the difference scores, and assign the mean rank when there are ties in the absolute values of the difference scores.

The final step is to attach the signs ("+" or "-") of the observed differences to each rank as shown below.

Similar to the Sign Test, hypotheses for the Wilcoxon Signed Rank Test concern the population median of the difference scores. The research hypothesis can be one- or two-sided. Here we consider a one-sided test.

Test Statistic for the Wilcoxon Signed Rank Test

The test statistic for the Wilcoxon Signed Rank Test is W, defined as the smaller of W+ (sum of the positive ranks) and W- (sum of the negative ranks). If the null hypothesis is true, we expect to see similar numbers of lower and higher ranks that are both positive and negative (i.e., W+ and W- would be similar). If the research hypothesis is true we expect to see more higher and positive ranks (in this example, more children with substantial improvement in repetitive behavior after treatment as compared to before, i.e., W+ much larger than W-).

In this example, W+ = 32 and W- = 4. Recall that the sum of the ranks (ignoring the signs) will always equal n(n+1)/2. As a check on our assignment of ranks, we have n(n+1)/2 = 8(9)/2 = 36 which is equal to 32+4. The test statistic is W = 4.
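The ranking procedure and the check on the rank total can be sketched in a few lines. The difference scores below are hypothetical (they are not the study data, so the sums differ from those in this example), the function name is illustrative, and the sketch assumes there are no zero differences.

```python
def signed_rank_sums(differences):
    """Return (W+, W-) using mean ranks for tied absolute differences.

    Assumes no difference is exactly zero.
    """
    ordered = sorted(differences, key=abs)
    ranks = [0.0] * len(ordered)
    i = 0
    while i < len(ordered):
        j = i
        # Extend j over the run of tied absolute values starting at i.
        while j + 1 < len(ordered) and abs(ordered[j + 1]) == abs(ordered[i]):
            j += 1
        mean_rank = (i + j + 2) / 2  # average of ranks i+1 .. j+1
        for k in range(i, j + 1):
            ranks[k] = mean_rank
        i = j + 1
    w_plus = sum(r for d, r in zip(ordered, ranks) if d > 0)
    w_minus = sum(r for d, r in zip(ordered, ranks) if d < 0)
    return w_plus, w_minus

diffs = [10, -3, 25, 5, 15, -3, 30, 5]  # hypothetical difference scores, n = 8
w_plus, w_minus = signed_rank_sums(diffs)
n = len(diffs)
print(w_plus, w_minus, w_plus + w_minus == n * (n + 1) / 2)  # 33.0 3.0 True
```

The test statistic W is then `min(w_plus, w_minus)`.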

Next we must determine whether the observed test statistic W supports the null or research hypothesis. This is done following the same approach used in parametric testing. Specifically, we determine a critical value of W such that if the observed value of W is less than or equal to the critical value, we reject H 0 in favor of H 1 , and if the observed value of W exceeds the critical value, we do not reject H 0 .

Table of Critical Values of W

The critical value of W can be found in the table below:

To determine the appropriate one-sided critical value we need sample size (n=8) and our one-sided level of significance (α=0.05). For this example, the critical value of W is 6 and the decision rule is to reject H 0 if W ≤ 6. Thus, we reject H 0 , because 4 < 6. We have statistically significant evidence at α=0.05 to show that the median difference is positive (i.e., that repetitive behavior improves).

Note that when we analyzed the data previously using the Sign Test, we failed to find statistical significance. However, when we use the Wilcoxon Signed Rank Test, we conclude that the treatment results in a statistically significant improvement at α=0.05. The discrepant results are due to the fact that the Sign Test uses very little information in the data and is a less powerful test.

A study is run to evaluate the effectiveness of an exercise program in reducing systolic blood pressure in patients with pre-hypertension (defined as a systolic blood pressure between 120-139 mmHg or a diastolic blood pressure between 80-89 mmHg). A total of 15 patients with pre-hypertension enroll in the study, and their systolic blood pressures are measured. Each patient then participates in an exercise training program where they learn proper techniques and execution of a series of exercises. Patients are instructed to do the exercise program 3 times per week for 6 weeks. After 6 weeks, systolic blood pressures are again measured. The data are shown below.

Is there a difference in systolic blood pressures after participating in the exercise program as compared to before?

- Step 1. Set up hypotheses and determine level of significance.

H 0 : The median difference is zero versus

H 1 : The median difference is not zero α=0.05

- Step 2. Select the appropriate test statistic.

The test statistic for the Wilcoxon Signed Rank Test is W, defined as the smaller of W+ and W- which are the sums of the positive and negative ranks, respectively.

- Step 3. Set up the decision rule.

The critical value of W can be found in the table of critical values. To determine the appropriate critical value from Table 7 we need sample size (n=15) and our two-sided level of significance (α=0.05). The critical value for this two-sided test with n=15 and α=0.05 is 25 and the decision rule is as follows: Reject H 0 if W ≤ 25.

- Step 4. Compute the test statistic.

Because the before and after systolic blood pressure measures are paired, we compute difference scores for each patient.

The next step is to rank the ordered absolute values of the difference scores using the approach outlined above. Specifically, we assign ranks from 1 through n to the smallest through largest absolute values of the difference scores, respectively, and assign the mean rank when there are ties in the absolute values of the difference scores.

The final step is to attach the signs ("+" or "-") of the observed differences to each rank as shown below.

In this example, W+ = 89 and W- = 31. Recall that the sum of the ranks (ignoring the signs) will always equal n(n+1)/2. As a check on our assignment of ranks, we have n(n+1)/2 = 15(16)/2 = 120 which is equal to 89 + 31. The test statistic is W = 31.

- Step 5. Conclusion.

We do not reject H 0 because 31 > 25. Therefore, we do not have statistically significant evidence at α=0.05 to show that the median difference in systolic blood pressures is not zero (i.e., that there is a significant difference in systolic blood pressures after the exercise program as compared to before).

Tests with More than Two Independent Samples

In the modules on hypothesis testing we presented techniques for testing the equality of means in more than two independent samples using analysis of variance (ANOVA). An underlying assumption for appropriate use of ANOVA was that the continuous outcome was approximately normally distributed or that the samples were sufficiently large (usually n j > 30, where j=1, 2, ..., k and k denotes the number of independent comparison groups). An additional assumption for appropriate use of ANOVA is equality of variances in the k comparison groups. ANOVA is generally robust when the sample sizes are small but equal. When the outcome is not normally distributed and the samples are small, a nonparametric test is appropriate.

The Kruskal-Wallis Test

A popular nonparametric test to compare outcomes among more than two independent groups is the Kruskal Wallis test. The Kruskal Wallis test is used to compare medians among k comparison groups (k > 2) and is sometimes described as an ANOVA with the data replaced by their ranks. The null and research hypotheses for the Kruskal Wallis nonparametric test are stated as follows:

H 0 : The k population medians are equal versus

H 1 : The k population medians are not all equal

The procedure for the test involves pooling the observations from the k samples into one combined sample, keeping track of which sample each observation comes from, and then ranking lowest to highest from 1 to N, where N = n 1 +n 2 + ...+ n k . To illustrate the procedure, consider the following example.

A clinical study is designed to assess differences in albumin levels in adults following diets with different amounts of protein. Low protein diets are often prescribed for patients with kidney failure. Albumin is the most abundant protein in blood, and its concentration in the serum is measured in grams per deciliter (g/dL). Clinically, serum albumin concentrations are also used to assess whether patients get sufficient protein in their diets. Three diets are compared, ranging from 5% to 15% protein, and the 15% protein diet represents a typical American diet. The albumin levels of participants following each diet are shown below.

Is there a difference in serum albumin levels among subjects on the three different diets? For reference, normal albumin levels are generally between 3.4 and 5.4 g/dL. By inspection, it appears that participants following the 15% protein diet have higher albumin levels than those following the 5% protein diet. The issue is whether this observed difference is statistically significant.

In this example, the outcome is continuous, but the sample sizes are small and not equal across comparison groups (n 1 =3, n 2 =5, n 3 =4). Thus, a nonparametric test is appropriate. The hypotheses to be tested are given below, and we will use a 5% level of significance.

H 0 : The three population medians are equal versus

H 1 : The three population medians are not all equal

To conduct the test we first order the data in the combined total sample of 12 subjects from smallest to largest. We also need to keep track of the group assignments in the total sample.
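Pooling, ranking and summing the ranks by group can be sketched as below. The albumin values are illustrative: they were chosen so that the group sizes and rank sums agree with those reported in this example, and they should not be read as the study's data table. The function name `pooled_rank_sums` is likewise an assumption.

```python
def pooled_rank_sums(groups):
    """Rank all observations together (mean ranks for ties) and sum ranks per group."""
    pooled = sorted((value, g) for g, values in enumerate(groups) for value in values)
    rank_sums = [0.0] * len(groups)
    i = 0
    while i < len(pooled):
        j = i
        # Extend j over the run of tied values starting at position i.
        while j + 1 < len(pooled) and pooled[j + 1][0] == pooled[i][0]:
            j += 1
        mean_rank = (i + j + 2) / 2  # average of ranks i+1 .. j+1
        for k in range(i, j + 1):
            rank_sums[pooled[k][1]] += mean_rank
        i = j + 1
    return rank_sums

# Illustrative albumin levels (g/dL) for the 5%, 10% and 15% protein diets.
groups = [
    [3.1, 2.6, 2.9],
    [3.8, 4.1, 2.9, 3.4, 4.2],
    [4.0, 5.5, 5.0, 4.8],
]
print(pooled_rank_sums(groups))  # [7.5, 30.5, 40.0]
```

The two tied values of 2.9 each receive the mean rank 2.5, which is how a fractional rank sum arises.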

Notice that the lower ranks (e.g., 1, 2.5, 4) are assigned to the 5% protein diet group while the higher ranks (e.g., 10, 11 and 12) are assigned to the 15% protein diet group. Again, the goal of the test is to determine whether the observed data support a difference in the three population medians. Recall in the parametric tests, discussed in the modules on hypothesis testing, when comparing means among more than two groups we analyzed the difference among the sample means (mean square between groups) relative to their within group variability and summarized the sample information in a test statistic (F statistic). In the Kruskal Wallis test we again summarize the sample information in a test statistic based on the ranks.

Test Statistic for the Kruskal Wallis Test

The test statistic for the Kruskal Wallis test is denoted H and is defined as follows:

$$H = \frac{12}{N(N+1)} \sum_{j=1}^{k} \frac{R_j^2}{n_j} - 3(N+1)$$

where k=the number of comparison groups, N= the total sample size, n j is the sample size in the j th group and R j is the sum of the ranks in the j th group.

In this example R 1 = 7.5, R 2 = 30.5, and R 3 = 40. Recall that the sum of the ranks will always equal n(n+1)/2. As a check on our assignment of ranks, we have n(n+1)/2 = 12(13)/2=78 which is equal to 7.5+30.5+40 = 78. The H statistic for this example is computed as follows:

$$H = \frac{12}{12(13)} \left( \frac{7.5^2}{3} + \frac{30.5^2}{5} + \frac{40^2}{4} \right) - 3(13) = 7.52$$
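The arithmetic for H can be verified with a short function that takes the rank sums and group sizes. This is a sketch of the statistic as used in this module, without any correction for ties; the function name is illustrative.

```python
def kruskal_wallis_h(rank_sums, sizes):
    """H = 12 / (N (N + 1)) * sum(R_j^2 / n_j) - 3 (N + 1), with no tie correction."""
    n_total = sum(sizes)
    weighted = sum(r ** 2 / n for r, n in zip(rank_sums, sizes))
    return 12 / (n_total * (n_total + 1)) * weighted - 3 * (n_total + 1)

h = kruskal_wallis_h([7.5, 30.5, 40], [3, 5, 4])
print(round(h, 2))  # 7.52
```

The same function applies to any number of groups, since it needs only each group's rank sum and sample size.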

We must now determine whether the observed test statistic H supports the null or research hypothesis. Once again, this is done by establishing a critical value of H. If the observed value of H is greater than or equal to the critical value, we reject H 0 in favor of H 1 ; if the observed value of H is less than the critical value we do not reject H 0 . The critical value of H can be found in the table below.

Critical Values of H for the Kruskal Wallis Test

To determine the appropriate critical value we need sample sizes (n 1 =3, n 2 =5 and n 3 =4) and our level of significance (α=0.05). For this example the critical value is 5.656, thus we reject H 0 because 7.52 > 5.656, and we conclude that there is a difference in median albumin levels among the three different diets.

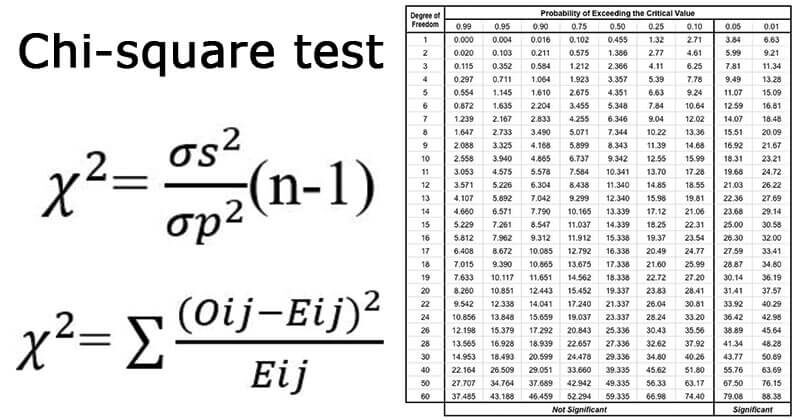

Notice that Table 8 contains critical values for the Kruskal Wallis test for tests comparing 3, 4 or 5 groups with small sample sizes. If there are 3 or more comparison groups and 5 or more observations in each of the comparison groups, it can be shown that the test statistic H approximates a chi-square distribution with df=k-1 [4]. Thus, in a Kruskal Wallis test with 3 or more comparison groups and 5 or more observations in each group, the critical value for the test can be found in the table of Critical Values of the χ 2 Distribution below.

Critical Values of the χ 2 Distribution

The following example illustrates this situation.

A personal trainer is interested in comparing the anaerobic thresholds of elite athletes. Anaerobic threshold is defined as the point at which the muscles cannot get more oxygen to sustain activity or the upper limit of aerobic exercise. It is a measure also related to maximum heart rate. The following data are anaerobic thresholds for distance runners, distance cyclists, distance swimmers and cross-country skiers.

Is there a difference in anaerobic thresholds among the different groups of elite athletes?

H 0 : The four population medians are equal versus

H 1 : The four population medians are not all equal α=0.05

The test statistic for the Kruskal Wallis test is denoted H and is defined as follows:

$$H = \frac{12}{N(N+1)} \sum_{j=1}^{k} \frac{R_j^2}{n_j} - 3(N+1)$$

where k=the number of comparison groups, N= the total sample size, n j is the sample size in the j th group and R j is the sum of the ranks in the j th group.

Because there are 4 comparison groups and 5 observations in each of the comparison groups, we find the critical value in the table of critical values for the chi-square distribution for df=k-1=4-1=3 and α=0.05. The critical value is 7.81, and the decision rule is to reject H 0 if H ≥ 7.81.

To conduct the test we assign ranks using the procedures outlined above. The first step in assigning ranks is to order the data from smallest to largest. This is done on the combined or total sample (i.e., pooling the data from the four comparison groups (n=20)), and assigning ranks from 1 to 20, as follows. We also need to keep track of the group assignments in the total sample. The table below shows the ordered data.

We now assign the ranks to the ordered values and sum the ranks in each group.

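Recall that the sum of the ranks will always equal n(n+1)/2. As a check on our assignment of ranks, we have n(n+1)/2 = 20(21)/2=210 which is equal to 46+62+24+78 = 210. In this example,

$$H = \frac{12}{20(21)} \left( \frac{46^2}{5} + \frac{62^2}{5} + \frac{24^2}{5} + \frac{78^2}{5} \right) - 3(21) = 9.11$$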

Reject H 0 because 9.11 > 7.81. We have statistically significant evidence at α =0.05, to show that there is a difference in median anaerobic thresholds among the four different groups of elite athletes.
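When the chi-square approximation applies, an approximate p-value can accompany the critical-value decision. The sketch below relies on a closed form for the upper tail of the chi-square distribution that holds only for df=3, the case in this example; other degrees of freedom would call for a statistical package. The function name is illustrative.

```python
from math import erfc, exp, pi, sqrt

def chi_square_upper_tail_df3(x):
    """P(X >= x) for a chi-square variable with exactly 3 degrees of freedom."""
    return erfc(sqrt(x / 2)) + sqrt(2 * x / pi) * exp(-x / 2)

p_value = chi_square_upper_tail_df3(9.11)
print(f"{p_value:.3f}")  # roughly 0.028
```

The approximate p-value is below 0.05, in agreement with rejecting H 0 at the 5% level. Evaluating the same function at the critical value 7.81 returns a tail area of about 0.05.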

Notice that in this example, the anaerobic thresholds of the distance runners, cyclists and cross-country skiers are comparable (looking only at the raw data). The distance swimmers appear to be the athletes that differ from the others in terms of anaerobic thresholds. Recall, similar to analysis of variance tests, we reject the null hypothesis in favor of the alternative hypothesis if any two of the medians are not equal.

This module presents hypothesis testing techniques for situations with small sample sizes and outcomes that are ordinal, ranked or continuous and cannot be assumed to be normally distributed. Nonparametric tests are based on ranks which are assigned to the ordered data. The tests involve the same five steps as parametric tests, specifying the null and alternative or research hypothesis, selecting and computing an appropriate test statistic, setting up a decision rule and drawing a conclusion. The tests are summarized below.

Mann Whitney U Test

Use: To compare a continuous outcome in two independent samples.

Null Hypothesis: H 0 : Two populations are equal

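Test Statistic: The test statistic is U, the smaller of

$$U_1 = n_1 n_2 + \frac{n_1(n_1+1)}{2} - R_1 \quad \text{and} \quad U_2 = n_1 n_2 + \frac{n_2(n_2+1)}{2} - R_2$$

where R 1 and R 2 are the sums of the ranks in groups 1 and 2, respectively.

Decision Rule: Reject H 0 if U ≤ critical value from table

Sign Test

Use: To compare a continuous outcome in two matched or paired samples.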

Null Hypothesis: H 0 : Median difference is zero

Test Statistic: The test statistic is the smaller of the number of positive or negative signs.

Decision Rule: Reject H 0 if the smaller of the number of positive or negative signs ≤ critical value from table.

Wilcoxon Signed Rank Test

Use: To compare a continuous outcome in two matched or paired samples.

Null Hypothesis: H 0 : Median difference is zero

Test Statistic: The test statistic is W, defined as the smaller of W+ and W- which are the sums of the positive and negative ranks of the difference scores, respectively.

Decision Rule: Reject H 0 if W ≤ critical value from table.

Kruskal Wallis Test

Use: To compare a continuous outcome in more than two independent samples.

Null Hypothesis: H 0 : k population medians are equal

Test Statistic: The test statistic is H,

$$H = \frac{12}{N(N+1)} \sum_{j=1}^{k} \frac{R_j^2}{n_j} - 3(N+1)$$

Decision Rule: Reject H 0 if H ≥ critical value

References

1. D'Agostino RB and Stephens MA. Goodness of Fit Techniques.

2. Apgar, Virginia (1953). "A proposal for a new method of evaluation of the newborn infant". Curr. Res. Anesth. Analg. 32 (4): 260-267.

3. Conover WJ. Practical Nonparametric Statistics, 2nd edition, New York: John Wiley and Sons.

4. Siegel and Castellan. (1988). "Nonparametric Statistics for the Behavioral Sciences," 2nd edition, New York: McGraw-Hill.

Non-Parametric Statistics: Types, Tests, and Examples

- Pragya Soni

- May 12, 2022

Statistics, an essential element of data management and predictive analysis, is classified into two types: parametric and non-parametric.

Parametric tests rest on assumptions about the population or data source, whereas non-parametric tests make few or no such assumptions. This post takes a detailed look at non-parametric statistics.

What is the Meaning of Non-Parametric Statistics?

Unlike parametric statistics, non-parametric statistics is a branch of statistics that is not based solely on parametrized families of probability distributions. Non-parametric methods are either distribution free or assume a specified distribution whose parameters are left unspecified.

Non-parametric statistics are defined by non-parametric tests: tests that do not require assumptions about the population from which the sample is drawn. For this reason, non-parametric tests are also known as distribution free tests, as they don’t rely on the data belonging to any particular parametric family of probability distributions.

In other terms, non-parametric statistics is a statistical method in which the data are not required to fit a normal distribution. Non-parametric statistics often use ordinal data, which rely not on the numbers themselves but on a ranking or order. The term covers statistical tests, inference, statistical models, and descriptive statistics.

Non-parametric statistics is thus defined as a statistical method where data doesn’t come from a prescribed model that is determined by a small number of parameters. In this respect it differs from approaches such as the normal distribution model, factorial design, and regression modeling.

Unlike parametric models, non-parametric methods are quite easy to use, but they do not offer the same accuracy as other statistical models. They are therefore generally preferred for studies where a change in the input values has little or no effect on the output: because many of these methods work on ranks, the results are likely to stay the same even if the numerical data change, as long as their order is preserved.

How does Non-Parametric Statistics Work?

Parametric statistics relies on parameters such as the mean, standard deviation, and variance. It uses the observed data to estimate the parameters of the distribution. Data are often assumed to come from a normal distribution with unknown parameters.

Non-parametric statistics, by contrast, does not assume that the data come from a normal distribution or from any other specified distribution. Instead, the shape of the distribution is estimated from the data themselves, which is useful when the actual data-generating process is far from normal.

Types of Non-Parametric Statistics

Non-parametric statistics fall into two major categories. Here is a brief introduction to both:

1. Descriptive Statistics

Descriptive statistics is a type of non-parametric statistics. It summarizes an entire population or a sample of a population, and is broken down into measures of central tendency and measures of variability.

2. Statistical Inference

Statistical inference is the process of drawing conclusions about a population from statistics calculated on a sample drawn from that population.

Some Examples of Non-Parametric Tests

In recent years, non-parametric statistics have gained appreciation due to their ease of use. They are also applicable to a wide variety of data, regardless of the mean, sample size, or other variation. Because non-parametric statistics make fewer assumptions, they have a wider scope than parametric statistics.

Here are some common examples of non-parametric statistics:

Consider the case of a financial analyst who wants to estimate the value at risk (VaR) of an investment. Rather than assuming that earnings follow a normal distribution, the analyst uses a histogram to estimate the distribution non-parametrically.

Consider another case of a researcher who wants to find out whether there is a relation between the sleep cycle and health in human beings. Using parametric statistics here would make the process quite complicated. So, instead of using a method that assumes a normal distribution for illness frequency, the researcher may opt for a non-parametric method such as quantile regression analysis.

Similarly, a health researcher who wants to estimate the number of babies born underweight in India may also employ non-parametric methods for testing the data.

A marketer interested in the market growth or success of a company may also employ a non-parametric approach.

A researcher testing the market to check consumer preferences for a product may likewise employ a non-parametric test, because ordinal responses such as strongly agree, slightly agree, agree, and disagree make parametric methods hard to apply.

Any other science or social science research that includes nominal or ordinal variables, such as age group, gender, marital status, employment, or educational qualification, also calls for non-parametric statistics. These methods play an important role when the source data lack a clear numerical interpretation.

What are Non-Parametric Tests?

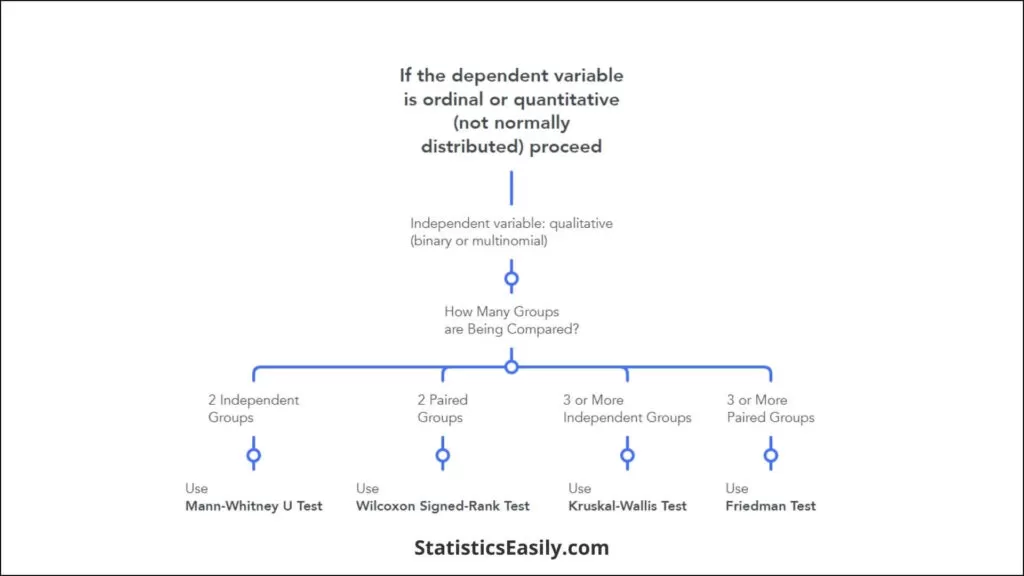

Types of Non-Parametric Tests

Here is a list of non-parametric tests that are commonly used for statistical testing:

Wilcoxon Signed Rank Test

The Wilcoxon signed rank test is a non-parametric test that works on two paired groups. (It should not be confused with the Wilcoxon rank sum test, which compares two independent groups and is another name for the Mann-Whitney U test.) The main focus of this test is the comparison between two paired groups: it calculates the difference within each pair and analyses these differences.

The Wilcoxon test is classified as a statistical hypothesis test and is used to compare two related samples, matched samples, or repeated measurements on a single sample to assess whether their population mean ranks differ.

Mann-Whitney U Test

The Mann-Whitney U test is also known as the Mann-Whitney-Wilcoxon test, the Wilcoxon rank sum test, and the Wilcoxon-Mann-Whitney test. It is a non-parametric test of the null hypothesis that a randomly selected value from one population is equally likely to be greater than or less than a randomly selected value from the other population.

The Mann-Whitney test is usually used to compare two independent groups when the dependent variable is either ordinal or continuous but not normally distributed. Four requirements must be met for a Mann-Whitney test. The first three relate to the study design and the fourth reflects the nature of the data.
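The null hypothesis above suggests a direct way to compute U: count, over all pairs, how often a value from one group exceeds a value from the other, scoring ties as one half. The sketch below follows that pair-counting view; the function name and the two samples are hypothetical.

```python
def mann_whitney_u(sample_a, sample_b):
    """Return (U, U_a, U_b), where U_a counts pairs in which a value from A exceeds one from B."""
    def wins(xs, ys):
        # 1 for x > y, 0.5 for a tie, 0 otherwise, summed over all pairs.
        return sum(1.0 if x > y else 0.5 if x == y else 0.0 for x in xs for y in ys)

    u_a = wins(sample_a, sample_b)
    u_b = wins(sample_b, sample_a)
    return min(u_a, u_b), u_a, u_b

# Hypothetical outcomes for two independent groups of five subjects each.
group_a = [7, 5, 6, 4, 12]
group_b = [3, 6, 4, 2, 1]
u, u_a, u_b = mann_whitney_u(group_a, group_b)
print(u, u_a, u_b)  # 3.0 22.0 3.0
```

The two counts always add up to the number of pairs (here 5 × 5 = 25), and the smaller of the two is the value compared with the critical value.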

Kruskal Wallis Test

Sometimes referred to as a one way ANOVA on ranks, the Kruskal Wallis H test is a nonparametric test that is used to determine whether there are statistically significant differences between two or more groups of an independent variable. ANOVA stands for analysis of variance.

The test is named after the statisticians who developed it, William Kruskal and W. Allen Wallis. Its main purpose is to check whether the samples are drawn from the same population.

Friedman Test

The Friedman test is similar to the Kruskal Wallis test. It is a non-parametric alternative to the ANOVA test; the difference is that the Friedman test works on a repeated measures basis. It is used for detecting differences between groups when the dependent variable is measured on an ordinal scale.

The Friedman test is further divided into two parts, Friedman 1 test and Friedman 2 test. It was developed by Milton Friedman and is named after him. When applied to complete block designs, it is a special case of the Durbin test.

Distribution Free Tests

Distribution free tests are defined as the mathematical procedures. These tests are widely used for testing statistical hypotheses. It makes no assumption about the probability distribution of the variables. An important list of distribution free tests is as follows:

- Anderson-Darling test: It is done to check if the sample is drawn from a given distribution or not.

- Statistical bootstrap methods: It is a basic non-statistical test used to estimate the accuracy and sampling distribution of a statistic.

- Cochran’s Q: Cochran’s Q is used to check constant treatments in block designs with 0/1 outcomes.

- Cohen’s kappa: Cohen kappa is used to measure the inter-rater agreement for categorical items.

- Kaplan-Meier estimator: It estimates the survival function from lifetime data, taking censoring into account.

- Friedman two-way analysis of variance by ranks: Also known as a ranking test, it tests whether the treatments in a randomized block design have identical effects.

- Kendall’s tau: It measures the statistical dependence between two variables.

- Kolmogorov-Smirnov test: It tests whether a sample is drawn from a given distribution, or whether two samples are drawn from the same distribution.

- Kendall’s W: It is a measure of inter-rater agreement.

- Kuiper’s test: It tests whether a sample is drawn from a given distribution and is sensitive to cyclic variations.

- Log Rank test: This test compares the survival distributions of two right-skewed, censored samples.

- McNemar’s test: It tests whether the row and column marginal frequencies are equal in a 2 × 2 contingency table of paired data.

- Median test: As the name suggests, it checks whether two samples are drawn from populations with equal medians.

- Pitman’s permutation test: It is a statistical significance test that yields exact p-values by examining all possible rearrangements of labels.

- Rank products: Rank products are used to detect differentially expressed genes in replicated microarray experiments.

- Siegel Tukey tests: This test is used for differences in scale between two groups.

- Sign test: It tests whether matched-pair samples are drawn from distributions with equal medians.

- Spearman’s rank: It is used to measure the statistical dependence between two variables using a monotonic function.

- Squared ranks test: It tests the equality of variances in two or more samples.

- Wald-Wolfowitz runs test: It checks whether the elements of a sequence are mutually independent (random).
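Several entries in the list above share one idea: derive the p-value from the data themselves rather than from an assumed distribution. As one example, the permutation approach can be sketched like this. The function name, the choice of the difference in means as the test statistic, and the data are all assumptions made for illustration.

```python
from itertools import combinations


def permutation_test_mean_diff(group_a, group_b):
    """Exact two-sided permutation p-value for the difference in group means.

    Every way of relabeling the pooled observations into groups of the
    original sizes is enumerated, so this is only practical for small samples.
    """
    pooled = list(group_a) + list(group_b)
    n_a = len(group_a)
    observed = abs(sum(group_a) / n_a - sum(group_b) / len(group_b))

    at_least_as_extreme = 0
    total = 0
    for chosen in combinations(range(len(pooled)), n_a):
        chosen_set = set(chosen)
        a = [pooled[i] for i in chosen]
        b = [pooled[i] for i in range(len(pooled)) if i not in chosen_set]
        diff = abs(sum(a) / len(a) - sum(b) / len(b))
        if diff >= observed - 1e-12:  # small tolerance for float rounding
            at_least_as_extreme += 1
        total += 1
    return at_least_as_extreme / total


# Hypothetical data: two tiny samples, 20 possible relabelings.
p = permutation_test_mean_diff([1, 2, 3], [4, 5, 6])
print(p)
```

With samples this small the p-value can never fall below 2/20, which shows why exact permutation tests need a reasonable number of observations to be useful.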

Advantages and Disadvantages of Non-Parametric Tests

The benefits of non-parametric tests are as follows:

- They are easy to understand and apply.

- They involve short calculations.

- No assumption about the distribution of the population is required.

- They can be applied to many kinds of data, including ordinal and ranked data.

The limitations of non-parametric tests are:

- They are generally less efficient than parametric tests when the parametric assumptions hold.

- Sometimes the result of a non-parametric test is not sufficient to provide an accurate answer.

Applications of Non-Parametric Tests

Non-parametric tests are particularly helpful in the following cases:

- When parametric tests do not give sufficient results.

- When the hypothesis being tested is not based on the sample.

- When a quicker analysis of the sample is needed.

- When the data are unscaled.

Because current research often relies on fluctuating inputs, non-parametric statistics and tests have become essential for in-depth research and data analysis.

Hypothesis Testing | A Step-by-Step Guide with Easy Examples

Published on November 8, 2019 by Rebecca Bevans . Revised on June 22, 2023.

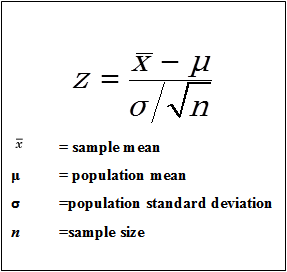

Hypothesis testing is a formal procedure for investigating our ideas about the world using statistics. It is most often used by scientists to test specific predictions, called hypotheses, that arise from theories.

There are 5 main steps in hypothesis testing:

- State your research hypothesis as a null hypothesis (H0) and an alternate hypothesis (Ha or H1).

- Collect data in a way designed to test the hypothesis.

- Perform an appropriate statistical test.

- Decide whether to reject or fail to reject your null hypothesis.

- Present the findings in your results and discussion section.

Though the specific details might vary, the procedure you will use when testing a hypothesis will always follow some version of these steps.

Table of contents

- Step 1: State your null and alternate hypothesis

- Step 2: Collect data

- Step 3: Perform a statistical test

- Step 4: Decide whether to reject or fail to reject your null hypothesis

- Step 5: Present your findings

- Frequently asked questions about hypothesis testing

After developing your initial research hypothesis (the prediction that you want to investigate), it is important to restate it as a null (H0) and alternate (Ha) hypothesis so that you can test it mathematically.

The alternate hypothesis is usually your initial hypothesis that predicts a relationship between variables. The null hypothesis is a prediction of no relationship between the variables you are interested in.

- H0: Men are, on average, not taller than women.

- Ha: Men are, on average, taller than women.

For a statistical test to be valid, it is important to perform sampling and collect data in a way that is designed to test your hypothesis. If your data are not representative, then you cannot make statistical inferences about the population you are interested in.

There are a variety of statistical tests available, and many of them are based on comparing within-group variance (how spread out the data are within a category) with between-group variance (how different the categories are from one another).

If the between-group variance is large enough that there is little or no overlap between groups, then your statistical test will reflect that by showing a low p-value. This means it is unlikely that differences this large would come about by chance alone if the null hypothesis were true.

Alternatively, if there is high within-group variance and low between-group variance, then your statistical test will reflect that with a high p-value. This means that any difference you measure between groups could plausibly be due to chance.
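One common way to put the two kinds of variance on a single scale is the F ratio from one-way ANOVA, which divides the between-group mean square by the within-group mean square. The sketch below assumes that statistic and uses made-up numbers; the function name is hypothetical and the step from F to a p-value is left to a statistics package.

```python
def f_ratio(*groups):
    """Between-group mean square divided by within-group mean square."""
    all_values = [x for group in groups for x in group]
    n_total = len(all_values)
    k = len(groups)
    grand_mean = sum(all_values) / n_total

    # How far each group mean sits from the grand mean, weighted by group size.
    ss_between = sum(
        len(group) * (sum(group) / len(group) - grand_mean) ** 2
        for group in groups
    )
    # How far each observation sits from its own group mean.
    ss_within = sum(
        (x - sum(group) / len(group)) ** 2
        for group in groups
        for x in group
    )
    return (ss_between / (k - 1)) / (ss_within / (n_total - k))


# Hypothetical data: well separated groups versus heavily overlapping groups.
separated = f_ratio([1, 2, 3], [4, 5, 6], [7, 8, 9])
overlapping = f_ratio([1, 5, 9], [2, 5, 8], [3, 5, 7])
print(separated, overlapping)
```

A large ratio corresponds to the "little or no overlap" case described above, while a ratio near zero corresponds to groups whose means are almost indistinguishable relative to their spread.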

Your choice of statistical test will be based on the type of variables and the level of measurement of your collected data.

In the height example, a suitable statistical test gives you:

- an estimate of the difference in average height between the two groups.

- a p-value showing how likely you are to see this difference if the null hypothesis of no difference is true.

Based on the outcome of your statistical test, you will have to decide whether to reject or fail to reject your null hypothesis.

In most cases you will use the p-value generated by your statistical test to guide your decision. And in most cases, your predetermined level of significance for rejecting the null hypothesis will be 0.05 – that is, when there is a less than 5% chance that you would see these results if the null hypothesis were true.

In some cases, researchers choose a more conservative level of significance, such as 0.01 (1%). This reduces the risk of incorrectly rejecting the null hypothesis (Type I error).
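The decision rule itself is simple enough to write down directly. The following sketch uses a hypothetical helper name and a made-up p-value to show how the same result can lead to different decisions at the 0.05 and 0.01 levels.

```python
def decide(p_value, alpha=0.05):
    """Apply the decision rule: reject H0 only when p is below the chosen level."""
    if not 0 <= p_value <= 1:
        raise ValueError("a p-value must lie between 0 and 1")
    return "reject H0" if p_value < alpha else "fail to reject H0"


# Hypothetical p-value from some statistical test.
p = 0.03
print(decide(p))              # default significance level of 0.05
print(decide(p, alpha=0.01))  # more conservative level
```

The significance level should be fixed before looking at the data; choosing it afterwards to get the desired decision defeats the purpose of the test.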

The results of hypothesis testing will be presented in the results and discussion sections of your research paper, dissertation or thesis.

In the results section you should give a brief summary of the data and a summary of the results of your statistical test (for example, the estimated difference between group means and associated p-value). In the discussion, you can discuss whether your initial hypothesis was supported by your results or not.

In the formal language of hypothesis testing, we talk about rejecting or failing to reject the null hypothesis. You will probably be asked to do this in your statistics assignments.

However, when presenting research results in academic papers we rarely talk this way. Instead, we go back to our alternate hypothesis (in this case, the hypothesis that men are on average taller than women) and state whether the result of our test did or did not support the alternate hypothesis.

If your null hypothesis was rejected, this result is interpreted as “supported the alternate hypothesis.”

These are superficial differences; you can see that they mean the same thing.

You might notice that we don’t say that we reject or fail to reject the alternate hypothesis. This is because hypothesis testing is not designed to prove or disprove anything. It is only designed to test whether a pattern we measure could have arisen spuriously, or by chance.

If we reject the null hypothesis based on our research (i.e., we find that it is unlikely that the pattern arose by chance), then we can say our test lends support to our hypothesis. But if the pattern does not pass our decision rule, meaning that it could have arisen by chance, then we say the test is inconsistent with our hypothesis.

Hypothesis testing is a formal procedure for investigating our ideas about the world using statistics. It is used by scientists to test specific predictions, called hypotheses, by calculating how likely it is that a pattern or relationship between variables could have arisen by chance.

A hypothesis states your predictions about what your research will find. It is a tentative answer to your research question that has not yet been tested. For some research projects, you might have to write several hypotheses that address different aspects of your research question.

A hypothesis is not just a guess — it should be based on existing theories and knowledge. It also has to be testable, which means you can support or refute it through scientific research methods (such as experiments, observations and statistical analysis of data).

Null and alternative hypotheses are used in statistical hypothesis testing. The null hypothesis of a test always predicts no effect or no relationship between variables, while the alternative hypothesis states your research prediction of an effect or relationship.

Bevans, R. (2023, June 22). Hypothesis Testing | A Step-by-Step Guide with Easy Examples. Scribbr. Retrieved April 10, 2024, from https://www.scribbr.com/statistics/hypothesis-testing/

Mastering the Art of Hypothesis Testing: Parametric and Non-parametric Approaches

Welcome to the fascinating world of hypothesis testing. Whether you're a student diving into statistics for the first time or a curious researcher looking to sharpen your skills, this is the perfect place to start. In this blog post, we'll demystify the art of hypothesis testing and equip you with the knowledge to master it like a pro. So, let's get started, shall we?

Welcome to the World of Hypothesis Testing! What's the Buzz?

Imagine this: You have a burning question in your mind, like, "Does drinking coffee really improve concentration?" Well, hypothesis testing is the tool that helps you find the answer. It's a powerful method used in statistical analysis to make decisions and draw conclusions based on evidence.

Embracing the Power of Hypothesis Testing

Hypothesis testing allows you to explore the unknown, challenge assumptions, and uncover hidden truths. It's like embarking on a thrilling investigation, but with data as your detective. By formulating a hypothesis, collecting data, and analyzing it, you can discover meaningful patterns, draw insightful conclusions, and make confident claims about your findings.

Why Hypothesis Testing Matters