Content Analysis | A Step-by-Step Guide with Examples

Published on 5 May 2022 by Amy Luo. Revised on 5 December 2022.

Content analysis is a research method used to identify patterns in recorded communication. To conduct content analysis, you systematically collect data from a set of texts, which can be written, oral, or visual:

- Books, newspapers, and magazines

- Speeches and interviews

- Web content and social media posts

- Photographs and films

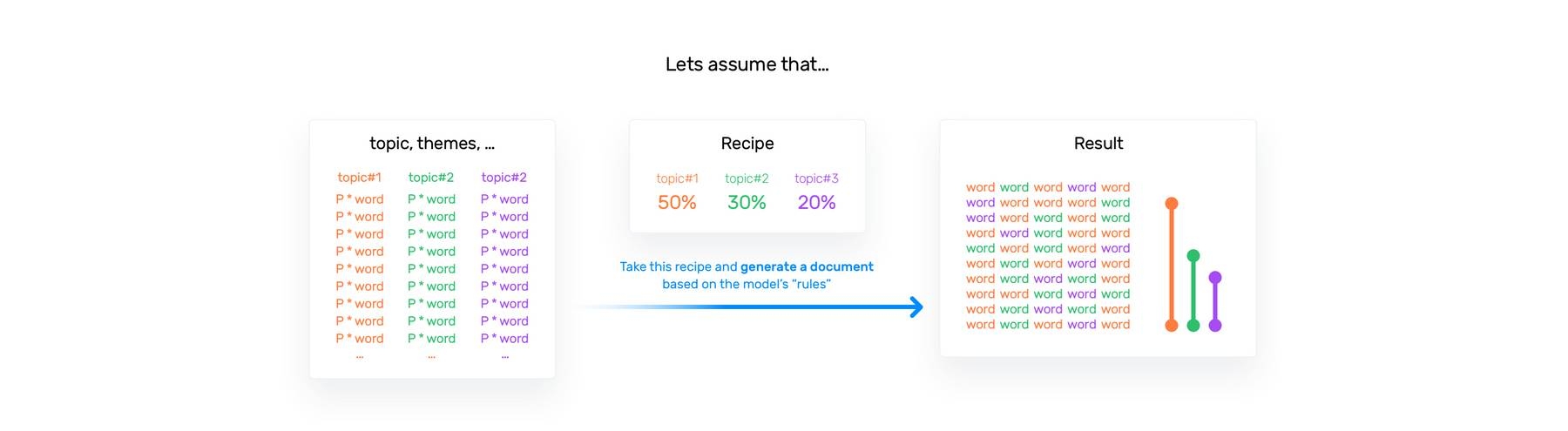

Content analysis can be both quantitative (focused on counting and measuring) and qualitative (focused on interpreting and understanding). In both types, you categorise or ‘code’ words, themes, and concepts within the texts and then analyse the results.

What is content analysis used for?

Researchers use content analysis to find out about the purposes, messages, and effects of communication content. They can also make inferences about the producers and audience of the texts they analyse.

Content analysis can be used to quantify the occurrence of certain words, phrases, subjects, or concepts in a set of historical or contemporary texts.

In addition, content analysis can be used to make qualitative inferences by analysing the meaning and semantic relationship of words and concepts.

Because content analysis can be applied to a broad range of texts, it is used in a variety of fields, including marketing, media studies, anthropology, cognitive science, psychology, and many social science disciplines. It has various possible goals:

- Finding correlations and patterns in how concepts are communicated

- Understanding the intentions of an individual, group, or institution

- Identifying propaganda and bias in communication

- Revealing differences in communication in different contexts

- Analysing the consequences of communication content, such as the flow of information or audience responses

Advantages of content analysis

- Unobtrusive data collection

You can analyse communication and social interaction without the direct involvement of participants, so your presence as a researcher doesn’t influence the results.

- Transparent and replicable

When done well, content analysis follows a systematic procedure that can easily be replicated by other researchers, yielding results with high reliability.

- Highly flexible

You can conduct content analysis at any time, in any location, and at low cost. All you need is access to the appropriate sources.

Disadvantages of content analysis

- Reductive

Focusing on words or phrases in isolation can sometimes be overly reductive, disregarding context, nuance, and ambiguous meanings.

- Subjective

Content analysis almost always involves some level of subjective interpretation, which can affect the reliability and validity of the results and conclusions.

- Time intensive

Manually coding large volumes of text is extremely time-consuming, and it can be difficult to automate effectively.

How to conduct content analysis

If you want to use content analysis in your research, you need to start with a clear, direct research question.

Next, you follow these five steps.

Step 1: Select the content you will analyse

Based on your research question, choose the texts that you will analyse. You need to decide:

- The medium (e.g., newspapers, speeches, or websites) and genre (e.g., opinion pieces, political campaign speeches, or marketing copy)

- The criteria for inclusion (e.g., newspaper articles that mention a particular event, speeches by a certain politician, or websites selling a specific type of product)

- The parameters in terms of date range, location, etc.

If there are only a small number of texts that meet your criteria, you might analyse all of them. If there is a large volume of texts, you can select a sample.
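As a rough illustration of the sampling decision above, here is a minimal Python sketch. It assumes your texts are already listed somewhere (the `articles` identifiers are invented for the example) and that a simple random sample suits your research question; other sampling designs may be more appropriate for your study.

```python
import random


def draw_sample(texts, k, seed=0):
    """Return k texts chosen at random without replacement.

    A fixed seed means the same sample can be re-drawn later,
    which helps other researchers check your selection.
    """
    texts = list(texts)
    if k >= len(texts):
        # Only a small number of texts: analyse all of them.
        return texts
    return random.Random(seed).sample(texts, k)


# Hypothetical corpus: 500 article identifiers that met the inclusion criteria.
articles = [f"article_{i:03d}" for i in range(500)]
sample = draw_sample(articles, k=50, seed=42)
print(len(sample))
```

Recording the seed and the sample size alongside your inclusion criteria keeps the selection transparent.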

Step 2: Define the units and categories of analysis

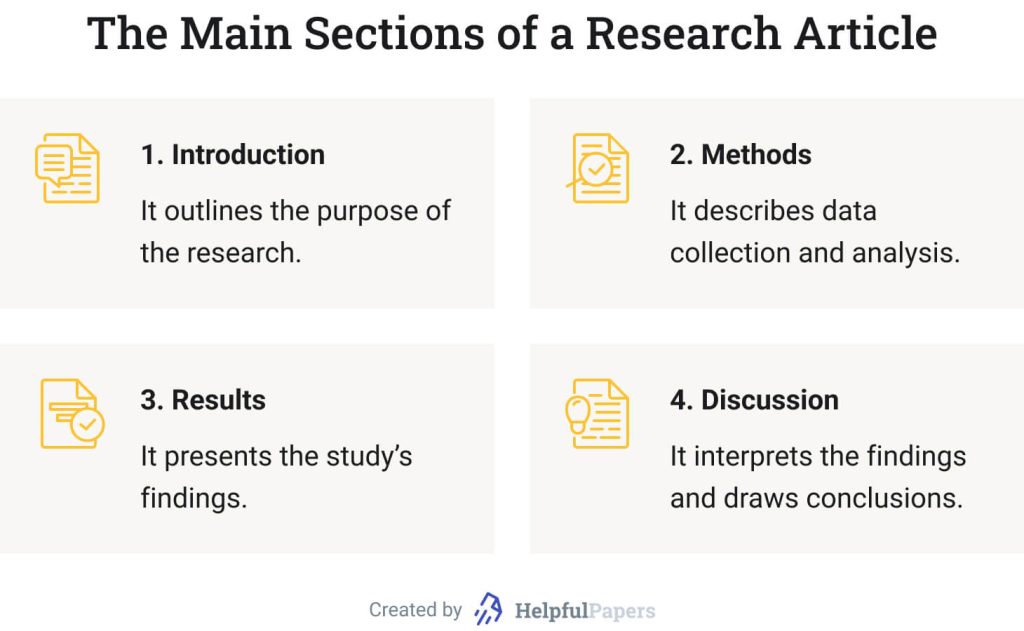

Next, you need to determine the level at which you will analyse your chosen texts. This means defining:

- The unit(s) of meaning that will be coded. For example, are you going to record the frequency of individual words and phrases, the characteristics of people who produced or appear in the texts, the presence and positioning of images, or the treatment of themes and concepts?

- The set of categories that you will use for coding. Categories can be objective characteristics (e.g., aged 30–40, lawyer, parent) or more conceptual (e.g., trustworthy, corrupt, conservative, family-oriented).

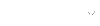

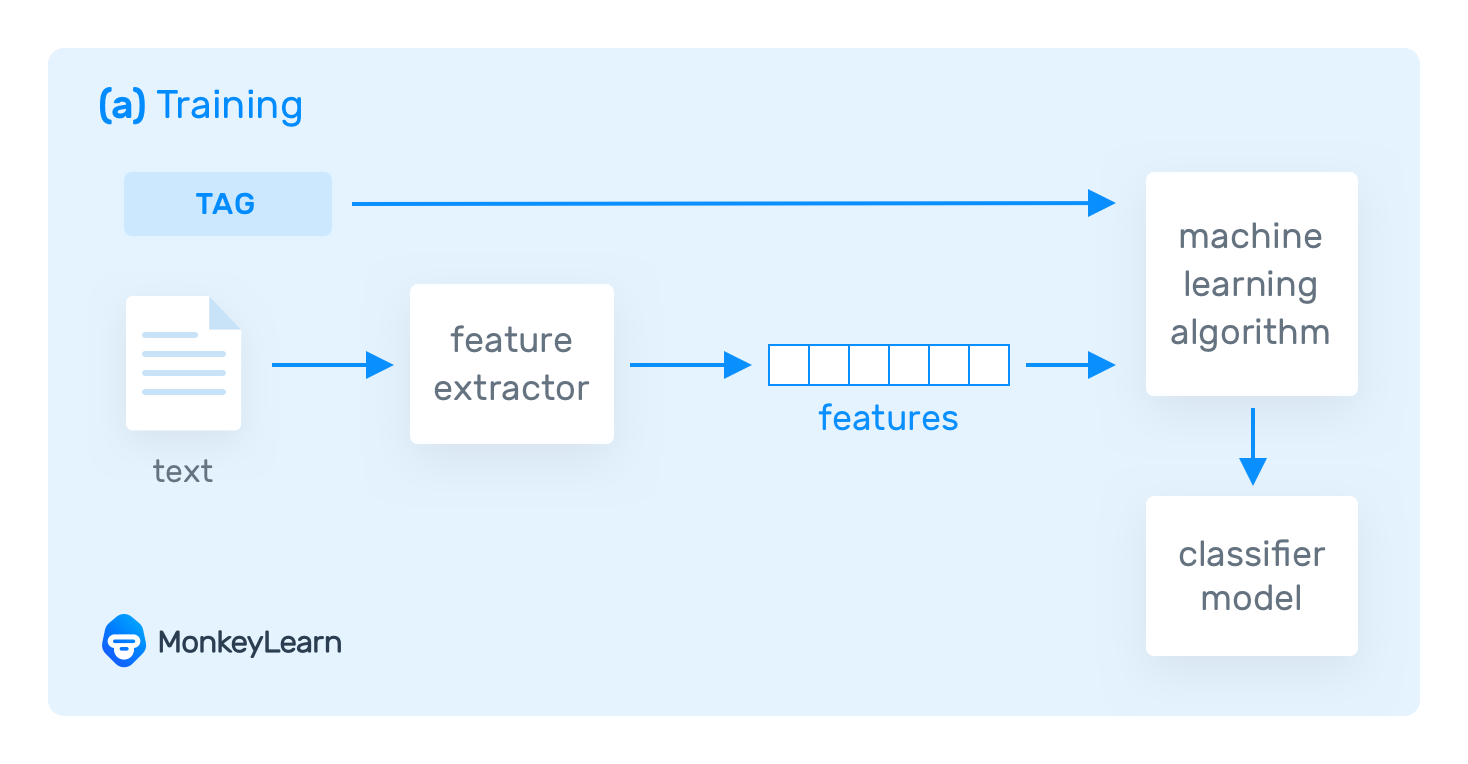

Step 3: Develop a set of rules for coding

Coding involves organising the units of meaning into the previously defined categories. Especially with more conceptual categories, it’s important to clearly define the rules for what will and won’t be included to ensure that all texts are coded consistently.

Coding rules are especially important if multiple researchers are involved, but even if you’re coding all of the text by yourself, recording the rules makes your method more transparent and reliable.
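One simple way to check whether two researchers are applying the rules consistently is to have both code the same units and compare their decisions. The sketch below computes plain percent agreement; the coder lists and category names are invented for illustration. Percent agreement does not correct for agreement that would occur by chance, so treat it as a first check rather than a full reliability analysis.

```python
def percent_agreement(codes_a, codes_b):
    """Share of units to which two coders assigned the same category."""
    if len(codes_a) != len(codes_b):
        raise ValueError("Both coders must code the same number of units.")
    if not codes_a:
        raise ValueError("At least one coded unit is required.")
    matches = sum(a == b for a, b in zip(codes_a, codes_b))
    return matches / len(codes_a)


# Hypothetical codes assigned to the same five text units by two coders.
coder_1 = ["trustworthy", "corrupt", "trustworthy", "neutral", "corrupt"]
coder_2 = ["trustworthy", "corrupt", "neutral", "neutral", "corrupt"]

agreement = percent_agreement(coder_1, coder_2)
print(f"{agreement:.0%}")  # 4 of 5 units match
```

Units where the coders disagree are a useful prompt for tightening the wording of the rules.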

Step 4: Code the text according to the rules

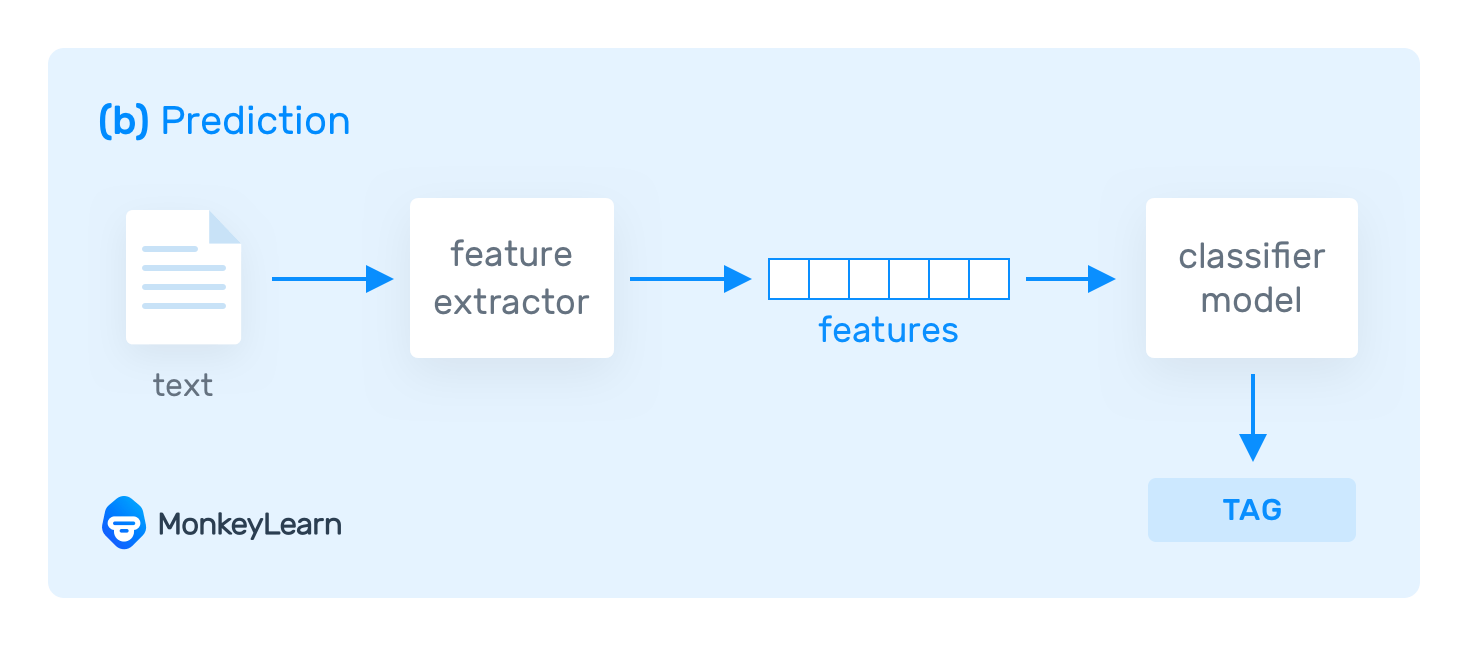

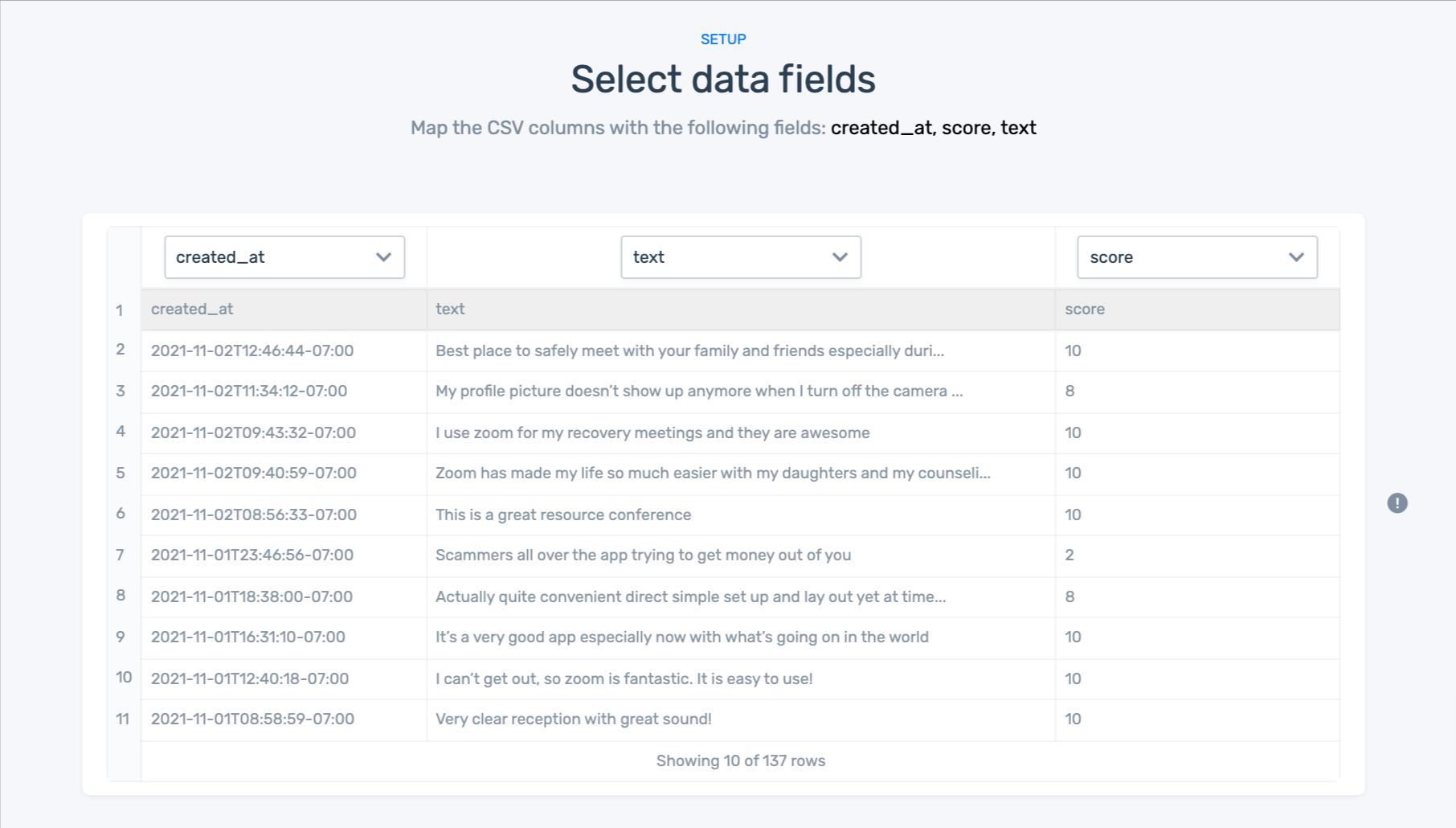

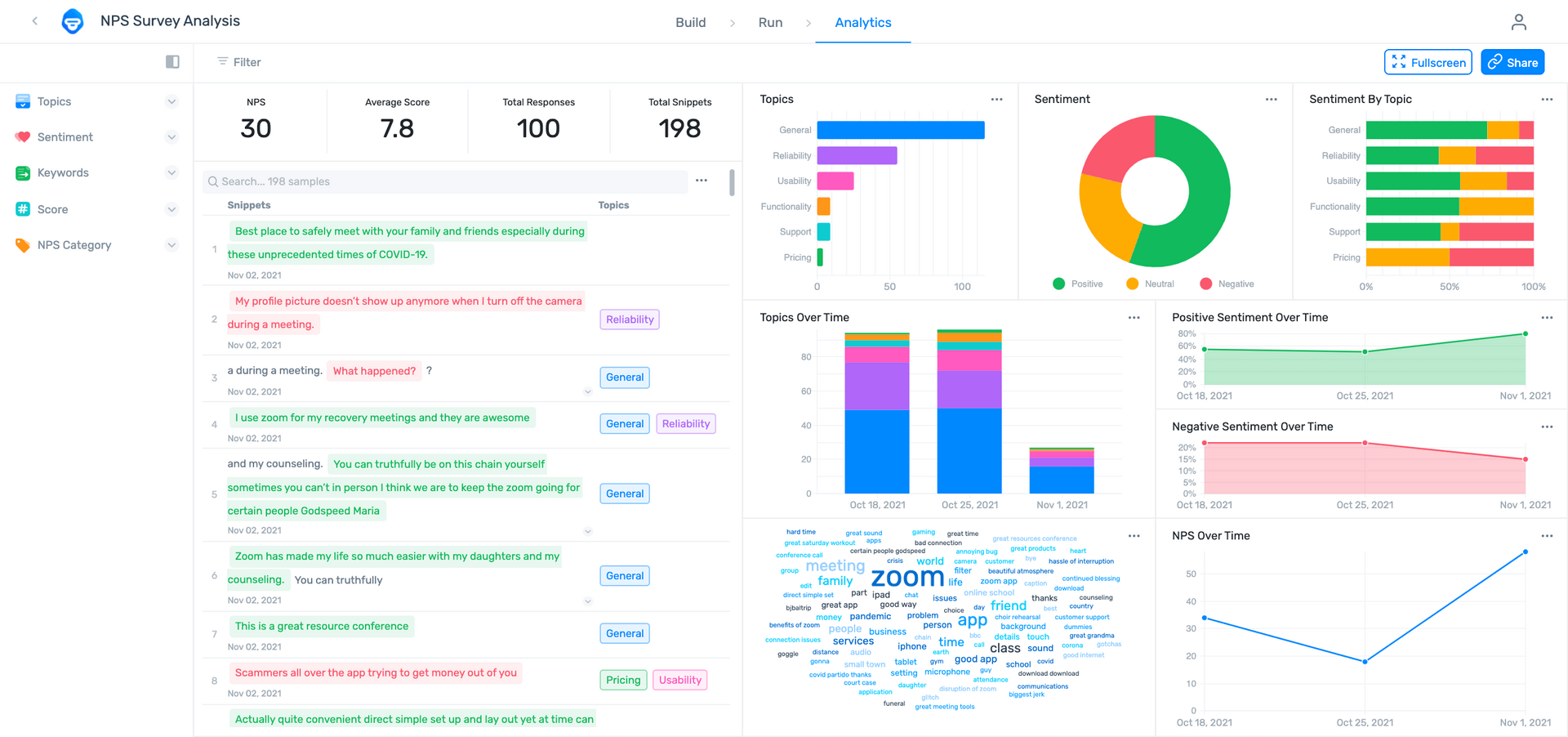

You go through each text and record all relevant data in the appropriate categories. This can be done manually or aided with computer programs, such as QSR NVivo, Atlas.ti, and Diction, which can help speed up the process of counting and categorising words and phrases.
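To give a sense of what computer-aided counting involves, here is a small Python sketch of dictionary-based coding. It is not how any of the programs named above work internally; the categories and word lists in `CODING_RULES` are hypothetical stand-ins for the rules you defined in step 3.

```python
import re
from collections import Counter

# Hypothetical coding rules: each category lists the words that count towards it.
CODING_RULES = {
    "trustworthy": {"honest", "reliable", "sincere"},
    "corrupt": {"bribe", "fraud", "scandal"},
}


def code_text(text, rules=CODING_RULES):
    """Count how many words in the text fall into each category."""
    tokens = re.findall(r"[a-z']+", text.lower())
    counts = Counter()
    for token in tokens:
        for category, words in rules.items():
            if token in words:
                counts[category] += 1
    return counts


speech = (
    "An honest, reliable mayor would never take a bribe. "
    "The fraud scandal says otherwise."
)
result = code_text(speech)
print(result["trustworthy"], result["corrupt"])  # 2 3
```

Exact word matching misses inflected forms such as "bribes" and ignores context such as negation, which is one reason manual review remains necessary.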

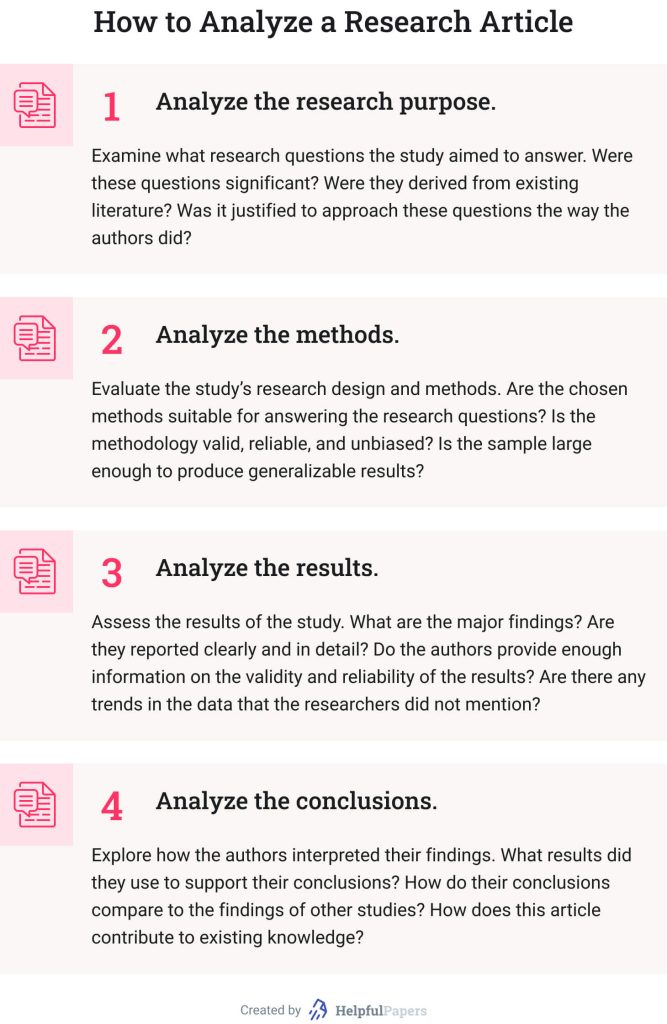

Step 5: Analyse the results and draw conclusions

Once coding is complete, the collected data is examined to find patterns and draw conclusions in response to your research question. You might use statistical analysis to find correlations or trends, discuss your interpretations of what the results mean, and make inferences about the creators, context, and audience of the texts.

Luo, A. (2022, December 05). Content Analysis | A Step-by-Step Guide with Examples. Scribbr. Retrieved 22 April 2024, from https://www.scribbr.co.uk/research-methods/content-analysis-explained/

Chapter 17. Content Analysis

Introduction

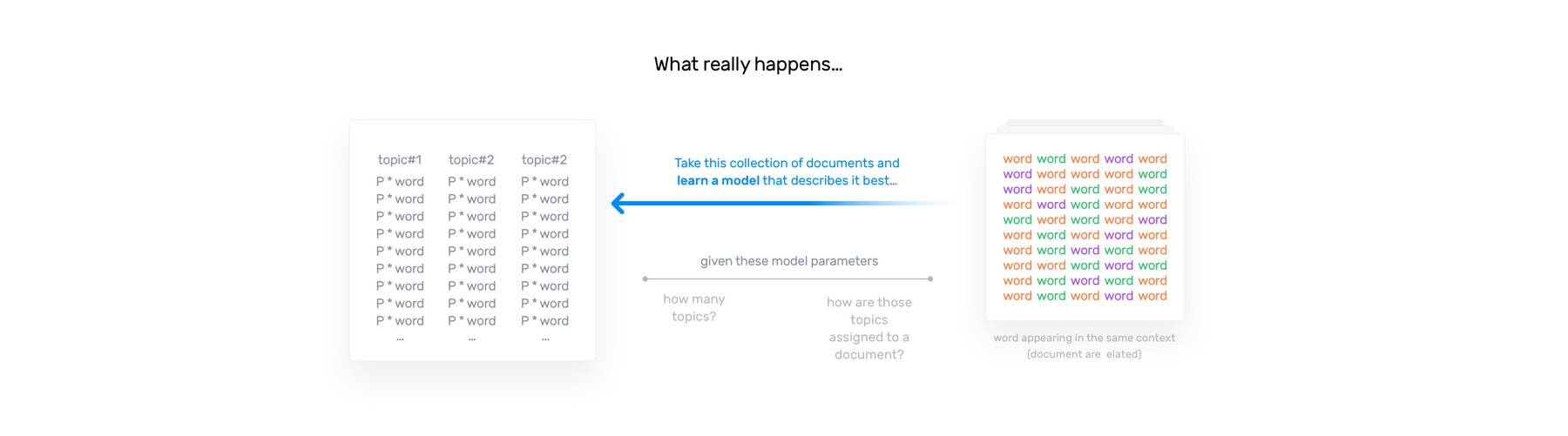

Content analysis is a term that is used to mean both a method of data collection and a method of data analysis. Archival and historical works can be the source of content analysis, but so too can the contemporary media coverage of a story, blogs, comment posts, films, cartoons, advertisements, brand packaging, and photographs posted on Instagram or Facebook. Really, almost anything can be the “content” to be analyzed. This is a qualitative research method because the focus is on the meanings and interpretations of that content rather than strictly numerical counts or variables-based causal modeling. [1] Qualitative content analysis (sometimes referred to as QCA) is particularly useful when attempting to define and understand prevalent stories or communication about a topic of interest—in other words, when we are less interested in what particular people (our defined sample) are doing or believing and more interested in what general narratives exist about a particular topic or issue. This chapter will explore different approaches to content analysis and provide helpful tips on how to collect data, how to turn that data into codes for analysis, and how to go about presenting what is found through analysis. It is also a nice segue between our data collection methods (e.g., interviewing, observation) chapters and chapters 18 and 19, whose focus is on coding, the primary means of data analysis for most qualitative data. In many ways, the methods of content analysis are quite similar to the method of coding.

Although the body of material (“content”) to be collected and analyzed can be nearly anything, most qualitative content analysis is applied to forms of human communication (e.g., media posts, news stories, campaign speeches, advertising jingles). The point of the analysis is to understand this communication, to systematically and rigorously explore its meanings, assumptions, themes, and patterns. Historical and archival sources may be the subject of content analysis, but there are other ways to analyze (“code”) this data when not overly concerned with the communicative aspect (see chapters 18 and 19). This is why we tend to consider content analysis its own method of data collection as well as a method of data analysis. Still, many of the techniques you learn in this chapter will be helpful to any “coding” scheme you develop for other kinds of qualitative data. Just remember that content analysis is a particular form with distinct aims and goals and traditions.

An Overview of the Content Analysis Process

The First Step: Selecting Content

Figure 17.1 displays possible content for content analysis. The first step in content analysis is making smart decisions about what content you want to analyze and clearly connecting this content to your research question or general focus of research. Why are you interested in the messages conveyed in this particular content? What will the identification of patterns here help you understand? Content analysis can be fun to do, but in order to make it research, you need to fit it into a research plan.

Figure 17.1. A Non-exhaustive List of "Content" for Content Analysis

To take one example, let us imagine you are interested in gender presentations in society and how presentations of gender have changed over time. There are various forms of content out there that might help you document changes. You could, for example, begin by creating a list of magazines that are coded as being for “women” (e.g., Women’s Daily Journal) and magazines that are coded as being for “men” (e.g., Men’s Health). You could then select a date range that is relevant to your research question (e.g., 1950s–1970s) and collect magazines from that era. You might create a “sample” by deciding to look at three issues for each year in the date range and making a systematic plan for what to look at in those issues (e.g., advertisements? Cartoons? Titles of articles? Whole articles?). You are not just going to look at some magazines willy-nilly. That would not be systematic enough to allow anyone to replicate or check your findings later on. Once you have a clear plan of what content is of interest to you and what you will be looking at, you can begin, creating a record of everything you are including as your content. This might mean a list of each advertisement you look at or each title of stories in those magazines along with its publication date. You may decide to have multiple kinds of “content” in your research plan. For each kind of content, you want a clear plan for collecting, sampling, and documenting.
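The "three issues for each year" plan can be written down explicitly so that anyone can see which issues were selected. The sketch below assumes a monthly magazine and picks the January, May, and September issues each year; those months, and the 1950–1979 range, are illustrative choices and not part of the example above.

```python
from itertools import product


def sampling_plan(start_year, end_year, months=(1, 5, 9)):
    """List the (year, month) issues to collect: the same months every year."""
    years = range(start_year, end_year + 1)
    return [(year, month) for year, month in product(years, months)]


# Assumed date range for the magazine study: 1950 through 1979.
plan = sampling_plan(1950, 1979)
print(len(plan))   # 90 issues: 30 years x 3 issues
print(plan[:3])    # [(1950, 1), (1950, 5), (1950, 9)]
```

The resulting list can double as the start of your record of everything included as content: add a column for what you examined in each issue.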

The Second Step: Collecting and Storing

Once you have a plan, you are ready to collect your data. This may entail downloading from the internet, creating a Word document or PDF of each article or picture, and storing these in a folder designated by the source and date (e.g., “Men’s Health advertisements, 1950s”). Sølvberg (2021), for example, collected posted job advertisements for three kinds of elite jobs (economic, cultural, professional) in Sweden. But collecting might also mean going out and taking photographs yourself, as in the case of graffiti, street signs, or even what people are wearing. Chaise LaDousa, an anthropologist and linguist, took photos of “house signs,” which are signs, often creative and sometimes offensive, hung by college students living in communal off-campus houses. These signs were a focal point of college culture, sending messages about the values of the students living in them. Some of the names will give you an idea: “Boot ’n Rally,” “The Plantation,” “Crib of the Rib.” The students might find these signs funny and benign, but LaDousa (2011) argued convincingly that they also reproduced racial and gender inequalities. The data here already existed (they were big signs on houses), but the researcher had to collect the data by taking photographs.
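If you store files digitally, a consistent folder scheme by source and date saves time later. Here is one possible layout sketched in Python; the folder names, the file name, and the use of a temporary directory as the project root are all assumptions made so the example can run anywhere.

```python
import tempfile
from pathlib import Path


def storage_folder(root, source, decade, content_type):
    """Create (if needed) and return a folder such as root/mens_health/1950s/advertisements."""
    folder = Path(root) / source / f"{decade}s" / content_type
    folder.mkdir(parents=True, exist_ok=True)
    return folder


# Hypothetical project root; in practice this would be your research folder.
root = Path(tempfile.mkdtemp())
folder = storage_folder(root, "mens_health", 1950, "advertisements")

# Hypothetical file name that encodes the publication date and an item number.
note = folder / "1953-04_ad_001.txt"
note.write_text("Field notes on the advertisement go here.", encoding="utf-8")
print(note.relative_to(root))
```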

In some cases, your content will be in physical form but not amenable to photographing, as in the case of films or unwieldy physical artifacts you find in the archives (e.g., undigitized meeting minutes or scrapbooks). In this case, you need to create some kind of detailed log (fieldnotes even) of the content that you can reference. In the case of films, this might mean watching the film and writing down details for key scenes that become your data. [2] For scrapbooks, it might mean taking notes on what you are seeing, quoting key passages, describing colors or presentation style. As you might imagine, this can take a lot of time. Be sure you budget this time into your research plan.

Researcher Note

A note on data scraping: Data scraping, sometimes known as screen scraping or frame grabbing, is a way of extracting data generated by another program, as when a scraping tool grabs information from a website. This may help you collect data that is on the internet, but you need to be ethical in how you employ the scraper. A student once helped me scrape thousands of stories from the Time magazine archives at once (although it took several hours for the scraping process to complete). These stories were freely available, so the scraping process simply sped up the laborious process of copying each article of interest and saving it to my research folder. Scraping tools can sometimes be used to circumvent paywalls. Be careful here!
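One small, concrete precaution is to check a site's robots.txt file before scraping it. The sketch below uses Python's standard `urllib.robotparser` on an invented robots.txt (the rules, the `research-bot` name, and the example.org URLs are all hypothetical). Passing this check does not settle the ethical question on its own; a site's terms of use and your institution's guidance still apply.

```python
from urllib.robotparser import RobotFileParser


def allowed_to_fetch(robots_txt, url, user_agent="research-bot"):
    """Return True if the given robots.txt rules permit fetching the URL."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser.can_fetch(user_agent, url)


# Invented robots.txt content for illustration only.
EXAMPLE_ROBOTS = """\
User-agent: *
Disallow: /subscribers/
"""

open_page = allowed_to_fetch(EXAMPLE_ROBOTS, "https://example.org/archive/1955/story.html")
paywalled = allowed_to_fetch(EXAMPLE_ROBOTS, "https://example.org/subscribers/story.html")
print(open_page, paywalled)  # True False
```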

The Third Step: Analysis

There is often an assumption among novice researchers that once you have collected your data, you are ready to write about what you have found. Actually, you haven’t yet found anything, and if you try to write up your results, you will probably be staring sadly at a blank page. Between the collection and the writing comes the difficult task of systematically and repeatedly reviewing the data in search of patterns and themes that will help you interpret the data, particularly its communicative aspect (e.g., What is it that is being communicated here, with these “house signs” or in the pages of Men’s Health?).

The first time you go through the data, keep an open mind on what you are seeing (or hearing), and take notes about your observations that link up to your research question. In the beginning, it can be difficult to know what is relevant and what is extraneous. Sometimes, your research question changes based on what emerges from the data. Use the first round of review to consider this possibility, but then commit yourself to following a particular focus or path. If you are looking at how gender gets made or re-created, don’t follow the white rabbit down a hole about environmental injustice unless you decide that this really should be the focus of your study or that issues of environmental injustice are linked to gender presentation. In the second round of review, be very clear about emerging themes and patterns. Create codes (more on these in chapters 18 and 19) that will help you simplify what you are noticing. For example, “men as outdoorsy” might be a common trope you see in advertisements. Whenever you see this, mark the passage or picture. In your third (or fourth or fifth) round of review, begin to link up the tropes you’ve identified, looking for particular patterns and assumptions. You’ve drilled down to the details, and now you are building back up to figure out what they all mean. Start thinking about theory: either theories you have read about and are using as a frame of your study (e.g., gender as performance theory) or theories you are building yourself, as in the Grounded Theory tradition. Once you have a good idea of what is being communicated and how, go back to the data at least one more time to look for disconfirming evidence. Maybe you thought “men as outdoorsy” was of importance, but when you look hard, you note that women are presented as outdoorsy just as often. You just hadn’t paid attention. It is very important, as any kind of researcher but particularly as a qualitative researcher, to test yourself and your emerging interpretations in this way.

The Fourth and Final Step: The Write-Up

Only after you have fully completed analysis, with its many rounds of review and analysis, will you be able to write about what you found. The interpretation exists not in the data but in your analysis of the data. Before writing your results, you will want to very clearly describe how you chose the data here and all the possible limitations of this data (e.g., historical-trace problem or power problem; see chapter 16). Acknowledge any limitations of your sample. Describe the audience for the content, and discuss the implications of this. Once you have done all of this, you can put forth your interpretation of the communication of the content, linking to theory where doing so would help your readers understand your findings and what they mean more generally for our understanding of how the social world works. [3]

Analyzing Content: Helpful Hints and Pointers

Although every data set is unique and each researcher will have a different and unique research question to address with that data set, there are some common practices and conventions. When reviewing your data, what do you look at exactly? How will you know if you have seen a pattern? How do you note or mark your data?

Let’s start with the last question first. If your data is stored digitally, there are various ways you can highlight or mark up passages. You can, of course, do this with literal highlighters, pens, and pencils if you have print copies. But there are also qualitative software programs to help you store the data, retrieve the data, and mark the data. This can simplify the process, although it cannot do the work of analysis for you.

Qualitative software can be very expensive, so the first thing to do is to find out if your institution (or program) has a universal license its students can use. If they do not, most programs have special student licenses that are less expensive. The two most used programs at this moment are probably ATLAS.ti and NVivo. Both can cost more than $500 [4] but provide everything you could possibly need for storing data, content analysis, and coding. They also have a lot of customer support, and you can find many official and unofficial tutorials on how to use the programs’ features on the web. Dedoose, created by academic researchers at UCLA, is a decent program that lacks many of the bells and whistles of the two big programs. Instead of paying all at once, you pay monthly, as you use the program. The monthly fee is relatively affordable (less than $15), so this might be a good option for a small project. HyperRESEARCH is another basic program created by academic researchers, and it is free for small projects (those that have limited cases and material to import). You can pay a monthly fee if your project expands past the free limits. I have personally used all four of these programs, and they each have their pluses and minuses.

Regardless of which program you choose, you should know that none of them will actually do the hard work of analysis for you. They are incredibly useful for helping you store and organize your data, and they provide abundant tools for marking, comparing, and coding your data so you can make sense of it. But making sense of it will always be your job alone.

So let’s say you have some software, and you have uploaded all of your content into the program: video clips, photographs, transcripts of news stories, articles from magazines, even digital copies of college scrapbooks. Now what do you do? What are you looking for? How do you see a pattern? The answers to these questions will depend partially on the particular research question you have, or at least the motivation behind your research. Let’s go back to the idea of looking at gender presentations in magazines from the 1950s to the 1970s. Here are some things you can look at and code in the content: (1) actions and behaviors, (2) events or conditions, (3) activities, (4) strategies and tactics, (5) states or general conditions, (6) meanings or symbols, (7) relationships/interactions, (8) consequences, and (9) settings. Table 17.1 lists these with examples from our gender presentation study.

Table 17.1. Examples of What to Note During Content Analysis

One thing to note about the examples in table 17.1: sometimes we note (mark, record, code) a single example, while other times, as in “settings,” we are recording a recurrent pattern. To help you spot patterns, it is useful to mark every setting, including a notation on gender. Using software can help you do this efficiently. You can then call up “setting by gender” and note this emerging pattern. There’s an element of counting here, which we normally think of as quantitative data analysis, but we are using the count to identify a pattern that will be used to help us interpret the communication. Content analyses often include counting as part of the interpretive (qualitative) process.
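If you are not using dedicated software, the same “setting by gender” tally can be produced with a few lines of Python. The records below are invented for illustration; in a real project each one would correspond to an advertisement or image you have marked.

```python
from collections import Counter

# Hypothetical coded records: one per advertisement reviewed.
coded_ads = [
    {"setting": "kitchen", "gender": "woman"},
    {"setting": "kitchen", "gender": "woman"},
    {"setting": "outdoors", "gender": "man"},
    {"setting": "office", "gender": "man"},
    {"setting": "outdoors", "gender": "woman"},
    {"setting": "outdoors", "gender": "man"},
]

# Count every (setting, gender) combination that was marked.
setting_by_gender = Counter((ad["setting"], ad["gender"]) for ad in coded_ads)

for (setting, gender), n in sorted(setting_by_gender.items()):
    print(f"{setting:<10}{gender:<8}{n}")
```

The counts do not interpret themselves. They point you to the combinations worth returning to in the content.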

In your own study, you may not need or want to look at all of the elements listed in table 17.1. Even in our imagined example, some are more useful than others. For example, “strategies and tactics” is a bit of a stretch here. In studies that are looking specifically at, say, policy implementation or social movements, this category will prove much more salient.

Another way to think about “what to look at” is to consider aspects of your content in terms of units of analysis. You can drill down to the specific words used (e.g., the adjectives commonly used to describe “men” and “women” in your magazine sample) or move up to the more abstract level of concepts used (e.g., the idea that men are more rational than women). Counting for the purpose of identifying patterns is particularly useful here. How many times is that idea of women’s irrationality communicated? How is it communicated (in comic strips, fictional stories, editorials, etc.)? Does the incidence of the concept change over time? Perhaps the “irrational woman” was everywhere in the 1950s, but by the 1970s, it is no longer showing up in stories and comics. By tracing its usage and prevalence over time, you might come up with a theory or story about gender presentation during the period. Table 17.2 provides more examples of using different units of analysis for this work along with suggestions for effective use.
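Tracing a concept over time comes down to comparing, period by period, how many items carry the code against how many items you reviewed. Here is a minimal Python sketch of that calculation; the years and the present/absent codes are made up to mirror the “irrational woman” example and are not findings.

```python
from collections import defaultdict

# Hypothetical coded items: (publication year, was the concept coded as present?)
coded_items = [
    (1952, True), (1955, True), (1958, False),
    (1963, True), (1967, False), (1968, False),
    (1974, False), (1977, False),
]


def share_by_decade(items):
    """Return the share of items per decade in which the concept appears."""
    totals = defaultdict(int)
    hits = defaultdict(int)
    for year, present in items:
        decade = (year // 10) * 10
        totals[decade] += 1
        hits[decade] += int(present)
    return {decade: hits[decade] / totals[decade] for decade in sorted(totals)}


shares = share_by_decade(coded_items)
for decade, share in shares.items():
    print(f"{decade}s: {share:.0%}")
```

Using shares rather than raw counts matters when you reviewed more items in some decades than in others.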

Table 17.2. Examples of Unit of Analysis in Content Analysis

Every qualitative content analysis is unique in its particular focus and particular data used, so there is no single correct way to approach analysis. You should have a better idea, however, of what kinds of things to look for and what to look at. The next two chapters will take you further into the coding process, the primary analytical tool for qualitative research in general.

Further Readings

Cidell, Julie. 2010. “Content Clouds as Exploratory Qualitative Data Analysis.” Area 42(4):514–523. A demonstration of using visual “content clouds” as a form of exploratory qualitative data analysis using transcripts of public meetings and content of newspaper articles.

Hsieh, Hsiu-Fang, and Sarah E. Shannon. 2005. “Three Approaches to Qualitative Content Analysis.” Qualitative Health Research 15(9):1277–1288. Distinguishes three distinct approaches to QCA: conventional, directed, and summative. Uses hypothetical examples from end-of-life care research.

Jackson, Romeo, Alex C. Lange, and Antonio Duran. 2021. “A Whitened Rainbow: The In/Visibility of Race and Racism in LGBTQ Higher Education Scholarship.” Journal Committed to Social Change on Race and Ethnicity (JCSCORE) 7(2):174–206.* Using a “critical summative content analysis” approach, examines research published on LGBTQ people between 2009 and 2019.

Krippendorff, Klaus. 2018. Content Analysis: An Introduction to Its Methodology. 4th ed. Thousand Oaks, CA: SAGE. A very comprehensive textbook on both quantitative and qualitative forms of content analysis.

Mayring, Philipp. 2022. Qualitative Content Analysis: A Step-by-Step Guide. Thousand Oaks, CA: SAGE. Formulates an eight-step approach to QCA.

Messinger, Adam M. 2012. “Teaching Content Analysis through ‘Harry Potter.’” Teaching Sociology 40(4):360–367. This is a fun example of a relatively brief foray into content analysis using the music found in Harry Potter films.

Neuendorf, Kimberly A. 2002. The Content Analysis Guidebook. Thousand Oaks, CA: SAGE. Although a helpful guide to content analysis in general, be warned that this textbook definitely favors quantitative over qualitative approaches to content analysis.

Schreier, Margrit. 2012. Qualitative Content Analysis in Practice. Thousand Oaks, CA: SAGE. Arguably the most accessible guidebook for QCA, written by a professor based in Germany.

Weber, Matthew A., Shannon Caplan, Paul Ringold, and Karen Blocksom. 2017. “Rivers and Streams in the Media: A Content Analysis of Ecosystem Services.” Ecology and Society 22(3).* Examines the content of a blog hosted by National Geographic and articles published in The New York Times and the Wall Street Journal for stories on rivers and streams (e.g., water-quality flooding).

[1] There are ways of handling content analysis quantitatively, however. Some practitioners therefore specify qualitative content analysis (QCA). In this chapter, all content analysis is QCA unless otherwise noted.

[2] Note that some qualitative software allows you to upload whole films or film clips for coding. You will still have to get access to the film, of course.

[3] See chapter 20 for more on the final presentation of research.

[4] Actually, ATLAS.ti is an annual license, while NVivo is a perpetual license, but both are going to cost you at least $500 to use. Student rates may be lower. And don’t forget to ask your institution or program if they already have a software license you can use.

A method of both data collection and data analysis in which a given content (textual, visual, graphic) is examined systematically and rigorously to identify meanings, themes, patterns and assumptions. Qualitative content analysis (QCA) is concerned with gathering and interpreting an existing body of material.

Introduction to Qualitative Research Methods Copyright © 2023 by Allison Hurst is licensed under a Creative Commons Attribution-ShareAlike 4.0 International License , except where otherwise noted.

Using Content Analysis

This guide provides an introduction to content analysis, a research methodology that examines words or phrases within a wide range of texts.

- Introduction to Content Analysis: Read about the history and uses of content analysis.

- Conceptual Analysis: Read an overview of conceptual analysis and its associated methodology.

- Relational Analysis: Read an overview of relational analysis and its associated methodology.

- Commentary: Read about issues of reliability and validity with regard to content analysis as well as the advantages and disadvantages of using content analysis as a research methodology.

- Examples: View examples of real and hypothetical studies that use content analysis.

- Annotated Bibliography: Complete list of resources used in this guide and beyond.

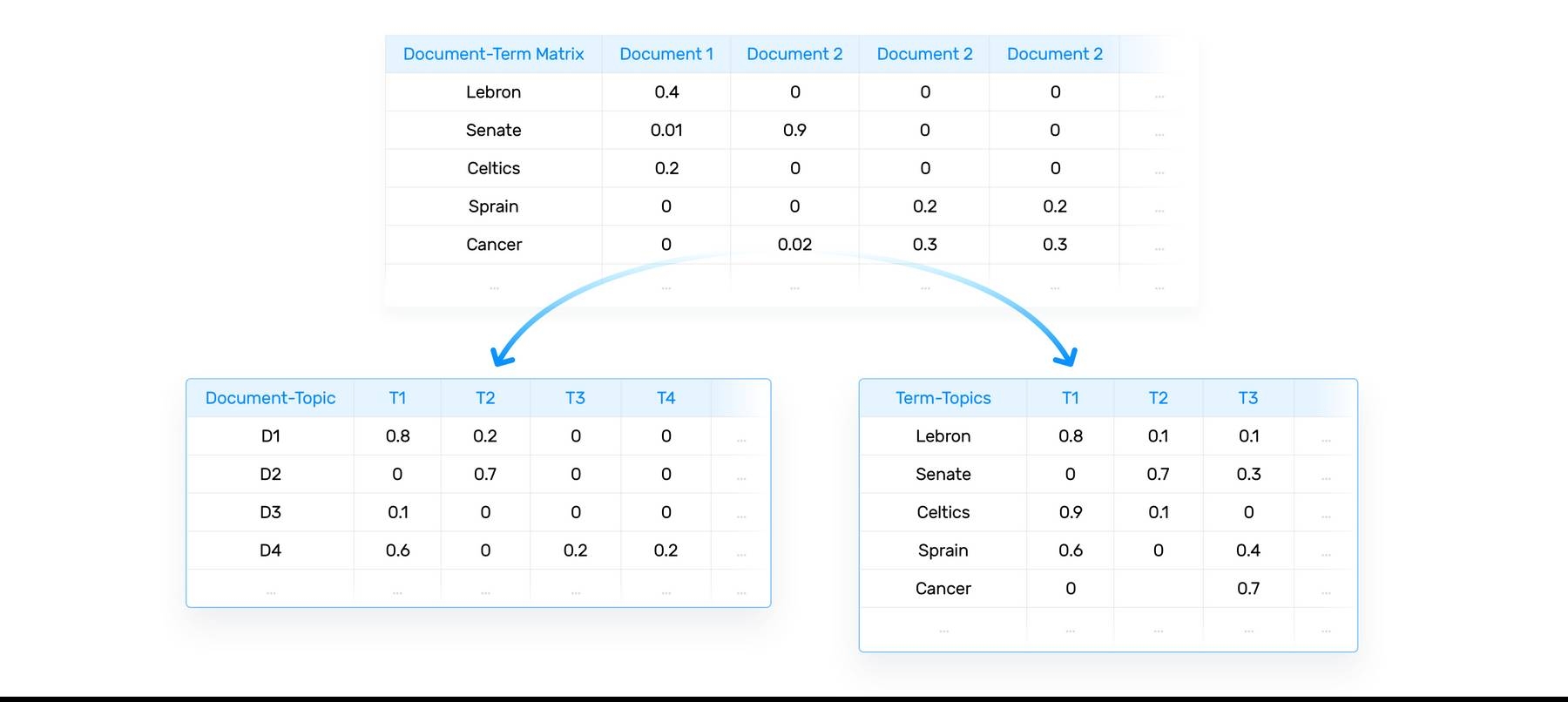

An Introduction to Content Analysis

Content analysis is a research tool used to determine the presence of certain words or concepts within texts or sets of texts. Researchers quantify and analyze the presence, meanings and relationships of such words and concepts, then make inferences about the messages within the texts, the writer(s), the audience, and even the culture and time of which these are a part. Texts can be defined broadly as books, book chapters, essays, interviews, discussions, newspaper headlines and articles, historical documents, speeches, conversations, advertising, theater, informal conversation, or really any occurrence of communicative language. Texts in a single study may also represent a variety of different types of occurrences, such as Palmquist's 1990 study of two composition classes, in which he analyzed student and teacher interviews, writing journals, classroom discussions and lectures, and out-of-class interaction sheets. To conduct a content analysis on any such text, the text is coded, or broken down, into manageable categories on a variety of levels--word, word sense, phrase, sentence, or theme--and then examined using one of content analysis' basic methods: conceptual analysis or relational analysis.

A Brief History of Content Analysis

Historically, content analysis was a time-consuming process. Analysis was done manually, or slow mainframe computers were used to analyze punch cards containing data punched in by human coders. Single studies could employ thousands of these cards. Human error and time constraints made this method impractical for large texts. However, despite its impracticality, content analysis was already an often-utilized research method by the 1940s. Although initially limited to studies that examined texts for the frequency of the occurrence of identified terms (word counts), by the mid-1950s researchers were already starting to consider the need for more sophisticated methods of analysis, focusing on concepts rather than simply words, and on semantic relationships rather than just presence (de Sola Pool 1959). While both traditions still continue today, content analysis now is also utilized to explore mental models, and their linguistic, affective, cognitive, social, cultural and historical significance.

Uses of Content Analysis

Perhaps because it can be applied to examine any piece of writing or occurrence of recorded communication, content analysis is currently used in a dizzying array of fields, ranging from marketing and media studies, to literature and rhetoric, ethnography and cultural studies, gender and age issues, sociology and political science, psychology and cognitive science, and many other fields of inquiry. Additionally, content analysis reflects a close relationship with socio- and psycholinguistics, and is playing an integral role in the development of artificial intelligence. The following list (adapted from Berelson, 1952) offers more possibilities for the uses of content analysis:

- Reveal international differences in communication content

- Detect the existence of propaganda

- Identify the intentions, focus or communication trends of an individual, group or institution

- Describe attitudinal and behavioral responses to communications

- Determine psychological or emotional state of persons or groups

Types of Content Analysis

In this guide, we discuss two general categories of content analysis: conceptual analysis and relational analysis. Conceptual analysis can be thought of as establishing the existence and frequency of concepts most often represented by words or phrases in a text. For instance, say you have a hunch that your favorite poet often writes about hunger. With conceptual analysis you can determine how many times words such as hunger, hungry, famished, or starving appear in a volume of poems. In contrast, relational analysis goes one step further by examining the relationships among concepts in a text. Returning to the hunger example, with relational analysis, you could identify what other words or phrases hunger or famished appear next to and then determine what different meanings emerge as a result of these groupings.
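The difference between the two approaches can be seen in a short Python sketch of the hunger example. The line of verse is invented, "appearing next to" is approximated here by a window of two words on either side (an assumption, not a rule from this guide), and common words such as "the" are deliberately left in to keep the example small.

```python
import re
from collections import Counter

HUNGER_WORDS = {"hunger", "hungry", "famished", "starving"}


def tokenize(text):
    """Lowercase the text and split it into words."""
    return re.findall(r"[a-z']+", text.lower())


def concept_frequency(tokens, concept_words=HUNGER_WORDS):
    """Conceptual analysis: how often do the concept words occur?"""
    return sum(token in concept_words for token in tokens)


def neighbors(tokens, concept_words=HUNGER_WORDS, window=2):
    """Relational analysis (simplified): which words occur near the concept words?"""
    counts = Counter()
    for i, token in enumerate(tokens):
        if token in concept_words:
            start, stop = max(0, i - window), min(len(tokens), i + window + 1)
            nearby = tokens[start:i] + tokens[i + 1:stop]
            counts.update(t for t in nearby if t not in concept_words)
    return counts


# Invented line of verse, used only to exercise the functions.
poem = ("Hunger walks the winter road; the hungry child waits, "
        "starving for bread, famished for song.")
tokens = tokenize(poem)
frequency = concept_frequency(tokens)
nearby_words = neighbors(tokens)
print(frequency)                    # 4
print(nearby_words.most_common(3))
```

The frequency answers the conceptual question (does the poet write about hunger, and how often?), while the neighboring words are raw material for interpreting what hunger is associated with.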

Conceptual Analysis

Traditionally, content analysis has most often been thought of in terms of conceptual analysis. In conceptual analysis, a concept is chosen for examination, and the analysis involves quantifying and tallying its presence. Also known as thematic analysis [although this term is somewhat problematic, given its varied definitions in current literature--see Palmquist, Carley, & Dale (1997) vis-a-vis Smith (1992)], the focus here is on looking at the occurrence of selected terms within a text or texts, although the terms may be implicit as well as explicit. While explicit terms obviously are easy to identify, coding for implicit terms and deciding their level of implication is complicated by the need to base judgments on a somewhat subjective system. To attempt to limit the subjectivity, then (as well as to limit problems of reliability and validity), coding such implicit terms usually involves the use of either a specialized dictionary or contextual translation rules. And sometimes, both tools are used--a trend reflected in recent versions of the Harvard and Lasswell dictionaries.

Methods of Conceptual Analysis

Conceptual analysis begins with identifying research questions and choosing a sample or samples. Once chosen, the text must be coded into manageable content categories. The process of coding is basically one of selective reduction. By reducing the text to categories consisting of a word, set of words or phrases, the researcher can focus on, and code for, specific words or patterns that are indicative of the research question.

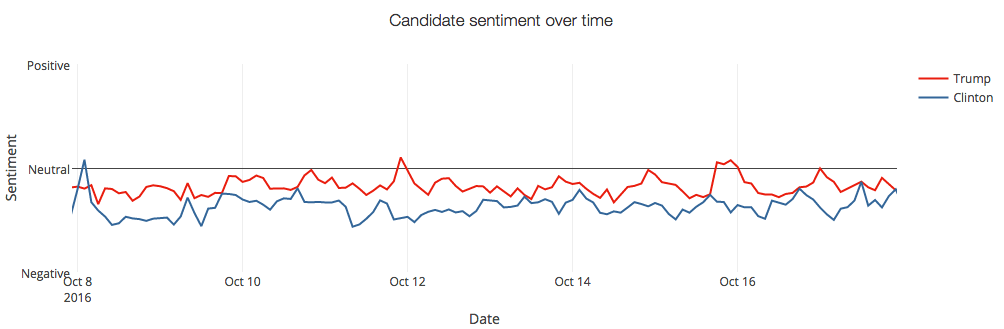

An example of a conceptual analysis would be to examine several Clinton speeches on health care, made during the 1992 presidential campaign, and code them for the existence of certain words. In looking at these speeches, the research question might involve examining the number of positive words used to describe Clinton's proposed plan, and the number of negative words used to describe the current status of health care in America. The researcher would be interested only in quantifying these words, not in examining how they are related, which is a function of relational analysis. In conceptual analysis, the researcher simply wants to examine presence with respect to his/her research question, i.e. is there a stronger presence of positive or negative words used with respect to proposed or current health care plans, respectively.

Once the research question has been established, the researcher must make his/her coding choices with respect to the eight category coding steps indicated by Carley (1992).

Steps for Conducting Conceptual Analysis

The following discussion of steps that can be followed to code a text or set of texts during conceptual analysis uses campaign speeches made by Bill Clinton during the 1992 presidential campaign as an example. Each step in the list below is followed by an explanation:

- Decide the level of analysis.

First, the researcher must decide upon the level of analysis. With the health care speeches, to continue the example, the researcher must decide whether to code for a single word, such as "inexpensive," or for sets of words or phrases, such as "coverage for everyone."

- Decide how many concepts to code for.

The researcher must now decide how many different concepts to code for. This involves developing a pre-defined or interactive set of concepts and categories. The researcher must decide whether or not to code for every single positive or negative word that appears, or only certain ones that the researcher determines are most relevant to health care. Then, with this pre-defined number set, the researcher has to determine how much flexibility he/she allows him/herself when coding. The question of whether the researcher codes only from this pre-defined set, or allows him/herself to add relevant categories not included in the set as he/she finds them in the text, must be answered. Determining a certain number and set of concepts allows a researcher to examine a text for very specific things, keeping him/her on task. But introducing a level of coding flexibility allows new, important material to be incorporated into the coding process that could have significant bearings on one's results.

- Decide whether to code for existence or frequency of a concept.

After a certain number and set of concepts are chosen for coding, the researcher must answer a key question: is he/she going to code for existence or frequency? This is important, because it changes the coding process. When coding for existence, "inexpensive" would only be counted once, no matter how many times it appeared. This would be a very basic coding process and would give the researcher a very limited perspective of the text. However, the number of times "inexpensive" appears in a text might be more indicative of importance. Knowing that "inexpensive" appeared 50 times, for example, compared to 15 appearances of "coverage for everyone," might lead a researcher to interpret that Clinton is trying to sell his health care plan based more on economic benefits, not comprehensive coverage. Knowing that "inexpensive" appeared, but not that it appeared 50 times, would not allow the researcher to make this interpretation, regardless of whether it is valid or not.
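The difference between the two coding modes can be sketched in a few lines of Python. The `code_text` helper and the sample sentence are hypothetical (the sentence is not a quotation from any speech), and the simple substring match is an assumption made for brevity.

```python
def code_text(text, concepts, mode="frequency"):
    """Code `text` for each concept, by existence (0 or 1) or by frequency."""
    lowered = text.lower()
    coded = {}
    for concept in concepts:
        # Simple substring match; a real coding scheme would need stricter rules.
        n = lowered.count(concept.lower())
        coded[concept] = n if mode == "frequency" else int(n > 0)
    return coded

# Invented sentence, not a real quotation from any speech.
speech = ("Our plan is inexpensive. Inexpensive care and coverage for everyone: "
          "that is the promise of an inexpensive plan.")
concepts = ["inexpensive", "coverage for everyone"]

existence = code_text(speech, concepts, mode="existence")
frequency = code_text(speech, concepts, mode="frequency")
print(existence)
print(frequency)
```

Under existence coding both concepts look identical, while frequency coding shows that one is mentioned more often than the other, which is the distinction the paragraph above relies on.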

- Decide on how you will distinguish among concepts.

The researcher must next decide on the level of generalization, i.e. whether concepts are to be coded exactly as they appear, or if they can be recorded as the same even when they appear in different forms. For example, "expensive" might also appear as "expensiveness." The researcher needs to determine if the two words mean radically different things to him/her, or if they are similar enough that they can be coded as being the same thing, i.e. "expensive words." In line with this is the need to determine the level of implication one is going to allow. This entails more than subtle differences in tense or spelling, as with "expensive" and "expensiveness." Determining the level of implication would allow the researcher to code not only for the word "expensive," but also for words that imply "expensive." This could perhaps include technical words, jargon, or political euphemism, such as "economically challenging," that the researcher decides does not merit a separate category, but is better represented under the category "expensive," due to its implicit meaning of "expensive."

- Develop rules for coding your texts.

After taking the generalization of concepts into consideration, a researcher will want to create translation rules that will allow him/her to streamline and organize the coding process so that he/she is coding for exactly what he/she wants to code for. Developing a set of rules helps the researcher ensure that he/she is coding things consistently throughout the text, in the same way every time. If a researcher coded "economically challenging" as a separate category from "expensive" in one paragraph, then coded it under the umbrella of "expensive" when it occurred in the next paragraph, his/her data would be invalid. The interpretations drawn from that data will subsequently be invalid as well. Translation rules protect against this and give the coding process a crucial level of consistency and coherence.
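One way to picture translation rules is as a lookup table from surface forms to categories, applied identically to every passage. The sketch below is only an illustration: the rule table, the `apply_rules` function, and the sample sentence are assumptions, not part of any published coding dictionary.

```python
import re
from collections import Counter

# Hypothetical translation rules: each surface form maps to exactly one category.
TRANSLATION_RULES = {
    "expensive": "expensive",
    "expensiveness": "expensive",             # generalization: different form, same concept
    "economically challenging": "expensive",  # implication: euphemism coded as "expensive"
    "inexpensive": "inexpensive",
}

def apply_rules(text, rules):
    """Tally categories by matching each surface form as a whole word or phrase."""
    lowered = text.lower()
    tally = Counter()
    for form, category in rules.items():
        # Word boundaries keep "expensive" from matching inside "inexpensive".
        pattern = r"\b" + re.escape(form) + r"\b"
        tally[category] += len(re.findall(pattern, lowered))
    return tally

# Invented sample sentence.
sample = ("The current system is expensive. Its expensiveness is economically "
          "challenging for families, so we need an inexpensive plan.")
tally = apply_rules(sample, TRANSLATION_RULES)
print(tally)
```

Because the same table is used for every paragraph, "economically challenging" can never be coded one way in one place and another way elsewhere, which is the consistency the rules are meant to guarantee.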

- Decide what to do with "irrelevant" information.

The next choice a researcher must make involves irrelevant information. The researcher must decide whether irrelevant information should be ignored (as Weber, 1990, suggests), or used to reexamine and/or alter the coding scheme. In the case of this example, words like "and" and "the," as they appear by themselves, would be ignored. They add nothing to the quantification of words like "inexpensive" and "expensive" and can be disregarded without impacting the outcome of the coding.

- Code the texts.

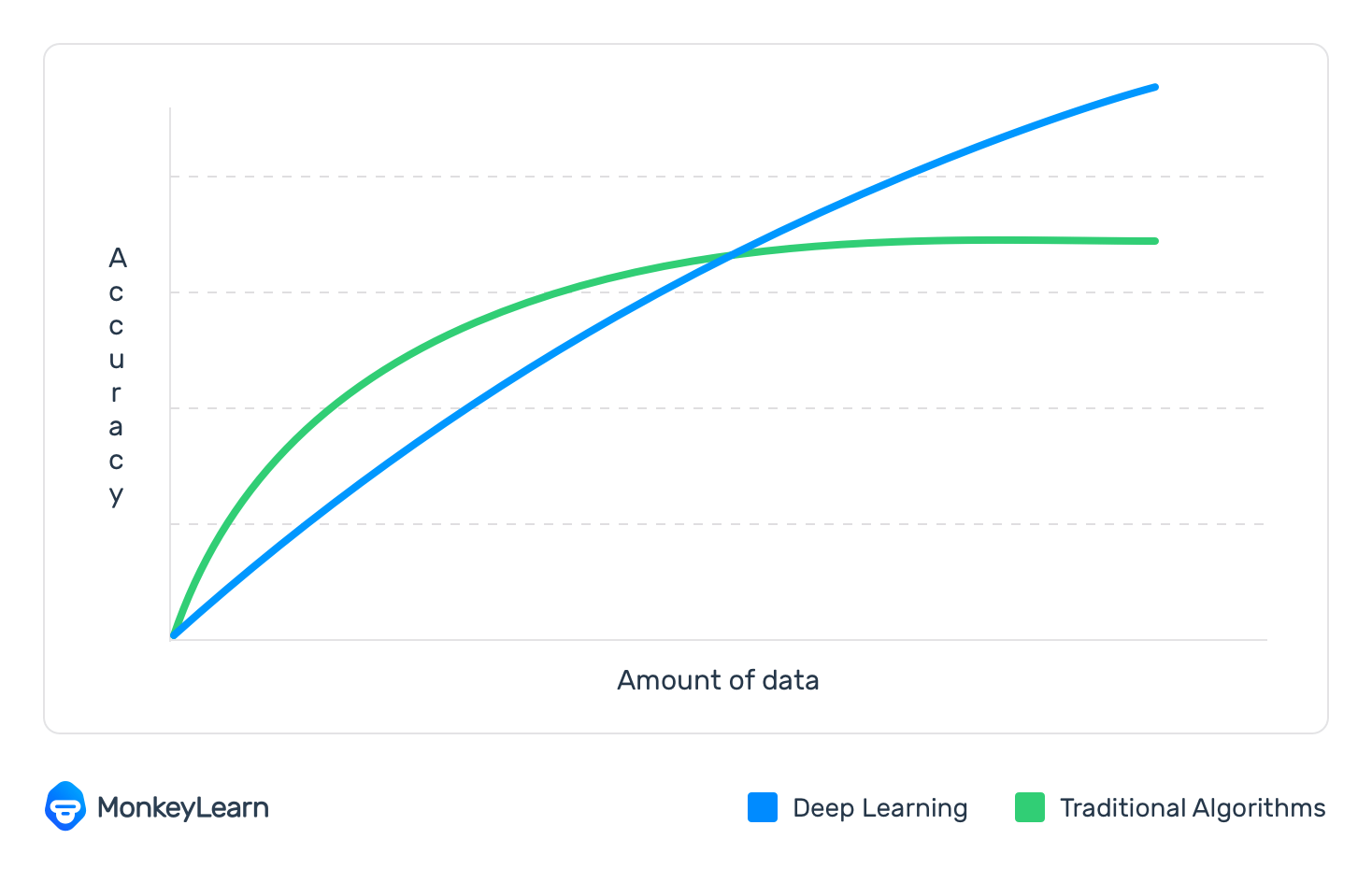

Once these choices about irrelevant information are made, the next step is to code the text. This is done either by hand, i.e. reading through the text and manually writing down concept occurrences, or through the use of various computer programs. Coding with a computer is one of contemporary conceptual analysis' greatest assets. By inputting one's categories, content analysis programs can easily automate the coding process and examine huge amounts of data, and a wider range of texts, quickly and efficiently. But automation is very dependent on the researcher's preparation and category construction. When coding is done manually, a researcher can recognize errors far more easily. A computer is only a tool and can only code based on the information it is given. This problem is most apparent when coding for implicit information, where category preparation is essential for accurate coding.

- Analyze your results.

Once the coding is done, the researcher examines the data and attempts to draw whatever conclusions and generalizations are possible. Of course, before these can be drawn, the researcher must decide what to do with the information in the text that is not coded. One's options include either deleting or skipping over unwanted material, or viewing all information as relevant and important and using it to reexamine, reassess and perhaps even alter one's coding scheme. Furthermore, given that the conceptual analyst is dealing only with quantitative data, the levels of interpretation and generalizability are very limited. The researcher can only extrapolate as far as the data will allow. But it is possible to see trends, for example, that are indicative of much larger ideas. Using the example from step three, if the concept "inexpensive" appears 50 times, compared to 15 appearances of "coverage for everyone," then the researcher can pretty safely extrapolate that there does appear to be a greater emphasis on the economics of the health care plan, as opposed to its universal coverage for all Americans. It must be kept in mind that conceptual analysis, while extremely useful and effective for providing this type of information when done right, is limited by its focus and the quantitative nature of its examination. To more fully explore the relationships that exist between these concepts, one must turn to relational analysis.

Relational Analysis

Relational analysis, like conceptual analysis, begins with the act of identifying concepts present in a given text or set of texts. However, relational analysis seeks to go beyond presence by exploring the relationships between the concepts identified. Relational analysis has also been termed semantic analysis (Palmquist, Carley, & Dale, 1997). In other words, the focus of relational analysis is to look for semantic, or meaningful, relationships. Individual concepts, in and of themselves, are viewed as having no inherent meaning. Rather, meaning is a product of the relationships among concepts in a text. Carley (1992) asserts that concepts are "ideational kernels;" these kernels can be thought of as symbols which acquire meaning through their connections to other symbols.

Theoretical Influences on Relational Analysis

The kind of analysis that researchers employ will vary significantly according to their theoretical approach. Key theoretical approaches that inform content analysis include linguistics and cognitive science.

Linguistic approaches to content analysis focus analysis of texts on the level of a linguistic unit, typically single clause units. One example of this type of research is Gottschalk (1975), who developed an automated procedure which analyzes each clause in a text and assigns it a numerical score based on several emotional/psychological scales. Another technique is to code a text grammatically into clauses and parts of speech to establish a matrix representation (Carley, 1990).

Approaches that derive from cognitive science include the creation of decision maps and mental models. Decision maps attempt to represent the relationship(s) between ideas, beliefs, attitudes, and information available to an author when making a decision within a text. These relationships can be represented as logical, inferential, causal, sequential, and mathematical relationships. Typically, two of these links are compared in a single study, and are analyzed as networks. For example, Heise (1987) used logical and sequential links to examine symbolic interaction. This methodology is thought of as a more generalized cognitive mapping technique, rather than the more specific mental models approach.

Mental models are groups or networks of interrelated concepts that are thought to reflect conscious or subconscious perceptions of reality. According to cognitive scientists, internal mental structures are created as people draw inferences and gather information about the world. Mental models are a more specific approach to mapping because, beyond extraction and comparison, they can be numerically and graphically analyzed. Such models rely heavily on the use of computers to help analyze and construct mapping representations. Typically, studies based on this approach follow five general steps:

- Identifying concepts

- Defining relationship types

- Coding the text on the basis of the first two steps

- Coding the statements

- Graphically displaying and numerically analyzing the resulting maps

To create the model, a researcher converts a text into a map of concepts and relations; the map is then analyzed on the level of concepts and statements, where a statement consists of two concepts and their relationship. Carley (1990) asserts that this makes possible the comparison of a wide variety of maps, representing multiple sources, implicit and explicit information, as well as socially shared cognitions.
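To make the idea of a statement concrete, the sketch below represents a map as a set of (concept, relationship, concept) triples and compares two maps. The `Statement` type, the `compare_maps` helper, and the example statements are hypothetical; they are not drawn from Carley's software or data.

```python
from typing import NamedTuple

class Statement(NamedTuple):
    """Two concepts and the relationship between them."""
    source: str
    relation: str
    target: str

def concepts_in(concept_map):
    """Return every concept that appears in any statement of the map."""
    return {c for s in concept_map for c in (s.source, s.target)}

def compare_maps(map_a, map_b):
    """Return the concepts and the statements that two maps have in common."""
    shared_concepts = concepts_in(map_a) & concepts_in(map_b)
    shared_statements = map_a & map_b
    return shared_concepts, shared_statements

# Invented maps for two hypothetical texts.
map_a = {
    Statement("scientists", "do", "research"),
    Statement("research", "leads to", "discoveries"),
}
map_b = {
    Statement("research", "leads to", "discoveries"),
    Statement("scientists", "write", "papers"),
}

shared_concepts, shared_statements = compare_maps(map_a, map_b)
print(sorted(shared_concepts))
print(shared_statements)
```

Comparing at both levels matters because two texts can share most of their concepts while connecting them in different ways.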

Relational Analysis: Overview of Methods

As with other sorts of inquiry, initial choices with regard to what is being studied and/or coded for often determine the possibilities of that particular study. For relational analysis, it is important to first decide which concept type(s) will be explored in the analysis. Studies have been conducted with as few as one and as many as 500 concept categories. Obviously, too many categories may obscure your results and too few can lead to unreliable and potentially invalid conclusions. Therefore, it is important to allow the context and necessities of your research to guide your coding procedures.

The steps to relational analysis that we consider in this guide suggest some of the possible avenues available to a researcher doing content analysis. We provide an example to make the process easier to grasp. However, the choices made within the context of the example are only a few of many possibilities. The diversity of techniques available suggests that there is quite a bit of enthusiasm for this mode of research. Once a procedure is rigorously tested, it can be applied and compared across populations over time. The process of relational analysis has achieved a high degree of computer automation but still is, like most forms of research, time-consuming. Perhaps the strongest claim that can be made is that it maintains a high degree of statistical rigor without losing the richness of detail apparent in even more qualitative methods.

Three Subcategories of Relational Analysis

Affect extraction: This approach provides an emotional evaluation of concepts explicit in a text. It is problematic because emotion may vary across time and populations. Nevertheless, when extended it can be a potent means of exploring the emotional/psychological state of the speaker and/or writer. Gottschalk (1995) provides an example of this type of analysis. By assigning concepts identified a numeric value on corresponding emotional/psychological scales that can then be statistically examined, Gottschalk claims that the emotional/psychological state of the speaker or writer can be ascertained via their verbal behavior.

Proximity analysis: This approach, on the other hand, is concerned with the co-occurrence of explicit concepts in the text. In this procedure, the text is defined as a string of words. A given length of words, called a window, is determined. The window is then scanned across a text to check for the co-occurrence of concepts. The result is the creation of a concept-by-concept matrix. In other words, a matrix, or a group of interrelated, co-occurring concepts, might suggest a certain overall meaning. The technique is problematic because the window records only explicit concepts and treats meaning as proximal co-occurrence. Other techniques such as clustering, grouping, and scaling are also useful in proximity analysis.
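A minimal sketch of the window scan might look like the following. It assumes one particular counting convention (the number of window positions in which both concepts of a pair appear); the `cooccurrence` function, the window size, and the sample text are illustrative choices rather than a standard procedure.

```python
from collections import Counter
from itertools import combinations

def cooccurrence(words, concepts, window=4):
    """Count the window positions in which each pair of concepts appears together."""
    pairs = Counter()
    # Slide the window one word at a time across the text.
    for start in range(max(1, len(words) - window + 1)):
        present = sorted({w for w in words[start:start + window] if w in concepts})
        for a, b in combinations(present, 2):
            pairs[(a, b)] += 1
    return pairs

# Invented text, already split into words.
words = "hunger and cold walk with hunger and fear".split()
matrix = cooccurrence(words, {"hunger", "cold", "fear"}, window=4)
print(matrix)
```

The resulting counts can be read as the filled cells of a concept matrix: "hunger" co-occurs with both "cold" and "fear", while "cold" and "fear" never fall within the same window.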

Cognitive mapping: This approach is one that allows for further analysis of the results from the two previous approaches. It attempts to take the above processes one step further by representing these relationships visually for comparison. Whereas affective and proximal analysis function primarily within the preserved order of the text, cognitive mapping attempts to create a model of the overall meaning of the text. This can be represented as a graphic map that represents the relationships between concepts.

In this manner, cognitive mapping lends itself to the comparison of semantic connections across texts. This is known as map analysis which allows for comparisons to explore "how meanings and definitions shift across people and time" (Palmquist, Carley, & Dale, 1997). Maps can depict a variety of different mental models (such as that of the text, the writer/speaker, or the social group/period), according to the focus of the researcher. This variety is indicative of the theoretical assumptions that support mapping: mental models are representations of interrelated concepts that reflect conscious or subconscious perceptions of reality; language is the key to understanding these models; and these models can be represented as networks (Carley, 1990). Given these assumptions, it's not surprising to see how closely this technique reflects the cognitive concerns of socio-and psycholinguistics, and lends itself to the development of artificial intelligence models.

Steps for Conducting Relational Analysis

The following discussion presents the steps (or, perhaps more accurately, strategies) that can be followed to code a text or set of texts during relational analysis. These explanations are accompanied by examples of relational analysis possibilities for statements made by Bill Clinton during the 1998 hearings.

- Identify the Question.

The question is important because it indicates where you are headed and why. Without a focused question, the concept types and options open to interpretation are limitless and therefore the analysis difficult to complete. Possibilities for the Hairy Hearings of 1998 might be:

What did Bill Clinton say in the speech? OR What concrete information did he present to the public?

- Choose a sample or samples for analysis.

Once the question has been identified, the researcher must select sections of text/speech from the hearings in which Bill Clinton may have not told the entire truth or is obviously holding back information. For relational content analysis, the primary consideration is how much information to preserve for analysis. One must be careful not to limit the results by doing so, but the researcher must also take special care not to take on so much that the coding process becomes too heavy and extensive to supply worthwhile results.

- Determine the type of analysis.

Once the sample has been chosen for analysis, it is necessary to determine what type or types of relationships you would like to examine. There are different subcategories of relational analysis that can be used to examine the relationships in texts.

In this example, we will use proximity analysis because it is concerned with the co-occurrence of explicit concepts in the text. In this instance, we are not particularly interested in affect extraction because we are trying to get to the hard facts of what exactly was said rather than determining the emotional considerations of speaker and receivers surrounding the speech which may be unrecoverable.

Once the subcategory of analysis is chosen, the selected text must be reviewed to determine the level of analysis. The researcher must decide whether to code for a single word, such as "perhaps," or for sets of words or phrases like "I may have forgotten."

- Reduce the text to categories and code for words or patterns.

At the simplest level, a researcher can code merely for existence. This is not to say that simplicity of procedure leads to simplistic results. Many studies have successfully employed this strategy. For example, Palmquist (1990) did not attempt to establish the relationships among concept terms in the classrooms he studied; his study did, however, look at the change in the presence of concepts over the course of the semester, comparing a map analysis from the beginning of the semester to one constructed at the end. On the other hand, the requirement of one's specific research question may necessitate deeper levels of coding to preserve greater detail for analysis.

In relation to our extended example, the researcher might code for how often Bill Clinton used words that were ambiguous, held double meanings, or left an opening for change or "re-evaluation." The researcher might also choose to code for what words he used that have such an ambiguous nature in relation to the importance of the information directly related to those words.

- Explore the relationships between concepts (Strength, Sign & Direction).

Once words are coded, the text can be analyzed for the relationships among the concepts set forth. There are three concepts which play a central role in exploring the relations among concepts in content analysis.

- Strength of Relationship: Refers to the degree to which two or more concepts are related. These relationships are easiest to analyze, compare, and graph when all relationships between concepts are considered to be equal. However, assigning strength to relationships retains a greater degree of the detail found in the original text. Identifying strength of a relationship is key when determining whether or not words like unless, perhaps, or maybe are related to a particular section of text, phrase, or idea.

- Sign of a Relationship: Refers to whether or not the concepts are positively or negatively related. To illustrate, the concept "bear" is negatively related to the concept "stock market" in the same sense as the concept "bull" is positively related. Thus "it's a bear market" could be coded to show a negative relationship between "bear" and "market". Another approach to coding for strength entails the creation of separate categories for binary oppositions. The above example emphasizes "bull" as the negation of "bear," but could be coded as being two separate categories, one positive and one negative. There has been little research to determine the benefits and liabilities of these differing strategies. Use of Sign coding for relationships in regard to the hearings may be to find out whether or not the words under observation or in question were used adversely or in favor of the concepts (this is tricky, but important to establishing meaning).

- Direction of the Relationship: Refers to the type of relationship categories exhibit. Coding for this sort of information can be useful in establishing, for example, the impact of new information in a decision making process. Various types of directional relationships include, "X implies Y," "X occurs before Y" and "if X then Y," or quite simply the decision whether concept X is the "prime mover" of Y or vice versa. In the case of the 1998 hearings, the researcher might note that, "maybe implies doubt," "perhaps occurs before statements of clarification," and "if possibly exists, then there is room for Clinton to change his stance." In some cases, concepts can be said to be bi-directional, or having equal influence. This is equivalent to ignoring directionality. Both approaches are useful, but differ in focus. Coding all categories as bi-directional is most useful for exploratory studies where pre-coding may influence results, and is also most easily automated, or computer coded.
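Strength, sign, and direction can be recorded together as attributes of a single coded relationship. The following sketch shows one possible record layout; the `Relationship` class, its field names, and the numeric strength value are assumptions chosen for illustration.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class Relationship:
    """One coded relationship between two concepts."""
    source: str
    target: str
    strength: float = 1.0   # leaving every strength at 1.0 treats all relationships as equal
    sign: int = 1           # +1 for a positive relationship, -1 for a negative one
    directed: bool = True   # False codes the relationship as bi-directional

    def describe(self):
        arrow = "->" if self.directed else "<->"
        polarity = "+" if self.sign > 0 else "-"
        return f"{self.source} {arrow} {self.target} ({polarity}, strength {self.strength})"

# "maybe implies doubt": directional, with an invented strength value.
implies = Relationship("maybe", "doubt", strength=0.8, sign=1, directed=True)
# "bear" and "market": negative sign, direction ignored.
bear = Relationship("bear", "market", sign=-1, directed=False)

print(implies.describe())
print(bear.describe())
```

Keeping the three attributes separate lets a study ignore any one of them (for example, by coding everything as bi-directional) without changing how the others are recorded.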

- Code the relationships.

One of the main differences between conceptual analysis and relational analysis is that the statements or relationships between concepts are coded. At this point, to continue our extended example, it is important to take special care with assigning value to the relationships in an effort to determine whether the ambiguous words in Bill Clinton's speech are just fillers, or hold information about the statements he is making.

- Perform Statistical Analyses.

This step involves conducting statistical analyses of the data you've coded during your relational analysis. This may involve exploring for differences or looking for relationships among the variables you've identified in your study.

- Map out the Representations.

In addition to statistical analysis, relational analysis often leads to viewing the representations of the concepts and their associations in a text (or across texts) in a graphical -- or map -- form. Relational analysis is also informed by a variety of different theoretical approaches: linguistic content analysis, decision mapping, and mental models.

The authors of this guide have created the following commentaries on content analysis.

Issues of Reliability & Validity

The issues of reliability and validity are concurrent with those addressed in other research methods. The reliability of a content analysis study refers to its stability, or the tendency for coders to consistently re-code the same data in the same way over a period of time; reproducibility, or the tendency for a group of coders to classify category membership in the same way; and accuracy, or the extent to which the classification of a text corresponds to a standard or norm statistically. Gottschalk (1995) points out that the issue of reliability may be further complicated by the inescapably human nature of researchers. For this reason, he suggests that coding errors can only be minimized, and not eliminated (he shoots for 80% as an acceptable margin for reliability).
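Reproducibility is often checked by comparing how two coders classified the same units. The sketch below uses simple percent agreement, which is an assumption made for illustration: the source does not say how the 80% figure is computed, and chance-corrected measures such as Cohen's kappa are stricter. The coder data are invented.

```python
def percent_agreement(codes_a, codes_b):
    """Return the share of units that two coders classified identically."""
    if len(codes_a) != len(codes_b):
        raise ValueError("Both coders must code the same number of units.")
    if not codes_a:
        raise ValueError("At least one coded unit is required.")
    matches = sum(a == b for a, b in zip(codes_a, codes_b))
    return matches / len(codes_a)

# Invented codes assigned by two coders to the same ten text units.
coder_1 = ["pos", "neg", "pos", "pos", "neg", "pos", "neg", "neg", "pos", "pos"]
coder_2 = ["pos", "neg", "pos", "neg", "neg", "pos", "neg", "pos", "pos", "pos"]

agreement = percent_agreement(coder_1, coder_2)
print(f"{agreement:.0%}")
```

The same function can be applied to one coder's first and second pass over the same data to get a rough check on stability.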

On the other hand, the validity of a content analysis study refers to the correspondence of the categories to the conclusions, and the generalizability of results to a theory.

The validity of categories in implicit concept analysis, in particular, is achieved by utilizing multiple classifiers to arrive at an agreed upon definition of the category. For example, a content analysis study might measure the occurrence of the concept category "communist" in presidential inaugural speeches. Using multiple classifiers, the concept category can be broadened to include synonyms such as "red," "Soviet threat," "pinkos," "godless infidels" and "Marxist sympathizers." "Communist" is held to be the explicit variable, while "red," etc. are the implicit variables.

The overarching problem of concept analysis research is the challengeable nature of conclusions reached by its inferential procedures. The question lies in what level of implication is allowable, i.e. do the conclusions follow from the data or are they explainable due to some other phenomenon? For occurrence-specific studies, for example, can the second occurrence of a word carry equal weight as the ninety-ninth? Reasonable conclusions can be drawn from substantive amounts of quantitative data, but the question of proof may still remain unanswered.

This problem is again best illustrated when one uses computer programs to conduct word counts. The problem of distinguishing between synonyms and homonyms can completely throw off one's results, invalidating any conclusions one infers from the results. The word "mine," for example, variously denotes a possessive pronoun, an explosive device, and a deep hole in the ground from which ore is extracted. One may obtain an accurate count of that word's occurrence and frequency, but not have an accurate accounting of the meaning inherent in each particular usage. For example, one may find 50 occurrences of the word "mine." But, if one is only looking specifically for "mine" as an explosive device, and 17 of the occurrences are actually possessive pronouns, the resulting 50 is an inaccurate result. Any conclusions drawn from that number would be invalid.
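The arithmetic behind the "mine" example can be shown with a small sense tally. The split below is partly assumed: the 50 occurrences and the 17 pronoun uses come from the example, while treating the remaining 33 as explosive devices is a simplification made only for illustration.

```python
from collections import Counter

# Hand-assigned senses for 50 occurrences of "mine".
# 17 pronoun uses come from the example; the other 33 are ASSUMED to be explosives.
senses = ["explosive"] * 33 + ["pronoun"] * 17

raw_count = len(senses)                        # what a plain word count reports
by_sense = Counter(senses)                     # what sense-aware coding reports
overcount = raw_count - by_sense["explosive"]  # occurrences wrongly attributed

print(raw_count, by_sense["explosive"], overcount)
```

Even under this generous assumption, a plain word count overstates the concept of interest by 17 occurrences, roughly a third of the reported total.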

The generalizability of one's conclusions, then, is very dependent on how one determines concept categories, as well as on how reliable those categories are. It is imperative that one defines categories that accurately measure the idea and/or items one is seeking to measure. Akin to this is the construction of rules. Developing rules that allow one, and others, to categorize and code the same data in the same way over a period of time, referred to as stability, is essential to the success of a conceptual analysis. Reproducibility, not only of specific categories, but of general methods applied to establishing all sets of categories, makes a study, and its subsequent conclusions and results, more sound. A study which does this, i.e. in which the classification of a text corresponds to a standard or norm, is said to have accuracy.

Advantages of Content Analysis

Content analysis offers several advantages to researchers who consider using it. In particular, content analysis:

- looks directly at communication via texts or transcripts, and hence gets at the central aspect of social interaction

- can allow for both quantitative and qualitative operations

- can provide valuable historical/cultural insights over time through analysis of texts

- allows a closeness to text which can alternate between specific categories and relationships and also statistically analyzes the coded form of the text

- can be used to interpret texts for purposes such as the development of expert systems (since knowledge and rules can both be coded in terms of explicit statements about the relationships among concepts)

- is an unobtrusive means of analyzing interactions

- provides insight into complex models of human thought and language use

Disadvantages of Content Analysis

Content analysis suffers from several disadvantages, both theoretical and procedural. In particular, content analysis:

- can be extremely time-consuming

- is subject to increased error, particularly when relational analysis is used to attain a higher level of interpretation

- is often devoid of theoretical base, or attempts too liberally to draw meaningful inferences about the relationships and impacts implied in a study

- is inherently reductive, particularly when dealing with complex texts

- tends too often to simply consist of word counts

- often disregards the context that produced the text, as well as the state of things after the text is produced

- can be difficult to automate or computerize

The Palmquist, Carley and Dale study, a summary of "Applications of Computer-Aided Text Analysis: Analyzing Literary and Non-Literary Texts" (1997), offers an example of two studies conducted using both conceptual and relational analysis. The Problematic Text for Content Analysis shows the differences in results obtained by a conceptual and a relational approach to a study.

Related Information: Example of a Problematic Text for Content Analysis

In this example, both students observed a scientist and were asked to write about the experience.

Student A: I found that scientists engage in research in order to make discoveries and generate new ideas. Such research by scientists is hard work and often involves collaboration with other scientists which leads to discoveries which make the scientists famous. Such collaboration may be informal, such as when they share new ideas over lunch, or formal, such as when they are co-authors of a paper.

Student B: It was hard work to research famous scientists engaged in collaboration and I made many informal discoveries. My research showed that scientists engaged in collaboration with other scientists are co-authors of at least one paper containing their new ideas. Some scientists make formal discoveries and have new ideas.

Content analysis coding for explicit concepts may not reveal any significant differences. For example, the concepts "I, scientist, research, hard work, collaboration, discoveries, new ideas, etc." are explicit in both texts, occur the same number of times, and have the same emphasis. Relational analysis or cognitive mapping, however, reveals that while all concepts in the text are shared, only five concepts are common to both. Analyzing these statements reveals that Student A reports on what "I" found out about "scientists," and elaborates the notion of "scientists" doing "research." Student B focuses on what "I's" research was and sees scientists as "making discoveries" without emphasis on research.
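The contrast can be made concrete with a short Python sketch. It is an illustration only, not the procedure used in the source example. The six-item concept list, the function names `count_concepts` and `ordered_links`, and the use of "which concept follows which within a sentence" as a crude stand-in for relational coding are all assumptions introduced here. The sketch does not attempt to reproduce the figures quoted above.

```python
import re

STUDENT_A = (
    "I found that scientists engage in research in order to make discoveries "
    "and generate new ideas. Such research by scientists is hard work and often "
    "involves collaboration with other scientists which leads to discoveries "
    "which make the scientists famous. Such collaboration may be informal, such "
    "as when they share new ideas over lunch, or formal, such as when they are "
    "co-authors of a paper."
)
STUDENT_B = (
    "It was hard work to research famous scientists engaged in collaboration "
    "and I made many informal discoveries. My research showed that scientists "
    "engaged in collaboration with other scientists are co-authors of at least "
    "one paper containing their new ideas. Some scientists make formal "
    "discoveries and have new ideas."
)

# Assumed concept list: a subset of the concepts named in the example.
CONCEPTS = ["scientists", "research", "hard work",
            "collaboration", "discoveries", "new ideas"]
# One whole-word pattern that matches any concept, in order of appearance.
CONCEPT_RE = re.compile(r"\b(" + "|".join(re.escape(c) for c in CONCEPTS) + r")\b")


def count_concepts(text):
    """Conceptual analysis: how often does each explicit concept occur?"""
    found = CONCEPT_RE.findall(text.lower())
    return {concept: found.count(concept) for concept in CONCEPTS}


def ordered_links(text):
    """Crude relational proxy: (earlier, later) pairs of concepts that
    appear next to each other within the same sentence."""
    links = set()
    for sentence in re.split(r"(?<=[.!?])\s+", text.lower()):
        sequence = CONCEPT_RE.findall(sentence)
        links.update(zip(sequence, sequence[1:]))
    return links


counts_a, counts_b = count_concepts(STUDENT_A), count_concepts(STUDENT_B)
links_a, links_b = ordered_links(STUDENT_A), ordered_links(STUDENT_B)

print(counts_a == counts_b)        # True: the concept counts are identical
print(sorted(links_a & links_b))   # links found in both texts
print(sorted(links_a - links_b))   # links found only in Student A's text
```

Counting alone treats the two texts as equivalent, while even this simple ordering heuristic separates them. A real relational analysis would code the type and direction of each relationship by hand or with dedicated software rather than rely on word order.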

Related Information: The Palmquist, Carley and Dale Study

Consider these two questions: How has the depiction of robots changed over more than a century's worth of writing? And, do students and writing instructors share the same terms for describing the writing process? Although these questions seem totally unrelated, they do share a commonality: in the Palmquist, Carley & Dale study, their answers rely on computer-aided text analysis to demonstrate how different texts can be analyzed.

Literary texts

One half of the study explored the depiction of robots in 27 science fiction texts written between 1818 and 1988. After the texts were divided into three historically defined groups, readers looked for how the depiction of robots had changed over time. To do this, researchers had to create concept lists and relationship types, create maps using computer software (see Fig. 1), modify those maps, and then analyze them. The final analysis revealed that over time authors were less likely to depict robots as metallic humanoids.

Non-literary texts

The second half of the study used student journals and interviews, teacher interviews, textbooks, and classroom observations as the non-literary texts from which concepts and words were taken. The purpose of the study was to determine whether, over time, teachers and students would begin to share a similar vocabulary about the writing process. Again, researchers used computer software to assist in the process. This time, computers helped researchers generate a concept list based on frequently occurring words and phrases from all texts. Maps were also created and analyzed in this study (see Fig. 2).
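As a rough illustration of how a concept list might be drawn from frequently occurring words and phrases, the Python sketch below counts single words and two-word phrases across a set of texts. It is not the software used in the study. The function name `candidate_concepts`, the small stopword list, the `min_count` threshold, and the two sample sentences are all hypothetical.

```python
import re
from collections import Counter

# Hypothetical stopword list; a real study would use a fuller one.
STOPWORDS = {"a", "an", "and", "the", "of", "to", "in", "is", "it",
             "i", "my", "that", "then", "before"}


def candidate_concepts(texts, min_count=2):
    """Return (term, count) pairs for words and two-word phrases that
    occur at least `min_count` times across all texts."""
    counts = Counter()
    for text in texts:
        words = re.findall(r"[a-z']+", text.lower())
        # Single words, skipping stopwords.
        counts.update(w for w in words if w not in STOPWORDS)
        # Adjacent word pairs in which neither word is a stopword.
        counts.update(
            f"{first} {second}"
            for first, second in zip(words, words[1:])
            if first not in STOPWORDS and second not in STOPWORDS
        )
    return [(term, n) for term, n in counts.most_common() if n >= min_count]


# Invented sample sentences, standing in for journals and interviews.
samples = [
    "I write a rough draft and then revise the rough draft.",
    "Students revise a rough draft before peer review.",
]
for term, n in candidate_concepts(samples):
    print(term, n)
```

A frequency list like this only proposes candidates. Researchers would still need to review it, merge synonyms, and decide which terms count as concepts for the study.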

Annotated Bibliography

Resources On How To Conduct Content Analysis

Beard, J., & Yaprak, A. (1989). Language implications for advertising in international markets: A model for message content and message execution. A paper presented at the 8th International Conference on Language Communication for World Business and the Professions. Ann Arbor, MI.

This report discusses the development and testing of a content analysis model for assessing advertising themes and messages aimed primarily at U.S. markets; the model seeks to overcome barriers in the cultural environment of international markets. Texts were categorized under three headings: rational, emotional, and moral. The goal was to teach students to appreciate differences in language and culture.

Berelson, B. (1971). Content analysis in communication research. New York: Hafner Publishing Company.

While this book provides an extensive outline of the uses of content analysis, it is far more concerned with conveying a critical approach to current literature on the subject. In this respect, it assumes a bit of prior knowledge, but is still accessible through the use of concrete examples.

Budd, R. W., Thorp, R. K., & Donohew, L. (1967). Content analysis of communications. New York: Macmillan Company.

Although the book was published in 1967, the authors' decision to focus on recent trends in content analysis keeps their insights relevant even to modern audiences. The book focuses on specific uses and methods of content analysis with an emphasis on its potential for researching human behavior. It is also geared toward the beginning researcher and breaks down the process of designing a content analysis study into six steps that are outlined in successive chapters. A useful annotated bibliography is included.

Carley, K. (1992). Coding choices for textual analysis: A comparison of content analysis and map analysis. Unpublished Working Paper.

Comparison of the coding choices necessary to conceptual analysis and relational analysis, especially focusing on cognitive maps. Discusses concept coding rules needed for sufficient reliability and validity in a Content Analysis study. In addition, several pitfalls common to texts are discussed.

Carley, K. (1990). Content analysis. In R.E. Asher (Ed.), The Encyclopedia of Language and Linguistics. Edinburgh: Pergamon Press.

Quick, yet detailed, overview of the different methodological kinds of Content Analysis. Carley breaks down her paper into five sections, including: Conceptual Analysis, Procedural Analysis, Relational Analysis, Emotional Analysis and Discussion. Also included is an excellent and comprehensive Content Analysis reference list.

Carley, K. (1989). Computer analysis of qualitative data. Pittsburgh, PA: Carnegie Mellon University.

Presents graphic, illustrated representations of computer-based approaches to content analysis.

Carley, K. (1992). MECA. Pittsburgh, PA: Carnegie Mellon University.

A resource guide explaining the fifteen routines that compose the Map Extraction Comparison and Analysis (MECA) software program. Lists the source file, input and output files, and the purpose of each routine.

Carney, T. F. (1972). Content analysis: A technique for systematic inference from communications. Winnipeg, Canada: University of Manitoba Press.

This book introduces and explains in detail the concept and practice of content analysis. Carney defines it; traces its history; discusses how content analysis works and its strengths and weaknesses; and explains through examples and illustrations how one goes about doing a content analysis.

de Sola Pool, I. (1959). Trends in content analysis. Urbana, Ill: University of Illinois Press.

The 1959 collection of papers begins by differentiating quantitative and qualitative approaches to content analysis, and then details facets of its uses in a wide variety of disciplines: from linguistics and folklore to biography and history. Includes a discussion on the selection of relevant methods and representational models.

Duncan, D. F. (1989). Content analysis in health education research: An introduction to purposes and methods. Health Education, 20 (7).

This article proposes using content analysis as a research technique in health education. A review of literature relating to applications of this technique and a procedure for content analysis are presented.

Gottschalk, L. A. (1995). Content analysis of verbal behavior: New findings and clinical applications. Hillsdale, NJ: Lawrence Erlbaum Associates, Inc.

This book primarily focuses on the Gottschalk-Gleser method of content analysis, and its application as a method of measuring psychological dimensions of children and adults via the content and form analysis of their verbal behavior, using the grammatical clause as the basic unit of communication for carrying semantic messages generated by speakers or writers.

Krippendorff, K. (1980). Content analysis: An introduction to its methodology. Beverly Hills, CA: Sage Publications.

This is one of the most widely quoted resources in many of the current studies of Content Analysis. Recommended as another good, basic resource, as Krippendorff presents the major issues of Content Analysis in much the same way as Weber (1975).

Moeller, L. G. (1963). An introduction to content analysis--including annotated bibliography. Iowa City: University of Iowa Press.

A good reference for basic content analysis. Discusses the options of sampling, categories, direction, measurement, and the problems of reliability and validity in setting up a content analysis. Perhaps better as a historical text due to its age.

Smith, C. P. (Ed.). (1992). Motivation and personality: Handbook of thematic content analysis. New York: Cambridge University Press.

Billed by its authors as "the first book to be devoted primarily to content analysis systems for assessment of the characteristics of individuals, groups, or historical periods from their verbal materials." The text includes manuals for using various systems, theory, and research regarding the background of systems, as well as practice materials, making the book both a reference and a handbook.

Solomon, M. (1993). Content analysis: a potent tool in the searcher's arsenal. Database, 16 (2), 62-67.

Online databases can be used to analyze data, as well as to simply retrieve it. Online-media-source content analysis represents a potent but little-used tool for the business searcher. Content analysis benchmarks useful to advertisers include prominence, offspin, sponsor affiliation, verbatims, word play, positioning and notational visibility.

Weber, R. P. (1990). Basic content analysis, second edition. Newbury Park, CA: Sage Publications.

Good introduction to Content Analysis. The first chapter presents a quick overview of Content Analysis. The second chapter discusses content classification and interpretation, including sections on reliability, validity, and the creation of coding schemes and categories. Chapter three discusses techniques of Content Analysis, using a number of tables and graphs to illustrate the techniques. Chapter four examines issues in Content Analysis, such as measurement, indication, representation and interpretation.

Examples of Content Analysis

Adams, W., & Schreibman, F. (1978). Television network news: Issues in content research. Washington, DC: George Washington University Press.

A fairly comprehensive application of content analysis to the field of television news reporting. The book's tripartite division discusses current trends and problems with news criticism from a content analysis perspective, presents four different content analysis studies of news media, and makes recommendations for future research in the area. Worth a look by anyone interested in mass communication research.

Auter, P. J., & Moore, R. L. (1993). Buying from a friend: a content analysis of two teleshopping programs. Journalism Quarterly, 70 (2), 425-437.

A preliminary study was conducted to content-analyze random samples of two teleshopping programs, using a measure of content interactivity and a locus of control message index.

Barker, S. P. (???) Fame: A content analysis study of the American film biography. Ohio State University. Thesis.

Barker examined thirty Oscar-nominated films dating from 1929 to 1979 using the O.J. Harvey Belief System and Kohlberg's Moral Stages to determine whether cinema heroes were positive role models for fame and success or morally ambiguous celebrities. Content analysis was successful in determining several trends relative to the frequency and portrayal of women in film, the generally high ethical character of the protagonists, and the dogmatic, close-minded nature of film antagonists.

Bernstein, J. M. & Lacy, S. (1992). Contextual coverage of government by local television news. Journalism Quarterly, 69 (2), 329-341.

This content analysis of 14 local television news operations in five markets looks at how local TV news shows contribute to the marketplace of ideas. Performance was measured as the allocation of stories to types of coverage that provide the context about events and issues confronting the public.

Blaikie, A. (1993). Images of age: a reflexive process. Applied Ergonomics, 24 (1), 51-58.

Content analysis of magazines provides a sharp instrument for reflecting the change in stereotypes of aging over past decades.

Craig, R. S. (1992). The effect of day part on gender portrayals in television commercials: a content analysis. Sex Roles: A Journal of Research, 26 (5-6), 197-213.

Gender portrayals in 2,209 network television commercials were content analyzed. To compare differences between three day parts, the sample was chosen from three time periods: daytime, evening prime time, and weekend afternoon sportscasts. The results indicate large and consistent differences in the way men and women are portrayed in these three day parts, with almost all comparisons reaching significance at the .05 level. Although ads in all day parts tended to portray men in stereotypical roles of authority and dominance, those on weekends tended to emphasize escape from home and family. The findings of earlier studies which did not consider day part differences may now have to be reevaluated.

Dillon, D. R. et al. (1992). Article content and authorship trends in The Reading Teacher, 1948-1991. The Reading Teacher, 45 (5), 362-368.

The authors explore changes in the focus of the journal over time.

Eberhardt, E. A. (1991). The rhetorical analysis of three journal articles: The study of form, content, and ideology. Ft. Collins, CO: Colorado State University.

Eberhardt uses content analysis in this thesis paper to analyze three journal articles that reported on President Ronald Reagan's address in which he responded to the Tower Commission report concerning the Iran-Contra Affair. The reports concentrated on three rhetorical elements: idea generation or content; linguistic style or choice of language; and the potential societal effect of both, which Eberhardt analyzes, along with the particular ideological orientation espoused by each magazine.

Ellis, B. G. & Dick, S. J. (1996). Who was 'Shadow'? The computer knows: applying grammar-program statistics in content analyses to solve mysteries about authorship. Journalism & Mass Communication Quarterly, 73 (4), 947-963.

This study's objective was to employ the statistics-documentation portion of a word-processing program's grammar-check feature as a final, definitive, and objective tool for content analyses - used in tandem with qualitative analyses - to determine authorship. Investigators concluded there was significant evidence from both modalities to support their theory that Henry Watterson, long-time editor of the Louisville Courier-Journal, probably was the South's famed Civil War correspondent "Shadow" and to rule out another prime suspect, John H. Linebaugh of the Memphis Daily Appeal. Until now, this Civil War mystery has never been conclusively solved, puzzling historians specializing in Confederate journalism.